Real-Time Segmentation of Unstructured Environments by Combining Domain Generalization and Attention Mechanisms

Abstract

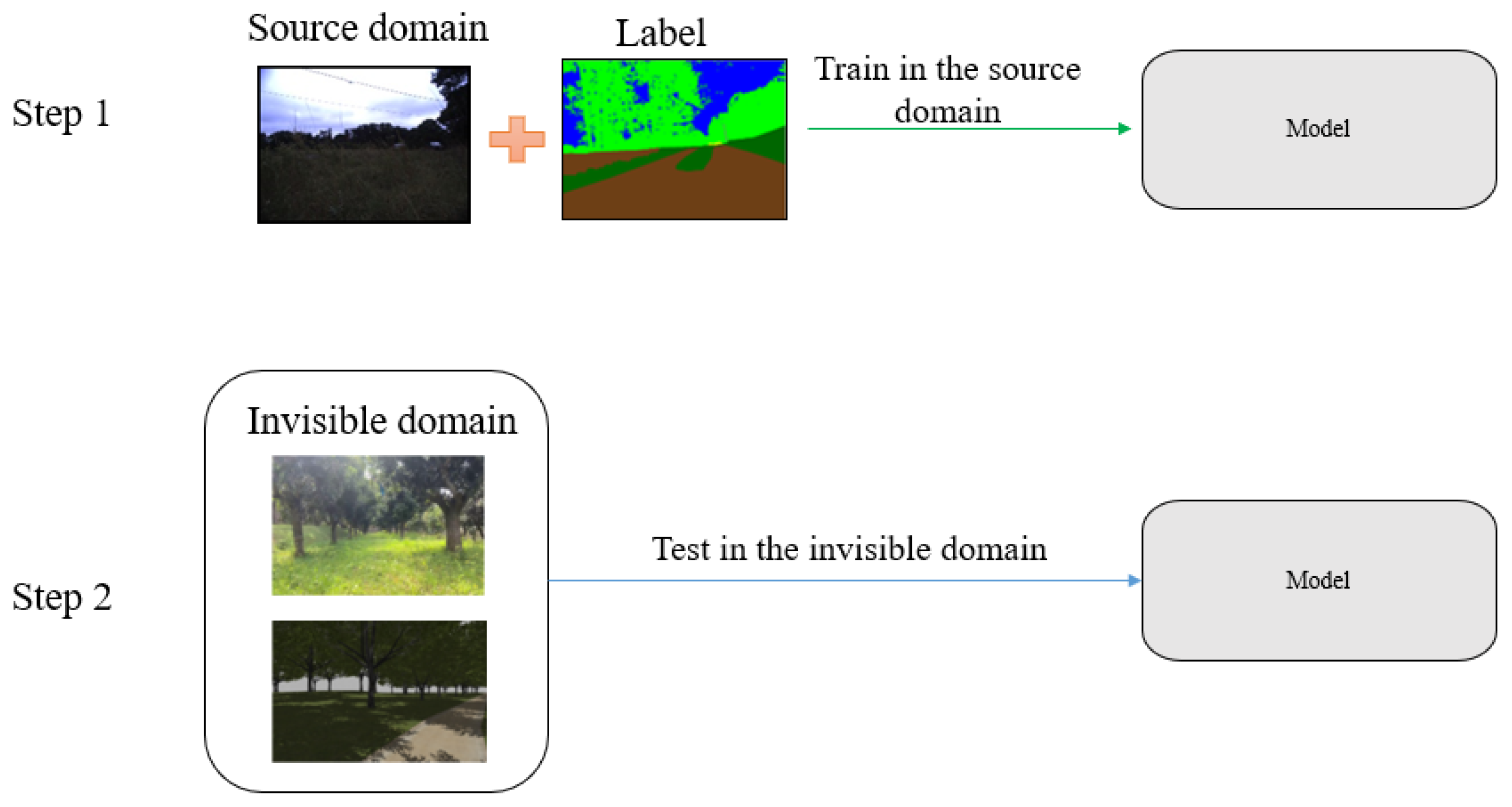

1. Introduction

2. Related Work

2.1. Semantic Segmentation

2.2. Semantic Segmentation in Unstructured Environments

2.3. Domain Migration Techniques

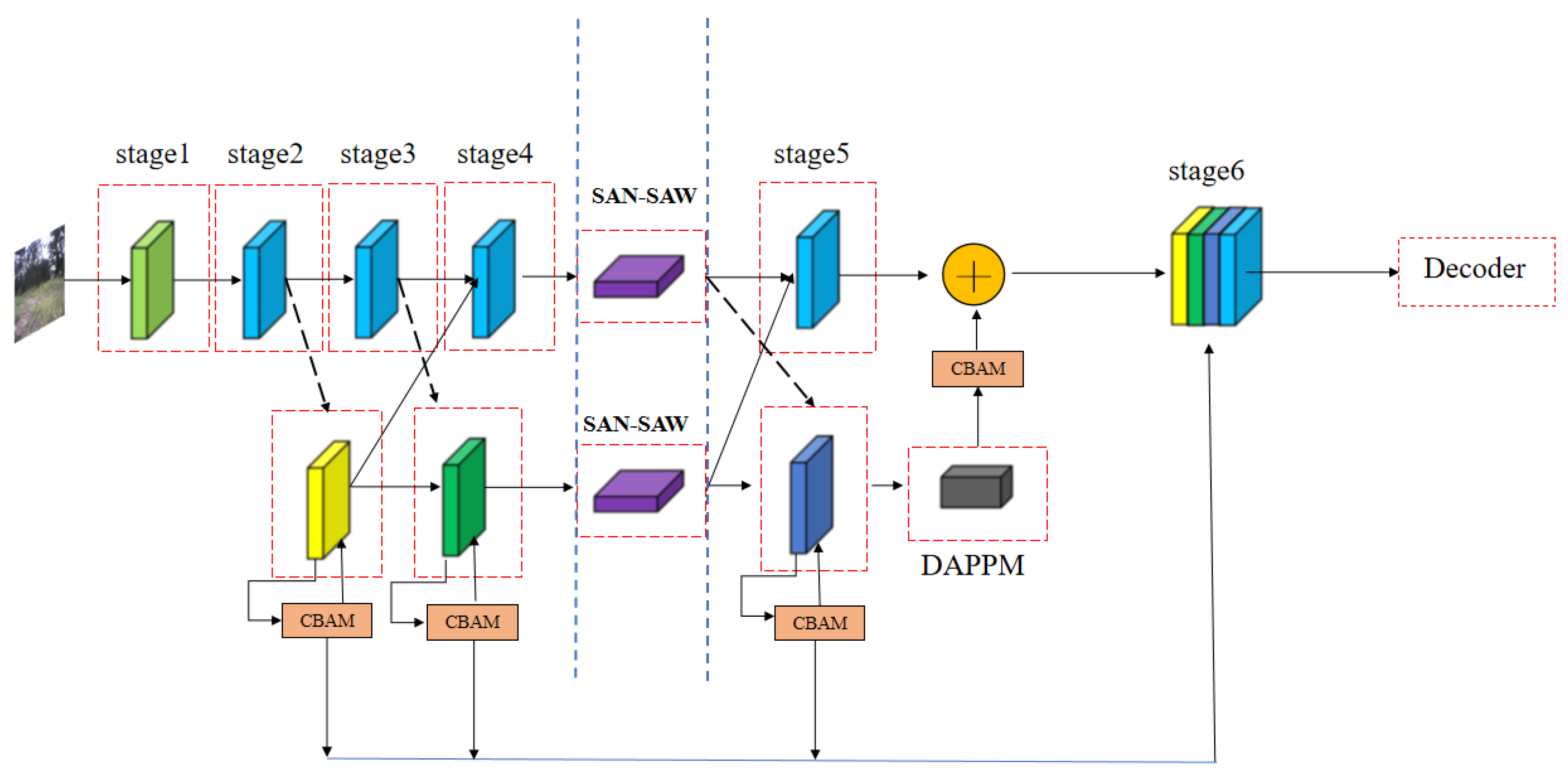

- The semantic-aware normalization and semantic-aware whitening (SAN–SAW) [19] module was integrated into the backbone network to improve the model’s generalization capability. The module adds little computational overhead and significantly improves the model’s feature representation in the target domains, resulting in higher accuracy in real-world scenarios.

- The convolutional block attention module (CBAM) [20] was incorporated into the model’s structure to strengthen its feature representation at each stage and capture finer details.

- The rare class sampling (RCS) strategy [21] was adopted to address the class imbalance problem in unstructured scenes, thereby mitigating the accuracy degradation caused by under-represented classes.

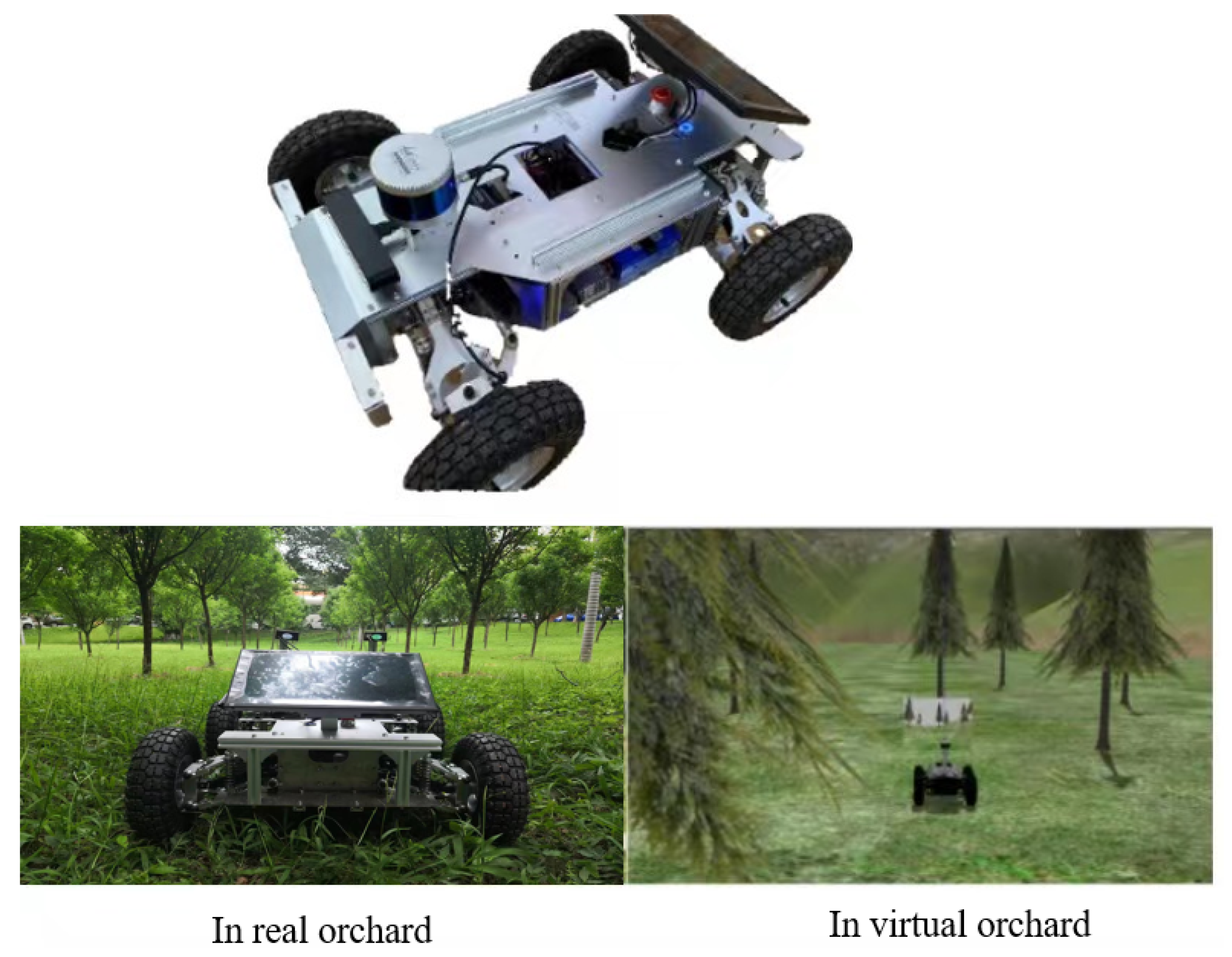

- The model was deployed on an agricultural robot equipped with a Jetson Xavier NX and running the Robot Operating System (ROS). After training on the RUGD dataset [5] and testing in both real and virtual orchard environments, the experimental results demonstrate the model’s strong domain migration capability. The model strikes a balance between speed and accuracy, which makes it a useful tool for precision agriculture.

3. Improved DDRNet23-Slim Lightweight Network

3.1. DDRNet23-Slim Network

3.2. Improved DDRNet23-Slim Network with SAN–SAW Module

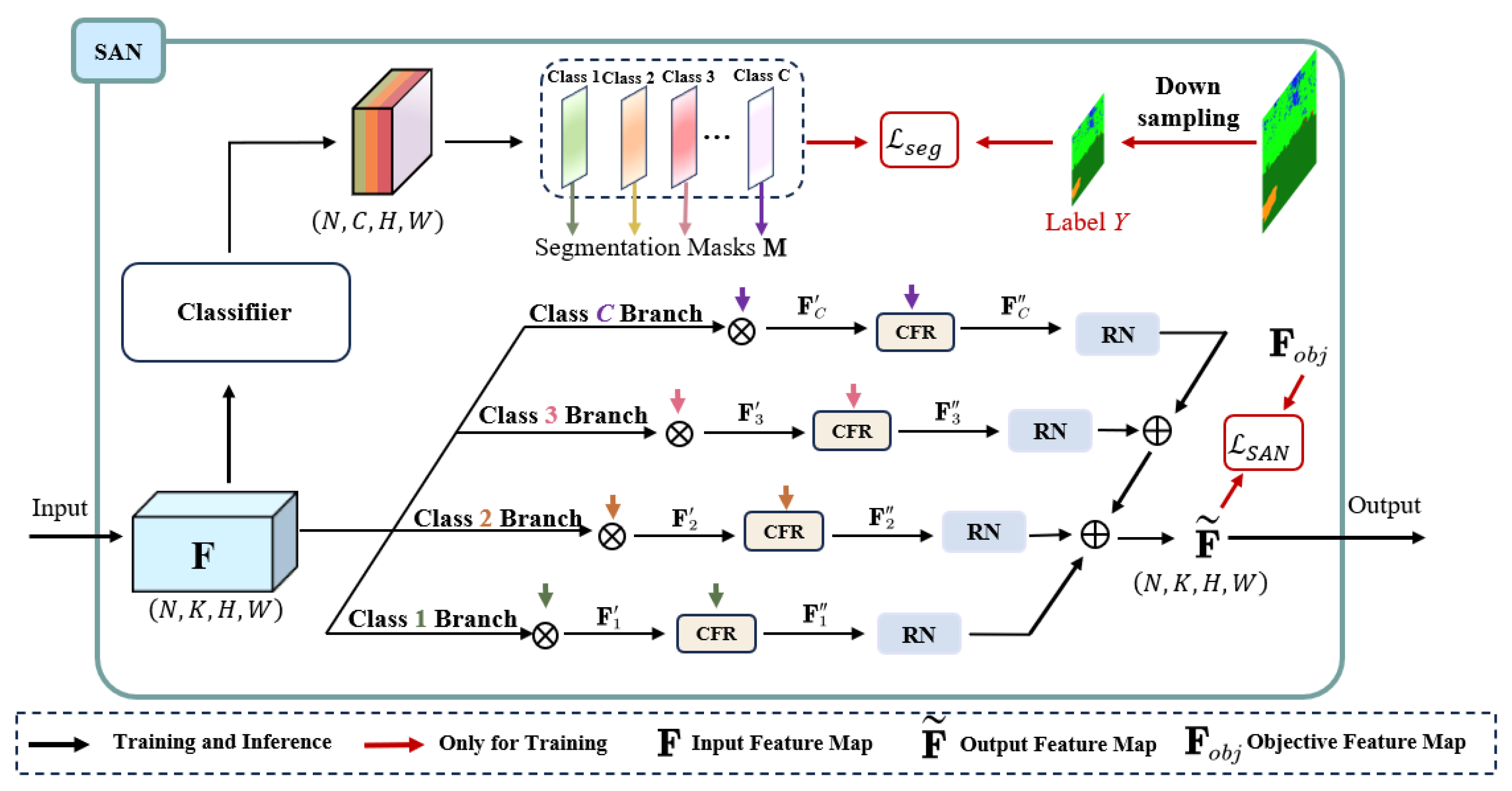

3.2.1. Semantic-Aware Normalization (SAN)

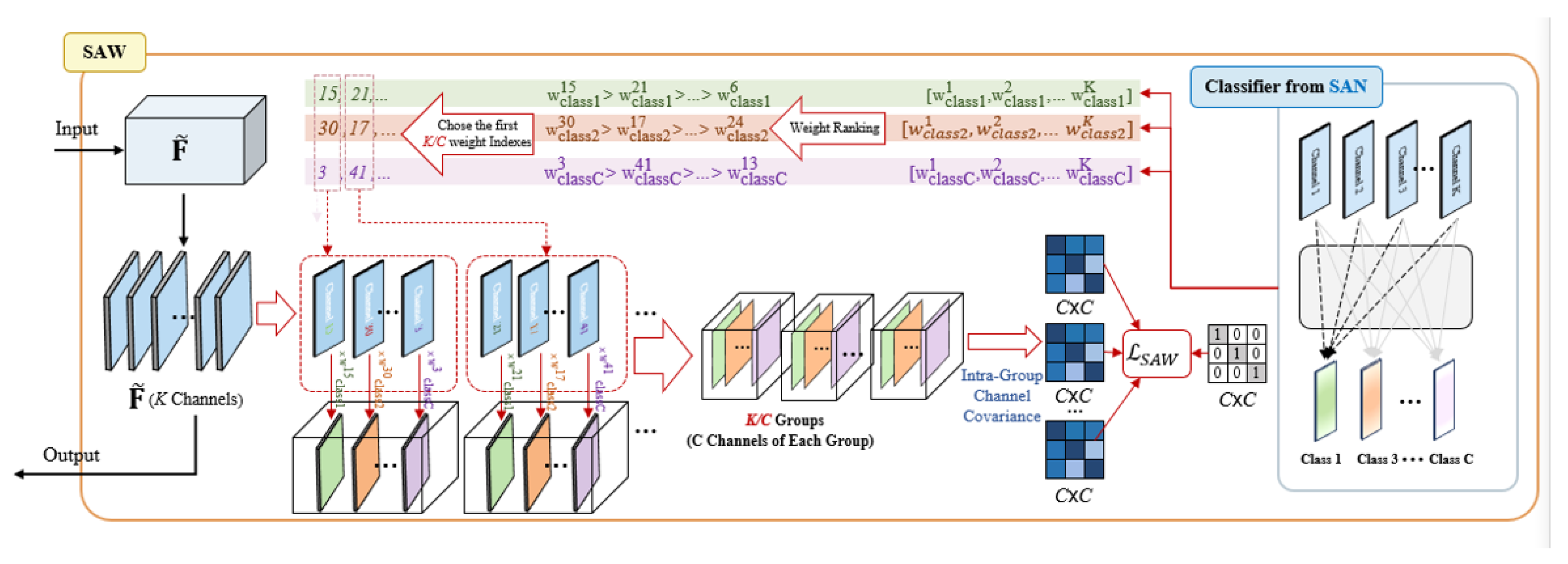

3.2.2. Semantic-Aware Whitening (SAW)

3.3. Incorporating Channel Attention and Spatial Attention Mechanisms
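
As a rough illustration of the channel-then-spatial attention that CBAM [20] applies to a feature map, the following NumPy sketch runs both steps on a single `(C, H, W)` tensor. It is a minimal sketch, not the authors' implementation: the weights are random rather than learned, biases are omitted, and the reduction ratio `r = 4`, the 7×7 kernel and all function names are assumptions chosen for the example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """x: (C, H, W). Returns one weight in (0, 1) per channel."""
    avg = x.mean(axis=(1, 2))                      # global average pooling, (C,)
    mx = x.max(axis=(1, 2))                        # global max pooling, (C,)
    mlp = lambda v: w2 @ np.maximum(w1 @ v, 0.0)   # shared MLP with ReLU
    return sigmoid(mlp(avg) + mlp(mx))

def spatial_attention(x, kernel):
    """x: (C, H, W); kernel: (2, k, k), k odd. Returns (H, W) weights."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])   # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))
    # windows[c, h, w] is the k x k patch centred on pixel (h, w)
    windows = np.lib.stride_tricks.sliding_window_view(
        padded, (k, k), axis=(1, 2))
    return sigmoid(np.einsum("chwij,cij->hw", windows, kernel))

def cbam(x, w1, w2, kernel):
    """Apply channel attention first, then spatial attention."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]

# Toy input with hypothetical sizes and random (untrained) weights.
rng = np.random.default_rng(0)
C, H, W, r = 8, 6, 5, 4
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
out = cbam(x, w1, w2, kernel)
```

Both attention maps pass through a sigmoid, so the block only rescales the features and leaves the tensor shape unchanged. That is why such a module can be dropped between existing stages of a backbone.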

3.4. Rare Class Sampling Strategy
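
The idea behind rare class sampling [21] can be sketched in a few lines of plain Python: compute each class's pixel frequency f_c, turn it into a sampling probability with a softmax over (1 − f_c)/T, draw a class, and then draw a training image that contains it. This is a minimal sketch under stated assumptions. The toy label maps, the function names and the temperature value are hypothetical, and the temperature used in the paper's experiments is not given in this section.

```python
import math
import random
from collections import Counter

def class_frequencies(label_maps, num_classes):
    """Fraction of all labelled pixels that belong to each class."""
    counts = Counter()
    for label_map in label_maps:
        for row in label_map:
            counts.update(row)
    total = sum(counts[c] for c in range(num_classes))
    return [counts[c] / total for c in range(num_classes)]

def rcs_probabilities(freqs, temperature=0.01):
    """Softmax over (1 - f_c) / T; classes absent from the data get 0."""
    present = [c for c, f in enumerate(freqs) if f > 0]
    logits = {c: (1.0 - freqs[c]) / temperature for c in present}
    peak = max(logits.values())               # subtract max for stability
    weights = {c: math.exp(v - peak) for c, v in logits.items()}
    norm = sum(weights.values())
    return [weights.get(c, 0.0) / norm for c in range(len(freqs))]

def sample_image(label_maps, probs, rng):
    """Draw a class from probs, then a random image containing that class."""
    cls = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    candidates = [i for i, lm in enumerate(label_maps)
                  if any(cls in row for row in lm)]
    return cls, rng.choice(candidates)

# Toy dataset: three 2x2 label maps, class 0 common, class 2 rare.
label_maps = [
    [[0, 0], [0, 0]],
    [[0, 0], [0, 1]],
    [[0, 1], [1, 2]],
]
freqs = class_frequencies(label_maps, num_classes=3)
probs = rcs_probabilities(freqs, temperature=0.5)
cls, image_index = sample_image(label_maps, probs, random.Random(0))
```

A smaller temperature pushes the distribution toward the rarest classes, while a larger one approaches uniform sampling over classes.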

3.5. Loss Function

4. Experiment

4.1. Experimental Platform

4.2. Dataset

4.3. Model Training and Evaluation Methods

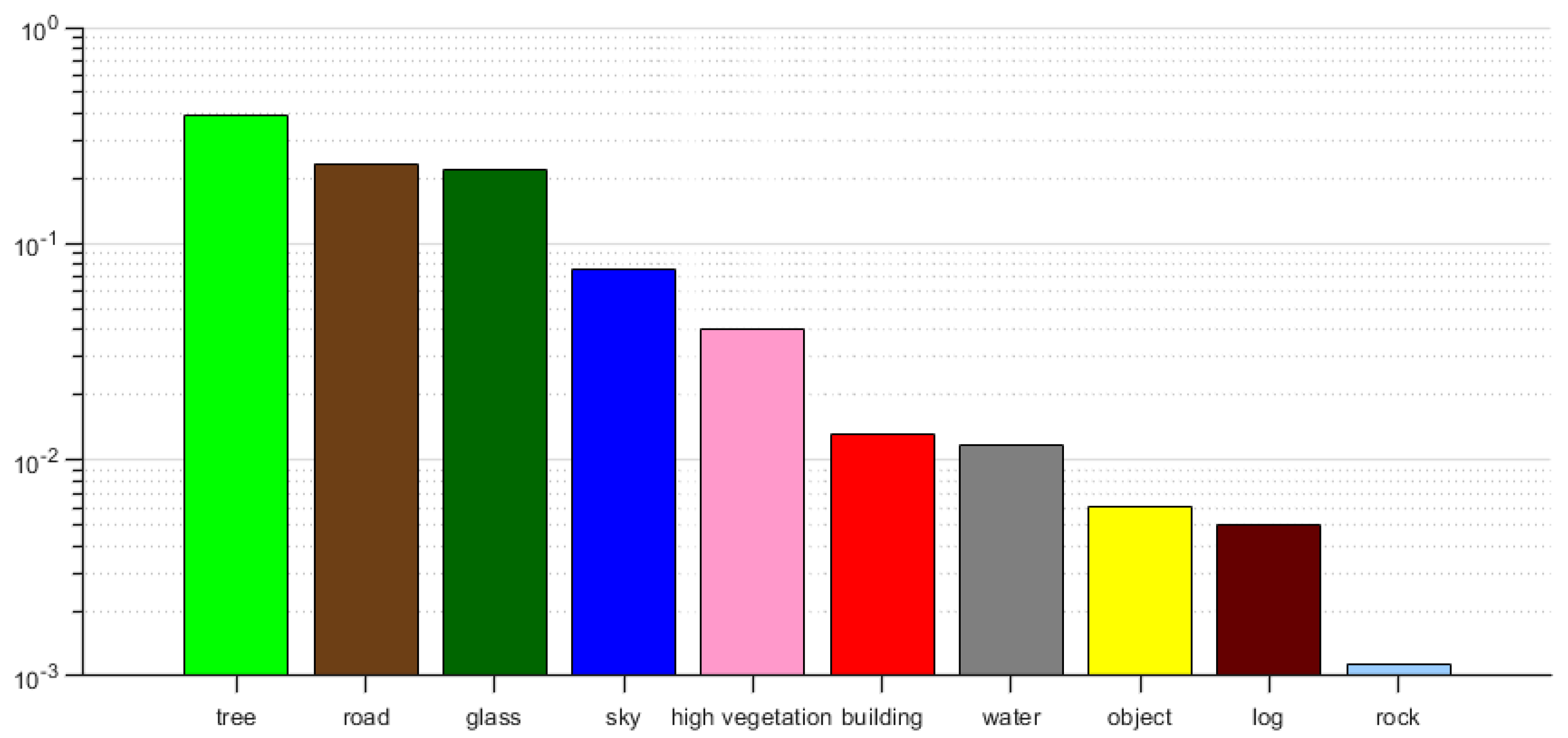

4.4. Rare Class Sampling Strategy

4.5. Analysis of Experimental Results

4.5.1. Ablation Experiment I

4.5.2. Ablation Experiment II

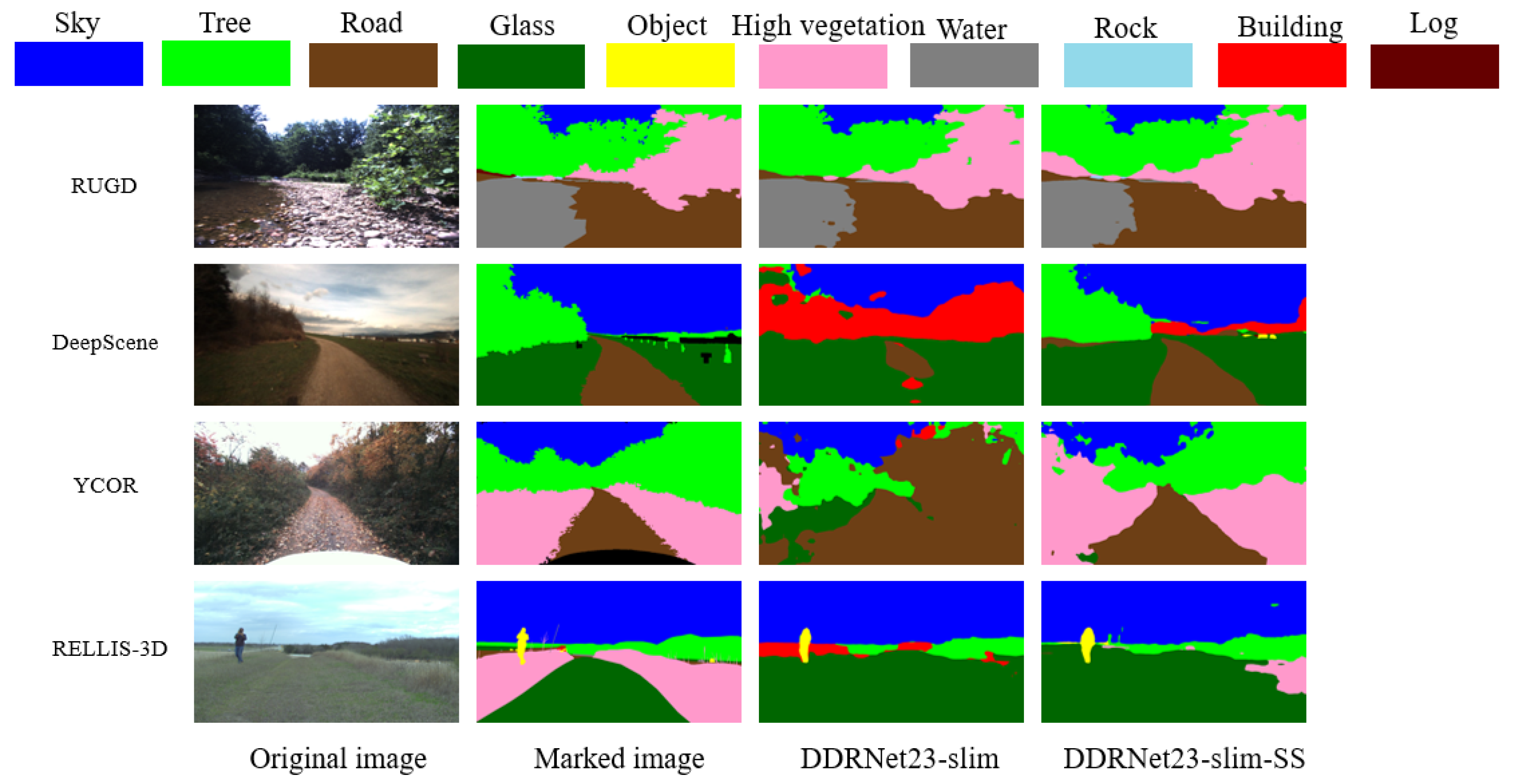

4.5.3. Model Performance Comparison

4.5.4. Deployment on a ROS Robot and Testing in Real-World and Simulated Environments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot. 2020, 37, 362–386.

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057.

- De Silva, V.; Roche, J.; Kondoz, A. Fusion of LiDAR and camera sensor data for environment sensing in driverless vehicles. arXiv 2018, arXiv:1710.06230v2.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Wigness, M.; Eum, S.; Rogers, J.G.; Han, D.; Kwon, H. A RUGD dataset for autonomous navigation and visual perception in unstructured outdoor environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 5000–5007.

- Hoang, N.D.; Nguyen, Q.L. Fast local Laplacian-based steerable and Sobel filters integrated with adaptive boosting classification tree for automatic recognition of asphalt pavement cracks. Adv. Civ. Eng. 2018, 2018, 5989246.

- Zhao, H.; Qin, G.; Wang, X. Improvement of Canny algorithm based on pavement edge detection. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010.

- Huang, X.; Zhang, L. Road centreline extraction from high-resolution imagery based on multiscale structural features and support vector machines. Int. J. Remote Sens. 2009, 30, 1977–1987.

- Shelhamer, E.; Long, J.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Baheti, B.; Innani, S.; Gajre, S.; Talbar, S. Eff-UNet: A novel architecture for semantic segmentation in unstructured environment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 358–359.

- Liu, H.; Yao, M.; Xiao, X.; Cui, H. A hybrid attention semantic segmentation network for unstructured terrain on Mars. Acta Astronaut. 2023, 204, 492–499.

- Jin, Y.; Han, D.; Ko, H. TrSeg: Transformer for semantic segmentation. Pattern Recognit. Lett. 2021, 148, 29–35.

- Guan, T.; Kothandaraman, D.; Chandra, R.; Sathyamoorthy, A.J.; Weerakoon, K.; Manocha, D. GA-Nav: Efficient terrain segmentation for robot navigation in unstructured outdoor environments. IEEE Robot. Autom. Lett. 2022, 7, 8138–8145.

- Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 1180–1189.

- Gan, C.; Yang, T.; Gong, B. Learning attributes equals multi-source domain generalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 87–97.

- Pan, H.; Hong, Y.; Sun, W.; Jia, Y. Deep dual-resolution networks for real-time and accurate semantic segmentation of traffic scenes. IEEE Trans. Intell. Transp. Syst. 2022, 24, 3448–3460.

- Peng, D.; Lei, Y.; Hayat, M.; Guo, Y.; Li, W. Semantic-aware domain generalized segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2594–2605.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hoyer, L.; Dai, D.; Van Gool, L. DAFormer: Improving network architectures and training strategies for domain-adaptive semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9924–9935.

- Zhou, K.; Liu, Z.; Qiao, Y.; Xiang, T.; Loy, C.C. Domain generalization in vision: A survey. arXiv 2021, arXiv:2103.02503.

- Li, Y.; Fang, C.; Yang, J.; Wang, Z.; Lu, X.; Yang, M.H. Universal style transfer via feature transforms. In Proceedings of the Advances in Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Cho, W.; Choi, S.; Park, D.K.; Shin, I.; Choo, J. Image-to-image translation via group-wise deep whitening-and-coloring transformation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10639–10647.

- Jadon, S. A survey of loss functions for semantic segmentation. In Proceedings of the 2020 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Virtual, 27–29 October 2020; pp. 1–7.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Brostow, G.J.; Fauqueur, J.; Cipolla, R. Semantic object classes in video: A high-definition ground truth database. Pattern Recognit. Lett. 2009, 30, 88–97.

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A dataset for semantic scene understanding of LiDAR sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9297–9307.

- Jiang, P.; Osteen, P.; Wigness, M.; Saripalli, S. RELLIS-3D dataset: Data, benchmarks and analysis. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 1110–1116.

- Valada, A.; Oliveira, G.L.; Brox, T.; Burgard, W. Deep multispectral semantic scene understanding of forested environments using multimodal fusion. In Proceedings of the 2016 International Symposium on Experimental Robotics, Tokyo, Japan, 3–6 October 2016; Springer: Berlin/Heidelberg, Germany, 2017; pp. 465–477.

- Maturana, D.; Chou, P.W.; Uenoyama, M.; Scherer, S. Real-time semantic mapping for autonomous off-road navigation. In Proceedings of the Field and Service Robotics: Results of the 11th International Conference, Zurich, Switzerland, 12–15 September 2017; Springer: Berlin/Heidelberg, Germany, 2018; pp. 335–350.

- Yang, Z.; Tan, Y.; Sen, S.; Reimann, J.; Karigiannis, J.; Yousefhussien, M.; Virani, N. Uncertainty-aware Perception Models for Off-road Autonomous Unmanned Ground Vehicles. arXiv 2022, arXiv:2209.11115.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Poudel, R.P.; Liwicki, S.; Cipolla, R. Fast-SCNN: Fast semantic segmentation network. arXiv 2019, arXiv:1902.04502.

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.

- Zhang, Y.; Yao, T.; Qiu, Z.; Mei, T. Lightweight and Progressively-Scalable Networks for Semantic Segmentation. arXiv 2022, arXiv:2207.13600.

- Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking BiSeNet for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 9716–9725.

| Dataset | DDRNet23-Slim aAcc | DDRNet23-Slim mIoU | DDRNet23-Slim-SS aAcc | DDRNet23-Slim-SS mIoU |
|---|---|---|---|---|
| RUGD [5] | 92.18 | 73.19 | 91.94 | 73.17 |
| DeepScene [30] | 47.46 | 39.85 | 71.52 | 54.88 |
| YCOR [31] | 79.86 | 35.99 | 81.73 | 56.06 |
| RELLIS-3D [29] | 71.88 | 48.01 | 81.13 | 61.07 |
| Params | 5.79M | | 5.79M | |
| FLOPs | 7.14 GFLOPs | | 7.16 GFLOPs | |
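
To make the comparison in the table above easier to read, the short script below computes the per-dataset mIoU change and the relative FLOPs overhead between the two columns. The numbers are copied from the table. Reading the DDRNet23-Slim-SS column as the SAN–SAW variant is an assumption based on the naming, and the variable names are illustrative.

```python
# (aAcc, mIoU) pairs copied from the table: DDRNet23-Slim vs. DDRNet23-Slim-SS.
results = {
    "RUGD":      ((92.18, 73.19), (91.94, 73.17)),
    "DeepScene": ((47.46, 39.85), (71.52, 54.88)),
    "YCOR":      ((79.86, 35.99), (81.73, 56.06)),
    "RELLIS-3D": ((71.88, 48.01), (81.13, 61.07)),
}

# mIoU change in percentage points for each dataset.
miou_gain = {
    name: round(ss[1] - base[1], 2)
    for name, (base, ss) in results.items()
}

# Relative increase in FLOPs (7.14 -> 7.16 GFLOPs), in percent.
flops_overhead_pct = round((7.16 - 7.14) / 7.14 * 100, 2)

for name, gain in miou_gain.items():
    print(f"{name:10s} mIoU change: {gain:+.2f} points")
print(f"FLOPs overhead: {flops_overhead_pct:.2f}%")
```

On the source dataset (RUGD) the mIoU is essentially unchanged (−0.02 points), while on the three unseen datasets it rises by 13.06 to 20.07 points for a FLOPs increase of about 0.28%.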

| Category | BaseModel acc | BaseModel IoU | +CBAM acc | +CBAM IoU | +CBAM+RCS acc | +CBAM+RCS IoU |
|---|---|---|---|---|---|---|
| road | 92.37 | 86.40 | 93.42 | 87.33 | 92.35 | 84.91 |
| grass | 90.96 | 84.25 | 93.36 | 86.14 | 92.44 | 85.58 |
| tree | 96.12 | 91.38 | 97.2 | 92.0 | 96.56 | 90.64 |
| high vegetation | 79.55 | 67.91 | 84.09 | 73.82 | 85.63 | 83.18 |
| object | 65.18 | 58.19 | 79.62 | 67.15 | 81.56 | 68.17 |
| building | 92.87 | 82.93 | 93.29 | 85.51 | 93.17 | 85.12 |
| log | 57.13 | 42.71 | 60.15 | 47.92 | 63.28 | 53.74 |
| sky | 87.66 | 79.83 | 89.79 | 79.86 | 85.42 | 78.14 |
| rock | 68.08 | 63.85 | 70.08 | 65.12 | 66.22 | 68.62 |
| water | 83.52 | 74.29 | 85.03 | 77.05 | 85.47 | 79.41 |

| Model Configuration | mAcc (%) | mIoU (%) | Number of Parameters | Processing Speed (FPS) |
|---|---|---|---|---|
| BaseModel | 81.34 | 73.17 | 5.79M | 91.57 |
| +CBAM | 84.60 | 76.19 | 5.79M | 86.09 |
| +CBAM+RCS | 85.21 | 77.75 | 5.79M | 86.09 |
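
The tables report aAcc, mAcc and mIoU. As a reference for how such numbers are typically obtained, the sketch below derives all three from a pixel-level confusion matrix. It assumes the conventional definitions (overall pixel accuracy, mean per-class accuracy, mean per-class intersection over union) and is not taken from the authors' evaluation code. The toy labels and function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts pixels with ground truth t that were predicted as p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

def segmentation_metrics(cm):
    """Return aAcc, mAcc and mIoU as fractions in [0, 1]."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = [cm[c][c] for c in range(n)]                          # correct pixels
    gt = [sum(cm[c]) for c in range(n)]                        # labelled as c
    pred = [sum(cm[r][c] for r in range(n)) for c in range(n)] # predicted as c
    present = [c for c in range(n) if gt[c] > 0]   # skip classes with no pixels
    acc = [tp[c] / gt[c] for c in present]
    iou = [tp[c] / (gt[c] + pred[c] - tp[c]) for c in present]
    return {
        "aAcc": sum(tp) / total,
        "mAcc": sum(acc) / len(acc),
        "mIoU": sum(iou) / len(iou),
    }

# Toy example: 8 flattened pixels, 3 classes.
y_true = [0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 0]
metrics = segmentation_metrics(confusion_matrix(y_true, y_pred, 3))
```

Because mIoU penalizes both missed pixels and false positives for every class equally, it tends to be lower than aAcc when rare classes are segmented poorly, which is consistent with the gap between the two metrics in the tables.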

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, N.; Zhao, W.; Liang, S.; Zhong, M. Real-Time Segmentation of Unstructured Environments by Combining Domain Generalization and Attention Mechanisms. Sensors 2023, 23, 6008. https://doi.org/10.3390/s23136008