1. Introduction

Spectral imaging systems capture the spectral image of scenes and are widely used in applications such as color reproduction [1,2,3], image enhancement [4,5], object recognition [6,7], and material classification [8,9,10]. Among snapshot spectral imaging techniques, the multispectral filter array (MSFA)-based multispectral camera is rather compact and cost-effective for spectral image acquisition [11]. The camera incorporates a mosaic of pre-determined spectral filters on top of the image sensor to form MSFAs that capture spatial-spectral data in a single shot. However, MSFA filters the spectral data at the pixel level, leading to a trade-off in spatial resolution [12].

For a consumer-friendly camera, it is desirable to have the capability of delivering RGB-colored images. Traditionally, multispectral cameras render the reconstructed spectral image to produce RGB color images. However, this approach is time-consuming, and the color appearance of the rendered RGB image often deviates from that of the original scene. To our knowledge, very few studies have addressed the generation of display-ready RGB images from MSFAs.

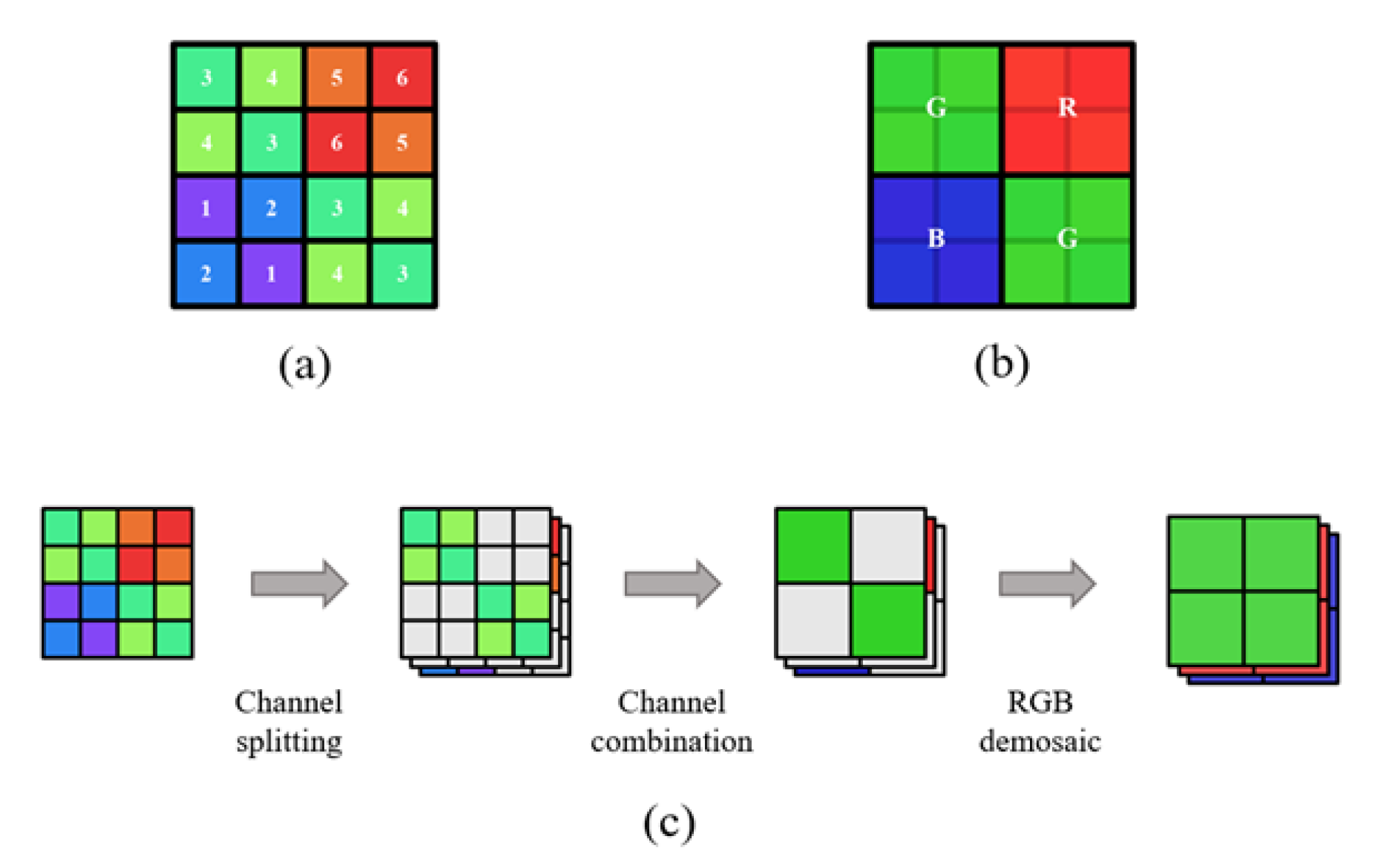

In this study, we propose an MSFA pattern. The pattern has the potential to simultaneously generate an RGB raw image and a multispectral image. The MSFA comprises six spectral channels for good spectral acquisition accuracy and adopts a channel assignment modeled after the form of Quad-Bayer RGB CFA (color filter array), as illustrated in Figure 1a,b. The spectral channels are divided into three groups, which correspond to the red, green, and blue channels of RGB CFA. The specific MSFA layout enables the generation of a Bayer-form RGB raw image by merging the spectral channels, as shown in Figure 1c. To achieve accurate spectral reconstruction and acceptable RGB image output, we devised a two-stage optimization scheme for the spectral sensitivity functions (SSFs).

The main objectives of this work are summarized as follows:

- Propose a method to design a dual-mode MSFA pattern, as shown in Figure 2. The method results in an MSFA pattern that enables the camera to output RGB raw images applicable to conventional RGB image signal processing (ISP) pipelines while capturing multispectral images like standard MSFAs;

- Develop a two-stage optimization scheme consisting of a coarse stage via stochastic gradient descent (SGD) and a refined stage via particle swarm optimization (PSO) to determine the optimal SSFs;

- Present an optimal MSFA with (1) competitive accuracy in spectral reconstruction compared to existing MSFAs; and (2) comparable color error in the RGB image to that of commercial RGB cameras.

2. Related Works

Early research preferred MSFAs with a series of narrow-band filters for snapshot spectral image capture. As far as we know, Ramanath [13] was one of the earliest to publicly propose the use of MSFA for multispectral image snapshots. That study proposed a hexagonal filter arrangement with seven different spectral channels. Some straightforward MSFA layouts were proposed afterwards. Brauers [14] adopted an MSFA that arranges six channels in a 3 × 2 layout to simplify its configuration. Wu [15] placed nine spectral channels, including an infrared channel, into a 3 × 3 MSFA layout to obtain more abundant spectral information. However, the excessive number of channels resulted in a suboptimal spatial sampling rate, which decreased the overall quality of the multispectral images.

Miao [16,17] proposes a generic method for allocating the spectral filters in the MSFA using a binary tree. This method can evenly distribute the pixel positions of each spectral channel. The following studies [18,19] improve its performance by developing corresponding demosaic methods. Several studies [20,21,22,23] then investigate the impact of SSF shape and the number of channels on the binary tree method. The conclusion suggests that 5 to 6 spectral channels are appropriate to balance between spatial and spectral accuracy, and wide-band SSFs have better performance on real-world spectral imaging.

Recently, researchers have sought to combine one-shot multispectral imaging technology with RGB imaging. Some studies [24,25,26] review the RGBN filter arrays, in which a near-infrared filter replaces one green filter in the conventional Bayer CFA. However, the design of RGBN filter arrays primarily focuses on RGB imaging and performs poorly in multispectral imaging.

Murakami [27] suggests a 4-channel beam splitter with RGB sensors and an extra MSFA sensor, capturing high-resolution RGB images and multispectral images simultaneously. However, the four-sensor framework is extremely complex, and the beam splitter results in low spectral power for the MSFA sensor.

Although there have been extensive studies on optimizing MSFA for spectral reconstruction purposes, there is limited research dedicated to optimizing MSFA for the performance of generating display-ready RGB images. Only a few studies involve RGB applications of MSFA, and they primarily aim to enhance the color capturing capabilities of RGB cameras. The RGB raw images acquired using such designed spectral filter arrays are not compatible with conventional RGB ISP (Image Signal Processing) algorithms.

3. Preliminary

3.1. Multispectral Response Formation Model

Assuming that a scene is uniformly illuminated and captured by a camera equipped with a $C$-channel MSFA, the multispectral image raw response $I(u)$ at pixel location $u$ is given by

$$I(u) = \sum_{i=1}^{C} m_i(u) \int s_i(\lambda)\, l(\lambda)\, r(x, \lambda)\, \mathrm{d}\lambda, \tag{1}$$

where $x$ is the point in the scene corresponding to $u$, $s_i(\lambda)$ is the SSF of the $i$-th channel, $l(\lambda)$ is the spectral irradiance of the illuminant, $r(x, \lambda)$ is the spectral reflectance at $x$, and $m_i(u)$ is a spatial-dependent binary mask denoting the channel selection of the MSFA pattern, where $m_i(u) = 1$ indicates that the pixel location $u$ is assigned to the $i$-th spectral channel and $m_i(u) = 0$ otherwise.
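The formation model can be illustrated with a small discrete sketch in which the integral is approximated by a Riemann sum over a wavelength grid. The grid, the toy spectra, and the 2 × 2 two-channel layout below are hypothetical placeholders chosen only for readability; they are not the six-channel layout of Figure 1a.

```python
def raw_response(ssfs, illuminant, reflectance, mask_index, step_nm):
    """Discrete form of the response model for one pixel.

    ssfs        : list of C lists, SSF of each channel sampled on the grid
    illuminant  : list, spectral irradiance of the illuminant on the grid
    reflectance : list, spectral reflectance of the scene point on the grid
    mask_index  : index of the channel selected by the binary mask at this pixel
    step_nm     : wavelength step of the grid (Riemann-sum weight)
    """
    total = 0.0
    for i, ssf in enumerate(ssfs):
        mask = 1.0 if i == mask_index else 0.0  # binary mask of channel i at this pixel
        integral = sum(s * l * r for s, l, r in zip(ssf, illuminant, reflectance)) * step_nm
        total += mask * integral
    return total


def mosaic(pattern, ssfs, illuminant, scene, step_nm):
    """Single-channel raw image; `pattern` is tiled periodically over the scene."""
    ph, pw = len(pattern), len(pattern[0])
    return [
        [raw_response(ssfs, illuminant, scene[y][x], pattern[y % ph][x % pw], step_nm)
         for x in range(len(scene[0]))]
        for y in range(len(scene))
    ]


# Hypothetical toy setup: 3 wavelength samples, 2 channels, 2x2 periodic layout.
ssfs = [[1.0, 0.5, 0.0], [0.0, 0.5, 1.0]]
illuminant = [1.0, 1.0, 1.0]
pattern = [[0, 1], [1, 0]]
spectrum = [1.0, 0.5, 0.0]                      # same reflectance at every pixel
scene = [[spectrum, spectrum], [spectrum, spectrum]]
raw = mosaic(pattern, ssfs, illuminant, scene, step_nm=10.0)
```

Because every pixel sees the same reflectance here, the raw array differs from pixel to pixel only through the channel selected by the mask, which is exactly the spatial-spectral trade-off the model describes.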

3.2. The Dual-Mode Output of Proposed MSFA

To balance spectral accuracy and spatial resolution, we adopt an approach that overlays the sensor with micro-filters of six types of spectral transmittances. As a result, the captured raw image is a single-channel array with a multi-spectral filter pattern. In this pattern, each pixel is only sensitive to a specific band of the spectrum out of the six available.

Figure 1a illustrates the proposed MSFA layout, in which the channels are sequentially numbered by their peak wavelengths for clarity.

Similar to the demosaicking procedure in conventional RGB cameras that interpolates 3-channel responses from a single-channel raw array, a 6-channel multispectral image can be produced by interpolating the single-channel raw array from the proposed MSFA with a dedicated demosaicking algorithm. We refer to this procedure as “multispectral mode” hereafter.

In addition to functioning in multispectral mode, the proposed MSFA can also operate in “RGB mode” because of its innovative micro-filter layout. Taking inspiration from the Quad-Bayer CFA, we group the six types of micro-filters into three categories (R, G, and B) and organize those with comparable transmittance in neighborhoods. We refer to each of these groups as quads, as they consist of 2 × 2 pixels and can be combined into a “big pixel”, as depicted in Figure 1b. In RGB mode, the proposed MSFA generates a Bayer-pattern raw array. Conventional image processing algorithms, such as demosaicking, white balancing, and color correction, can be applied to this raw array to produce an RGB image, as shown in Figure 1c.
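The merging of each quad into a “big pixel” can be sketched as a 2 × 2 binning step. This is a minimal illustration that assumes the quad response is the plain sum of its four subpixels (an average would differ only by a constant factor); the function name `bin_quads` and the 4 × 4 tile values are hypothetical.

```python
def bin_quads(raw):
    """Sum each non-overlapping 2x2 quad of the raw array into one "big pixel"."""
    h, w = len(raw), len(raw[0])
    if h % 2 or w % 2:
        raise ValueError("raw array must have even height and width")
    return [
        [raw[y][x] + raw[y][x + 1] + raw[y + 1][x] + raw[y + 1][x + 1]
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]


# Hypothetical 4x4 raw tile: each 2x2 quad holds two subpixels of each of its
# two spectral channels, and the four quads form one Bayer cell after binning.
raw_tile = [
    [1, 2, 10, 20],
    [2, 1, 20, 10],
    [10, 20, 100, 200],
    [20, 10, 200, 100],
]
bayer = bin_quads(raw_tile)
```

The binned array has half the height and width of the raw array and can be handed to a conventional Bayer pipeline.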

Figure 3 compares the process of generating RGB images between the RGB mode of the proposed MSFA and the existing single-mode MSFA. It can be observed that the RGB image output of the proposed MSFA has higher fidelity to the original scene.

4. Methods

There have been extensive studies optimizing the arrangement of MSFA filters and spectral sensitivity functions, producing high-performance configurations. However, applying the conclusions from these studies directly to a dual-mode MSFA would be inappropriate. This is because the dual-mode MSFA is expected to produce color images in RGB mode. Its equivalent SSFs should be similar to those of a conventional RGB camera, which imposes an additional constraint on the dual-mode MSFA. As a result, it is crucial to develop a new optimization method to improve the performance of the dual-mode MSFA in both RGB and spectral modes. Thus, we developed an objective function that comprises the reconstruction error in both the multispectral and RGB modes. Furthermore, we implemented a two-stage optimization scheme to obtain the optimal configurations for the MSFA. Given that the 6-channel MSFA layout is deterministic, our focus will be on optimizing the SSFs.

4.1. Synthetic SSF

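As conducted in previous works [14,20], we construct the SSF from some prior formation models, in which a set of $K$ hyper-parameters $\mathbf{p}_i = [p_{i,1}, \dots, p_{i,K}]$ are utilized to control the exact distribution of the SSF:

$$s_i(\lambda) = f(\lambda; \mathbf{p}_i), \tag{2}$$

where $i \in \{1, \dots, 6\}$ is the index of the optimized channels. The choice and the definition of $f$ will be discussed in Section 5.1.

The SSF matrix of the MSFA is then characterized as

$$\mathbf{S} = [\mathbf{s}_1, \mathbf{s}_2, \dots, \mathbf{s}_6]^{\top}, \tag{3}$$

where $\mathbf{s}_i$ is the sampled SSF of the $i$-th channel. With a slight abuse of notation, we denote the SSF to be optimized as $\mathbf{S}(P)$ hereafter, where $P$ is the set of optimized hyper-parameters.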

4.2. Definition of the Training Samples

Two sets of spectral response data, the calibration sample set $\mathbf{E}_c$ and the training sample set $\mathbf{E}_t$, were employed in the optimization scheme according to different computational objectives. $\mathbf{E}_c$ is a set of spectral responses, which is used to calculate the pseudo-inverse matrix for spectral reconstruction in the multispectral mode, and the color-correction matrix in the RGB mode. $\mathbf{E}_t$ consists of small-sized spectral images, referred to as “image blocks”, which do not overlap with $\mathbf{E}_c$. The specific composition of the training sample sets will be discussed in Section 5.3.

4.3. Objective Function of SSF Optimization

In order to find the optimal SSFs for the proposed MSFA, both the spectral accuracy of the multispectral imaging mode and the color error of the RGB mode should be taken into consideration. For the multispectral mode, the spectral root-mean-square error (RMSE) is chosen to evaluate the spectral reproducibility of the reconstructed spectral images. For the RGB mode, it is more appropriate to evaluate color differences, as images captured in this mode are primarily consumed for visual tasks. To this end, we develop an objective function incorporating the two aspects to ensure both high spectral recovery accuracy and faithful RGB color reproduction.

4.3.1. Objective Function for Multispectral Mode

For the sake of simplicity, the mosaic and demosaic processes are temporarily disregarded in the following derivation. Under this condition, the captured image is a full-resolution multi-channel image rather than the single-channel raw image mentioned in Section 3.1. The imaging process of the multispectral mode, as presented in Equation (1), can be represented in matrix form as

$$\mathbf{I}_{ms} = \mathbf{S}\,\mathbf{E}, \tag{4}$$

where $\mathbf{I}_{ms}$ is the output raw array of the multispectral mode, followed by an img2column operator that reshapes an $H \times W \times 6$ image into a $6 \times N$ array, where $N = H \times W$ is the spatial size of the image. $\mathbf{S}$ is the $6 \times M$ SSF matrix of the MSFA defined by Equation (3), where $M$ is the spectral resolution of the SSF. $\mathbf{E}$ denotes the $M \times N$ spectral irradiance image of the scene, which is the element-wise product of the spectral reflectance of the scene and the illuminant spectral power distribution (SPD).

To alleviate the computational burden of optimization iterations, the pseudo-inverse method is employed for spectral reconstruction, in which the spectral irradiance image is reconstructed as

$$\hat{\mathbf{E}} = \mathbf{Q}\,\mathbf{I}_{ms}, \tag{5}$$

where $\mathbf{Q}$ is the pseudo-inverse matrix that has been calculated from the calibration set $\mathbf{E}_c$ beforehand:

$$\mathbf{Q} = \mathbf{E}_c\,\mathbf{I}_c^{+}, \tag{6}$$

where $\mathbf{I}_c = \mathbf{S}\,\mathbf{E}_c$ is the multispectral responses of $\mathbf{E}_c$, and $(\cdot)^{+}$ denotes the Moore-Penrose pseudo-inverse.

With the pseudo-inverse matrix $\mathbf{Q}$, the estimated spectral irradiance $\hat{\mathbf{E}}_t$ of the training sample set $\mathbf{E}_t$ can be obtained by

$$\hat{\mathbf{E}}_t = \mathbf{Q}\,\mathbf{S}\,\mathbf{E}_t. \tag{7}$$

Finally, the RMSE loss of the multispectral mode can be expressed by the L2-norm of the difference between the estimated spectral irradiance $\hat{\mathbf{E}}_t$ and the training set $\mathbf{E}_t$ as

$$\mathcal{L}_{ms}(P) = \frac{1}{\sqrt{MN}} \left\lVert \hat{\mathbf{E}}_t - \mathbf{E}_t \right\rVert_2. \tag{8}$$
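The pseudo-inverse reconstruction and the RMSE loss can be sketched in plain Python. This is a toy illustration under stated assumptions, not the implementation used in the experiments: the helper names (`matmul`, `inverse`, `reconstruction_matrix`, `rmse`) are hypothetical, the pseudo-inverse is computed in its right-inverse form (valid when the calibration responses have full row rank), and the data use 2 channels and 3 wavelength samples instead of 6 channels and $M$ samples.

```python
def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def inverse(a):
    """Gauss-Jordan inverse with partial pivoting for a small square matrix."""
    n = len(a)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[c], aug[p] = aug[p], aug[c]
        pivot = aug[c][c]
        aug[c] = [v / pivot for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


def reconstruction_matrix(S, E_c):
    """Q = E_c * pinv(S E_c), using pinv(I) = I^T (I I^T)^-1."""
    I_c = matmul(S, E_c)                      # calibration responses
    I_ct = transpose(I_c)
    pinv = matmul(I_ct, inverse(matmul(I_c, I_ct)))
    return matmul(E_c, pinv)


def rmse(a, b):
    sq = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return (sum(sq) / len(sq)) ** 0.5


# Toy data: rows of S are channels, columns of E_c / E_t are spectra.
S = [[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]]
E_c = [[1.0, 0.0, 1.0], [0.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
Q = reconstruction_matrix(S, E_c)
E_t = [[2.0], [0.0], [3.0]]                   # lies in the span of the calibration spectra
E_hat = matmul(Q, matmul(S, E_t))
loss_ms = rmse(E_hat, E_t)
```

In this toy case the training spectrum lies in the subspace spanned by the calibration spectra, so the reconstruction error is numerically zero; a spectrum outside that subspace is recovered only approximately, which is why the composition of the calibration set matters.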

4.3.2. Objective Function for RGB Mode

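Since the mosaic and demosaic processes are disregarded here, the correlation between RGB images and multispectral images can be simply characterized by a convert matrix:

$$\mathbf{I}_{rgb} = \mathbf{A}\,\mathbf{I}_{ms}, \tag{9}$$

where $\mathbf{I}_{rgb}$ is the $3 \times N$ RGB response matrix, $\mathbf{I}_{ms}$ is the $6 \times N$ multispectral response matrix, and $\mathbf{A}$ is a $3 \times 6$ convert matrix. Referring to Figure 1a,b, the convert matrix for the proposed MSFA adds together the two spectral channels assigned to each of the R, G, and B groups.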
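For illustration only, one form the convert matrix could take is written out below. It assumes that the six channels are numbered by ascending peak wavelength (channels 1 and 2 in the blue group, 3 and 4 in the green group, 5 and 6 in the red group), that the rows are ordered R, G, B, and that merging is an unweighted sum. The symbol $\mathbf{A}$, the row ordering, and the unit weights are assumptions of this sketch; Figure 1a,b remains the authoritative reference for the actual assignment.

```latex
\mathbf{A} =
\begin{bmatrix}
0 & 0 & 0 & 0 & 1 & 1 \\
0 & 0 & 1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 & 0 & 0
\end{bmatrix}
```

Any constant scaling of the rows (for example, averaging instead of summing) would be absorbed by the subsequent 3 × 3 color correction.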

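For the RGB mode, the standard formula of CIEDE2000 ($\Delta E_{00}$) is adopted to measure the color difference between the estimated RGB values and the ground truth. Accordingly, the device-dependent RGB responses should first be converted into the device-independent CIE1931 XYZ tristimulus values for colorimetric calculation, and the correlation of the XYZ tristimulus values $\mathbf{X}$ and the multispectral image $\mathbf{I}_{ms}$ can be given as

$$\mathbf{X} = \mathbf{T}\,\mathbf{I}_{rgb} = \mathbf{T}\mathbf{A}\,\mathbf{I}_{ms} = \mathbf{G}\,\mathbf{I}_{ms}, \tag{11}$$

where $\mathbf{T}$ is the $3 \times 3$ color space conversion matrix, and $\mathbf{G}$ is the product of $\mathbf{T}$ and the $3 \times 6$ convert matrix $\mathbf{A}$.

Considering that the ground-truth XYZ tristimulus is obtained by integrating the spectral irradiance with the color matching function of the CIE1931 standard observer, Equation (11) can then be rewritten as

$$\mathbf{\Phi}\,\mathbf{E} = \mathbf{T}\mathbf{A}\mathbf{S}\,\mathbf{E}, \tag{12}$$

where $\mathbf{\Phi}$ is the color matching function of the CIE1931 standard observer, $\mathbf{S}$ is the SSF matrix, and $\mathbf{E}$ is the spectral irradiance image.

The color space conversion matrix can then be derived by minimizing the mean-square error between the ground-truth XYZ values and the reconstructed ones via the pseudo-inverse method on the calibration set $\mathbf{E}_c$:

$$\mathbf{T} = \mathbf{\Phi}\,\mathbf{E}_c \left(\mathbf{A}\mathbf{S}\,\mathbf{E}_c\right)^{+}. \tag{13}$$

The XYZ tristimulus values $\hat{\mathbf{X}}_t$ of the training sample set $\mathbf{E}_t$ are then obtained by

$$\hat{\mathbf{X}}_t = \mathbf{T}\mathbf{A}\mathbf{S}\,\mathbf{E}_t. \tag{14}$$

Subsequently, the loss of the RGB mode is characterized by the CIEDE2000 color difference between the estimated XYZ tristimulus and the ground-truth XYZ tristimulus:

$$\mathcal{L}_{rgb}(P) = \Delta E_{00}\!\left(\hat{\mathbf{X}}_t,\ \mathbf{\Phi}\,\mathbf{E}_t\right). \tag{15}$$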

4.3.3. Overall Objective Function

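The overall objective function of the dual-mode MSFA can be constructed by

$$\mathcal{L}(P) = \mathcal{L}_{ms}(P) + \alpha\,\mathcal{L}_{rgb}(P), \tag{16}$$

where $\mathcal{L}_{ms}$ and $\mathcal{L}_{rgb}$ are the losses of the multispectral mode and the RGB mode, respectively, and $\alpha$ is an adjustable scale factor that trades off the two terms.

The previous derivations were conducted using full-resolution multispectral images. However, in practical situations, imaging sensors utilize only one microfilter per pixel, resulting in the capture of single-channel raw images. Consequently, the objective function should include functions representing both the mosaic pattern of the filter array and the demosaicking algorithm. With the reintroduction of the mosaic and demosaic processes, Equation (16) can be rewritten as

$$\mathcal{L}(P) = \frac{1}{\sqrt{MN}} \left\lVert \mathbf{Q}\,\tilde{\mathbf{I}}_{ms} - \mathbf{E}_t \right\rVert_2 + \alpha\,\Delta E_{00}\!\left(\mathbf{T}\,\tilde{\mathbf{I}}_{rgb},\ \mathbf{\Phi}\,\mathbf{E}_t\right), \tag{17}$$

where $\tilde{\mathbf{I}}_{ms}$ and $\tilde{\mathbf{I}}_{rgb}$ are the multispectral and RGB responses of the training sample set $\mathbf{E}_t$ after the mosaic algorithm $\mathcal{M}$ and the demosaic algorithm $\mathcal{D}$ of the respective mode have been applied, $\mathbf{Q}$ is the pseudo-inverse reconstruction matrix, $\mathbf{T}$ is the color space conversion matrix, and $\mathbf{\Phi}$ is the CIE1931 color matching function. The guided filter (GF) method [26,28] was adopted for the demosaic of the multispectral mode. For lower demosaic error, the third spectral channel, which composes a part of the green channel in the RGB mode with a higher sampling rate, was assigned to be the principal channel in the GF method. On the other hand, for the RGB mode, bilinear interpolation was used for demosaic for the sake of computational convenience.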

4.4. Two-Stage SSF Optimization

The SSF for the proposed MSFA is optimized in a coarse-to-fine scheme. In the coarse optimization stage, the stochastic gradient descent (SGD) algorithm is implemented to efficiently find a good solution. This solution serves as the initial estimation for the second refined optimization stage. Due to the intrinsic non-differentiability of the CIEDE2000 color difference in Equation (17), which hinders SGD from calculating the gradient of the objective with respect to the hyper-parameters in SSF, we slightly modify Equation (17) and replace the CIEDE2000 metric with a differentiable alternative. The second stage implements a refined optimization with a direct search algorithm to search for the optimal solution in a compact sub-space around the initial estimation from the first stage.

4.4.1. Coarse Optimization

To implement gradient-based optimization algorithms, an end-to-end differentiable model is required. This model builds up a forward graph from the trainable parameters, specifically the hyper-parameters in the SSF formation formula in our case, to the final objective. To this end, we temporarily resort to the L2-norm between the ground truth and the reconstructed spectral irradiance to approximate their CIEDE2000 color difference.

Specifically, in the coarse optimization stage, the hyper-parameters in the SSF are updated in an iterative manner: in each step, the gradient of the objective with respect to the hyper-parameters in the SSF is calculated by chain-rule back propagation, and the hyper-parameters for the next step are updated via the SGD algorithm, as shown in Algorithm 1:

| Algorithm 1: Stochastic Gradient Descent (SGD) |

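Input: Training data $\mathbf{E}_t$, learning rate η, initialization $P_0$

Output: Hyper-parameter vector $P_c$

repeat

forward: compute the objective $\mathcal{L}(P)$ on the training data;

backward: calculate gradient $\nabla_P \mathcal{L}$ via backpropagation;

update: $P \leftarrow P - \eta\,\nabla_P \mathcal{L}$;

until stopping criterion is met; |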
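The forward, backward, and update steps of the coarse stage can be sketched as a short loop. This toy version deliberately swaps two things: the gradient is obtained by central finite differences instead of backpropagation, and the descent is deterministic (full-batch) rather than stochastic. The quadratic `toy_loss` and its two parameters are hypothetical stand-ins for the differentiable surrogate objective and the SSF hyper-parameters.

```python
def numerical_gradient(loss, params, eps=1e-6):
    """Central finite-difference gradient (stand-in for backpropagation)."""
    grad = []
    for k in range(len(params)):
        up = list(params)
        up[k] += eps
        down = list(params)
        down[k] -= eps
        grad.append((loss(up) - loss(down)) / (2.0 * eps))
    return grad


def gradient_descent(loss, init, lr, max_steps=1000, tol=1e-10):
    """Repeat forward / backward / update until the loss stops changing."""
    params = list(init)
    for _ in range(max_steps):
        current = loss(params)                                        # forward
        grad = numerical_gradient(loss, params)                       # backward
        params = [p - lr * g for p, g in zip(params, grad)]           # update
        if abs(current - loss(params)) < tol:                         # stopping criterion met
            break
    return params


def toy_loss(p):
    """Hypothetical smooth objective with its minimum at (550, 40)."""
    return (p[0] - 550.0) ** 2 + 4.0 * (p[1] - 40.0) ** 2


coarse_estimate = gradient_descent(toy_loss, init=[500.0, 60.0], lr=0.1)
```

The returned vector plays the role of the coarse-stage output that seeds the refined optimization.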

4.4.2. Refined Optimization

The refined optimization produces the final optimal SSFs $\mathbf{S}(P^{*})$. It is achieved by updating the hyper-parameters starting from the hyper-parameter vector $P_c$ obtained in the coarse optimization.

Considering the high dimensionality of the hyper-parameters and the complexity of calculating $\Delta E_{00}$ in Equation (17), a direct search algorithm, the second-order oscillating particle swarm optimization (SOPSO), is adopted for the refined optimization, as shown in Algorithm 2. The algorithm improves the classic PSO by adding a degenerative oscillating term to avoid becoming trapped in a local optimum:

| Algorithm 2: Second-order oscillating particle swarm optimization (SOPSO) |

Input: Training data , initialization , max iteration epoch N, swarm size k

Output: Hyper-parameter vector

repeat

for ;

;

end

;

if ;

;

end

until; |
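A second-order oscillating PSO can be sketched as follows. The velocity update below follows the form commonly given for this family of algorithms, in which each attraction term also depends on the particle's previous position through oscillation factors (`xi1`, `xi2`); that form, the constant oscillation factors, the box-shaped initialization around the coarse estimate, and the `toy_loss` are assumptions of this sketch rather than details taken from Algorithm 2. The default coefficients mirror the values reported in Section 5.3, while the swarm size is reduced for the toy problem.

```python
import random


def sopso(loss, init, radius, swarm_size=20, epochs=30,
          c1=1.5, c2=1.5, omega=0.8, xi1=0.3, xi2=0.3, seed=0):
    """Minimise `loss` near `init` with a second-order oscillating PSO sketch."""
    rng = random.Random(seed)
    dim = len(init)
    # Swarm initialised in a compact box around the coarse-stage estimate.
    pos = [[init[d] + rng.uniform(-radius, radius) for d in range(dim)]
           for _ in range(swarm_size)]
    pos[0] = list(init)                       # keep the coarse estimate in the swarm
    prev = [list(p) for p in pos]             # previous positions (second-order term)
    vel = [[0.0] * dim for _ in range(swarm_size)]
    pbest = [list(p) for p in pos]
    pbest_val = [loss(p) for p in pos]
    g = min(range(swarm_size), key=lambda i: pbest_val[i])
    gbest, gbest_val = list(pbest[g]), pbest_val[g]

    for _ in range(epochs):
        for i in range(swarm_size):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Oscillating attraction terms use both current and previous position.
                cognitive = pbest[i][d] - (1.0 + xi1) * pos[i][d] + xi1 * prev[i][d]
                social = gbest[d] - (1.0 + xi2) * pos[i][d] + xi2 * prev[i][d]
                vel[i][d] = omega * vel[i][d] + c1 * r1 * cognitive + c2 * r2 * social
            prev[i] = list(pos[i])
            pos[i] = [x + v for x, v in zip(pos[i], vel[i])]
            val = loss(pos[i])
            if val < pbest_val[i]:            # update personal best
                pbest[i], pbest_val[i] = list(pos[i]), val
                if val < gbest_val:           # update global best
                    gbest, gbest_val = list(pos[i]), val
    return gbest, gbest_val


def toy_loss(p):
    """Hypothetical objective with its minimum at (1, 1, 1)."""
    return sum((x - 1.0) ** 2 for x in p)


coarse = [0.5, 1.5, 0.8]
best, best_val = sopso(toy_loss, coarse, radius=0.5)
```

Because the coarse estimate is kept in the initial swarm and the global best is only ever replaced by a better candidate, the returned solution is never worse than the starting point. Being a direct search, the loop needs only loss evaluations, so a non-differentiable metric such as CIEDE2000 can be used unchanged.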

5. Experiments

5.1. Formation of Synthetic SSF

Two types of parameterized SSFs were introduced for optimization. The first is based on the Gaussian function, following previous works [14,15,20]. The SSF of the Gaussian-based spectral channel is defined by two parameters, the central wavelength and the bandwidth, as

$$s_i(\lambda) = g(\lambda; \mu_i, \sigma_i), \tag{18}$$

where $g$ denotes the Gaussian function, and $\mu_i$ and $\sigma_i$ are the central wavelength and the bandwidth of the $i$-th channel, respectively. As the default values for the optimization, the central wavelengths of the six channels were uniformly distributed in the visible range, and their bandwidths were assigned to be 40 nm.
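A Gaussian-based SSF initialization can be sketched as below. Several details are assumptions made for this illustration: the bandwidth is interpreted as the full width at half maximum (FWHM), each SSF is normalized to a unit peak, the visible range is taken as 400 to 700 nm on a 10 nm grid, and the six centers are placed at the midpoints of six equal sub-bands.

```python
import math


def gaussian_ssf(wavelengths, center_nm, bandwidth_nm):
    """Unit-peak Gaussian SSF; bandwidth is interpreted as FWHM (an assumption)."""
    sigma = bandwidth_nm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    return [math.exp(-0.5 * ((w - center_nm) / sigma) ** 2) for w in wavelengths]


wavelengths = list(range(400, 701, 10))       # hypothetical 400-700 nm grid, 10 nm step
# One possible reading of "uniformly distributed": centres at 425, 475, ..., 675 nm.
centers = [400 + (k + 0.5) * 300 / 6 for k in range(6)]
ssf_matrix = [gaussian_ssf(wavelengths, c, 40.0) for c in centers]
```

Each row of `ssf_matrix` is one channel, so the result has the 6 × M shape of the SSF matrix, with the central wavelength and bandwidth of every channel available as the two trainable hyper-parameters.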

The other type is defined by the principal component analysis (PCA) results of a set of real-camera SSFs from Jiang [29], in which the first five components are applied to construct the spectral channel as

$$s_i(\lambda) = \sum_{n=1}^{5} w_{i,n}\, c_n(\lambda), \tag{19}$$

where $c_n(\lambda)$ is the $n$-th principal component of the PCA results, and $w_{i,n}$ is the weight of the $n$-th component for the $i$-th spectral channel. To balance the continuity of SSFs and computational complexity, the first five principal components were selected in the optimization process. The default $w_{i,n}$ was assigned to be the average of the weights for the 84 RGB channels in Jiang [29].
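The PCA-based construction amounts to a weighted sum of basis curves, as the short sketch below shows. The five `components` are made-up stand-ins on a 4-sample grid, not the principal components of the measured camera SSFs, and the function name `pca_ssf` is hypothetical.

```python
def pca_ssf(weights, components):
    """Weighted sum of principal components sampled on a common wavelength grid."""
    if len(weights) != len(components):
        raise ValueError("one weight per component is required")
    n_samples = len(components[0])
    return [sum(w * comp[k] for w, comp in zip(weights, components))
            for k in range(n_samples)]


# Hypothetical stand-ins for five principal components on a 4-sample grid.
components = [
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 0.5, -0.5, -1.0],
    [1.0, -1.0, -1.0, 1.0],
    [0.5, -1.0, 1.0, -0.5],
    [0.0, 1.0, -1.0, 0.0],
]
weights = [0.5, 0.2, 0.1, 0.0, 0.0]           # the five trainable weights of one channel
ssf = pca_ssf(weights, components)
```

With this parameterization each channel has five hyper-parameters instead of the two used by the Gaussian model, and the resulting curve is confined to the subspace spanned by the chosen components.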

5.2. Methods for Comparison

To investigate the effect of the dual-mode pattern design on the performance of the MSFA, the single-mode imaging cameras, namely the MSFA-based multispectral cameras and the CFA-based RGB cameras, were adopted for comparisons. The binary-tree-based MSFAs by Monno [20] and Li [21] were taken into consideration as representatives for regularly sampling MSFAs. These two methods differ in the selection of SSFs: the former applies Gaussian-function-based SSFs, and the latter uses SSFs of real commercial filters. The MSFAs by Wu [15] and Brauers [14] were also adopted to show the performance of traditional MSFAs.

For the RGB mode, the SSFs of a Nikon D3X measured in our previous work [30], a Canon 60D, and a SONY NEX-5N measured by Jiang [29] were selected, representing the commercial RGB cameras.

5.3. Implementation Details

The gradient calculation and hyper-parameter update in the coarse stage are implemented in PyTorch. The base learning rate for the SGD is set to $2 \times 10^{-4}$, with a weight decay of $10^{-9}$. We run the training for 500 epochs with the cosine-with-restarts learning rate adjustment scheduler, where the restart cycle and restart gamma are empirically set at 100 and 0.8, respectively.
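The learning rate schedule described above can be sketched in a few lines. The exact scheduler implementation is not specified, so this sketch assumes that the rate follows a half-cosine from its peak down to a floor within each cycle and that the “restart gamma” multiplies the peak at every restart; the function name and the `min_lr` floor are hypothetical.

```python
import math


def cosine_with_restarts(epoch, base_lr=2e-4, cycle=100, gamma=0.8, min_lr=0.0):
    """Learning rate at a 0-based epoch under a cosine schedule with decayed restarts."""
    restarts = epoch // cycle                 # number of completed cycles
    t = (epoch % cycle) / cycle               # position inside the current cycle, in [0, 1)
    peak = base_lr * (gamma ** restarts)      # each restart begins gamma times lower
    return min_lr + 0.5 * (peak - min_lr) * (1.0 + math.cos(math.pi * t))


schedule = [cosine_with_restarts(e) for e in range(500)]
```

Under these assumptions the rate starts at the base value, decays smoothly within each 100-epoch cycle, and jumps back to 0.8 times the previous peak at each restart, giving five cycles over the 500 epochs.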

For the refined stage, the SOPSO runs 30 epochs with a swarm size of 200, and the acceleration coefficients c1 and c2 and the inertia weight ω are set to 1.5, 1.5, and 0.8, respectively.

The optimization was implemented on the ICVL [31] dataset, in which the spectral images were randomly divided into two groups for model training and evaluation, respectively.

The calibration sample set contains 200 pixels randomly selected from the training group. On the other hand, in order to reduce the computational cost of the objective function, 100 spectral image blocks with 96 × 96 spatial resolution were employed as the training sample set, which were generated by randomly cropping the spectral images in the training group of the dataset.

Furthermore, the intra-dataset accuracy results of both imaging modes were evaluated on the evaluation group, while the inter-dataset accuracy results were tested on the Harvard [32] dataset.

6. Results and Discussion

6.1. Optimization Results

Based on the configuration in Section 5.3, the two-stage optimization scheme was implemented on the ICVL dataset, resulting in the optimal SSFs for the two types of SSFs. The initial estimation from the coarse stage and the final optimal SSFs are shown in Figure 4.

The accuracy results of the optimal dual-mode MSFA camera and the selected multispectral cameras are listed in Table 1. The results indicate that the proposed dual-mode camera achieves comparable accuracy to the single-mode MSFA-based multispectral cameras. Specifically, the Gaussian-based camera performs best in terms of RMSE. This can be attributed to the inclusion of the RMSE-based term in the cost function for the multispectral mode. Similarly, the statistical results of its CIEDE2000 color difference display the highest color accuracy, except for the best 25% section, possibly due to the constraint of RGB mode. The AE values of the proposed MSFA are marginally lower compared to the comparisons, possibly due to the uneven spatial sampling of the proposed MSFA.

It is worth noting that the method proposed by Brauers demonstrates good performance in terms of AE. The MSFA of this method arranges all six spectral channels in a simple side-by-side configuration, resulting in a higher spatial sampling rate compared to other methods, except for the green channel. It is worth mentioning as well that our method using PCA-based SSFs and Li’s method exhibit significantly inferior performance, mainly due to stronger optimization constraints. The PCA-based method derives its SSFs from real camera SSFs, whereas Li’s method selects its SSFs from a set of commercial color filters.

To assess the generalization capability of the dual-mode camera, we evaluated its inter-dataset accuracy using the Harvard dataset, as presented in Table 2. As anticipated, the accuracy of the proposed MSFA experiences a slight degradation, while the Gaussian-based MSFA remains the second-best performer across most metrics. The results suggest that even though the proposed MSFA is designed for dual-mode imaging, its performance in multispectral imaging is still comparable to cameras specialized for dedicated multispectral applications.

Also, we evaluated the RGB image accuracy of the dual-mode MSFA alongside the RGB comparisons. The intra-dataset results are listed in Table 3. It can be observed that the advantages of RGB image accuracy for the proposed method are noteworthy compared to the commercial RGB cameras involved. The only exception is the best 25% section, likely attributed to spectral errors introduced by the direct combination of the subpixels for RGB output. The details of the pixel combination error are discussed in Section 6.2.

Overall, the evaluation results suggest that the dual-mode MSFA exhibits an acceptable level of performance degradation in spectral image reconstruction compared to existing MSFAs specialized for multispectral imaging. Moreover, the RGB mode performs favorably when compared to commercial RGB cameras. The proposed MSFA is verified to have a good balance between multispectral and RGB imaging.

6.2. Validity of Channel Combination in RGB Mode

As noted above, the pixel combination in the RGB mode introduces an error in the RGB output, because the equivalent SSF of a single RGB channel is constructed by adding two spectral channels together. For example, compared to the ideal green pixel, the subpixels of channels 3 and 4 (referring to Figure 1a) in the proposed MSFA lose the spectral information of channels 4 and 3, respectively, when combined in the RGB mode. This results in differences between the merged RGB pixel response and the ideal RGB response.

Therefore, it is critical to evaluate the actual loss caused by the pixel combination. To this end, the image accuracy of our method was compared with that produced by the conventional RGB CFA.

Figure 5 illustrates the different approaches to delivering full-resolution RGB images. Approach (a) is the demosaic-free RGB imaging method, in which every pixel records the responses of the red, green, and blue channels. It is commonly used in tri-sensor RGB cameras that utilize beam splitters. Approach (b) denotes the most commonly used Bayer CFA. The channel SSFs of (a) and (b) are assigned the same as the SSFs of RGB mode by Equation (12). On the other hand, approaches (c) and (d) both denote the RGB output of the dual-mode MSFA. Their difference is that the multispectral channels in (c) are demosaicked before pixel combination and then rearranged to produce a Bayer CFA-like output, as illustrated in Figure 5c. Approach (d) is the method we proposed above, which directly combines the channels.

The intra-dataset results, measured by the CIEDE2000 color difference, are presented in Table 4. It is notable that, compared to the common Bayer CFA, the performance of the proposed method has only trivial degradation. This indicates that the direct combination of the spectral channels does not cause unacceptable color errors and is thus appropriate for the RGB image generation of the dual-mode MSFA. Additionally, it is worth mentioning that the rearrange-based method performed slightly better than the Bayer CFA, which might be due to the additional spatial information brought in by the preceding multispectral demosaicking step. Though it demands additional computing resources, this method could serve as an optional module for applications that prioritize high accuracy, such as image-based chromaticity measurement and the collection of spectral-RGB image databases.

6.3. Performance Degradation in Multispectral Mode

The layout of the dual-mode MSFA is not optimal for spectral reconstruction compared to the single-purpose MSFAs. This is mainly due to two reasons. Firstly, due to the constraints of the RGB mode, the sampling rates of spectral channels in different spatial directions are not identical in multispectral mode. Secondly, the optimization uses a loss function that includes the loss of the RGB mode in addition to that of the multispectral mode. In order to examine the impact on spectral reconstruction accuracy due to the ability to generate RGB output, we compared the proposed MSFA with its binary tree-based alternative, which has an equivalent number of channels, as illustrated in Figure 6. An equal number of pixels were assigned to each channel to ensure a reasonable comparison.

Table 5 lists the RMSE results for the comparison. The section “Binary” denotes the results of a binary-tree-based MSFA applying the same SSFs as the proposed MSFA. It is evident that the SSFs are suboptimal for this configuration, as it leads to inferior accuracy results. The section “Binary (opt.)” represents the results of the binary-tree-based MSFA, in which the SSFs are optimized following a similar approach as existing studies on single-mode MSFA optimization. The optimization considered only the spectral error as the loss function, resulting in improved performance. To a certain extent, the performance gap between the optimal binary-tree-based MSFA and the proposed MSFA reflects the trade-off involved in converting a spectral imaging MSFA to a dual-mode MSFA. Nevertheless, given the favorable performance of the dual-mode MSFA, we consider the trade-off to be practical and reasonable. Furthermore, the dual-mode camera can enhance the user friendliness of snapshot multispectral cameras and expand their application range.

7. Conclusions

A first-of-its-kind dual-mode MSFA-based multispectral camera is proposed. Besides the ordinary output of a multispectral image, the dual-mode camera is able to generate RGB color images by combining the six spectral channels in pairs. To assess the performance of both modes of the MSFA-based camera, the accuracy of its reconstructed spectral image and RGB image were compared with the existing MSFA-based cameras and commercial RGB cameras, respectively. Evaluation results indicate that both modes of the proposed MSFA achieve comparable performance with the existing methods. Furthermore, two additional experiments were conducted to estimate the accuracy loss associated with dual-mode output. The results suggest that, compared to the optimal MSFA and RGB CFA layouts, the accuracy trade-off of the proposed method is practical and reasonable. The novel design of dual-mode MSFA can enhance the user friendliness of snapshot multispectral cameras and expand their application range.

Author Contributions

Conceptualization, Z.Y. and H.X.; methodology, Z.Y.; software, Z.Y.; validation, Z.Y., Y.H. and M.Y.; formal analysis, Z.Y.; investigation, Z.Y.; resources, Z.Y.; data curation, Z.Y. and Y.H.; writing—original draft preparation, Z.Y.; writing—review and editing, H.X.; visualization, Z.Y.; supervision, H.X.; funding acquisition, H.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the Central Universities, grant number S20220156.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, B.; Song, S.; Gong, W.; Cao, X.; He, D.; Chen, Z.; Lin, X.; Li, F.; Sun, J. Color Restoration for Full-Waveform Multispectral LiDAR Data. Remote Sens. 2020, 12, 593.

- Liang, W.; Hao, W.; Jin, H.; Xie, K. Color and Spectrum Dual-Fidelity Image Codec—A New Multispectral Image Codec Based on Color Space Values, Visual Trigonometric Curves and Principal Component Analysis to Improve Colorimetric and Spectral Accuracy. Spectrosc. Lett. 2021, 54, 395–404.

- Akkoyun, F. Inexpensive Multispectral Imaging Device. Instrum. Sci. Technol. 2022, 50, 543–559.

- Ma, X.; Tan, M.; Zhang, S.; Liu, S.; Sun, J.; Han, Y.; Li, Q.; Yang, Y. A Snapshot Near-Infrared Hyperspectral Demosaicing Method with Convolutional Neural Networks in Low Illumination Environment. Infrared Phys. Technol. 2023, 129, 104510.

- Mei, L.; Jung, C. Low Light Image Enhancement by Multispectral Fusion and Convolutional Neural Networks. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 203–209.

- Zhang, J.; Yao, P.; Wu, H.; Xin, J.H. Automatic Color Pattern Recognition of Multispectral Printed Fabric Images. J. Intell. Manuf. 2023, 34, 2747–2763.

- Ding, L.; Wang, Y.; Laganière, R.; Huang, D.; Fu, S. Convolutional Neural Networks for Multispectral Pedestrian Detection. Signal Process. Image Commun. 2020, 82, 115764.

- Paoletti, M.E.; Haut, J.M.; Plaza, J.; Plaza, A. Deep Learning Classifiers for Hyperspectral Imaging: A Review. ISPRS J. Photogramm. Remote Sens. 2019, 158, 279–317.

- Chen, B.; Shi, S.; Sun, J.; Chen, B.; Guo, K.; Du, L.; Yang, J.; Xu, Q.; Song, S.; Gong, W. Using HSI Color Space to Improve the Multispectral Lidar Classification Error Caused by Measurement Geometry. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3567–3579.

- Zhao, Y.; Zhang, X.; Feng, W.; Xu, J. Deep Learning Classification by ResNet-18 Based on the Real Spectral Dataset from Multispectral Remote Sensing Images. Remote Sens. 2022, 14, 4883.

- Lapray, P.-J.; Wang, X.; Thomas, J.-B.; Gouton, P. Multispectral Filter Arrays: Recent Advances and Practical Implementation. Sensors 2014, 14, 21626–21659.

- Mengu, D.; Tabassum, A.; Jarrahi, M.; Ozcan, A. Snapshot Multispectral Imaging Using a Diffractive Optical Network. Light Sci. Appl. 2023, 12, 86.

- Ramanath, R.; Snyder, W.E.; Qi, H. Mosaic Multispectral Focal Plane Array Cameras. In Proceedings of the Infrared Technology and Applications XXX, SPIE, Orlando, FL, USA, 30 August 2004; Volume 5406, pp. 701–712.

- Brauers, J.; Aach, T. A Color Filter Array Based Multispectral Camera. In 12. Workshop Farbbildverarbeitung, Ilmenau. 2006, pp. 5–6. Available online: https://www.lfb.rwth-aachen.de/bibtexupload/pdf/BRA06a.pdf (accessed on 17 July 2023).

- Wu, R.; Li, Y.; Xie, X.; Lin, Z. Optimized Multi-Spectral Filter Arrays for Spectral Reconstruction. Sensors 2019, 19, 2905.

- Miao, L.; Qi, H.; Snyder, W.E. A Generic Method for Generating Multispectral Filter Arrays. In Proceedings of the 2004 International Conference on Image Processing, ICIP ’04, Singapore, 24–27 October 2004; Volume 5, pp. 3343–3346.

- Miao, L.; Qi, H. The Design and Evaluation of a Generic Method for Generating Mosaicked Multispectral Filter Arrays. IEEE Trans. Image Process. 2006, 15, 2780–2791.

- Sun, B.; Zhao, Z.; Xie, D.; Yuan, N.; Yu, Z.; Chen, F.; Cao, C.; de Dravo, V.W. Sparse Spectral Signal Reconstruction for One Proposed Nine-Band Multispectral Imaging System. Mech. Syst. Signal Process. 2020, 141, 106627.

- Hounsou, N.; Sanda Mahama, A.T.; Gouton, P. Extension of Luminance Component Based Demosaicking Algorithm to 4- and 5-Band Multispectral Images. Array 2021, 12, 100088.

- Monno, Y.; Kikuchi, S.; Tanaka, M.; Okutomi, M. A Practical One-Shot Multispectral Imaging System Using a Single Image Sensor. IEEE Trans. Image Process. 2015, 24, 3048–3059.

- Li, Y.; Majumder, A.; Zhang, H.; Gopi, M. Optimized Multi-Spectral Filter Array Based Imaging of Natural Scenes. Sensors 2018, 18, 1172.

- Li, S.-X. Superiority of Optimal Broadband Filter Sets under Lower Noise Levels in Multispectral Color Imaging. Color Res. Appl. 2021, 46, 783–790.

- Wang, X.; Thomas, J.-B.; Hardeberg, J.Y.; Gouton, P. Multispectral Imaging: Narrow or Wide Band Filters? J. Int. Colour Assoc. 2014, 12, 44–51.

- Park, C.; Kang, M.G. Color Restoration of RGBN Multispectral Filter Array Sensor Images Based on Spectral Decomposition. Sensors 2016, 16, 719.

- Cao, Y.; Zhao, B.; Tong, X.; Chen, J.; Yang, J.; Cao, Y.; Li, X. Data-Driven Framework for High-Accuracy Color Restoration of RGBN Multispectral Filter Array Sensors under Extremely Low-Light Conditions. Opt. Express 2021, 29, 23654–23670.

- Jee, S.; Kang, M.G. Sensitivity Improvement of Extremely Low Light Scenes with RGB-NIR Multispectral Filter Array Sensor. Sensors 2019, 19, 1256.

- Murakami, Y.; Yamaguchi, M.; Ohyama, N. Hybrid-Resolution Multispectral Imaging Using Color Filter Array. Opt. Express 2012, 20, 7173–7183.

- Monno, Y.; Tanaka, M.; Okutomi, M. Multispectral Demosaicking Using Guided Filter. In Proceedings of the Digital Photography VIII, SPIE, Burlingame, CA, USA, 24 January 2012; Volume 8299, pp. 204–210.

- Jiang, J.; Liu, D.; Gu, J.; Susstrunk, S. What Is the Space of Spectral Sensitivity Functions for Digital Color Cameras? In Proceedings of the 2013 IEEE Workshop on Applications of Computer Vision (WACV), Clearwater Beach, FL, USA, 15–17 January 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 168–179.

- Qiu, J.; Xu, H. Camera Response Prediction for Various Capture Settings Using the Spectral Sensitivity and Crosstalk Model. Appl. Opt. 2016, 55, 6989–6999.

- Arad, B.; Ben-Shahar, O. Sparse Recovery of Hyperspectral Signal from Natural RGB Images. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 19–34.

- Chakrabarti, A.; Zickler, T. Statistics of Real-World Hyperspectral Images. In Proceedings of the CVPR 2011, Providence, RI, USA, 20–25 June 2011; pp. 193–200.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).