Triangle-Mesh-Rasterization-Projection (TMRP): An Algorithm to Project a Point Cloud onto a Consistent, Dense and Accurate 2D Raster Image

Abstract

1. Introduction

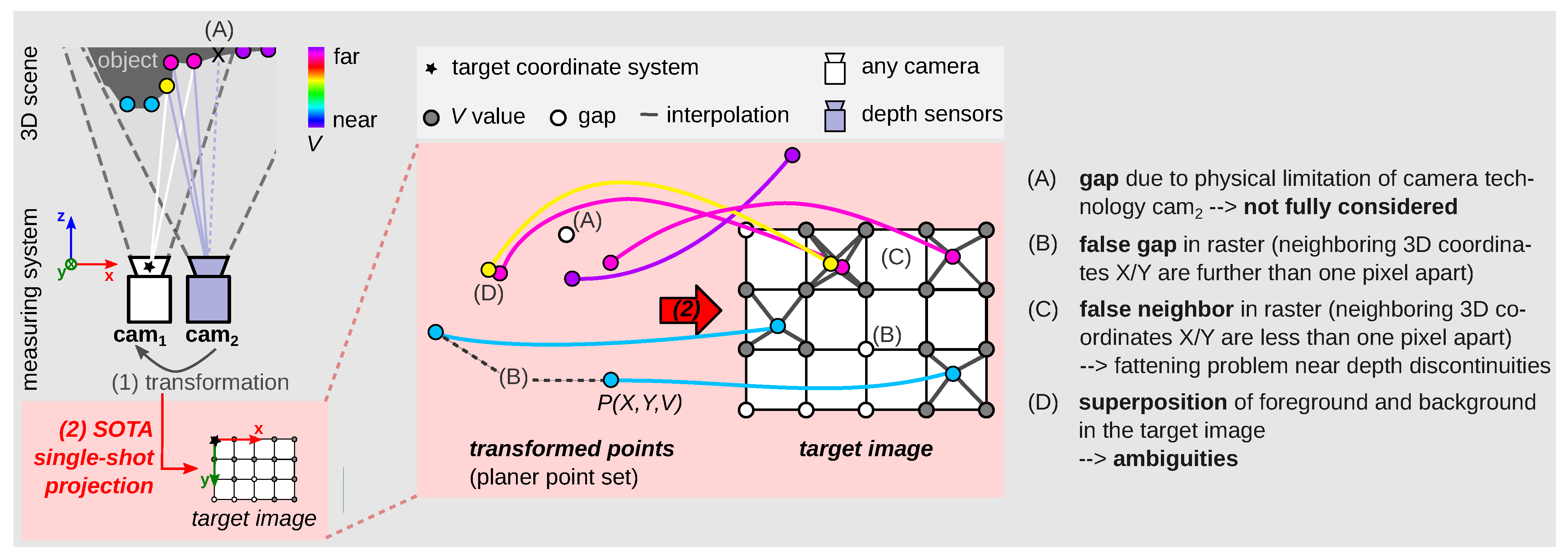

1.1. Challenges in Single-Shot Projection Methods

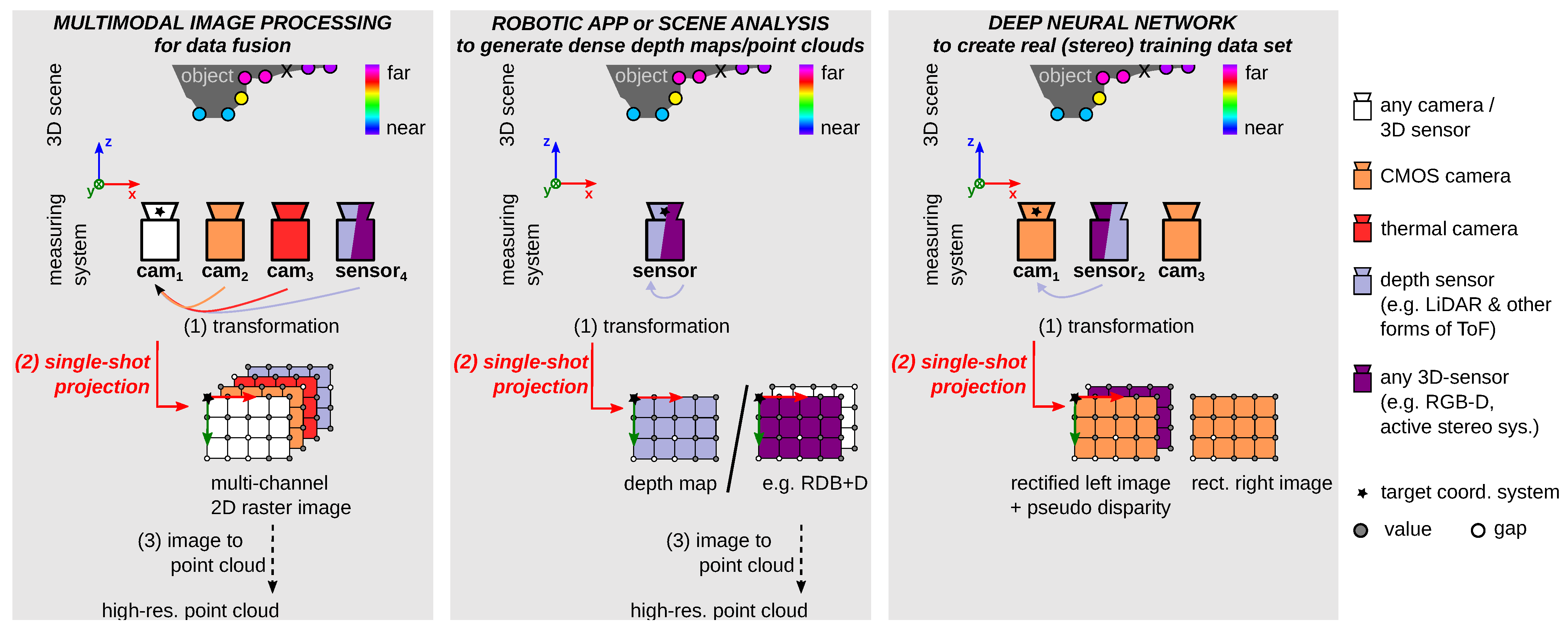

1.2. Use Cases of Single-Shot Projection Methods

1.2.1. General: Data Fusion of Multimodal Image Processing

1.2.2. Robotics Applications or Scene Analysis—The Need for Dense, Accurate Depth Maps or Point Clouds

1.2.3. Deep Neural Network—The Need for a Large Amount of Dense, Accurate Correspondence Data

1.3. The Main Contributions of Our Paper

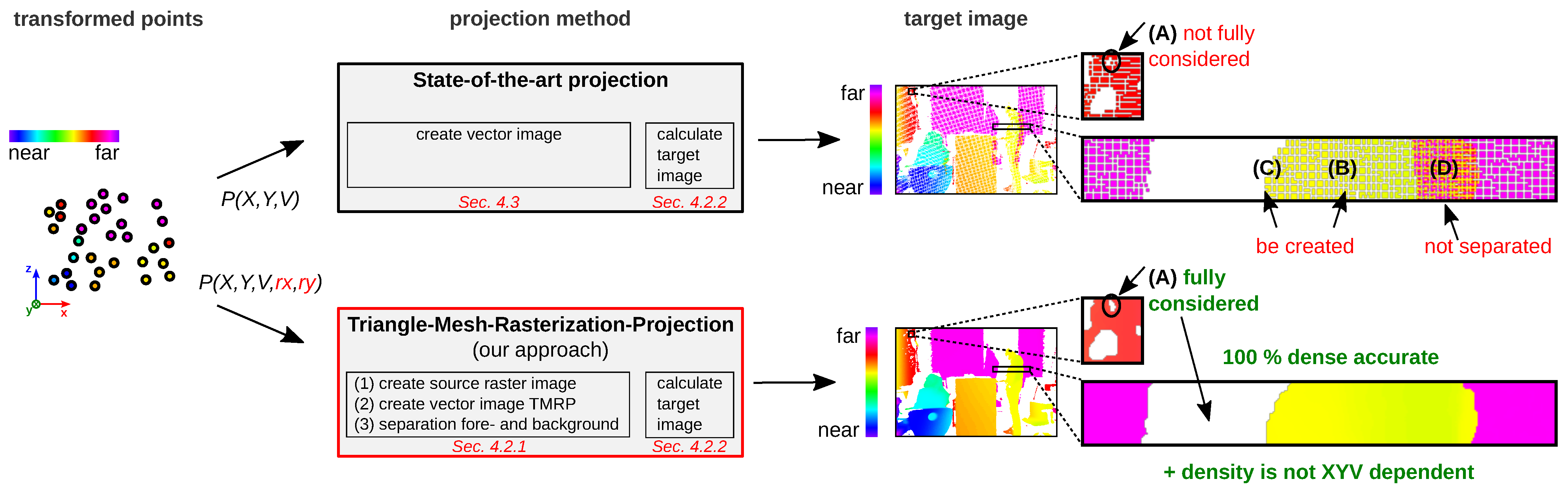

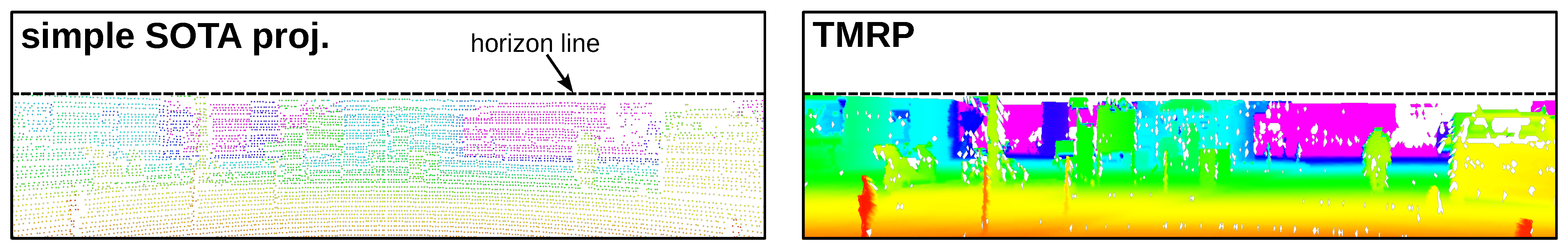

- We propose a novel algorithm, called Triangle-Mesh-Rasterization-Projection (TMRP), that projects points (single-shot) onto a camera target sensor, producing dense, accurate 2D raster images (Figure 2). Our TMRP (v1.0) software is available at http://github.com/QBV-tu-ilmenau/Triangle-Mesh-Rasterization-Projection (accessed on 1 July 2023). To make the components of TMRP (Algorithm 1) fully understandable, we provide the process and mathematics as pseudocode (see Appendix A). In addition, the Supplementary Material gives the mathematical background of some experiments (Section 6).

- Other single-shot projection methods are discussed, evaluated, and compared.

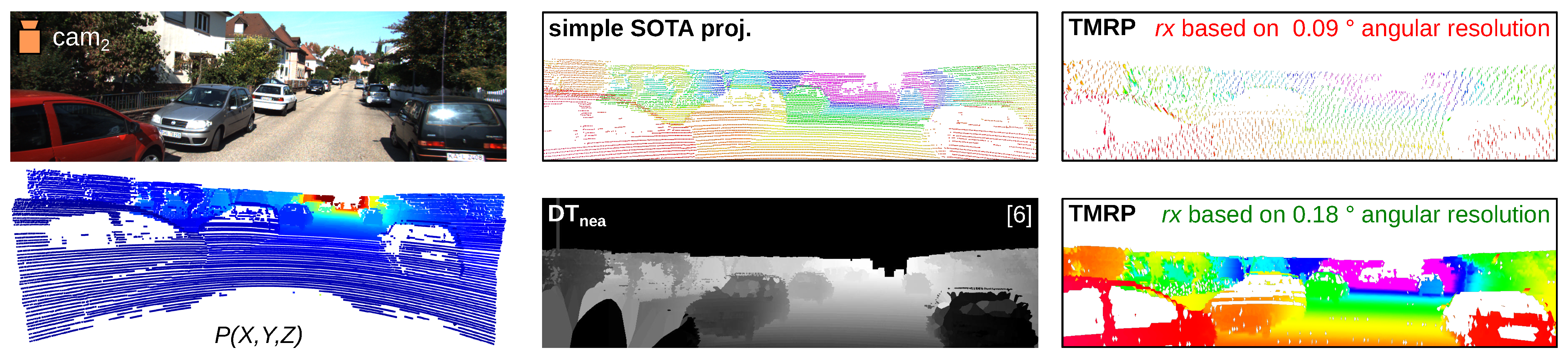

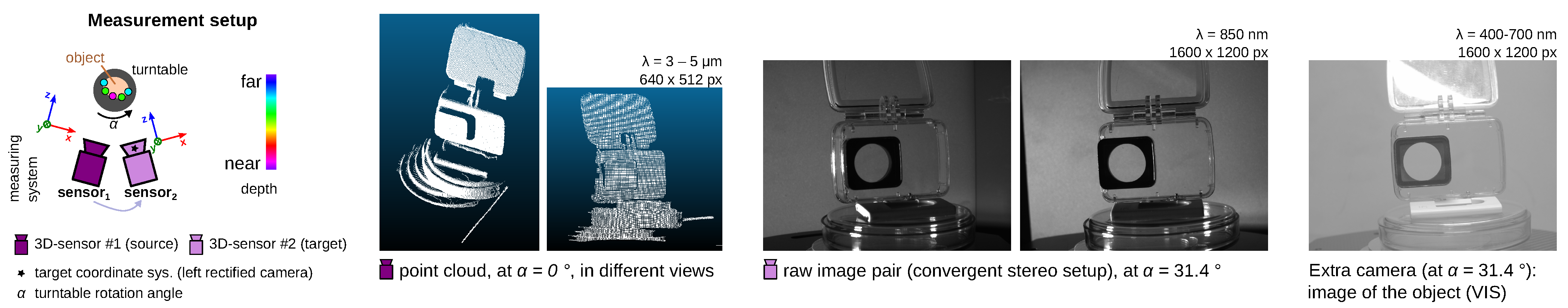

- We believe that our TMRP method will be highly useful in image analysis and enhancement (Section 1.2). To show the potential of TMRP, we also present several use cases (cf. measurement setup in Figure 3) using qualitative and quantitative experiments (Section 6). We define performance in terms of computation time, memory consumption, accuracy, and density.

2. Related Work

- (i) Simple SOTA projection (Algorithm 1).

- (ii)

- Conventional methods: The most commonly used interpolation approaches are nearest-neighbor, bilinear [7], bicubic, and Lanczos [53] schemes. Pros: Simple, with low computational overhead.

- Polygon-based method: a research area with very little scientific literature [7]. The Delaunay triangles and nearest neighbor (DTnea) method [7,8] is based on LiDAR data alone, i.e., color/texture information from the camera is not used (see Section 5). Pros: Inference from real measured data; all points are interpolated regardless of the distance between the points of a triangle. The approach uses only LiDAR data; the extra monocular camera is considered only for calibration and visualization purposes. Cons: The complexity of the algorithm is very high; the results depend on data quality; noise or outliers in the input data can be amplified or generated; excessively high upsampling rates can distort the result and make it inaccurate.

- Deep learning-based upsampling: Also known as depth completion (a distinction is made between non-guided depth upsampling and image-guided depth completion [37]). Here, highly non-linear kernels are used to provide better upsampling performance in complex scenarios [3]. Other representatives: [5,30,32,54,55]. Pros: Better object delimitation, less noise, high overall accuracy. Cons: Difficult generalization to unseen data; boundary bleeding; a training dataset with ground truth is required.

- (iii) Closed-source SDK functions from (consumer) depth sensor manufacturers.

3. Processing Pipeline When Using TMRP

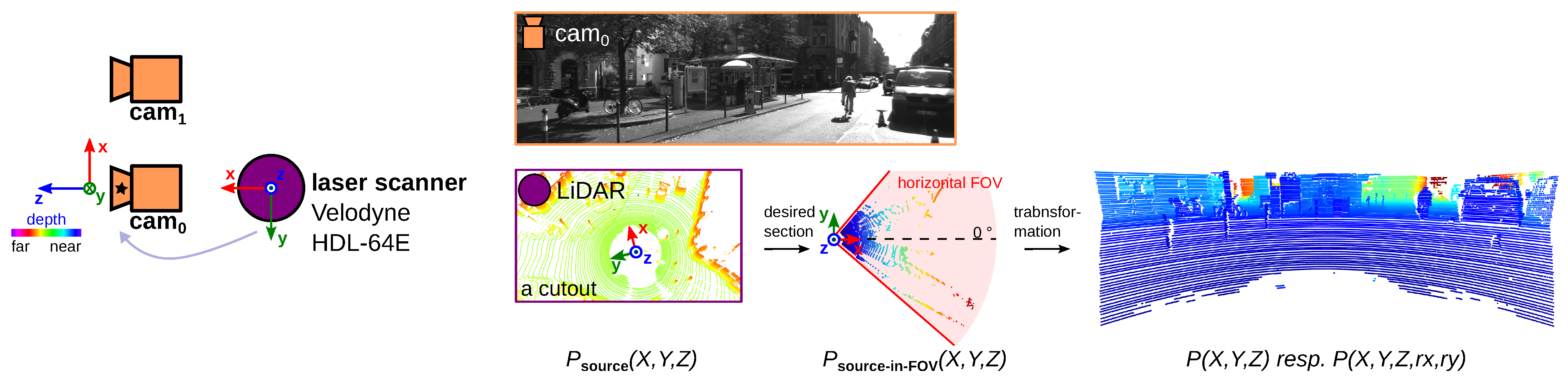

- Coordinate transformation: The source point cloud is transformed into the coordinate system of the target camera using the calibration parameters and then projected onto the image plane. (The Supplementary Material describes the mathematical context for experiments #1 and #3; another example is described for the KITTI dataset by Geiger et al. in [27,62].) In addition, the point cloud is extended by the raster information of the source camera. The result is a planar set of points. Optional extension: e.g., conversion of depth to disparity (see experiments #3 and #4).

- TMRP: The points can now be projected onto a dense, accurate 2D raster image, called the target image, using TMRP (Section 4.2).

- Image to point cloud (optional): For applications based on 3D point clouds (Section 1.2), the dense, accurate (high-resolution) image must be converted to a point cloud (Figure 4).
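The coordinate transformation, the optional depth-to-disparity conversion, and the optional conversion back to a point cloud can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes an ideal pinhole camera without lens distortion and a rectified stereo setup for the disparity formula. The function names and the parameters `rotation`, `translation`, `fx`, `fy`, `cx`, `cy`, `focal_px`, and `baseline` are hypothetical and stand in for the calibration parameters mentioned above.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def transform_point(p: Vec3, rotation: Sequence[Sequence[float]], translation: Vec3) -> Vec3:
    """Rigidly move a 3D point from the source frame into the target camera frame."""
    x, y, z = (
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


def project_to_image(p_cam: Vec3, fx: float, fy: float, cx: float, cy: float) -> Optional[Vec3]:
    """Pinhole projection (assumption: no lens distortion).

    Returns (u, v, depth) in sub-pixel image coordinates, or None for points
    that lie behind the camera.
    """
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy, z)


def depth_to_disparity(depth: float, focal_px: float, baseline: float) -> float:
    """Optional extension: disparity of a rectified stereo pair, d = f * b / z."""
    return focal_px * baseline / depth


def image_to_point(u: float, v: float, depth: float,
                   fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Optional last step: back-project one pixel with known depth to a 3D point."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    p_cam = transform_point((0.4, -0.25, 2.0), identity, (0.1, 0.0, 0.0))
    print(project_to_image(p_cam, fx=100.0, fy=100.0, cx=50.0, cy=40.0))
```

The projected coordinates are deliberately kept as floating-point values: a planar set of sub-pixel points is what a subsequent rasterization step needs, whereas rounding at this stage would already discard position information.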

4. Explanation of TMRP and Simple SOTA Projection Algorithms

4.1. Overview

| Algorithm 1 User definitions and procedure for the Triangle-Mesh-Rasterization-Projection (TMRP) and state-of-the-art (SOTA) projection. For more details, see Appendix A. |


4.2. Triangle-Mesh-Rasterization-Projection

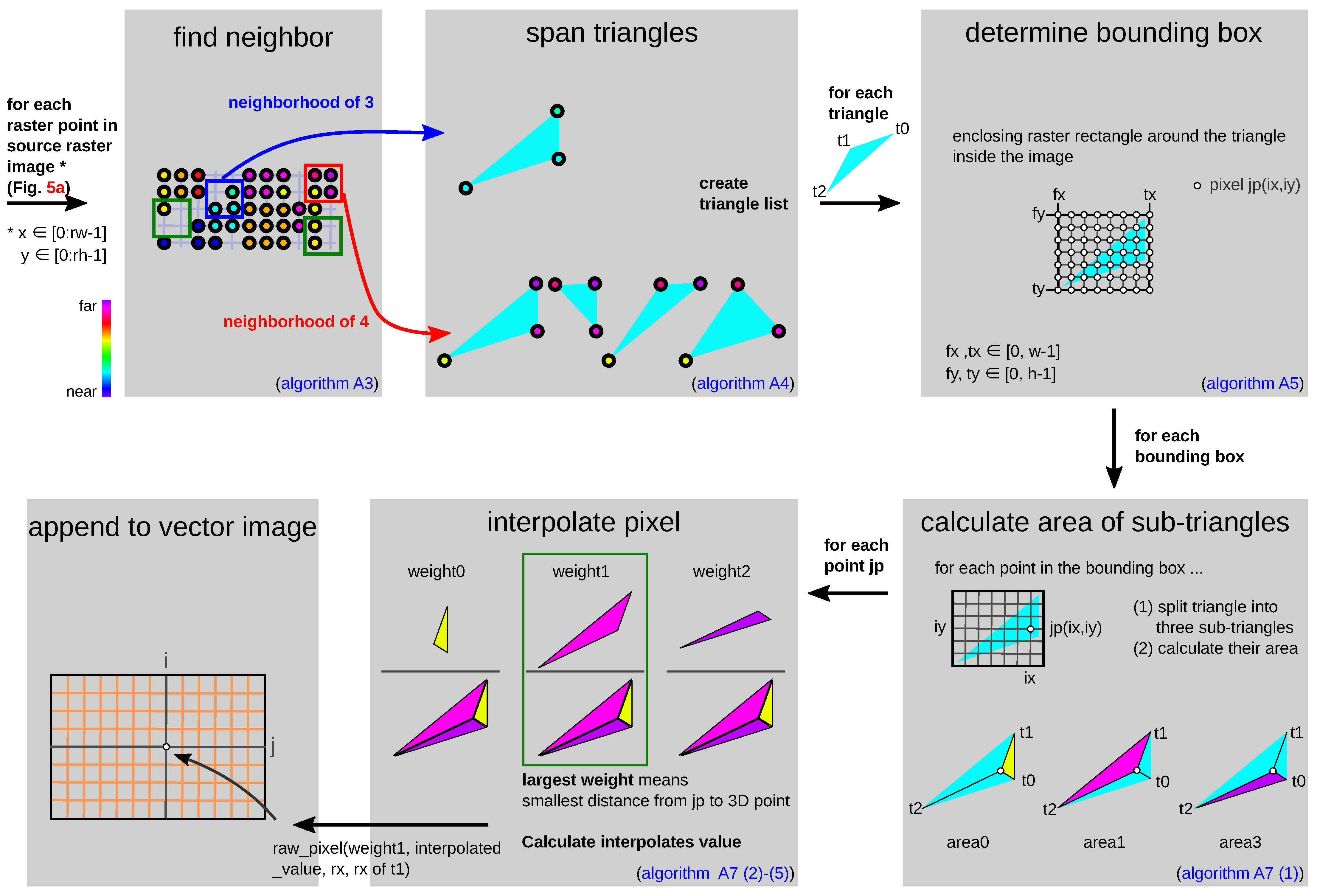

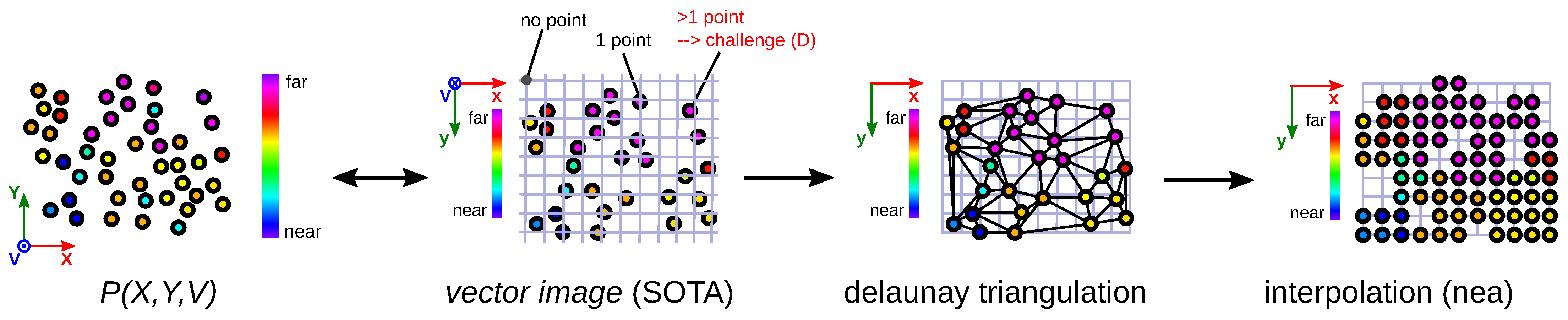

4.2.1. Part (I): Create Vector Image

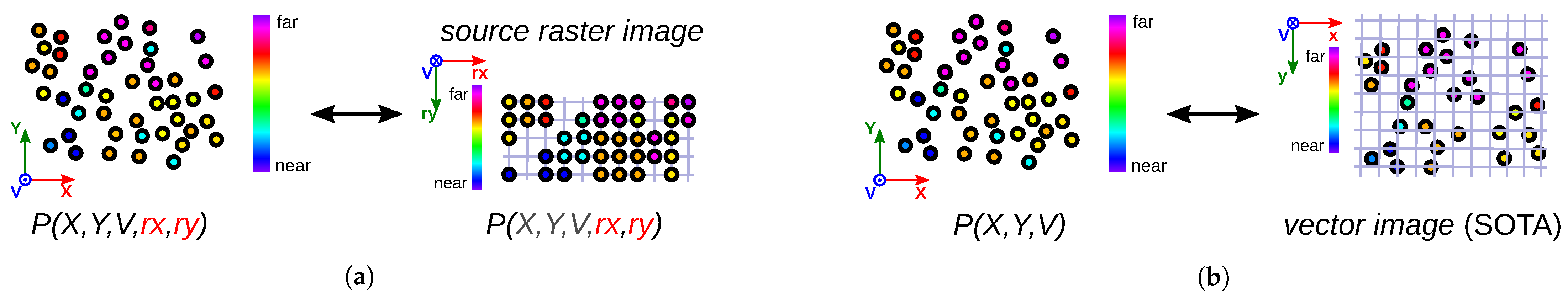

- (1) Create source raster image

- (2) Create vector image

- (3) Separation of foreground and background

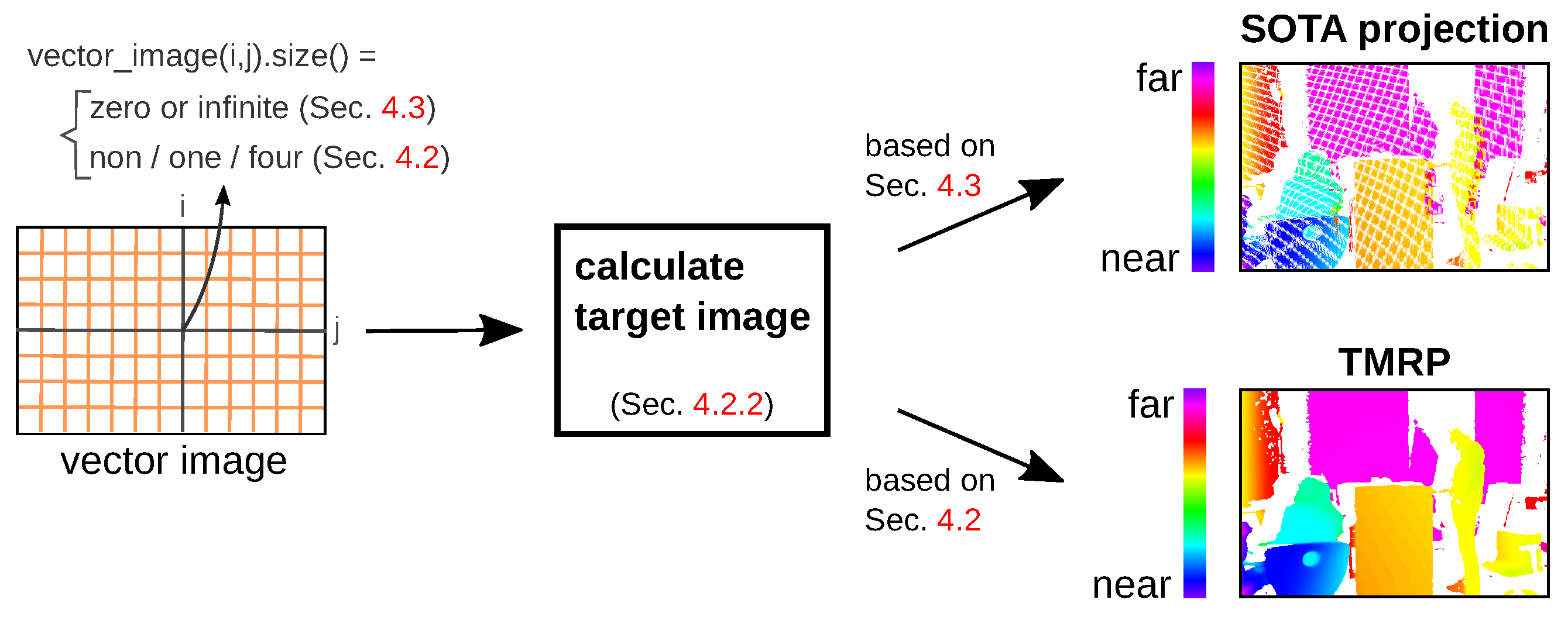

4.2.2. Part (II): Calculate Target Image

4.3. SOTA Projection

5. Comparison with Polygon-Based Method

6. Qualitative and Quantitative Experiments

6.1. Density and Accuracy

6.1.1. Experiment #1 —Qualitative Comparison of Simple SOTA Proj., DTnea, and TMRP

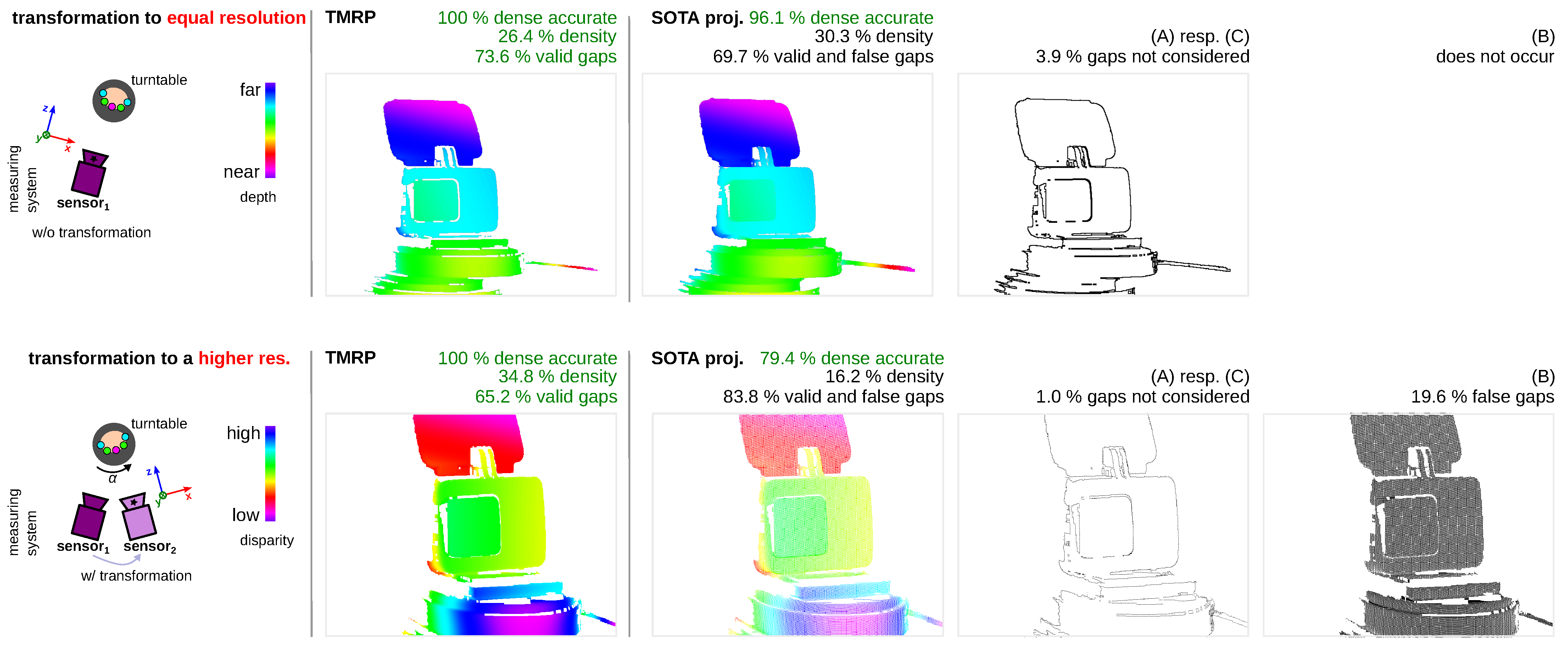

6.1.2. Experiment #2—Focus on Challenge (A) Resp. (C) Using a Test Specimen

6.1.3. Experiment #3—Focus on Challenge (B) Using a Test Specimen

6.1.4. Experiment #4—Focus on Influence of Points with Transformation into Equal/Unequal Resolution

6.2. Computation Time, Memory Usage and Complexity Class

7. Conclusions, Limitations, and Future Work

7.1. Conclusions

7.2. Limitations for Online Applications

7.3. Future Work

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| a.o. | among others |
| CNN | Convolutional neural network |
| coord. | coordinate |
| 2D/3D | two-/three-dimensional |
| DT | Delaunay triangles |
| FOV | Field of view |
| ICP | Iterative closest point |
| JBU | Joint bilateral upsampling |
| LiDAR | Light Detection and Ranging |
| nea | nearest neighbor |
| TMRP | Triangle-Mesh-Rasterization-Projection |
| PMMA | polymethyl methacrylate |
| proj. | projection |
| res. | resolution |
| resp. | respectively |
| RGB-D | Image with four channels: red, green, blue, depth |
| ROI | Region of interest |
| SDF | Signed distance function |
| SDK | Software development kit |
| SLAM | Simultaneous localization and mapping |
| SOTA | state-of-the-art |
| suppl. | supplementary |
| ToF | Time of flight |

Appendix A. Pseudocode of TMRP Algorithm

Appendix A.1. Overview

Appendix A.2. Definition of Data Types

| Listing A1. Definition of data types |

Appendix A.3. Pseudocode

| Procedure/Function | Algorithm No. | Notation |
|---|---|---|
| sourceRasterImage() | Algorithm A1 | |
| createVectorImageTMRP() | Algorithm A2 | |
| createNeighborPointList() | Algorithm A3 | |
| toTriangleList() | Algorithm A4 | |
| triangleBoundingBox() | Algorithm A5 | |
| clamp() | Algorithm A6 | |
| interpolate-pixel() | Algorithm A7 | |
| distance() | Algorithm A8 | |
| separationForegroundAndBackground() | Algorithm A9 | |
| createTargetImage() | Algorithm A10 | |
| createVectorImageSOTA() | Algorithm A11 | |
| createTargetImage() | Algorithm A10 | |

| Algorithm A1 Create source raster image. |


| Algorithm A2 Create vector image TMRP. |


| Algorithm A3 Create neighbor point list. A function of Algorithm A2, (1). |


| Algorithm A4 Add to triangle list. A function of Algorithm A2, (1). |


| Algorithm A5 Create triangle bounding box. A function of Algorithm A2, (2). |


| Algorithm A6 Clamp function. A function of Algorithm A5. |

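Algorithms A5 and A6 are named only by their captions here, so the following is a minimal sketch of what a clamp function and a clamped triangle bounding box typically look like, not a transcription of the original pseudocode. It assumes pixel indices run from 0 to `width - 1` and 0 to `height - 1`; the Python names `clamp` and `triangle_bounding_box` and the tuple layout are illustrative assumptions.

```python
import math
from typing import Sequence, Tuple

Point2D = Tuple[float, float]


def clamp(value: int, low: int, high: int) -> int:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(value, high))


def triangle_bounding_box(triangle: Sequence[Point2D], width: int, height: int) -> Tuple[int, int, int, int]:
    """Integer pixel bounding box (x_min, y_min, x_max, y_max) of a triangle.

    The box is rounded outwards and then clamped to the image, so a later loop
    over candidate pixels never indexes outside the raster (assumed layout:
    columns 0..width-1, rows 0..height-1).
    """
    xs = [p[0] for p in triangle]
    ys = [p[1] for p in triangle]
    x_min = clamp(math.floor(min(xs)), 0, width - 1)
    x_max = clamp(math.ceil(max(xs)), 0, width - 1)
    y_min = clamp(math.floor(min(ys)), 0, height - 1)
    y_max = clamp(math.ceil(max(ys)), 0, height - 1)
    return (x_min, y_min, x_max, y_max)


if __name__ == "__main__":
    tri = [(1.2, 3.7), (4.9, -2.0), (10.5, 6.1)]
    print(triangle_bounding_box(tri, width=8, height=8))
```

Restricting the per-triangle work to this box keeps the rasterization cost proportional to the triangle's footprint rather than to the full image size.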

| Algorithm A7 Interpolate pixel. A function of Algorithm A2, (3). |


| Algorithm A8 Calculation of the distance between two points and calculation of a triangular area according to Heron’s formula. A function of Algorithm A7. |
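The captions of Algorithms A7 and A8 state that pixel interpolation relies on point distances and on triangle areas computed with Heron's formula. The sketch below shows one way these pieces can fit together, under the assumption (not confirmed by the text available here) that the sub-triangle areas serve as barycentric weights. The names `heron_area` and `interpolate_pixel` and the tolerance values are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Point2D = Tuple[float, float]


def distance(a: Point2D, b: Point2D) -> float:
    """Euclidean distance between two 2D points."""
    return math.hypot(b[0] - a[0], b[1] - a[1])


def heron_area(a: Point2D, b: Point2D, c: Point2D) -> float:
    """Triangle area from the three side lengths (Heron's formula)."""
    ab, bc, ca = distance(a, b), distance(b, c), distance(c, a)
    s = 0.5 * (ab + bc + ca)  # semi-perimeter
    # Rounding can push the product slightly below zero for needle-like
    # triangles, so it is floored at zero before taking the square root.
    return math.sqrt(max(s * (s - ab) * (s - bc) * (s - ca), 0.0))


def interpolate_pixel(pixel: Point2D, triangle: Sequence[Point2D],
                      values: Sequence[float], rel_tol: float = 1e-6) -> Optional[float]:
    """Interpolate a value at `pixel` from the three triangle vertices.

    Assumption: the weights are the areas of the sub-triangles opposite each
    vertex. Returns None if the triangle is degenerate or if the pixel lies
    outside it (the sub-areas then add up to more than the total area).
    """
    a, b, c = triangle
    total = heron_area(a, b, c)
    if total <= 1e-12:
        return None
    w_a = heron_area(pixel, b, c)
    w_b = heron_area(a, pixel, c)
    w_c = heron_area(a, b, pixel)
    weight_sum = w_a + w_b + w_c
    if weight_sum > total * (1.0 + rel_tol):
        return None
    return (w_a * values[0] + w_b * values[1] + w_c * values[2]) / weight_sum


if __name__ == "__main__":
    tri = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
    print(interpolate_pixel((1.0, 1.0), tri, [10.0, 20.0, 30.0]))
```

Because the area test doubles as an inside/outside check, pixels inside the bounding box but outside the triangle are simply skipped and no separate edge-function test is needed in this sketch.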

| Algorithm A9 Clean separation of foreground and background. |


| Algorithm A10 Calculate target image. |


| Algorithm A11 Create vector image state-of-the-art (SOTA) projection. |

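For comparison, a simple projection can be sketched as follows. This is an assumption about what "simple SOTA projection" means, namely assigning each projected point to its nearest target pixel without interpolation and without resolving ambiguities; it is not taken from Algorithm A11. The helper `density` is likewise a hypothetical way to express the share of filled pixels.

```python
import math
from typing import List, Optional, Sequence, Tuple

Raster = List[List[Optional[float]]]


def project_nearest_pixel(points: Sequence[Tuple[float, float, float]],
                          width: int, height: int) -> Raster:
    """Write each (u, v, value) point into its nearest pixel.

    Assumptions: later points overwrite earlier ones (no ambiguity
    resolution), and pixels that receive no point stay empty (None).
    """
    image: Raster = [[None] * width for _ in range(height)]
    for u, v, value in points:
        col = math.floor(u + 0.5)  # round half up, unlike Python's round()
        row = math.floor(v + 0.5)
        if 0 <= col < width and 0 <= row < height:
            image[row][col] = value
    return image


def density(image: Raster) -> float:
    """Fraction of pixels that carry a value."""
    total = sum(len(row) for row in image)
    valid = sum(1 for row in image for v in row if v is not None)
    return valid / total if total else 0.0


if __name__ == "__main__":
    # A 2 x 2 source grid projected into a 4 x 4 target raster (upsampling by 2).
    pts = [(0.0, 0.0, 1.0), (2.0, 0.0, 1.1), (0.0, 2.0, 1.2), (2.0, 2.0, 1.3)]
    print(density(project_nearest_pixel(pts, width=4, height=4)))
```

When the target resolution exceeds the source resolution, most target pixels receive no point under this scheme, which corresponds to the false gaps listed for the SOTA projection in the properties table.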

References

1. Wu, Z.; Su, S.; Chen, Q.; Fan, R. Transparent Objects: A Corner Case in Stereo Matching. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA 2023), London, UK, 29 May–2 June 2023.
2. Jiang, J.; Cao, G.; Deng, J.; Do, T.T.; Luo, S. Robotic Perception of Transparent Objects: A Review. arXiv 2023, arXiv:2304.00157.
3. You, J.; Kim, Y.K. Up-Sampling Method for Low-Resolution LiDAR Point Cloud to Enhance 3D Object Detection in an Autonomous Driving Environment. Sensors 2023, 23, 322.
4. Li, Y.; Xue, T.; Sun, L.; Liu, J. Joint Example-Based Depth Map Super-Resolution. In Proceedings of the 2012 IEEE International Conference on Multimedia and Expo, Melbourne, VIC, Australia, 9–13 July 2012; pp. 152–157.
5. Yang, Q.; Yang, R.; Davis, J.; Nister, D. Spatial-Depth Super Resolution for Range Images. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.
6. Kopf, J.; Cohen, M.F.; Lischinski, D.; Uyttendaele, M. Joint Bilateral Upsampling. ACM Trans. Graph. 2007, 26, 96.
7. Premebida, C.; Garrote, L.; Asvadi, A.; Ribeiro, A.P.; Nunes, U. High-resolution LIDAR-based depth mapping using bilateral filter. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 2469–2474.
8. Asvadi, A.; Garrote, L.; Premebida, C.; Peixoto, P.; Nunes, J.U. Multimodal vehicle detection: Fusing 3D-LIDAR and color camera data; Multimodal Fusion for Pattern Recognition. Pattern Recognit. Lett. 2018, 115, 20–29.
9. Kolar, P.; Benavidez, P.; Jamshidi, M. Survey of Datafusion Techniques for Laser and Vision Based Sensor Integration for Autonomous Navigation. Sensors 2020, 20, 2180.
10. Svoboda, L.; Sperrhake, J.; Nisser, M.; Zhang, C.; Notni, G.; Proquitté, H. Contactless heart rate measurement in newborn infants using a multimodal 3D camera system. Front. Pediatr. 2022, 10, 897961.
11. Zhang, C.; Gebhart, I.; Kühmstedt, P.; Rosenberger, M.; Notni, G. Enhanced Contactless Vital Sign Estimation from Real-Time Multimodal 3D Image Data. J. Imaging 2020, 6, 123.
12. Gerlitz, E.; Greifenstein, M.; Kaiser, J.P.; Mayer, D.; Lanza, G.; Fleischer, J. Systematic Identification of Hazardous States and Approach for Condition Monitoring in the Context of Li-ion Battery Disassembly. Procedia CIRP 2022, 107, 308–313.
13. Zhang, Y.; Fütterer, R.; Notni, G. Interactive robot teaching based on finger trajectory using multimodal RGB-D-T-data. Front. Robot. AI 2023, 10, 1120357.
14. Zhang, Y.; Müller, S.; Stephan, B.; Gross, H.M.; Notni, G. Point Cloud Hand–Object Segmentation Using Multimodal Imaging with Thermal and Color Data for Safe Robotic Object Handover. Sensors 2021, 21, 5676.
15. Seichter, D.; Köhler, M.; Lewandowski, B.; Wengefeld, T.; Gross, H.M. Efficient RGB-D Semantic Segmentation for Indoor Scene Analysis. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13525–13531.
16. Zheng, Z.; Xie, D.; Chen, C.; Zhu, Z. Multi-resolution Cascaded Network with Depth-similar Residual Module for Real-time Semantic Segmentation on RGB-D Images. In Proceedings of the 2020 IEEE International Conference on Networking, Sensing and Control (ICNSC), Nanjing, China, 30 October–2 November 2020; pp. 1–6.
17. Zhao, G.; Xiao, X.; Yuan, J.; Ng, G.W. Fusion of 3D-LIDAR and camera data for scene parsing. J. Vis. Commun. Image Represent. 2014, 25, 165–183.
18. Akhtar, M.R.; Qin, H.; Chen, G. Velodyne LiDAR and monocular camera data fusion for depth map and 3D reconstruction. Int. Soc. Opt. Photonics 2019, 11179, 111790E.
19. Chen, L.; He, Y.; Chen, J.; Li, Q.; Zou, Q. Transforming a 3-D LiDAR Point Cloud Into a 2-D Dense Depth Map Through a Parameter Self-Adaptive Framework. IEEE Trans. Intell. Transp. Syst. 2017, 18, 165–176.
20. Lahat, D.; Adalı, T.; Jutten, C. Challenges in multimodal data fusion. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014; pp. 101–105.
21. Mkhitaryan, A.; Burschka, D. RGB-D sensor data correction and enhancement by introduction of an additional RGB view. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1077–1083.
22. Song, Y.E.; Niitsuma, M.; Kubota, T.; Hashimoto, H.; Son, H.I. Mobile multimodal human-robot interface for virtual collaboration. In Proceedings of the 2012 IEEE 3rd International Conference on Cognitive Infocommunications (CogInfoCom), Kosice, Slovakia, 2–5 December 2012; pp. 627–631.
23. Cherubini, A.; Passama, R.; Meline, A.; Crosnier, A.; Fraisse, P. Multimodal control for human-robot cooperation. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2202–2207.
24. Siritanawan, P.; Diluka Prasanjith, M.; Wang, D. 3D feature points detection on sparse and non-uniform pointcloud for SLAM. In Proceedings of the 2017 18th International Conference on Advanced Robotics (ICAR), Hong Kong, China, 10–12 July 2017; pp. 112–117.
25. Rashed, H.; Ramzy, M.; Vaquero, V.; El Sallab, A.; Sistu, G.; Yogamani, S. FuseMODNet: Real-Time Camera and LiDAR Based Moving Object Detection for Robust Low-Light Autonomous Driving. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.
26. Pasinetti, S.; Hassan, M.M.; Eberhardt, J.; Lancini, M.; Docchio, F.; Sansoni, G. Performance Analysis of the PMD Camboard Picoflexx Time-of-Flight Camera for Markerless Motion Capture Applications. IEEE Trans. Instrum. Meas. 2019, 68, 4456–4471.
27. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.
28. Watson, J.; Aodha, O.M.; Turmukhambetov, D.; Brostow, G.J.; Firman, M. Learning Stereo from Single Images. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 722–740.
29. Kendall, A.; Martirosyan, H.; Dasgupta, S.; Henry, P. End-to-End Learning of Geometry and Context for Deep Stereo Regression. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 66–75.
30. Chang, J.; Chen, Y. Pyramid Stereo Matching Network. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 18–23 June 2018; pp. 5410–5418.
31. Zhang, F.; Prisacariu, V.; Yang, R.; Torr, P.H. GA-Net: Guided Aggregation Net for End-To-End Stereo Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.
32. Xu, H.; Zhang, J. AANet: Adaptive Aggregation Network for Efficient Stereo Matching. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; pp. 1956–1965.
33. Poggi, M.; Tosi, F.; Batsos, K.; Mordohai, P.; Mattoccia, S. On the Synergies between Machine Learning and Binocular Stereo for Depth Estimation from Images: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5314–5334.
34. He, J.; Zhou, E.; Sun, L.; Lei, F.; Liu, C.; Sun, W. Semi-synthesis: A fast way to produce effective datasets for stereo matching. arXiv 2021, arXiv:2101.10811.
35. Rao, Z.; Dai, Y.; Shen, Z.; He, R. Rethinking Training Strategy in Stereo Matching. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–14.
36. Zama Ramirez, P.; Tosi, F.; Poggi, M.; Salti, S.; Di Stefano, L.; Mattoccia, S. Open Challenges in Deep Stereo: The Booster Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Shenzhen, China, 4–7 November 2022.
37. Uhrig, J.; Schneider, N.; Schneider, L.; Franke, U.; Brox, T.; Geiger, A. Sparsity Invariant CNNs. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 11–20.
38. Menze, M.; Geiger, A. Object scene flow for autonomous vehicles. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3061–3070.
39. Junger, C.; Notni, G. Optimisation of a stereo image analysis by densify the disparity map based on a deep learning stereo matching framework. In Proceedings of the Dimensional Optical Metrology and Inspection for Practical Applications XI, International Society for Optics and Photonics, Orlando, FL, USA, 3 April–13 June 2022; Volume 12098, pp. 91–106.
40. Ramirez, P.Z.; Costanzino, A.; Tosi, F.; Poggi, M.; Salti, S.; Stefano, L.D.; Mattoccia, S. Booster: A Benchmark for Depth from Images of Specular and Transparent Surfaces. arXiv 2023, arXiv:2301.08245.
41. Erich, F.; Leme, B.; Ando, N.; Hanai, R.; Domae, Y. Learning Depth Completion of Transparent Objects using Augmented Unpaired Data. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA 2023), London, UK, 29 May–2 June 2023.
42. Landmann, M.; Heist, S.; Dietrich, P.; Speck, H.; Kühmstedt, P.; Tünnermann, A.; Notni, G. 3D shape measurement of objects with uncooperative surface by projection of aperiodic thermal patterns in simulation and experiment. Opt. Eng. 2020, 59, 094107.
43. Mayer, N.; Ilg, E.; Häusser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, Nevada, USA, 27–30 June 2016.
44. Ros, G.; Sellart, L.; Materzynska, J.; Vazquez, D.; Lopez, A.M. The SYNTHIA Dataset: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3234–3243.
45. Tosi, F.; Liao, Y.; Schmitt, C.; Geiger, A. SMD-Nets: Stereo Mixture Density Networks. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8938–8948.
46. Friedman, E.; Lehr, A.; Gruzdev, A.; Loginov, V.; Kogan, M.; Rubin, M.; Zvitia, O. Knowing the Distance: Understanding the Gap Between Synthetic and Real Data For Face Parsing. arXiv 2023, arXiv:2303.15219.
47. Whelan, T.; Kaess, M.; Leonard, J.; McDonald, J. Deformation-based Loop Closure for Large Scale Dense RGB-D SLAM. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013.
48. Newcombe, R.A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Kohli, P.; Shotton, J.; Hodges, S.; Fitzgibbon, A.W. KinectFusion: Real-time dense surface mapping and tracking. In Proceedings of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 127–136.
49. Slavcheva, M. Signed Distance Fields for Rigid and Deformable 3D Reconstruction. Ph.D. Thesis, Technical University of Munich, Munich, Germany, 2018.
50. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.
51. Li, L.; Wang, R.; Zhang, X. A Tutorial Review on Point Cloud Registrations: Principle, Classification, Comparison, and Technology Challenges. Math. Probl. Eng. 2021, 2021, 9953910.
52. Matsuo, K.; Aoki, Y. Depth image enhancement using local tangent plane approximations. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 7–12 June 2015; pp. 3574–3583.
53. Fadnavis, S. Image Interpolation Techniques in Digital Image Processing: An Overview. Int. J. Eng. Res. Appl. 2014, 4, 70–73.
54. Ferrera, M.; Boulch, A.; Moras, J. Fast Stereo Disparity Maps Refinement By Fusion of Data-Based And Model-Based Estimations. In Proceedings of the International Conference on 3D Vision (3DV), Quebec, QC, Canada, 16–19 September 2019.
55. Zhang, Y.; Funkhouser, T. Deep Depth Completion of a Single RGB-D Image. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 175–185.
56. Wei, M.; Yan, Q.; Luo, F.; Song, C.; Xiao, C. Joint Bilateral Propagation Upsampling for Unstructured Multi-View Stereo. Vis. Comput. 2019, 35, 797–809.
57. Chen, J.; Adams, A.; Wadhwa, N.; Hasinoff, S.W. Bilateral Guided Upsampling. ACM Trans. Graph. 2016, 35, 203.
58. Françani, A.O.; Maximo, M.R.O.A. Dense Prediction Transformer for Scale Estimation in Monocular Visual Odometry. In Proceedings of the 2022 Latin American Robotics Symposium (LARS), 2022 Brazilian Symposium on Robotics (SBR), and 2022 Workshop on Robotics in Education (WRE), Sao Bernardo do Campo, Brazil, 18–21 October 2022.
59. Fürsattel, P.; Placht, S.; Balda, M.; Schaller, C.; Hofmann, H.; Maier, A.; Riess, C. A Comparative Error Analysis of Current Time-of-Flight Sensors. IEEE Trans. Comput. Imaging 2016, 2, 27–41.
60. Pasinetti, S.; Nuzzi, C.; Luchetti, A.; Zanetti, M.; Lancini, M.; De Cecco, M. Experimental Procedure for the Metrological Characterization of Time-of-Flight Cameras for Human Body 3D Measurements. Sensors 2023, 23, 538.
61. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.
62. Li, B.; Zhang, T.; Xia, T. Vehicle Detection from 3D Lidar Using Fully Convolutional Network. arXiv 2016, arXiv:1608.07916.
63. Speck, H.; Munkelt, C.; Heist, S.; Kühmstedt, P.; Notni, G. Efficient freeform-based pattern projection system for 3D measurements. Opt. Express 2022, 30, 39534–39543.
64. Junger, C.; Notni, G. Investigations of closed source registration methods of depth technologies for human-robot collaboration. In Proceedings of the 60th IWK—Ilmenau Scientific Colloquium, Ilmenau, Germany, 4–8 September 2023.

| Algorithm (Sequential) | Computation Time, Unit (i9) | Computation Time, Unit (i7) | Max. RSS, Unit (i9)/(i7) | Density | Dense, Accurate ("visual") |
|---|---|---|---|---|---|
| SOTA proj. | 0.183 s | 0.254 s | 58.2 MiB | % | % |
| TMRP | s | s | MiB | 65.7 % | 100 % |

| Properties | SOTA Proj. | JBU [6] | Blaze SDK | DTnea [7] | TMRP (Ours) |
|---|---|---|---|---|---|
| Creates false neighbors in raster; Figure 2A,C | low | often | less | often | never |
| Creates false gaps in raster; Figure 2B | often | low | low | never | never |
| Resolution of ambiguities; Figure 2D | no | no | yes | yes | yes |
| Density is independent of | no | no | closed source | yes | yes |
| Required input | w/o | w/o | closed source | w/o | w/ |
| Computing effort | low | middle | middle–high | high | high |
| For almost any sensor & modality | yes | yes | no | yes | yes |
| Code available (open source & free) | yes | yes | no | yes | yes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Junger, C.; Buch, B.; Notni, G. Triangle-Mesh-Rasterization-Projection (TMRP): An Algorithm to Project a Point Cloud onto a Consistent, Dense and Accurate 2D Raster Image. Sensors 2023, 23, 7030. https://doi.org/10.3390/s23167030
