Text Sentiment Classification Based on BERT Embedding and Sliced Multi-Head Self-Attention Bi-GRU

Abstract

1. Introduction

Main Contributions

- We propose, for the first time, the concept of a Bidirectional Slice Gated Recurrent Unit (Bi-SGRU). Slicing the input sequence speeds up model training, and the resulting model strikes a balance between performance and efficiency.

- We are also the first to combine the multi-head self-attention (MSA) mechanism with the sliced bidirectional Gated Recurrent Unit. The multiple attention heads let the model learn hidden information in different representation subspaces.

- We use the pre-trained BERT model to produce the input word embeddings. The sliced Bi-GRU models each slice of the input in its own subsequence, and the MSA helps the model capture semantic relevance between words. As a result, the combination of BERT, the sliced Bi-GRU, and MSA achieves the highest accuracy among the compared models on both the Yelp 2015 and Amazon datasets.
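
The efficiency claim in the first contribution rests on shortening the chain of dependent recurrent steps. As a rough illustration, assuming the slicing scheme of Yu and Liu [9] (the input is split into n equal parts, k times over, so the bottom layer handles n^k minimal subsequences in parallel and each of the k upper layers steps over n summaries), the longest sequential chain can be counted as below. The function name and the padded length of 512 tokens are hypothetical, not values reported in this paper.

```python
def sequential_steps(seq_len: int, n: int, k: int) -> int:
    """Longest chain of dependent recurrent steps in a sliced RNN.

    Assumes SRNN-style slicing (Yu and Liu): the sequence is split into n parts,
    k times, so the bottom layer runs over n**k minimal subsequences in parallel
    and each of the k upper layers steps over the n summaries below it.
    """
    num_minimal = n ** k
    if seq_len % num_minimal != 0:
        raise ValueError("seq_len must be divisible by n**k")
    bottom_steps = seq_len // num_minimal  # one minimal subsequence, read sequentially
    return bottom_steps + k * n  # plus n steps in each of the k upper layers


if __name__ == "__main__":
    seq_len = 512  # hypothetical padded length, not taken from the paper
    print("unsliced GRU:", seq_len, "sequential steps")
    print("sliced GRU (8, 2):", sequential_steps(seq_len, 8, 2), "sequential steps")
```

For a 512-token input this gives 24 dependent steps for the (8, 2) configuration instead of 512 for an unsliced GRU. The count is a proxy rather than a timing: actual wall-clock savings also depend on batching the minimal subsequences in parallel on the GPU.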

2. Related Works

3. The Proposed Approach

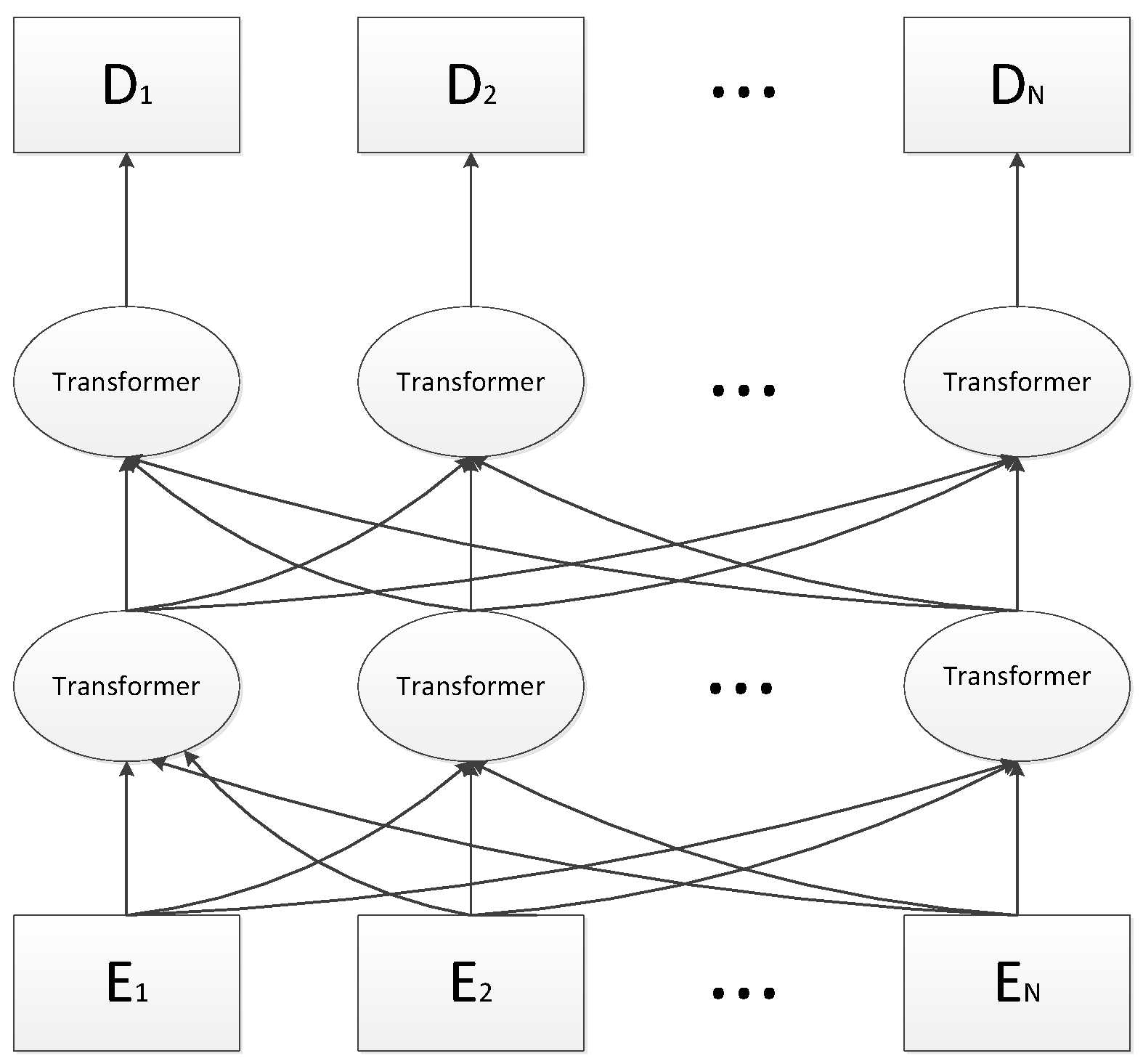

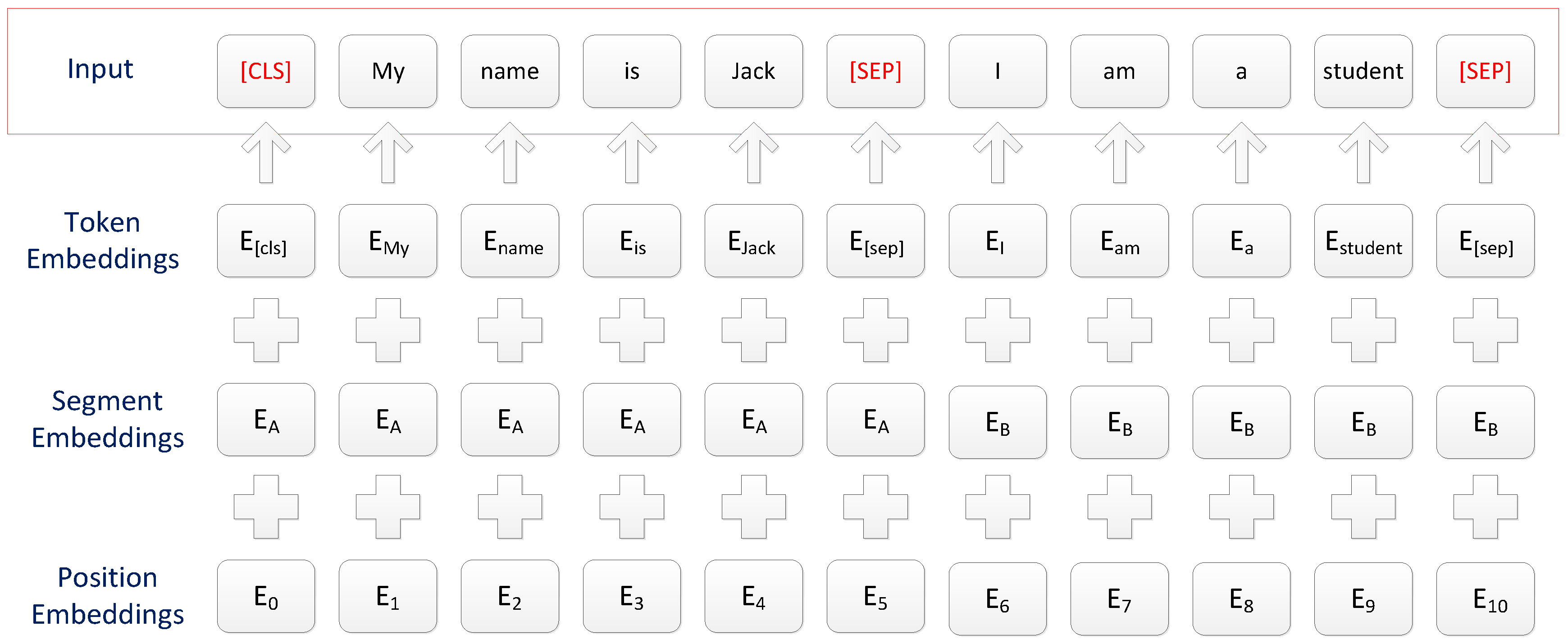

3.1. BERT Pre-Trained Language Model

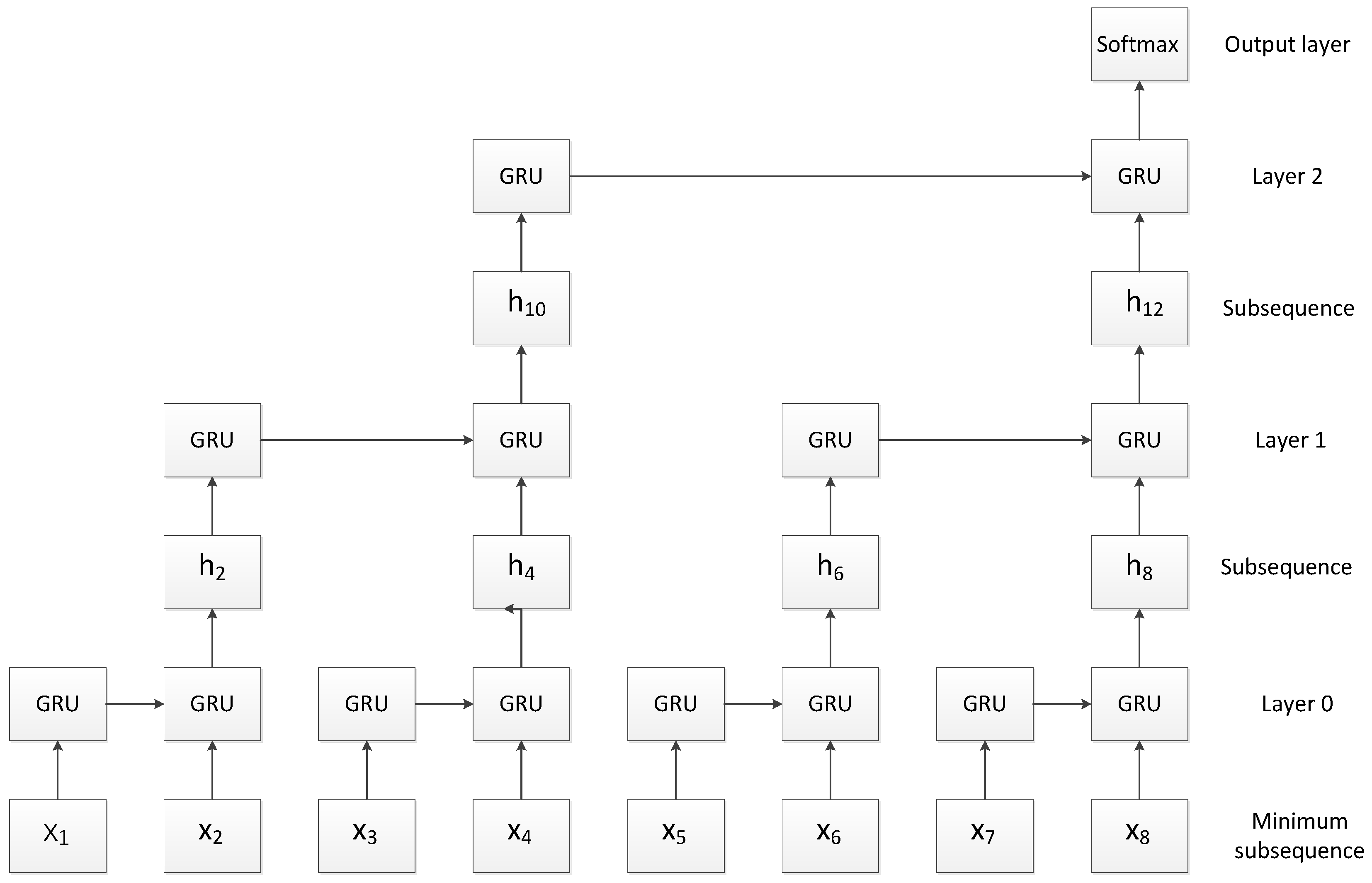

3.2. Slice Recurrent Neural Network

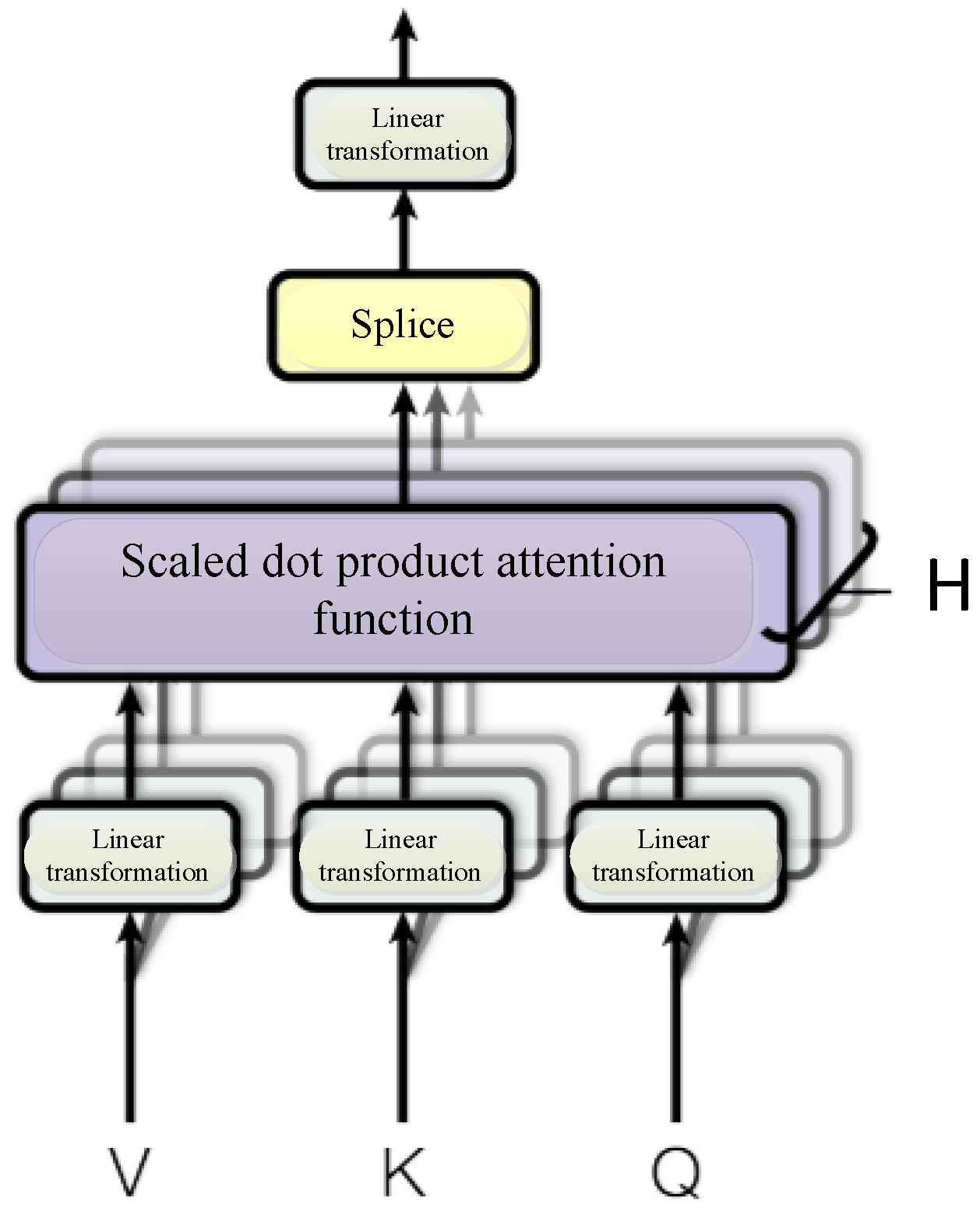
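
Section 3.2 builds on the sliced recurrent network of Yu and Liu [9]. As a minimal sketch, assuming their slicing scheme, a padded token sequence is split into n equal parts and each part is split again, k times in total; the leaves of the resulting tree are the minimal subsequences that the bottom GRU layer can process in parallel. The function names are hypothetical and the code only builds the data structure, not the recurrent layers.

```python
from typing import Any, List, Sequence


def slice_sequence(tokens: Sequence[Any], n: int, k: int) -> List[Any]:
    """Recursively split tokens into n equal parts, k times (SRNN-style slicing).

    Returns a nested list of depth k whose leaves are the minimal subsequences.
    """
    if k == 0:
        return list(tokens)
    if len(tokens) % n != 0:
        raise ValueError("sequence length must be divisible by n at every level")
    size = len(tokens) // n
    return [slice_sequence(tokens[i * size:(i + 1) * size], n, k - 1) for i in range(n)]


def minimal_subsequences(tree: List[Any], k: int) -> List[List[Any]]:
    """Flatten a depth-k slicing tree back into its n**k leaves, left to right."""
    if k == 0:
        return [tree]
    leaves: List[List[Any]] = []
    for child in tree:
        leaves.extend(minimal_subsequences(child, k - 1))
    return leaves


if __name__ == "__main__":
    tokens = [f"w{i}" for i in range(16)]  # hypothetical 16-token input
    tree = slice_sequence(tokens, n=2, k=2)
    for leaf in minimal_subsequences(tree, k=2):
        print(leaf)  # 4 leaves of 4 tokens each
```

In a sliced GRU, the bottom layer would run over every leaf independently, and each higher layer would read the final states of its n children in order.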

3.3. Multi-Head Self-Attention Mechanism

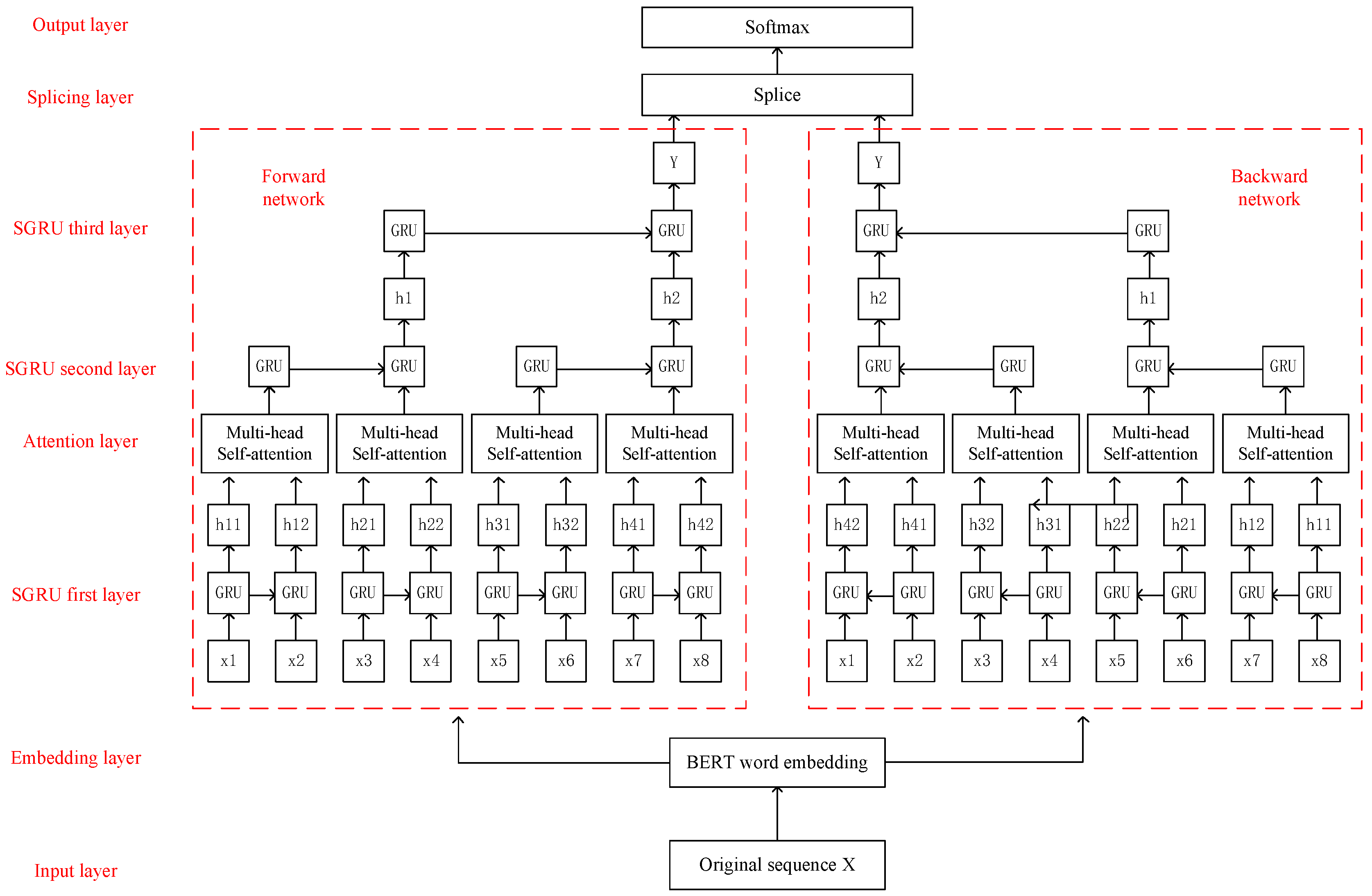
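
Section 3.3 relies on the multi-head self-attention of Vaswani et al. [10]. The dependency-free sketch below shows the core computation: the input is projected into queries, keys, and values, each head attends within its own slice of the feature dimensions using softmax(QK^T / sqrt(d_k)) V, and the head outputs are concatenated. It is an illustration, not the authors' code: the projection matrices are supplied by the caller, the final output projection W^O is left out, and the function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                              num_heads: int) -> Matrix:
    """Scaled dot-product self-attention computed separately in num_heads subspaces.

    x has one row per token; w_q, w_k, w_v are d_model x d_model projections.
    The output projection W^O of Vaswani et al. is omitted for brevity.
    """
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_k = d_model // num_heads
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    head_outputs: List[Matrix] = []
    for h in range(num_heads):
        cols = slice(h * d_k, (h + 1) * d_k)  # this head's feature subspace
        q_h = [row[cols] for row in q]
        k_h = [row[cols] for row in k]
        v_h = [row[cols] for row in v]
        # scores[i][j] = q_i . k_j; scale by sqrt(d_k), then softmax each row
        scores = matmul(q_h, [list(c) for c in zip(*k_h)])
        weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
        head_outputs.append(matmul(weights, v_h))
    # concatenate the heads back along the feature axis, token by token
    return [[val for head in head_outputs for val in head[i]] for i in range(len(x))]
```

Because each head sees only d_model / num_heads features, the heads can specialise in different relations between words, which is the "different subspaces" property cited in the contributions.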

3.4. Multi-Head Self-Attention Aware Bi-SGRU Classification Model
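
The classification model in this section combines the sliced GRU with bidirectional reading. The toy sketch below isolates only the bidirectional part: one GRU reads the sequence left to right, a second reads it right to left, and their final states are concatenated. It uses a single hidden unit and hypothetical weights so the gate arithmetic stays visible; the update rule h = (1 - z) * h_prev + z * h_tilde is one of the two gate conventions found in the GRU literature [36], and a real model would use vector states and learned parameters.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


def sigmoid(x: float) -> float:
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


@dataclass
class ScalarGRU:
    """A GRU with one input feature and one hidden unit (toy, hypothetical weights)."""
    w_z: float = 0.0
    u_z: float = 0.0
    b_z: float = 0.0
    w_r: float = 0.0
    u_r: float = 0.0
    b_r: float = 0.0
    w_h: float = 0.0
    u_h: float = 0.0
    b_h: float = 0.0

    def step(self, x: float, h: float) -> float:
        z = sigmoid(self.w_z * x + self.u_z * h + self.b_z)  # update gate
        r = sigmoid(self.w_r * x + self.u_r * h + self.b_r)  # reset gate
        h_tilde = math.tanh(self.w_h * x + self.u_h * (r * h) + self.b_h)  # candidate
        return (1.0 - z) * h + z * h_tilde  # blend previous state and candidate

    def run(self, xs: List[float], h0: float = 0.0) -> float:
        h = h0
        for x in xs:
            h = self.step(x, h)
        return h


def bi_gru(forward: ScalarGRU, backward: ScalarGRU, xs: List[float]) -> Tuple[float, float]:
    """Final forward state and final backward state, concatenated as a pair."""
    return forward.run(xs), backward.run(list(reversed(xs)))
```

In the sliced variant, this bidirectional pass would be applied at each level of the slicing tree rather than once over the whole sequence.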

4. Experiment Analysis

4.1. Dataset

4.2. Experimental Environment

4.3. Evaluation Metric

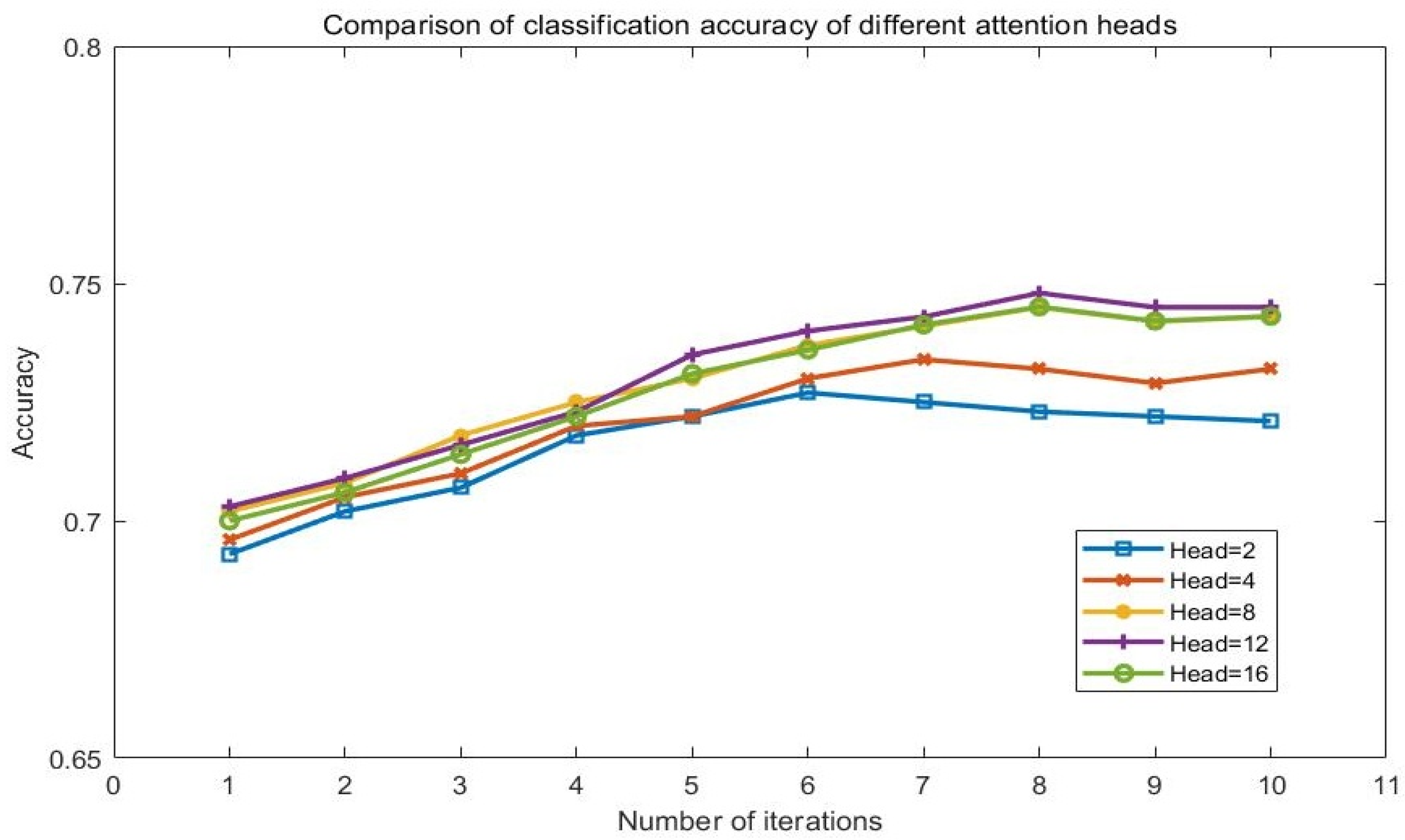
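
The metric itself is shown only as an image. Assuming it is standard classification accuracy, which is what the results tables report as Accuracy (%), it is the share of reviews whose predicted class equals the gold label. The function name and the toy star-rating labels below are hypothetical.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Percentage of samples whose predicted class matches the gold label."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * correct / len(y_true)


if __name__ == "__main__":
    gold = [1, 2, 3, 4, 5]  # hypothetical 1-5 star labels
    pred = [1, 2, 3, 4, 1]
    print(f"accuracy = {accuracy(gold, pred):.2f}%")
```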

4.4. Parameter Configuration

4.5. Experiment Procedure

4.6. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Nasukawa, T.; Yi, J. Sentiment Analysis: Capturing Favorability Using Natural Language Processing. In Proceedings of the 2nd International Conference on Knowledge Capture, Sanibel Island, FL, USA, 23–25 October 2003; pp. 70–77.

2. Cheng, X.; Zhu, L.; Zhu, Q.; Wang, J. The Framework of Network Public Opinion Monitoring and Analyzing System Based on Semantic Content Identification. J. Converg. Inf. Technol. 2010, 5, 48–55.

3. Barnes, S.; Vidgen, R. Data triangulation in action: Using comment analysis to refine web quality metrics. In Proceedings of the 13th European Conference on Information Systems, Regensburg, Germany, 26–28 May 2005; pp. 92–103.

4. Mann, G.; Mimno, D.; McCallum, A. Bibliometric impact measures leveraging topic analysis. In Proceedings of the 6th ACM/IEEE-CS Joint Conference on Digital Libraries, Chapel Hill, NC, USA, 11–15 June 2006; pp. 65–74.

5. Cambria, E. Affective Computing and Sentiment Analysis. IEEE Intell. Syst. 2016, 31, 102–107.

6. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. Comput. Sci. 2013, 25, 44–51.

7. Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

8. Lee, G.; Jeong, J.; Seo, S.; Kim, C.; Kang, P. Sentiment classification with word localization based on weakly supervised learning with a convolutional neural network. Knowl.-Based Syst. 2018, 152, 70–82.

9. Yu, Z.; Liu, G. Sliced Recurrent Neural Networks. arXiv 2018, arXiv:1807.02291.

10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

11. Kim, Y. Convolutional neural networks for sentence classification. arXiv 2014, arXiv:1408.5882.

12. Ouyang, X.; Zhou, P.; Li, C.; Liu, L. Sentiment analysis using convolutional neural network. In Proceedings of the IEEE International Conference on Computer and Information Technology Ubiquitous Computing and Communications Dependable, Liverpool, UK, 26–28 October 2015; pp. 2359–2364.

13. Huang, S.; Bao, L.; Cao, Y.; Chen, Z.; Lin, C.-Y.; Ponath, C.R.; Sun, J.-T.; Zhou, M.; Wang, J. Smart Sentiment Classifier for Product Reviews. U.S. Patent Application 11/950,512, 9 October 2008.

14. Bradbury, J.; Merity, S.; Xiong, C.; Socher, R. Quasi-recurrent neural networks. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017; pp. 568–577.

15. Xue, W.; Li, T. Aspect Based Sentiment Analysis with Gated Convolutional Networks. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 2514–2523.

16. Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

17. Zhang, X.; Zhao, J.; LeCun, Y. Character-level Convolutional Networks for Text Classification. Adv. Neural Inf. Process. Syst. 2015, 28, 649–657.

18. Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhutdinov, R.; Zemel, R.; Bengio, Y. Show, Attend and Tell: Neural image caption generation with visual attention. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2048–2057.

19. Zhang, Y.; Er, M.; Venkatesan, R.; Wang, N.; Pratama, M. Sentiment classification using Comprehensive Attention Recurrent models. In Proceedings of the International Joint Conference on Neural Networks, Vancouver, BC, Canada, 24–29 July 2016; pp. 1562–1569.

20. Mishev, K.; Gjorgjevikj, A.; Stojanov, R.; Mishkovski, I.; Vodenska, I.; Chitkushev, L.; Trajanov, D. Performance Evaluation of Word and Sentence Embeddings for Finance Headlines Sentiment Analysis. In ICT Innovations 2019. Big Data Processing and Mining; Springer: Cham, Switzerland, 2019.

21. Park, H.; Song, M.; Shin, K. Deep learning models and datasets for aspect term sentiment classification: Implementing holistic recurrent attention on target-dependent memories. Knowl.-Based Syst. 2020, 187, 104825.1–104825.15.

22. Zeng, L.; Ren, W.; Shan, L. Attention-based bidirectional gated recurrent unit neural networks for well logs prediction and lithology identification. Neurocomputing 2020, 414, 153–171.

23. Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489.

24. Chen, Q.; Hu, Q.; Huang, J.; He, L.; An, W. Enhancing Recurrent Neural Networks with Positional Attention for Question Answering. In Proceedings of the 40th International ACM SIGIR Conference, Tokyo, Japan, 7–11 August 2017; pp. 993–996.

25. Cambria, E.; Li, Y.; Xing, Z.; Poria, S.; Kwok, K. SenticNet 6: Ensemble Application of Symbolic and Subsymbolic AI for Sentiment Analysis. In Proceedings of the 29th ACM International Conference on Information and Knowledge Management, ACM, New York, NY, USA, 19–23 October 2020; pp. 105–114.

26. Ambartsoumian, A.; Popowich, F. Self-Attention: A Better Building Block for Sentiment Analysis Neural Network Classifiers. In Proceedings of the 9th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, Brussels, Belgium, 31 October 2018; pp. 130–139.

27. Shen, T.; Jiang, J.; Zhou, T.; Pan, R.; Long, G.; Zhang, C. DiSAN: Directional self-attention network for RNN/CNN-free language understanding. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 2185–2196.

28. Akhtar, M.S.; Ekbal, A.; Cambria, E. How Intense Are You? Predicting Intensities of Emotions and Sentiments using Stacked Ensemble [Application Notes]. IEEE Comput. Intell. Mag. 2020, 15, 64–75.

29. Basiri, M.E.; Nemati, S.; Abdar, M.; Cambria, E.; Acharya, U.R. ABCDM: An Attention-based Bidirectional CNN-RNN Deep Model for sentiment analysis. Future Gener. Comput. Syst. 2021, 115, 279–294.

30. Howard, J.; Ruder, S. Universal Language Model Fine-tuning for Text Classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Long Papers), Melbourne, Australia, 15–20 July 2018; Volume 1, pp. 328–339.

31. Yuan, Z.; Wu, S.; Wu, F.; Liu, J.; Huang, Y. Domain attention model for multi-domain sentiment classification. Knowl.-Based Syst. 2018, 155, 1–10.

32. Sutskever, I.; Vinyals, O.; Le, Q. Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 2014, 27, 3104–3112.

33. Semenov, A.; Boginski, V.; Pasiliao, E. Neural Networks with Multidimensional Cross-Entropy Loss Functions. In Computational Data and Social Networks; Springer: Cham, Switzerland, 2019.

34. Oord, A.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kalchbrenner, N.; Senior, A.; Kavukcuoglu, K. WaveNet: A Generative Model for Raw Audio. arXiv 2016, arXiv:1609.03499v1.

35. Cheng, J.; Dong, L.; Lapata, M. Long Short-Term Memory-Networks for Machine Reading. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 1526–1530.

36. Cho, K.; Merriënboer, B.; Gülçehre, Ç.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1724–1734.

37. Yang, Q.; Zhou, J.; Cheng, C.; Wei, X.; Chu, S. An Emotion Recognition Method Based on Selective Gated Recurrent Unit. In Proceedings of the 2018 IEEE International Conference on Progress in Informatics and Computing (PIC), Suzhou, China, 14–16 December 2018; pp. 33–37.

38. Sun, C.; Liu, Y.; Jia, C.; Liu, B.; Lin, L. Recognizing Text Entailment via Bidirectional LSTM Model with Inner-Attention. In Intelligent Computing Methodologies; Springer: Cham, Switzerland, 2017; pp. 448–457.

39. Huddar, M.G.; Sannakki, S.S.; Rajpurohit, V.S. Correction to: Attention-based multimodal contextual fusion for sentiment and emotion classification using bidirectional LSTM. Multimed. Tools Appl. 2021, 80, 13077.

40. Sachin, S.; Tripathi, A.; Mahajan, N.; Aggarwal, S.; Nagrath, P. Sentiment Analysis Using Gated Recurrent Neural Networks. SN Comput. Sci. 2020, 1, 74.

41. Yang, Q.; Rao, Y.; Xie, H.; Wang, J.; Wang, F.L.; Chan, W.H. Segment-level joint topic-sentiment model for online review analysis. IEEE Intell. Syst. 2019, 34, 43–50.

| Dataset Name | Max Words | Average Words | Vocabulary Size | Classes |
|---|---|---|---|---|
| Yelp 2015 dataset | 1092 | 108 | 228,715 | 5 |
| Amazon dataset | 441 | 83 | 1,274,916 | 5 |

| Component | Configuration |
|---|---|
| Operating system | Windows 10 |
| CPU | Intel i5-7200 |
| RAM | 8 GB |
| Graphics card | NVIDIA GeForce GTX 1070 |
| GPU acceleration | CUDA 10.1 / cuDNN 7.1 |

| Parameter Name | Parameter Value |
|---|---|
| Word vector dimension | 768 |
| Batch size | 100 |
| Number of filters | 50 |
| Number of attention heads | 12 |
| Number of iterations | 10 |
| Optimizer | Adam |
| Dropout rate | 0.5 |
| Learning rate | 0.001 |
| Loss function | Cross-entropy |
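
For reproduction attempts it can help to keep these settings in one place. The sketch below transcribes the parameter table into a hypothetical config object (the field names are our own) and adds a numerically stable softmax cross-entropy for a single sample, matching the listed loss function. The head_dim method assumes the 12 attention heads split the 768-dimensional word vectors, which this section does not state; if the heads operate on the Bi-GRU outputs instead, the width per head would differ.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TrainingConfig:
    """Values transcribed from the parameter table; field names are hypothetical."""
    word_vector_dim: int = 768
    batch_size: int = 100
    num_filters: int = 50
    num_attention_heads: int = 12
    num_iterations: int = 10
    optimizer: str = "Adam"
    dropout_rate: float = 0.5
    learning_rate: float = 0.001
    num_classes: int = 5  # both datasets have five classes (dataset table)

    def head_dim(self) -> int:
        """Per-head width, ASSUMING the heads split the word-vector dimension."""
        if self.word_vector_dim % self.num_attention_heads != 0:
            raise ValueError("word_vector_dim must be divisible by num_attention_heads")
        return self.word_vector_dim // self.num_attention_heads


def cross_entropy(logits: List[float], target: int) -> float:
    """Softmax cross-entropy for one sample: logsumexp(logits) - logits[target]."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[target]
```

Under that assumption each head works in a 64-dimensional subspace, the same split BERT-base uses for its own 12 heads. With uniform logits over the 5 classes, the loss equals ln 5 ≈ 1.609, a useful sanity check for an untrained model.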

| Dataset | Model Name | Accuracy (%) | Time per Iteration (min) |
|---|---|---|---|
| Yelp 2015 dataset | DCCNN [34] | 71.83 | 4.25 |
| | LSTM [35] | 74.05 | 163.38 |
| | GRU [36] | 73.76 | 136.74 |
| | SGRU [37] | 73.91 | 10.33 |
| | BiLSTM-Attention [38] | 74.18 | 277.75 |
| | BiLSTM with Attention [39] | 74.22 | 219.84 |
| | Ours | 74.37 | 25.79 |
| Amazon dataset | DCCNN [34] | 60.18 | 14.70 |
| | LSTM [35] | 62.12 | 385.11 |
| | GRU [36] | 61.97 | 322.25 |
| | SGRU [37] | 62.03 | 40.05 |
| | BiLSTM-Attention [38] | 62.29 | 638.09 |
| | BiLSTM with Attention [39] | 62.34 | 77.70 |
| | Ours | 62.57 | 101.83 |
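
The efficiency comparison is easier to read as ratios. The sketch below copies the per-iteration times from the table above (the key "proposed" stands for the row of the proposed model) and divides each baseline's time by the proposed model's time; the dictionary and function names are hypothetical.

```python
# Minutes per iteration, copied from the results table; "proposed" is this paper's model.
TIME_PER_ITERATION_MIN = {
    "Yelp 2015": {
        "DCCNN": 4.25, "LSTM": 163.38, "GRU": 136.74, "SGRU": 10.33,
        "BiLSTM-Attention": 277.75, "BiLSTM with Attention": 219.84, "proposed": 25.79,
    },
    "Amazon": {
        "DCCNN": 14.70, "LSTM": 385.11, "GRU": 322.25, "SGRU": 40.05,
        "BiLSTM-Attention": 638.09, "BiLSTM with Attention": 77.70, "proposed": 101.83,
    },
}


def speedup(dataset: str, baseline: str) -> float:
    """Baseline time / proposed time (> 1 means the proposed model is faster)."""
    times = TIME_PER_ITERATION_MIN[dataset]
    return times[baseline] / times["proposed"]


if __name__ == "__main__":
    for dataset, times in TIME_PER_ITERATION_MIN.items():
        for baseline in times:
            if baseline != "proposed":
                print(f"{dataset:10s} {baseline:22s} x{speedup(dataset, baseline):.2f}")
```

On Yelp 2015 the proposed model is roughly 5 to 11 times faster per iteration than the GRU, LSTM, and BiLSTM baselines, while DCCNN and SGRU are faster but less accurate. On Amazon, BiLSTM with Attention [39] is also faster according to the table (77.70 vs 101.83 min), at slightly lower accuracy (62.34% vs 62.57%).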

| Dataset | Model | Accuracy (%) | Time per Iteration (min) |
|---|---|---|---|
| Yelp 2015 dataset | SGRU(2,6) | 73.92 | 31.25 |
| | SGRU(4,3) | 74.33 | 24.98 |
| | SGRU(8,2) | 74.37 | 25.79 |
| | SGRU(16,1) | 74.55 | 39.23 |
| Amazon dataset | SGRU(2,6) | 62.05 | 118.32 |
| | SGRU(4,3) | 62.54 | 103.52 |
| | SGRU(8,2) | 62.57 | 101.83 |
| | SGRU(16,1) | 62.80 | 137.63 |
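
Assuming SGRU(n, k) follows the SRNN(n, k) notation of Yu and Liu [9] (split into n parts, k times), the configurations above differ in how many minimal subsequences and how many recurrent layers they produce. The sketch below tabulates this next to the reported numbers; the helper names are hypothetical.

```python
from typing import Dict, Tuple

# (n, k) -> (Yelp acc %, Yelp min/iter, Amazon acc %, Amazon min/iter), from the table above.
SLICING_RESULTS: Dict[Tuple[int, int], Tuple[float, float, float, float]] = {
    (2, 6): (73.92, 31.25, 62.05, 118.32),
    (4, 3): (74.33, 24.98, 62.54, 103.52),
    (8, 2): (74.37, 25.79, 62.57, 101.83),
    (16, 1): (74.55, 39.23, 62.80, 137.63),
}


def num_minimal_subsequences(n: int, k: int) -> int:
    """Leaves of the slicing tree: n parts, sliced k times (SRNN notation, assumed)."""
    return n ** k


def num_layers(k: int) -> int:
    """One recurrent layer per slicing level plus the bottom layer (assumed)."""
    return k + 1


if __name__ == "__main__":
    for (n, k), (yelp_acc, yelp_t, amz_acc, amz_t) in SLICING_RESULTS.items():
        print(f"SGRU({n},{k}): {num_minimal_subsequences(n, k):3d} leaves, "
              f"{num_layers(k)} layers | Yelp {yelp_acc}% in {yelp_t} min | "
              f"Amazon {amz_acc}% in {amz_t} min")
```

Under this reading, SGRU(2,6), SGRU(4,3), and SGRU(8,2) all produce 64 minimal subsequences and differ only in hierarchy depth, while SGRU(16,1) produces 16 longer ones. SGRU(16,1) reaches the highest accuracy on both datasets but is also the slowest per iteration; SGRU(8,2), whose numbers match the proposed model in the main results table, has the second-highest accuracy on both datasets, is the fastest on Amazon, and is within 1 minute of the fastest on Yelp 2015.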

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Wu, Z.; Liu, K.; Zhao, Z.; Wang, J.; Wu, C. Text Sentiment Classification Based on BERT Embedding and Sliced Multi-Head Self-Attention Bi-GRU. Sensors 2023, 23, 1481. https://doi.org/10.3390/s23031481

Zhang X, Wu Z, Liu K, Zhao Z, Wang J, Wu C. Text Sentiment Classification Based on BERT Embedding and Sliced Multi-Head Self-Attention Bi-GRU. Sensors. 2023; 23(3):1481. https://doi.org/10.3390/s23031481

Chicago/Turabian Style: Zhang, Xiangsen, Zhongqiang Wu, Ke Liu, Zengshun Zhao, Jinhao Wang, and Chengqin Wu. 2023. "Text Sentiment Classification Based on BERT Embedding and Sliced Multi-Head Self-Attention Bi-GRU" Sensors 23, no. 3: 1481. https://doi.org/10.3390/s23031481

APA Style: Zhang, X., Wu, Z., Liu, K., Zhao, Z., Wang, J., & Wu, C. (2023). Text Sentiment Classification Based on BERT Embedding and Sliced Multi-Head Self-Attention Bi-GRU. Sensors, 23(3), 1481. https://doi.org/10.3390/s23031481