Augmented Reality-Assisted Ultrasound Breast Biopsy

Abstract

1. Introduction

2. Materials and Methods

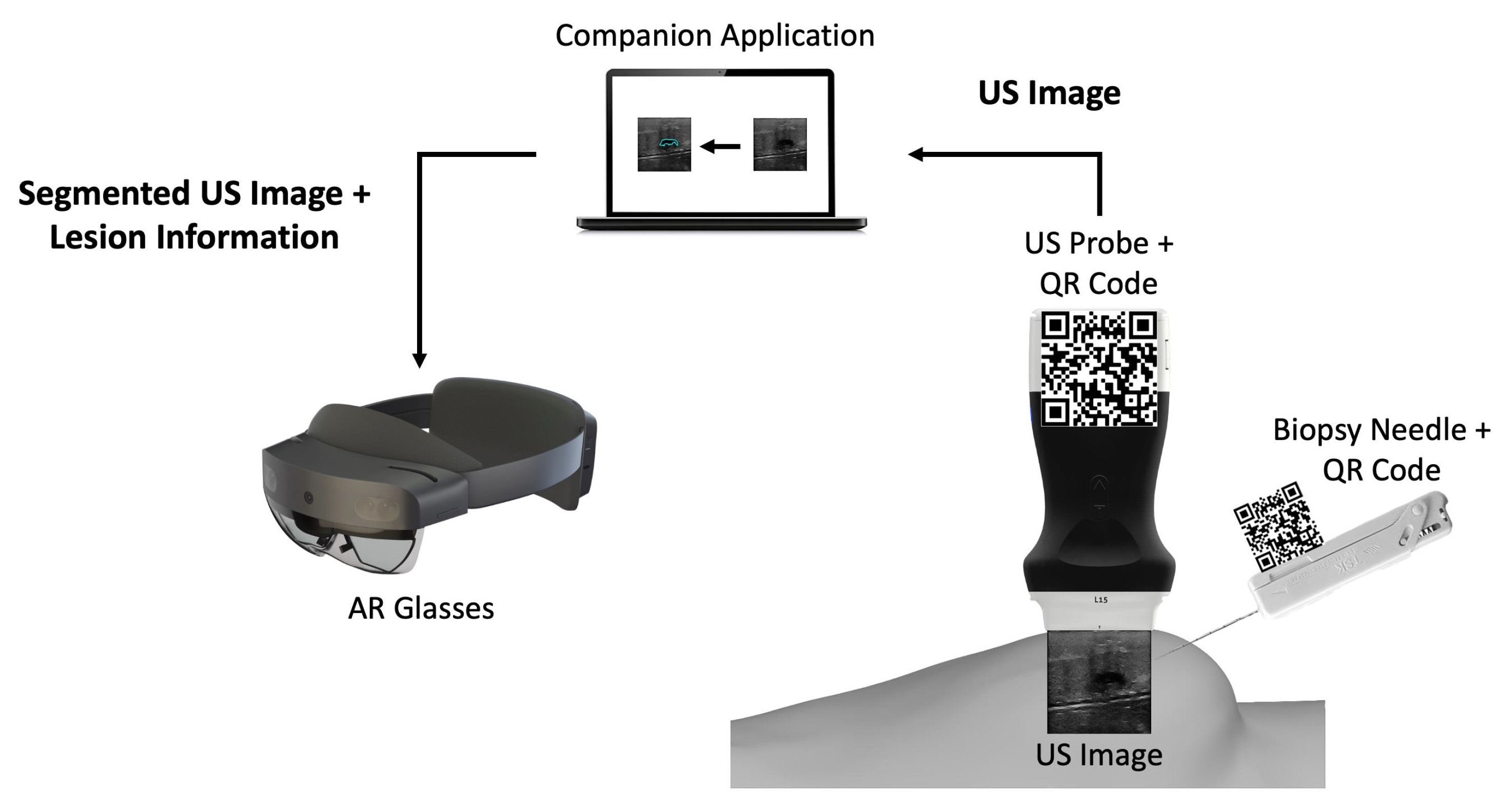

2.1. Overview

2.2. Companion Application

2.2.1. US Breast Lesion Segmentation

2.2.2. Communication Protocol

2.3. AR Application

2.3.1. Needle and US Probe Tracking

2.3.2. AR Hologram Generation

3. Results

3.1. Latency and Execution Times

3.2. Usability

4. Discussion

5. Study Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| US | Ultrasound |
| CNN | Convolutional Neural Network |
| AR | Augmented Reality |
| GPU | Graphics Processing Unit |
| GUI | Graphical User Interface |
| TCP | Transmission Control Protocol |
| UDP | User Datagram Protocol |
| SDK | Software Development Kit |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Costa, N.; Ferreira, L.; de Araújo, A.R.V.F.; Oliveira, B.; Torres, H.R.; Morais, P.; Alves, V.; Vilaça, J.L. Augmented Reality-Assisted Ultrasound Breast Biopsy. Sensors 2023, 23, 1838. https://doi.org/10.3390/s23041838

Costa N, Ferreira L, de Araújo ARVF, Oliveira B, Torres HR, Morais P, Alves V, Vilaça JL. Augmented Reality-Assisted Ultrasound Breast Biopsy. Sensors. 2023; 23(4):1838. https://doi.org/10.3390/s23041838

Chicago/Turabian Style: Costa, Nuno, Luís Ferreira, Augusto R. V. F. de Araújo, Bruno Oliveira, Helena R. Torres, Pedro Morais, Victor Alves, and João L. Vilaça. 2023. "Augmented Reality-Assisted Ultrasound Breast Biopsy." Sensors 23, no. 4: 1838. https://doi.org/10.3390/s23041838