The Virtual Sleep Lab—A Novel Method for Accurate Four-Class Sleep Staging Using Heart-Rate Variability from Low-Cost Wearables

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Sleep Training

2.3. Materials

2.4. Data and Statistical Analysis

2.5. Model Training, Testing, and Performance Measurement

3. Results

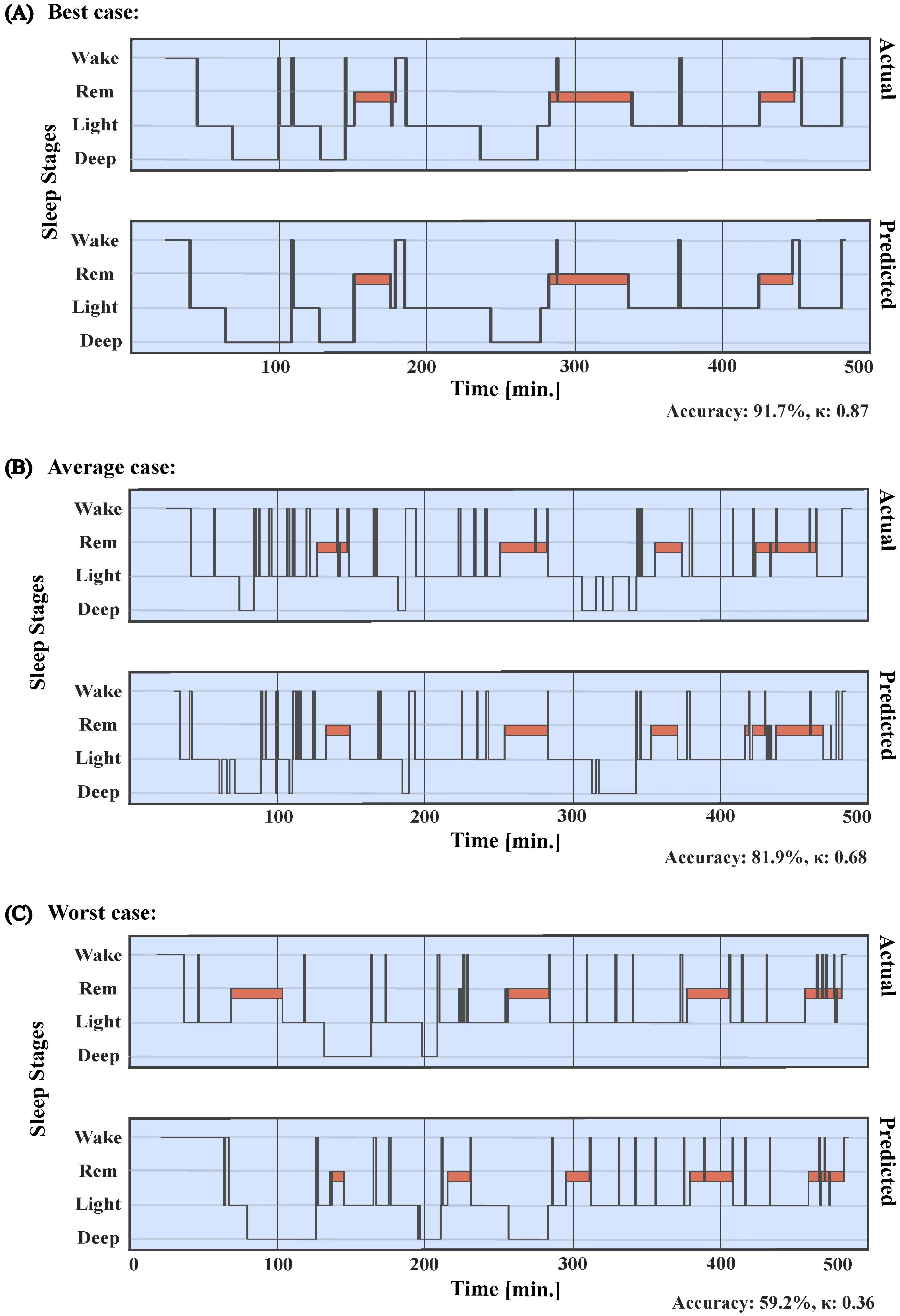

3.1. Model Performance

3.2. The Effects of Sleep Training on Subjective and Objective Sleep Variables

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| IBI | Inter-beat intervals |
| MCNN | Multi-resolution neural network |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Topalidis, P.; Heib, D.P.J.; Baron, S.; Eigl, E.-S.; Hinterberger, A.; Schabus, M. The Virtual Sleep Lab—A Novel Method for Accurate Four-Class Sleep Staging Using Heart-Rate Variability from Low-Cost Wearables. Sensors 2023, 23, 2390. https://doi.org/10.3390/s23052390
