Wide-Field-of-View Multispectral Camera Design for Continuous Turfgrass Monitoring

Abstract

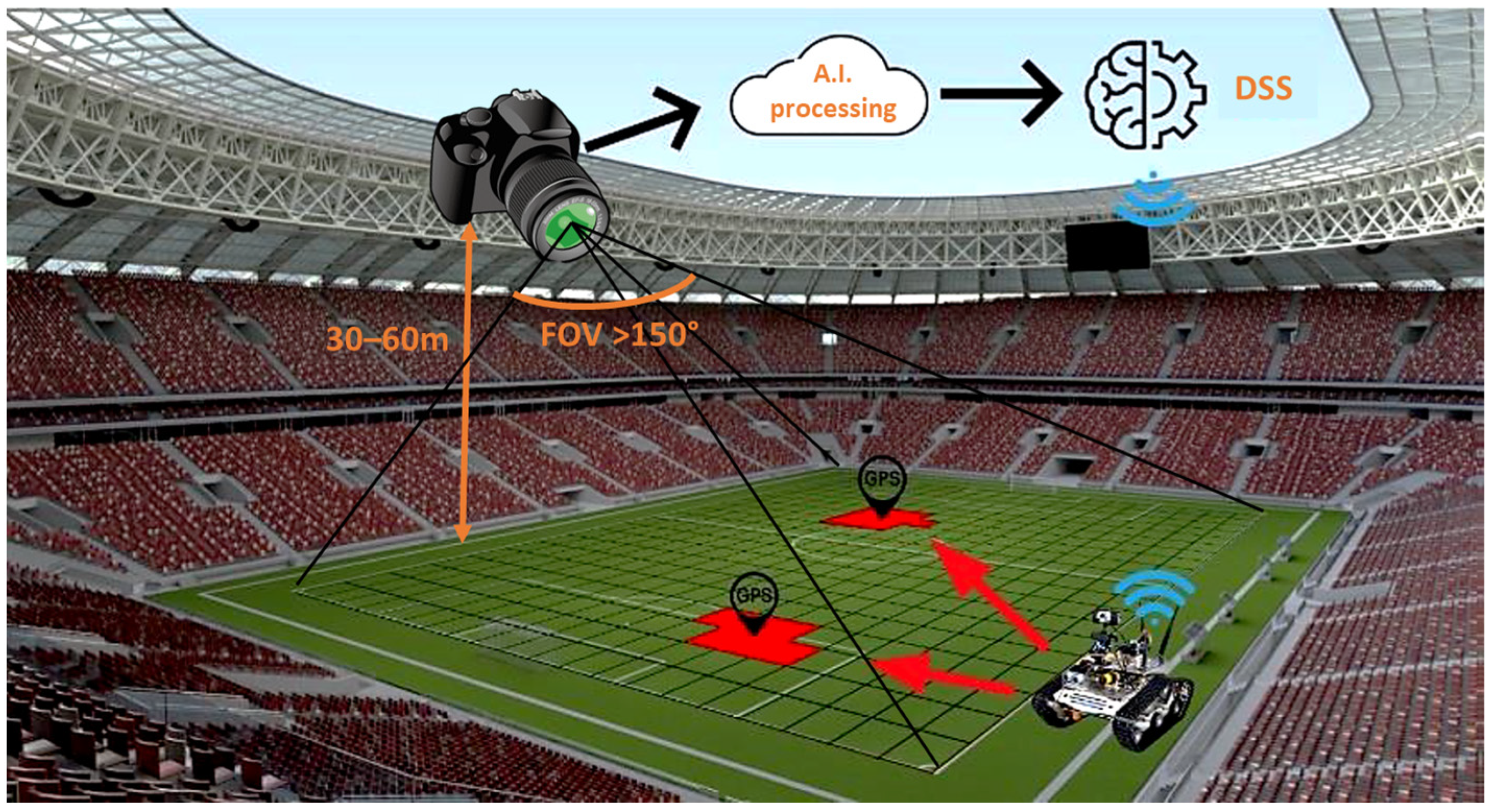

1. Introduction

| Design Type | Number of Lenses | Effective Focal Length (mm) | f-Number | Full FOV (Degree) | Total Optical Length (mm) | | MTF Value at a Frequency (lp/mm) | Detector Format (inch) | Surface Types | References |
|---|---|---|---|---|---|---|---|---|---|---|
| Fujifilm-86 | - | 2.7 | 1.8–16 | 136.3 | 60.5 | 0.53 | - | 1/2 | All SPH | [11] |
| Fujifilm-57 | - | 1.8 | 1.4–16 | 185.0 | 60.3 | 0.8 | - | 1/2 | All SPH | [11] |
| SunexDSL180 | - | 0.97 | 2.0 | 200 | 17.1 | 14 | - | 1/2.8 | All SPH | [12,13] |
| Entaniya 220 | 10 | - | 2.0 | 220 | 36 | 2 | >0.4 @ 100 | - | All SPH | [13,14] |
| Nippon 1964 | 9 | 8 | 2.8 | 180 | 88 | 3.84 | - | 1.112 | All SPH | [15] |
| Nippon 1971 | 12 | 6.3 | 2.8 | 220 | 205.9 | 3.14 | - | 1.112 | All SPH | [15] |
| Olympus 1973 | 10 | 8 | 2.8 | 180 | 125 | 9.75 | - | 1.112 | All SPH | [15] |
| Asahi 1985 | 10 | 8 | 2.8 | 180 | 139.7 | 0.02 | - | 1.112 | All SPH | [15] |
| Coastal 1997 | 11 | 7.45 | 2.8 | 185 | 174 | 1.85 | - | 1.112 | All SPH | [15] |

| Design Type | Number of Lenses | Effective Focal Length (mm) | f-Number | Full FOV (Degree) | Total Optical Length (mm) | | MTF Value at a Frequency (lp/mm) | Detector Format (inch) | Surface Types | References |
|---|---|---|---|---|---|---|---|---|---|---|
| Canopy lens | 13 | 2.21 | 2.97 | 180 | 70.36 | 32.5 | >0.6 @ 153 | 1/2 | All SPH | [16] |
| Compact lens | 6 | 2.5 | 230 | 17.3 | 0.03 | >0.3 @ 45 | 1/4 | 4 ASPH | [17] | |
| Low cost | 4 | 2.5 | 3 | 100 | 12.9 | 40 | >0.3 @ 40 | 1/4 | 2 ASPH | [18] |
| Simplified fisheye | 6 | 1 | 2.8 | 160 | 11.6 | 0.15 | >0.3 @ 115 | 1/3.2 | 1 ASPH | [19] |
| Ultrawide | 7 | 1.1 | 2.4 | 160 | 25 | 5 | >0.4 @ 178 | 1/6 | 4 ASPH | [20] |
| Miniature | 5 | 2.06 | 4 | 200 | 14.7 | 4.5 | >0.4 @ 75 | 2/3 | 1 ASPH | [21] |
| Zoom lens | 11 | 9.2 | 2.8 | 180 | 140 | 10 | >0.4 @ 50 | 1.112 | 2 ASPH | [22] |
| 360 lens | 7 | 1.43 | 2.0 | 190 | 27.0 | 7.1 | >0.1 @ 370 | 1/2.3 | 4 ASPH | [23] |
| Tracking lens | 4 | 0.963 | 2.5 | 180 | 11.8 | 1 | >0.6 @ 59.5 | 1/3 | 1 ASPH | [13] |
| Fingerprint | 3 | - | 1.6 | 128 | 2.4 | 1 | ≥0.5 @ 70 | - | All ASPH | [24] |
| Spaceborne | 5 | 3.3 | 2.9 | 140 | 85.5 | 74.6 | ≥0.4 @ 78 | 1/5.2 | 2 ASPH | [25] |
| Cascade | 6 | 50 | 3.1 | 116.4 | 230.32 | <0.2 | ≥0.285 @ 270 | 1/1.7 | 2 ASPH | [26] |
| Light field | 4 | 17.5 | - | 60 | - | - | - | 1/1.8 | SPH | [27] |
| Freeform | 5 | 1.8 | 2.44 | 109 | 3.19 | <2 | ≥0.31 @ 120 | 1/4.4 | 2 freeform | [28] |

| Design Type | Number of Lenses | Effective Focal Length (mm) | Wavelength Range (µm) | f-Number | Full FOV (Degree) | Total Optical Length (mm) | | MTF Value at a Frequency (lp/mm) | Surface Types | References |
|---|---|---|---|---|---|---|---|---|---|---|
| Commercially available WFOV thermal imaging systems | | | | | | | | | | |
| Fluke WFOV | - | - | 8–14 | - | 46 × 34 | 165 | - | - | - | [29] |
| FLIR wide-angle lens | - | 9.66 | 8–14 | 1.3 | 45 × 33.8 | 38 | - | - | - | [30] |
| Fotric wide-angle lens | - | - | 8–14 | - | 76 × 57 | - | - | - | - | [31] |
| Research on WFOV thermal imaging systems | | | | | | | | | | |
| Low-cost thermal lens | 2 | 13.6 | 8–14 | 1.1 | 48 | 32.6 | 3 | 0.5 @ 20 | All ASPH | [32] |
| LWIR earth sensor | 4 | 4.177 | 14–16 | 0.8 | 180 | - | 0.25 | 0.5 @ 15 | All ASPH | [33] |
| Spaceborne lens | 3 | - | 8–14 | 1 | 140 | 86.12 | 18.85 | 0.5 @ 15 | 1 ASPH | [34] |
| Monocentric design | 2 | 63.5 | 3–5 | 1 | 100 | 150 | - | 0.45 @ 70 | SPH | [35] |

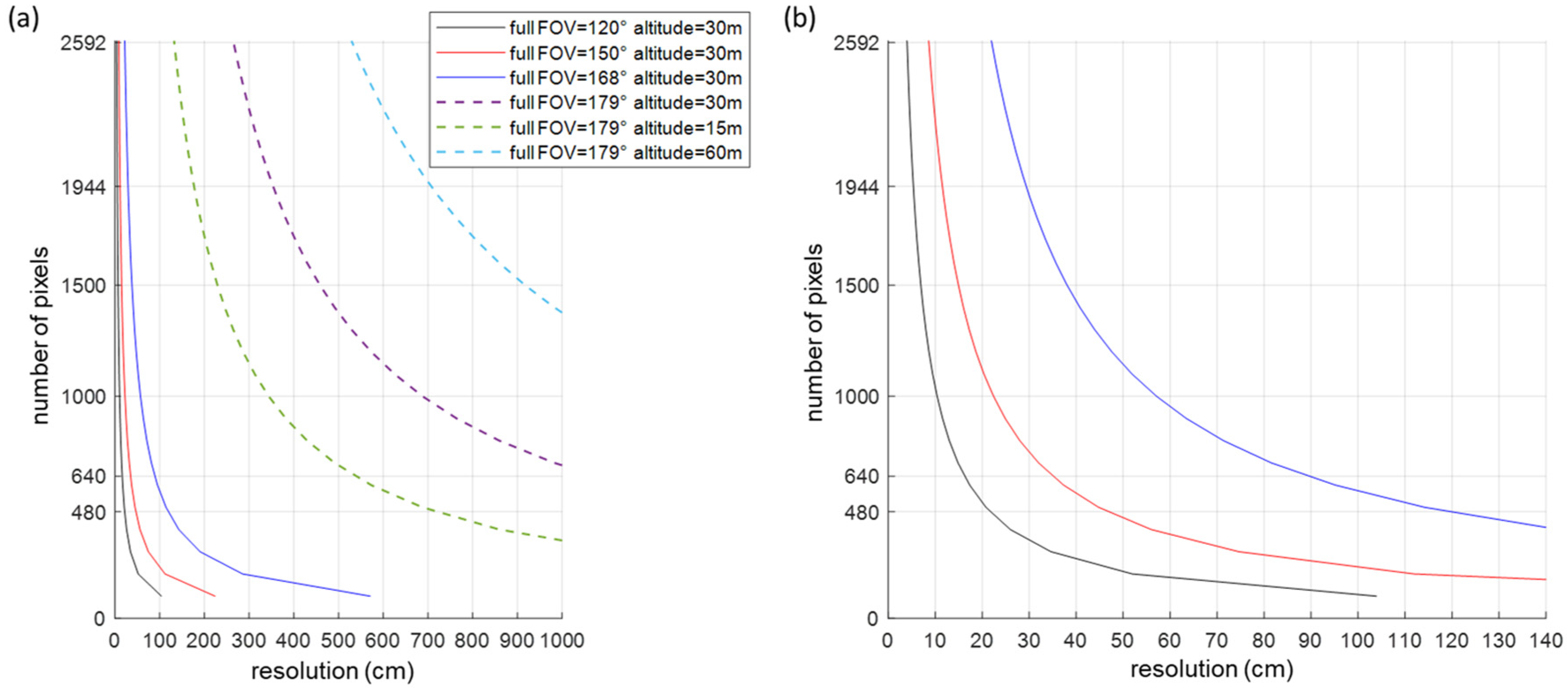

2. Materials and Methods: Optical Design Parameters and Trade-Offs

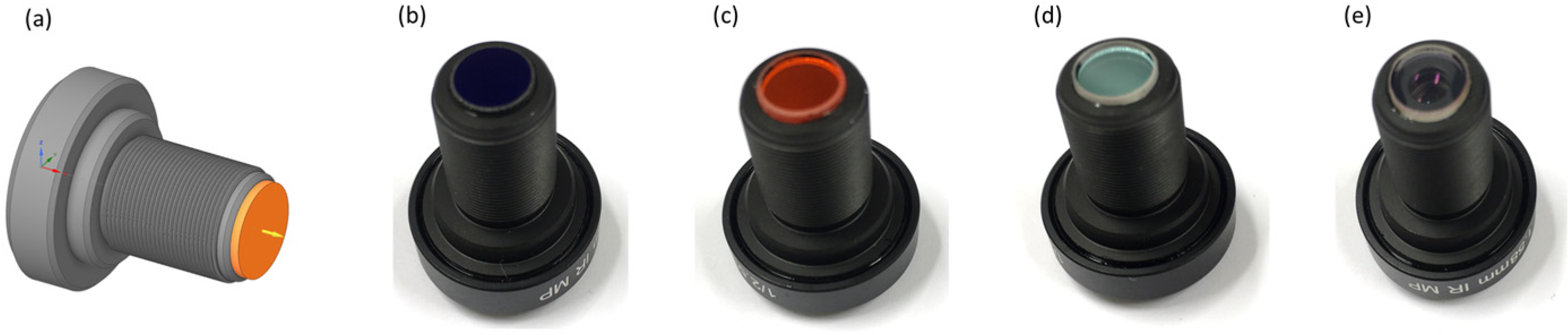

2.1. Visible and Near-Infrared Camera Design

2.2. Thermal Camera Design

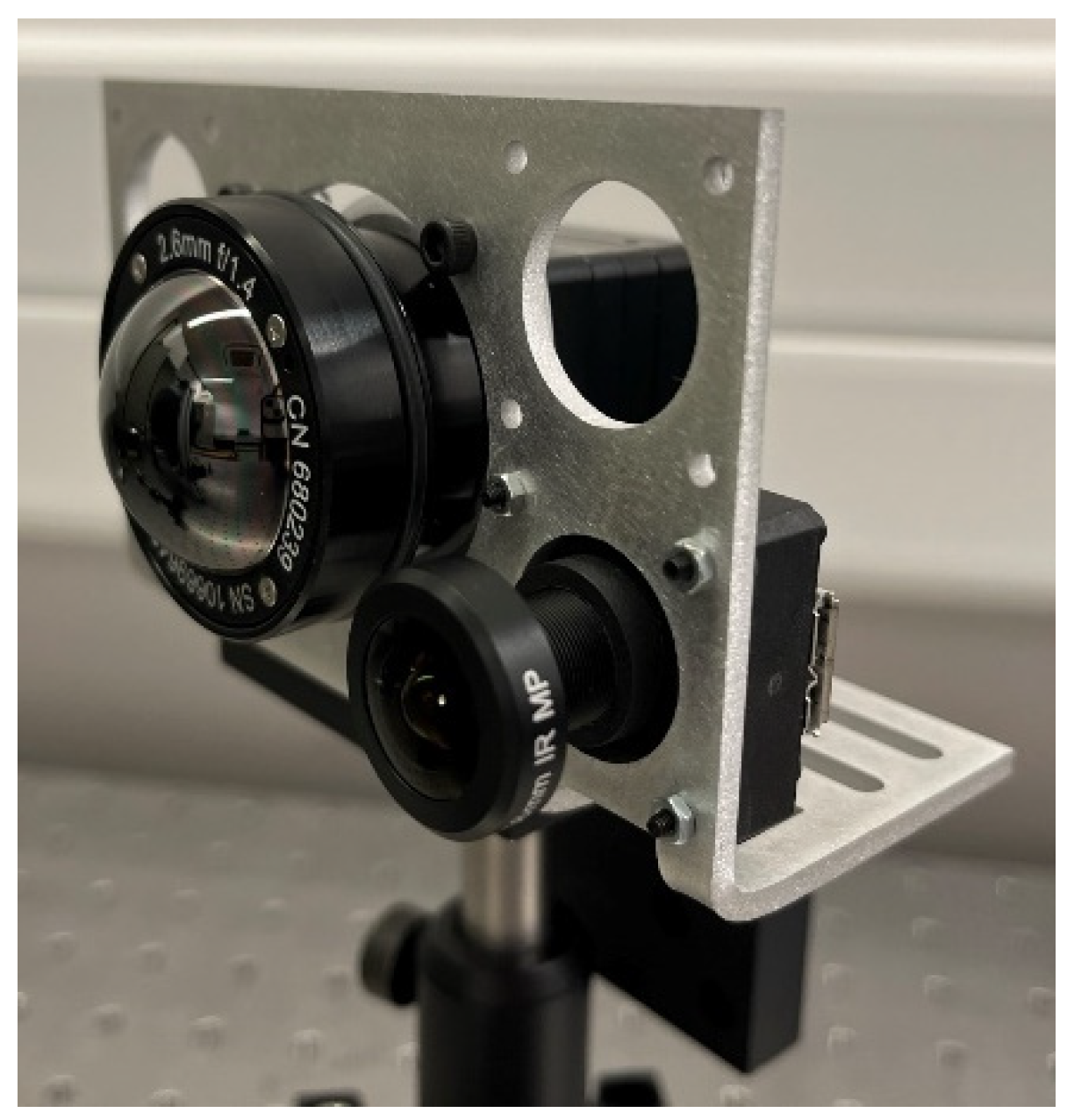

3. Results: Proof-of-Concept Demonstrator Evaluation

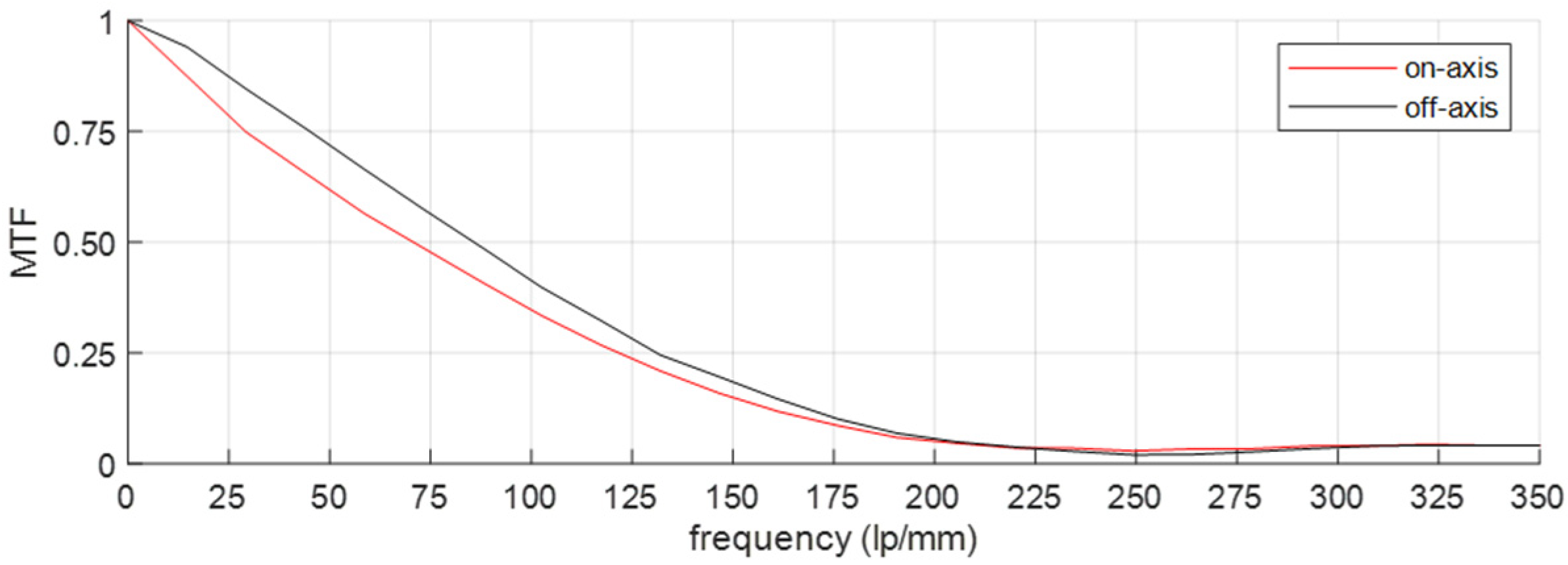

3.1. Characterization of the Visible and Near-Infrared Camera Channels

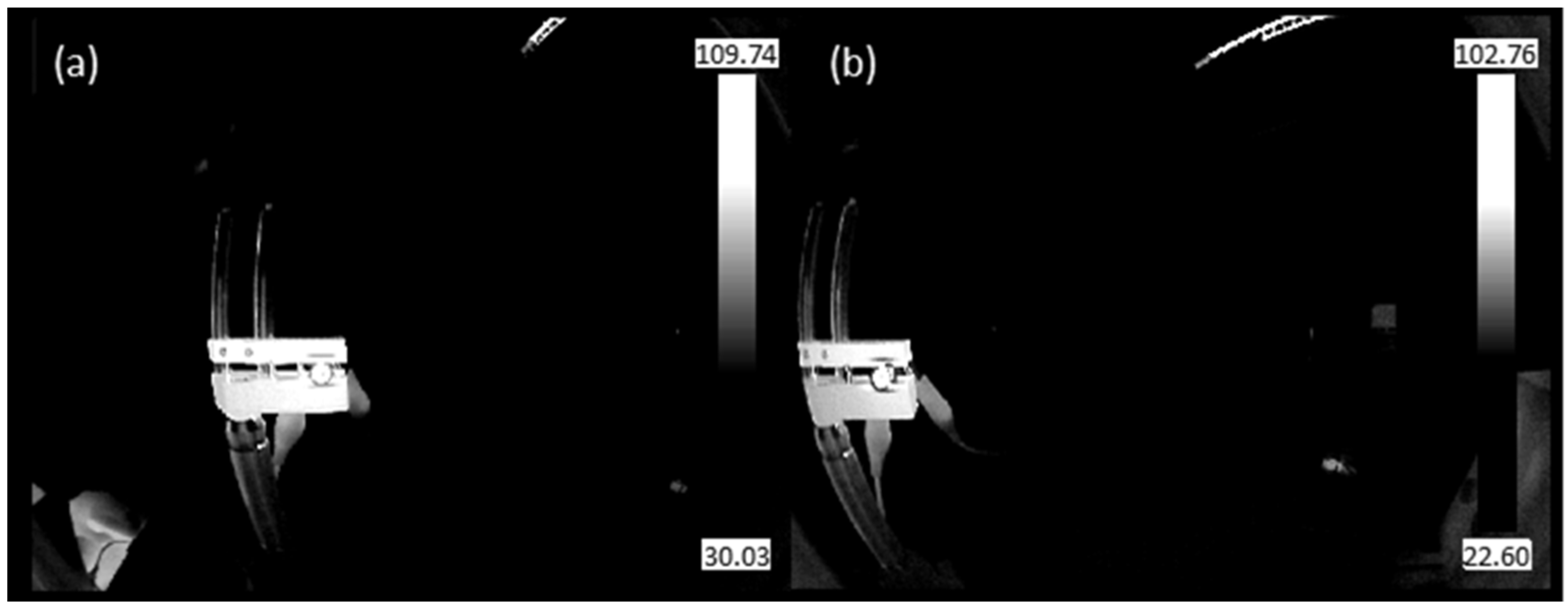

3.2. Characterization of the Thermal Camera Channel

4. Discussion and Future Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. European Court of Auditors. Sustainable Water Use in Agriculture; European Court of Auditors: Luxembourg, 2021.
2. European Commission Sustainable Use of Pesticides. Available online: https://ec.europa.eu/food/plants/pesticides/sustainable-use-pesticides_en (accessed on 24 June 2022).
3. Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-Based Multispectral Remote Sensing for Precision Agriculture: A Comparison between Different Cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136.
4. Hong, M.; Bremer, D.J.; van der Merwe, D. Thermal Imaging Detects Early Drought Stress in Turfgrass Utilizing Small Unmanned Aircraft Systems. Agrosyst. Geosci. Environ. 2019, 2, 1–9.
5. Nebiker, S.; Annen, A.; Scherrer, M.; Oesch, D. A Light-Weight Multispectral Sensor for Micro UAV–Opportunities for Very High Resolution Airborne Remote Sensing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXVI, 1193–1200.
6. Caturegli, L.; Gaetani, M.; Volterrani, M.; Magni, S.; Minelli, A.; Baldi, A.; Brandani, G.; Mancini, M.; Lenzi, A.; Orlandini, S.; et al. Normalized Difference Vegetation Index versus Dark Green Colour Index to Estimate Nitrogen Status on Bermudagrass Hybrid and Tall Fescue. Int. J. Remote Sens. 2020, 41, 455–470.
7. Zhang, J.; Virk, S.; Porter, W.; Kenworthy, K.; Sullivan, D.; Schwartz, B. Applications of Unmanned Aerial Vehicle Based Imagery in Turfgrass Field Trials. Front. Plant Sci. 2019, 10, 279.
8. Bentley, J.; Olson, C. Field Guide to Lens Design. Greivenkamp, J.E., Ed.; SPIE Press: Washington, DC, USA, 2012; ISBN 9780819491640.
9. Hou, F.; Zhang, Y.; Zhou, Y.; Zhang, M.; Lv, B.; Wu, J. Review on Infrared Imaging Technology. Sustainability 2022, 14, 11161.
10. Edmund Optics The Correct Material for Infrared (IR) Applications. Available online: http://www.edmundoptics.com/resources/application-notes/optics/the-correct-material-for-infrared-applications/ (accessed on 13 October 2022).
11. Fujifilm Optical Devices FE Series. Available online: https://www.fujifilm.com/us/en/business/optical-devices/machine-vision-lens/fe185-series (accessed on 11 November 2022).
12. Sunex Tailored Distortion™ Miniature SuperFisheye™ Lens. Available online: http://www.optics-online.com/OOL/DSL/DSL180.PDF (accessed on 11 November 2022).
13. Sahin, F.E. Fisheye Lens Design for Sun Tracking Cameras and Photovoltaic Energy Systems. J. Photonics Energy 2018, 8, 035501.
14. Entaniya Entaniya Fisheye M12 220. Available online: https://products.entaniya.co.jp/en/products/entaniya-fisheye-m12-220/ (accessed on 11 November 2022).
15. Kumler, J.J.; Bauer, M.L. Fish-Eye Lens Designs and Their Relative Performance. In Proceedings of the Current Developments in Lens Design and Optical Systems Engineering; SPIE: San Diego, CA, USA, 2000.
16. Huang, Z.; Bai, J.; Hou, X. A Multi-Spectrum Fish-Eye Lens for Rice Canopy Detecting. In Proceedings of the Optical Design and Testing IV, Beijing, China, 18–20 October 2010; p. 78491Z.
17. Xu, C.; Cheng, D.; Song, W.; Wang, Y.; Wang, Q. Design of Compact Fisheye Lens with Slope-Constrained Q-Type Aspheres. In Proceedings of the Current Developments in Lens Design and Optical Engineering XIV, San Diego, CA, USA, 25–27 August 2013; p. 88410D.
18. Yang, S.; Huang, K.; Chen, C.; Chang, R. Wide-Angle Lens Design. In Proceedings of the Computational Optical Sensing and Imaging, Kohala Coast, HI, USA, 22–26 June 2014; p. JTu5A.27.
19. Samy, A.M.; Gao, Z. Simplified Compact Fisheye Lens Challenges and Design. J. Opt. 2015, 44, 409–416.
20. Sun, W.-S.; Tien, C.-L.; Chen, Y.-H.; Chu, P.-Y. Ultra-Wide Angle Lens Design with Relative Illumination Analysis. J. Eur. Opt. Soc. 2016, 11, 16001.
21. Liu, S.; Niu, Y. Design and Analysis of Miniature High-Resolution Omnidirectional Gaze Optical Imaging System. Opt. Rev. 2017, 24, 334–344.
22. Yan, Y.; Sasian, J. Photographic Zoom Fisheye Lens Design for DSLR Cameras. Opt. Eng. 2017, 56, 095103.
23. Song, W.; Liu, X.; Lu, P.; Huang, Y.; Weng, D.; Zheng, Y.; Liu, Y.; Wang, Y. Design and Assessment of a 360° Panoramic and High-Performance Capture System with Two Tiled Catadioptric Imaging Channels. Appl. Opt. 2018, 57, 3429.
24. Peng, W.-J.; Cheng, Y.-C.; Lin, J.-S.; Chen, M.-F.; Sun, W.-S. Development of Miniature Wide-Angle Lens for in-Display Fingerprint Recognition. In Proceedings of the Design and Quality for Biomedical Technologies XIII, San Francisco, CA, USA, 1–2 February 2020; Volume 1123108, p. 7.
25. Schifano, L.; Smeesters, L.; Geernaert, T.; Berghmans, F.; Dewitte, S. Design and Analysis of a Next-Generation Wide Field-of-View Earth Radiation Budget Radiometer. Remote Sens. 2020, 12, 425.
26. Ji, Y.; Zeng, C.; Tan, F.; Feng, A.; Han, J. Non-Uniformity Correction of Wide Field of View Imaging System. Opt. Express 2022, 30, 22123.
27. Kim, H.M.; Yoo, Y.J.; Lee, J.M.; Song, Y.M. A Wide Field-of-View Light-Field Camera with Adjustable Multiplicity for Practical Applications. Sensors 2022, 22, 3455.
28. Zhuang, Z.; Parent, J.; Roulet, P.; Thibault, S. Freeform Wide-Angle Camera Lens Enabling Mitigable Distortion. Appl. Opt. 2022, 61, 5449.
29. FLUKE Wide Angle Infrared Lens RSE. Available online: https://www.fluke.com/en/product/accessories/thermal-imaging/fluke-lens-wide2-rse (accessed on 11 November 2022).
30. FLIR, T. FLIR 1196960 Wide Angle Lens for FLIR Thermal Imaging Cameras. Available online: https://www.globaltestsupply.com/product/flir-1196960-45-degree-lens-with-case (accessed on 11 November 2022).
31. Heat, T. Super Wide-Angle Lens 76 Degree for Fotric 225 Thermal Imaging Camera. Available online: https://testheat.com/products/super-wide-angle-lens-76-degree-for-fotric-225-thermal-imager (accessed on 11 November 2022).
32. Curatu, G. Design and Fabrication of Low-Cost Thermal Imaging Optics Using Precision Chalcogenide Glass Molding. In Proceedings of the Current Developments in Lens Design and Optical Engineering IX, San Diego, CA, USA, 11–12 August 2008; Volume 7060, p. 706008.
33. Qiu, R.; Dou, W.; Kan, J.; Yu, K. Optical Design of Wide-Angle Lens for LWIR Earth Sensors. In Proceedings of the Image Sensing Technologies: Materials, Devices, Systems, and Applications IV, Anaheim, CA, USA, 12–13 April 2017; Volume 10209, p. 102090T.
34. Schifano, L.; Smeesters, L.; Berghmans, F.; Dewitte, S. Wide-Field-of-View Longwave Camera for the Characterization of the Earth’s Outgoing Longwave Radiation. Sensors 2021, 21, 4444.
35. Zhang, J.; Qin, T.; Xie, Z.; Sun, L.; Lin, Z.; Cao, T.; Zhang, C. Design of Airborne Large Aperture Infrared Optical System Based on Monocentric Lens. Sensors 2022, 22, 9907.
36. Authority Soccer Official FIFA Soccer Field Dimensions: Every Part of the Field Included. Available online: https://authoritysoccer.com/official-fifa-soccer-field-dimensions/ (accessed on 25 June 2022).
37. Schifano, L. Shortwave and Longwave Cameras. Innovative Spaceborne Optical Instrumentation for Improving Climate Change Monitoring. Ph.D. Thesis, Vrije Universiteit Brussel, Brussels, Belgium, 2022; pp. 53–63.
38. National Instruments. Calculating Camera Sensor Resolution and Lens Focal Length; National Instruments: Austin, TX, USA, 2020.
39. Lee, M.; Kim, H.; Paik, J. Correction of Barrel Distortion in Fisheye Lens Images Using Image-Based Estimation of Distortion Parameters. IEEE Access 2019, 7, 45723.
40. Park, J.; Byun, S.C.; Lee, B.U. Lens Distortion Correction Using Ideal Image Coordinates. IEEE Trans. Consum. Electron. 2009, 55, 987–991.
41. Wu, R.; Li, Y.; Xie, X.; Lin, Z. Optimized Multi-Spectral Filter Arrays for Spectral Reconstruction. Sensors 2019, 19, 2905.
42. Lapray, P.-J.; Wang, X.; Thomas, J.-B.; Gouton, P. Multispectral Filter Arrays: Recent Advances and Practical Implementation. Sensors 2014, 14, 21626–21659.
43. Baek, S.H.; Ikoma, H.; Jeon, D.S.; Li, Y.; Heidrich, W.; Wetzstein, G.; Kim, M.H. Single-Shot Hyperspectral-Depth Imaging with Learned Diffractive Optics. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2631–2640.
44. Fitz-Rodríguez, E.; Choi, C.Y. Monitoring Turfgrass Quality Using Multispectral Radiometry. Trans. Am. Soc. Agric. Eng. 2002, 45, 865–871.
45. Bremer, D.J.; Lee, H.; Su, K.; Keeley, S.J. Relationships between Normalized Difference Vegetation Index and Visual Quality in Cool-Season Turfgrass: II. Factors Affecting NDVI and Its Component Reflectances. Crop Sci. 2011, 51, 2219–2227.
46. Caturegli, L.; Lulli, F.; Foschi, L.; Guglielminetti, L.; Bonari, E.; Volterrani, M. Monitoring Turfgrass Species and Cultivars by Spectral Reflectance. Eur. J. Hortic. Sci. 2014, 79, 97–107.
47. Labbé, S.; Lebourgeois, V.; Jolivot, A.; Marti, R. Thermal Infra-Red Remote Sensing for Water Stress Estimation in Agriculture. Options Méditerr. Série B. Etudes Rech. 2012, 67, 175–184.
48. Ishimwe, R.; Abutaleb, K.; Ahmed, F. Applications of Thermal Imaging in Agriculture—A Review. Adv. Remote Sens. 2014, 3, 128–140.
49. Mitja, C.; Escofet, J.; Tacho, A.; Revuelta, R. Slanted Edge MTF. Available online: https://imagej.nih.gov/ij/plugins/se-mtf/index.html (accessed on 2 November 2022).
50. Boreman, G.D. Modulation Transfer Function in Optical and Electro-Optical Systems; SPIE Press: Bellingham, WA, USA, 2001; ISBN 9780819480453.
51. Dandrifosse, S.; Carlier, A.; Dumont, B.; Mercatoris, B. Registration and Fusion of Close-Range Multimodal Wheat Images in Field Conditions. Remote Sens. 2021, 13, 1380.
52. Prashar, A.; Jones, H.G. Infra-Red Thermography as a High-Throughput Tool for Field Phenotyping. Agronomy 2014, 4, 397–417.

| Camera Channel | Wavelength Range | Full FOV (Degree) | Effective Focal Length (mm) | f-Number | Total Optical Length (mm) | MTF Value at a Frequency (lp/mm) | Detector Format (mm) | Pixel Size (µm) |
|---|---|---|---|---|---|---|---|---|
| Visible–NIR channel 1 | 648–750 nm | 179 | 1.58 | 2.8 | 29.8 | ≥0.5 @ 72 | 5.70 × 4.28 | 2.2 |
| Visible–NIR channel 2 | 556–634 nm | 179 | 1.58 | 2.8 | 29.8 | ≥0.5 @ 72 | 5.70 × 4.28 | 2.2 |
| Visible–NIR channel 3 | 641–697 nm + 823–900 nm | 179 | 1.58 | 2.8 | 29.8 | ≥0.5 @ 72 | 5.70 × 4.28 | 2.2 |
| Visible–NIR channel 4 | 400–723 nm | 179 | 1.58 | 2.8 | 29.8 | ≥0.5 @ 72 | 5.70 × 4.28 | 2.2 |
| Thermal | 8–14 µm | 164 | 2.6 | 1.4 | 43.7 | ≥0.5 @ 27 | 7.68 × 5.76 | 12 |
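As a rough consistency check on the detector columns of the table above, the pixel count and the sampling (Nyquist) limit of each channel can be estimated from the detector format and pixel size alone. The following is a minimal sketch, assuming square pixels and assuming the listed detector format is the active sensor area; the `Channel` class and the function names are illustrative and not part of the original design workflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    """Detector-related values copied from the specification table."""
    name: str
    width_mm: float        # detector format, long side
    height_mm: float       # detector format, short side
    pixel_um: float        # pixel size (pitch), assumed square
    mtf_freq_lp_mm: float  # spatial frequency at which MTF >= 0.5 is specified


def pixel_counts(ch: Channel) -> tuple[int, int]:
    """Approximate pixel count along each side: side length / pixel pitch."""
    pitch_mm = ch.pixel_um / 1000.0
    return round(ch.width_mm / pitch_mm), round(ch.height_mm / pitch_mm)


def nyquist_lp_mm(ch: Channel) -> float:
    """Detector Nyquist frequency in lp/mm: 1 / (2 * pixel pitch)."""
    return 1000.0 / (2.0 * ch.pixel_um)


CHANNELS = [
    Channel("Visible-NIR", 5.70, 4.28, 2.2, 72.0),
    Channel("Thermal", 7.68, 5.76, 12.0, 27.0),
]

if __name__ == "__main__":
    for ch in CHANNELS:
        nx, ny = pixel_counts(ch)
        nyq = nyquist_lp_mm(ch)
        print(
            f"{ch.name}: ~{nx} x {ny} pixels, Nyquist {nyq:.1f} lp/mm, "
            f"MTF spec at {ch.mtf_freq_lp_mm / nyq:.2f} x Nyquist"
        )
```

Under these assumptions the thermal detector works out to 640 × 480 pixels with a Nyquist frequency of about 41.7 lp/mm, and the visible and near-infrared detector to a Nyquist frequency of about 227 lp/mm. Because the detector format in the table is rounded, the visible pixel counts are only approximate.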

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Smeesters, L.; Verbaenen, J.; Schifano, L.; Vervaeke, M.; Thienpont, H.; Teti, G.; Forconi, A.; Lulli, F. Wide-Field-of-View Multispectral Camera Design for Continuous Turfgrass Monitoring. Sensors 2023, 23, 2470. https://doi.org/10.3390/s23052470
