Abstract

Multi-focus image fusion plays an important role in computer vision applications. The fusion process can introduce blurring and information loss, so the goal is to obtain fused images that are sharp and rich in information. In this paper, a novel multi-focus image fusion method based on local energy and sparse representation in the shearlet domain is proposed. The source images are decomposed into low- and high-frequency sub-bands by the shearlet transform. The low-frequency sub-bands are fused by sparse representation, and the high-frequency sub-bands are fused by local energy. The inverse shearlet transform is then used to reconstruct the fused image. The method is evaluated on the 20 image pairs of the Lytro dataset against 8 state-of-the-art fusion methods using 8 metrics. The experimental results show that the proposed method performs well for multi-focus image fusion.

1. Introduction

Because the depth of field of an optical lens is limited, an imaging device often cannot bring all objects or areas of a scene into sharp focus at once, so scene content outside the depth of field appears defocused and blurred [,,,,]. Multi-focus image fusion addresses this problem by combining the complementary information contained in multiple partially focused images of the same scene into a single all-in-focus image. The fused image is better suited to human observation and computer processing, and the technique has wide application value in digital photography, microscopic imaging, holographic imaging, integrated imaging, and other fields [,,,,,,,,,].

Many multi-focus image fusion methods have been proposed. In particular, methods based on multi-scale transforms, sparse representation, edge-preserving filtering, and deep learning have achieved remarkable results in image fusion []. The curvelet [], surfacelet [], contourlet [,], and shearlet transforms [,,] are widely used multi-scale transforms.

In the curvelet and surfacelet domains, Vishwakarma et al. [] introduced a multi-focus image fusion algorithm based on the curvelet transform and the Karhunen–Loève transform (KLT), which produces fused images with less noise and improves their information interpretation capability. Yang et al. [] proposed a multi-focus image fusion method that uses a pulse-coupled neural network (PCNN) and sum-modified-Laplacian algorithms in the fast discrete curvelet transform domain. Zhang et al. [] proposed a multi-focus image fusion technique using a compound PCNN in the surfacelet domain, with a local sum-modified-Laplacian algorithm as the external stimulus of the compound PCNN; the results show good performance for multi-focus image fusion. Li et al. [] introduced multi-focus image fusion based on dynamic threshold neural P systems and the surfacelet transform, in which the sum-modified-Laplacian and the spatial frequency serve as the external inputs of the dynamic threshold neural P systems for the low- and high-frequency coefficients, respectively; consistency verification is then used to obtain the final fused image, and this method addresses the problem of artifacts.

In the contourlet domain, Xu et al. [] introduced an image fusion method using an enhanced cross-visual cortex model based on artificial selection and an impulse-coupled neural network in the nonsubsampled contourlet transform domain. This method achieves outstanding edge information, high contrast, and brightness. Das et al. [] introduced a fuzzy-adaptive reduced pulse-coupled neural network for image fusion in the nonsubsampled contourlet transform domain, which generates fused images with higher contrast than other state-of-the-art image fusion algorithms. Li et al. [] introduced a multi-focus image fusion framework using multi-scale transform decomposition, where the nonsubsampled contourlet transform is used to obtain a basic fused image, and the energy of gradient of the difference images is used to refine the basic fused image by integrating an average filter and a median filter. This method generates a high-definition fused image. Peng et al. [] proposed coupled neural P systems in the nonsubsampled contourlet transform domain for image fusion, and the quantitative and qualitative experimental results demonstrate the advantages of the approach.

In the shearlet domain, Wang et al. [] introduced a complex shearlet features-motivated generative adversarial network for multi-focus image fusion. Li et al. [] proposed a multi-focus image fusion method based on a spatial frequency-motivated parameter-adaptive pulse-coupled neural network and an improved sum-modified-Laplacian in the nonsubsampled shearlet transform domain; visual inspection and objective evaluation verified the effectiveness of the method. Amrita et al. [] proposed an image fusion method using a water wave optimization (WWO) algorithm in the nonsubsampled shearlet transform domain, which obtains good fusion results. Luo et al. [] introduced multi-modal image fusion using a 3-D shearlet transform and T-S fuzzy reasoning, which also achieves good fusion results. Yin et al. [] proposed a parameter-adaptive pulse-coupled neural network (PAPCNN)-based multi-modal image fusion method in the nonsubsampled shearlet transform domain, where the weighted local energy and the weighted sum of eight-neighborhood-based modified Laplacian are used for fusing the low-frequency components, and the PAPCNN-based fusion model is used for fusing the high-frequency components; the results show state-of-the-art performance in terms of visual perception and objective assessment.

Sparse representation-based methods have been widely used in image restoration and image fusion [,,,,,,,,,]. Wang et al. [] proposed a joint patch clustering-based adaptive dictionary and sparse representation for multi-modality image fusion: a Gaussian filter separates the low- and high-frequency components, a local energy-weighted strategy fuses the low-frequency sub-bands, an over-complete adaptive dictionary is learned with the joint patch clustering model, and a hybrid fusion rule based on the similarity of the multi-norm of the sparse representation coefficients fuses the high-frequency sub-bands. This method is robust and widely applicable. Qin et al. [] proposed an improved image fusion algorithm using a discrete wavelet transform and sparse representation, which achieves higher contrast and retains more image details. Liu et al. [] introduced an effective image fusion approach using convolutional sparse representation, which outperforms other image fusion algorithms in terms of visual and objective assessments. Liu et al. [] introduced an adaptive sparse representation model for multi-focus image fusion and denoising, which performs well in terms of visual quality and objective assessment.

Edge-preserving filtering has been widely used in image enhancement, image smoothing, image denoising, and image fusion, and it is particularly effective in image fusion. Li et al. [] introduced guided image filtering for image fusion. The base layer and detail layer are generated by guided image filtering decomposition, and a weighted average model is used as the fusion rule. This method was tested on multi-spectral, multi-focus, multi-modal, and multi-exposure images, and it obtains fast and effective fusion results. Zhang et al. [] introduced local extreme map guided image filtering for the fusion of medical images, multi-focus images, infrared and visual images, and multi-exposure images, and this method performs well.

Deep learning-based image fusion methods have also been widely used in image processing. Zhang et al. [] proposed an image fusion method using a convolutional neural network (IFCNN), which performs well for multi-focus, infrared-visual, multi-modal medical, and multi-exposure image fusion. Zhang et al. [] introduced a fast unified image fusion network based on the proportional maintenance of gradient and intensity, which generates good fusion results. Xu et al. [] proposed a unified and unsupervised end-to-end image fusion network (U2Fusion), which achieves better fusion results than state-of-the-art fusion methods. Dong et al. [] proposed a multi-branch multi-scale deep learning image fusion algorithm based on DenseNet, which achieves excellent results and keeps more feature information of the source images in the fused image.

In order to generate a high-quality multi-focus fusion image, a novel image fusion framework based on sparse representation and local energy is proposed. The source images are separated into the low- and high-frequency sub-bands by shearlet transform, then the sparse representation model is used for fusing the low-frequency sub-bands, and the local energy-based fusion rule is used for fusing the high-frequency sub-bands. The inverse shearlet transform is applied to reconstruct the fused image. Experimental results show that the proposed multi-focus image fusion method can retain more source image information.

2. Related Works

2.1. Shearlet Transform

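In dimension $n = 2$, the shearlet transform (ST) of a signal $f$ can be defined as follows []:

$$SH_{\phi} f(a, s, t) = \langle f, \phi_{a,s,t} \rangle$$

where $SH_{\phi}$ denotes the shearlet transform and $\langle \cdot, \cdot \rangle$ denotes the inner product. The ST projects $f$ onto the functions $\phi_{a,s,t}$ at scale $a$, orientation $s$, and location $t$.

The element $\phi_{a,s,t}$ is called a shearlet, and it is generated by:

$$\phi_{a,s,t}(x) = \left| \det M_{a,s} \right|^{-1/2} \phi\left( M_{a,s}^{-1} (x - t) \right)$$

where the parameters $a \in \mathbb{R}^{+}$, $s \in \mathbb{R}$, and $t \in \mathbb{R}^{2}$ are a positive real number, a real number, and a 2-dimensional real vector, respectively. $M_{a,s}$ is computed by:

$$M_{a,s} = B_s A_a = \begin{pmatrix} a & \sqrt{a}\, s \\ 0 & \sqrt{a} \end{pmatrix}$$

where $M_{a,s}$ consists of two matrices: the shear matrix $B_s$ and the anisotropic dilation matrix $A_a$, which are given by:

$$B_s = \begin{pmatrix} 1 & s \\ 0 & 1 \end{pmatrix}, \qquad A_a = \begin{pmatrix} a & 0 \\ 0 & \sqrt{a} \end{pmatrix}$$

The inverse shearlet transform is computed by:

$$f(x) = \int_{\mathbb{R}^{2}} \int_{-\infty}^{\infty} \int_{0}^{\infty} \langle f, \phi_{a,s,t} \rangle \, \phi_{a,s,t}(x) \, \frac{da}{a^{3}} \, ds \, dt$$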
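As a minimal illustration of how the shear and dilation matrices combine into a shearlet atom, the following NumPy sketch assumes the standard parabolic-scaling forms `B_s = [[1, s], [0, 1]]` and `A_a = diag(a, sqrt(a))`. The function names and the Gaussian generator are hypothetical stand-ins; a Gaussian is not an admissible shearlet and is used only to make the example runnable.

```python
import numpy as np

def shear_matrix(s):
    """Shear matrix B_s = [[1, s], [0, 1]] (assumed standard form)."""
    return np.array([[1.0, s], [0.0, 1.0]])

def dilation_matrix(a):
    """Anisotropic dilation A_a = diag(a, sqrt(a)) for a > 0 (assumed form)."""
    return np.array([[a, 0.0], [0.0, np.sqrt(a)]])

def composite_matrix(a, s):
    """M_{a,s} = B_s A_a = [[a, sqrt(a) * s], [0, sqrt(a)]]."""
    return shear_matrix(s) @ dilation_matrix(a)

def shearlet_atom(phi, a, s, t):
    """Return x -> |det M|^(-1/2) * phi(M^{-1} (x - t))."""
    M = composite_matrix(a, s)
    M_inv = np.linalg.inv(M)
    norm = abs(np.linalg.det(M)) ** -0.5
    t = np.asarray(t, dtype=float)
    return lambda x: norm * phi(M_inv @ (np.asarray(x, dtype=float) - t))

# Toy generator: a Gaussian bump (illustration only, not a true shearlet).
gauss = lambda x: float(np.exp(-np.dot(x, x)))

M = composite_matrix(0.25, 1.0)
atom = shearlet_atom(gauss, a=0.25, s=1.0, t=(2.0, -1.0))
peak = atom((2.0, -1.0))  # value at the atom's location t
```

Because `det M = a^(3/2)`, the normalization factor equals `a^(-3/4)`, which keeps the norm of the atom independent of the scale.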

2.2. Sparse Representation

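Sparse representation can effectively extract the essential characteristics of a signal by representing it as a linear combination of a small number of atoms from an over-complete dictionary []. Let $x \in \mathbb{R}^{n}$ denote the signal and $D \in \mathbb{R}^{n \times m}$ ($n < m$) the over-complete dictionary. The purpose of sparse representation is to estimate the sparse vector $\alpha \in \mathbb{R}^{m}$ with the fewest nonzero entries such that $x \approx D\alpha$. Suppose that $M$ training patches of size $\sqrt{n} \times \sqrt{n}$ are rearranged into column vectors in $\mathbb{R}^{n}$, so that the training database $\{y_i\}_{i=1}^{M}$ is constructed with each $y_i \in \mathbb{R}^{n}$. The dictionary learning model can be written as follows:

$$\min_{D, \{\alpha_i\}_{i=1}^{M}} \sum_{i=1}^{M} \|\alpha_i\|_0 \quad \text{s.t.} \quad \|y_i - D\alpha_i\|_2 < \varepsilon, \quad i \in \{1, \ldots, M\}$$

where $\varepsilon > 0$ is an error tolerance, $\{\alpha_i\}_{i=1}^{M}$ are the unknown sparse vectors corresponding to $\{y_i\}_{i=1}^{M}$, and $D$ is the unknown dictionary to be learned. Effective algorithms such as MOD and K-SVD have been introduced to solve this problem. More details can be found in reference [].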
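To make the sparse coding step concrete, the sketch below implements a basic greedy orthogonal matching pursuit that stops once the residual norm falls below the error tolerance. It is a minimal sketch rather than the authors' implementation: the function name `omp`, the random dictionary, and the test signal are all assumptions, and the dictionary is random instead of being learned with MOD or K-SVD.

```python
import numpy as np

def omp(D, x, eps, max_atoms=None):
    """Greedy OMP: find a sparse alpha with ||x - D @ alpha||_2 < eps.

    D is an (n, m) dictionary with unit-norm columns; x has shape (n,).
    """
    n, m = D.shape
    max_atoms = n if max_atoms is None else max_atoms
    x = np.asarray(x, dtype=float)
    alpha = np.zeros(m)
    support = []
    coef = np.zeros(0)
    residual = x.copy()
    while np.linalg.norm(residual) >= eps and len(support) < max_atoms:
        # Pick the atom most correlated with the current residual.
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:  # no new atom helps; stop to avoid looping forever
            break
        support.append(k)
        # Re-fit all selected atoms by least squares (the "orthogonal" step).
        coef, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ coef
    if support:
        alpha[support] = coef
    return alpha

# Hypothetical example: 36-dimensional signals (6 x 6 patches), 64 atoms.
rng = np.random.default_rng(0)
D = rng.standard_normal((36, 64))
D /= np.linalg.norm(D, axis=0)          # normalize atoms to unit length
x = 2.0 * D[:, 3] - 1.5 * D[:, 17]      # signal built from two atoms
alpha = omp(D, x, eps=0.1)
residual_norm = np.linalg.norm(x - D @ alpha)
```

The least-squares re-fit at each iteration is what distinguishes OMP from plain matching pursuit: the residual stays orthogonal to every atom already selected.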

3. Proposed Fusion Method

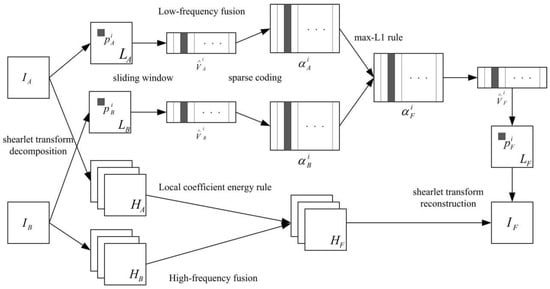

The proposed image fusion algorithm contains four main phases: shearlet transform decomposition, low-frequency fusion, high-frequency fusion, and shearlet transform reconstruction. The schematic diagram of the proposed approach is shown in Figure 1.

Figure 1. Diagram of the proposed fusion method.
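The four phases can be sketched as a generic decompose, fuse, and reconstruct skeleton. The code below is only a structural illustration under loud assumptions: `decompose` and `reconstruct` use a box blur and its residual as a stand-in for the shearlet transform and its inverse, and the two fusion rules passed in the example (averaging and maximum absolute value) are placeholders, not the sparse representation and local energy rules of the proposed method.

```python
import numpy as np

def box_blur(img, k=5):
    """Mean filter with edge padding; a stand-in low-pass, NOT a shearlet."""
    pad = k // 2
    h, w = img.shape
    padded = np.pad(np.asarray(img, dtype=float), pad, mode="edge")
    out = np.zeros((h, w))
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def decompose(img):
    """Phase 1 (stand-in): split into a low band and one high band."""
    low = box_blur(img)
    return low, [img - low]

def reconstruct(low, highs):
    """Phase 4 (stand-in): exact inverse of `decompose`."""
    return low + sum(highs)

def fuse(img_a, img_b, fuse_low, fuse_high):
    """Run the four phases with pluggable low/high fusion rules."""
    low_a, highs_a = decompose(img_a)
    low_b, highs_b = decompose(img_b)
    low_f = fuse_low(low_a, low_b)                                     # phase 2
    highs_f = [fuse_high(ha, hb) for ha, hb in zip(highs_a, highs_b)]  # phase 3
    return reconstruct(low_f, highs_f)

# Placeholder rules, used only to exercise the skeleton.
average = lambda a, b: 0.5 * (a + b)
max_abs = lambda a, b: np.where(np.abs(a) >= np.abs(b), a, b)

rng = np.random.default_rng(0)
img = rng.random((16, 16))
same = fuse(img, img, average, max_abs)  # fusing an image with itself
```

Keeping the fusion rules as arguments mirrors the structure in Figure 1, where the low- and high-frequency branches are fused independently between decomposition and reconstruction.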

3.1. Shearlet Transform Decomposition

The shearlet transform decomposition is performed on the two source images $A$ and $B$ to obtain the low-frequency components $\{L_A, L_B\}$ and the high-frequency components $\{H_A, H_B\}$.

3.2. Low-Frequency Fusion

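The low-frequency component concentrates the main energy of the image and carries its main content. In this section, $L_A$ and $L_B$ are merged with the sparse representation fusion method. A sliding window is used to divide $L_A$ and $L_B$ into image patches of size $\sqrt{n} \times \sqrt{n}$, from upper left to lower right, with a step length of $s$ pixels. Assume that there are $T$ patches, denoted $\{p_A^i\}_{i=1}^{T}$ and $\{p_B^i\}_{i=1}^{T}$, in $L_A$ and $L_B$, respectively.

For each position $i$, rearrange $\{p_A^i, p_B^i\}$ into column vectors $\{v_A^i, v_B^i\}$ and then normalize each vector’s mean value to zero to obtain $\{\hat{v}_A^i, \hat{v}_B^i\}$ by the following equations []:

$$\hat{v}_A^i = v_A^i - \bar{v}_A^i \cdot \mathbf{1}, \qquad \hat{v}_B^i = v_B^i - \bar{v}_B^i \cdot \mathbf{1}$$

where $\mathbf{1}$ denotes an all-one valued $n \times 1$ vector, and $\bar{v}_A^i$ and $\bar{v}_B^i$ are the mean values of all the elements in $v_A^i$ and $v_B^i$, respectively.

The sparse coefficient vectors $\{\alpha_A^i, \alpha_B^i\}$ of $\{\hat{v}_A^i, \hat{v}_B^i\}$ are computed with the orthogonal matching pursuit (OMP) technique by the following formulas:

$$\alpha_A^i = \arg\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \|\hat{v}_A^i - D\alpha\|_2 < \varepsilon$$

$$\alpha_B^i = \arg\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \|\hat{v}_B^i - D\alpha\|_2 < \varepsilon$$

where $D$ denotes the learned dictionary that is trained by the K-singular value decomposition (K-SVD) method.

Then, $\alpha_A^i$ and $\alpha_B^i$ are merged with the “max-L1” rule to obtain the fused sparse vector:

$$\alpha_F^i = \begin{cases} \alpha_A^i, & \text{if } \|\alpha_A^i\|_1 > \|\alpha_B^i\|_1 \\ \alpha_B^i, & \text{otherwise} \end{cases}$$

The fused result of $v_A^i$ and $v_B^i$ is computed by the following:

$$v_F^i = D\alpha_F^i + \bar{v}_F^i \cdot \mathbf{1}$$

where the merged mean value $\bar{v}_F^i$ is calculated by the following:

$$\bar{v}_F^i = \begin{cases} \bar{v}_A^i, & \text{if } \alpha_F^i = \alpha_A^i \\ \bar{v}_B^i, & \text{otherwise} \end{cases}$$

The above process is iterated for all the source image patches in $\{p_A^i\}_{i=1}^{T}$ and $\{p_B^i\}_{i=1}^{T}$ to obtain all the fused vectors $\{v_F^i\}_{i=1}^{T}$. Let $L_F$ denote the low-pass fused result. For each $v_F^i$, reshape it into a patch $p_F^i$ and then plug $p_F^i$ into its original position in $L_F$. As the patches overlap, each pixel’s value in $L_F$ is averaged over its accumulation times.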
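The patch-wise procedure above (sliding window, mean removal, max-L1 selection, and averaging of overlapping patches) can be sketched as follows. This is a hedged sketch under stated assumptions: `fuse_low_frequency` and its parameters are hypothetical names, the sparse coder is passed in as a callable, and the example uses an orthonormal dictionary with the trivial coder `D.T @ v` as a stand-in for OMP over a K-SVD dictionary, so that the example stays short and deterministic.

```python
import numpy as np

def fuse_low_frequency(low_a, low_b, D, sparse_code, patch=6, step=1):
    """Fuse two low-frequency bands patch by patch with the max-L1 rule."""
    h, w = low_a.shape
    acc = np.zeros((h, w))   # sum of fused patch values per pixel
    cnt = np.zeros((h, w))   # number of patches covering each pixel
    ones = np.ones(patch * patch)
    for i in range(0, h - patch + 1, step):
        for j in range(0, w - patch + 1, step):
            v_a = low_a[i:i + patch, j:j + patch].reshape(-1).astype(float)
            v_b = low_b[i:i + patch, j:j + patch].reshape(-1).astype(float)
            mean_a, mean_b = v_a.mean(), v_b.mean()
            # Sparse-code the zero-mean vectors.
            alpha_a = sparse_code(D, v_a - mean_a * ones)
            alpha_b = sparse_code(D, v_b - mean_b * ones)
            # max-L1 rule: keep the code with the larger L1 norm (ties go to B).
            if np.abs(alpha_a).sum() > np.abs(alpha_b).sum():
                alpha_f, mean_f = alpha_a, mean_a
            else:
                alpha_f, mean_f = alpha_b, mean_b
            v_f = D @ alpha_f + mean_f * ones
            acc[i:i + patch, j:j + patch] += v_f.reshape(patch, patch)
            cnt[i:i + patch, j:j + patch] += 1
    # Average overlapping contributions; pixels never covered stay at zero.
    return acc / np.maximum(cnt, 1)

# Stand-in setup: orthonormal dictionary and exact (non-sparse) coder.
rng = np.random.default_rng(0)
D, _ = np.linalg.qr(rng.standard_normal((36, 36)))
coder = lambda D, v: D.T @ v

textured = rng.random((12, 12))    # plays the role of an in-focus band
flat = np.full((12, 12), 0.5)      # plays the role of a featureless band
fused = fuse_low_frequency(textured, flat, D, coder)
```

In this toy case every patch of the flat band has a zero code, so the max-L1 rule always selects the textured band and `fused` reproduces it. With a step larger than 1, pixels near the border may not be covered by any patch and would need separate handling.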

3.3. High-Frequency Fusion

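The high-frequency components contain a great deal of detailed information, and they are fused using the local coefficient energy, which is defined as follows []:

$$E(i, j) = \sum_{(m, n) \in W} H(i + m, j + n)^{2}$$

where $H(i, j)$ represents the high-frequency coefficient at pixel $(i, j)$, and $W$ is a local window of size $w \times w$. Let $E_A(i, j)$ and $E_B(i, j)$ denote the local energies of the windows centered at pixel $(i, j)$ in $H_A$ and $H_B$, respectively. The high-frequency fused result $H_F$ is obtained by the following:

$$H_F(i, j) = \begin{cases} H_A(i, j), & \text{if } E_A(i, j) \geq E_B(i, j) \\ H_B(i, j), & \text{otherwise} \end{cases}$$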
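A local energy fusion rule of this kind can be sketched in a few lines of NumPy. The sketch makes several assumptions that are not stated in the text: the local energy is taken as the sum of squared coefficients in the window, the window is 3 × 3 with zero padding at the borders, and ties are resolved in favor of the first image. The function names are hypothetical.

```python
import numpy as np

def local_energy(coeffs, win=3):
    """Sum of squared coefficients in a win x win window around each pixel."""
    pad = win // 2
    h, w = coeffs.shape
    sq = np.pad(np.asarray(coeffs, dtype=float) ** 2, pad, mode="constant")
    energy = np.zeros((h, w))
    for dy in range(win):
        for dx in range(win):
            energy += sq[dy:dy + h, dx:dx + w]
    return energy

def fuse_high_frequency(high_a, high_b, win=3):
    """Per pixel, keep the coefficient whose neighborhood has more energy."""
    e_a = local_energy(high_a, win)
    e_b = local_energy(high_b, win)
    return np.where(e_a >= e_b, high_a, high_b)  # ties go to A (assumption)

# Toy sub-bands: one strong coefficient in each, at different positions.
high_a = np.zeros((5, 5))
high_a[1, 1] = 2.0
high_b = np.zeros((5, 5))
high_b[3, 3] = -3.0
fused = fuse_high_frequency(high_a, high_b)
```

Comparing windowed energies rather than single coefficients makes the selection less sensitive to isolated noisy values, since a coefficient is kept only when its whole neighborhood is more active.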

3.4. Shearlet Transform Reconstruction

The inverse shearlet transform is performed on $L_F$ and $H_F$ to reconstruct the final fused image $F$.

4. Experimental Results and Discussions

In this section, 20 pairs of multi-focus images from the Lytro dataset [] (Figure 2) are used with subjective and objective evaluation to demonstrate the effectiveness of the proposed multi-focus image fusion algorithm. Comparison with recently published algorithms highlights the advantages of our algorithm. Eight state-of-the-art image fusion methods are selected for comparison: nonsubsampled contourlet transform and fuzzy-adaptive reduced pulse-coupled neural network (NSCT) [], image fusion using the curvelet transform (CVT) [], image fusion with parameter-adaptive pulse-coupled neural network in nonsubsampled shearlet transform domain (NSST) [], image fusion framework based on convolutional neural network (IFCNN) [], fast unified image fusion network based on the proportional maintenance of gradient and intensity (PMGI) [], unified unsupervised image fusion network (U2Fusion) [], local extreme map guided multi-modal image fusion (LEGFF) [], and zero-shot multi-focus image fusion (ZMFF) [].

A single evaluation index cannot fully reflect image quality, so multiple indexes are used together to analyze the results more objectively. Eight metrics are used for the objective evaluation: the edge-based similarity measurement [], the human perception inspired metric [], the structural similarity-based metric introduced by Yang et al. [], the structural similarity-based metric [], the gradient-based metric [], the nonlinear correlation information entropy [], the mutual information [], and the phase congruency-based metric []. Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7 show the corresponding fusion results, and Figure 8 and Table 1, Table 2, Table 3, Table 4 and Table 5 show the corresponding metrics data.

In our method, the number of shearlet decomposition levels is 4, and the direction numbers are [10, 10, 18, 18]. The dictionary size is set to 256, and the iteration number of K-SVD is fixed to 180. The patch size is 6 × 6, the step length is set to 1, and the error tolerance is set to 0.1.
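For orientation, the stated parameters can be collected in a small configuration, from which the size of the sparse coding workload follows. In the sketch below, the dictionary keys, the helper `patch_count`, and the 520 × 520 band size are assumptions made for illustration; the calculation also assumes that the low-frequency sub-band has the same size as the source image.

```python
# Parameter values as stated in the text; the key names are hypothetical.
PARAMS = {
    "shearlet_levels": 4,
    "shearlet_directions": [10, 10, 18, 18],
    "dictionary_size": 256,     # number of atoms in the learned dictionary
    "ksvd_iterations": 180,
    "patch_size": 6,            # patches are 6 x 6
    "step": 1,                  # sliding-window step in pixels
    "error_tolerance": 0.1,
}

def patch_count(height, width, patch, step):
    """Number of sliding-window patches in a height x width band."""
    rows = (height - patch) // step + 1
    cols = (width - patch) // step + 1
    return rows * cols

# Assumed band size of 520 x 520 pixels (illustrative, not from the text).
n_patches = patch_count(520, 520, PARAMS["patch_size"], PARAMS["step"])
atom_length = PARAMS["patch_size"] ** 2
dictionary_shape = (atom_length, PARAMS["dictionary_size"])
```

Under these assumptions each source band yields 265,225 overlapping patches, each coded against a 36 × 256 dictionary, which shows why a step length of 1 makes the sparse coding stage the dominant cost.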

Figure 2. Lytro dataset.

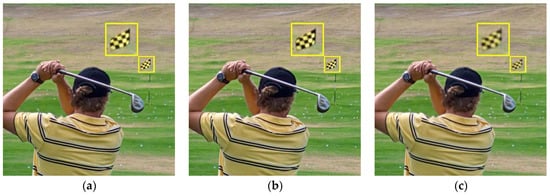

Figure 3. Fusion results on the first pair of images. (a) NSCT; (b) CVT; (c) NSST; (d) IFCNN; (e) PMGI; (f) U2Fusion; (g) LEGFF; (h) ZMFF; (i) Proposed.

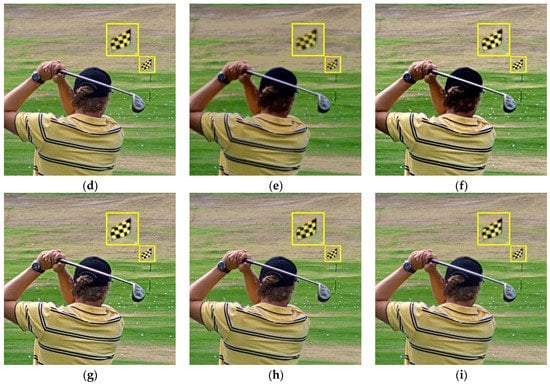

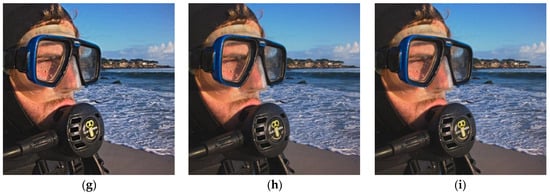

Figure 4. Fusion results on the second pair of images. (a) NSCT; (b) CVT; (c) NSST; (d) IFCNN; (e) PMGI; (f) U2Fusion; (g) LEGFF; (h) ZMFF; (i) Proposed.

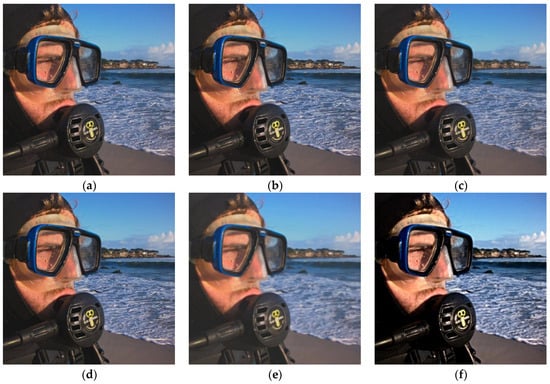

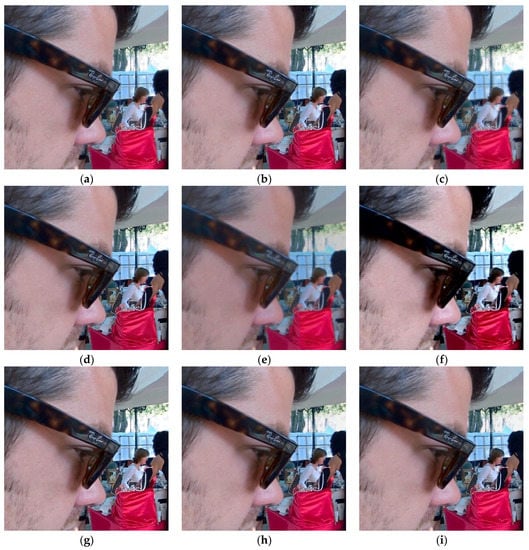

Figure 5. Fusion results on the third pair of images. (a) NSCT; (b) CVT; (c) NSST; (d) IFCNN; (e) PMGI; (f) U2Fusion; (g) LEGFF; (h) ZMFF; (i) Proposed.

Figure 6. Fusion results on the fourth pair of images. (a) NSCT; (b) CVT; (c) NSST; (d) IFCNN; (e) PMGI; (f) U2Fusion; (g) LEGFF; (h) ZMFF; (i) Proposed.

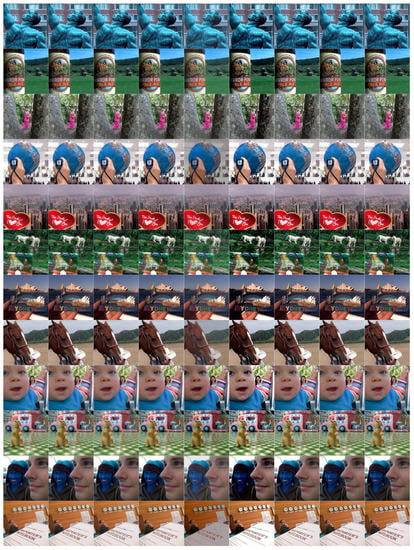

Figure 7. Fusion results on other images in Figure 2.

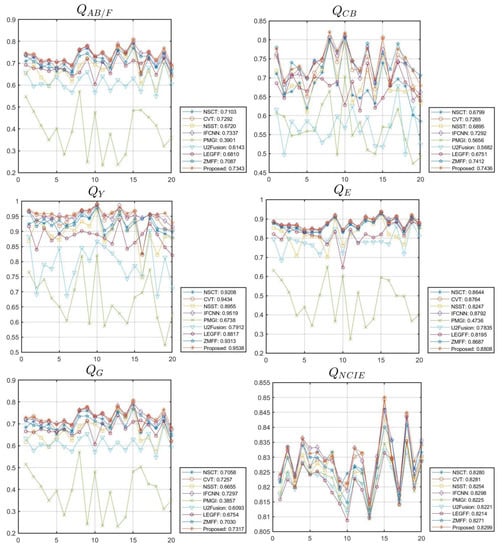

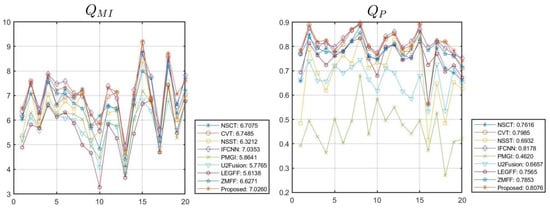

Figure 8. Line chart of metrics data with different methods in Figure 2.

Table 1. Objective evaluation of methods in Figure 3.

Table 2. Objective evaluation of methods in Figure 4.

Table 3. Objective evaluation of methods in Figure 5.

Table 4. Objective evaluation of methods in Figure 6.

Table 5. Average metrics data of different methods in Figure 8.

Figure 3 shows the fused images produced by the different methods on the first pair of images in Figure 2, and Table 1 lists the corresponding metrics data. The fused images generated by the NSCT, CVT, and NSST algorithms are blurred in some areas. The PMGI method generates a dark image that is distorted and blurred. The IFCNN, U2Fusion, LEGFF, and ZMFF methods generate images with higher brightness. Compared with the other fusion methods, our method gives the best fusion result and retains more complementary image information. The enlarged areas make some details of the fused images easier to inspect. From Table 1, our method achieves the best values on seven of the eight metrics: 0.7446, 0.9708, 0.8868, 0.7273, 0.8243, 6.5008, and 0.7860. On the remaining metric, the ZMFF method achieves the best value, 0.7802, and our method ranks second with 0.7760.

Figure 4 shows the fused images produced by the different methods on the second pair of images in Figure 2, and Table 2 lists the corresponding metrics data. The NSCT, CVT, IFCNN, LEGFF, and ZMFF algorithms produce reasonably good fusion results, and their fused images look similar. The NSST algorithm renders close-range content clearly, while distant content, such as the outline of the mountain, is relatively blurred. The PMGI algorithm produces a blurred fused image that fails to combine the complementary information; its sharpness is clearly low, so the details in the image are difficult to observe. The U2Fusion method increases the brightness of some areas, such as the man’s face, but the man’s head, mouth, and neck are noticeably dark, so the information in these regions cannot be observed. Compared with the other fusion algorithms, our algorithm renders both close and distant content clearly, combines the complementary information, and preserves the image details well. From Table 2, our method achieves the best values on three of the metrics: 0.6924, 0.9593, and 0.8684.

Figure 5 shows the fused images produced by the different methods on the third pair of images in Figure 2, and Table 3 lists the corresponding metrics data. The fused images generated by the NSCT and NSST algorithms are blurred in the girl’s face area. The CVT, IFCNN, LEGFF, and ZMFF methods generate all-in-focus images. The PMGI approach generates a distorted and blurred fused image in which details cannot be made out. Some areas of the fused image produced by the U2Fusion method are very dark, such as the collar of the boys and girls, the tongue and hair of the boys, and the leaves. Our algorithm obtains an all-in-focus image and preserves the details of the source images well. From Table 3, our method achieves the best values on five of the metrics: 0.7134, 0.9589, 0.8710, 0.7139, and 0.8194.

Figure 6 shows the fused images produced by the different methods on the fourth pair of images in Figure 2, and Table 4 lists the corresponding metrics data. The NSCT, CVT, IFCNN, LEGFF, and ZMFF algorithms produce essentially all-in-focus images. The NSST result is blurred in places, such as the contour of the woman in the distance. The PMGI method produces a completely blurred and dark image. The U2Fusion method makes some areas too bright and others too dark, and does not achieve moderate brightness. Our method produces a clear all-in-focus image that combines the complementary information best. From Table 4, our method achieves the best values on six of the metrics: 0.7148, 0.7301, 0.9584, 0.8691, 0.7162, and 0.8249.

Figure 7 shows the fused results of the different methods on the other images in Figure 2, which allows the fusion quality of the algorithms to be compared. Figure 8 shows line charts of the metrics data of the different methods on the images in Figure 2, from which the fluctuation of each metric across the 20 pairs of multi-focus images can be observed. The average metrics data of the different methods in Figure 8 are listed in Table 5. On average, the proposed method achieves the best values on six of the eight metrics. The IFCNN method achieves the best values on the remaining two metrics, on which our algorithm still ranks second among all the algorithms. Both the qualitative and the quantitative evaluations indicate that our algorithm achieves the best overall multi-focus image fusion performance among the compared methods.

5. Conclusions

In order to generate a clear all-in-focus image, a novel multi-focus image fusion method based on sparse representation and local energy in the shearlet domain is introduced. The shearlet transform is used to decompose the source images into low- and high-frequency sub-bands; a sparse representation-based fusion rule is used to fuse the low-frequency sub-bands, and a local energy-based fusion rule is used to fuse the high-frequency sub-bands. Twenty pairs of multi-focus images are tested, and the effectiveness of the proposed algorithm is verified through qualitative and quantitative evaluation and analysis. The average values of six of the eight metrics computed by our method are the best among the compared methods: 0.7343, 0.7436, 0.9538, 0.8808, 0.7317, and 0.8299. On the remaining two metrics, our method also achieves competitive values. In future work, we will extend this algorithm to multi-exposure image fusion and other multi-modal image fusion tasks.

Author Contributions

The experimental measurements and data collection were carried out by L.L. and H.M. The manuscript was written by L.L. with the assistance of M.L., Z.J. and H.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Cross-Media Intelligent Technology Project of Beijing National Research Center for Information Science and Technology (BNRist) under Grant No. BNR2019TD01022.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Vasu, G.T.; Palanisamy, P. Gradient-based multi-focus image fusion using foreground and background pattern recognition with weighted anisotropic diffusion filter. Signal Image Video Process. 2023.

- Li, H.; Qian, W. Siamese conditional generative adversarial network for multi-focus image fusion. Appl. Intell. 2023.

- Li, X.; Wang, X. Multi-focus image fusion based on Hessian matrix decomposition and salient difference focus detection. Entropy 2022, 24, 1527.

- Jiang, L.; Fan, H. Multi-level receptive field feature reuse for multi-focus image fusion. Mach. Vis. Appl. 2022, 33, 92.

- Mohan, C.; Chouhan, K. Improved procedure for multi-focus images using image fusion with qshiftN DTCWT and MPCA in Laplacian pyramid domain. Appl. Sci. 2022, 12, 9495.

- Zhang, X.; He, H.; Zhang, J. Multi-focus image fusion based on fractional order differentiation and closed image matting. ISA Trans. 2022, 129, 703–714.

- Zhang, X. Deep learning-based multi-focus image fusion: A survey and a comparative study. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4819–4838.

- Liu, Y.; Wang, L. Multi-focus image fusion with deep residual learning and focus property detection. Inf. Fusion 2022, 86–87, 1–16.

- Wang, Z.; Li, X. A self-supervised residual feature learning model for multifocus image fusion. IEEE Trans. Image Process. 2022, 31, 4527–4542.

- Aymaz, S.; Kose, C.; Aymaz, S. A novel approach with the dynamic decision mechanism (DDM) in multi-focus image fusion. Multimed. Tools Appl. 2023, 82, 1821–1871.

- Luo, H.; U, K.; Zhao, W. Multi-focus image fusion through pixel-wise voting and morphology. Multimed. Tools Appl. 2023, 82, 899–925.

- Jiang, L.; Fan, H.; Li, J. DDFN: A depth-differential fusion network for multi-focus image. Multimed. Tools Appl. 2022, 81, 43013–43036.

- Li, L.; Ma, H. Pulse coupled neural network-based multimodal medical image fusion via guided filtering and WSEML in NSCT domain. Entropy 2021, 23, 591.

- Li, L.; Ma, H. Saliency-guided nonsubsampled shearlet transform for multisource remote sensing image fusion. Sensors 2021, 21, 1756.

- Xiao, Y.; Guo, Z.; Veelaert, P.; Philips, W. General image fusion for an arbitrary number of inputs using convolutional neural networks. Sensors 2022, 22, 2457.

- Karim, S.; Tong, G. Current advances and future perspectives of image fusion: A comprehensive review. Inf. Fusion 2023, 90, 185–217.

- Candes, E.; Demanet, L. Fast discrete curvelet transforms. Multiscale Model. Simul. 2006, 5, 861–899.

- Lu, Y.M.; Do, M.N. Multidimensional directional filter banks and surfacelets. IEEE Trans. Image Process. 2007, 16, 918–931.

- Do, M.N.; Vetterli, M. The contourlet transform: An efficient directional multiresolution image representation. IEEE Trans. Image Process. 2005, 14, 2091–2106.

- Da, A.; Zhou, J.; Do, M. The nonsubsampled contourlet transform: Theory, design, and applications. IEEE Trans. Image Process. 2006, 15, 3089–3101.

- Guo, K.; Labate, D. Optimally sparse multidimensional representation using shearlets. SIAM J. Math. Anal. 2007, 39, 298–318.

- Easley, G.; Labate, D.; Lim, W.Q. Sparse directional image representations using the discrete shearlet transform. Appl. Comput. Harmon. Anal. 2008, 25, 25–46.

- Vishwakarma, A.; Bhuyan, M.K. Image fusion using adjustable non-subsampled shearlet transform. IEEE Trans. Instrum. Meas. 2019, 68, 3367–3378.

- Vishwakarma, A.; Bhuyan, M. A curvelet-based multi-sensor image denoising for KLT-based image fusion. Multimed. Tools Appl. 2022, 81, 4991–5016.

- Yang, Y.; Tong, S. A hybrid method for multi-focus image fusion based on fast discrete curvelet transform. IEEE Access 2017, 5, 14898–14913.

- Zhang, B.; Zhang, C.; Liu, Y. Multi-focus image fusion algorithm based on compound PCNN in Surfacelet domain. Optik 2014, 125, 296–300.

- Li, B.; Peng, H. Multi-focus image fusion based on dynamic threshold neural P systems and surfacelet transform. Knowl.-Based Syst. 2020, 196, 105794.

- Xu, W.; Fu, Y. Medical image fusion using enhanced cross-visual cortex model based on artificial selection and impulse-coupled neural network. Comput. Methods Programs Biomed. 2023, 229, 107304.

- Das, S.; Kundu, M.K. A neuro-fuzzy approach for medical image fusion. IEEE Trans. Biomed. Eng. 2013, 60, 3347–3353.

- Li, L.; Ma, H.; Jia, Z.; Si, Y. A novel multiscale transform decomposition based multi-focus image fusion framework. Multimed. Tools Appl. 2021, 80, 12389–12409.

- Peng, H.; Li, B. Multi-focus image fusion approach based on CNP systems in NSCT domain. Comput. Vis. Image Underst. 2021, 210, 103228.

- Wang, L.; Liu, Z. The fusion of multi-focus images based on the complex shearlet features-motivated generative adversarial network. J. Adv. Transp. 2021, 2021, 5439935.

- Li, L.; Si, Y.; Wang, L.; Jia, Z.; Ma, H. A novel approach for multi-focus image fusion based on SF-PAPCNN and ISML in NSST domain. Multimed. Tools Appl. 2020, 79, 24303–24328.

- Amrita, S.; Joshi, S. Water wave optimized nonsubsampled shearlet transformation technique for multimodal medical image fusion. Concurr. Comput. Pract. Exp. 2023, 35, e7591.

- Luo, X.; Xi, X. Multimodal medical volumetric image fusion using 3-D shearlet transform and T-S fuzzy reasoning. Multimed. Tools Appl. 2022, 1–36.

- Yin, M.; Liu, X.; Liu, Y. Medical image fusion with parameter-adaptive pulse coupled neural network in nonsubsampled shearlet transform domain. IEEE Trans. Instrum. Meas. 2019, 68, 49–64.

- Zha, Z.; Wen, B. Learning nonlocal sparse and low-rank models for image compressive sensing: Nonlocal sparse and low-rank modeling. IEEE Signal Process. Mag. 2023, 40, 32–44.

- Zha, Z.; Yuan, X. From rank estimation to rank approximation: Rank residual constraint for image restoration. IEEE Trans. Image Process. 2020, 29, 3254–3269.

- Zha, Z.; Yuan, X. Image restoration via simultaneous nonlocal self-similarity priors. IEEE Trans. Image Process. 2020, 29, 8561–8576.

- Zha, Z.; Yuan, X. Image restoration using joint patch-group-based sparse representation. IEEE Trans. Image Process. 2020, 29, 7735–7750.

- Zha, Z.; Yuan, X. A benchmark for sparse coding: When group sparsity meets rank minimization. IEEE Trans. Image Process. 2020, 29, 5094–5109.

- Zha, Z.; Yuan, X. Group sparsity residual constraint with non-local priors for image restoration. IEEE Trans. Image Process. 2020, 29, 8960–8975.

- Zha, Z.; Wen, B. Image restoration via reconciliation of group sparsity and low-rank models. IEEE Trans. Image Process. 2021, 30, 5223–5238.

- Zha, Z.; Wen, B. A hybrid structural sparsification error model for image restoration. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 4451–4465.

- Zha, Z.; Wen, B. Triply complementary priors for image restoration. IEEE Trans. Image Process. 2021, 30, 5819–5834.

- Zha, Z.; Wen, B. Low-rankness guided group sparse representation for image restoration. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–15.

- Wang, C.; Wu, Y. Joint patch clustering-based adaptive dictionary and sparse representation for multi-modality image fusion. Mach. Vis. Appl. 2022, 33, 69.

- Qin, X.; Ban, Y.; Wu, P. Improved image fusion method based on sparse decomposition. Electronics 2022, 11, 2321.

- Liu, Y.; Chen, X. Image fusion with convolutional sparse representation. IEEE Signal Process. Lett. 2016, 23, 1882–1886.

- Liu, Y.; Wang, Z. Simultaneous image fusion and denoising with adaptive sparse representation. IET Image Process. 2015, 9, 347–357.

- Li, S.; Kang, X.; Hu, J. Image fusion with guided filtering. IEEE Trans. Image Process. 2013, 22, 2864–2875.

- Zhang, Y.; Xiang, W.; Zhang, S. Local extreme map guided multi-modal brain image fusion. Front. Neurosci. 2022, 16, 1055451.

- Zhang, Y.; Liu, Y.; Sun, P. IFCNN: A general image fusion framework based on convolutional neural network. Inf. Fusion 2020, 54, 99–118.

- Zhang, H.; Xu, H.; Xiao, Y. Rethinking the image fusion: A fast unified image fusion network based on proportional maintenance of gradient and intensity. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12797–12804.

- Xu, H.; Ma, J.; Jiang, J. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 502–518.

- Dong, Y.; Chen, Z.; Li, Z.; Gao, F. A multi-branch multi-scale deep learning image fusion algorithm based on DenseNet. Appl. Sci.-Basel 2022, 12, 10989.

- Liu, Y.; Liu, S.; Wang, Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 2015, 24, 147–164.

- Liu, Y.; Wang, Z. A practical pan-sharpening method with wavelet transform and sparse representation. In Proceedings of the IEEE International Conference on Imaging Systems and Techniques (IST), Beijing, China, 22–23 October 2013; pp. 288–293.

- Nejati, M.; Samavi, S.; Shirani, S. Multi-focus image fusion using dictionary-based sparse representation. Inf. Fusion 2015, 25, 72–84.

- Hu, X.; Jiang, J.; Liu, X.; Ma, J. ZMFF: Zero-shot multi-focus image fusion. Inf. Fusion 2023, 92, 127–138.

- Qu, X.; Yan, J.; Xiao, H. Image fusion algorithm based on spatial frequency-motivated pulse coupled neural networks in nonsubsampled contourlet transform domain. Acta Autom. Sin. 2008, 34, 1508–1514.

- Liu, Z.; Blasch, E.; Xue, Z. Objective assessment of multiresolution image fusion algorithms for context enhancement in night vision: A comparative study. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 94–109.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).