LESM-YOLO: An Improved Aircraft Ducts Defect Detection Model

Abstract

1. Introduction

- By analyzing the challenges of detecting defects in aircraft ducts under low-light conditions, we integrated a light-enhancement module into the detector. This addresses, at the model level, the poor quality of defect images captured in low-light environments.

- Based on the characteristics of aircraft duct defects, we replaced the standard convolution modules with SPDConv modules, which reduces information loss during downsampling and preserves finer defect features.

- To cope with the complex environments and backgrounds encountered in aircraft duct defect detection, we incorporated a mixed local channel attention (MLCA) module into the neck, which improves the model’s detection performance.
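
The second contribution rests on the space-to-depth idea behind SPDConv: instead of discarding pixels when the resolution is halved, the feature map is rearranged so that every pixel moves into the channel dimension, and a non-strided convolution then follows. The sketch below illustrates only the rearrangement step on a tiny single-channel map in plain Python. The function names are hypothetical, plain stride-2 subsampling stands in for a strided layer, and the channel order is an arbitrary choice; it is not the authors' implementation.

```python
# Minimal sketch (pure Python, no framework): compare stride-2 subsampling with
# a space-to-depth rearrangement on a tiny single-channel feature map.
# Function names are hypothetical and chosen for illustration only.

def strided_downsample(fmap, stride=2):
    """Keep every `stride`-th pixel in both directions and drop the rest."""
    return [row[::stride] for row in fmap[::stride]]


def space_to_depth(fmap, scale=2):
    """Split an H x W map into scale*scale sub-maps of size (H/scale) x (W/scale).

    Sub-map (dy, dx) holds the pixels at rows dy, dy+scale, ... and columns
    dx, dx+scale, ...; stacking the sub-maps as channels keeps every pixel.
    """
    height, width = len(fmap), len(fmap[0])
    if height % scale or width % scale:
        raise ValueError("height and width must be divisible by scale")
    return [
        [row[dx::scale] for row in fmap[dy::scale]]
        for dy in range(scale)
        for dx in range(scale)
    ]


# A 4 x 4 map whose values are simply 0..15, so positions are easy to follow.
feature_map = [[4 * r + c for c in range(4)] for r in range(4)]

strided = strided_downsample(feature_map)   # one 2 x 2 map
channels = space_to_depth(feature_map)      # four 2 x 2 maps

kept_by_stride = sum(len(row) for row in strided)
kept_by_spd = sum(len(row) for ch in channels for row in ch)
print(kept_by_stride, kept_by_spd)
```

Both outputs have half the spatial resolution, but the subsampled map keeps a quarter of the values while the space-to-depth output keeps all of them, spread over four channels.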

2. Related Work

3. Proposed Method

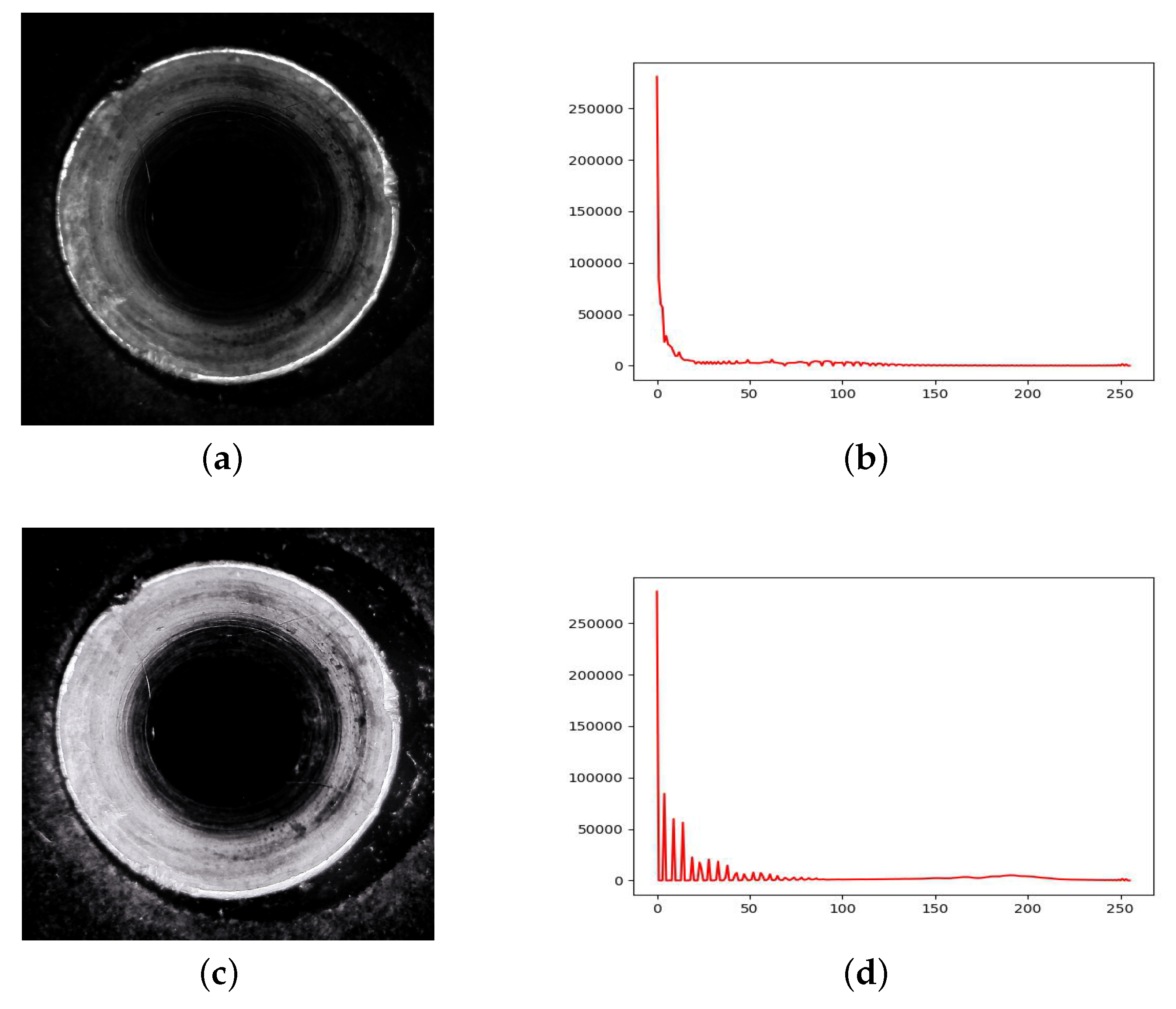

3.1. Low-Light Enhancement Module

3.2. SPDConv-Based Backbone

3.3. MLCA-Based Neck

4. Experiments and Analysis

4.1. Experimental Environment

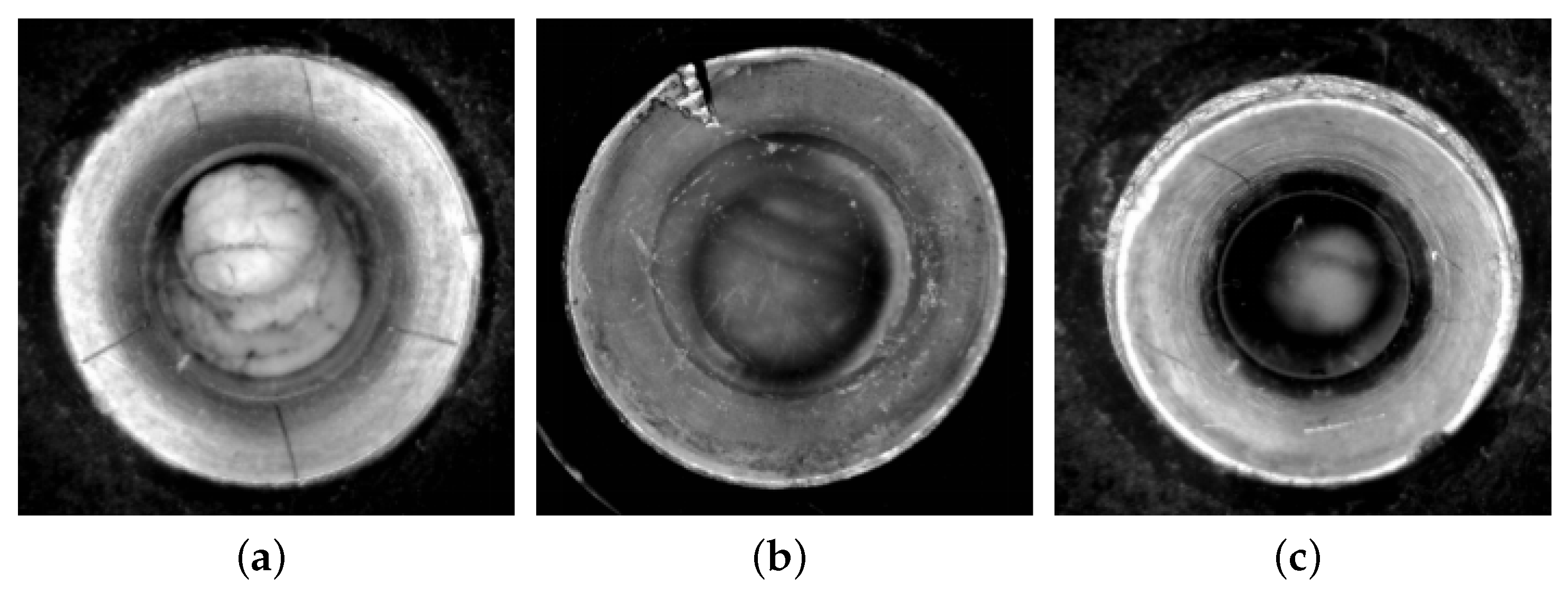

4.2. Dataset and Evaluation Metrics

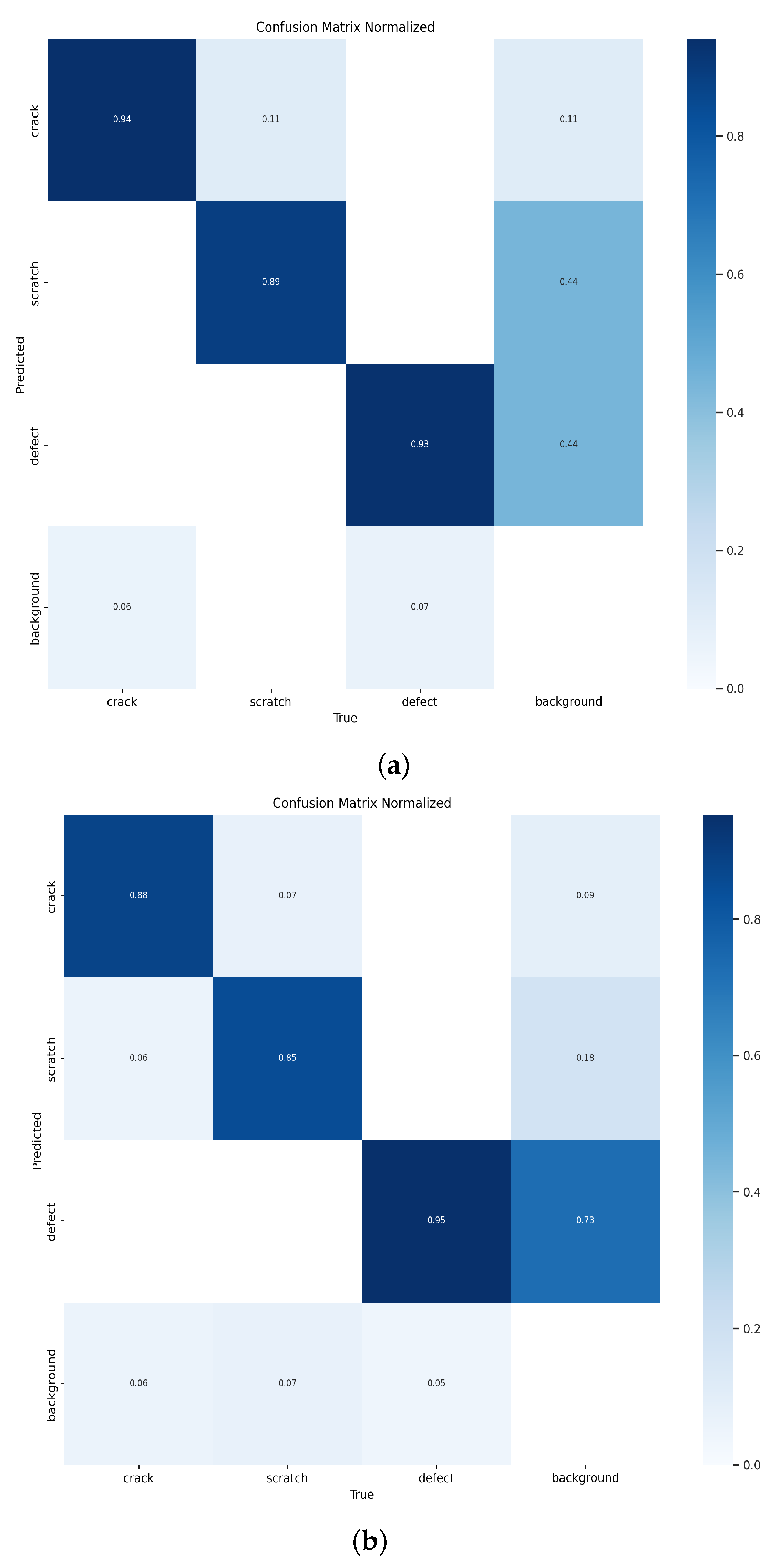

4.3. Ablation Experiment

4.4. Interpretability Experiment

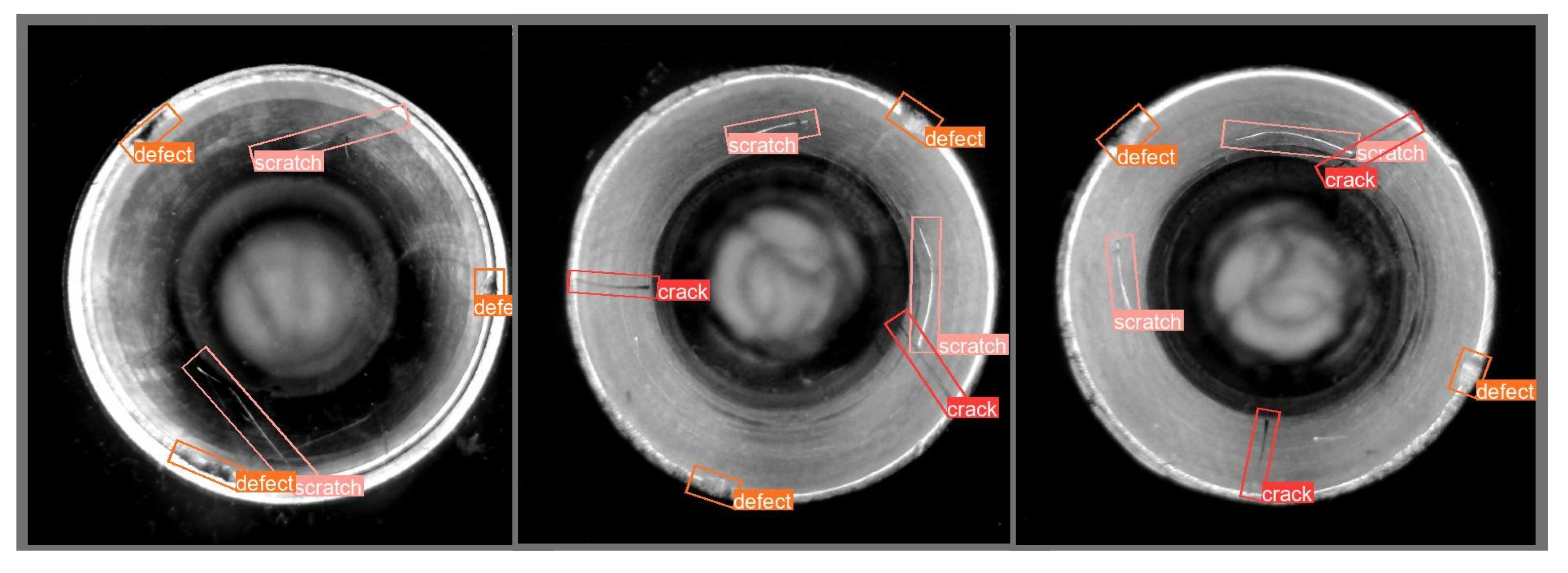

4.5. Comparison of Performance of Different Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Environmental Parameter | Value |
|---|---|
| System environment | Ubuntu 22.04 |
| Deep learning framework | PyTorch 2.1.0 |
| CUDA version | 12.1 |
| GPU | RTX 4090 (24 GB) |
| CPU | Intel(R) Xeon(R) Platinum 8352V CPU @ 2.10 GHz |
| Programming language | Python 3.10 |

| Hyperparameter | Value |
|---|---|
| Learning rate | 0.01 |
| Image size | 640 × 640 |
| Momentum | 0.937 |
| Batch size | 4 |
| Epochs | 150 |
| Weight decay | 0.0005 |
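
To make the roles of the learning rate, momentum and weight decay concrete, the following sketch collects the table values in a small configuration object and applies one common update rule to a single scalar weight. The optimizer type is not stated in the source, so SGD with momentum and L2 weight decay is an assumption here; the field names, the starting weight and the constant gradient are likewise made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Values copied from the hyperparameter table; field names are illustrative."""
    learning_rate: float = 0.01
    image_size: int = 640
    momentum: float = 0.937
    batch_size: int = 4
    epochs: int = 150
    weight_decay: float = 0.0005


def sgd_momentum_step(weight, grad, velocity, cfg):
    """One scalar update of SGD with momentum and L2 weight decay.

    ASSUMPTION: the source does not name the optimizer; this is the common
    formulation in which the weight-decay term is added to the gradient.
    """
    grad = grad + cfg.weight_decay * weight          # L2 penalty on the weight
    velocity = cfg.momentum * velocity + grad        # running momentum buffer
    weight = weight - cfg.learning_rate * velocity   # gradient-descent step
    return weight, velocity


cfg = TrainConfig()
w, v = 1.0, 0.0
for _ in range(3):
    # A constant gradient of 0.5 is a made-up value for the demonstration.
    w, v = sgd_momentum_step(w, grad=0.5, velocity=v, cfg=cfg)
print(w, v)
```

With a momentum as high as 0.937, the velocity keeps growing over the first steps under a constant gradient, so the effective step size is considerably larger than the nominal learning rate of 0.01.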

| LE-Module | SPD-Conv | MLCA | P (%) | R (%) | mAP (%) | FPS |
|---|---|---|---|---|---|---|
| | | | 87.5 | 85.7 | 89.9 | 140.3 |
| √ | | | 89.6 | 91.3 | 92.7 | 135.8 |
| | √ | | 91.7 | 92.1 | 93.8 | 153.6 |
| | | √ | 91.4 | 89.9 | 94.1 | 128.9 |
| √ | √ | | 95.5 | 90.1 | 97.1 | 124.4 |
| √ | √ | √ | 94.8 | 92.8 | 96.3 | 138.7 |
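
A quick way to read the ablation results is to fold precision and recall into a single F1 score and to convert FPS into per-frame latency. The sketch below does this for the first row (no added module) and the last row (all three modules) of the ablation table only. F1 and latency are derived quantities that are not reported in the source, and the helper names are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def latency_ms(fps):
    """Average time per frame in milliseconds for a given frames-per-second rate."""
    return 1000.0 / fps


# P (%), R (%), mAP (%) and FPS from the first and last rows of the ablation table.
baseline = {"P": 87.5, "R": 85.7, "mAP": 89.9, "FPS": 140.3}
full_model = {"P": 94.8, "R": 92.8, "mAP": 96.3, "FPS": 138.7}

for name, row in (("baseline", baseline), ("full model", full_model)):
    print(f"{name}: F1 = {f1_score(row['P'], row['R']):.1f} %, "
          f"{latency_ms(row['FPS']):.2f} ms/frame")

map_gain = full_model["mAP"] - baseline["mAP"]
extra_latency = latency_ms(full_model["FPS"]) - latency_ms(baseline["FPS"])
print(f"mAP gain: {map_gain:.1f} points, extra latency: {extra_latency:.3f} ms")
```

Read this way, the full model trades a per-frame cost of well under a tenth of a millisecond for a gain of several points in mAP.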

| Model | Crack AP (%) | Scratch AP (%) | Defect AP (%) | mAP50 (%) | FPS |
|---|---|---|---|---|---|
| Faster-RCNN | 80.89 | 72.49 | 73.81 | 75.73 | 9.6 |
| SSD | 94.34 | 89.86 | 90.69 | 91.63 | 43.2 |
| YOLOv3 | 85.15 | 81.06 | 83.21 | 83.14 | 54.1 |
| YOLOv4-tiny | 81.81 | 77.54 | 80.02 | 79.79 | 145.3 |
| YOLOv5 | 88.93 | 84.82 | 86.41 | 86.72 | 98.2 |
| YOLOv7-tiny | 90.13 | 84.63 | 87.53 | 87.43 | 102.3 |
| YOLOS-Ti | 88.64 | 85.18 | 86.67 | 86.83 | 113.6 |
| YOLOv8s | 90.82 | 86.76 | 95.12 | 90.90 | 140.3 |
| Our Model | 97.71 | 94.45 | 96.74 | 96.30 | 138.7 |
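
The mAP50 column in the comparison table appears to be the unweighted mean of the three per-class AP values. The sketch below recomputes that mean for every row and lists any model whose reported mAP50 deviates by more than a rounding tolerance. The function names and the tolerance of 0.01 are choices made for this illustration.

```python
# Rows copied from the comparison table:
# (crack AP, scratch AP, defect AP, reported mAP50), all in percent.
RESULTS = {
    "Faster-RCNN": (80.89, 72.49, 73.81, 75.73),
    "SSD": (94.34, 89.86, 90.69, 91.63),
    "YOLOv3": (85.15, 81.06, 83.21, 83.14),
    "YOLOv4-tiny": (81.81, 77.54, 80.02, 79.79),
    "YOLOv5": (88.93, 84.82, 86.41, 86.72),
    "YOLOv7-tiny": (90.13, 84.63, 87.53, 87.43),
    "YOLOS-Ti": (88.64, 85.18, 86.67, 86.83),
    "YOLOv8s": (90.82, 86.76, 95.12, 90.90),
    "Our Model": (97.71, 94.45, 96.74, 96.30),
}


def mean_ap(per_class_ap):
    """Unweighted mean of per-class average precision values."""
    return sum(per_class_ap) / len(per_class_ap)


def check_rows(results, tolerance=0.01):
    """Return the models whose reported mAP50 differs from the class mean."""
    mismatches = []
    for model, (*class_ap, reported) in results.items():
        if abs(mean_ap(class_ap) - reported) > tolerance:
            mismatches.append(model)
    return mismatches


mismatches = check_rows(RESULTS)
best = max(RESULTS, key=lambda model: RESULTS[model][-1])
print(mismatches, best)
```

An empty mismatch list means every reported mAP50 agrees with the mean of its row's per-class values to within rounding.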

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wen, R.; Yao, Y.; Li, Z.; Liu, Q.; Wang, Y.; Chen, Y. LESM-YOLO: An Improved Aircraft Ducts Defect Detection Model. Sensors 2024, 24, 4331. https://doi.org/10.3390/s24134331
