Abstract

Space targets move in orbit at very high speeds, so high-quality imaging requires both high-speed motion compensation (HSMC) and translational motion compensation (TMC). HSMC and TMC are usually performed in sequence, so the residual error of HSMC reduces the accuracy of TMC. In addition, under low signal-to-noise ratio (SNR) conditions, the accuracy of both HSMC and TMC decreases, which makes high-quality inverse synthetic aperture radar (ISAR) imaging challenging. Therefore, this paper proposes a joint ISAR motion compensation algorithm based on entropy minimization for low-SNR conditions. Firstly, the motion of the space target is analyzed, and the echo signal model is obtained. Then, the motion of the space target is modeled as a high-order polynomial, and a parameterized joint compensation model of high-speed motion and translational motion is established. Finally, taking the image entropy after joint motion compensation as the objective function, the red-tailed hawk–Nelder–Mead (RTH-NM) algorithm is used to estimate the target motion parameters, and the joint compensation is carried out. Experimental results on simulated and real data verify the effectiveness and robustness of the proposed algorithm.

1. Introduction

Inverse synthetic aperture radar (ISAR) is an important sensor for the observation and imaging of aerial and space targets. Compared with optical sensors, ISAR is free from the interference of sky background light and cloud occlusion, has better all-weather working ability, and has a long detection distance, so it plays an important role in space target surveillance [1,2,3,4]. The range resolution of ISAR depends on the radar bandwidth, and the azimuth resolution depends on the relative motion between the target and the radar [5,6,7]. The target of interest for ISAR is non-cooperative, meaning that its motion parameters are unknown in advance, which poses a significant challenge to high-quality ISAR imaging [8]. Generally speaking, the motion of the target relative to the radar can be divided into two parts: translational motion and rotational motion [9,10]. The rotational motion provides the azimuth resolution that is needed for imaging, while the translational motion causes range cell misalignment and phase error, which make the ISAR image defocused and blurry. Therefore, in order to achieve high-quality ISAR imaging, the translational motion needs to be compensated for [11].

The key to high-quality ISAR imaging lies in the precise compensation for translational motion. Current methods of translational motion compensation (TMC) fall into two main categories. One category is non-parametric TMC methods, also known as adjacent TMC methods, which are carried out in two steps: range alignment (RA) [12,13,14] and phase adjustment (PA) [15,16,17,18]. RA eliminates range cell misalignment. The resemblance between adjacent range profiles is used to find the number of range cells by which each range profile must be shifted [19]. Apart from resemblance-based methods, there is another type of RA algorithm that relies on optimizing a quality measure of the alignment. Methods based on the entropy and the contrast of the average range profile are presented in [20] and [21], respectively. After RA, PA is used to eliminate phase error. The algorithms for PA mainly include the following: the dominant scatterer algorithm [22], the Doppler centroid tracking (DCT) algorithm [23], the phase gradient autofocus (PGA) algorithm [24], and the eigenvector-based algorithm [25]. There are also algorithms based on image quality assessment, such as the minimum entropy algorithm [26,27,28] and the maximum contrast algorithm [29,30,31].

Another category is parametric methods, also referred to as joint TMC methods [32,33,34,35]. These methods utilize entropy or contrast as the focus quality assessment metrics for ISAR images. Subsequently, the motion of the target is modeled as a high-order polynomial, and the optimal image focus quality assessment metrics are used to solve for the motion parameters of the target. Then, range cell misalignment and phase error are compensated for simultaneously. This compensation method does not depend on the resemblance between adjacent range profiles, thus avoiding the impact of residual RA error on PA. Therefore, it can achieve better TMC even under low signal-to-noise ratio (SNR) conditions.

It is noteworthy that the above-mentioned TMC methods are all aimed at low-speed targets such as airplanes and ships. For high-speed moving targets such as satellites and missiles, the velocity is usually several kilometers per second, and the “stop-go” model is no longer applicable. The high-resolution range profile (HRRP) is stretched by the high-speed motion of the target, which degrades the accuracy of RA and, in turn, of the subsequent PA, so the ISAR image becomes seriously blurred [36]. Therefore, before performing TMC, it is necessary to estimate the velocity of the target and carry out high-speed motion compensation (HSMC).

Current HSMC methods fall into two main categories. One category is based on signal parameter estimation: the echo of each pulse is modeled as a multi-component higher-order phase signal, the signal parameters are estimated through the fractional Fourier transform (FrFT) [37,38], the integrated cubic phase function (ICPF) [39], etc., and the target velocity is then derived from these parameters. This category relies on the accurate estimation of signal parameters and is easily affected by noise. The other category is based on the focusing quality of the HRRP [40,41]: compensation terms are constructed for different candidate velocities and applied to the echo, and the target velocity is estimated by optimizing the focusing quality of the HRRP. Waveform entropy and contrast are both commonly used focusing quality metrics. The limitation of these algorithms is that they process each pulse independently, resulting in a large error in velocity estimation. Because the echoes are processed separately, the high-speed motion estimation errors of the individual pulses accumulate within one coherent processing interval (CPI), leading to poor overall high-speed compensation.

In ISAR imaging, due to transmission loss or limitations in the transmitted energy, the issue of a low SNR of the target echo often occurs. In the case of a low SNR, both HSMC and TMC face challenges [42,43]. Wang [44] proposed an HSMC method based on the minimum entropy of two-dimensional ISAR images, which achieves high-quality imaging under low SNRs. However, this method assumes that the translational error can be eliminated through TMC, leaving only the high-speed motion error in the echo. In practice, due to the presence of high-speed motion error, TMC becomes very difficult. Therefore, the assumption of this method is overly idealized, which significantly limits its applicability. In the case of low SNR, the precision of HSMC diminishes, and the residual error of HSMC will lead to a decrease in the precision of TMC, which severely degrades the quality of ISAR imaging.

Aiming to perform high-quality ISAR imaging of space targets under a low SNR, a noise-robust joint motion compensation algorithm for ISAR imaging based on entropy minimization is proposed in this paper. Firstly, the influence of the high-speed motion and translational motion of space targets on the echo in de-chirp mode is analyzed, and the signal model of space targets is established. Considering the continuity of the motion of the target in a CPI, the motion of the target is modeled as a high-order polynomial, and the motion polynomial coefficients are optimized by minimizing the two-dimensional image entropy. Based on the established minimum entropy optimization model, the red-tailed hawk–Nelder–Mead (RTH-NM) algorithm is used to solve the minimum entropy optimization problem, and joint motion compensation is then realized. Electromagnetic simulation data and Yak-42 measured data verify the effectiveness of the joint motion compensation algorithm. Compared with existing algorithms, this algorithm is innovative in the following aspects:

- A novel joint compensation model for the simultaneous compensation of high-speed motion and translational motion is proposed for the first time. Existing methods typically separate HSMC and TMC into two steps, so the residual error from HSMC affects the accuracy of TMC. In this paper, by contrast, a parametric joint compensation model is used to compensate for the high-speed motion and translational motion of the target simultaneously. The joint motion compensation reduces the impact of residual error from HSMC on TMC, thus achieving higher motion compensation accuracy.

- Many existing parametric motion compensation methods rely on gradient-based approaches to solve problems, which necessitate intricate derivative calculations and are highly sensitive to the selection of initial values. In this paper, a two-step optimization method that synergizes the red-tailed hawk (RTH) algorithm with the Nelder–Mead (NM) algorithm, called the RTH-NM algorithm, is used to estimate the target motion parameters. The RTH algorithm facilitates the avoidance of local optima during parameter optimization, enabling a preliminary search for parameters. The NM algorithm, on the other hand, achieves a more refined search, ensuring the precision of motion parameters. The integration of both algorithms enables rapid convergence towards an accurate solution, identifying the optimal motion parameters. In comparison to gradient-based methods, this approach proves to be more effective and pragmatic.

- This algorithm fully utilizes the high SNR gain accumulated in two-dimensional ISAR images, which is beneficial for the joint compensation of high-speed motion and translational motion under low-SNR conditions. It improves the accuracy of motion compensation, leading to enhanced ISAR image quality.

This study is based on the following assumptions: (1) Random disturbances in the envelope caused by the radar system and the changing sampling wave gate are not considered. (2) Within the imaging CPI, the relative rotation angle of the target is small, and the equivalent rotation angular velocity of the target is constant.

This paper is organized as follows. Section 2 introduces the de-chirp signal model for space targets. In Section 3, a joint motion compensation model based on entropy minimization is established, and the RTH-NM algorithm is used to estimate the target motion parameters. In Section 4, the experimental results of simulation data and real data are given, and the effectiveness and robustness of the proposed algorithm are analyzed. Finally, conclusions are summarized in Section 5.

2. De-Chirp Signal Model for Space Targets

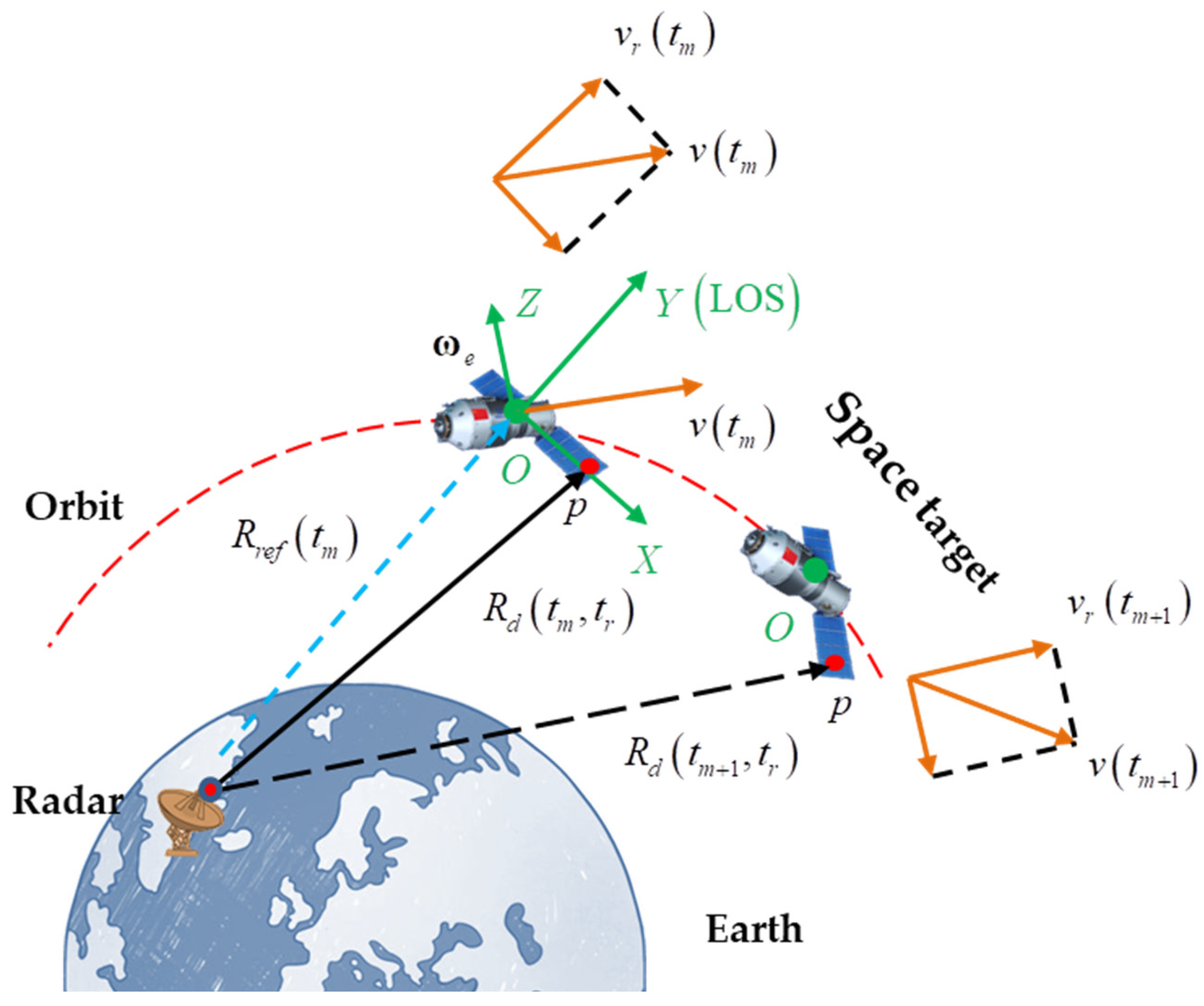

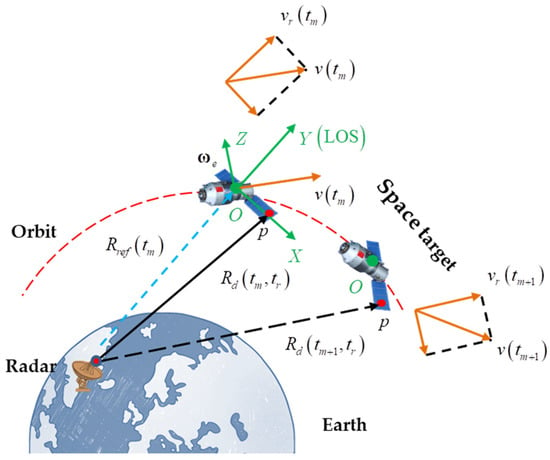

The imaging geometric configuration of the radar and space target is shown in Figure 1. is the origin of the coordinate system, located at the centroid of the space target. The direction of the radar line of sight (LOS) corresponds to the -axis. Assuming the effective rotational vector (ERV) of the target is , then the direction of is the -axis of the coordinate system. The -axis of the coordinate system can be obtained by the right-hand rule. The plane is the image projection plane (IPP). Supposing that the wideband radar transmits a linear frequency modulated (LFM) signal,

where , and , , and represent pulse width, carrier frequency, and frequency modulation rate, respectively. is the full time, where is the fast time and is the slow time.

Figure 1.

Observation geometry for space target.

As shown in Figure 1, assuming that the space target consists of scattering centers and is an arbitrary scattering center, the radar echo of can be written as

where is the echo time delay of , is the instantaneous distance from to radar at , is the propagation speed of light, and is the backscattering coefficient of . Due to the high-speed motion of the space target, the distance change of the target within one pulse width needs to be considered. So, the distance from to the radar can be rewritten as

where is the distance change with , and is the distance change with . Considering the short duration of a pulse, the variation in velocity within a pulse can be neglected. That is, if the target can be approximated as moving at a constant speed within a pulse, then can be approximated as

where is the radial velocity of the target at . By taking the conjugate multiplication of the echo signal with the reference signal, de-chirp processing can be carried out. The reference signal is

where , is the reference distance at . After de-chirp processing, we can obtain the output signal as follows:

where . For the sake of conciseness, let represent the new fast time. Then, Equation (6) can be rewritten as

Assuming that the coordinate of in the imaging plane is , and considering that the relative rotation angle of the target within the CPI is small and the rotation is usually uniform, the instantaneous distance from to radar is given by

where is the translational motion of the target centroid, is the angular velocity, and . Substituting Equation (8) into Equation (7) and performing the Taylor expansion yields

where represents the distance of from the reference point at , and the phase in Equation (9) can be divided into nine terms. The first term is the phase error term, and the second term is the range cell misalignment term; both of them are caused by the translational motion of the target. The third term is the rotational Doppler term of , which is the source of the ISAR azimuth resolution. The fourth term is the range migration term caused by rotational motion, which usually does not exceed a range cell in ISAR imaging, so its impact is negligible. The fifth term is constant and can be ignored. The sixth term is the range compression term of . The seventh term is the envelope walk term, and the eighth term is the range profile stretching term; both are caused by the target’s high-speed motion. The ninth term is the residual video phase (RVP) error, which can be removed by RVP compensation. From Equation (9), it can be seen that high-speed motion leads to envelope walk and a stretched range profile. Because the stretching of the range profile reduces the similarity between HRRPs, the accuracy of the adjacent TMC method decreases. At the same time, the envelope walk caused by high-speed motion breaks the homology between the envelope walk and the phase error of translational motion, which also reduces the effectiveness of parametric TMC. Under low-SNR conditions, the impact of high-speed motion is more significant, which may cause traditional motion compensation methods to fail and make ISAR imaging impossible.
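The stretching effect described above can be illustrated numerically. The following is a minimal sketch, not the authors' simulation: it builds the de-chirped phase of a single point scatterer whose range offset changes linearly within the pulse, and compares the HRRP peak for a static and a fast-moving target. All radar parameters (`FC`, `BW`, `TP`, `FS`) and the function names are assumptions chosen for illustration.

```python
import numpy as np

C = 3.0e8          # speed of light (m/s)
FC = 10.0e9        # carrier frequency (Hz), assumed
BW = 1.0e9         # bandwidth (Hz), assumed
TP = 100.0e-6      # pulse width (s), assumed
GAMMA = BW / TP    # frequency modulation rate (Hz/s)
FS = 20.0e6        # sampling rate after de-chirp (Hz), assumed


def dechirped_pulse(r_delta0, velocity):
    """De-chirped echo of one point scatterer for a single pulse.

    r_delta0 : range offset from the reference range at pulse centre (m)
    velocity : radial velocity, taken as constant within the pulse (m/s)
    """
    n = int(round(FS * TP))
    t = (np.arange(n) - n / 2) / FS        # fast time relative to the reference delay
    r_delta = r_delta0 + velocity * t      # range offset changes inside the pulse
    phase = (-4.0 * np.pi / C) * (FC + GAMMA * t) * r_delta
    phase += (4.0 * np.pi * GAMMA / C**2) * r_delta**2   # residual video phase
    return np.exp(1j * phase)


def range_profile(pulse, oversample=8):
    """Magnitude HRRP obtained by a zero-padded FFT over fast time."""
    return np.abs(np.fft.fft(pulse, n=oversample * pulse.size))


static = range_profile(dechirped_pulse(10.0, 0.0))
moving = range_profile(dechirped_pulse(10.0, 5000.0))
peak_ratio = moving.max() / static.max()
print(f"HRRP peak of moving target relative to static target: {peak_ratio:.2f}")
```

With these assumed values the in-pulse motion adds a quadratic fast-time phase that spreads the scatterer over roughly 4·B·v·Tp/c ≈ 6.7 range cells, so the peak of the moving-target profile is noticeably lower than that of the static one.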

Subsequently, the seventh phase term of Equation (9) is further analyzed and written as

where and . Since both and are related to the fast time , both of which will cause envelope walk, the echo from the same scattering point will be distributed across different range cells within different pulses. Therefore, their impact needs to be analyzed.

is related to the position of each scattering point . The frequency resolution in the range frequency domain is , where represents the total number of range cells, and is the sampling frequency. The range cell offset caused by can be expressed as

is independent of the position of the scattering point , and the range cell offset caused by can be expressed as

The ratio of the range cell offset caused by and is

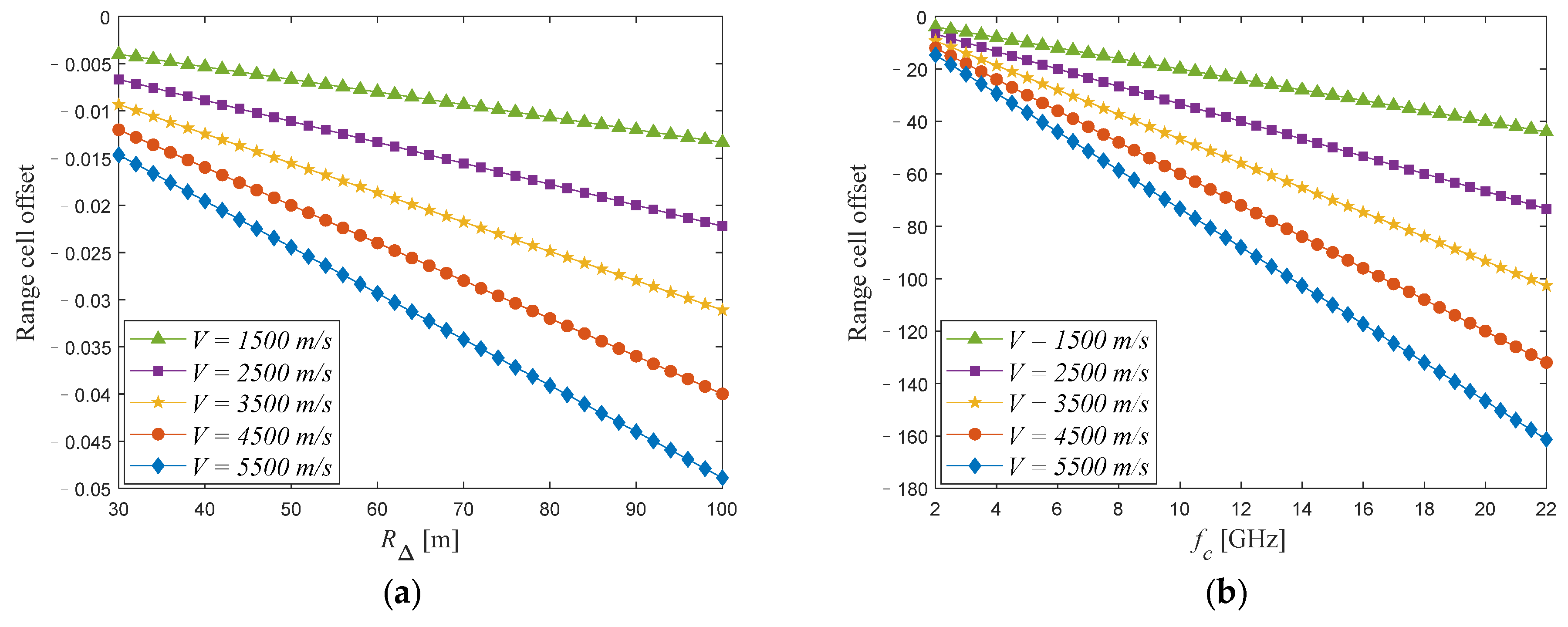

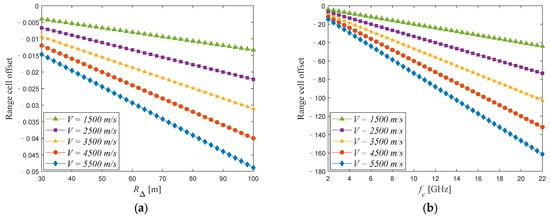

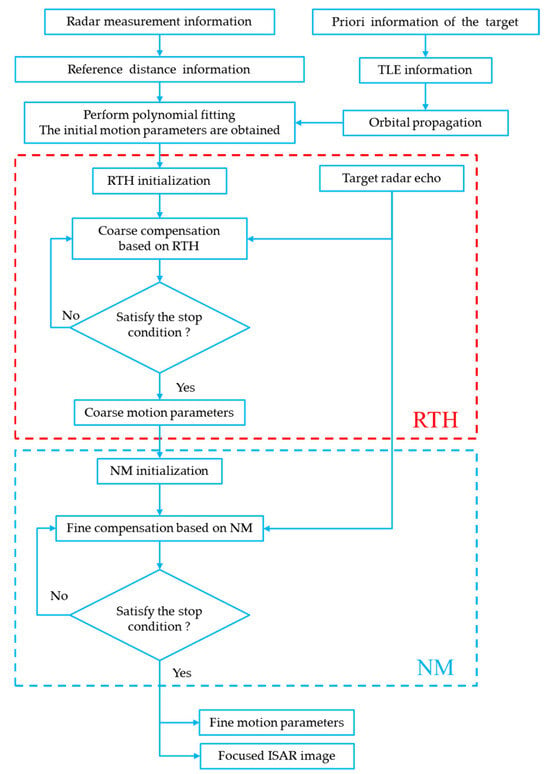

Subsequently, a simulation analysis is conducted. In the simulation, the frequency modulation rate is set to . Considering that the maximum size of the space target currently observed is within one hundred meters, we set the maximum of to . is the velocity of space target. The simulation results are shown in Figure 2.

Figure 2.

Range cell offset analysis with different factors. (a) Variation in with under different . (b) Variation in with under different . (c) Variation in with under different . (d) Variation in with under different . (e) Variation in with under different . (f) Variation in with under different .

As can be seen from Figure 2, the range cell offset caused by is basically less than 0.05 range cells, while the range cell offset caused by can reach several tens or even hundreds of range cells, and is maintained at the order of magnitude of 10⁻⁴ when is greater than 10 GHz. That is to say, the range cell offset is mainly affected by ; therefore, we ignore . Similar conclusions can also be found in [45]. At the same time, we can observe that when the target’s velocity changes by about 400 m/s, the variation in can reach approximately 10 range cells. It is precisely this variation in that disrupts the homology between range cell misalignment and phase error, so the joint TMC method becomes unusable. After the above analysis, we can obtain the final space target echo signal as follows:

where is the space target echo of the ideal turntable model and can be represented as

According to Equation (14), the signal model for joint compensation for high-speed motion and translational motion is

By discretizing the echo and performing the fast Fourier transform (FFT) with respect to and , the ISAR image after joint motion compensation can be expressed as

where is the ISAR image after compensation. and are the serial numbers of the range cells and Doppler cells, respectively. and , where is the number of range cells and is the number of Doppler cells. is the discrete form of . and are the discrete form of and . denotes complex noise. Equation (17) is the signal model of the final ISAR images after joint motion compensation. In the following sections, the joint motion compensation algorithm based on parametric minimum entropy optimization uses this signal model.
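The compensate-then-transform structure described above can be sketched as follows. This is a simplified illustration under stated assumptions rather than the signal model of Equations (14) to (17): it keeps only the turntable term, the translational range, and the in-pulse range change (velocity times fast time), and it ignores noise and the RVP term. The radar parameters, the scatterer list, the coefficient vector `TRUE_COEFFS`, and all function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P

C = 3.0e8                                 # speed of light (m/s)
FC, BW, TP = 10.0e9, 1.0e9, 100.0e-6      # carrier, bandwidth, pulse width (assumed)
GAMMA = BW / TP                           # frequency modulation rate (Hz/s)
N_FAST, N_SLOW = 256, 128                 # fast-time samples, pulses (assumed)
PRT = 1.0e-2                              # pulse repetition time (s), assumed
OMEGA = 0.02                              # rotation rate (rad/s), assumed

t_fast = (np.arange(N_FAST) - N_FAST / 2) * (TP / N_FAST)
t_slow = np.arange(N_SLOW) * PRT
T_HAT, T_M = np.meshgrid(t_fast, t_slow, indexing="ij")   # fast-time and slow-time grids
WAVENUMBER = 4.0 * np.pi * (FC + GAMMA * T_HAT) / C


def motion_terms(coeffs):
    """Translational range plus in-pulse range change predicted by a polynomial."""
    r_t = P.polyval(T_M, coeffs)                  # range at pulse centre (m)
    v_t = P.polyval(T_M, P.polyder(coeffs))       # radial velocity (m/s)
    return r_t + v_t * T_HAT


def simulate_echo(scatterers, coeffs):
    """De-chirped echo matrix (fast time x slow time) of point scatterers."""
    data = np.zeros((N_FAST, N_SLOW), dtype=complex)
    for x, y, amp in scatterers:
        turntable = y + x * OMEGA * T_M           # small-angle turntable model
        data += amp * np.exp(-1j * WAVENUMBER * (turntable + motion_terms(coeffs)))
    return data


def joint_compensation(data, coeffs):
    """Remove the motion predicted by `coeffs` in a single multiplication."""
    return data * np.exp(1j * WAVENUMBER * motion_terms(coeffs))


def rd_image(data):
    """Range-Doppler image: two-dimensional FFT over fast time and slow time."""
    return np.fft.fftshift(np.fft.fft2(data))


TRUE_COEFFS = np.array([0.0, 5000.0, 30.0])       # hypothetical a_0, a_1, a_2
SCATTERERS = [(0.0, 0.0, 1.0), (8.0, 3.0, 0.8), (-6.0, -4.0, 0.6)]

data = simulate_echo(SCATTERERS, TRUE_COEFFS)
peak_raw = np.abs(rd_image(data)).max()
peak_focused = np.abs(rd_image(joint_compensation(data, TRUE_COEFFS))).max()
print(f"image peak before compensation: {peak_raw:.1f}, after: {peak_focused:.1f}")
```

In this toy setting the compensation is exact because the same coefficients generate and remove the motion; in practice the coefficients are unknown and must be estimated, which is the optimization problem addressed in Section 3.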

3. Optimization of Joint Motion Compensation

3.1. Optimization of Motion Parameters Based on Minimum Entropy

Based on the joint motion compensation model established in the previous section, joint motion compensation can be achieved if the motion parameters of the target can be accurately estimated. Therefore, the key issue lies in how to accurately estimate the motion parameters of the target. Unlike aircraft and vessels, space targets typically move along orbital trajectories in a three-axis stabilization mode, and their motion is relatively stable. Without loss of generality, the radial motion of the space target can be modeled as an -order polynomial:

and the radial velocity of the space target can also be expressed as

where represents the order of each term in the polynomial, , and represents the coefficient of each order. is the pulse repetition time (PRT). For convenience of description, the polynomial coefficients can be written as a polynomial coefficient vector . At the same time, the reference distance used in de-chirp processing can also be obtained from radar measurement information, which is represented as . It is important to note that a high level of precision for is not necessary here. It is only necessary to know exactly what reference distance was used during de-chirp processing; even if there are errors in , they will not affect the accuracy of the proposed method. After using and for joint motion compensation, the ISAR image of the target can be obtained as follows:
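As a small illustration of the polynomial motion model, the sketch below evaluates the radial range and its derivative, the radial velocity, at each pulse. It assumes that the coefficient vector is ordered from the zeroth-order term upward and that slow time is the pulse index multiplied by the PRT; the coefficient values and the name `radial_motion` are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def radial_motion(coeffs, n_pulses, prt):
    """Evaluate polynomial radial range and velocity at every pulse.

    coeffs   : coefficients ordered a_0, a_1, ..., a_L (assumed ordering)
    n_pulses : number of pulses in the CPI
    prt      : pulse repetition time (s)
    """
    t_m = np.arange(n_pulses) * prt                 # slow time of each pulse
    rng = P.polyval(t_m, coeffs)                    # R(t_m) = sum_l a_l * t_m**l
    vel = P.polyval(t_m, P.polyder(coeffs))         # v(t_m) = dR/dt_m
    return t_m, rng, vel


# Hypothetical fourth-order coefficients (range in metres, time in seconds).
coeffs = np.array([8.0e5, -5.0e3, 30.0, -0.1, 1.0e-3])
t_m, rng, vel = radial_motion(coeffs, n_pulses=512, prt=2.5e-2)
print(f"range at first pulse: {rng[0]:.1f} m, velocity at first pulse: {vel[0]:.1f} m/s")
```

Because the velocity is obtained by differentiating the same polynomial, a single coefficient vector describes both the translational range and the in-pulse velocity, which is what allows the two compensations to be estimated jointly.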

If the value of is accurately obtained, the high-speed motion and translational motion of the target will be compensated for, and a well-focused ISAR image will be obtained. Hence, the problem of joint motion compensation is essentially an optimal parameter estimation problem. Image entropy [46] is a commonly used evaluation metric in the field of ISAR imaging to measure the quality of image focus. The smaller the entropy, the clearer the image, and the better the focusing performance of the image. Therefore, in this paper, image entropy is chosen as the cost function to implement the optimization of the target motion parameter .

The ISAR image after joint motion compensation by , the estimated value of , can be expressed as

The image entropy of is related to , and it can be represented as

where is the image intensity that can be expressed as

The target motion parameter can be obtained by minimizing the image entropy , expressed as

Many algorithms can be used to solve the problem in Equation (24), such as gradient-based methods and intelligent optimization algorithms. However, gradient-based algorithms require complex derivative calculations and are sensitive to the choice of initial points. Because the initial values of the target motion parameters cannot be provided with great precision, the use of gradient-based methods is restricted. Therefore, this paper adopts intelligent optimization algorithms to solve the above optimization problem, and the specific steps are introduced in the next subsection.
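For reference, the entropy cost can be sketched in a few lines. The function below uses the commonly adopted definition, in which the image power is normalized to sum to one and the Shannon entropy of the normalized power is returned; it is assumed, not confirmed by the text reproduced here, that this matches Equations (22) and (23). The name `image_entropy` is illustrative.

```python
import numpy as np


def image_entropy(image):
    """Shannon entropy of the normalized image power (lower means better focus)."""
    power = np.abs(np.asarray(image)) ** 2
    total = power.sum()
    if total == 0.0:
        raise ValueError("image has zero energy")
    p = power / total                 # normalized intensity, sums to one
    p = p[p > 0.0]                    # 0 * log(0) is taken as 0
    return float(-(p * np.log(p)).sum())


# A single bright pixel is perfectly focused; a flat image is maximally spread.
point_image = np.zeros((8, 8), dtype=complex)
point_image[3, 4] = 2.0 + 1.0j
flat_image = np.ones((8, 8), dtype=complex)

print(image_entropy(point_image), image_entropy(flat_image))
```

The two test images bracket the possible range: the entropy is zero when all energy sits in one pixel and equals the logarithm of the pixel count when the energy is spread uniformly, so a well-compensated image should move toward the lower end.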

3.2. Parameters Optimization Based on RTH-NM

Based on the joint motion compensation optimization model established in Section 3.1, the RTH-NM algorithm is used to estimate the target motion parameters, thereby achieving precise joint motion compensation.

The RTH algorithm is a recent nature-inspired metaheuristic optimization algorithm modeled on the hunting behavior of the red-tailed hawk, a predatory bird. The RTH algorithm exhibits strong robustness and a rapid convergence rate, so it is used to optimize the motion parameters of the space target. Using the RTH algorithm mitigates the risk of the motion parameter estimate becoming trapped in local optima. Nevertheless, the motion parameters derived from the RTH algorithm often lack sufficient precision, and conducting a highly accurate search for these parameters with RTH alone is time-consuming. To address this, the NM algorithm is applied to enhance the precision of the motion parameters. The specific steps of the RTH-NM algorithm are described in detail below.

Due to the inability to accurately obtain the target motion parameters, the RTH algorithm needs to be used for a coarse search. The specific steps of an RTH coarse search are as follows:

Step 1 (initialization): The following parameters of the RTH algorithm need to be initialized: the number of red-tailed hawks , the maximum number of iterations , the initial iteration number , the echo to be compensated , the radar de-chirp reference distance information , the target motion polynomial order , and the search space for target motion parameters . In this paper, , , and are set to 120, 250, and 1, respectively. and can be obtained from the radar system. and can be obtained from the Two-Line Element Set (TLE) information and .

Step 2 (generating the initial position): Based on , the initial position of a red-tailed hawk can be obtained, where is a -row -column matrix. Calculate the image entropy according to Equation (22), and the optimal position of the red-tailed hawk is .

Step 3 (high soaring): The position of the red-tailed hawk is continuously updated, and joint motion compensation is performed using the motion parameters corresponding to each position to obtain the target ISAR image. The image entropy is calculated according to Equation (22), and the position with the minimum entropy is obtained to update the optimal position . The position update formula of the red-tailed hawk is shown in Equation (25):

where represents the position of the red-tailed hawk at the iteration , is the mean position, represents the levy flight distribution function that can be calculated according to Equation (26), and denotes the transition factor function that can be calculated according to Equation (28).

where is a constant (0.01), is a constant (1.5), and and are random numbers between 0 and 1.
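The high-soaring update can be sketched as follows. Since Equations (25) to (28) are not reproduced in this text, the expressions below are assumptions taken from the commonly cited description of the RTH algorithm: a Lévy step scaled by the constants 0.01 and 1.5 mentioned above, a transition factor of the form 1 + sin(2.5 + t/T_max), and a move from the best position along the difference between the mean position and the current one. The function names are hypothetical, and the greedy acceptance of improved positions is omitted.

```python
import math
import numpy as np


def levy_sigma(beta=1.5):
    """Scale factor of the Levy step for stability index beta."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_flight(dim, rng, s=0.01, beta=1.5):
    """Levy step per dimension; mu and nu are uniform in [0, 1) as stated in the text."""
    mu = rng.random(dim)
    nu = np.maximum(rng.random(dim), 1e-12)      # guard against division by zero
    return s * mu * levy_sigma(beta) / nu ** (1.0 / beta)


def transition_factor(t, t_max):
    """Assumed form of the transition factor function."""
    return 1.0 + math.sin(2.5 + t / t_max)


def high_soaring(positions, best, t, t_max, rng):
    """Assumed high-soaring update applied to every hawk (one row per hawk)."""
    mean = positions.mean(axis=0)
    tf = transition_factor(t, t_max)
    updated = np.empty_like(positions)
    for i, x in enumerate(positions):
        updated[i] = best + (mean - x) * levy_flight(x.size, rng) * tf
    return updated


rng = np.random.default_rng(0)
hawks = rng.uniform(-1.0, 1.0, size=(6, 5))      # 6 hawks, 5 motion coefficients
moved = high_soaring(hawks, best=hawks[0], t=1, t_max=250, rng=rng)
print(f"sigma for beta = 1.5: {levy_sigma():.4f}")
```

In the algorithm described here, each updated row would be converted to motion parameters, used for joint compensation, and kept only if it lowers the image entropy.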

Step 4 (low soaring): The hawk surrounds the prey by flying much lower to the ground in a spiral line. The position update formula of the red-tailed hawk is shown in Equation (29):

where and denote direction coordinates, which can be calculated as follows:

where denotes the initial value of the radius, which varies from 0 to 1. is the angle gain, which varies from 5 to 15. is a random number between 0 and 1. is a control gain that varies from 1 to 2.

Step 5 (stooping and swooping): The hawk suddenly stoops and attacks the prey from the best-obtained position in the low soaring stage. The position update formula of the red-tailed hawk is shown in Equation (31):

where and are step sizes and can be calculated as follows:

where and are the acceleration and the gravity factors, which can be calculated as follows:

Step 6 (termination condition judgment): If the number of iterations reaches the maximum value , terminate the search process; otherwise, return to step 3 and continue the search. Finally, the global optimal position is output as the optimal motion parameter, that is, .

After the search using the RTH algorithm, the coarse target motion parameters are obtained, but they are not precise enough. Consequently, a refined search is required to obtain the fine target motion parameters. The NM algorithm is used for the precise search of motion parameters, and the specific steps are as follows.

Step 1 (initialization): Use the result obtained from the RTH algorithm as the initial input, and initialize L + 2 points , serving as the vertices of the L + 1 simplex.

Step 2 (order): Based on the motion parameters corresponding to each vertex , perform joint motion compensation to obtain ISAR images, and calculate the entropy of the ISAR images. Then, reorder the vertices according to to satisfy . Check whether the stopping conditions are met.

Step 3 (centroid): Discard the worst point , and calculate the centroid of the first L + 1 vertices, .

Step 4 (reflection): Calculate the reflection point . If is better than but worse than , that is, , then replace with to construct a new L + 1-simplex and continue with step 2.

Step 5 (expansion): If the reflection point is the optimum, that is, , then calculate the expansion point . If the expansion point is better than the reflection point, that is, , then replace with and continue with step 2; otherwise, replace with and then continue with step 2.

Step 6 (contraction): If , calculate the contraction point . If , then replace with and continue with step 3; otherwise, proceed to step 7. If , calculate the inner contraction point . If the inner contraction point is better than the worst point, then replace the worst point with ; otherwise, proceed to step 7.

Step 7 (shrink): Use to replace all points except the current optimum point, and then continue with step 2.

In the aforementioned steps, , , , and represent the reflection, expansion, contraction, and shrink coefficients, respectively, with values typically being 1, 2, 0.5, and 0.5. After further optimization using the NM algorithm, the precise target motion parameters can be obtained. By utilizing for joint motion compensation, a high-quality ISAR image of the target can be achieved.
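The refinement stage can be sketched with a compact Nelder–Mead implementation. This is the common textbook variant with the standard coefficients 1, 2, 0.5, and 0.5, and it is not claimed to reproduce the authors' code; the acceptance tests differ slightly from the wording of Steps 4 to 6. The objective used in the example is a simple quadratic standing in for the image entropy, and the names `nelder_mead`, `step`, and `tol` are hypothetical.

```python
import numpy as np


def nelder_mead(f, x0, step=0.1, rho=1.0, chi=2.0, gamma=0.5, sigma=0.5,
                tol=1e-10, max_iter=2000):
    """Minimize f starting from x0 (e.g. the coarse result of a global search)."""
    x0 = np.asarray(x0, dtype=float)
    n = x0.size
    # Step 1: n + 1 vertices, the start point plus one offset along each axis.
    simplex = np.vstack([x0] + [x0 + step * np.eye(n)[i] for i in range(n)])
    values = np.array([f(p) for p in simplex])
    for _ in range(max_iter):
        # Step 2: order the vertices from best to worst and test for convergence.
        order = np.argsort(values)
        simplex, values = simplex[order], values[order]
        if abs(values[-1] - values[0]) < tol:
            break
        # Step 3: centroid of all vertices except the worst.
        centroid = simplex[:-1].mean(axis=0)
        # Step 4: reflection of the worst vertex through the centroid.
        xr = centroid + rho * (centroid - simplex[-1])
        fr = f(xr)
        if values[0] <= fr < values[-2]:
            simplex[-1], values[-1] = xr, fr
        elif fr < values[0]:
            # Step 5: expansion further along the reflection direction.
            xe = centroid + chi * (xr - centroid)
            fe = f(xe)
            if fe < fr:
                simplex[-1], values[-1] = xe, fe
            else:
                simplex[-1], values[-1] = xr, fr
        else:
            # Step 6: outside contraction if the reflection beats the worst
            # vertex, inside contraction otherwise.
            if fr < values[-1]:
                xc = centroid + gamma * (xr - centroid)
                fc = f(xc)
                accept = fc <= fr
            else:
                xc = centroid + gamma * (simplex[-1] - centroid)
                fc = f(xc)
                accept = fc < values[-1]
            if accept:
                simplex[-1], values[-1] = xc, fc
            else:
                # Step 7: shrink every vertex toward the current best.
                simplex[1:] = simplex[0] + sigma * (simplex[1:] - simplex[0])
                values[1:] = [f(p) for p in simplex[1:]]
    best = int(np.argmin(values))
    return simplex[best], float(values[best])


target = np.array([1.0, -2.0, 0.5])               # stand-in for the true parameters
x_best, f_best = nelder_mead(lambda x: float(np.sum((x - target) ** 2)), np.zeros(3))
print(x_best, f_best)
```

In the setting of this paper, `f` would evaluate the image entropy after joint compensation and `x0` would be the coarse RTH estimate. Because polynomial coefficients of different orders can differ by several orders of magnitude, a per-coefficient initial step may be preferable to the single `step` value used in this sketch.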

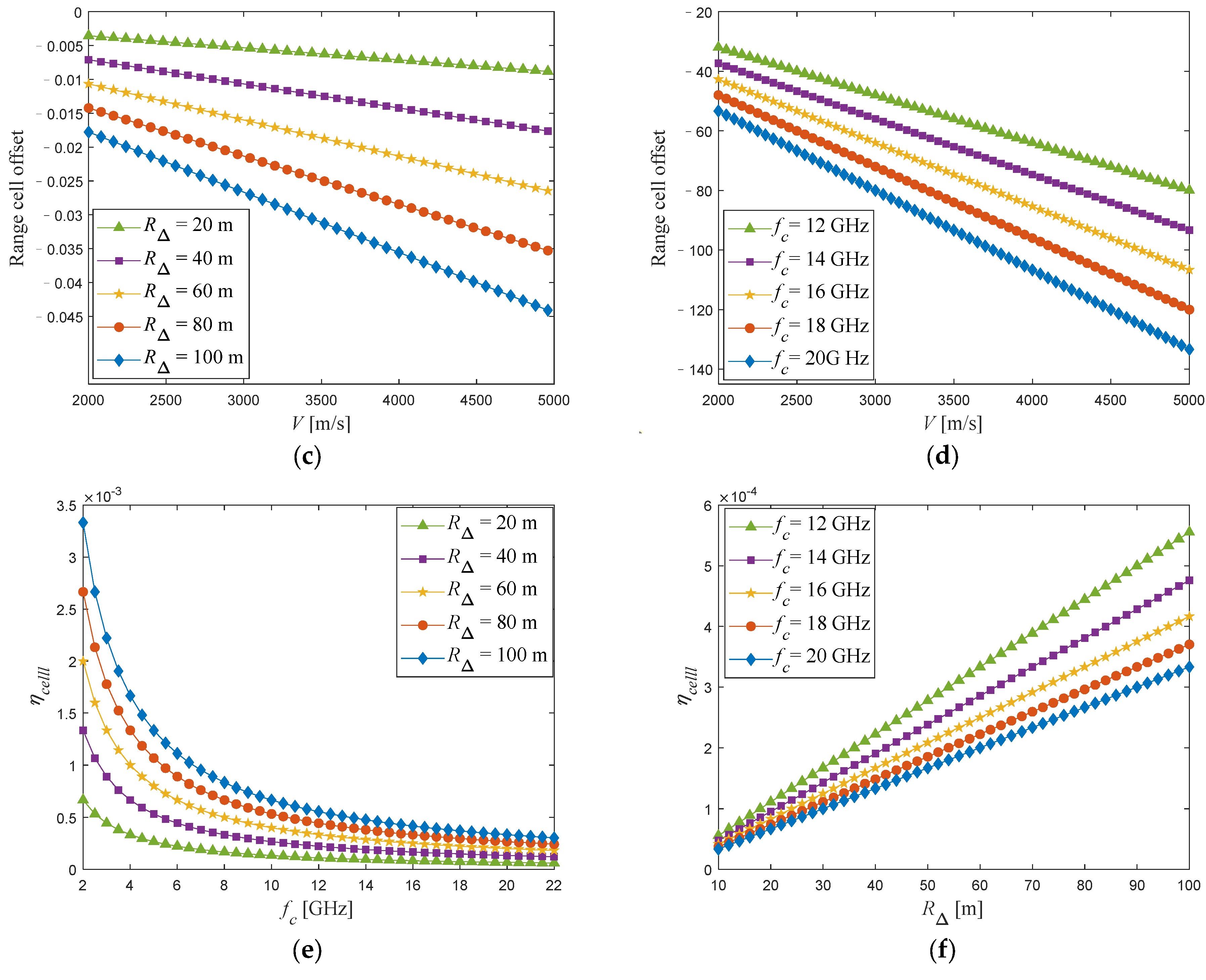

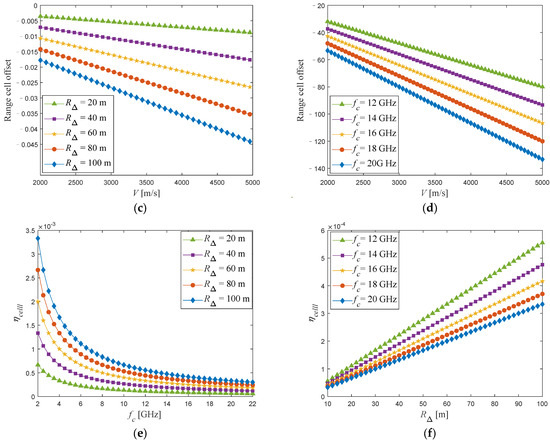

In summary, the flowchart of the joint motion compensation algorithm based on the RTH-NM algorithm proposed in this paper is shown in Figure 3.

Figure 3.

Flowchart of joint motion compensation algorithm based on the RTH-NM algorithm.

4. Experiment and Discussion

In this section, different experiments are designed to demonstrate the performance of the proposed algorithm. The experiments are divided into two types. The first type was conducted using electromagnetic simulation data, with echo simulations performed using the physical optics (PO) method [47]. The second type was conducted using Yak-42 real measurement data, to further verify the effectiveness and robustness of the proposed algorithm. The orbital motion of the target in the experiments was calculated from the TLE [48] information, and four different imaging apertures were selected for motion compensation experiments. All the images are generated by the range-Doppler algorithm (RDA); the difference lies in the HSMC and TMC algorithms used. In all experiments, the proposed algorithm is compared with three other algorithms. The first combines minimum-entropy high-speed motion compensation, minimum-entropy range alignment, and minimum-entropy phase compensation, and is referred to as the ME+MERA+MEPA algorithm. The second uses ICPF for high-speed motion compensation, maximum-contrast range alignment, and maximum-contrast autofocus, and is referred to as the ICPF+MCRA+MCPA algorithm. The third combines minimum-entropy high-speed motion compensation, minimum-entropy range alignment, and the sparse Bayesian learning (SBL) minimum-entropy phase compensation of [18], and is referred to as the ME+MERA+SBLMEPA algorithm.

4.1. Experiments Based on Electromagnetic Simulation

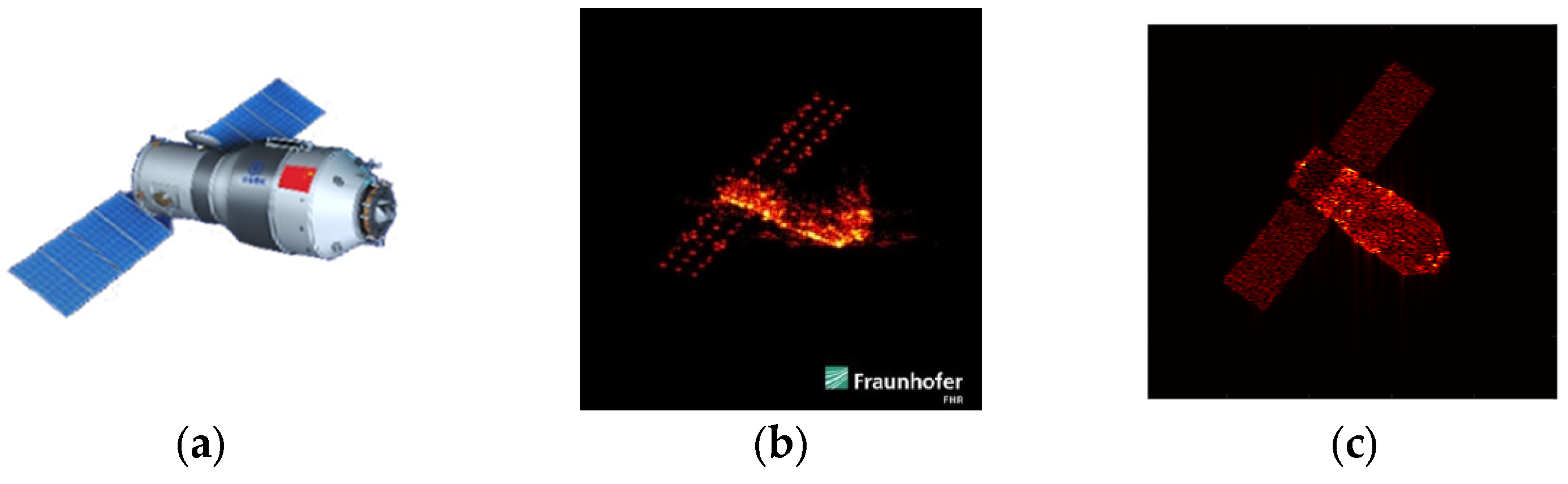

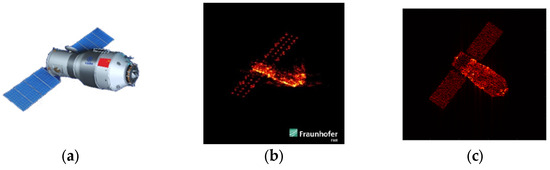

Since satellite data are rarely publicly available, the experimental data in this subsection were acquired through electromagnetic (EM) simulations based on the Tiangong-1 (TG-1) satellite model. Its three-dimensional model is depicted in Figure 4a. All simulations used triangular facet models, segmenting the target surface into tens of thousands of equivalent scatterers. To illustrate the effectiveness of the EM simulation, Figure 4 presents a comparison between the actual ISAR image of TG-1 (Figure 4b) and the EM-simulated ISAR image (Figure 4c). The comparison indicates that the quality of the simulated image is comparable to that of the measured ISAR image, which supports the use of the simulated data in this paper.

Figure 4.

TG-1 Model and its ISAR imaging results. (a) The CAD model of TG-1 satellite. (b) Real ISAR image. (c) EM simulation ISAR image.

In order to demonstrate the performance of the proposed joint motion compensation algorithm under different motion conditions, different orbit motions are added to the echo. The orbit of the TG-1 satellite is chosen as the simulation orbit, and its orbital motion is calculated from the TLE information using the Simplified General Perturbations 4 (SGP4) model. The TLE of the TG-1 satellite [49] is listed below.

Table 1.

Radar parameters of the simulation.

- 37820U 11053A 16266.35688463 .00025497 00000-0 24137-3 0 9991,

- 37820 042.7662 24.7762 0015742 351.0529 104.2087 15.66280400 28580 8.

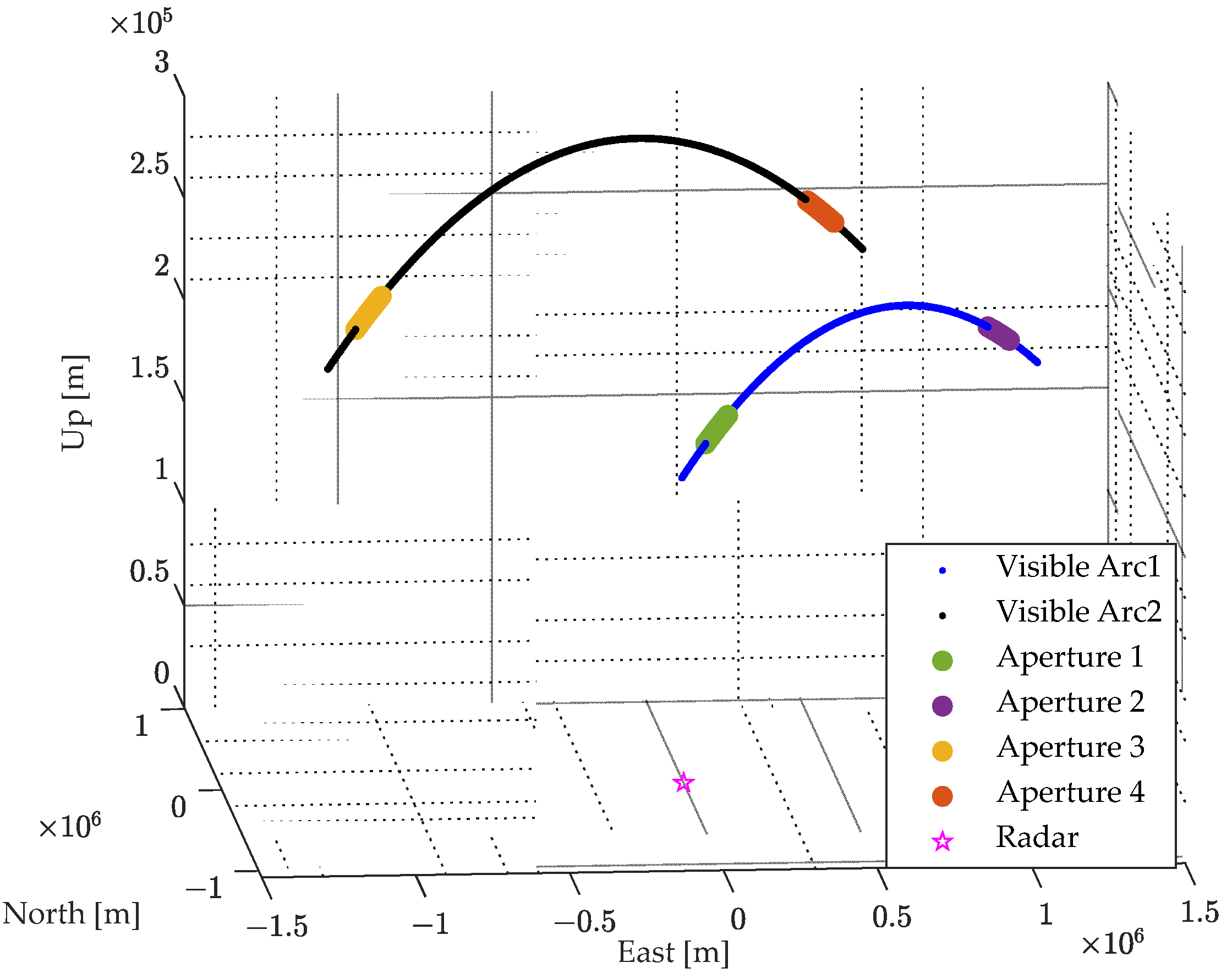

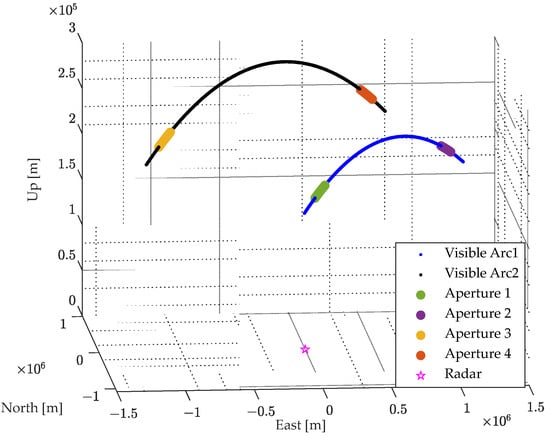

The imaging scene configuration is depicted in Figure 5, where the ground-based radar is situated at (29.7° N, 119.8° E), transmitting an LFM signal. The imaging parameters are detailed in Table 1. For echo simulation, four distinct imaging apertures from two visibility arcs are selected, with the respective imaging periods as follows:

Figure 5.

ISAR imaging scene configuration.

- Aperture 1: 22 September 2016, 19:00:11 to 19:00:25.

- Aperture 2: 22 September 2016, 19:03:11 to 19:03:25.

- Aperture 3: 22 September 2016, 20:35:58 to 20:36:12.

- Aperture 4: 22 September 2016, 20:39:58 to 20:40:12.

Generally, due to the relatively short duration of the imaging CPI, a fourth-order polynomial is sufficient to describe the motion of the target. Therefore, the motion of the target is modeled as a fourth-order polynomial. The coefficients for the polynomials of various orders for imaging apertures 1–4 are shown in Table 2.

Table 2.

Motion parameters of the target in various apertures.

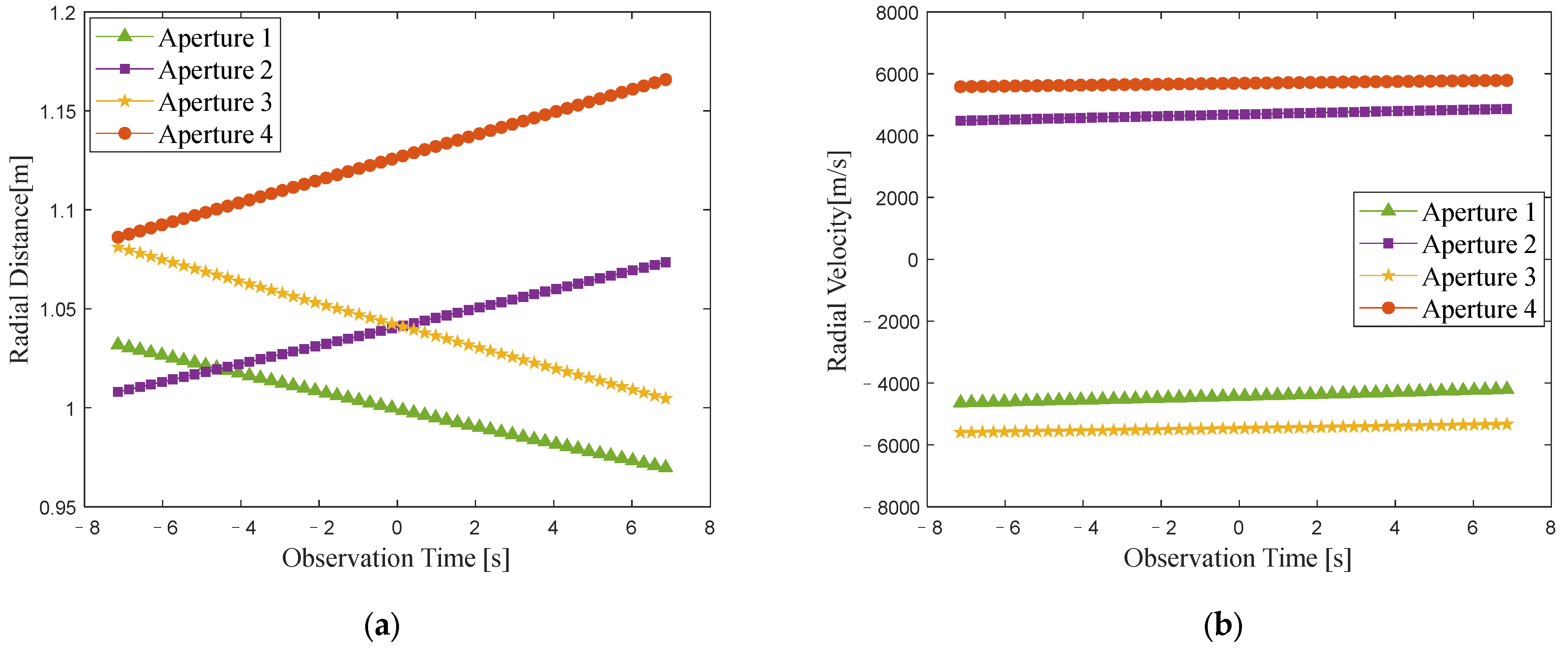

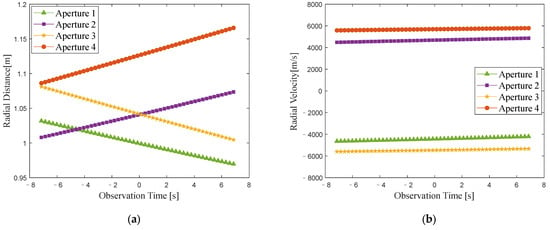

The variations in the radial distance and radial velocity of the target under different imaging apertures are illustrated in Figure 6. The magnitude of the radial velocity varies between 4000 and 6000 m/s, with the maximum change in radial velocity reaching approximately 380 m/s within the CPI. According to the analysis in Section 2, such high-speed motion introduces errors in the echo that cause range profile stretching and range cell shifts. Traditional methods estimate the velocity from a single echo to perform HSMC, leaving residual high-speed motion errors that affect the precision of the subsequent TMC. This impact is more severe in the case of low SNR. After employing appropriate HSMC and TMC methods, the ISAR image of the target can be obtained. It is worth noting that, since the raw echo without any compensation cannot be focused and therefore has no reference value, we directly present the ISAR imaging results after HSMC and TMC under different imaging apertures.

Figure 6.

Variations in radial distance and radial velocity within each imaging aperture. (a) Variation in the radial distance. (b) Variation in the radial velocity.

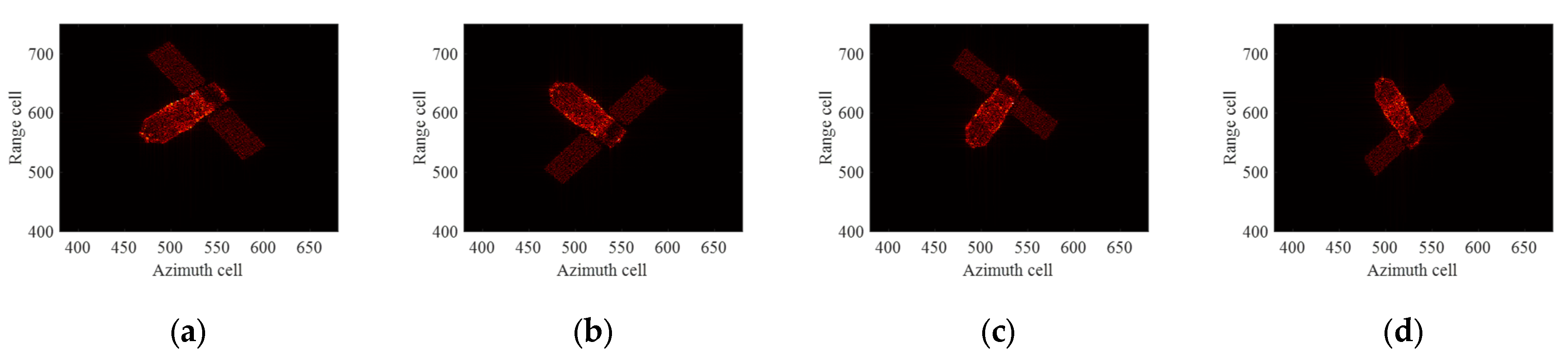

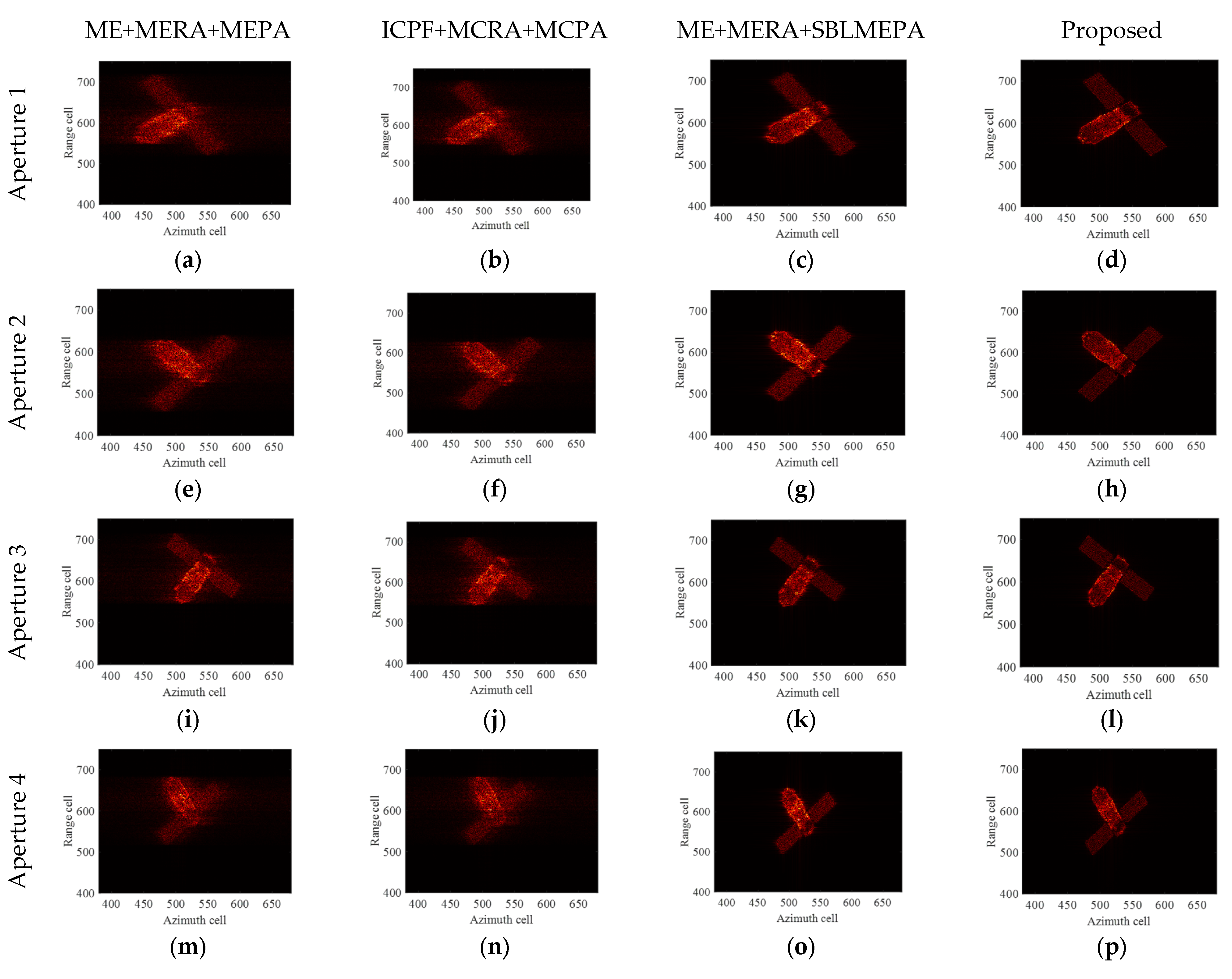

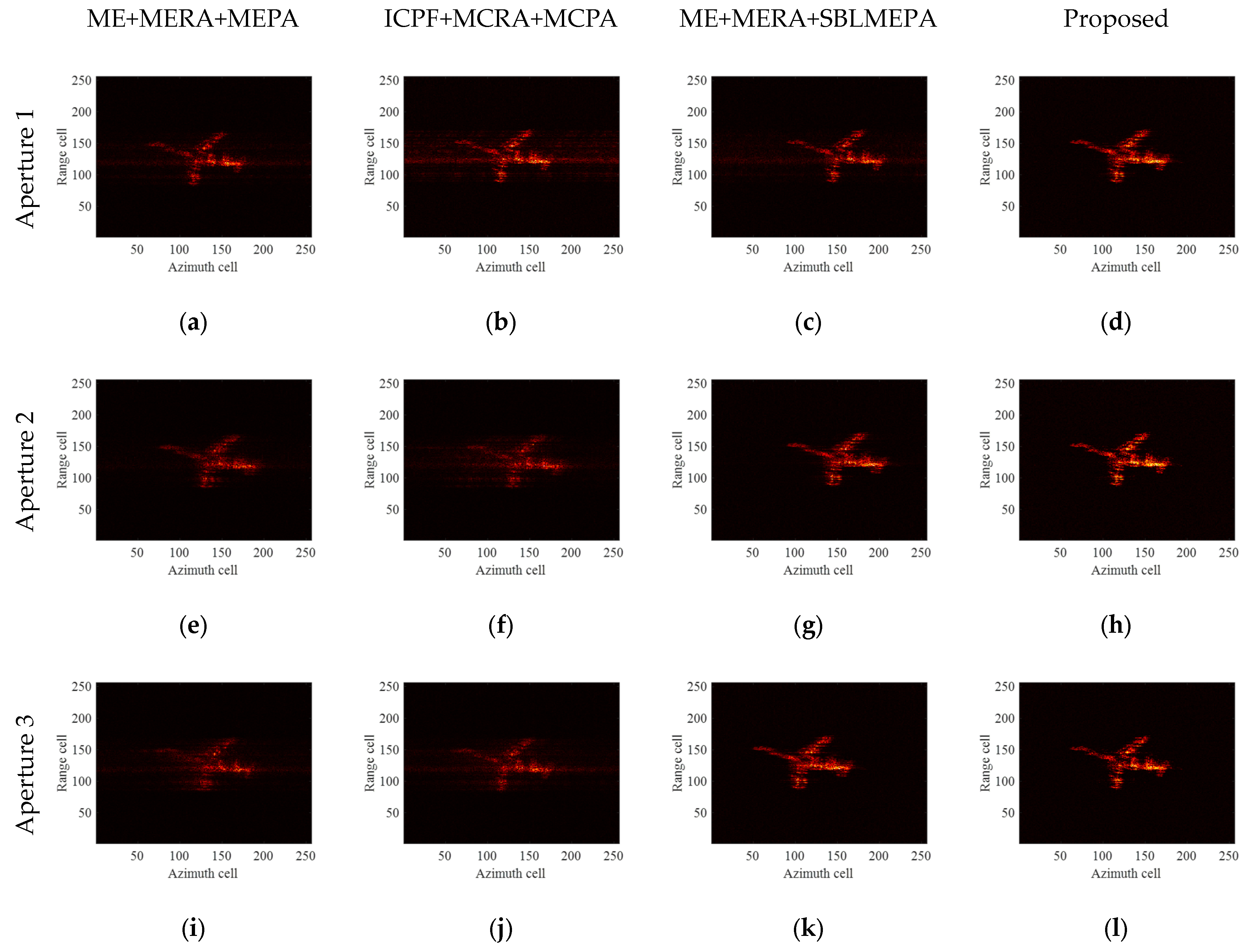

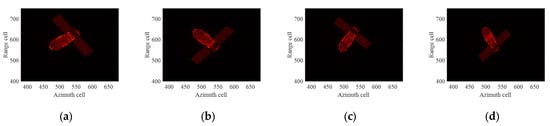

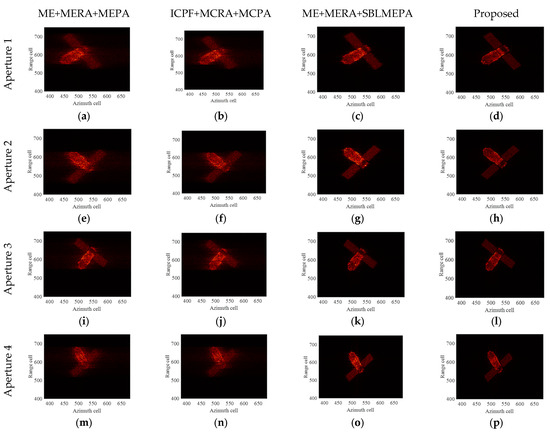

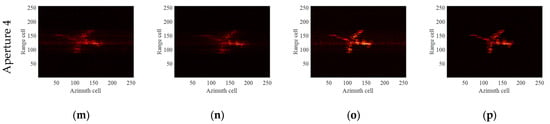

The ideal ISAR images under the four imaging apertures are shown in Figure 7. The imaging results differ significantly between apertures because of the different imaging perspectives and motion states of the target. The first column of Figure 8 displays the ISAR images obtained after compensation using the ME+MERA+MEPA algorithm, and the second column shows those obtained using the ICPF+MCRA+MCPA algorithm. The focusing quality achieved by the two algorithms is essentially the same: due to residual errors in HSMC, both sets of images are slightly defocused and blurred. The third column of Figure 8 displays the ISAR images obtained by the ME+MERA+SBLMEPA algorithm. Because this algorithm adopts phase compensation based on sparse Bayesian entropy minimization, the defocusing and blurring are reduced to a certain extent, and the focusing quality is higher than that of the ME+MERA+MEPA and ICPF+MCRA+MCPA algorithms. The fourth column of Figure 8 displays the ISAR images obtained by the proposed algorithm, which are better focused because the joint compensation for high-speed motion and translational motion eliminates the residual error. To quantify the imaging results, the entropy of the images after motion compensation by the different algorithms is given in Table 3. Compared with the ME+MERA+MEPA, ICPF+MCRA+MCPA, and ME+MERA+SBLMEPA algorithms, the image entropy obtained by the proposed algorithm is smaller and closer to that of the ideal image. Therefore, the proposed method has better motion compensation performance.
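The entropy used for such comparisons can be sketched as follows. The snippet assumes the commonly used Shannon form, in which the squared magnitude of each pixel is normalized by the total image energy; the function name and the toy images are hypothetical, and the exact normalization used for Table 3 may differ.

```python
import math


def image_entropy(image):
    """Shannon entropy of a complex image given as a list of rows.

    Each pixel power |I|^2 is normalized by the total image energy, and
    the entropy is -sum(p * ln p). Lower values mean better focus.
    """
    powers = [abs(pixel) ** 2 for row in image for pixel in row]
    total = sum(powers)
    if total == 0:
        raise ValueError("image has no energy")
    entropy = 0.0
    for power in powers:
        p = power / total
        if p > 0:  # zero-energy pixels contribute nothing
            entropy -= p * math.log(p)
    return entropy


# Toy comparison: all energy in one pixel versus energy spread evenly.
focused = [[0, 0], [0, 3 + 4j]]
blurred = [[1, 1], [1, 1]]
print(image_entropy(focused) < image_entropy(blurred))
```

A single bright scatterer gives the minimum entropy of zero, whereas energy spread evenly over all pixels gives the maximum, which is why a smaller entropy is read as better focus.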

Figure 7.

Ideal ISAR images for different imaging apertures. (a) Aperture 1; (b) Aperture 2; (c) Aperture 3; (d) Aperture 4.

Figure 8.

Imaging results of TG-I electromagnetic simulation data under different motion conditions. (a–d) Imaging results by different algorithms of aperture 1; (e–h) imaging results by different algorithms of aperture 2; (i–l) imaging results by different algorithms of aperture 3; and (m–p) imaging results by different algorithms of aperture 4.

Table 3.

Entropy of images acquired by different algorithms.

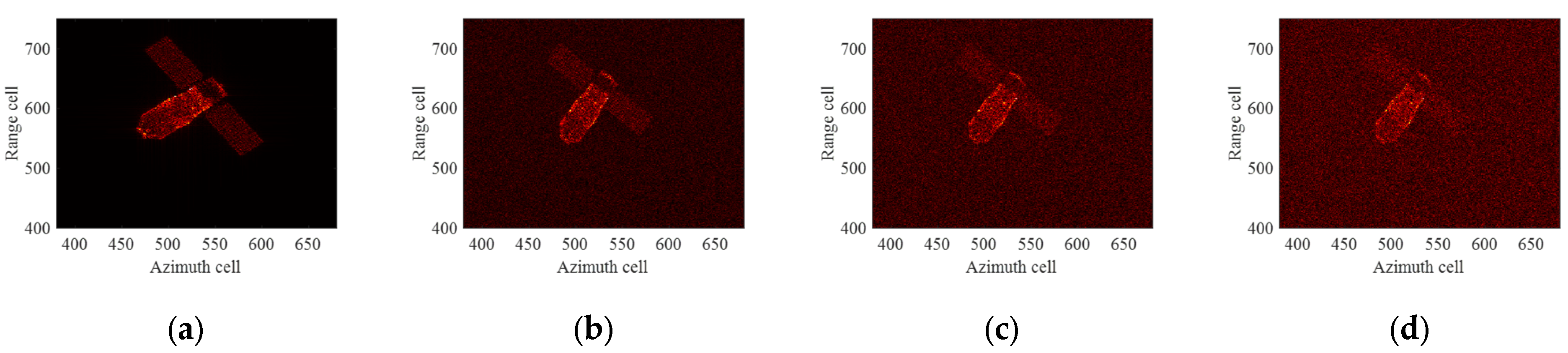

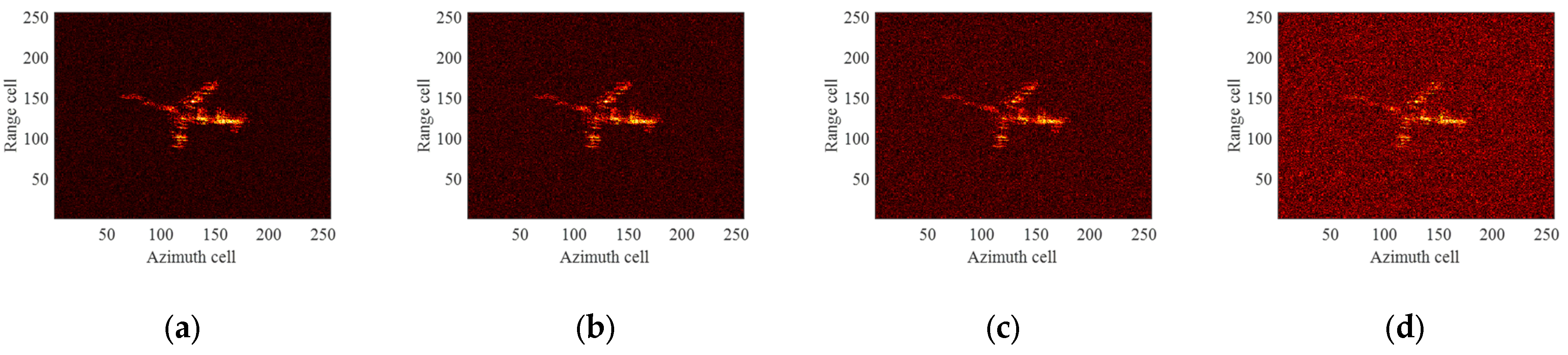

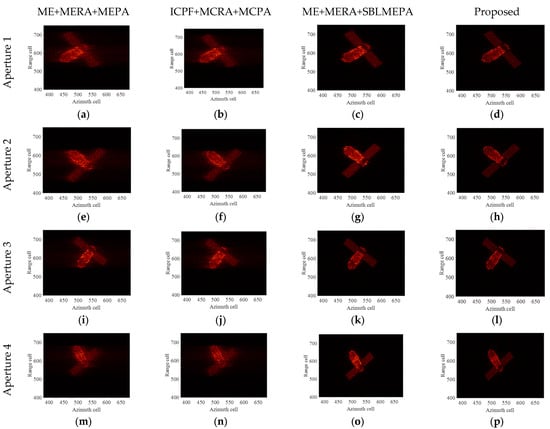

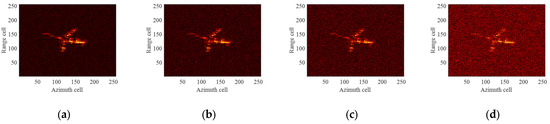

To verify the performance of the proposed algorithm under low-SNR conditions, complex Gaussian white noise is added to the electromagnetic simulation data to produce different SNRs (from 0 dB to −13 dB). The electromagnetic simulation data and orbital data of aperture 3 are chosen for the experiment. Figure 9 presents the ideal images under different SNRs, and the ISAR images obtained by the different motion compensation algorithms are shown in Figure 10. The first and second columns show the images obtained using the ME+MERA+MEPA algorithm and the ICPF+MCRA+MCPA algorithm, and the third and fourth columns present the images obtained using the ME+MERA+SBLMEPA algorithm and the proposed algorithm. In Figure 10, intense noise leaves residual errors in HSMC and reduces the correlation of the HRRP, which lowers the precision of TMC. As a result, the images obtained by the traditional motion compensation algorithms are of poor quality and contain artifacts. When the SNR is less than −5 dB, the images begin to defocus, and when the SNR is less than −9 dB, all traditional motion compensation algorithms fail, making focused imaging virtually impossible. The ME+MERA+SBLMEPA algorithm performs better than the other two traditional algorithms, but its performance is also limited when the SNR is lower than −9 dB. At such noise levels, the residual errors in motion compensation can no longer be removed by improving the accuracy of PA alone, so the image focus cannot be improved. In contrast, the proposed algorithm achieves precise motion compensation and better focusing results as long as the SNR is not lower than −13 dB. Table 4 provides the entropy of the motion-compensated images obtained by the different algorithms at various SNRs. It indicates that the proposed algorithm performs best, achieving the lowest image entropy among the algorithms and coming closest to the ideal image entropy. These results further demonstrate the motion compensation performance and robustness of the proposed algorithm.
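The noise injection step can be sketched as follows. The snippet assumes that the SNR is defined as the ratio of average signal power to average noise power per echo sample; the point at which the SNR is defined in the experiments above (for example, before or after pulse compression) is not restated here, and the function names are hypothetical.

```python
import math
import random


def add_complex_noise(signal, snr_db, rng=None):
    """Add circular complex Gaussian white noise to reach a target SNR.

    The SNR is taken as average signal power over average noise power
    (an assumed definition). The noise power is split equally between
    the real and imaginary parts.
    """
    rng = rng or random.Random()
    signal_power = sum(abs(s) ** 2 for s in signal) / len(signal)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power / 2.0)
    return [s + complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
            for s in signal]


def measured_snr_db(clean, noisy):
    """Estimate the realized SNR from the clean and noisy sequences."""
    signal_power = sum(abs(s) ** 2 for s in clean)
    noise_power = sum(abs(n - s) ** 2 for s, n in zip(clean, noisy))
    return 10.0 * math.log10(signal_power / noise_power)


# Toy unit-amplitude echo, degraded to the lowest SNR used above.
clean = [complex(1.0, 0.0)] * 20000
noisy = add_complex_noise(clean, -13.0, random.Random(0))
print(round(measured_snr_db(clean, noisy), 1))
```

Passing an explicit `random.Random` instance keeps the noise realization reproducible across runs, which matters when several algorithms are compared on the same noisy data.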

Figure 9.

Ideal ISAR images under different SNRs. (a) SNR = 0 dB; (b) SNR = −5 dB; (c) SNR = −9 dB; (d) SNR = −13 dB.

Figure 10.

Imaging results of TG-I electromagnetic simulation data under different SNRs. (a–d) ISAR imaging results obtained by different algorithms, SNR = 0 dB; (e–h) ISAR imaging results obtained by different algorithms, SNR = −5 dB; (i–l) ISAR imaging results obtained by different algorithms, SNR = −9 dB; and (m–p) ISAR imaging results obtained by different algorithms, SNR = −13 dB.

Table 4.

Entropy of images under different SNRs.

Experiments show that the proposed algorithm can no longer compensate for the target motion once the SNR falls below −13 dB, and the compensated images become severely blurred. When the data contain very strong noise, the relationship between focus quality and image entropy is no longer consistent: the image entropy is determined almost entirely by the noise and is nearly independent of the joint motion compensation. In this case, a higher SNR gain can be obtained by using more pulses in the imaging process, after which the proposed algorithm can again generate well-focused images. Overall, the algorithm shows good robustness to noise.
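The benefit of using more pulses can be estimated with the standard coherent integration gain. The sketch below assumes ideal conditions (white noise and perfectly coherent summation after compensation), under which N pulses improve the SNR by 10·log10(N) dB; real gains are lower when residual motion errors remain. The function name and pulse counts are hypothetical.

```python
import math


def coherent_gain_db(num_pulses):
    """Ideal coherent integration gain in dB for num_pulses pulses."""
    if num_pulses < 1:
        raise ValueError("num_pulses must be at least 1")
    return 10.0 * math.log10(num_pulses)


# Doubling the number of pulses adds about 3 dB under these assumptions.
extra_gain = coherent_gain_db(512) - coherent_gain_db(256)
print(round(extra_gain, 2))
```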

4.2. Experiments Based on Measured Yak-42 Data

To verify the performance of the proposed algorithm on measured data, this section analyzes its performance using the Yak-42 measured data. Orbital motion from the different apertures and noise at different levels are added to the data, and different HSMC and TMC algorithms are executed. The Yak-42 aircraft dataset was recorded by a C-band ISAR experimental system with a center frequency of 5.52 GHz and a bandwidth of 400 MHz. The de-chirp sampling rate is 10 MHz.
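For orientation, the radar parameters above translate into a nominal range resolution and wavelength through the standard relations c/(2B) and c/f. The short sketch below evaluates them; the function names are hypothetical, and the result is the theoretical resolution without any windowing loss.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_resolution(bandwidth_hz):
    """Nominal slant-range resolution c / (2B) in metres."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


def wavelength(center_frequency_hz):
    """Carrier wavelength c / f in metres."""
    return SPEED_OF_LIGHT / center_frequency_hz


# Parameters of the Yak-42 dataset quoted above.
print(round(range_resolution(400e6), 3))  # about 0.375 m
print(round(wavelength(5.52e9), 4))       # about 0.0543 m
```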

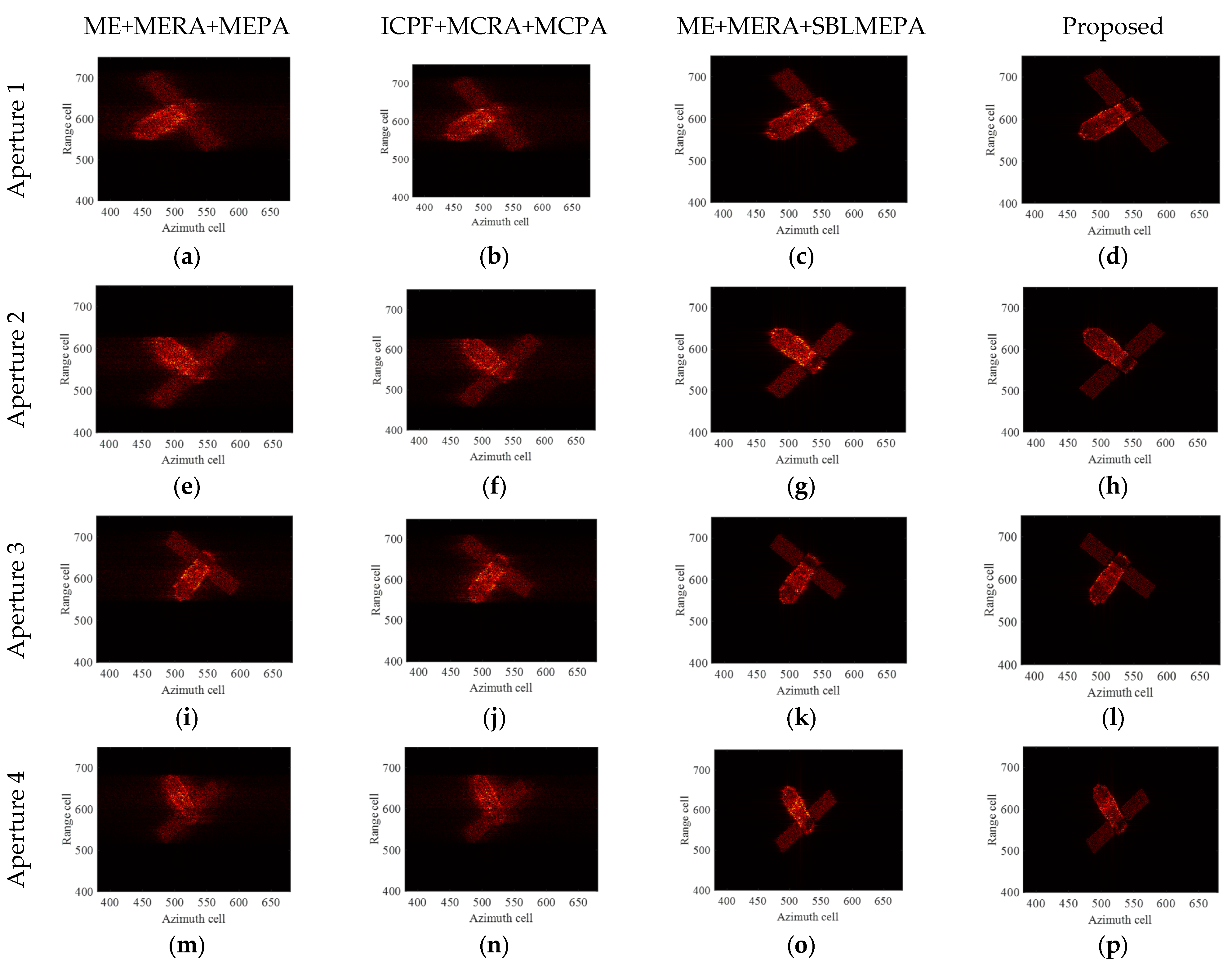

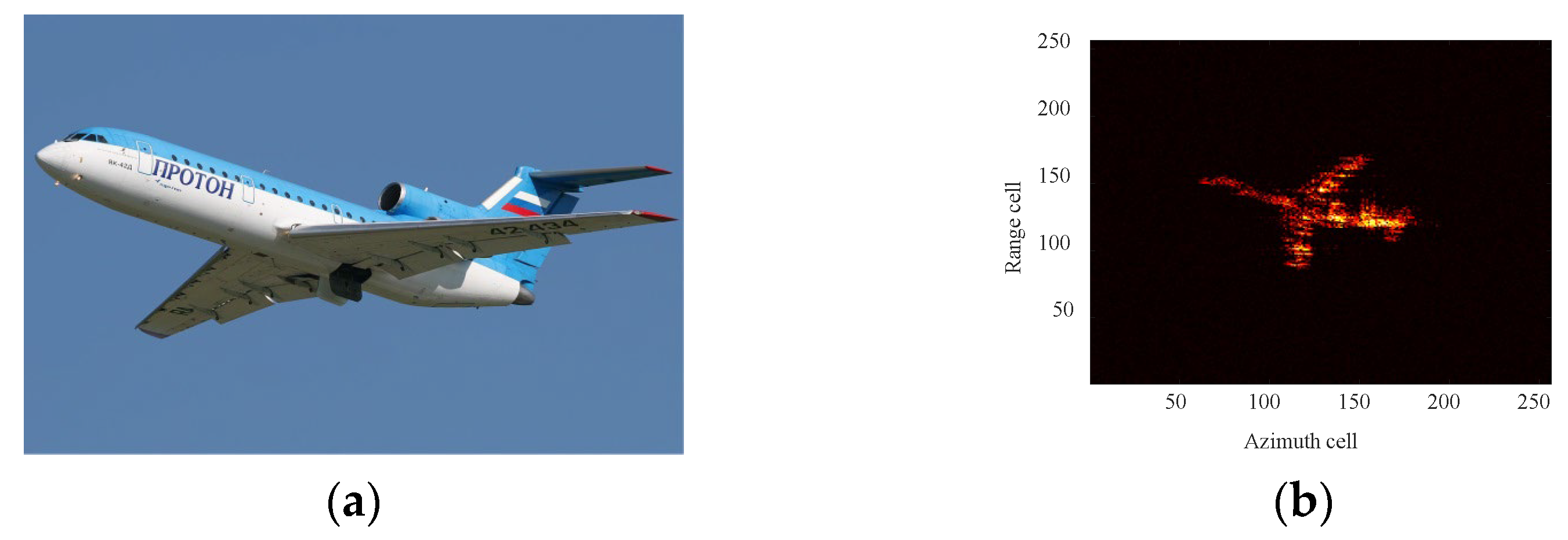

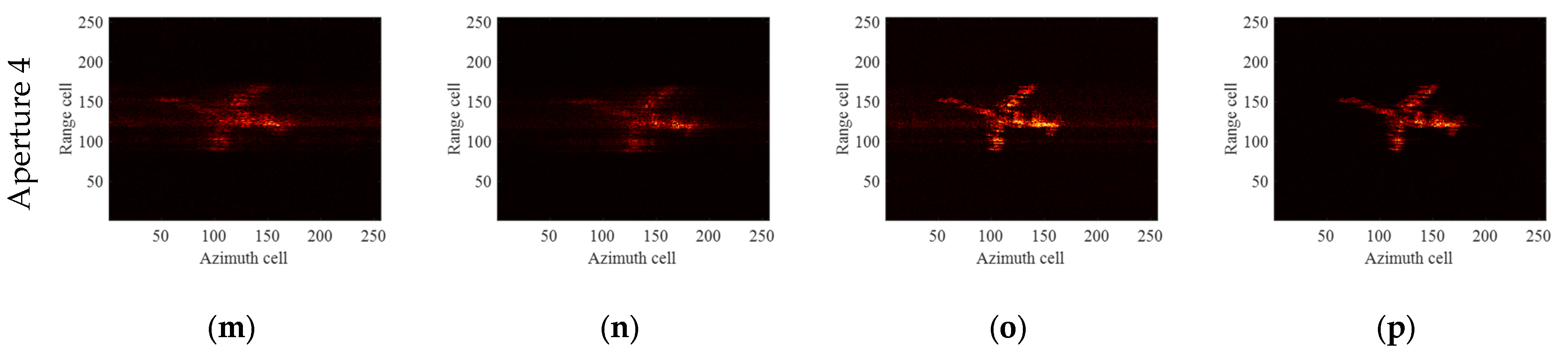

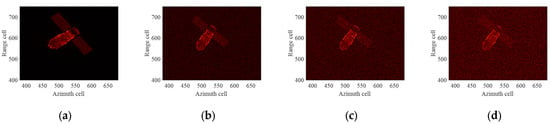

An optical image of the Yak-42 aircraft is shown in Figure 11a, and its ideal ISAR image is shown in Figure 11b. The velocity of the aircraft is small compared with that of space targets, so the effect of speed on the echo can essentially be ignored. To emulate the motion of a space target, the motions of the different imaging apertures from Table 2 were therefore incorporated into the raw radar echo of the Yak-42. As in the previous experiment, different algorithms are used for HSMC and TMC, and the corresponding imaging results are shown in Figure 12.

Figure 11.

Optical image and ideal ISAR image of the Yak-42 aircraft. (a) Optical image; (b) ISAR image.

Figure 12.

Imaging results of Yak-42 measured data under different motion conditions. (a–d) Imaging results by different algorithms of aperture 1; (e–h) imaging results by different algorithms of aperture 2; (i–l) imaging results by different algorithms of aperture 3; and (m–p) imaging results by different algorithms of aperture 4.

Figure 12 clearly shows that, regardless of which aperture motion is added to the Yak-42 measured data, the proposed algorithm obtains significantly clearer images than the ME+MERA+MEPA, ICPF+MCRA+MCPA, and ME+MERA+SBLMEPA algorithms. In contrast, the images obtained by the ME+MERA+MEPA and ICPF+MCRA+MCPA algorithms have poor focusing quality: they are defocused and contain many artifacts. The ME+MERA+SBLMEPA algorithm improves the focusing quality relative to these two algorithms, but a few artifacts and some localized defocusing remain. To better show the advantages of the proposed algorithm, Table 5 gives the image entropy after motion compensation using the different HSMC and TMC algorithms. The image entropy obtained by the proposed algorithm is the smallest, so it also performs best on the measured data.

Table 5.

Entropy of images acquired by different algorithms using Yak-42 measured data.

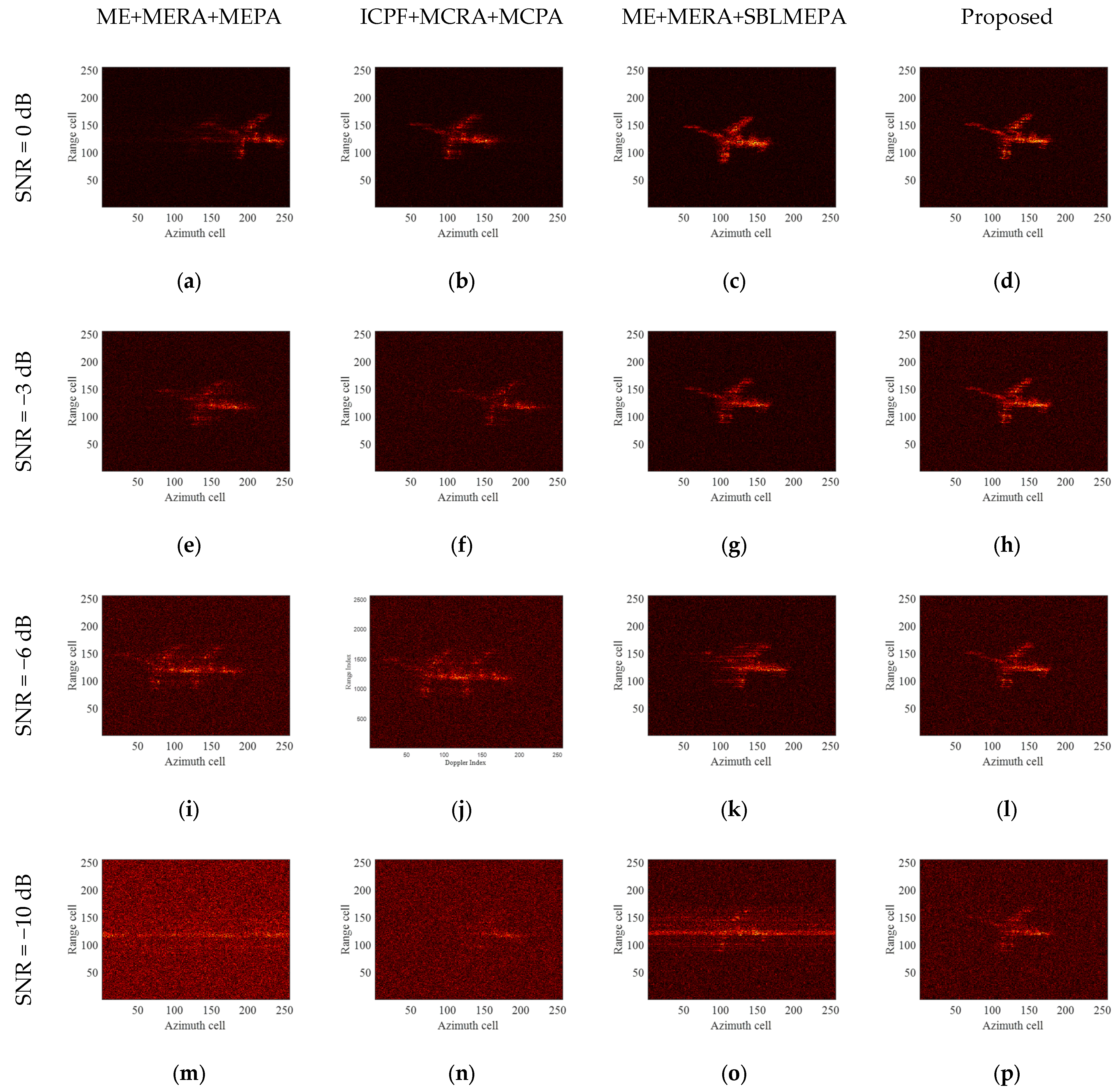

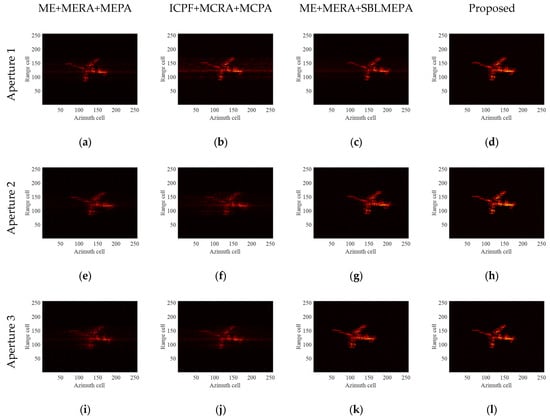

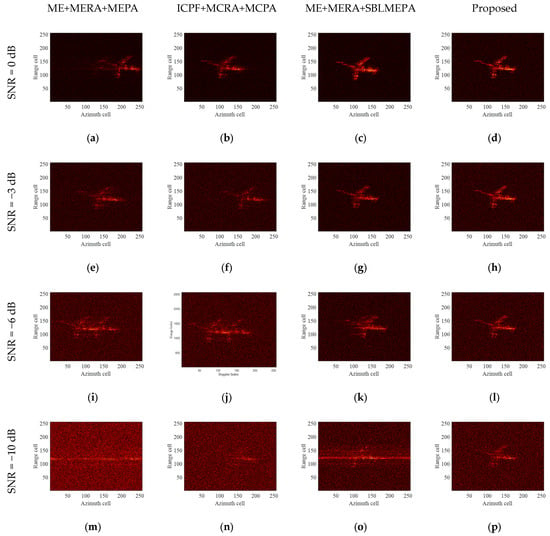

To verify the performance of the proposed algorithm under different noise levels, noise is added to the Yak-42 data to produce different SNRs, with the motion parameters of the target consistent with those of aperture 3. The ideal ISAR images under different SNRs are shown in Figure 13, and the imaging results obtained with the different HSMC and TMC algorithms are shown in Figure 14, where each column corresponds to one motion compensation algorithm. The comparison shows that the ME+MERA+MEPA and ICPF+MCRA+MCPA algorithms are essentially unable to form an image when the SNR is lower than −3 dB, because the Yak-42 measured data are more complex than the electromagnetic simulation data. The ME+MERA+SBLMEPA algorithm performs somewhat better on the Yak-42 measured data than the other two conventional algorithms, but it also fails to focus the image when the SNR is below −5 dB. In contrast, the proposed algorithm obtains a well-focused image at low SNR (no lower than −10 dB). The entropy of the compensated images under different SNRs is shown in Table 6, which confirms that the proposed method performs best.

Figure 13.

Ideal ISAR images under different SNRs. (a) SNR = 0 dB; (b) SNR = −3 dB; (c) SNR = −6 dB; (d) SNR = −10 dB.

Figure 14.

Imaging results of Yak-42 measured data under different SNRs. (a–d) ISAR imaging results obtained by different algorithms, SNR = 0 dB; (e–h) ISAR imaging results obtained by different algorithms, SNR = −3 dB; (i–l) ISAR imaging results obtained by different algorithms, SNR = −6 dB; and (m–p) ISAR imaging results obtained by different algorithms, SNR = −10 dB.

Table 6.

Entropy of images with different SNRs based on Yak-42 measured data.

The complexity of the algorithm is also a factor that needs to be considered in practical processing. Table 7 compares the operation time of the proposed algorithm with that of the other algorithms. The CPU time was measured in MATLAB R2021a on a personal computer equipped with an Intel Core i5-6200U 2.4 GHz processor and 16 GB of memory, and was averaged over 200 Monte Carlo simulations. Table 7 shows that the proposed algorithm takes longer than the other three algorithms. This is because the proposed algorithm accurately estimates the target motion parameters and the complexity of the RTH algorithm itself is high. However, a relatively long processing time is acceptable if it yields high-quality ISAR images rather than defocused images obtained in a shorter time. Moreover, the time consumption of the algorithm can be reduced further with higher computing performance.
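An averaged timing measurement of this kind can be sketched as follows. The harness is written in Python rather than MATLAB and is only an illustration of averaging CPU time over repeated runs; the function name and the placeholder workload are hypothetical and do not reproduce the measurements in Table 7.

```python
import time


def mean_cpu_time(func, runs=200):
    """Average CPU time in seconds of func() over a number of runs."""
    total = 0.0
    for _ in range(runs):
        start = time.process_time()
        func()
        total += time.process_time() - start
    return total / runs


def placeholder_workload():
    """Stand-in for one motion compensation run (hypothetical)."""
    return sum(k * k for k in range(10_000))


print(mean_cpu_time(placeholder_workload, runs=200))
```

Using `time.process_time` counts processor time of the current process only, so the average is less sensitive to other programs running on the same machine than a wall-clock measurement.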

Table 7.

Computation time comparison.

5. Conclusions

The high-speed motion of a space target stretches the HRRP and affects the accuracy of the subsequent TMC. Under low-SNR conditions, the residual error of high-speed motion may even cause traditional TMC algorithms to fail. This paper proposes a new parametric joint motion compensation algorithm for the ISAR imaging of space targets under low-SNR conditions. The algorithm compensates for high-speed motion and translational motion jointly, which reduces the influence of the residual high-speed motion error on TMC. The target motion in a CPI is modeled as a high-order polynomial, and a parameterized minimum entropy optimization model is established. By making full use of the TLE information and radar measurement information of the target, the RTH-NM algorithm quickly and accurately searches for the motion polynomial coefficients of the target, so as to realize joint compensation for the high-speed motion and translational motion of the target and to obtain high-quality ISAR images. The algorithm has good noise robustness and can accurately compensate for the high-speed motion and translational motion of the target under low-SNR conditions. Experiments on electromagnetic simulation data and measured data verify the effectiveness of the proposed algorithm. However, the algorithm is more complex than the traditional algorithms, and reducing this complexity is our next improvement. In addition, the performance of the proposed algorithm is limited under the conditions of target acceleration maneuvering and sparse apertures. Our future work will focus on these issues.
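The principle of parametric minimum-entropy compensation summarized above can be illustrated with a deliberately simplified one-dimensional toy problem. The sketch assumes a single scatterer in one range cell whose slow-time signal carries an unknown quadratic phase error, and it replaces the RTH-NM search with a plain grid search over one coefficient; all names and numerical values are hypothetical.

```python
import cmath
import math


def dft(samples):
    """Direct discrete Fourier transform (adequate for short toy signals)."""
    n = len(samples)
    return [sum(samples[k] * cmath.exp(-2j * math.pi * m * k / n)
                for k in range(n)) for m in range(n)]


def profile_entropy(spectrum):
    """Shannon entropy of the normalized power in a Doppler profile."""
    powers = [abs(v) ** 2 for v in spectrum]
    total = sum(powers)
    entropy = 0.0
    for power in powers:
        p = power / total
        if p > 0:
            entropy -= p * math.log(p)
    return entropy


def compensated_entropy(echo, times, coeff):
    """Remove a candidate quadratic phase, then measure the entropy."""
    corrected = [s * cmath.exp(-1j * coeff * t * t)
                 for s, t in zip(echo, times)]
    return profile_entropy(dft(corrected))


# Toy slow-time signal: one Doppler tone plus a quadratic phase error.
n = 64
times = [k / n - 0.5 for k in range(n)]
true_coeff = 40.0  # hypothetical phase-error coefficient (rad)
echo = [cmath.exp(1j * (2 * math.pi * 8 * k / n + true_coeff * t * t))
        for k, t in enumerate(times)]

# Grid search stands in for the RTH-NM optimizer (an assumption).
candidates = [2.0 * i for i in range(41)]
best_coeff = min(candidates,
                 key=lambda c: compensated_entropy(echo, times, c))
print(best_coeff)
```

The entropy is lowest when the candidate coefficient cancels the phase error, because the energy then collapses into a single Doppler bin. The full algorithm applies the same idea to a two-dimensional image and to several polynomial coefficients at once, which is why an efficient global search is needed in place of the grid.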

Author Contributions

Conceptualization, J.L. and C.Y.; methodology, J.L. and Y.Z.; software, J.L., C.Y. and C.X.; writing—original draft preparation, J.L. and Y.Z.; writing—review and editing, J.L., J.H., and P.L.; visualization, J.H. and P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available on request from the authors.

Conflicts of Interest

The authors declare no conflict of interest.



© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).