Analysis of Gait Kinematics in Smart Walker-Assisted Locomotion in Immersive Virtual Reality Scenario

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Materials

2.3. UFES vWalker

2.4. The 3D Motion Capture System

2.5. Immersive VR Scenario

2.6. Experimental Protocol

2.7. Variables

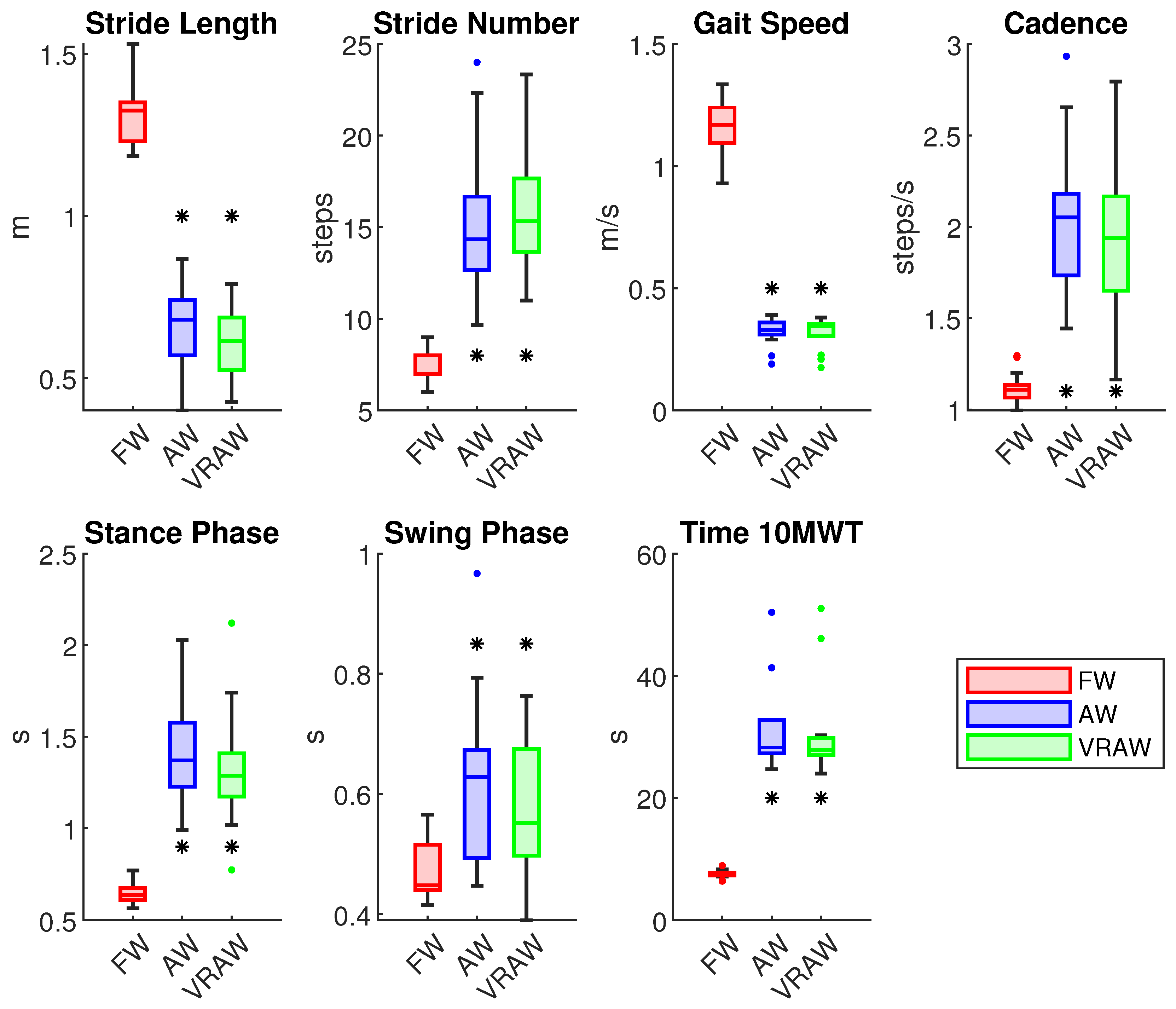

- Stride length (meters): the mean distance between two consecutive heel strikes of the same foot during the 10 MWT.

- Stride number: the number of steps taken during the 10 MWT.

- Gait speed (meters per second): the mean walking speed during the 10 MWT.

- Cadence (steps per second): the mean number of steps per second during the 10 MWT.

- Stance phase (seconds): the mean duration of the stance phase per gait cycle during the 10 MWT.

- Swing phase (seconds): the mean duration of the swing phase per gait cycle during the 10 MWT.

- Time (seconds): the time taken to complete the 10 MWT.
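
The variable definitions above can be illustrated with a short sketch. It assumes that heel-strike and toe-off times, plus the forward heel position at each heel strike, have already been extracted for one foot from the motion capture data; the `FootEvents` container and the `spatiotemporal` function are hypothetical names, not part of the study's software. Gait speed is approximated as the 10 m test distance divided by the completion time, which is one common reading of "mean walking speed" but an assumption here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class FootEvents:
    """Gait events for one foot during a single 10 MWT trial (hypothetical container)."""
    heel_strike_times: list[float]      # s, in chronological order
    toe_off_times: list[float]          # s, toe_off_times[i] falls between heel strikes i and i + 1
    heel_strike_positions: list[float]  # m, forward heel position at each heel strike


def spatiotemporal(foot: FootEvents, n_steps: int, test_time: float,
                   distance: float = 10.0) -> dict[str, float]:
    """Compute the spatiotemporal variables from pre-extracted gait events (sketch)."""
    hs_t = foot.heel_strike_times
    hs_x = foot.heel_strike_positions
    n_cycles = len(hs_t) - 1  # one gait cycle = heel strike to next heel strike of the same foot

    stride_lengths = [hs_x[i + 1] - hs_x[i] for i in range(n_cycles)]
    stance = [foot.toe_off_times[i] - hs_t[i] for i in range(n_cycles)]     # heel strike -> toe-off
    swing = [hs_t[i + 1] - foot.toe_off_times[i] for i in range(n_cycles)]  # toe-off -> next heel strike

    return {
        "stride_length_m": mean(stride_lengths),
        "stance_phase_s": mean(stance),
        "swing_phase_s": mean(swing),
        "gait_speed_m_s": distance / test_time,  # assumption: test distance over completion time
        "cadence_steps_s": n_steps / test_time,
        "time_s": test_time,
    }
```

For instance, with illustrative (not study) events `FootEvents([0.0, 1.1, 2.2, 3.3], [0.65, 1.75, 2.85], [0.0, 1.3, 2.6, 3.9])`, 14 steps and a 7.5 s trial, the function gives a stride length of 1.3 m, a stance phase of 0.65 s and a swing phase of 0.45 s.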

2.8. Statistical Analysis

3. Results

3.1. Spatiotemporal Parameters

3.2. Hip Joint

3.3. Knee Joint

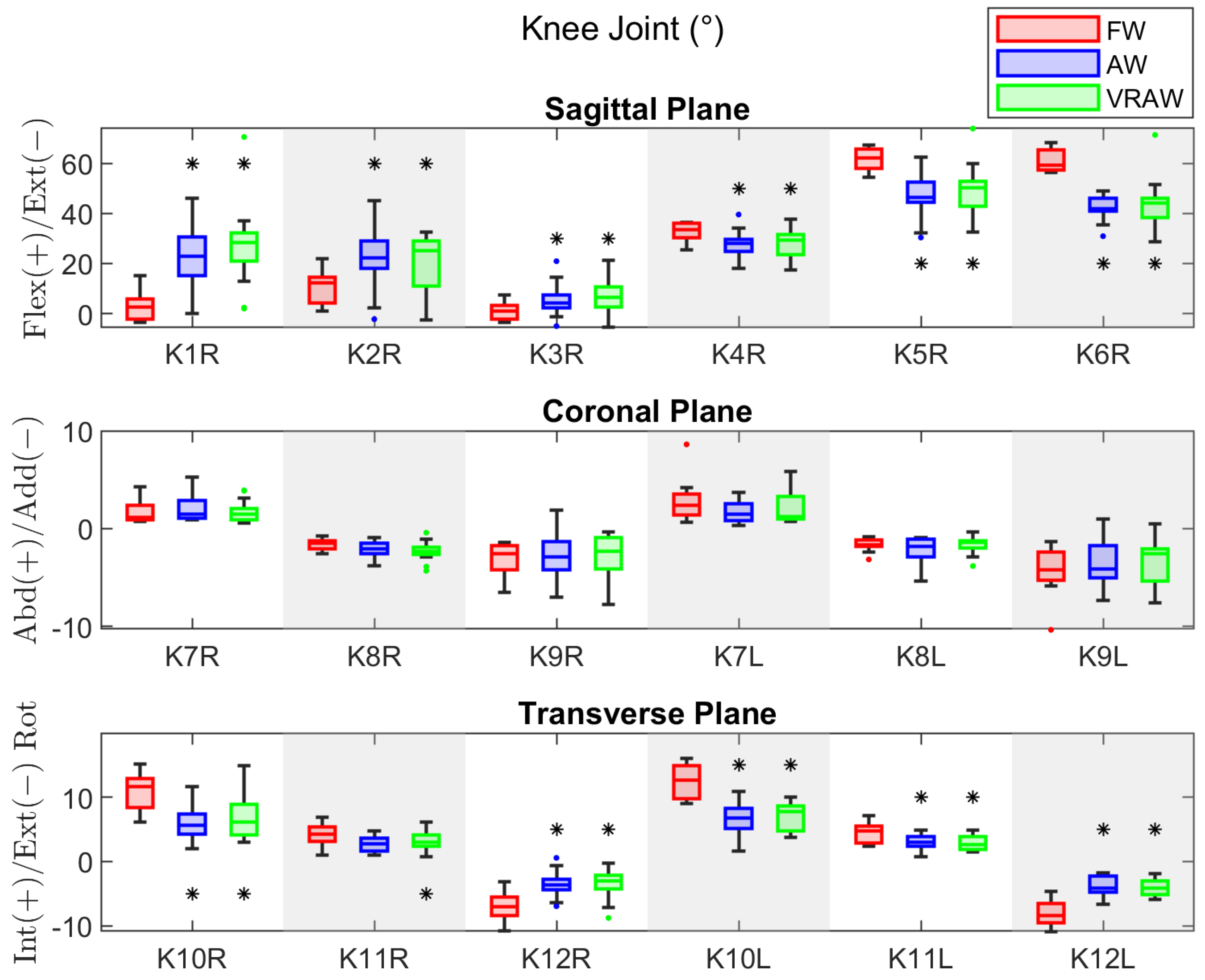

3.4. Ankle Joint

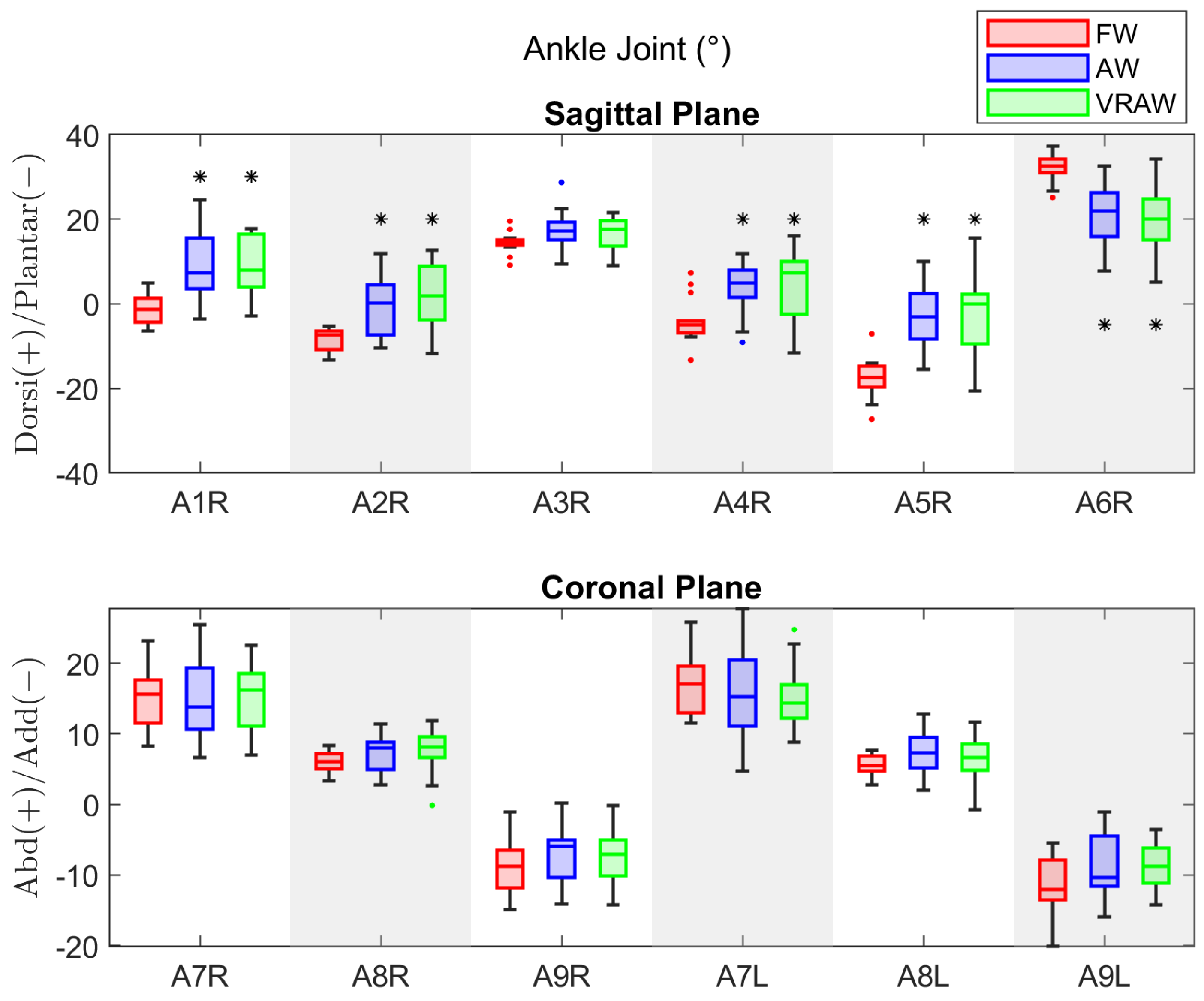

3.5. SEQ

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 10 MWT | 10-Meter Walk Test |
| AW | Smart Walker-assisted Gait |
| FW | Free Walking |
| HREI | Human–Robot–Environment Interaction |
| HRI | Human–Robot Interaction |
| LiDAR | Light Detection and Ranging |
| MC | Motion Capture |
| OC | Odometry and Control |
| ROS | Robot Operating System |
| SEQ | Suitability Evaluation Questionnaire for Virtual Rehabilitation Systems |
| SSQ | Simulator Sickness Questionnaire |
| SW | Smart Walker |
| VR | Virtual Reality |
| VRAW | Smart Walker-assisted Gait Plus VR Assistance |
| VRI | Virtual Reality Integration |
| WHO | World Health Organization |

Appendix A

| Parameters | FW Mean (SD) | FW Min | FW Max | AW Mean (SD) | AW Min | AW Max | VRAW Mean (SD) | VRAW Min | VRAW Max |
|---|---|---|---|---|---|---|---|---|---|
| Stride Length (m) | 1.32 (0.10) | 1.19 | 1.54 | 0.64 (0.11) | 0.44 | 0.80 | 0.67 (0.13) | 0.48 | 0.85 |
| Stride Number (steps) | 7.29 (0.80) | 6 | 9 | 16.48 (3.03) | 12 | 23 | 15.69 (3.93) | 11 | 24 |
| Gait Speed (m/s) | 1.14 (0.11) | 0.90 | 1.30 | 0.32 (0.07) | 0.17 | 0.38 | 0.33 (0.05) | 0.19 | 0.39 |
| Cadence (steps/s) | 1.12 (0.09) | 0.99 | 1.31 | 1.97 (0.42) | 1.21 | 2.72 | 2.08 (0.43) | 1.45 | 2.99 |
| Stance Phase (s) | 0.65 (0.07) | 0.57 | 0.78 | 1.37 (0.35) | 0.80 | 2.05 | 1.45 (0.33) | 0.99 | 1.95 |
| Swing Phase (s) | 0.47 (0.05) | 0.41 | 0.57 | 0.59 (0.12) | 0.40 | 0.80 | 0.63 (0.15) | 0.45 | 1.02 |
| Time 10 MWT (s) | 7.52 (0.65) | 6.35 | 8.90 | 30.61 (8.44) | 23.98 | 53.44 | 32.11 (8.71) | 24.70 | 50.38 |

| Parameter | F Statistic | p | Effect Size (η²) | Contrasts | p | Effect Size (d) |
|---|---|---|---|---|---|---|
| Stride Length | F (2, 26) = 239.05 | <0.001 | 0.89 | FW vs. AW | <0.001 | 4.64 |
| | | | | FW vs. VRAW | <0.001 | 4.17 |
| | | | | AW vs. VRAW | =1 | 0.45 |
| Stride Number | F (1.42, 18.2) = 50.80 * | <0.001 | 0.69 | FW vs. AW | <0.001 | 3.01 |
| | | | | FW vs. VRAW | <0.001 | 2.11 |
| | | | | AW vs. VRAW | =1 | 0.36 |
| Gait Speed | F (2, 26) = 963.49 | <0.001 | 0.96 | FW vs. AW | <0.001 | 8.94 |
| | | | | FW vs. VRAW | <0.001 | 9.67 |
| | | | | AW vs. VRAW | =1 | 0.12 |
| Cadence | F (2, 26) = 52.80 | <0.001 | 0.61 | FW vs. AW | <0.001 | 2.17 |
| | | | | FW vs. VRAW | <0.001 | 2.31 |
| | | | | AW vs. VRAW | =0.72 | 0.33 |
| Stance Phase | F (2, 26) = 55.27 | <0.001 | 0.63 | FW vs. AW | <0.001 | 2.19 |
| | | | | FW vs. VRAW | <0.001 | 2.42 |
| | | | | AW vs. VRAW | =0.96 | 0.28 |
| Swing Phase | F (1.32, 17.16) = 13.58 | <0.001 | 0.28 | FW vs. AW | =0.003 | 1.44 |
| | | | | FW vs. VRAW | =0.001 | 1.25 |
| | | | | AW vs. VRAW | =0.26 | 0.49 |
| Time 10 MWT | F (2, 26) = 67.39 | <0.001 | 0.73 | FW vs. AW | <0.001 | 2.79 |
| | | | | FW vs. VRAW | <0.001 | 2.96 |
| | | | | AW vs. VRAW | =1 | 0.15 |
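
The nominal degrees of freedom F (2, 26) in the spatiotemporal ANOVA are consistent with a one-way repeated-measures ANOVA over k = 3 conditions (FW, AW, VRAW) and n = 14 participants, since df_conditions = k − 1 = 2 and df_error = (k − 1)(n − 1) = 26. The sketch below, a hypothetical helper rather than the authors' analysis script, computes F from the standard sum-of-squares partition. The text does not state which eta-squared variant the effect-size column reports, so the function returns both partial and generalized η² (for a one-way within-subject design, the generalized form reduces to SS_conditions / SS_total). P-values are omitted; they would normally come from the F distribution in a statistics package such as SciPy.

```python
from statistics import mean


def rm_anova_oneway(data: list[list[float]]) -> dict[str, float]:
    """One-way repeated-measures ANOVA (sketch).

    data[i][j] is the value of participant i under condition j
    (e.g. columns FW, AW, VRAW). Assumes a complete, balanced design.
    """
    n = len(data)      # participants
    k = len(data[0])   # conditions
    grand = mean(v for row in data for v in row)
    cond_means = [mean(row[j] for row in data) for j in range(k)]
    subj_means = [mean(row) for row in data]

    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)  # between-participant variability
    ss_error = ss_total - ss_cond - ss_subj                   # condition x participant residual

    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return {
        "F": f_stat,
        "df_cond": df_cond,
        "df_error": df_error,
        "partial_eta_sq": ss_cond / (ss_cond + ss_error),
        "generalized_eta_sq": ss_cond / (ss_cond + ss_subj + ss_error),
    }
```

With the toy data `[[1, 2, 3], [2, 3, 4], [3, 4, 6]]` (three hypothetical participants), the function gives F ≈ 37 on (2, 4) degrees of freedom, partial η² ≈ 0.95 and generalized η² ≈ 0.49, which shows how far apart the two variants can be for the same data.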

| Parameters | Side | FW Mean (SD) | FW Min | FW Max | FW p | AW Mean (SD) | AW Min | AW Max | AW p | VRAW Mean (SD) | VRAW Min | VRAW Max | VRAW p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| H1 (°) | R | 28.23 (2.99) | 24.02 | 33.87 | =0.54 | 50.83 (7.42) | 38.77 | 70.11 | =0.89 | 49.70 (6.05) | 39.98 | 64.28 | =0.33 |
| | L | 27.85 (2.82) | 24.11 | 35.22 | | 50.70 (7.45) | 42.27 | 67.83 | | 50.61 (5.20) | 43.05 | 59.57 | |
| H2 (°) | R | 22.32 (3.41) | 16.90 | 29.26 | =0.77 | 45.28 (6.76) | 35.13 | 62.96 | =0.57 | 43.84 (6.33) | 35.78 | 59.57 | =0.73 |
| | L | 22.53 (3.10) | 18.25 | 30.42 | | 44.73 (6.06) | 37.59 | 57.36 | | 44.24 (5.00) | 33.91 | 53.49 | |
| H3 (°) | R | −8.42 (3.46) | −15.75 | −4.04 | =0.08 | 18.98 (8.99) | 6.82 | 35.94 | =0.99 | 19.46 (8.29) | 7.40 | 33.39 | =0.40 |
| | L | −7.51 (3.05) | −13.59 | −2.44 | | 19.22 (8.87) | 9.62 | 38.32 | | 18.72 (7.14) | 7.81 | 33.96 | |
| H4 (°) | R | −2.85 (3.25) | −9.06 | 2.19 | =0.16 | 24.85 (9.66) | 8.82 | 42.56 | =0.99 | 23.95 (8.17) | 12.03 | 38.52 | =0.58 |
| | L | −2.16 (3.49) | −8.30 | 4.86 | | 24.85 (9.38) | 14.37 | 44.08 | | 23.45 (8.03) | 13.53 | 42.28 | |
| H5 (°) | R | 32.79 (2.65) | 28.00 | 36.10 | =0.65 | 52.85 (8.03) | 39.58 | 71.96 | =0.69 | 50.71 (7.42) | 35.31 | 65.87 | =0.08 |
| | L | 32.58 (2.70) | 27.26 | 36.88 | | 53.20 (7.79) | 43.54 | 70.58 | | 52.40 (6.72) | 36.93 | 62.34 | |
| H6 (°) | R | 41.22 (4.04) | 34.75 | 49.02 | =0.06 | 34.45 (5.30) | 24.18 | 44.24 | =0.98 | 32.78 (6.05) | 19.97 | 41.56 | =0.71 |
| | L | 40.10 (3.28) | 35.15 | 45.55 | | 34.46 (4.17) | 27.28 | 41.78 | | 33.99 (6.23) | 20.10 | 42.14 | |
| H7 (°) | R | 9.41 (3.30) | 5.24 | 16.09 | =0.11 | 9.60 (2.65) | 4.93 | 14.46 | =0.75 | 9.81 (2.87) | 5.64 | 15.11 | =0.71 |
| | L | 10.50 (2.93) | 6.61 | 17.43 | | 9.71 (2.57) | 5.40 | 15.55 | | 9.60 (3.18) | 3.44 | 14.53 | |
| H8 (°) | R | −4.32 (1.75) | −9.98 | −3.87 | =0.85 | −8.47 (2.67) | −12.88 | −3.18 | =0.07 | −8.45 (2.91) | −11.55 | −0.35 | =0.23 |
| | L | −7.27 (2.11) | −10.56 | −3.71 | | −10.23 (2.01) | −13.15 | −6.20 | | −9.55 (4.94) | −13.99 | 6.18 | |
| H9 (°) | R | 2.05 (2.73) | −1.29 | 6.18 | =0.10 | 1.12 (4.20) | −5.29 | 7.52 | =0.12 | 1.93 (6.25) | −3.67 | 21.70 | =0.06 |
| | L | 3.23 (2.56) | −0.56 | 8.21 | | −0.54 (2.61) | −3.98 | 5.36 | | 0.34 (6.04) | −6.97 | 17.12 | |
| H10 (°) | R | 9.81 (4.24) | 4.14 | 18.67 | =0.27 | 7.58 (3.15) | 3.78 | 14.55 | =0.90 | 8.11 (3.24) | 3.25 | 15.97 | =0.90 |
| | L | 9.23 (3.84) | 2.71 | 15.65 | | 7.69 (2.74) | 3.90 | 13.35 | | 8.15 (3.56) | 2.18 | 13.54 | |
| H11 (°) | R | 7.47 (3.28) | 2.55 | 14.05 | =0.09 | 9.80 (4.49) | 1.11 | 17.27 | =0.50 | 9.24 (4.87) | 0.51 | 18.67 | =0.27 |
| | L | 6.01 (2.77) | 2.24 | 13.23 | | 9.12 (6.04) | 1.10 | 21.69 | | 10.11 (5.69) | 0.89 | 19.48 | |
| H12 (°) | R | −2.34 (4.65) | −13.61 | 6.15 | =0.35 | 2.22 (4.63) | −6.07 | 10.00 | =0.34 | 1.50 (5.12) | −8.70 | 10.88 | =0.45 |
| | L | −3.21 (4.24) | −11.17 | 2.02 | | 1.44 (5.12) | −5.41 | 13.72 | | 1.16 (5.07) | −7.18 | 9.63 | |
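
Discrete joint-angle parameters such as H1–H12 are values taken from hip angle curves over the gait cycle; their individual definitions are not spelled out in the text reproduced here. A common preprocessing step for such parameters, assumed here rather than taken from the source, is to resample each cycle (heel strike to the next heel strike of the same foot) onto 0–100% of the gait cycle so that peaks and event values can be compared across participants and conditions. The sketch uses linear interpolation; `normalize_gait_cycle` and `peak` are hypothetical helper names.

```python
from bisect import bisect_right


def normalize_gait_cycle(times: list[float], angles: list[float],
                         t_start: float, t_end: float, n_points: int = 101) -> list[float]:
    """Linearly resample a joint-angle curve between two heel strikes to n_points samples.

    Assumes `times` is strictly increasing and covers [t_start, t_end].
    Sample i corresponds to 100 * i / (n_points - 1) percent of the gait cycle.
    """
    curve = []
    for i in range(n_points):
        t = t_start + (t_end - t_start) * i / (n_points - 1)
        # Index j of the sample interval [times[j], times[j + 1]] that contains t.
        j = min(max(bisect_right(times, t) - 1, 0), len(times) - 2)
        w = (t - times[j]) / (times[j + 1] - times[j])
        curve.append(angles[j] + w * (angles[j + 1] - angles[j]))
    return curve


def peak(curve: list[float]) -> tuple[float, float]:
    """Return (maximum angle, percent of gait cycle at which it occurs)."""
    i = max(range(len(curve)), key=curve.__getitem__)
    return curve[i], 100 * i / (len(curve) - 1)
```

`peak(curve)` then yields a peak angle and its timing as a percentage of the gait cycle; minima or values at specific gait events can be read from the normalized curve in the same way.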

| Parameter | Side | F Statistic | p | Effect Size (η²) | Contrasts | p (R) | Effect Size (d) | p (L) | Effect Size (d) |
|---|---|---|---|---|---|---|---|---|---|
| H1 | | | | | FW vs. AW | <0.001 | 3.23 | <0.001 | 2.75 |
| | R | F (2, 26) = 116.47 | <0.001 | 0.77 | FW vs. VRAW | <0.001 | 3.34 | <0.001 | 4.07 |
| | L | F (2, 26) = 103.68 | <0.001 | 0.80 | AW vs. VRAW | =1 | 0.22 | =1 | 0.01 |
| H2 | | | | | FW vs. AW | <0.001 | 4.44 | <0.001 | 3.50 |
| | R | F (2, 26) = 152.21 | <0.001 | 0.79 | FW vs. VRAW | <0.001 | 3.34 | <0.001 | 3.95 |
| | L | F (2, 26) = 129.53 | <0.001 | 0.83 | AW vs. VRAW | =1 | 0.30 | =1 | 0.08 |
| H3 | | | | | FW vs. AW | <0.001 | 3.61 | <0.001 | 3.40 |
| | R | F (2, 26) = 166.04 | <0.001 | 0.77 | FW vs. VRAW | <0.001 | 4.40 | <0.001 | 4.30 |
| | L | F (2, 26) = 161.35 | <0.001 | 0.78 | AW vs. VRAW | =1 | 0.08 | =1 | 0.10 |
| H4 | | | | | FW vs. AW | <0.001 | 3.34 | <0.001 | 3.31 |
| | R | F (1.7, 22.1) = 120.51 * | <0.001 | 0.77 | FW vs. VRAW | <0.001 | 4.04 | <0.001 | 3.40 |
| | L | F (1.82, 23.92) = 110.34 * | <0.001 | 0.75 | AW vs. VRAW | =1 | 0.15 | =1 | 0.22 |
| H5 | | | | | FW vs. AW | <0.001 | 2.64 | <0.001 | 2.51 |
| | R | F (2, 26) = 66.51 | <0.001 | 0.67 | FW vs. VRAW | <0.001 | 2.25 | <0.001 | 2.83 |
| | L | F (2, 26) = 64.56 | <0.001 | 0.72 | AW vs. VRAW | =0.63 | 0.35 | =1 | 0.10 |
| H6 | | | | | FW vs. AW | <0.001 | 1.82 | <0.001 | 1.81 |
| | R | F (2, 26) = 26.67 | <0.001 | 0.35 | FW vs. VRAW | <0.001 | 1.59 | =0.01 | 0.95 |
| | L | F (1.34, 17.42) = 8.54 * | =0.001 | 0.27 | AW vs. VRAW | =0.59 | 0.36 | =1 | 0.09 |
| H7 | | | | | FW vs. AW | =1 | 0.09 | =1 | 0.25 |
| | R | F (1.24, 16.12) = 0.12 * | =0.70 | 0.003 | FW vs. VRAW | =1 | 0.12 | =1 | 0.20 |
| | L | F (1.3, 16.9) = 0.39 * | =0.48 | 0.02 | AW vs. VRAW | =1 | 0.12 | =1 | 0.04 |
| H8 | | | | | FW vs. AW | =0.46 | 0.40 | =0.40 | 0.47 |
| | R | F (2.26, 16.38) = 1.99 | =0.15 | 0.04 | FW vs. VRAW | =0.28 | 0.40 | =0.28 | 0.48 |
| | L | F (1.16, 15.08) = 1.05 * | =0.21 | 0.11 | AW vs. VRAW | =1 | 0.001 | =1 | 0.12 |
| H9 | | | | | FW vs. AW | =0.58 | 0.36 | =0.46 | 1.76 |
| | R | F (1.26, 16.38) = 0.22 | =0.61 | 0.001 | FW vs. VRAW | =1 | 0.02 | =0.28 | 0.48 |
| | L | F (1.16, 15.08) = 0.96 * | =0.30 | 0.09 | AW vs. VRAW | =1 | 0.17 | =1 | 0.17 |
| H10 | | | | | FW vs. AW | =0.38 | 0.44 | =0.64 | 0.35 |
| | R | F (2, 26) = 1.96 | =0.16 | 0.07 | FW vs. VRAW | =0.57 | 0.37 | =1 | 0.17 |
| | L | F (2, 26) = 0.69 | =0.51 | 0.03 | AW vs. VRAW | =1 | 0.15 | =1 | 0.10 |
| H11 | | | | | FW vs. AW | =0.13 | 0.60 | =0.16 | 0.56 |
| | R | F (2, 26) = 2.28 | =0.12 | 0.06 | FW vs. VRAW | =0.59 | 0.36 | =0.07 | 0.68 |
| | L | F (2, 26) = 4.02 | =0.03 | 0.11 | AW vs. VRAW | =1 | 0.13 | =1 | 0.18 |
| H12 | | | | | FW vs. AW | =0.005 | 1.04 | =0.015 | 0.89 |
| | R | F (2, 26) = 9.17 | <0.001 | 0.15 | FW vs. VRAW | =0.017 | 0.88 | =0.008 | 0.98 |
| | L | F (2, 26) = 9.58 | <0.001 | 0.17 | AW vs. VRAW | =1 | 0.17 | =1 | 0.07 |
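
Several F statistics in the hip ANOVA are marked with an asterisk and carry fractional degrees of freedom, for example F (1.7, 22.1) for H4 (R). The footnote explaining the asterisk is not preserved here, but such values are what a sphericity correction produces: multiplying the nominal (2, 26) by ε = 0.85 gives (1.7, 22.1). The sketch below estimates the Greenhouse–Geisser ε from the covariance matrix of the conditions; treating the correction as Greenhouse–Geisser (rather than, say, Huynh–Feldt) is an assumption, and the helper names are hypothetical.

```python
from statistics import mean


def covariance_matrix(data: list[list[float]]) -> list[list[float]]:
    """Sample covariance between conditions (columns); rows are participants."""
    n, k = len(data), len(data[0])
    col_means = [mean(row[j] for row in data) for j in range(k)]
    return [[sum((row[i] - col_means[i]) * (row[j] - col_means[j]) for row in data) / (n - 1)
             for j in range(k)] for i in range(k)]


def greenhouse_geisser_epsilon(data: list[list[float]]) -> float:
    """Greenhouse-Geisser epsilon via the double-centred covariance matrix (sketch)."""
    s = covariance_matrix(data)
    k = len(s)
    row_means = [mean(r) for r in s]  # s is symmetric, so column means equal row means
    grand = mean(row_means)
    d = [[s[i][j] - row_means[i] - row_means[j] + grand for j in range(k)] for i in range(k)]
    trace = sum(d[i][i] for i in range(k))
    sum_sq = sum(x * x for r in d for x in r)  # equals trace(d @ d) because d is symmetric
    return trace ** 2 / ((k - 1) * sum_sq)


def corrected_df(eps: float, k: int, n: int) -> tuple[float, float]:
    """Scale the nominal (k - 1, (k - 1)(n - 1)) degrees of freedom by epsilon."""
    return eps * (k - 1), eps * (k - 1) * (n - 1)
```

For perfectly spherical data ε = 1 and the nominal degrees of freedom are kept; the lower bound is 1/(k − 1), i.e. 0.5 for three conditions. `corrected_df(0.85, 3, 14)` returns approximately (1.7, 22.1).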

| Parameters | Side | FW Mean (SD) | FW Min | FW Max | FW p | AW Mean (SD) | AW Min | AW Max | AW p | VRAW Mean (SD) | VRAW Min | VRAW Max | VRAW p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| K1 (°) | R | 2.86 (5.34) | −3.67 | 15.09 | =0.11 | 26.89 (16.57) | 2.01 | 70.49 | =0.35 * | 22.79 (13.31) | −0.03 | 46.05 | =0.88 |
| | L | 3.81 (4.85) | −4.34 | 14.78 | | 26.33 (14.81) | 1.29 | 63.51 | | 22.97 (13.71) | −0.23 | 45.54 | |
| K2 (°) | R | 10.43 (6.43) | 1.13 | 22.01 | =0.35 * | 20.39 (11.55) | −2.50 | 32.42 | =0.29 * | 21.89 (11.66) | −2.23 | 44.93 | =0.67 |
| | L | 11.81 (5.23) | −1.24 | 18.04 | | 19.53 (9.76) | 2.24 | 30.13 | | 21.32 (11.97) | 1.45 | 44.08 | |
| K3 (°) | R | 0.95 (3.44) | −3.67 | 7.45 | =0.06 | 6.68 (6.67) | −5.60 | 21.35 | =0.86 | 5.54 (6.50) | −5.01 | 20.91 | =0.74 |
| | L | 2.03 (3.20) | −4.69 | 6.86 | | 6.87 (6.30) | −1.06 | 23.33 | | 5.76 (5.89) | −2.34 | 20.35 | |
| K4 (°) | R | 32.73 (3.34) | 25.30 | 36.31 | =0.35 | 28.22 (6.02) | 17.41 | 37.58 | =0.84 | 27.48 (5.81) | 17.96 | 39.53 | =0.70 |
| | L | 33.26 (4.00) | 22.48 | 39.58 | | 28.44 (4.74) | 21.03 | 38.72 | | 27.10 (4.88) | 17.79 | 37.50 | |
| K5 (°) | R | 61.87 (4.35) | 54.25 | 67.43 | =0.39 * | 49.62 (10.78) | 32.46 | 73.87 | =0.04 | 47.67 (9.09) | 30.37 | 62.33 | =0.03 |
| | L | 62.59 (4.06) | 56.80 | 68.57 | | 52.18 (8.25) | 36.84 | 68.25 | | 50.08 (7.78) | 34.21 | 60.35 | |
| K6 (°) | R | 60.92 (4.13) | 56.42 | 68.17 | =0.63 | 44.42 (9.66) | 28.80 | 71.32 | =0.03 * | 42.42 (4.98) | 30.89 | 48.91 | =0.02 |
| | L | 60.56 (3.26) | 54.99 | 67.25 | | 46.96 (8.28) | 31.62 | 67.25 | | 44.43 (5.83) | 32.74 | 51.51 | |
| K7 (°) | R | 1.67 (1.16) | 0.71 | 4.25 | =0.02 * | 1.76 (1.13) | 0.59 | 3.92 | =0.85 | 2.08 (1.28) | 0.89 | 5.27 | =0.26 * |
| | L | 2.85 (2.04) | 0.65 | 8.61 | | 2.08 (1.51) | 0.77 | 5.84 | | 1.71 (1.11) | 0.30 | 3.69 | |
| K8 (°) | R | −1.59 (0.56) | −2.59 | −0.73 | =0.72 | −2.30 (1.00) | −4.31 | −0.40 | =0.09 | −2.13 (0.87) | −3.82 | −0.92 | =0.69 |
| | L | −1.65 (0.59) | −3.14 | −0.78 | | −1.66 (0.87) | −3.81 | −0.36 | | −2.24 (1.33) | −5.31 | −0.89 | |
| K9 (°) | R | −2.92 (1.46) | −6.53 | −1.40 | =0.05 * | −3.01 (2.36) | −7.76 | −0.33 | =0.65 | −2.74 (2.51) | −7.02 | 1.87 | =0.38 |
| | L | −4.20 (2.32) | −10.33 | −1.30 | | −3.38 (2.34) | −7.59 | 0.52 | | −3.46 (2.52) | −7.33 | 1.00 | |
| K10 (°) | R | 11.16 (2.82) | 6.17 | 15.18 | =0.04 | 6.74 (3.21) | 2.98 | 14.90 | =0.79 | 6.04 (2.74) | 2.01 | 11.70 | =0.20 |
| | L | 12.53 (2.55) | 9.03 | 16.02 | | 6.96 (2.20) | 3.78 | 10.01 | | 6.88 (2.51) | 1.69 | 10.91 | |
| K11 (°) | R | 4.14 (1.66) | 0.94 | 6.87 | =0.44 | 3.16 (1.41) | 0.73 | 6.13 | =0.60 | 2.64 (1.18) | 0.95 | 4.74 | =0.13 |
| | L | 4.59 (1.56) | 2.35 | 7.15 | | 2.95 (1.19) | 1.56 | 4.91 | | 3.08 (1.23) | 0.72 | 4.88 | |
| K12 (°) | R | −7.02 (2.06) | −10.98 | −3.09 | =0.11 | −3.57 (2.34) | −8.77 | −0.29 | =0.49 | −3.40 (2.05) | −6.95 | 0.57 | =0.29 |
| | L | −7.94 (2.03) | −10.95 | −4.71 | | −4.01 (1.33) | −5.94 | −1.92 | | −4.00 (1.53) | −6.69 | −1.78 | |

| Parameter | Side | F Statistic | p | Effect Size (η²) | Contrasts | p (R) | Effect Size (d) | p (L) | Effect Size (d) |
|---|---|---|---|---|---|---|---|---|---|
| K1 | | | | | FW vs. AW | <0.001 | 1.42 | <0.001 | 1.43 |
| | R | F (2, 26) = 23.33 | <0.001 | 0.43 | FW vs. VRAW | <0.001 | 1.44 | <0.001 | 4.34 |
| | L | F (2, 26) = 23.37 | <0.001 | 0.43 | AW vs. VRAW | =0.55 | 0.38 | =0.54 | 0.38 |
| K2 | | | | | FW vs. AW | =0.016 | 0.89 | <0.001 | 0.85 |
| | R | F (2, 26) = 9.78 | <0.001 | 0.21 | FW vs. VRAW | =0.005 | 1.06 | <0.001 | 0.90 |
| | L | F (2, 26) = 129.53 | <0.001 | 0.17 | AW vs. VRAW | =1 | 0.16 | =1 | 0.15 |
| K3 | | | | | FW vs. AW | =0.006 | 1.02 | =0.03 | 0.78 |
| | R | F (2, 26) = 8.95 | =0.001 | 0.17 | FW vs. VRAW | =0.04 | 0.78 | =0.05 | 0.73 |
| | L | F (2, 26) = 5.69 | =0.008 | 0.14 | AW vs. VRAW | =1 | 0.25 | =1 | 0.19 |
| K4 | | | | | FW vs. AW | =0.004 | 1.07 | =0.003 | 1.09 |
| | R | F (2, 26) = 14.45 | <0.001 | 0.18 | FW vs. VRAW | =0.001 | 1.24 | =0.002 | 1.17 |
| | L | F (2, 26) = 15.31 | <0.001 | 0.27 | AW vs. VRAW | =1 | 0.22 | =0.43 | 0.42 |
| K5 | | | | | FW vs. AW | =0.002 | 1.23 | <0.001 | 1.56 |
| | R | F (2, 26) = 19.83 | <0.001 | 0.37 | FW vs. VRAW | <0.001 | 1.72 | <0.001 | 1.97 |
| | L | F (2, 26) = 28.67 | <0.001 | 0.40 | AW vs. VRAW | =1 | 0.21 | =0.81 | 0.31 |
| K6 | | | | | FW vs. AW | <0.001 | 1.57 | <0.001 | 1.46 |
| | R | F (2, 26) = 30.09 | <0.001 | 0.62 | FW vs. VRAW | <0.001 | 2.67 | <0.001 | 2.86 |
| | L | F (2, 26) = 29.27 | =0.001 | 0.59 | AW vs. VRAW | =1 | 0.20 | =1 | 0.25 |
| K7 | | | | | FW vs. AW | =1 | 0.08 | =0.13 | 0.59 |
| | R | F (2, 26) = 0.77 | =0.47 | 0.02 | FW vs. VRAW | =0.84 | 0.30 | =0.09 | 0.64 |
| | L | F (2, 26) = 4.55 | =0.02 | 0.08 | AW vs. VRAW | =1 | 0.22 | =0.82 | 0.30 |
| K8 | | | | | FW vs. AW | =0.11 | 0.61 | =1 | 0.02 |
| | R | F (2, 26) = 3.13 | =0.06 | 0.12 | FW vs. VRAW | =0.20 | 0.53 | =0.25 | 0.50 |
| | L | F (1.4, 18.2) = 1.75 * | =0.09 | 0.08 | AW vs. VRAW | =1 | 0.14 | =0.40 | 0.42 |
| K9 | | | | | FW vs. AW | =1 | 0.05 | =0.28 | 0.48 |
| | R | F (2, 26) = 0.14 | =0.86 | 0.01 | FW vs. VRAW | =1 | 0.09 | =0.87 | 0.29 |
| | L | F (2, 26) = 1.15 | =0.33 | 0.02 | AW vs. VRAW | =1 | 0.14 | =1 | 0.03 |
| K10 | | | | | FW vs. AW | <0.001 | 1.23 | <0.001 | 2.01 |
| | R | F (2, 26) = 21.73 | <0.001 | 0.39 | FW vs. VRAW | <0.001 | 1.84 | <0.001 | 2.55 |
| | L | F (2, 26) = 54.85 | <0.001 | 0.56 | AW vs. VRAW | =1 | 0.23 | =1 | 0.03 |
| K11 | | | | | FW vs. AW | =0.18 | 0.54 | =0.01 | 0.94 |
| | R | F (2, 26) = 6.30 | =0.005 | 0.17 | FW vs. VRAW | =0.01 | 0.93 | =0.01 | 0.90 |
| | L | F (2, 26) = 4.02 | <0.001 | 0.25 | AW vs. VRAW | =0.55 | 0.37 | =1 | 0.11 |
| K12 | | | | | FW vs. AW | <0.001 | 1.32 | <0.001 | 1.97 |
| | R | F (2, 26) = 49.43 | <0.001 | 0.39 | FW vs. VRAW | <0.001 | 1.87 | <0.001 | 2.25 |
| | L | F (2, 26) = 49.43 | <0.001 | 0.58 | AW vs. VRAW | =1 | 0.07 | =1 | 0.003 |
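
Many post hoc p-values in these tables are reported as exactly 1, which is consistent with a Bonferroni adjustment: each raw p-value is multiplied by the number of pairwise contrasts (three here) and capped at 1. The sketch below applies that adjustment and computes a paired effect size for each contrast. The text does not specify how Cohen's d was computed, so d_z (mean of the paired differences divided by their standard deviation) is used as an assumption; raw p-values, for example from paired t-tests run in a statistics package, are taken as input rather than recomputed. All function names are hypothetical.

```python
from itertools import combinations
from statistics import mean, stdev


def bonferroni(p_values: list[float]) -> list[float]:
    """Bonferroni adjustment: multiply by the number of tests and cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def cohens_dz(x: list[float], y: list[float]) -> float:
    """Paired effect size d_z = |mean(x - y)| / SD(x - y) (assumed variant of Cohen's d)."""
    diffs = [a - b for a, b in zip(x, y)]
    return abs(mean(diffs)) / stdev(diffs)


def pairwise_contrasts(conditions: dict[str, list[float]],
                       raw_p: dict[tuple[str, str], float]) -> dict[tuple[str, str], tuple[float, float]]:
    """Return {(A, B): (Bonferroni-adjusted p, d_z)} for every pair of conditions.

    `raw_p` must hold one unadjusted p-value per pair, keyed in the order
    that itertools.combinations yields pairs from the keys of `conditions`.
    """
    pairs = list(combinations(conditions, 2))  # e.g. (FW, AW), (FW, VRAW), (AW, VRAW)
    adjusted = bonferroni([raw_p[pair] for pair in pairs])
    return {pair: (p_adj, cohens_dz(conditions[pair[0]], conditions[pair[1]]))
            for pair, p_adj in zip(pairs, adjusted)}
```

`itertools.combinations` follows the insertion order of the dictionary keys, so passing the conditions in the order FW, AW, VRAW yields the same contrast order as the tables.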

| Parameters | Side | FW Mean (SD) | FW Min | FW Max | FW p | AW Mean (SD) | AW Min | AW Max | AW p | VRAW Mean (SD) | VRAW Min | VRAW Max | VRAW p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 (°) | R | −1.24 (3.44) | −6.34 | 4.92 | =0.43 | 8.83 (7.14) | −2.89 | 17.73 | =0.97 | 9.14 (7.67) | −3.63 | 24.60 | =0.97 |
| | L | −0.64 (4.30) | −8.95 | 6.54 | | 8.85 (6.60) | 0.13 | 17.90 | | 8.12 (7.23) | −1.07 | 18.59 | |
| A2 (°) | R | −8.34 (2.68) | −13.27 | −5.29 | =0.22 | 2.00 (7.44) | −11.73 | 12.70 | =0.98 | −0.60 (7.26) | −10.37 | 11.83 | =0.98 |
| | L | −7.37 (2.67) | −13.73 | −2.13 | | 2.03 (6.69) | −12.26 | 12.88 | | 0.18 (6.96) | −14.36 | 7.72 | |
| A3 (°) | R | 14.45 (2.47) | 9.17 | 19.54 | =0.007 | 16.85 (3.83) | 9.02 | 21.50 | =0.79 | 17.55 (4.48) | 9.46 | 28.69 | =0.79 |
| | L | 15.52 (2.43) | 12.60 | 22.06 | | 17.06 (3.70) | 10.89 | 21.69 | | 16.45 (3.72) | 11.09 | 21.03 | |
| A4 (°) | R | −3.95 (5.41) | −13.27 | 7.36 | =0.80 | 4.52 (8.45) | −11.59 | 16.12 | =0.80 | 3.85 (6.16) | −9.10 | 11.89 | =0.91 |
| | L | −4.15 (5.06) | −10.10 | 7.46 | | 4.72 (6.32) | −11.10 | 12.08 | | 2.16 (6.43) | −12.26 | 8.92 | |
| A5 (°) | R | −17.73 (4.78) | −27.26 | −7.10 | =0.99 | −3.02 (9.78) | −20.63 | 15.50 | =0.99 | −3.61 (7.53) | −15.48 | 9.98 | =0.44 |
| | L | −17.73 (6.38) | −27.90 | −3.29 | | −4.54 (8.70) | −21.69 | 9.15 | | −6.31 (8.35) | −22.46 | 3.47 | |
| A6 (°) | R | 32.18 (3.48) | 25.10 | 37.27 | =0.28 | 20.29 (8.04) | 5.10 | 34.23 | =0.28 | 21.47 (6.86) | 7.74 | 32.60 | =0.26 |
| | L | 33.26 (4.71) | 25.52 | 41.89 | | 22.07 (7.78) | 9.31 | 33.79 | | 23.05 (6.73) | 14.23 | 36.35 | |
| A7 (°) | R | 15.10 (4.27) | 8.28 | 23.18 | =0.22 | 14.91 (4.71) | 6.99 | 22.53 | =0.22 | 14.55 (5.74) | 6.66 | 25.41 | =0.87 |
| | L | 16.95 (4.18) | 11.51 | 25.84 | | 15.08 (4.56) | 8.83 | 24.74 | | 15.64 (6.99) | 4.69 | 27.77 | |
| A8 (°) | R | 6.08 (1.29) | 3.35 | 8.30 | =0.30 | 7.79 (3.30) | −0.12 | 11.87 | =0.29 | 7.37 (2.71) | 2.84 | 11.43 | =0.19 |
| | L | 5.62 (1.36) | 2.83 | 7.69 | | 6.54 (3.02) | −0.72 | 11.68 | | 7.58 (2.96) | 2.05 | 12.80 | |
| A9 (°) | R | −9.02 (3.94) | −14.88 | −1.10 | =0.15 | −7.11 (3.86) | −14.21 | −0.17 | =0.15 | −7.17 (4.04) | −14.09 | 0.24 | =0.19 |
| | L | −11.33 (4.05) | −20.14 | −5.44 | | −8.54 (3.14) | −14.25 | −3.51 | | −8.64 (4.45) | −15.87 | −1.03 | |

| Parameter | Side | F Statistic | p | Effect Size (η²) | Contrasts | p (R) | Effect Size (d) | p (L) | Effect Size (d) |
|---|---|---|---|---|---|---|---|---|---|
| A1 | | | | | FW vs. AW | <0.001 | 1.39 | =0.001 | 1.23 |
| | R | F (1.42, 18.46) = 17.04 * | <0.001 | 0.38 | FW vs. VRAW | <0.001 | 1.40 | =0.008 | 0.98 |
| | L | F (1.14, 18.46) = 9.10 * | <0.001 | 0.34 | AW vs. VRAW | =1 | 0.08 | =0.54 | 0.25 |
| A2 | | | | | FW vs. AW | =0.002 | 1.20 | =0.002 | 1.15 |
| | R | F (1.36, 17.68) = 10.40 * | <0.001 | 0.35 | FW vs. VRAW | =0.013 | 0.94 | =0.007 | 1.00 |
| | L | F (2, 26) = 11.61 | <0.001 | 0.35 | AW vs. VRAW | =0.11 | 0.63 | =1 | 0.25 |
| A3 | | | | | FW vs. AW | =0.34 | 0.45 | =0.77 | 0.32 |
| | R | F (1.42, 18.46) = 2.16 * | =0.06 | 0.12 | FW vs. VRAW | =0.22 | 0.52 | =1.00 | 0.19 |
| | L | F (1.1, 14.3) = 0.58 * | =0.36 | 0.14 | AW vs. VRAW | =1 | 0.23 | =0.30 | 0.47 |
| A4 | | | | | FW vs. AW | =0.03 | 0.78 | =0.004 | 1.07 |
| | R | F (2, ) = 7.53 | =0.002 | 0.25 | FW vs. VRAW | =0.01 | 0.93 | =0.07 | 0.68 |
| | L | F (2, 26) = 9.40 | <0.001 | 0.30 | AW vs. VRAW | =1 | 0.08 | =0.35 | 0.45 |
| A5 | | | | | FW vs. AW | <0.001 | 1.35 | <0.001 | 1.66 |
| | R | F (2, 26) = 21.35 | <0.001 | 0.46 | FW vs. VRAW | <0.001 | 1.50 | =0.005 | 1.05 |
| | L | F (2, 26) = 18.73 | <0.001 | 0.37 | AW vs. VRAW | =1 | 0.07 | =0.81 | 0.25 |
| A6 | | | | | FW vs. AW | <0.001 | 1.50 | <0.001 | 1.69 |
| | R | F (2, 26) = 18.66 | <0.001 | 0.43 | FW vs. VRAW | <0.001 | 1.31 | =0.002 | 1.19 |
| | L | F (2, 26) = 29.27 | <0.001 | 0.39 | AW vs. VRAW | =1 | 0.15 | =1 | 0.14 |
| A7 | | | | | FW vs. AW | =1 | 0.03 | =0.66 | 0.34 |
| | R | F (2, 26) = 0.07 | =0.93 | 0.002 | FW vs. VRAW | =1 | 0.09 | =1 | 0.17 |
| | L | F (2, 26) = 0.70 | =0.50 | 0.02 | AW vs. VRAW | =1 | 0.08 | =1 | 0.12 |
| A8 | | | | | FW vs. AW | =0.16 | 0.56 | =0.91 | 0.28 |
| | R | F (2, 26) = 2.71 | =0.08 | 0.07 | FW vs. VRAW | =0.18 | 0.55 | =0.14 | 0.59 |
| | L | F (2, 26) = 2.87 | =0.74 | 0.09 | AW vs. VRAW | =1 | 0.14 | =0.48 | 0.39 |
| A9 | | | | | FW vs. AW | =0.57 | 0.36 | =0.12 | 0.61 |
| | R | F (1.26, 16.38) = 1.87 | =0.17 | 0.05 | FW vs. VRAW | =0.47 | 0.40 | =0.16 | 0.56 |
| | L | F (2, 26) = 4.14 | =0.03 | 0.10 | AW vs. VRAW | =1 | 0.03 | =1 | 0.03 |

References

- World Health Organization. Ageing and Health. 2024. Available online: https://www.who.int/health-topics/ageing (accessed on 5 February 2024).

- Ravankar, A.A.; Tafrishi, S.A.; Luces, J.V.S.; Seto, F.; Hirata, Y. Care: Cooperation of ai robot enablers to create a vibrant society. IEEE Robot. Autom. Mag. 2022, 30, 8–23. [Google Scholar] [CrossRef]

- Rudnicka, E.; Napierała, P.; Podfigurna, A.; Męczekalski, B.; Smolarczyk, R.; Grymowicz, M. The World Health Organization (WHO) approach to healthy ageing. Maturitas 2020, 139, 6–11. [Google Scholar] [CrossRef] [PubMed]

- Ma, L.; Chan, P. Understanding the physiological links between physical frailty and cognitive decline. Aging Dis. 2020, 11, 405. [Google Scholar] [CrossRef]

- Osoba, M.Y.; Rao, A.K.; Agrawal, S.K.; Lalwani, A.K. Balance and gait in the elderly: A contemporary review. Laryngoscope Investig. Otolaryngol. 2019, 4, 143–153. [Google Scholar] [CrossRef]

- Winter, D.A. Biomechanics and Motor Control of Human Movement; John Wiley & Sons: Hoboken, NJ, USA, 2009. [Google Scholar]

- Gimigliano, F.; Negrini, S. The World Health Organization “Rehabilitation 2030: A call for action”. Eur. J. Phys. Rehabil. Med. 2017, 53, 155–168. [Google Scholar] [CrossRef]

- Ferreira, B.; Menezes, P. Gamifying motor rehabilitation therapies: Challenges and opportunities of immersive technologies. Information 2020, 11, 88. [Google Scholar] [CrossRef]

- Howard, M.C. A meta-analysis and systematic literature review of virtual reality rehabilitation programs. Comput. Hum. Behav. 2017, 70, 317–327. [Google Scholar] [CrossRef]

- Mikolajczyk, T.; Ciobanu, I.; Badea, D.I.; Iliescu, A.; Pizzamiglio, S.; Schauer, T.; Seel, T.; Seiciu, P.L.; Turner, D.L.; Berteanu, M. Advanced technology for gait rehabilitation: An overview. Adv. Mech. Eng. 2018, 10, 1687814018783627. [Google Scholar] [CrossRef]

- Canning, C.G.; Allen, N.E.; Nackaerts, E.; Paul, S.S.; Nieuwboer, A.; Gilat, M. Virtual reality in research and rehabilitation of gait and balance in Parkinson disease. Nat. Rev. Neurol. 2020, 16, 409–425. [Google Scholar] [CrossRef]

- Saposnik, G. Virtual reality in stroke rehabilitation. In Ischemic Stroke Therapeutics: A Comprehensive Guide; Springer: Cham, Switzerland, 2016; pp. 225–233. [Google Scholar]

- Kaplan, A.D.; Cruit, J.; Endsley, M.; Beers, S.M.; Sawyer, B.D.; Hancock, P.A. The effects of virtual reality, augmented reality, and mixed reality as training enhancement methods: A meta-analysis. Hum. Factors 2021, 63, 706–726. [Google Scholar] [CrossRef]

- Patil, V.; Narayan, J.; Sandhu, K.; Dwivedy, S.K. Integration of virtual reality and augmented reality in physical rehabilitation: A state-of-the-art review. In Revolutions in Product Design for Healthcare: Advances in Product Design and Design Methods for Healthcare; Springer: Singapore, 2022; pp. 177–205. [Google Scholar]

- Vieira, C.; da Silva Pais-Vieira, C.F.; Novais, J.; Perrotta, A. Serious game design and clinical improvement in physical rehabilitation: Systematic review. JMIR Serious Games 2021, 9, e20066. [Google Scholar] [CrossRef] [PubMed]

- Kern, F.; Winter, C.; Gall, D.; Käthner, I.; Pauli, P.; Latoschik, M.E. Immersive virtual reality and gamification within procedurally generated environments to increase motivation during gait rehabilitation. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 500–509. [Google Scholar]

- Amin, F.; Waris, A.; Syed, S.; Amjad, I.; Umar, M.; Iqbal, J.; Gilani, S.O. Effectiveness of Immersive Virtual Reality Based Hand Rehabilitation Games for Improving Hand Motor Functions in Subacute Stroke Patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 2060–2069. [Google Scholar] [CrossRef] [PubMed]

- Casuso-Holgado, M.J.; Martín-Valero, R.; Carazo, A.F.; Medrano-Sánchez, E.M.; Cortés-Vega, M.D.; Montero-Bancalero, F.J. Effectiveness of virtual reality training for balance and gait rehabilitation in people with multiple sclerosis: A systematic review and meta-analysis. Clin. Rehabil. 2018, 32, 1220–1234. [Google Scholar] [CrossRef]

- Tieri, G.; Morone, G.; Paolucci, S.; Iosa, M. Virtual reality in cognitive and motor rehabilitation: Facts, fiction and fallacies. Expert Rev. Med. Devices 2018, 15, 107–117. [Google Scholar] [CrossRef]

- Li, X.; Luh, D.B.; Xu, R.H.; An, Y. Considering the consequences of cybersickness in immersive virtual reality rehabilitation: A systematic review and meta-analysis. Appl. Sci. 2023, 13, 5159. [Google Scholar] [CrossRef]

- Horsak, B.; Simonlehner, M.; Schöffer, L.; Dumphart, B.; Jalaeefar, A.; Husinsky, M. Overground walking in a fully immersive virtual reality: A comprehensive study on the effects on full-body walking biomechanics. Front. Bioeng. Biotechnol. 2021, 9, 780314. [Google Scholar] [CrossRef]

- Canessa, A.; Casu, P.; Solari, F.; Chessa, M. Comparing Real Walking in Immersive Virtual Reality and in Physical World using Gait Analysis. In Proceedings of the VISIGRAPP (2: HUCAPP), Prague, Czech Republic, 25–27 February 2019; pp. 121–128. [Google Scholar]

- Held, J.P.O.; Yu, K.; Pyles, C.; Veerbeek, J.M.; Bork, F.; Heining, S.M.; Navab, N.; Luft, A.R. Augmented reality–based rehabilitation of gait impairments: Case report. JMIR mHealth uHealth 2020, 8, e17804. [Google Scholar] [CrossRef]

- Khanuja, K.; Joki, J.; Bachmann, G.; Cuccurullo, S. Gait and balance in the aging population: Fall prevention using innovation and technology. Maturitas 2018, 110, 51–56. [Google Scholar] [CrossRef]

- Calabrò, R.S.; Cacciola, A.; Bertè, F.; Manuli, A.; Leo, A.; Bramanti, A.; Naro, A.; Milardi, D.; Bramanti, P. Robotic gait rehabilitation and substitution devices in neurological disorders: Where are we now? Neurol. Sci. 2016, 37, 503–514. [Google Scholar] [CrossRef]

- Yuan, F.; Klavon, E.; Liu, Z.; Lopez, R.P.; Zhao, X. A systematic review of robotic rehabilitation for cognitive training. Front. Robot. AI 2021, 8, 605715. [Google Scholar] [CrossRef]

- Hao, J.; Buster, T.W.; Cesar, G.M.; Burnfield, J.M. Virtual reality augments effectiveness of treadmill walking training in patients with walking and balance impairments: A systematic review and meta-analysis of randomized controlled trials. Clin. Rehabil. 2023, 37, 603–619. [Google Scholar] [CrossRef]

- Zukowski, L.A.; Shaikh, F.D.; Haggard, A.V.; Hamel, R.N. Acute effects of virtual reality treadmill training on gait and cognition in older adults: A randomized controlled trial. PLoS ONE 2022, 17, e0276989. [Google Scholar] [CrossRef]

- Winter, C.; Kern, F.; Gall, D.; Latoschik, M.E.; Pauli, P.; Käthner, I. Immersive virtual reality during gait rehabilitation increases walking speed and motivation: A usability evaluation with healthy participants and patients with multiple sclerosis and stroke. J. Neuroeng. Rehabil. 2021, 18, 68. [Google Scholar] [CrossRef]

- Andrade, R.M.; Sapienza, S.; Mohebbi, A.; Fabara, E.E.; Bonato, P. Transparent Control in Overground Walking Exoskeleton Reveals Interesting Changing in Subject’s Stepping Frequency. IEEE J. Transl. Eng. Health Med. 2023, 12, 182–193. [Google Scholar] [CrossRef]

- Martins, M.; Santos, C.; Frizera, A.; Ceres, R. A review of the functionalities of smart walkers. Med. Eng. Phys. 2015, 37, 917–928. [Google Scholar] [CrossRef]

- Machado, F.; Loureiro, M.; Mello, R.C.; Diaz, C.A.; Frizera, A. A novel mixed reality assistive system to aid the visually and mobility impaired using a multimodal feedback system. Displays 2023, 79, 102480. [Google Scholar] [CrossRef]

- Moreira, R.; Alves, J.; Matias, A.; Santos, C. Smart and assistive walker–asbgo: Rehabilitation robotics: A smart–walker to assist ataxic patients. In Robotics in Healthcare: Field Examples and Challenges; Springer: Cham, Switzerland, 2019; pp. 37–68. [Google Scholar]

- Sierra M, S.D.; Múnera, M.; Provot, T.; Bourgain, M.; Cifuentes, C.A. Evaluation of physical interaction during walker-assisted gait with the AGoRA Walker: Strategies based on virtual mechanical stiffness. Sensors 2021, 21, 3242. [Google Scholar] [CrossRef]

- Jimenez, M.F.; Mello, R.C.; Loterio, F.; Frizera-Neto, A. Multimodal Interaction Strategies for Walker-Assisted Gait: A Case Study for Rehabilitation in Post-Stroke Patients. J. Intell. Robot. Syst. 2024, 110, 13. [Google Scholar] [CrossRef]

- Cifuentes, C.A.; Frizera, A. Human-Robot Interaction Strategies for Walker-Assisted Locomotion; Springer: Cham, Switzerland, 2016; Volume 115. [Google Scholar]

- Scheidegger, W.M.; De Mello, R.C.; Jimenez, M.F.; Múnera, M.C.; Cifuentes, C.A.; Frizera-Neto, A. A novel multimodal cognitive interaction for walker-assisted rehabilitation therapies. In Proceedings of the 2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR), Toronto, ON, Canada, 24–28 June 2019; pp. 905–910. [Google Scholar]

- Cardoso, P.; Mello, R.C.; Frizera, A. Handling Complex Smart Walker Interaction Strategies with Behavior Trees. In Advances in Bioengineering and Clinical Engineering; Springer: Cham, Switzerland, 2022; pp. 297–304. [Google Scholar]

- Rocha-Júnior, J.; Mello, R.; Bastos-Filho, T.; Frizera-Neto, A. Development of Simulation Platform for Human-Robot-Environment Interface in the UFES CloudWalker. In Proceedings of the Brazilian Congress on Biomedical Engineering, Vitoria, Brazil, 26–30 October 2020; Springer: Cham, Switzerland, 2020; pp. 1431–1437. [Google Scholar]

- Mello, R.C.; Ribeiro, M.R.; Frizera-Neto, A. Implementing cloud robotics for practical applications. In Springer Tracts in Advanced Robotics; Springer: Cham, Switzerland, 2023; Volume 10.

- Loureiro, M.; Machado, F.; Mello, R.C.; Frizera, A. A virtual reality based interface to train smart walker’s user. In Proceedings of the XII Congreso Iberoamericano de Tecnologias de Apoyo a la Discapacidad, Sao Carlos, Brazil, 20–22 November 2023; pp. 1–6.

- Machado, F.; Loureiro, M.; Mello, R.C.; Diaz, C.A.; Frizera, A. UFES vWalker: A Preliminary Mixed Reality System for Gait Rehabilitation using a smart walker. In Proceedings of the XII Congreso Iberoamericano de Tecnologias de Apoyo a la Discapacidad, Sao Carlos, Brazil, 20–22 November 2023; pp. 1–6.

- Roberts, M.; Mongeon, D.; Prince, F. Biomechanical parameters for gait analysis: A systematic review of healthy human gait. Phys. Ther. Rehabil. 2017, 4, 10–7243.

- Schepers, M.; Giuberti, M.; Bellusci, G. Xsens MVN: Consistent tracking of human motion using inertial sensing. Xsens Technol. 2018, 1, 1–8.

- Folstein, M.F.; Folstein, S.E.; McHugh, P.R. “Mini-mental state”: A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 1975, 12, 189–198.

- Keemink, A.Q.; Van der Kooij, H.; Stienen, A.H. Admittance control for physical human–robot interaction. Int. J. Robot. Res. 2018, 37, 1421–1444.

- Robert-Lachaine, X.; Mecheri, H.; Larue, C.; Plamondon, A. Validation of inertial measurement units with an optoelectronic system for whole-body motion analysis. Med. Biol. Eng. Comput. 2017, 55, 609–619.

- Benedetti, M.; Catani, F.; Leardini, A.; Pignotti, E.; Giannini, S. Data management in gait analysis for clinical applications. Clin. Biomech. 1998, 13, 204–215.

- Kirtley, C. Clinical Gait Analysis: Theory and Practice; Elsevier Health Sciences: Philadelphia, PA, USA, 2006.

- Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

- Gil-Gómez, J.A.; Gil-Gómez, H.; Lozano-Quilis, J.A.; Manzano-Hernández, P.; Albiol-Pérez, S.; Aula-Valero, C. SEQ: Suitability evaluation questionnaire for virtual rehabilitation systems. Application in a virtual rehabilitation system for balance rehabilitation. In Proceedings of the 2013 7th International Conference on Pervasive Computing Technologies for Healthcare and Workshops, Venice, Italy, 5–8 May 2013; pp. 335–338.

- Field, A.P.; Miles, J.; Field, Z. Discovering Statistics Using R; Sage: Thousand Oaks, CA, USA, 2012; pp. 664–666.

- Baniasad, M.; Farahmand, F.; Arazpour, M.; Zohoor, H. Kinematic and electromyography analysis of paraplegic gait with the assistance of mechanical orthosis and walker. J. Spinal Cord Med. 2020, 43, 854–861.

- Mun, K.R.; Lim, S.B.; Guo, Z.; Yu, H. Biomechanical effects of body weight support with a novel robotic walker for over-ground gait rehabilitation. Med. Biol. Eng. Comput. 2017, 55, 315–326.

- Ishikura, T. Biomechanical analysis of weight bearing force and muscle activation levels in the lower extremities during gait with a walker. Acta Medica Okayama 2001, 55, 73–82.

- Musahl, V.; Engler, I.D.; Nazzal, E.M.; Dalton, J.F.; Lucidi, G.A.; Hughes, J.D.; Zaffagnini, S.; Della Villa, F.; Irrgang, J.J.; Fu, F.H.; et al. Current trends in the anterior cruciate ligament part II: Evaluation, surgical technique, prevention, and rehabilitation. Knee Surg. Sport. Traumatol. Arthrosc. 2022, 30, 34–51.

- Hornby, T.G.; Reisman, D.S.; Ward, I.G.; Scheets, P.L.; Miller, A.; Haddad, D.; Fox, E.J.; Fritz, N.E.; Hawkins, K.; Henderson, C.E.; et al. Clinical practice guideline to improve locomotor function following chronic stroke, incomplete spinal cord injury, and brain injury. J. Neurol. Phys. Ther. 2020, 44, 49–100.

- Maxey, L.; Magnusson, J. Rehabilitation for the Postsurgical Orthopedic Patient; Elsevier Health Sciences: St. Louis, MO, USA, 2013.

- Wang, C.; Xu, Y.; Zhang, L.; Fan, W.; Liu, Z.; Yong, M.; Wu, L. Comparative efficacy of different exercise methods to improve cardiopulmonary function in stroke patients: A network meta-analysis of randomized controlled trials. Front. Neurol. 2024, 15, 1288032.

- Dumont, G.D. Hip instability: Current concepts and treatment options. Clin. Sport. Med. 2016, 35, 435–447.

- Baker, R.; Esquenazi, A.; Benedetti, M.G.; Desloovere, K. Gait analysis: Clinical facts. Eur. J. Phys. Rehabil. Med. 2016, 52, 560–574.

| Hip Joint | Knee Joint | Ankle Joint |
|---|---|---|
| H1: flexion at heel strike. | K1: flexion at heel strike. | A1: dorsiflexion at heel strike. |
| H2: max. flexion at loading response. | K2: max. flexion at loading response. | A2: max. plantarflexion at loading response. |
| H3: max. extension in stance phase. | K3: max. extension in stance phase. | A3: max. dorsiflexion in stance phase. |
| H4: flexion at toe-off. | K4: flexion at toe-off. | A4: dorsiflexion at toe-off. |
| H5: max. flexion in swing phase. | K5: max. flexion in swing phase. | A5: max. dorsiflexion in swing phase. |
| H6: total sagittal plane excursion. | K6: total sagittal plane excursion. | A6: total sagittal plane excursion. |
| H7: total coronal plane excursion. | K7: total coronal plane excursion. | A7: total coronal plane excursion. |
| H8: max. adduction in stance phase. | K8: max. adduction in stance phase. | A8: max. abduction in stance phase. |
| H9: max. abduction in swing phase. | K9: max. abduction in swing phase. | A9: max. adduction in swing phase. |
| H10: total transverse plane excursion. | K10: total transverse plane excursion. | |
| H11: max. internal rotation in stance phase. | K11: max. internal rotation in stance phase. | |
| H12: max. external rotation in swing phase. | K12: max. external rotation in swing phase. | |
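The sagittal-plane definitions in the table (parameters 1 to 6 for each joint) reduce to picking values and extrema from a joint-angle curve once gait events are known. The Python sketch below is only an illustration and not the authors' processing pipeline, which this section does not describe. It assumes one gait cycle sampled from heel strike (index 0) up to the next heel strike, angles in degrees with flexion/dorsiflexion positive, and caller-supplied indices for toe-off and for the end of loading response. The names `SagittalParams`, `sagittal_params`, and `excursion` are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SagittalParams:
    at_heel_strike: float    # H1/K1/A1: angle at heel strike
    loading_response: float  # H2/K2: max. flexion; A2: max. plantarflexion
    stance_extreme: float    # H3/K3: max. extension; A3: max. dorsiflexion
    at_toe_off: float        # H4/K4/A4: angle at toe-off
    swing_peak: float        # H5/K5: max. flexion; A5: max. dorsiflexion
    excursion: float         # H6/K6/A6: total sagittal plane excursion


def excursion(angles: Sequence[float]) -> float:
    """Total excursion in one plane: maximum minus minimum over the cycle.

    Also applies to the coronal (H7/K7/A7) and transverse (H10/K10) curves.
    """
    return max(angles) - min(angles)


def sagittal_params(
    angles: Sequence[float],
    toe_off: int,
    loading_end: int,
    is_ankle: bool = False,
) -> SagittalParams:
    """Extract sagittal parameters 1-6 from one gait cycle.

    Assumptions (hypothetical, not from the source): flexion and
    dorsiflexion are positive; `toe_off` is the toe-off sample index;
    `loading_end` is the last sample of loading response.
    """
    if not 0 < loading_end < toe_off < len(angles):
        raise ValueError("expected 0 < loading_end < toe_off < len(angles)")
    loading = angles[: loading_end + 1]  # heel strike to end of loading response
    stance = angles[: toe_off + 1]       # heel strike to toe-off, inclusive
    swing = angles[toe_off:]             # toe-off to end of cycle
    if is_ankle:
        # Ankle: plantarflexion (most negative) at loading response,
        # peak dorsiflexion (most positive) during stance.
        x2, x3 = min(loading), max(stance)
    else:
        # Hip/knee: peak flexion at loading response,
        # peak extension (smallest flexion angle) during stance.
        x2, x3 = max(loading), min(stance)
    return SagittalParams(
        at_heel_strike=angles[0],
        loading_response=x2,
        stance_extreme=x3,
        at_toe_off=angles[toe_off],
        swing_peak=max(swing),
        excursion=excursion(angles),
    )
```

The single `is_ankle` flag mirrors the asymmetry in the table: for the hip and knee, parameters 2 and 3 are a maximum and a minimum of the flexion curve, whereas for the ankle they are a minimum (plantarflexion) and a maximum (dorsiflexion). In practice, the event indices would come from whatever heel-strike and toe-off detection the motion capture processing provides.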

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Loureiro, M.; Elias, A.; Machado, F.; Bezerra, M.; Zimerer, C.; Mello, R.; Frizera, A. Analysis of Gait Kinematics in Smart Walker-Assisted Locomotion in Immersive Virtual Reality Scenario. Sensors 2024, 24, 5534. https://doi.org/10.3390/s24175534


Chicago/Turabian Style: Loureiro, Matheus, Arlindo Elias, Fabiana Machado, Marcio Bezerra, Carla Zimerer, Ricardo Mello, and Anselmo Frizera. 2024. "Analysis of Gait Kinematics in Smart Walker-Assisted Locomotion in Immersive Virtual Reality Scenario" Sensors 24, no. 17: 5534. https://doi.org/10.3390/s24175534