Energy-Efficient Anomaly Detection and Chaoticity in Electric Vehicle Driving Behavior

Abstract

1. Introduction

- Generalization of the models was achieved by using multiple real-world driving datasets;

- The performance of existing anomaly detection techniques was improved with unsupervised hybrid anomaly detection models;

- Anomaly detection revealed excessive energy consumption, contributing to energy efficiency in electric vehicles;

- The dataset was also evaluated with chaoticity metrics, supporting the prediction of future driving behavior.

2. Materials and Methods

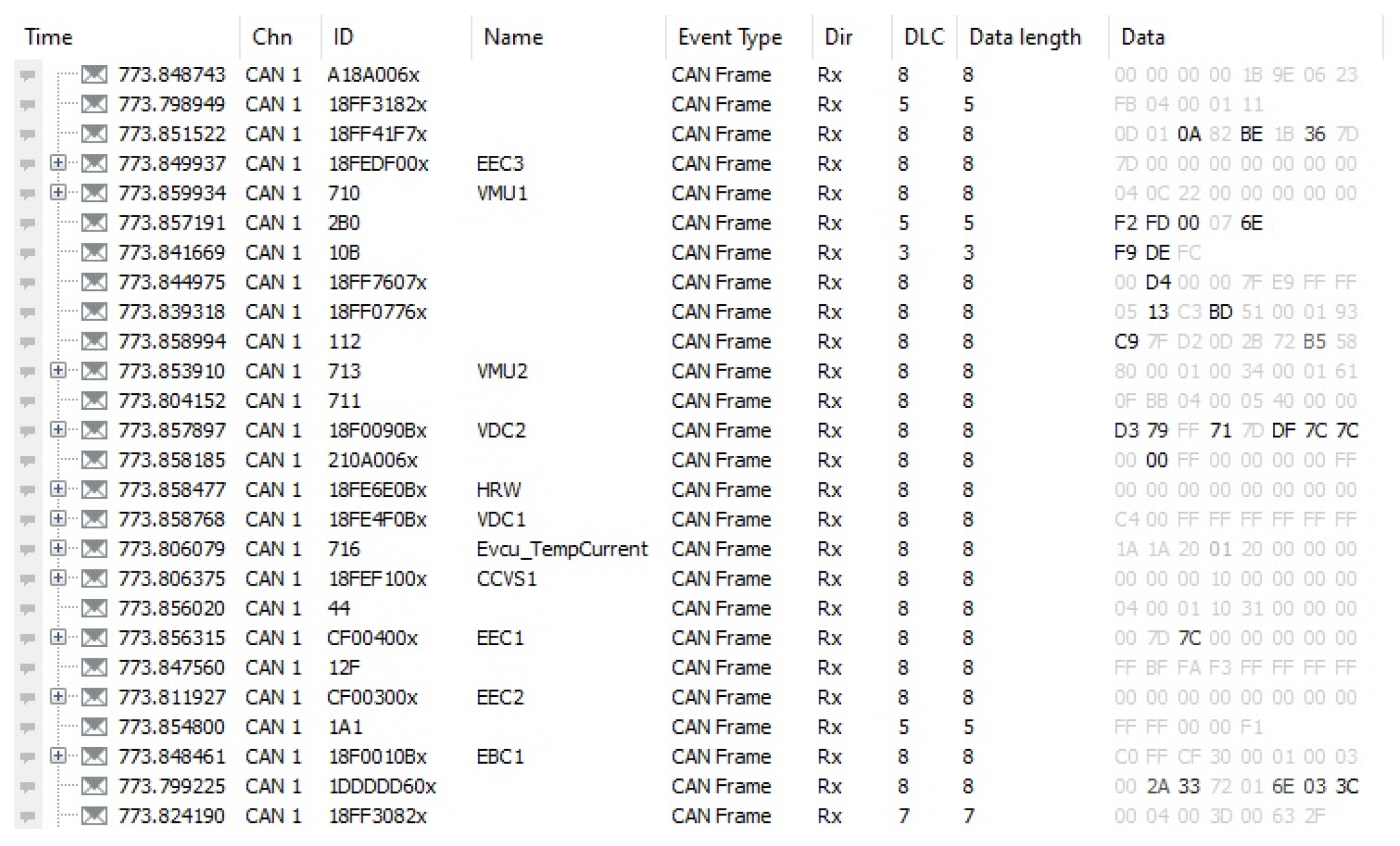

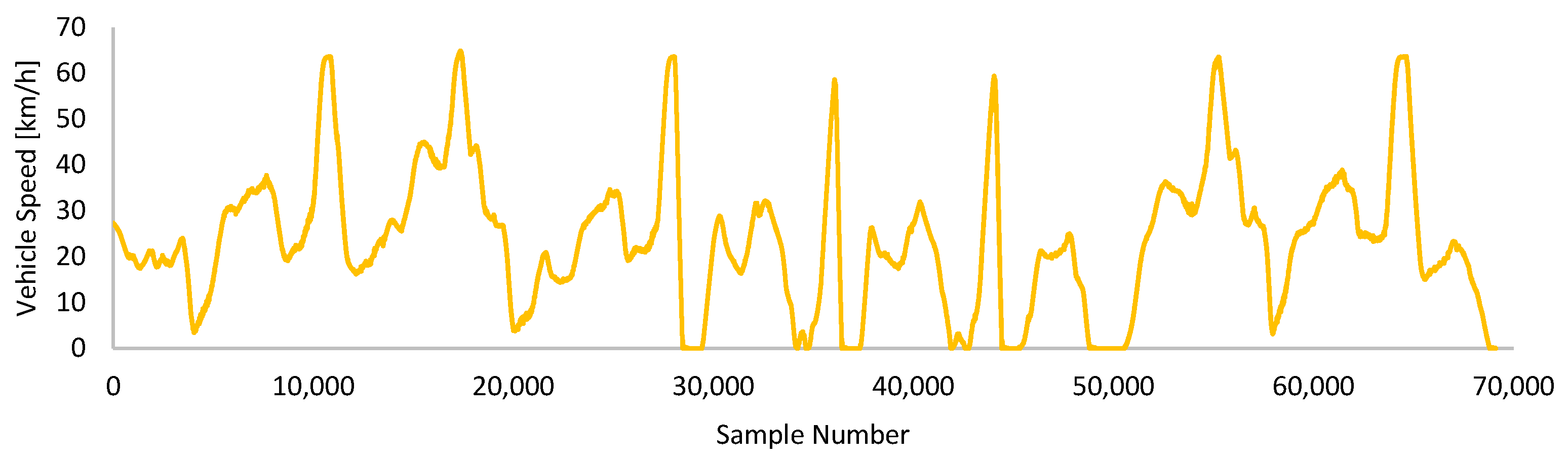

2.1. Real-World Driving Data

2.2. Data Preprocessing

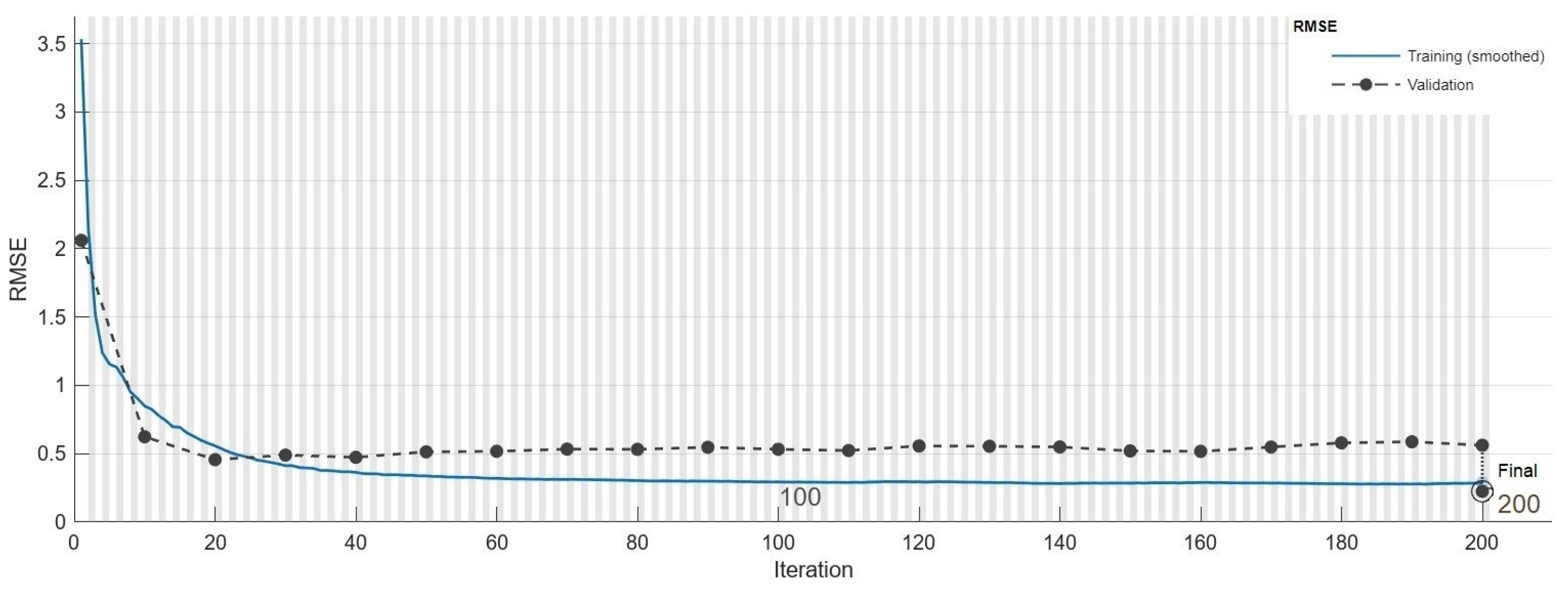

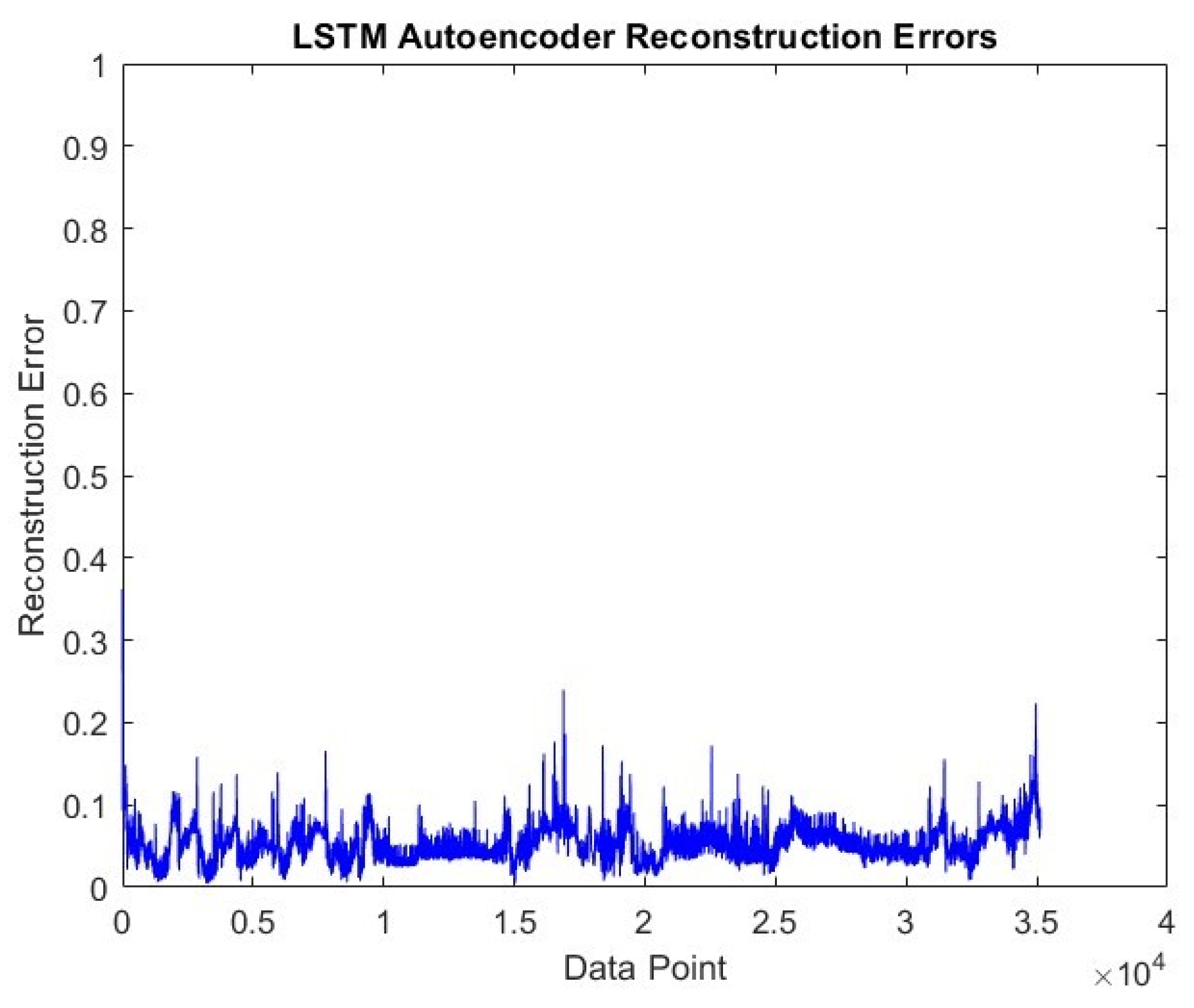

2.3. Anomaly Detection Mechanisms
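Algorithm 2 consumes per-feature Mahalanobis anomaly labels. The sketch below shows one way such labels can be produced, assuming each feature is treated on its own: in one dimension the Mahalanobis distance reduces to the absolute z-score |x − μ|/σ. The function name `mahalanobis_labels_1d` and the threshold of 3.0 are hypothetical choices for illustration, not values taken from the study.

```python
from statistics import fmean, pstdev


def mahalanobis_labels_1d(values, threshold=3.0):
    """Label each sample of one feature as anomalous (True) or normal (False).

    Hypothetical helper: for a single feature the Mahalanobis distance is
    |x - mean| / std. The default threshold is an assumption, not the study's.
    """
    mu = fmean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant signal has no spread, so nothing is flagged.
        return [False] * len(values)
    return [abs(x - mu) / sigma > threshold for x in values]


# Usage: a steady 50 km/h trace with one 120 km/h spike.
speeds = [50.0] * 20 + [120.0]
print(mahalanobis_labels_1d(speeds)[-1])
```

A multivariate detector would instead use the covariance matrix of all features and yield one label per sample; the per-feature form is used here only because the result tables report anomaly rates feature by feature.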

Algorithm 1: Hybrid Model-1

```text
Input:  Original data, LSTM-Autoencoder anomaly labels, LOF anomaly labels
Output: Anomaly rates per feature, overall anomaly rate, evaluation scores,
        potential energy savings

1:  Load LSTM-Autoencoder anomaly labels
2:  Load LOF anomaly labels
3:  Read and normalize original data
4:  Create hybrid anomaly labels (LSTM-Autoencoder AND LOF)
5:  for each feature i in features do
6:      labels <- combine LSTM-Autoencoder and LOF anomaly matrices for feature i
7:      silhouetteScores <- calculateSilhouette(normalizedFeatures[i], labels)
8:      meanSilhouetteScore <- mean(silhouetteScores)
9:      dbi <- calculateDaviesBouldin(normalizedFeatures[i], labels)
10:     chs <- calculateCalinskiHarabasz(normalizedFeatures[i], labels)
11:     Print meanSilhouetteScore, dbi, chs, anomaly rate for feature i
12: end for
13: overallAnomalyRate <- calculateOverallAnomalyRate(hybridLabels)
14: Print overallAnomalyRate
15: anomalousPower <- identifyAnomalousPower(power, hybridLabels)
16: potentialEnergySavings <- calculateEnergySavings(anomalousPower)
17: Print potentialEnergySavings
18: for each feature i in features do
19:     Plot normal and anomalous data points for feature i
20: end for

function calculateDaviesBouldin(X, labels)
    k <- max(labels)
    clusterMeans <- zeros(k, size(X, 2))
    clusterS <- zeros(k, 1)
    for i <- 1 to k do
        clusterPoints <- X[labels == i, :]
        clusterMeans[i, :] <- mean(clusterPoints, 1)
        clusterS[i] <- mean(sqrt(sum((clusterPoints - clusterMeans[i, :]).^2, 2)))
    end for
    R <- zeros(k)
    for i <- 1 to k do
        for j <- 1 to k do
            if i != j then
                R[i, j] <- (clusterS[i] + clusterS[j]) / sqrt(sum((clusterMeans[i, :] - clusterMeans[j, :]).^2))
            end if
        end for
    end for
    D <- max(R, [], 2)
    dbi <- mean(D)
    return dbi
end function

function calculateCalinskiHarabasz(X, labels)
    k <- max(labels)
    n <- size(X, 1)
    clusterMeans <- zeros(k, size(X, 2))
    overallMean <- mean(X)
    betweenClusterDispersion <- 0
    withinClusterDispersion <- 0
    for i <- 1 to k do
        clusterPoints <- X[labels == i, :]
        clusterSize <- size(clusterPoints, 1)
        clusterMeans[i, :] <- mean(clusterPoints, 1)
        betweenClusterDispersion <- betweenClusterDispersion + clusterSize × sum((clusterMeans[i, :] - overallMean).^2)
        withinClusterDispersion <- withinClusterDispersion + sum(sum((clusterPoints - clusterMeans[i, :]).^2))
    end for
    chs <- (betweenClusterDispersion / (k - 1)) / (withinClusterDispersion / (n - k))
    return chs
end function
```
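The two helper functions in Algorithm 1 can be sketched in plain Python as follows. This is an illustrative translation, not the authors' code: points are tuples of floats, `davies_bouldin` and `calinski_harabasz` are hypothetical names, and clusters are grouped by their distinct label values rather than by indices `1..max(labels)`.

```python
from math import dist


def _centroid(points):
    """Component-wise mean of a non-empty list of equal-length tuples."""
    return tuple(sum(c) / len(points) for c in zip(*points))


def _group(X, labels):
    """Map each cluster label to the list of its points."""
    clusters = {}
    for x, lab in zip(X, labels):
        clusters.setdefault(lab, []).append(tuple(x))
    return clusters


def davies_bouldin(X, labels):
    """Davies-Bouldin index (lower is better); needs at least two clusters."""
    clusters = _group(X, labels)
    cents = {c: _centroid(p) for c, p in clusters.items()}
    # S_i: mean Euclidean distance of cluster members to their centroid.
    scatter = {c: sum(dist(p, cents[c]) for p in pts) / len(pts)
               for c, pts in clusters.items()}
    worst = []
    for i in clusters:
        # D_i: worst-case similarity R_ij over every other cluster j.
        worst.append(max((scatter[i] + scatter[j]) / dist(cents[i], cents[j])
                         for j in clusters if j != i))
    return sum(worst) / len(worst)


def calinski_harabasz(X, labels):
    """Calinski-Harabasz index (higher is better); needs 2 <= k < n."""
    clusters = _group(X, labels)
    n, k = len(X), len(clusters)
    overall = _centroid([tuple(x) for x in X])
    between = within = 0.0
    for pts in clusters.values():
        c = _centroid(pts)
        between += len(pts) * dist(c, overall) ** 2      # between-cluster dispersion
        within += sum(dist(p, c) ** 2 for p in pts)       # within-cluster dispersion
    return (between / (k - 1)) / (within / (n - k))


# Usage: two well-separated 1-D groups, e.g. normal vs. anomalous samples.
X = [(0.0,), (1.0,), (10.0,), (11.0,)]
print(davies_bouldin(X, [1, 1, 2, 2]), calinski_harabasz(X, [1, 1, 2, 2]))
```

Grouping by distinct labels lets 0/1 anomaly flags be passed directly, whereas the pseudocode's `k <- max(labels)` assumes labels run from 1 to k. Both functions divide by zero if two centroids coincide or if every cluster has zero spread.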

Algorithm 2: Hybrid Model-2

```text
Input:  Original data, LSTM-Autoencoder anomaly labels, Mahalanobis anomaly labels
Output: Anomaly rates per feature, overall anomaly rate, evaluation scores,
        potential energy savings

1:  Load LSTM-Autoencoder anomaly labels
2:  Load Mahalanobis anomaly labels
3:  Read and normalize original data
4:  Create hybrid anomaly labels (LSTM-Autoencoder OR Mahalanobis)
5:  for each feature i in features do
6:      labels <- combine LSTM-Autoencoder and Mahalanobis anomaly matrices for feature i
7:      silhouetteScores <- calculateSilhouette(normalizedFeatures[i], labels)
8:      meanSilhouetteScore <- mean(silhouetteScores)
9:      dbi <- calculateDaviesBouldin(normalizedFeatures[i], labels)
10:     chs <- calculateCalinskiHarabasz(normalizedFeatures[i], labels)
11:     Print meanSilhouetteScore, dbi, chs, anomaly rate for feature i
12: end for
13: overallAnomalyRate <- calculateOverallAnomalyRate(hybridLabels)
14: Print overallAnomalyRate
15: anomalousPower <- identifyAnomalousPower(power, hybridLabels)
16: potentialEnergySavings <- calculateEnergySavings(anomalousPower)
17: Print potentialEnergySavings
18: for each feature i in features do
19:     Plot normal and anomalous data points for feature i
20: end for

function calculateDaviesBouldin(X, labels)
    k <- max(labels)
    clusterMeans <- zeros(k, size(X, 2))
    clusterS <- zeros(k, 1)
    for i <- 1 to k do
        clusterPoints <- X[labels == i, :]
        clusterMeans[i, :] <- mean(clusterPoints, 1)
        clusterS[i] <- mean(sqrt(sum((clusterPoints - clusterMeans[i, :]).^2, 2)))
    end for
    R <- zeros(k)
    for i <- 1 to k do
        for j <- 1 to k do
            if i != j then
                R[i, j] <- (clusterS[i] + clusterS[j]) / sqrt(sum((clusterMeans[i, :] - clusterMeans[j, :]).^2))
            end if
        end for
    end for
    D <- max(R, [], 2)
    dbi <- mean(D)
    return dbi
end function

function calculateCalinskiHarabasz(X, labels)
    k <- max(labels)
    n <- size(X, 1)
    clusterMeans <- zeros(k, size(X, 2))
    overallMean <- mean(X)
    betweenClusterDispersion <- 0
    withinClusterDispersion <- 0
    for i <- 1 to k do
        clusterPoints <- X[labels == i, :]
        clusterSize <- size(clusterPoints, 1)
        clusterMeans[i, :] <- mean(clusterPoints, 1)
        betweenClusterDispersion <- betweenClusterDispersion + clusterSize × sum((clusterMeans[i, :] - overallMean).^2)
        withinClusterDispersion <- withinClusterDispersion + sum(sum((clusterPoints - clusterMeans[i, :]).^2))
    end for
    chs <- (betweenClusterDispersion / (k - 1)) / (withinClusterDispersion / (n - k))
    return chs
end function
```
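Steps 4, 13 and 15–16 of both hybrid algorithms call helpers that the listings name but do not define. The sketch below is one possible reading under stated assumptions: labels are booleans (True = anomalous), Hybrid Model-1 merges detectors with AND and Hybrid Model-2 with OR as step 4 states, a sample counts toward the overall rate if any feature flags it (assumption), and the energy associated with anomalies is the positive power drawn during flagged samples multiplied by the sampling interval (assumption). All function names are hypothetical.

```python
def combine_labels(a, b, rule):
    """Merge two detectors' labels sample by sample.

    'and' mirrors step 4 of Hybrid Model-1, 'or' mirrors step 4 of Hybrid Model-2.
    """
    if rule == "and":
        return [x and y for x, y in zip(a, b)]
    if rule == "or":
        return [x or y for x, y in zip(a, b)]
    raise ValueError("rule must be 'and' or 'or'")


def anomaly_rate(labels):
    """Percentage of samples flagged as anomalous."""
    return 100.0 * sum(labels) / len(labels)


def overall_anomaly_rate(per_feature):
    """ASSUMPTION: a sample is anomalous overall if any feature flags it."""
    flagged = [any(sample) for sample in zip(*per_feature.values())]
    return anomaly_rate(flagged)


def anomalous_energy_kwh(power_kw, labels, dt_s):
    """ASSUMPTION: energy drawn during anomalous samples, in kWh.

    Negative (regenerative) power is ignored; kW x s = kJ, and kJ / 3600 = kWh.
    """
    flagged_kw = sum(max(p, 0.0) for p, flag in zip(power_kw, labels) if flag)
    return flagged_kw * dt_s / 3600.0


# Usage with toy labels from two detectors for one feature.
lstm = [True, False, True, False]
lof = [True, True, False, False]
print(anomaly_rate(combine_labels(lstm, lof, "and")),
      anomaly_rate(combine_labels(lstm, lof, "or")))
```

AND keeps only samples that both detectors agree on, so it can never flag more than either detector alone; OR flags a sample if either detector does, so its rate is at least the larger of the two.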

2.4. Anomaly Detection Performance Evaluation
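Step 7 of both algorithms calls `calculateSilhouette`, which the listings do not define. A minimal pure-Python sketch of the standard per-sample silhouette s = (b − a)/max(a, b) follows, where a is the mean distance to the sample's own cluster and b the mean distance to the nearest other cluster. The name `silhouette_scores` is hypothetical, and singleton clusters are scored 0, a common convention assumed here.

```python
from math import dist


def silhouette_scores(X, labels):
    """Per-sample silhouette values; needs at least two clusters."""
    pts = [tuple(x) for x in X]
    clusters = set(labels)
    scores = []
    for i, (p, own) in enumerate(zip(pts, labels)):
        # Sum and count distances from p to every other point, per cluster.
        sums = dict.fromkeys(clusters, 0.0)
        counts = dict.fromkeys(clusters, 0)
        for j, (q, other) in enumerate(zip(pts, labels)):
            if i != j:
                sums[other] += dist(p, q)
                counts[other] += 1
        if counts[own] == 0:
            scores.append(0.0)  # singleton cluster: silhouette taken as 0
            continue
        a = sums[own] / counts[own]  # mean intra-cluster distance
        b = min(sums[c] / counts[c] for c in clusters if c != own)  # nearest other cluster
        denom = max(a, b)
        scores.append(0.0 if denom == 0 else (b - a) / denom)
    return scores


# Usage: the mean of the per-sample values is the reported silhouette score.
s = silhouette_scores([(0.0,), (1.0,), (10.0,), (11.0,)], [1, 1, 2, 2])
print(sum(s) / len(s))
```

The nested loop over all sample pairs is quadratic in the number of samples n, which is consistent with the n² term listed for steps 5–12 in the complexity table.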

2.5. Chaoticity Assessment
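Fractal dimension is one common descriptor of how irregular a time series such as speed or power is. The sketch below uses Higuchi's estimator, chosen here purely for illustration; it is not necessarily the chaoticity metric or estimator used in this study. `higuchi_fd` and its default `k_max=8` are hypothetical. Values near 1 indicate a smooth signal and values near 2 a noise-like one.

```python
from math import log


def higuchi_fd(x, k_max=8):
    """Estimate the fractal dimension of a 1-D series with Higuchi's method."""
    n = len(x)
    if k_max < 2 or n < 2 * k_max:
        raise ValueError("need k_max >= 2 and at least 2 * k_max samples")
    log_inv_k, log_len = [], []
    for k in range(1, k_max + 1):
        lengths = []
        for m in range(k):  # 0-based starting offset of each sub-series
            steps = (n - 1 - m) // k
            total = sum(abs(x[m + i * k] - x[m + (i - 1) * k])
                        for i in range(1, steps + 1))
            # Higuchi's normalisation makes sub-series of different length comparable.
            lengths.append(total * (n - 1) / (steps * k) / k)
        log_inv_k.append(log(1.0 / k))
        log_len.append(log(sum(lengths) / len(lengths)))  # fails if the series is constant
    # Least-squares slope of log L(k) versus log(1/k) is the dimension estimate.
    mx = sum(log_inv_k) / k_max
    my = sum(log_len) / k_max
    num = sum((a - mx) * (b - my) for a, b in zip(log_inv_k, log_len))
    den = sum((a - mx) ** 2 for a in log_inv_k)
    return num / den


# Usage: a linearly increasing series is perfectly smooth, so the estimate is 1.
print(round(higuchi_fd(list(range(100))), 6))
```

For a straight ramp every curve length L(k) equals (N − 1)/k exactly, so the log-log slope is 1; irregular driving signals would yield larger values.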

3. Results

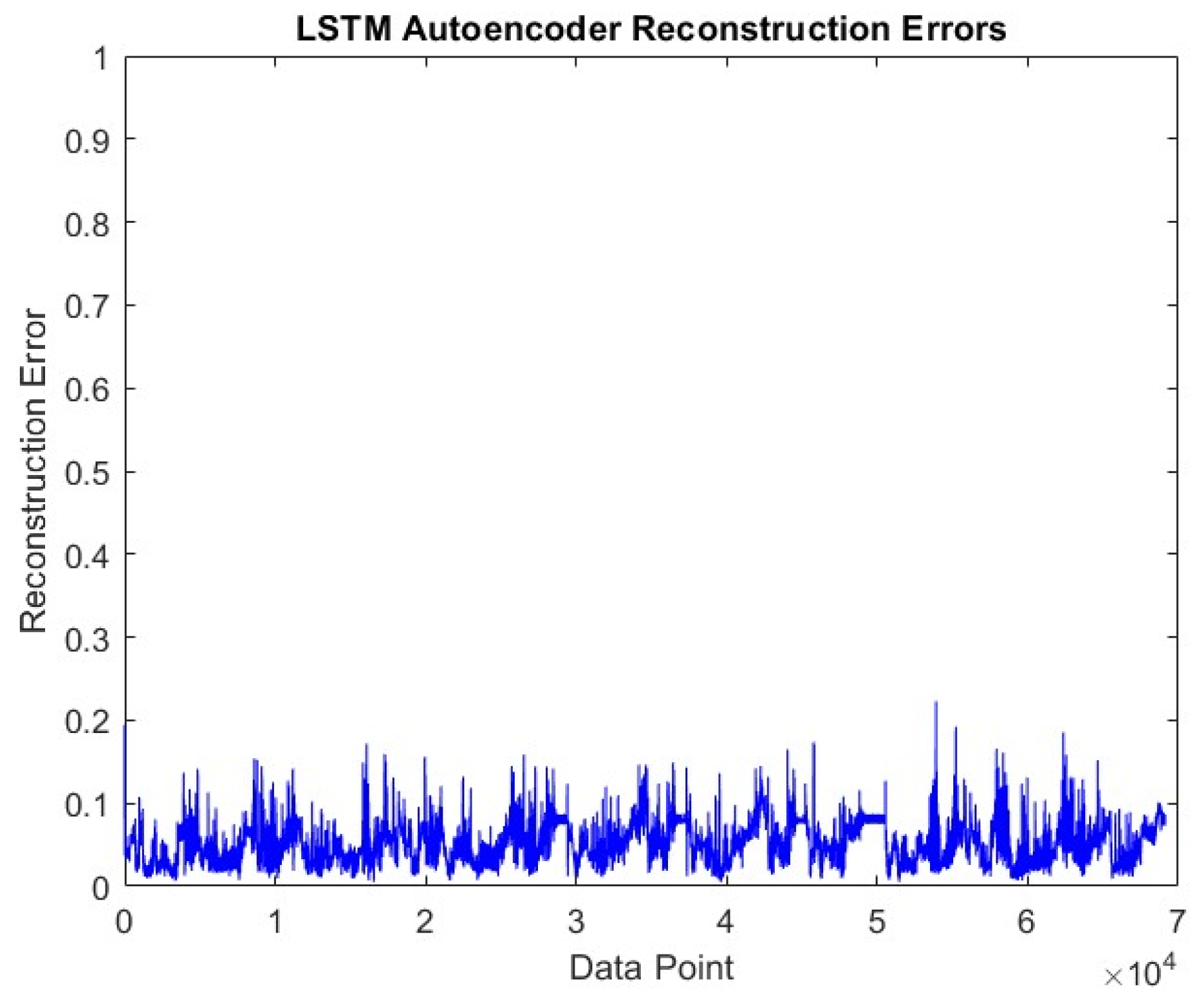

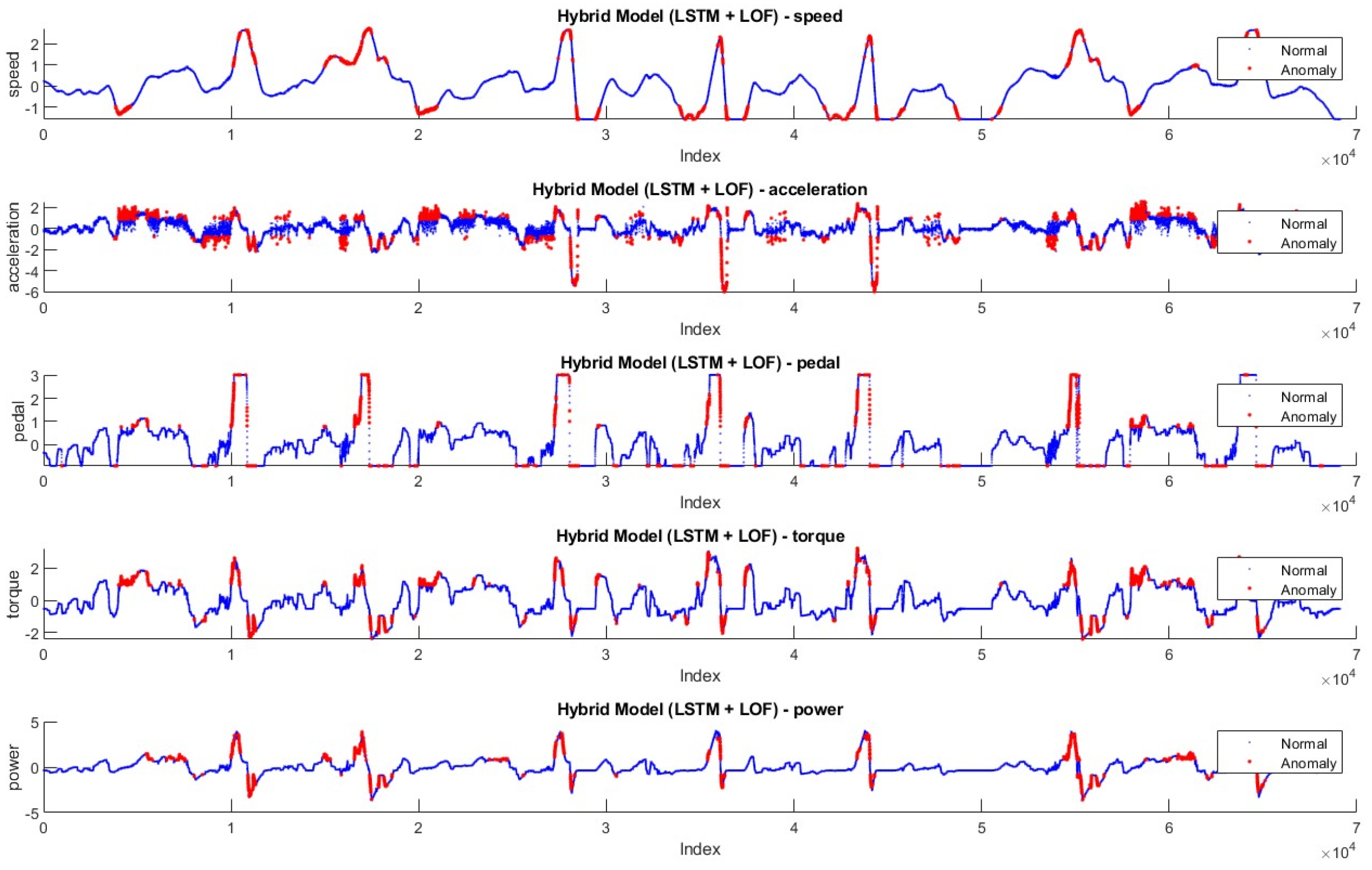

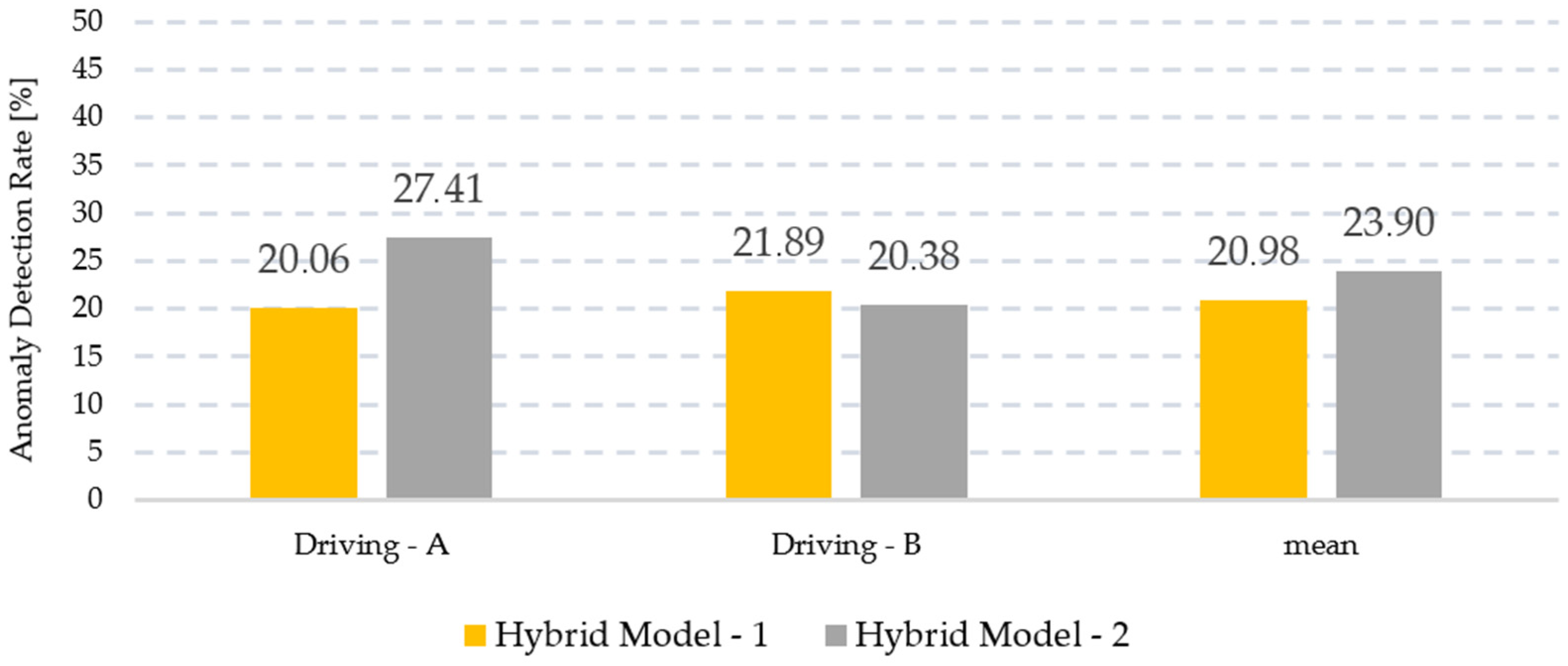

3.1. Performance Results for Driving-A

3.2. Performance Results for Driving-B

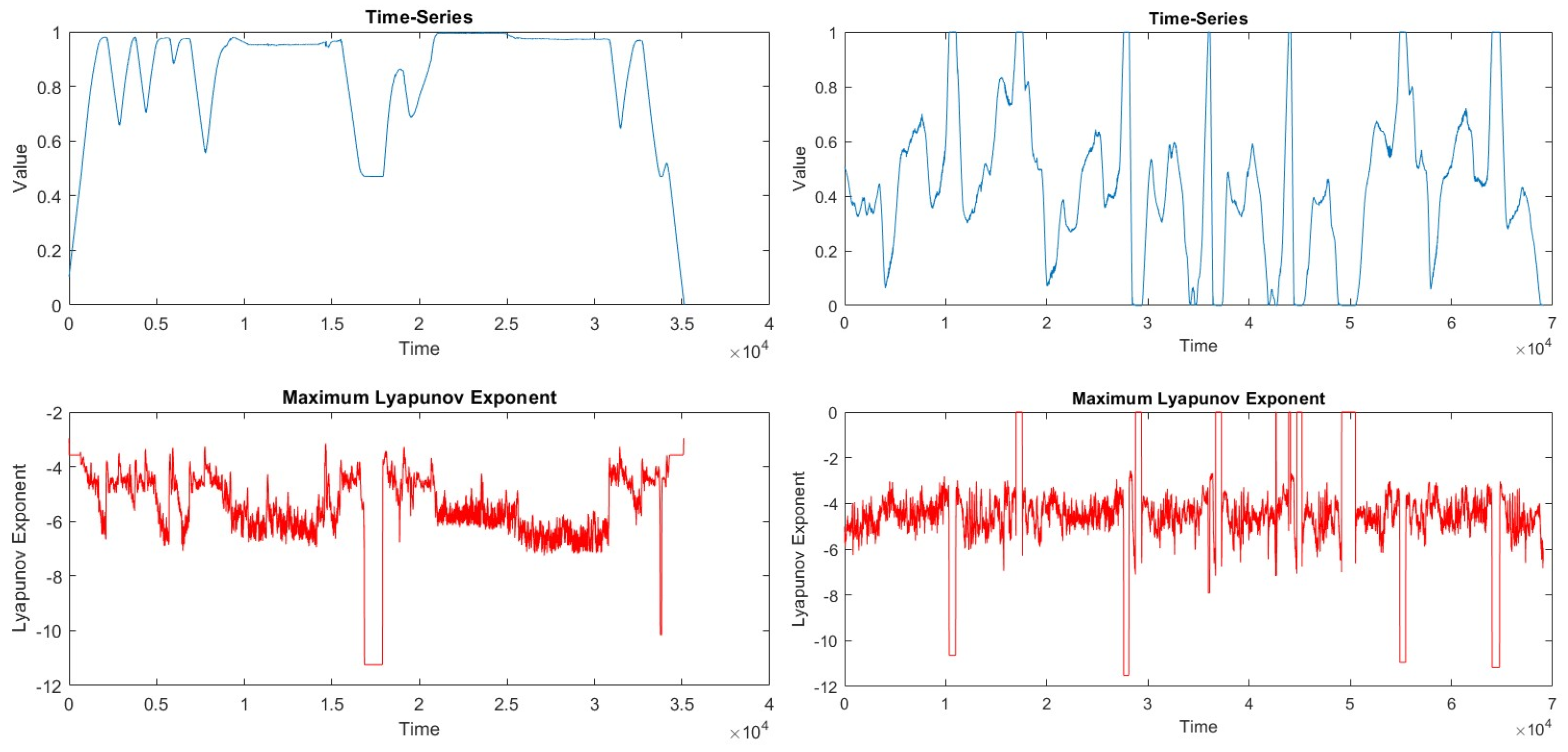

3.3. Chaoticity Assessment Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Algorithm Step | Time Complexity (Big-O) |
|---|---|
| Steps 1–2 | O(1) |
| Step 3 | O(n × d) |
| Step 4 | O(n) |
| Steps 5–12 | O(f × (n² + k × n × d)) |
| Steps 13–14 | O(n) |
| Steps 15–17 | O(n) |
| Steps 18–20 | O(f × n) |

| Data Feature | LSTM-Autoencoder | LOF | Mahalanobis Distance |
|---|---|---|---|
| Speed | 6.06% | 3.75% | 13.22% |
| Acceleration | 6.87% | 2.85% | 9.54% |
| Pedal | 9.57% | 3.84% | 11.48% |
| Torque | 6.65% | 2.65% | 9.16% |
| Power | 5.48% | 1.78% | 5.29% |
| Total | 14.79% | 7.99% | 15.94% |

| Data Feature | Hybrid Model-1 | Hybrid Model-2 |
|---|---|---|
| Speed | 8.15% | 13.46% |
| Acceleration | 8.44% | 17.06% |
| Pedal | 11.68% | 17.73% |
| Torque | 8.10% | 16.55% |
| Power | 6.57% | 13.19% |
| Total | 20.06% | 27.41% |

| Data Feature | Silhouette | Davies–Bouldin Index | Calinski–Harabasz Index |
|---|---|---|---|
| Speed | 0.74 | 0.44 | 12,251.48 |
| Acceleration | 0.43 | 1.85 | 6840.92 |
| Pedal | 0.47 | 0.82 | 6908.50 |
| Torque | 0.48 | 1.76 | 7625.86 |
| Power | 0.37 | 1.17 | 12,723.62 |

| Data Feature | Silhouette | Davies–Bouldin Index | Calinski–Harabasz Index |
|---|---|---|---|
| Speed | 0.87 | 0.35 | 91,618.38 |
| Acceleration | 0.53 | 1.94 | 5134.52 |
| Pedal | 0.67 | 0.35 | 37,947.00 |
| Torque | 0.56 | 1.70 | 6282.94 |
| Power | 0.55 | 0.99 | 11,263.14 |

| Data Feature | LSTM-Autoencoder | LOF | Mahalanobis Distance |
|---|---|---|---|
| Speed | 5.80% | 4.67% | 7.80% |
| Acceleration | 5.80% | 3.95% | 4.54% |
| Pedal | 5.96% | 3.81% | 8.24% |
| Torque | 5.41% | 3.36% | 4.96% |
| Power | 4.53% | 2.53% | 6.17% |
| Total | 16.62% | 8.58% | 7.80% |

| Data Feature | Hybrid Model-1 | Hybrid Model-2 |
|---|---|---|
| Speed | 8.34% | 12.05% |
| Acceleration | 7.79% | 12.92% |
| Pedal | 7.79% | 18.28% |
| Torque | 7.18% | 10.78% |
| Power | 5.98% | 10.35% |
| Total | 21.89% | 20.38% |

| Data Feature | Silhouette | Davies–Bouldin Index | Calinski–Harabasz Index |
|---|---|---|---|
| Speed | 0.52 | 2.40 | 3707.32 |
| Acceleration | 0.66 | 3.36 | 104.76 |
| Pedal | 0.35 | 19.76 | 76.70 |
| Torque | 0.56 | 13.85 | 2362.25 |
| Power | 0.67 | 3.80 | 3482.46 |

| Data Feature | Silhouette | Davies–Bouldin Index | Calinski–Harabasz Index |
|---|---|---|---|
| Speed | 0.60 | 1.85 | 10,139.20 |
| Acceleration | 0.62 | 4.89 | 1545.93 |
| Pedal | 0.59 | 2.89 | 6971.01 |
| Torque | 0.56 | 4.34 | 2120.08 |
| Power | 0.73 | 1.83 | 11,795.85 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Savran, E.; Karpat, E.; Karpat, F. Energy-Efficient Anomaly Detection and Chaoticity in Electric Vehicle Driving Behavior. Sensors 2024, 24, 5628. https://doi.org/10.3390/s24175628

Chicago/Turabian style: Savran, Efe, Esin Karpat, and Fatih Karpat. 2024. "Energy-Efficient Anomaly Detection and Chaoticity in Electric Vehicle Driving Behavior" Sensors 24, no. 17: 5628. https://doi.org/10.3390/s24175628