UAV Visual and Thermographic Power Line Detection Using Deep Learning

Abstract

1. Introduction

- A dataset of synchronized and georeferenced visible and thermographic images with labels.

- A processing pipeline for visible and thermographic images based on YOLOv8.

- A summary of the experimental results and discussion of topics to be addressed in future research.
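A dataset of synchronized visible and thermographic images implies that each visible frame can be associated with a thermal frame captured at nearly the same instant. The source does not describe how this association is made, so the following is only a minimal sketch under assumed inputs: a sorted list of capture timestamps (in seconds) per camera and a hypothetical matching tolerance `max_dt`. For each visible timestamp, a binary search (`bisect_left`) finds the nearest thermal timestamp.

```python
from bisect import bisect_left


def pair_frames(visible_ts, thermal_ts, max_dt=0.05):
    """Pair each visible frame with the nearest-in-time thermal frame.

    visible_ts, thermal_ts: capture timestamps in seconds, each sorted
    ascending (hypothetical input format).
    max_dt: hypothetical tolerance in seconds; visible frames with no
    thermal frame within this window are left unpaired.
    Returns a list of (visible_index, thermal_index) tuples.
    """
    pairs = []
    for i, t in enumerate(visible_ts):
        j = bisect_left(thermal_ts, t)
        # The nearest thermal frame is either just before or just after t.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(thermal_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(thermal_ts[k] - t))
        if abs(thermal_ts[best] - t) <= max_dt:
            pairs.append((i, best))
    return pairs


visible = [0.00, 0.10, 0.20, 0.30]
thermal = [0.01, 0.12, 0.26, 0.50]
print(pair_frames(visible, thermal))  # [(0, 0), (1, 1), (3, 2)]
```

In this example the visible frame at 0.20 s stays unpaired because its closest thermal frame is 60 ms away. Note that one thermal frame may be matched by several visible frames; enforcing a strict one-to-one assignment would require an additional step.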

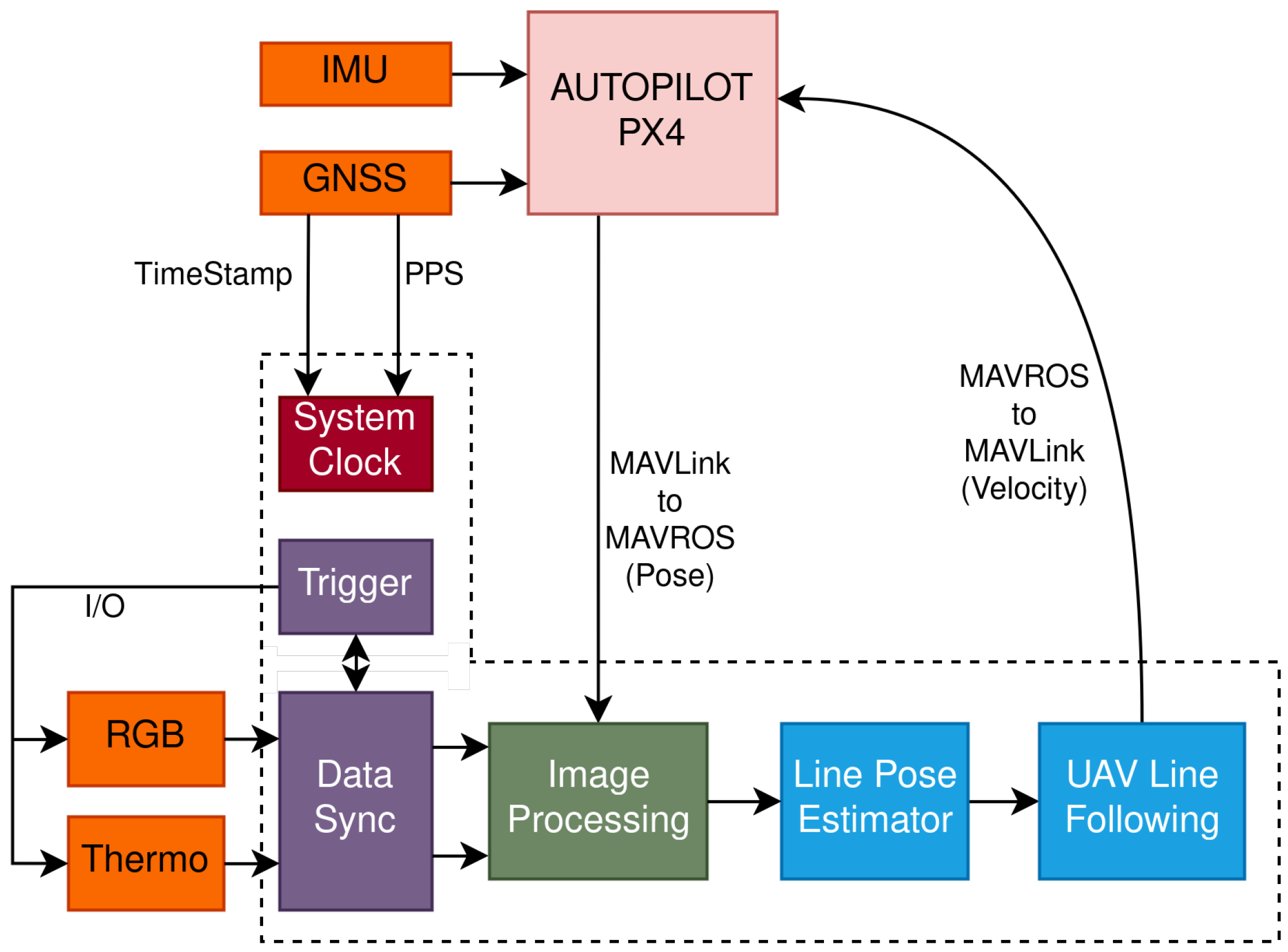

2. Multimodal Power Line Detection Approach

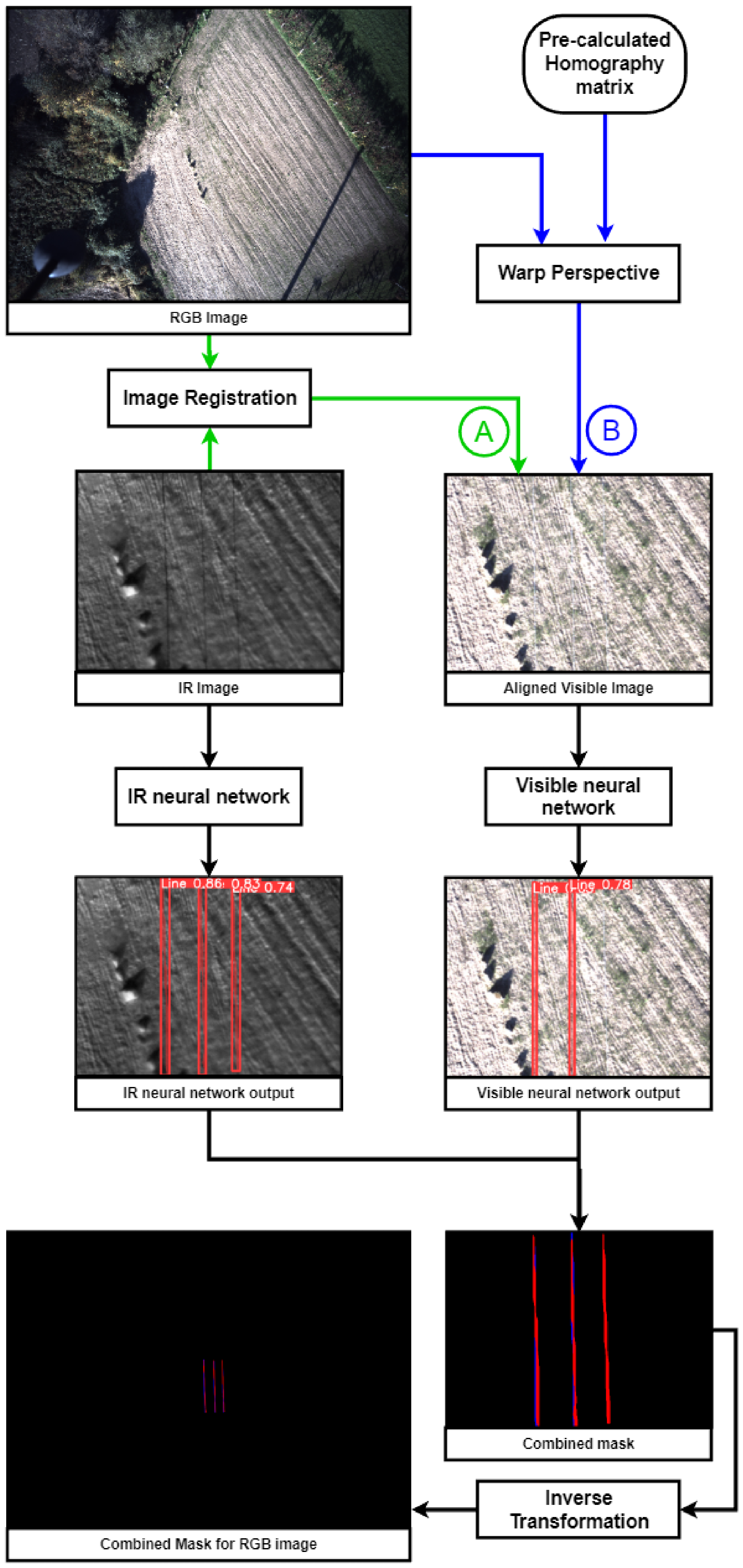

3. Implemented Image Processing Approach

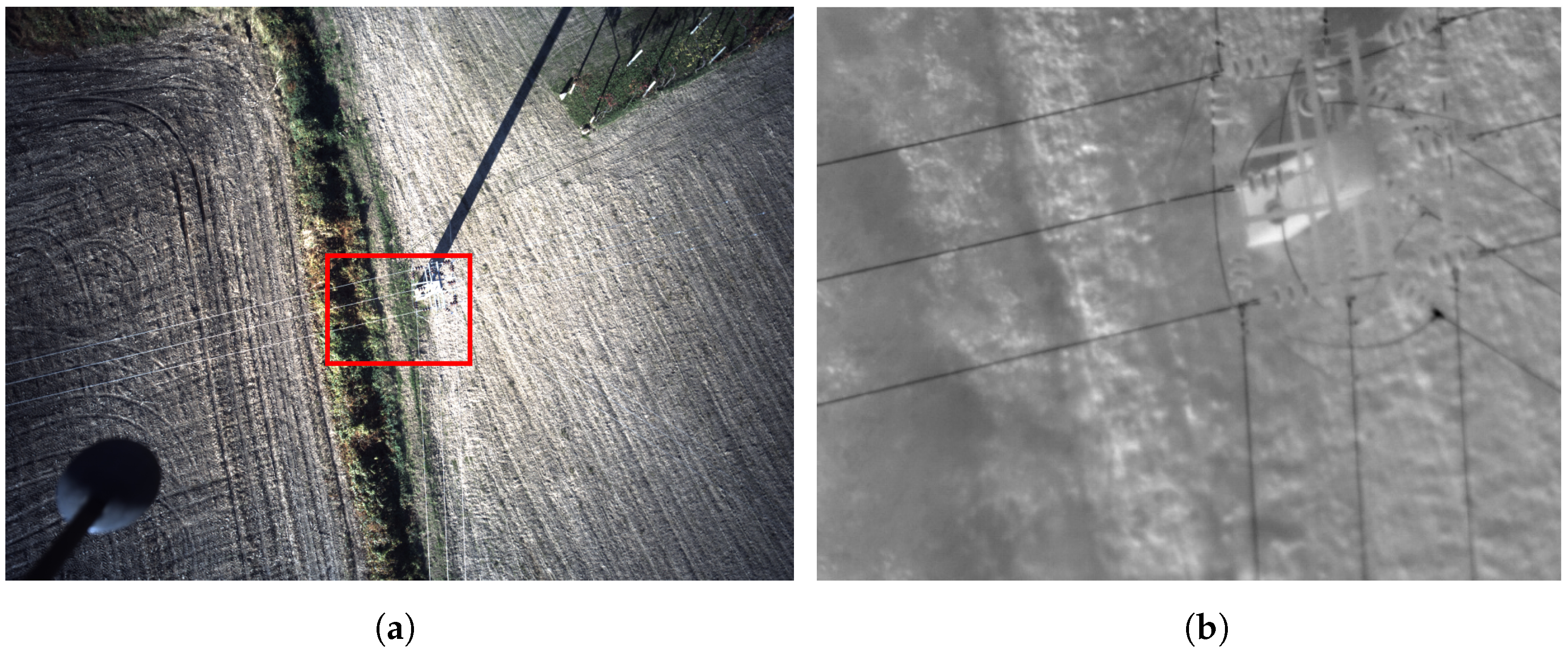

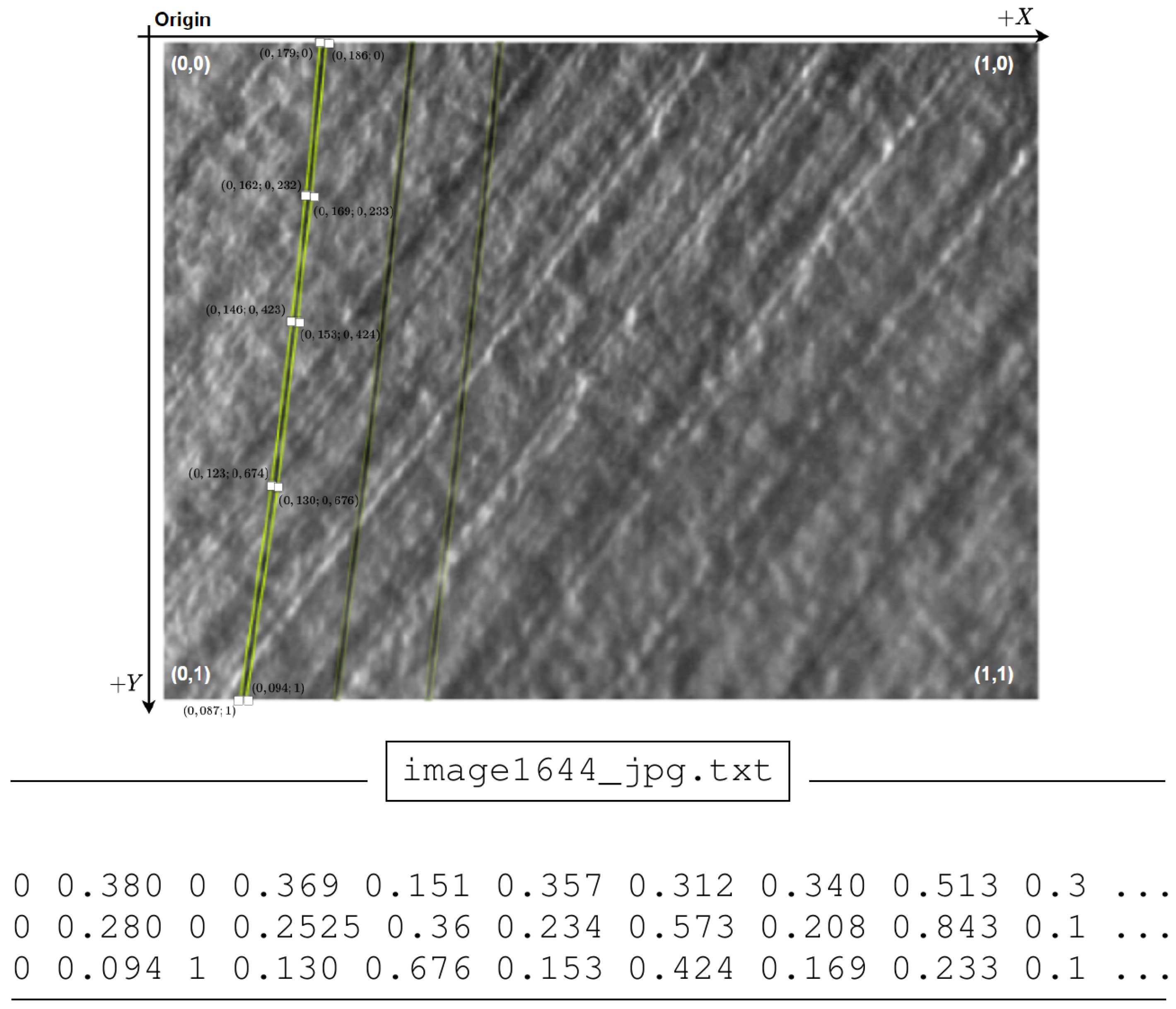

4. Dataset and Data Preparation

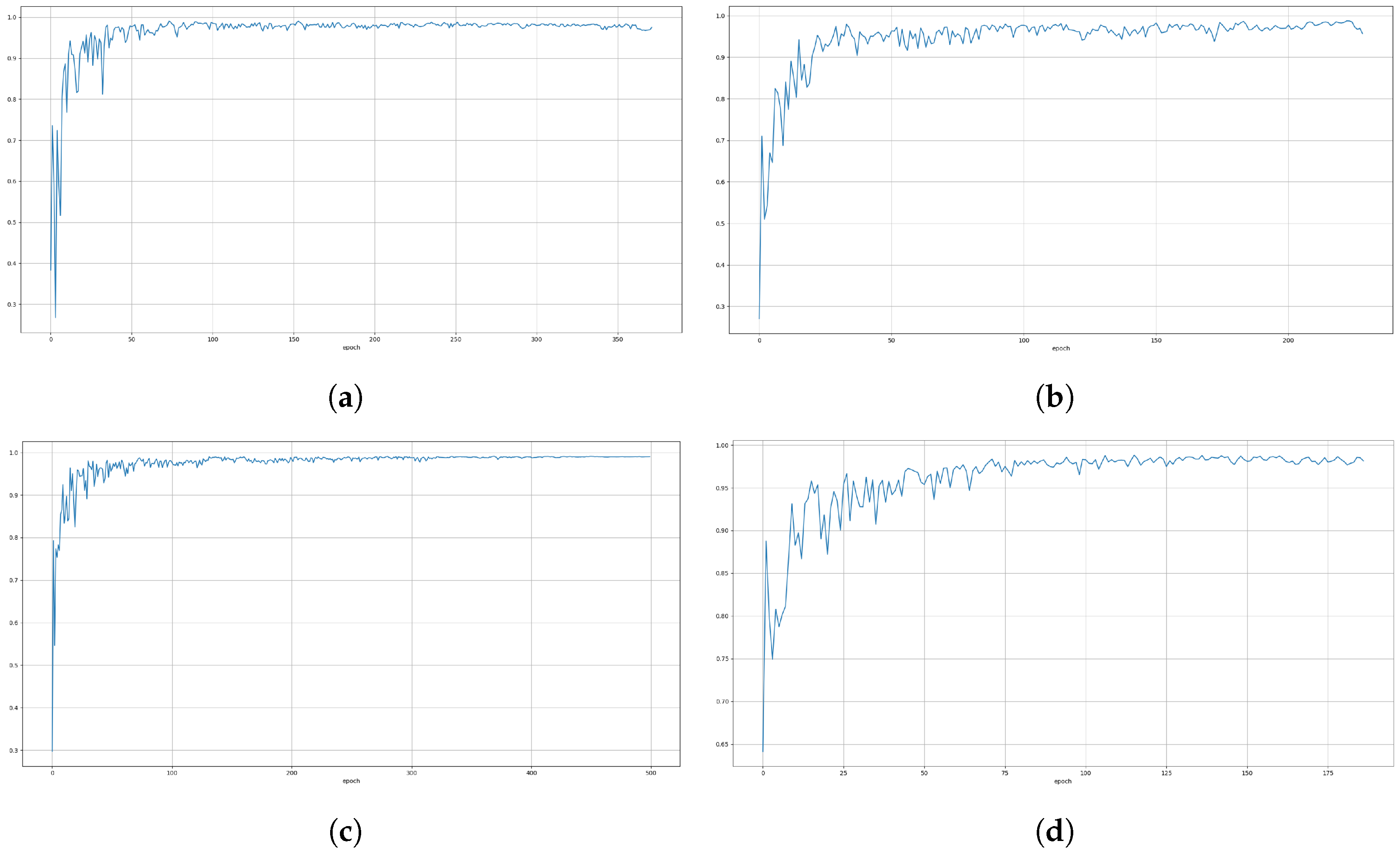

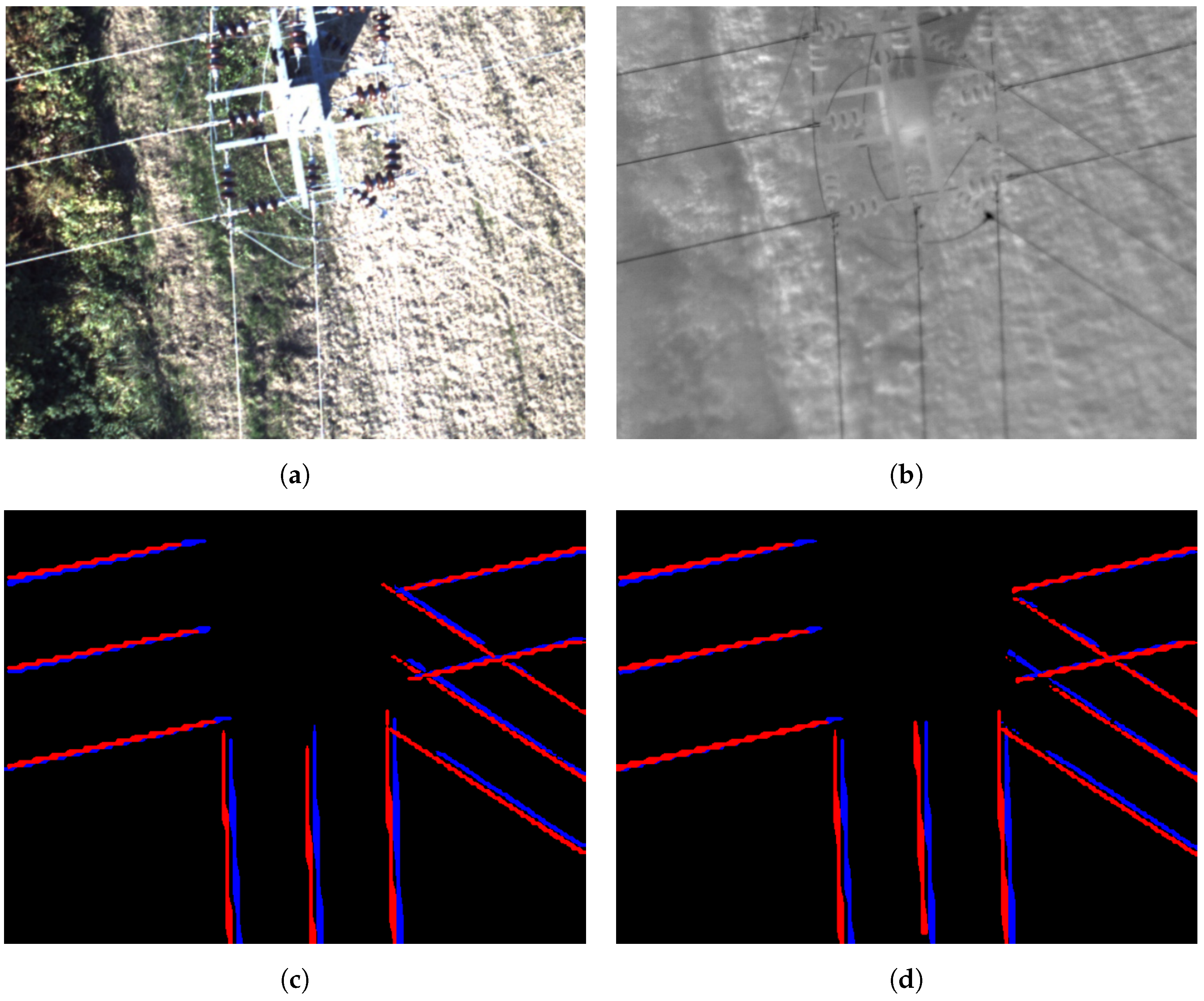
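Ultralytics YOLOv8 segmentation models are trained from one text label file per image, in which each row holds a class index followed by the polygon vertices, with x and y normalized by the image width and height. The source does not specify the annotation tool or export format, so the sketch below assumes power line annotations are available as polygons in pixel coordinates; the input format, the 640 × 512 frame size, and the use of class index 0 for power lines are hypothetical.

```python
def to_yolo_seg_line(class_id, polygon, img_w, img_h):
    """Format one polygon as a row of an Ultralytics YOLO segmentation label file.

    Each row is: <class-index> x1 y1 x2 y2 ... xn yn, with coordinates
    normalized by image width and height. `polygon` is a list of (x, y)
    vertices in pixels (hypothetical input, e.g. exported from an annotation tool).
    """
    if len(polygon) < 3:
        raise ValueError("a segmentation polygon needs at least 3 vertices")
    coords = []
    for x, y in polygon:
        # Normalize to [0, 1] and clamp vertices that fall slightly outside the image.
        nx = min(max(x / img_w, 0.0), 1.0)
        ny = min(max(y / img_h, 0.0), 1.0)
        coords.append(f"{nx:.6f} {ny:.6f}")
    return f"{class_id} " + " ".join(coords)


# Thin, elongated polygon approximating a power line crossing a 640x512 frame;
# class 0 is a hypothetical "power_line" class.
row = to_yolo_seg_line(0, [(0, 250), (640, 240), (640, 244), (0, 254)], 640, 512)
print(row)
```

Because power lines are long and only a few pixels wide, their polygons are very thin, which makes the resulting masks sensitive to small annotation or prediction offsets.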

5. Results and Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | Size (pixels) | Box mAP 50–95 | Mask mAP 50–95 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | Params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|---|
| YOLOv8n-seg | 640 | 36.7 | 30.5 | 96.1 | 1.21 | 3.4 | 12.6 |
| YOLOv8s-seg | 640 | 44.6 | 36.8 | 155.7 | 1.47 | 11.8 | 42.6 |
| YOLOv8m-seg | 640 | 49.9 | 40.8 | 317.0 | 2.18 | 27.3 | 110.2 |
| YOLOv8l-seg | 640 | 52.3 | 42.6 | 572.4 | 2.79 | 46.0 | 220.5 |
| YOLOv8x-seg | 640 | 53.4 | 43.4 | 712.1 | 4.02 | 71.8 | 344.1 |
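The table shows that accuracy grows with model size much more slowly than computational cost: from YOLOv8n-seg to YOLOv8x-seg, mask mAP rises from 30.5 to 43.4 (about 1.4×), while CPU ONNX latency grows from 96.1 ms to 712.1 ms (about 7.4×). The following minimal sketch picks a variant under a per-frame CPU latency budget using only the figures in the table. The budget criterion is a hypothetical illustration, not the selection procedure described by the authors; the timing and accuracy tables that follow report YOLOv8n and YOLOv8s only.

```python
from typing import NamedTuple, Optional


class Variant(NamedTuple):
    name: str
    box_map: float      # box mAP 50-95
    mask_map: float     # mask mAP 50-95
    cpu_onnx_ms: float
    a100_trt_ms: float
    params_m: float
    flops_b: float


# Values copied from the table above.
VARIANTS = [
    Variant("YOLOv8n-seg", 36.7, 30.5, 96.1, 1.21, 3.4, 12.6),
    Variant("YOLOv8s-seg", 44.6, 36.8, 155.7, 1.47, 11.8, 42.6),
    Variant("YOLOv8m-seg", 49.9, 40.8, 317.0, 2.18, 27.3, 110.2),
    Variant("YOLOv8l-seg", 52.3, 42.6, 572.4, 2.79, 46.0, 220.5),
    Variant("YOLOv8x-seg", 53.4, 43.4, 712.1, 4.02, 71.8, 344.1),
]


def best_under_cpu_budget(budget_ms: float) -> Optional[str]:
    """Name of the variant with the highest mask mAP whose CPU ONNX latency fits the budget."""
    feasible = [v for v in VARIANTS if v.cpu_onnx_ms <= budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda v: v.mask_map).name


for budget in (50, 100, 200, 1000):
    print(budget, best_under_cpu_budget(budget))
```

With a 100 ms budget only YOLOv8n-seg qualifies, a 200 ms budget admits YOLOv8s-seg, and no variant fits under 50 ms.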

Processing times on GPU

| Model | Thermal pre-process (ms) | Thermal inference (ms) | Thermal post-process (ms) | Visible pre-process (ms) | Visible inference (ms) | Visible post-process (ms) |
|---|---|---|---|---|---|---|
| YOLOv8n | 2.5 | 8.3 | 0.9 | 2.4 | 7.5 | 0.9 |
| YOLOv8s | 2.5 | 16.6 | 0.8 | 2.6 | 16.6 | 1.0 |

Processing times on CPU

| Model | Thermal pre-process (ms) | Thermal inference (ms) | Thermal post-process (ms) | Visible pre-process (ms) | Visible inference (ms) | Visible post-process (ms) |
|---|---|---|---|---|---|---|
| YOLOv8n | 1.9 | 99.1 | 0.3 | 2.1 | 99.2 | 0.3 |
| YOLOv8s | 2.1 | 213.2 | 0.2 | 2.2 | 210.2 | 0.3 |
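Summing the three stages gives the end-to-end time per image, and its reciprocal gives an upper bound on the frame rate. The sketch below does this arithmetic with the values from the GPU and CPU tables. It assumes, hypothetically, that the stages run back to back with no batching or pipelining, and that both images of a synchronized thermal/visible pair are processed sequentially on the same device.

```python
# Per-stage times in ms (pre-process, inference, post-process), copied from the tables above.
TIMINGS = {
    ("GPU", "thermal", "YOLOv8n"): (2.5, 8.3, 0.9),
    ("GPU", "visible", "YOLOv8n"): (2.4, 7.5, 0.9),
    ("GPU", "thermal", "YOLOv8s"): (2.5, 16.6, 0.8),
    ("GPU", "visible", "YOLOv8s"): (2.6, 16.6, 1.0),
    ("CPU", "thermal", "YOLOv8n"): (1.9, 99.1, 0.3),
    ("CPU", "visible", "YOLOv8n"): (2.1, 99.2, 0.3),
    ("CPU", "thermal", "YOLOv8s"): (2.1, 213.2, 0.2),
    ("CPU", "visible", "YOLOv8s"): (2.2, 210.2, 0.3),
}


def total_ms(device, modality, model):
    """End-to-end time for one image, assuming the three stages run back to back."""
    return sum(TIMINGS[(device, modality, model)])


def fps(latency_ms):
    """Frames per second implied by a per-frame latency (ignores batching and overlap)."""
    return 1000.0 / latency_ms


for device in ("GPU", "CPU"):
    for model in ("YOLOv8n", "YOLOv8s"):
        thermal = total_ms(device, "thermal", model)
        visible = total_ms(device, "visible", model)
        # Hypothetical scenario: both images of a synchronized pair processed sequentially.
        pair = thermal + visible
        print(f"{device} {model}: thermal {thermal:.1f} ms, visible {visible:.1f} ms, "
              f"pair {pair:.1f} ms (~{fps(pair):.1f} pairs/s)")
```

Under these assumptions, YOLOv8n on the GPU handles a thermal/visible pair in about 22.5 ms (roughly 44 pairs per second), whereas YOLOv8s on the CPU needs about 428 ms per pair (about 2.3 pairs per second). In every configuration, inference accounts for most of the total time.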

Thermal images

| Model | Detected Instances | Box mAP@0.5 (%) | Box mAP@[0.5:0.95] (%) | Mask mAP@0.5 (%) | Mask mAP@[0.5:0.95] (%) |
|---|---|---|---|---|---|
| YOLOv8n | 141 | 98.4 | 93.0 | 98.4 | 65.2 |
| YOLOv8s | 141 | 96.9 | 88.9 | 96.9 | 62.3 |

Visible images

| Model | Detected Instances | Box mAP@0.5 (%) | Box mAP@[0.5:0.95] (%) | Mask mAP@0.5 (%) | Mask mAP@[0.5:0.95] (%) |
|---|---|---|---|---|---|
| YOLOv8n | 135 | 97.0 | 86.7 | 91.8 | 47.3 |
| YOLOv8s | 135 | 95.7 | 85.5 | 90.5 | 46.2 |
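mAP@[0.5:0.95] is the mean of the average precision computed at ten IoU thresholds, from 0.50 to 0.95 in steps of 0.05, so it rewards precise localization far more than mAP@0.5. In both tables the mask scores fall much more between the two metrics than the box scores (thermal YOLOv8n: box 98.4 to 93.0, mask 98.4 to 65.2). One plausible contributor, offered as an illustration rather than an analysis of the authors' data, is that a power line mask is only a few pixels wide, so even a one-pixel offset in the predicted mask sharply reduces its IoU. The sketch below assumes a hypothetical 3-pixel-wide, 200-pixel-long horizontal line and represents masks as sets of pixel coordinates.

```python
def mask_iou(a, b):
    """Intersection over union of two binary masks given as sets of (row, col) pixels."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def horizontal_band(row, width, length):
    """Hypothetical power-line mask: a horizontal band `width` pixels thick."""
    return {(r, c) for r in range(row, row + width) for c in range(length)}


# The ten IoU thresholds averaged by mAP@[0.5:0.95]: 0.50, 0.55, ..., 0.95.
THRESHOLDS = [round(0.50 + 0.05 * k, 2) for k in range(10)]

gt = horizontal_band(row=100, width=3, length=200)
for offset in (0, 1, 2):
    pred = horizontal_band(row=100 + offset, width=3, length=200)
    iou = mask_iou(gt, pred)
    passed = sum(iou >= t for t in THRESHOLDS)
    print(f"shift {offset} px: IoU = {iou:.2f}, matches at {passed}/10 thresholds")
```

A perfectly aligned mask has IoU 1.00 and counts as a match at all ten thresholds; a one-pixel shift drops IoU to 0.50, a match only at the 0.50 threshold; a two-pixel shift gives IoU 0.20 and no match at any threshold. Such a prediction would still count fully toward mAP@0.5 in the first two cases but contribute little to mAP@[0.5:0.95].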


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Santos, T.; Cunha, T.; Dias, A.; Moreira, A.P.; Almeida, J. UAV Visual and Thermographic Power Line Detection Using Deep Learning. Sensors 2024, 24, 5678. https://doi.org/10.3390/s24175678
