Enhancing Grapevine Node Detection to Support Pruning Automation: Leveraging State-of-the-Art YOLO Detection Models for 2D Image Analysis

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions

- Nodes are detected with the further objective of applying pruning rules to them;

- The algorithm detects nodes even when the entire grapevine is in view;

- The detection model does not require an artificial background, which ensures acceptable accuracy, practicality, and versatility in real-world vineyard environments, where natural backgrounds vary widely;

- The models can be deployed on a real-time system, since their inference times do not compromise execution of the pruning task.

2. Materials and Methods

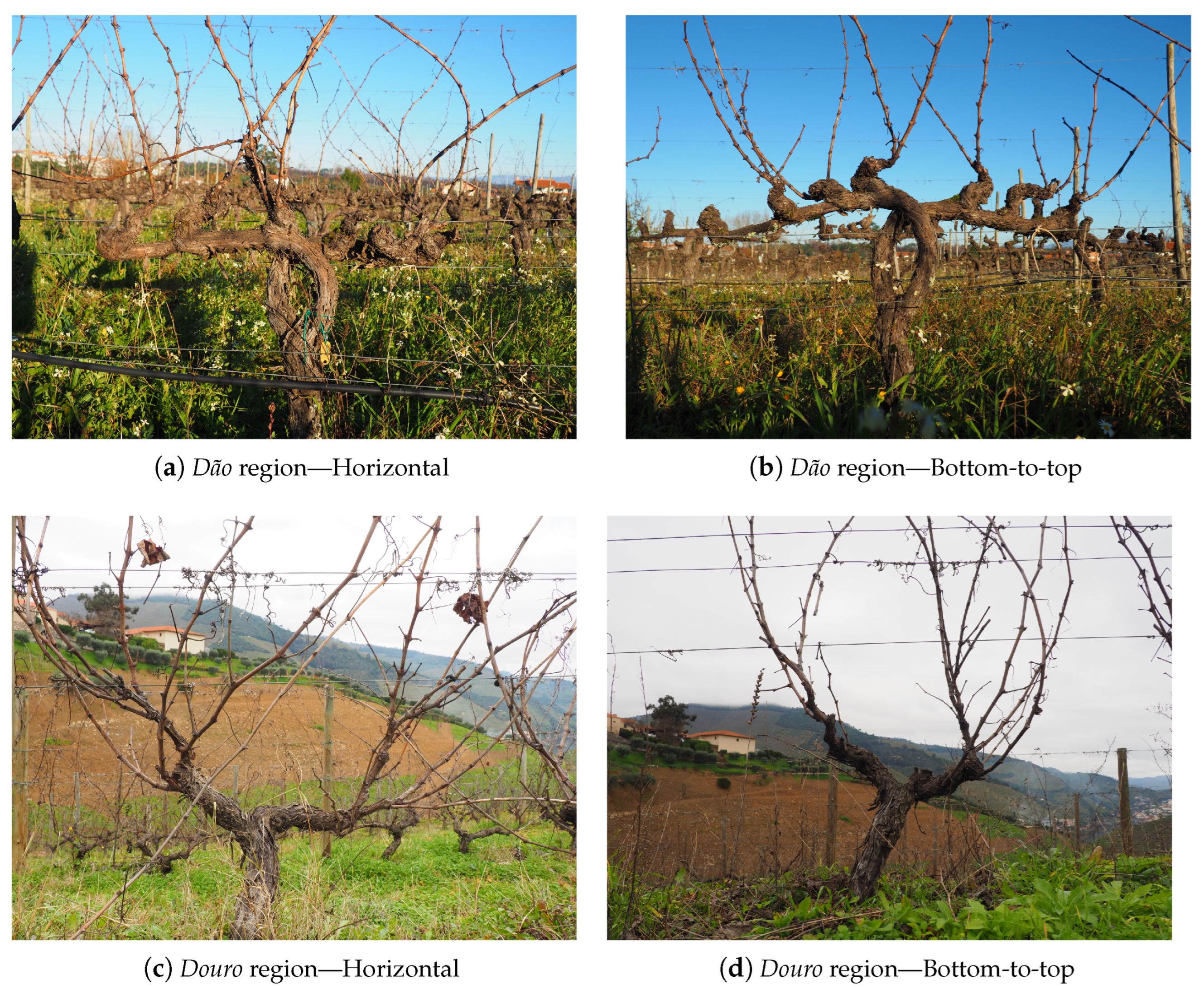

2.1. Datasets

2.2. Deep Learning Models

2.3. Training Configuration and Augmentations

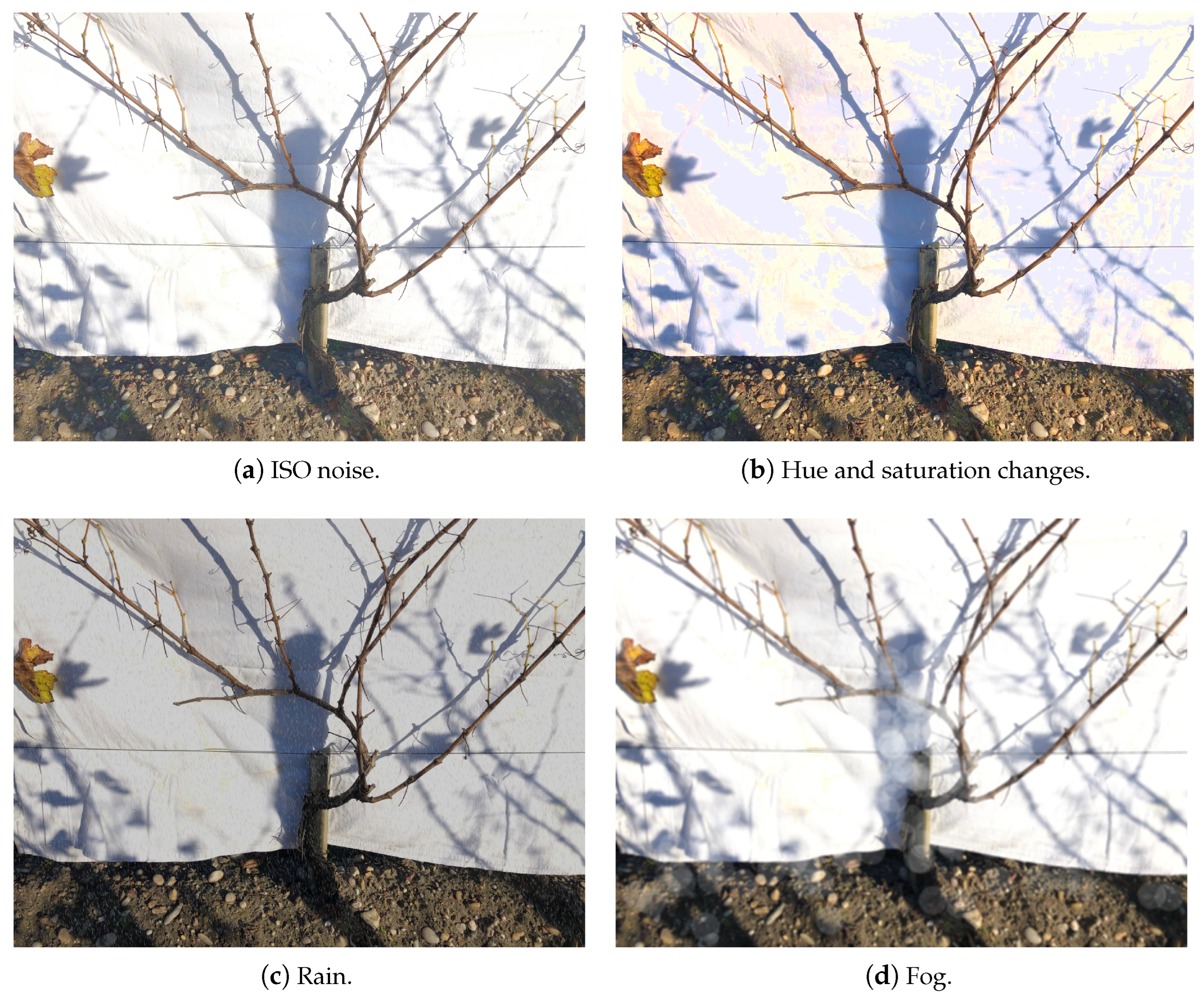

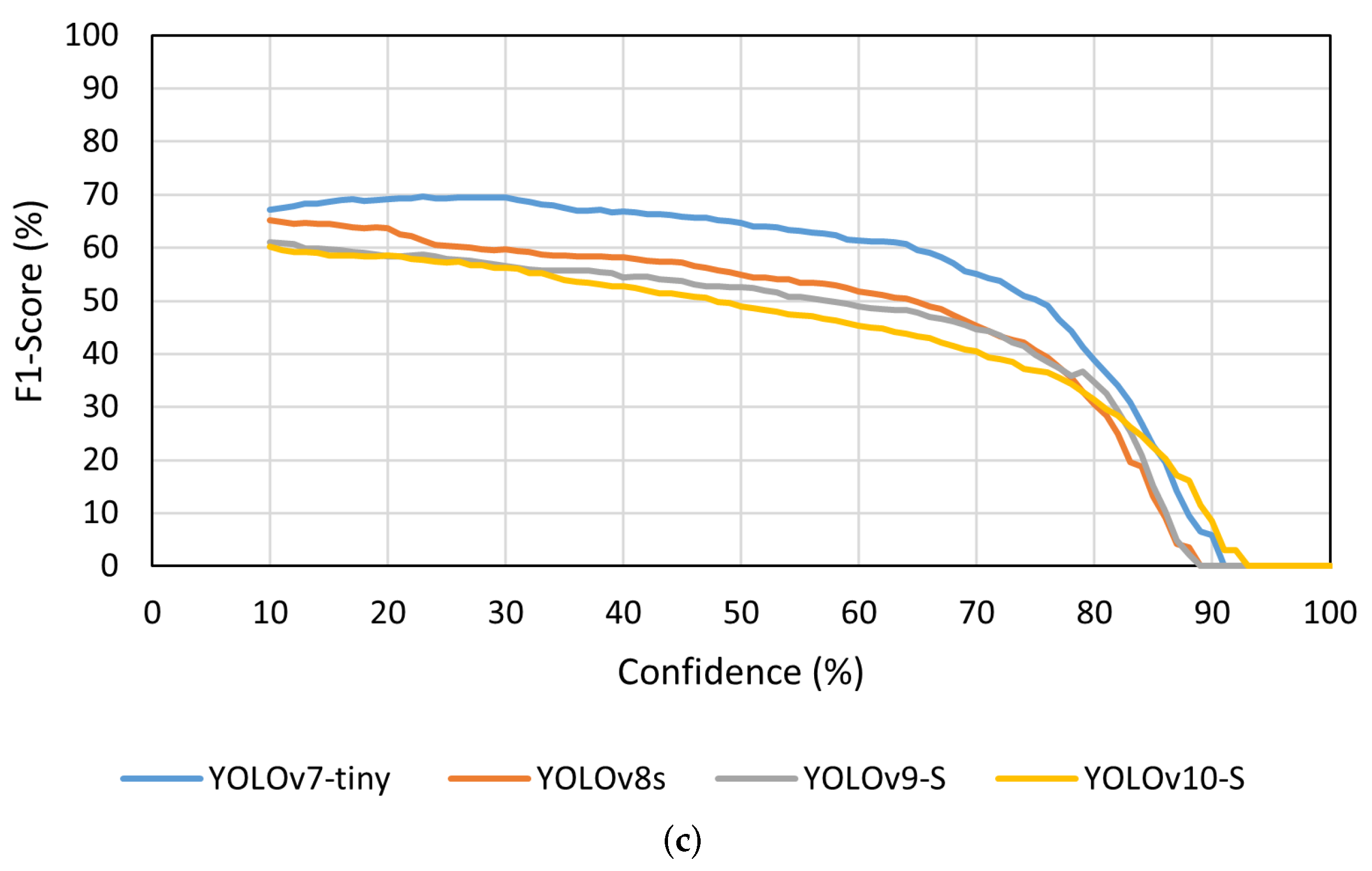

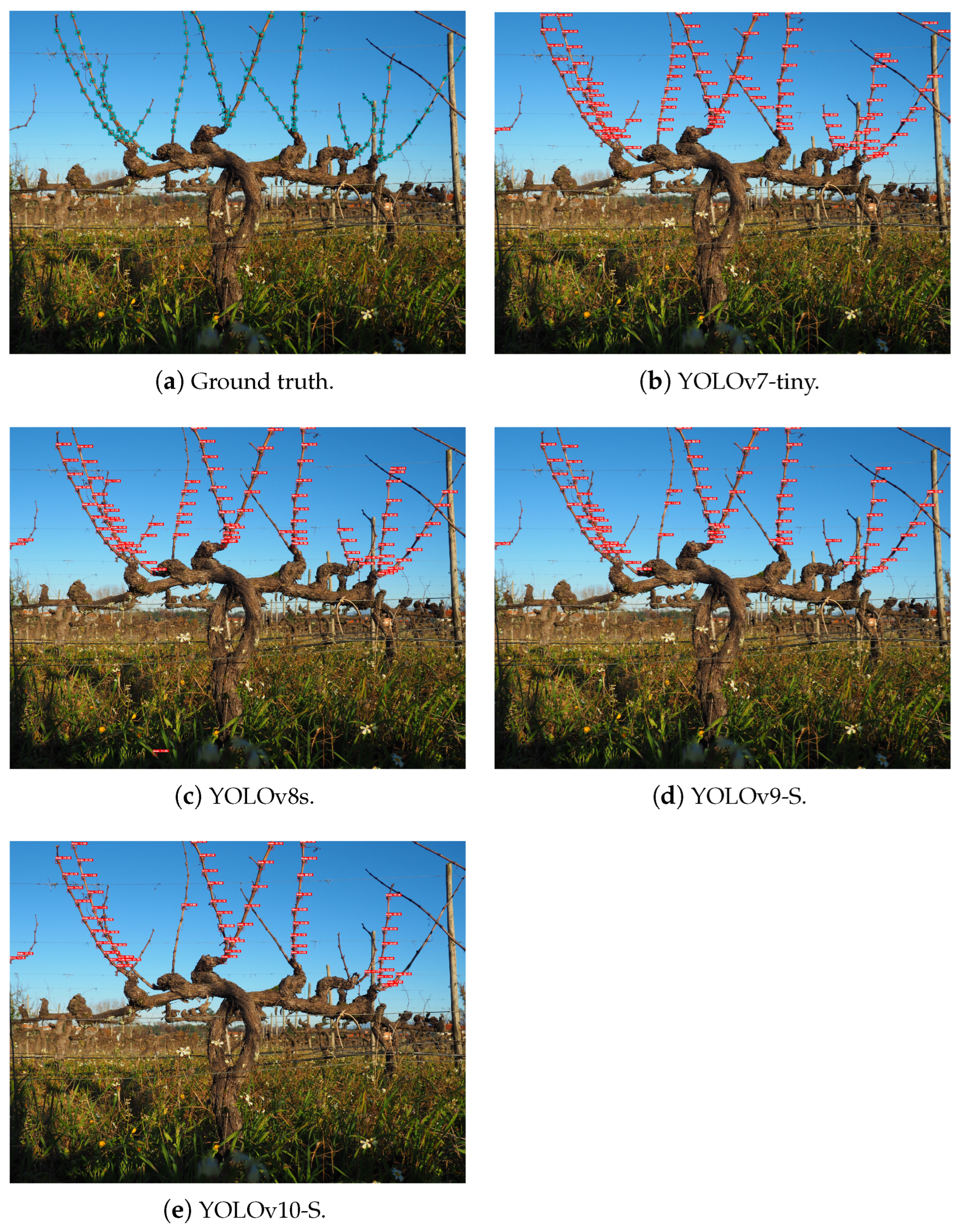

3. Results

Validation and Field Trials

4. Discussion

4.1. Adaptability to Different Grapevine Configurations and Environments

4.2. Capability of Implementation in a Real-Time System

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2D | Two-dimensional |
| AP | Average Precision |
| CIoU | Complete Intersection over Union |
| CNN | Convolutional Neural Network |
| CVAT | Computer Vision Annotation Tool |
| DFL | Distribution Focal Loss |
| FCN | Fully Convolutional Network |
| FLOPs | Floating-Point Operations |
| GELAN | Generalized Efficient Layer Aggregation Network |
| mAP | Mean Average Precision |
| MS COCO | Microsoft Common Objects in Context |
| NMS | Non-Maximum Suppression |
| PGI | Programmable Gradient Information |
| SHG | Stacked Hourglass Network |
| YOLO | You Only Look Once |

| Augmentation Operation | Values | Description |
|---|---|---|
| Horizontal Flip | - | Flips the image horizontally |
| Scale | 0.7× | Scales the image down by 30% |
| | 1.3× | Scales the image up by 30% |
| Rotation | −15° | Rotates the image by −15° |
| | +15° | Rotates the image by +15° |
| Hue, Saturation and Value | −15 ≤ hue ≤ 1; −20 ≤ saturation ≤ 20; −30 ≤ value ≤ 30 | Changes the image’s hue, saturation and value levels |
| CLAHE | contrast limit = 4; grid size = 8 × 8 | Applies Contrast Limited Adaptive Histogram Equalization to the image |
| Emboss | 0.4 ≤ alpha ≤ 0.6; 0.5 ≤ strength ≤ 1.5 | Embosses the image and overlays the result on the original image |
| Sharpen | 0.2 ≤ alpha ≤ 0.5; 0.5 ≤ lightness ≤ 1.5 | Sharpens the image and overlays the result on the original image |
| Optical Distortion | −0.15 | Applies negative optical distortion to the image |
| | +0.15 | Applies positive optical distortion to the image |
| Gaussian Blur | blur ≤ 7; ≤ 5 | Blurs the image using a Gaussian filter |
| Glass Blur | = 0.5; = 2 | Applies glass blur to the image |
| ISO Noise | colour shift = 0.15; intensity = 0.6 | Applies ISO noise to the image |
| Random Rain | - | Adds random rain to the image |
| Random Fog | - | Adds random fog to the image |
| Random Snow | - | Adds random snow to the image |
| Spatter Mud | - | Adds mud spatter to the image |
| Spatter Rain | - | Adds rain spatter to the image |
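Of the operations listed above, the geometric ones (horizontal flip, scaling, rotation, optical distortion) also move the node bounding boxes, so the labels must be transformed together with the image; the photometric and weather effects leave them unchanged. The following is a minimal sketch, not the authors' pipeline, for the flip and the ±15° rotation. It assumes YOLO-format labels (normalised centre, width, height), rotation about the image centre on a canvas of unchanged size, and positive angles meaning clockwise rotation in image coordinates (y axis pointing down); the helper names `hflip_box` and `rotate_box` are hypothetical.

```python
import math
from typing import Tuple

# YOLO-format box: (x_centre, y_centre, width, height), normalised to [0, 1].
Box = Tuple[float, float, float, float]


def hflip_box(box: Box) -> Box:
    """Mirror a normalised box to match a horizontally flipped image."""
    xc, yc, w, h = box
    return (1.0 - xc, yc, w, h)


def rotate_box(box: Box, angle_deg: float, img_w: int, img_h: int) -> Box:
    """Rotate a box about the image centre and return its enclosing axis-aligned box.

    Assumes the rotated image keeps the original canvas size; the result is
    clipped to the image borders. Positive angles rotate clockwise on screen
    because the image y axis points down.
    """
    xc, yc, w, h = box
    # Work in pixels so that non-square images rotate correctly.
    cx, cy, bw, bh = xc * img_w, yc * img_h, w * img_w, h * img_h
    corners = [
        (cx - bw / 2, cy - bh / 2),
        (cx + bw / 2, cy - bh / 2),
        (cx + bw / 2, cy + bh / 2),
        (cx - bw / 2, cy + bh / 2),
    ]
    ox, oy = img_w / 2, img_h / 2
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    rotated = [
        (ox + (x - ox) * cos_a - (y - oy) * sin_a,
         oy + (x - ox) * sin_a + (y - oy) * cos_a)
        for x, y in corners
    ]
    # Enclosing axis-aligned box of the rotated corners, clipped to the image.
    xs = [min(max(x, 0.0), img_w) for x, _ in rotated]
    ys = [min(max(y, 0.0), img_h) for _, y in rotated]
    x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
    return ((x0 + x1) / 2 / img_w, (y0 + y1) / 2 / img_h,
            (x1 - x0) / img_w, (y1 - y0) / img_h)


if __name__ == "__main__":
    node = (0.30, 0.40, 0.05, 0.05)  # hypothetical node label
    print(hflip_box(node))
    print(rotate_box(node, 15, 640, 640))
    print(rotate_box(node, -15, 640, 640))
```

The enclosing box of a rotated rectangle is a loose fit that grows with the angle; this is a common simplification when only axis-aligned labels are available, and it stays modest at ±15°.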

| Parameter | YOLOv7-tiny | YOLOv8s | YOLOv9-S | YOLOv10-S |
|---|---|---|---|---|
| Input Size | 640 × 640 px | 640 × 640 px | 640 × 640 px | 640 × 640 px |
| Batch Size | 16 | 16 | 16 | 16 |
| Initial Learning Rate | 0.01 | 0.01 | 0.01 | 0.01 |
| Final Learning Rate | 0.0002 | 0.0002 | 0.0002 | 0.002 |
| Optimizer | AdamW | AdamW | AdamW | AdamW |
| Cos_lr Function | False | True | True | True |
| Cls_mosaic Parameter | - | 50 | 50 | 50 |
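The training table above lists initial and final learning rates, a `Cos_lr` flag and a `Cls_mosaic` value. A minimal sketch of how such settings commonly interact follows. It assumes that `Cos_lr` selects a half-cosine rather than a linear decay, that `Cls_mosaic` is the number of final epochs trained with mosaic augmentation disabled (as with the `close_mosaic` setting in Ultralytics), and that the final learning rate is an absolute value reached at the end of training (in Ultralytics, `lrf` is instead a fraction of the initial rate). Warm-up is ignored, and the 300-epoch total is hypothetical because the table does not give the number of epochs.

```python
import math


def lr_at_epoch(epoch: int, total_epochs: int, lr0: float, lr_final: float,
                cosine: bool) -> float:
    """Learning rate decaying from lr0 at epoch 0 to lr_final at epoch total_epochs.

    cosine=True follows a half-cosine curve; otherwise the decay is linear.
    Warm-up is deliberately ignored in this sketch.
    """
    t = min(max(epoch / total_epochs, 0.0), 1.0)  # training progress in [0, 1]
    factor = (1.0 - math.cos(math.pi * t)) / 2.0 if cosine else t
    return lr0 + (lr_final - lr0) * factor


def mosaic_enabled(epoch: int, total_epochs: int, close_mosaic: int) -> bool:
    """Mosaic augmentation stays on except during the last `close_mosaic` epochs.

    Epochs are counted from 0, so with 300 epochs and close_mosaic=50 the
    last epoch that still uses mosaic is epoch 249.
    """
    return epoch < total_epochs - close_mosaic


if __name__ == "__main__":
    EPOCHS = 300  # hypothetical; the table does not state the number of epochs
    for e in (0, 150, 250, 299):
        print(e,
              round(lr_at_epoch(e, EPOCHS, 0.01, 0.0002, cosine=True), 6),
              round(lr_at_epoch(e, EPOCHS, 0.01, 0.0002, cosine=False), 6),
              mosaic_enabled(e, EPOCHS, close_mosaic=50))
```

Both schedules start and end at the same values; the cosine curve keeps the learning rate higher early in training and flattens near the end, which is one reason it is often paired with a mosaic-free final phase for fine adjustment on realistic images.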

| Dataset | Model | Input Size (px) | Precision (Confidence ≥ 10%) | Recall (Confidence ≥ 10%) | F1-Score (Confidence ≥ 10%) | mAP@50 (Confidence ≥ 10%) | Precision (Best F1-Score) | Recall (Best F1-Score) | F1-Score (Best F1-Score) | mAP@50 (Best F1-Score) | Average IoU |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 3D2cut | YOLOv7-tiny | 640 × 640 | 84.5% | 86.8% | 85.6% | 83.4% | 84.5% | 86.8% | 85.6% | 83.4% | 78.6% |
| 3D2cut | YOLOv8s | 640 × 640 | 90.9% | 73.8% | 81.5% | 71.3% | 90.9% | 73.8% | 81.5% | 71.3% | 80.5% |
| 3D2cut | YOLOv9-S | 640 × 640 | 91.1% | 76.2% | 83% | 74.6% | 91.1% | 76.2% | 83% | 74.6% | 80.9% |
| 3D2cut | YOLOv10-S | 640 × 640 | 87.9% | 77.3% | 82.3% | 75.3% | 87.9% | 77.3% | 82.3% | 75.3% | 80.6% |
| 3D2cut | YOLOv7-tiny | 1280 × 1280 | 71.8% | 92.6% | 80.9% | 86.6% | 88.8% | 84.3% | 86.5% | 80.1% | 76.3% |
| 3D2cut | YOLOv8s | 1280 × 1280 | 76.3% | 91.4% | 83.2% | 84.9% | 87.6% | 86.8% | 87.2% | 80.8% | 77% |
| 3D2cut | YOLOv9-S | 1280 × 1280 | 78.4% | 93.1% | 85.1% | 88.5% | 84.1% | 90.5% | 87.2% | 86.1% | 78.3% |
| 3D2cut | YOLOv10-S | 1280 × 1280 | 80.6% | 91.4% | 85.7% | 86.8% | 85.8% | 89.8% | 87.8% | 85.2% | 79.1% |
| Dão | YOLOv7-tiny | 640 × 640 | 79% | 49.5% | 60.8% | 44.5% | 79% | 49.5% | 60.8% | 44.5% | 70.8% |
| Dão | YOLOv8s | 640 × 640 | 85.5% | 19% | 31.1% | 18.8% | 85.5% | 19% | 31.1% | 18.8% | 69.6% |
| Dão | YOLOv9-S | 640 × 640 | 76.6% | 16% | 26.4% | 13.9% | 76.6% | 16% | 26.4% | 13.9% | 63.2% |
| Dão | YOLOv10-S | 640 × 640 | 78.9% | 13.9% | 23.7% | 12% | 78.9% | 13.9% | 23.7% | 12% | 67.2% |
| Dão | YOLOv7-tiny | 1280 × 1280 | 73.4% | 70.4% | 71.8% | 61.5% | 76.4% | 69.7% | 72.9% | 60.8% | 74.8% |
| Dão | YOLOv8s | 1280 × 1280 | 76.4% | 64.7% | 70% | 53.9% | 76.4% | 64.7% | 70% | 53.9% | 73.6% |
| Dão | YOLOv9-S | 1280 × 1280 | 79.3% | 61.4% | 69.2% | 50.8% | 79.3% | 61.4% | 69.2% | 50.8% | 72.2% |
| Dão | YOLOv10-S | 1280 × 1280 | 80% | 55.4% | 65.5% | 46.1% | 80% | 55.4% | 65.5% | 46.1% | 73.3% |
| Douro | YOLOv7-tiny | 640 × 640 | 72.2% | 47% | 56.9% | 42% | 72.2% | 47% | 56.9% | 42% | 66.5% |
| Douro | YOLOv8s | 640 × 640 | 67.3% | 18.7% | 29.2% | 14.6% | 67.3% | 18.7% | 29.2% | 14.6% | 61.7% |
| Douro | YOLOv9-S | 640 × 640 | 70.6% | 19.9% | 31% | 15.9% | 70.6% | 19.9% | 31% | 15.8% | 64.1% |
| Douro | YOLOv10-S | 640 × 640 | 68.5% | 18.9% | 29.6% | 14.5% | 68.5% | 18.9% | 29.6% | 14.5% | 62.4% |
| Douro | YOLOv7-tiny | 1280 × 1280 | 63.9% | 70.6% | 67.1% | 62.8% | 74.2% | 65.5% | 69.6% | 59.3% | 68.5% |
| Douro | YOLOv8s | 1280 × 1280 | 72.7% | 58.9% | 65.1% | 52% | 72.7% | 58.9% | 65.1% | 52% | 68.2% |
| Douro | YOLOv9-S | 1280 × 1280 | 71.1% | 53.5% | 61.1% | 46.4% | 71.1% | 53.5% | 61.1% | 46.4% | 67% |
| Douro | YOLOv10-S | 1280 × 1280 | 77.4% | 49.2% | 60.2% | 44.9% | 77.4% | 49.2% | 60.2% | 44.9% | 69.2% |
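The results table reports each metric at two operating points: with a fixed confidence threshold of 10% and at the threshold that maximises the F1-score. The sketch below illustrates these quantities under generic assumptions: IoU between axis-aligned boxes, F1 as the harmonic mean of precision and recall, and a threshold sweep that picks the best-F1 operating point from detections already labelled as true or false positives. The matching rule (for example IoU ≥ 0.5, as implied by mAP@50) and the function names are assumptions, not the evaluation code used in the paper.

```python
from typing import Sequence, Tuple

# Axis-aligned box in pixel corner format: (x1, y1, x2, y2).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (works for fractions or percentages)."""
    total = precision + recall
    return 2.0 * precision * recall / total if total > 0 else 0.0


def best_f1_threshold(scores: Sequence[float], is_tp: Sequence[bool],
                      n_ground_truth: int) -> Tuple[float, float]:
    """Return (confidence threshold, F1) at the operating point with the highest F1.

    is_tp[i] states whether detection i was matched to a ground-truth node
    (for example with IoU >= 0.5); the matching step itself is not shown.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    best_thr, best_f1 = 1.0, 0.0
    tp = 0
    for k, i in enumerate(order):
        tp += int(is_tp[i])
        # Evaluate only after every detection sharing this score is included,
        # because a threshold cannot separate equal scores.
        if k + 1 < len(order) and scores[order[k + 1]] == scores[i]:
            continue
        precision = tp / (k + 1)
        recall = tp / n_ground_truth if n_ground_truth > 0 else 0.0
        f1 = f1_score(precision, recall)
        if f1 > best_f1:
            best_thr, best_f1 = scores[i], f1
    return best_thr, best_f1


if __name__ == "__main__":
    print(round(f1_score(84.5, 86.8), 1))  # first 3D2cut row of the table
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
    print(best_f1_threshold([0.9, 0.8, 0.6, 0.3], [True, True, False, True], 4))
```

For example, `f1_score(84.5, 86.8)` rounds to 85.6, matching the first 3D2cut row; recomputing F1 from other rows may differ slightly from the reported value because the table entries are themselves rounded.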

| Model | Input Size (px) | Average Inference Time (ms) |
|---|---|---|
| YOLOv7-tiny | 640 × 640 | 20.52 |
| YOLOv8s | 640 × 640 | 323.79 |
| YOLOv9-S | 640 × 640 | 63.82 |
| YOLOv10-S | 640 × 640 | 51.59 |
| YOLOv7-tiny | 1280 × 1280 | 88.79 |
| YOLOv8s | 1280 × 1280 | 502.52 |
| YOLOv9-S | 1280 × 1280 | 288.53 |
| YOLOv10-S | 1280 × 1280 | 260.21 |
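To relate the inference times above to the real-time requirement, a latency in milliseconds can be converted into sequential throughput (frames per second) and compared with a per-frame budget. The sketch below does this for the tabulated values. The 100 ms budget is hypothetical; the acceptable latency depends on the robot's motion and on the hardware, which this table does not specify. Batching and pipelined capture are ignored.

```python
from typing import Dict, List, Tuple

# Average inference times (ms) copied from the table above,
# keyed by (model, input size in px).
INFERENCE_MS: Dict[Tuple[str, int], float] = {
    ("YOLOv7-tiny", 640): 20.52,
    ("YOLOv8s", 640): 323.79,
    ("YOLOv9-S", 640): 63.82,
    ("YOLOv10-S", 640): 51.59,
    ("YOLOv7-tiny", 1280): 88.79,
    ("YOLOv8s", 1280): 502.52,
    ("YOLOv9-S", 1280): 288.53,
    ("YOLOv10-S", 1280): 260.21,
}


def throughput_fps(latency_ms: float) -> float:
    """Frames per second when images are processed one after another."""
    return 1000.0 / latency_ms


def within_budget(budget_ms: float) -> List[Tuple[str, int]]:
    """Configurations whose average latency fits a per-frame time budget."""
    return [cfg for cfg, ms in INFERENCE_MS.items() if ms <= budget_ms]


if __name__ == "__main__":
    BUDGET_MS = 100.0  # hypothetical real-time budget, not taken from the paper
    for (model, size), ms in INFERENCE_MS.items():
        print(f"{model} @ {size} px: {throughput_fps(ms):.1f} FPS")
    print(within_budget(BUDGET_MS))
```

Under that assumed budget, YOLOv7-tiny (at both input sizes) and YOLOv9-S and YOLOv10-S at 640 × 640 qualify, while YOLOv8s does not at either size.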

| Model | Number of Parameters | FLOPs |
|---|---|---|
| YOLOv7-tiny | 6.2 M | 13.8 G |
| YOLOv8s | 11.2 M | 28.6 G |
| YOLOv9-S | 7.1 M | 26.4 G |
| YOLOv10-S | 7.2 M | 21.6 G |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oliveira, F.; da Silva, D.Q.; Filipe, V.; Pinho, T.M.; Cunha, M.; Cunha, J.B.; dos Santos, F.N. Enhancing Grapevine Node Detection to Support Pruning Automation: Leveraging State-of-the-Art YOLO Detection Models for 2D Image Analysis. Sensors 2024, 24, 6774. https://doi.org/10.3390/s24216774
