Abstract

Car-sharing systems require accurate demand prediction to ensure efficient resource allocation and scheduling decisions. However, developing precise predictive models for vehicle demand remains a challenging problem due to the complex spatio-temporal relationships. This paper introduces USTIN, the Unified Spatio-Temporal Inference Prediction Network, a novel neural network architecture for demand prediction. The model consists of three key components: a temporal feature unit, a spatial feature unit, and a spatio-temporal feature unit. The temporal unit utilizes historical demand data and comprises four layers, each corresponding to a different time scale (hourly, daily, weekly, and monthly). Meanwhile, the spatial unit incorporates contextual points of interest data to capture geographic demand factors around parking stations. Additionally, the spatio-temporal unit incorporates weather data to model the meteorological impacts across locations and time. We conducted extensive experiments on real-world car-sharing data. The proposed USTIN model demonstrated its ability to effectively learn intricate temporal, spatial, and spatiotemporal relationships, and outperformed existing state-of-the-art approaches. Moreover, we employed negative binomial regression with uncertainty to identify the most influential factors affecting car usage.

1. Introduction

Car-sharing companies have gained significant popularity in modern society due to their cost-effectiveness and convenience, providing a flexible alternative to traditional car ownership. These services alleviate various issues related to lease payments, maintenance, and parking, making them an appealing option for users seeking a hassle-free mobility solution. Beyond individual benefits, these systems contribute to reduced traffic congestion, lower carbon emissions, and minimized air pollution, positioning them as a sustainable and environmentally friendly transportation option.

However, the spatial and temporal distribution of cars across company parking stations presents a critical challenge for car-sharing firms. Accurate demand prediction is essential for optimizing resource allocation, enhancing rental rates, and improving customer satisfaction. To address these challenges, these companies leverage GPS tracking data to predict demand patterns and allocate resources effectively. These data contain a wide range of factors, such as temporal features (e.g., the average demand value in the last four time intervals), spatial features (e.g., longitude and latitude of the parking station), meteorological features (e.g., weather conditions), event features (e.g., holidays), and categories of points of interest near every station []. Various techniques, including predictive analytics and machine learning algorithms, aid in identifying demand trends and patterns, enabling companies to adjust their operations accordingly.

To ensure a balanced distribution of cars across various parking lots throughout the day, we propose a comprehensive Unified Spatio-Temporal Inference Prediction Network (USTIN) model. The USTIN model is a unified architecture that incorporates a temporal feature unit, a spatial feature unit, and a spatio-temporal feature unit. Leveraging a combination of Temporal Convolutional Networks (TCN), Long Short-Term Memory (LSTM), and Graph Convolutional Network (GCN), the model effectively processes and analyzes the data. Furthermore, the utilization of negative binomial regression with uncertainty has allowed for the analysis of the most influential factors affecting car usage in parking stations.

The highlights of our work include the following:

- -

- Proposed USTIN, a unified neural architecture for car-sharing demand prediction, integrating temporal, spatial, and spatio-temporal features across multiple units.

- -

- Achieved state-of-the-art prediction accuracy by effectively capturing complex spatial, temporal, and spatio-temporal influences on car demand.

- -

- Identified the most influential demand factors through negative binomial regression with uncertainty to further enhance predictions.

The rest of the paper is organized as follows: Section 2 provides a literature review of the current studies on serial prediction models. In Section 3, we introduce an overview of the methods used. Section 4 details the experimental framework employed to evaluate our approach’s performance. Section 5 analyzes our prediction results. Finally, Section 6 concludes the paper and outlines potential directions for future research.

2. Literature Review

The objective of the traffic prediction problem is to predict future traffic flow using historical data. The key work in this area includes the DMVST-NET proposed by [], employing local CNN and LSTM to model spatial and temporal relationships in flow. Additionally, graph deep learning techniques have gained prominence for relationship modeling within traffic networks. The authors of ref. [] proposed a multi-graph convolutional network and an Attention-based Spatial-Temporal Graph Neural Network (ASTGNN) to model the relationships within flow networks. Similarly, the authors of ref. [] developed a Hybrid Spatio-Temporal Graph Convolutional Network (H-STGCN) to deduce future travel time from upcoming traffic volume.

Furthermore, the challenge of predicting traffic flow is closely related to the growing need for accurate car-sharing system demand prediction []. Car-sharing services have exploded in popularity in recent years as an alternative mode of urban transportation. However, effectively managing these systems requires the reliable prediction of where and when vehicles will be needed. As such, many studies have begun exploring predictive models of car-sharing demand, and investigating different influencing factors. The authors of ref. [] looked into the effects of time horizons, environmental conditions, and learning algorithm types on the prediction of vehicle availability in car-share systems. The authors of ref. [] estimated the distance to the closest available vehicle in a fleet, whereas other researchers examined multidimensional optimization problems like station-based vehicle relocation [,].

Recent studies have introduced innovative models to enhance efficiency from multiple perspectives. The authors of ref. [] compared spatially implicit Random Forest models with spatially aware methods for the spatially aware analysis of car-sharing demand. The authors of ref. [] evaluated the use of Long Short-Term Memory (LSTM) and Prophet techniques for predicting the demand for car-sharing services. Furthermore, the authors of ref. [] proposed a maximum entropy approach for modeling car-sharing parking dynamics.

Advancements in deep learning have shown promise in extracting spatial and temporal features for demand prediction []. However, effectively modeling spatial factors remains a challenge. Several studies have considered the influence of Points of Interest (POIs) near parking stations [,]. Notably, spatial imbalances between vehicle supply and demand have been addressed with relocation strategies []. Nevertheless, these models often exhibit limitations in capturing detailed spatial factors.

Despite recent strides, prior works lack multi-time scale designs to capture periodical seasonalities. Addressing this gap, the authors of refs. [,] introduced different timeframe durations, yet model performance diminishes in regions with varying demand densities.

This study bridges existing gaps by integrating POIs and meteorological features, taking into consideration varied time scales and addressing travel demand density. The proposed model aims to enhance the accuracy and generalization capacity, offering a holistic approach to travel demand prediction.

3. Methodology

3.1. Unified Spatio-Temporal Inference Prediction Network

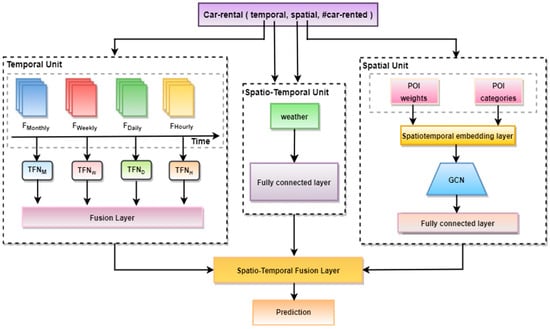

The overall architecture of the proposed Unified Spatio-Temporal Inference Prediction Network (USTIN) model is described in Figure 1. The model predicts the number of vehicles that are going to be used at a given prediction horizon.

Figure 1.

Structure of Unified Spatio-Temporal Inference Prediction Network (USTIN).

Our approach incorporates three distinct units: a temporal feature unit, a spatial feature unit, and a spatio-temporal feature unit []. The different units extract key frames, enabling an accurate prediction of travel demand. The temporal unit is designed to capture temporal dependencies and comprises four layers, each corresponding to a different time scale. The spatial unit focuses on capturing spatial dependencies using Points of Interest (POIs), while the spatio-temporal unit integrates weather data to effectively capture spatio-temporal correlations. Finally, the outputs obtained from each unit are combined in the feature module fusion and training unit to generate accurate predictions of passenger demand.

3.1.1. The Temporal Feature Unit

The temporal feature module contains four time scale-related layers, namely

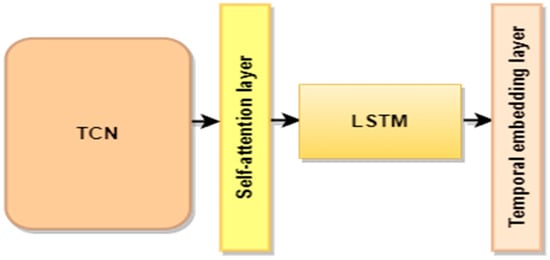

The demand data for each layer are defined as a tensor G. Each layer corresponds to a Temporal Fusion Network (TFN) structure that effectively captures the temporal correlation, as shown in Figure 2.

Figure 2.

Structure of the Temporal Fusion Network (TFN).

- Temporal convolutional network layer (TCN)

The tensor G is fed into a TCN layer to capture the temporal dependencies in the input data. The output is then denoted as follows:

: the weight matrix of the convolutional filter.

: the bias term.

: the convolution operation.

- 2.

- Self-attention mechanism layer

A self-attention mechanism is used to learn the attention weights that determine the importance of the features:

: weight matrices for the query and key projections.

: dimension of the key vectors.

- 3.

- Long short-term memory layer (LSTM)

The output of the self-attention mechanism enhances the LSTM’s capacity to capture temporal dependencies. This process is represented as follows:

where

: input, forget, output, and candidate cell state vectors, respectively.

: weight matrices for input gate, forget gate, output gate, and candidate cell state, respectively.

weight matrices for input gate, forget gate, output gate, and candidate cell state, respectively, associated with the previous hidden state.

bias terms for input gate, forget gate, output gate, and candidate cell state, respectively.

: the cell state at time .

: the cell state from the previous time step.

: the hidden state at time .

: sigmoid activation function.

element-wise multiplication.

- 4.

- Temporal embedding layer

The temporal embedding layer is used to embed the input into a lower-dimensional space that captures the temporal relationships:

: weight matrix.

: bias.

The four time scale-related layers are then fused. ⊗ denotes the Hadamard product, , , are the weight matrices of the time scale-related layers, and is the bias. The output of the temporal feature module is defined according to Equation (10).

3.1.2. The Spatial Feature Unit

To effectively process Point of Interest features (POIs), we have designed a model architecture that comprises the following:

- Spatial density calculation

POI density represents the concentration of various points of interest around every parking station. We comprehensively consider the number of POIs and the spatial distance within a Radius R.

: the distance between station and ;

r: the radius of the Earth;

: the difference in latitude between station and ;

: the difference in longitude between station and ;

: latitude of station ;

: latitude of station .

The density of each POI () is determined as follows:

- 2.

- Regression model

The car-sharing variance is significantly greater than its average, showing an over-dispersion phenomenon []. Therefore, we use the negative binomial distribution to estimate the parameters. The regression model is given by the following:

The model includes the order quantity for each car-sharing station , the density of the POI category (, …, ), an intercept (), coefficients (, …, ) for corresponding variables, and an error term ().

The coefficient (, …, ) values are estimated using Maximum Likelihood Estimation (MLE), with a 5% significance level.

- 3.

- Spatiotemporal embedding layer

The tensor and the vector , containing weights corresponding to the coefficients in Equation (13), are input into a spatiotemporal embedding layer:

- 4.

- Graph convolutional network layer (GCN)

The output of the spatiotemporal embedding layer is fed into a GCN layer. This layer employs the mean aggregation function to capture spatial relationships among POIs:

- 5.

- Fully connected layer

We used a neural network architecture with fully connected layers for feature extraction.

: weight of the fully connected layer.

: bias of the fully connected layer.

3.1.3. The Spatio-Temporal Feature Unit

We used a neural network architecture with fully connected layers for the meteorological features.

: meteorological feature sensor.

: weight of the fully connected layer.

: bias of the fully connected layer.

3.1.4. Feature Module Fusion and Training

The model integrates the obtained outputs via a weighted summation (Equation (18)).

The prediction result of passenger demand is obtained using Equation (19).

We adopt back-propagation with the Adam optimiser to improve the training efficiency [].

3.2. Influential Factors Analysis

To establish the correlation between car-sharing demand and influencing factors [], we use uncertainty estimation with coefficients (, …, ) from the regression model (Equation (13)).

3.2.1. Standard Error

The significance of the estimated values is assessed using the standard error.

: standard error.

: the coefficient of the corresponding variable.

3.2.2. Standard Errors of Marginal Effects

To provide a measure of uncertainty, standard errors of marginal effects are associated with the marginal effects of the predictors on the response variable.

: Partial derivatives of the predicted values ().

The significance of the factors’ impact is defined as follows:

3.2.3. p-Values for Marginal Effects

p-values for marginal effects provide insights into the significance of the factors.

: standard normal random variable.

: z-score of the i-th marginal effect.

4. Experiment

Section 4.1 provides an illustration of the dataset’s details, while Section 4.2 describes the experimental setting. Section 4.3 goes over the baseline models against which our model was evaluated. We describe the model configurations and the evaluation metrics in Section 4.4 and Section 4.5, respectively [].

4.1. Data Description

In our study, we used the Chongqing car-sharing company’s dataset for predicting car-sharing demand, along with weather data that were acquired via web crawling []. Furthermore, we obtained the point-of-interest dataset via web crawling to enhance the comprehensiveness of the features used in our predictive model.

4.1.1. Car-Sharing Dataset

The experiments were conducted using the pre-processed car-sharing operator dataset. The dataset contained more than 1 million records over 860 parking lots, from 1 January 2017, 00:00:00 to 31 March 2019, 23:00:00 [].

4.1.2. Weather Condition Dataset

In our work, we considered that meteorology data affected car-sharing demand []. Meteorology data, such as weather conditions and temperature, were collected using a Python-based Selenium web crawler to scrape the Chongqing weather condition from 1 January 2017, 00:00:00 to 31 March 2019, 23:00:00.

4.1.3. Points of Interest Dataset

The car-sharing dataset was augmented with Points of Interest (POIs) data using the Baidu API for web crawling. This process involved obtaining and integrating supplementary location-based information such as restaurants, cafes, museums, cultural landmarks, and so on. The data crawling aimed to enhance the quality and diversity of the original dataset.

Table 1 presents the influencing indicator system used to determine the potential demand for car-sharing.

Table 1.

Influencing indicator system of the potential demand for car-sharing.

4.2. Data Pre-Processing

Raw data may contain noise, outliers, missing values, or irrelevant features, which can negatively affect the performance of machine learning models []. Before analysis, we applied pre-processing methods as follows:

- (1)

- Imputation: Due to the numerical meaning of the missing values [], we replaced them using K-nearest neighbours’ imputation.

- (2)

- Normalization: The dataset was normalized using min-max scaling, involving scaling the numerical features to a range between 0 and 1.

- (3)

- Clustering: The parking stations were organized into four distinct classes using frequency-based clustering []:

- Class A: daily rented cars.

- Class B: frequently used cars.

- Class C: sometimes used cars.

- Class D: unlike other parking stations, cars of this class are rarely used.

Classes A, B, C, and D have different parking stations IDs, such as 16, 104, 6, and 25. - (4)

- Splitting the Dataset: We split the data between training and test sets. The training set starts from 1 January 2017 to 31 December 2018, and the test set from 1 January 2019 to 31 January 2019.

4.3. Experimental Setting

For the purpose of this study, we installed TensorFlow 1.14.0, Keras 2.2.4-tf, Pandas 0.23.4, Sklearn 0.21.1, Numpy 1.18.1, Matplotlib 3.1.0, and Statsmodels 0.10.1 [].

The models were implemented using a PC with an i7 Intel (R) Core™i7-7500U CPU running at 3.00 GHz and 8 GB RAM with the Windows 10 operating system under the Python 3.7 development environment [].

4.4. Baseline Methods

The following section outlines the baseline models against which we compared the proposed model:

- (1)

- Multiple layer perceptron (MLP)

MLP is a feedforward neural network []. The network learns to map input data to the target output using backpropagation, adjusting the weights to minimize the difference between the predicted and actual outputs.

- (2)

- K-Nearest Neighbours (KNN)

KNN works by finding the k closest neighbours; it makes predictions based on the outcome of the k neighbours closest to that point [].

- (3)

- Random Forest (RF)

A random forest is a collection of tree predictors [], where each tree is generated using a random vector sampled independently from the input vector [].

Table 2 represents the hyperparameter tuning results of the baseline models. We used the k-fold cross-validation method with k = 5 and grid search to avoid overfitting by finding the optimal hyperparameters.

Table 2.

Hyperparameters for baseline models.

- (4)

- eXtreme Gradient Boosting (XGBoost)

XGBoost is an efficient and scalable implementation of a gradient boosting framework by (Friedman, 2001) (Friedman et al., 2000). The package includes an efficient linear model solver and tree learning algorithm []. XGBoost fits the new model to new residuals of the previous prediction and then minimizes the loss while adding the latest prediction [].

- (5)

- CNN-LSTM model

CNN-LSTM is a hybrid model built by combining CNN with LSTM for improving the accuracy of forecasting []. The model comprises two main components: the first component consists of convolutional and pooling layers, in which complicated mathematical operations are performed to filter the input data and extract the useful information. The second component exploits the generated features by LSTM, which possess the ability to learn long-term and short-term dependencies through the utilization of feedback connections and dense layers [].

4.5. Model Configurations

We employed k-fold cross-validation with k = 5 and grid search for the hyperparameter tuning of the LSTM, TCN, and GCN models to avoid overfitting and find the optimal hyperparameters [].

Table 3 represents the optimized hyperparameter for our proposed USTIN model.

Table 3.

Hyperparameters for USTIN.

4.6. Evaluation Metrics

The evaluation metrics show how well the prediction fits the past data. They help in comparing prediction techniques using the same set of data [].

4.6.1. Mean Absolute Error (MAE)

The MAE is the mean of the absolute predicted error values.

4.6.2. Mean Square Error (MSE)

The MSE is calculated as the average of the squared predicted error values. It is well-known for putting more weight on large error values.

4.6.3. Root Square Mean Error (RMSE)

RMSE penalizes large prediction errors more compared to Mean Absolute Error (MAE):

4.6.4. Mean Absolute Percentage Error (MAPE)

The Mean Absolute Percentage Error (MAPE) is one of the most widely used measures of forecast accuracy. It can be defined by the following formula:

where is the actual value, is the forecast value, and denotes the number of fitted points.

5. Discussion

We compared our USTIN model against several baseline models, including KNN, LSTM, RF, and MLP. Metrics such as MAE, MSE, RMSE, and MAPE are used in respective order to evaluate the results and make comparisons between our model and other state-of-the-art models.

Table 4.

Evaluation results—full data.

Table 5.

Evaluation results—clustered data.

5.1. Car Usage Prediction

The main objective of this study was to build a predictive model for vehicle usage in parking lots. By predicting car usage, parking facility managers can optimize resource allocation, improve traffic flow, and enhance customer satisfaction.

5.1.1. Full Data Experiment

Table 4 illustrates a performance comparison between the proposed method and baseline methods for predicting car usage in every parking station. The results show that USTIN achieves the lowest MAE (0.0308), MSE (0.1541), RMSE (0.3925), and MAPE (0.1077) among all the methods. Notably, KNN and MLP perform poorly (i.e., KNN and MLP have a MAPE of 0.5709 and 0.8874, respectively). The poor performance of the baseline models can be attributed to their failure to model the different dependencies, unlike our proposed model, which leverages temporal, spatial, and spatio-temporal information to make predictions.

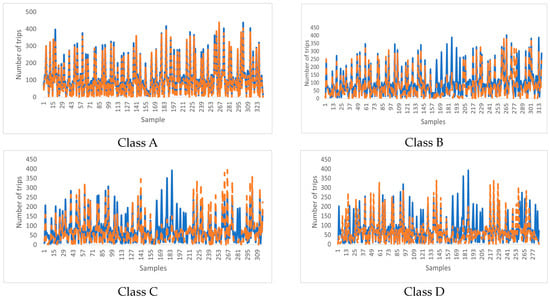

5.1.2. Clustered Data Experiment

We applied our model to the entire dataset, demonstrating its robust performance in predicting car-sharing demand. To further analyze the model’s performance, we also implemented our analysis in four distinct classes. For the sake of organization and not being redundant in our explanations, we only discuss the result analysis of class “A”, as other classes exhibit the same behavior and lead to the same conclusion [].

As can be seen from Table 5—Class A, USTIN yielded the best results and had smaller evaluation errors compared to all other models. Specifically, our model achieves significant error reductions, namely (42.14%, 63.55%, 39.63%, and 52.97%) against LSTM; (44.60%, 69.52%, 44.79%, and 37.57%) against KNN; (66.18%, 69.33%, 44.62%, and 54.99%) against MLP; (43.08%, 64.15%, 40.13%, and 55.16%) against XGBOOST; (66.18%, 69.33%, 44.62%, and 54.99%) against RF; (39.45%, 60.26%, 9.85%, and 31.47%) against TCN; (50.73%, 70.60%, 16.90%, and 49.46%) against GCN; and (66.54%, 69.10%, 40.91%, and 48.27%) against CNN-LSTM.

5.2. Most Influential Points of Interest

The negative binomial regression with uncertainty was used to determine the key factors that impact car usage.

5.2.1. Full Data Experiment

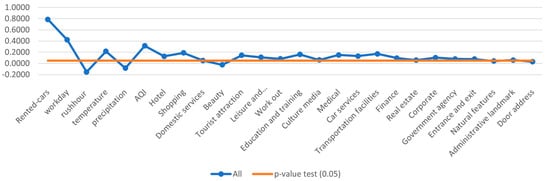

As can be seen in Table 6, numerous factors, such as the number of rented cars ( = 0.7865, p-value = 0.002), workday (= 0.4223, p-value = 0.005), and temperature ( = 0.2156, p-value = 0.034), positively influence car-sharing demand. Conversely, rush hour ( = −0.1523, p-value = 0.023) and precipitation (= −0.0842, p-value = 0.046) have negative coefficients, indicating a drop in car-sharing demand during these periods. Analyzing the influence of POIs on car-sharing demand reveals that factors like domestic services (p-value = 0.214), beauty (p-value = 0.467), culture media (p-value = 0.165), real estate (p-value = 0.134), government agency (p-value = 0.456), entrance and exit (p-value = 0.145), natural features (p-value = 0.214), administrative landmarks (p-value = 0.145), and door address (p-value = 0.130) have p-values higher than 0.05, indicating their insignificant influence on car-sharing demand.

Table 6.

Evaluation of negative binomial regression model—full data.

5.2.2. Clustered Data Experiment

- (1)

- Class A analysis.

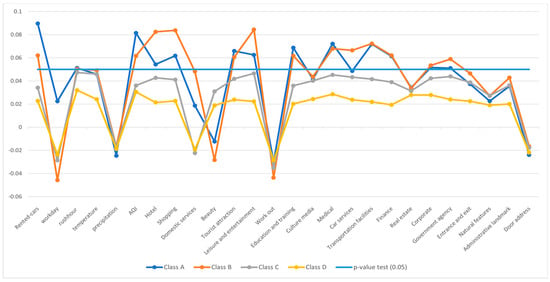

Several important POIs play a crucial role in influencing the strong car-sharing demand in Table 7—Class A. Tourist attractions ( = 0.0659, p-value = 0.002), education and training centres ( = 0.0687, p-value = 0.007), medical facilities ( = 0.0721, p-value = 0.001), finance hubs ( = 0.0614, p-value = 0.001), and government agencies ( = 0.0510, p-value = 0.032) exhibit a positive impact on car-sharing usage within this class. This suggests that areas with these amenities typically have greater rates of car-sharing use.

Table 7.

Evaluation of negative binomial regression model—clustered data.

- (2)

- Class B analysis.

Hotel and shopping amenities significantly impact demand prediction in Table 7—Class B. Their respective positive coefficients () (0.825, 0.0837) and respective significant p-values (0.011, 0.019) suggest that these main factors play a key role in car-sharing demand. In contrast, domestic services ( = 0.0482, p-value = 0.315), beauty centres ( = −0.284, p-value = 0.244), and tourist attractions ( = 0.0605, p-value = 0.076) show no impact on the car usage rate.

- (3)

- Class C analysis.

Hotels ( = 0.0427, p-value = 0.026) and shopping centres ( = 0.0412, p-value = 0.044) remain important factors, reinforcing their role as key factors in car-sharing demand in Table 7—Class C. Leisure and entertainment ( = 0.0465, p-value = 0.021) also play an important role in increasing car sharing.

- (4)

- Class D analysis.

The influential POIs in Table 7—Class D are different from those in other classes. Medical facilities ( = 0.0285, p-value = 0.045) are an important factor in driving car-sharing demand, while the other factors show less impact.

5.3. Results Analysis

5.3.1. Prediction Results

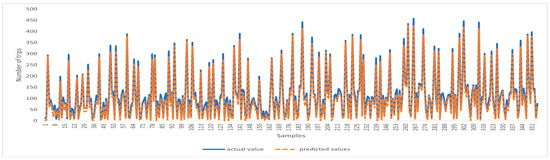

Figure 3 and Figure 4 present a comparison between the predicted values and the actual values obtained using the USTIN model. The results show the efficacy of the proposed neural network architecture. The integration of temporal features, spatial features, and spatio-temporal features has significantly enhanced the model’s predictive accuracy. Introducing spatial features allows the model to consider factors that are not inherently present in the spatial-temporal data but have a substantial influence on it. Furthermore, the spatio-temporal unit captures the influence of meteorological conditions across locations and time.

Figure 3.

Comparison of the predicted value and the real value using USTIN—full data.

Figure 4.

Comparison of the predicted value and the real value using USTIN—clustered data.

Overall, this study highlights the effectiveness of the proposed architecture in enhancing car-sharing demand prediction in urban environments.

5.3.2. The Contribution of Influencing Factors in Car-Sharing

Figure 5 and Figure 6 show the results of the negative binomial regression and provide insightful information on factors influencing car-sharing demand, including the number of rented cars, workday, temperature, and air quality. These factors play an important role in determining car-sharing usage. Furthermore, the evaluation results highlight the most influential Points of Interest alongside those with relatively minor impacts on car-sharing demand. Notably, tourist attractions, educational institutions, medical facilities, hotels, and shopping centers emerge as the most influential, while beauty centers, cultural landmarks, and government agencies exhibit less influence.

Figure 5.

Influence of indicators on the car-sharing demand—full data.

Figure 6.

Influence of indicators on the car-sharing demand—clustered data.

6. Conclusions

This research study has introduced the Unified Spatio-Temporal Inference Prediction Network (USTIN), an advanced architecture for predicting car usage across different parking lots. The proposed model integrates temporal, spatial, and spatio-temporal units and has demonstrated strong predictive effectiveness, outperforming other state-of-the-art models on real-world data. Notably, the temporal module adeptly captured both short- and long-term temporal demands, while the spatial module incorporates points-of-interest, enriching the contextual understanding of car usage. Additionally, the spatio-temporal module integrates meteorological data to effectively capture their influence across locations and time. Beyond car demand prediction, we used negative binomial regression with uncertainty to identify the key factors influencing car usage. The obtained results identified key drivers such as tourist destinations, hotels, and shopping centers.

While our approach offers promising results not only for online car-sharing demand prediction but also for other domains where temporal, spatial, and spatio-temporal features play a crucial role in prediction, it may exhibit some limitations in areas with very low car usage. In such regions, the model may lead to less accurate predictions. Our future research will focus on developing more advanced models that can capture the real-world complexity of spatio-temporal data. This would further enhance the efficiency of urban transportation systems and the field of spatio-temporal data analysis.

Author Contributions

Conceptualization: N.B. and S.D.A.Z.; methodology: N.B. and H.Z.; software: N.B.; validation: N.B.; formal analysis: H.Z. and N.B.; investigation: N.B.; resources: N.B. and H.Z.; data curation: N.B. and S.D.A.Z.; writing—original draft preparation: N.B.; writing—review and editing: N.B., H.Z. and L.D.; visualization: N.B.; supervision: H.Z.; project administration: H.Z.; funding acquisition: H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

The research work was funded by the Beijing Municipal Natural Science Foundation (Grant No. 4212026) and the Foundation Enhancement Program (Grant No. 2021-JCJQ-JJ-0059).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

The authors would like to express their sincere gratitude to the Beijing Municipal Natural Science Foundation and the Foundation Enhancement Program for their generous financial support. The authors are deeply appreciative of the support and resources provided by these organizations.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

- Yao, H.; Wu, F.; Ke, J.; Tang, X.; Jia, Y.; Lu, S.; Gong, P.; Ye, J.; Li, Z. Deep Multi-View Spatial-Temporal Network for Taxi Demand Prediction. Available online: www.aaai.org (accessed on 21 December 2021).

- Guo, S.; Lin, Y.; Wan, H.; Li, X.; Cong, G. Learning Dynamics and Heterogeneity of Spatial-Temporal Graph Data for Traffic Forecasting. IEEE Trans. Knowl. Data Eng. 2022, 34, 5415–5428. [Google Scholar] [CrossRef]

- Dai, R.; Xu, S.; Gu, Q.; Ji, C.; Liu, K. Hybrid Spatio-Temporal Graph Convolutional Network: Improving Traffic Prediction with Navigation Data. In Proceedings of the 26th ACM Sigkdd International Conference on Knowledge Discovery & Data Mining, Virtual, 23–27 August 2020; pp. 3074–3082. [Google Scholar] [CrossRef]

- Zhu, H.; Luo, Y.; Liu, Q.; Fan, H.; Song, T.; Yu, C.W.; Du, B. Multistep Flow Prediction on Car-Sharing Systems: A Multi-Graph Convolutional Neural Network with Attention Mechanism. Int. J. Softw. Eng. Knowl. Eng. 2019, 29, 1727–1740. [Google Scholar] [CrossRef]

- Ma, X.; Tao, Z.; Wang, Y.; Yu, H.; Wang, Y. Long short-term memory neural network for traffic speed prediction using remote microwave sensor data. Transp. Res. Part C Emerg. Technol. 2015, 54, 187–197. [Google Scholar] [CrossRef]

- Formentin, S.; Bianchessi, A.G.; Savaresi, S.M. On the prediction of future vehicle locations in free-floating car sharing systems. In IEEE Intelligent Vehicles Symposium, Proceedings; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2015; pp. 1006–1011. [Google Scholar] [CrossRef]

- Herbawi, W.; Knoll, M.; Kaiser, M.; Gruel, W. An evolutionary algorithm for the vehicle relocation problem in free floating carsharing. IEEE Congr. Evol. Comput. 2016, 2016, 2873–2879. [Google Scholar] [CrossRef]

- Folkestad, C.A.; Hansen, N.; Fagerholt, K.; Andersson, H.; Pantuso, G. Optimal charging and repositioning of electric vehicles in a free-floating carsharing system. Comput. Oper. Res. 2020, 113, 104771. [Google Scholar] [CrossRef]

- Mühlematter, D.J.; Wiedemann, N.; Xin, Y.; Raubal, M. Spatially-Aware Car-Sharing Demand Prediction. arXiv 2023, arXiv:2303.14421. [Google Scholar]

- Alencar, V.A.; Pessamilio, L.R.; Rooke, F.; Bernardino, H.S.; Vieira, A.B. Forecasting the carsharing service demand using uni and multivariable models. J. Internet Serv. Appl. 2021, 12, 4. [Google Scholar] [CrossRef]

- Daniotti, S.; Monechi, B.; Ubaldi, E. A maximum entropy approach for the modelling of car-sharing parking dynamics. Sci. Rep. 2023, 13, 2993. [Google Scholar] [CrossRef]

- Vijayalakshmi, B.; Ramar, K.; Jhanjhi, N.; Verma, S.; Kaliappan, M.; Vijayalakshmi, K.; Vimal, S.; Kavita; Ghosh, U. An attention-based deep learning model for traffic flow prediction using spatiotemporal features towards sustainable smart city. Int. J. Commun. Syst. 2021, 34, e4609. [Google Scholar] [CrossRef]

- Schmöller, S.; Weikl, S.; Müller, J.; Bogenberger, K. Empirical analysis of free-floating carsharing usage: The munich and berlin case. Transp. Res. Part C Emerg. Technol. 2015, 56, 34–51. [Google Scholar] [CrossRef]

- Wagner, S.; Brandt, T.; Neumann, D. In free float: Developing Business Analytics support for carsharing providers. Omega 2016, 59, 4–14. [Google Scholar] [CrossRef]

- Weikl, S.; Bogenberger, K. Relocation strategies and algorithms for free-floating car sharing systems. IEEE Intell. Transp. Syst. Mag. 2013, 5, 100–111. [Google Scholar] [CrossRef]

- Agafonov, A.A. Short-Term Traffic Data Forecasting: A Deep Learning Approach. Opt. Mem. Neural Networks (Inf. Opt.) 2021, 30, 1–10. [Google Scholar] [CrossRef]

- Song, C.; Lin, Y.; Guo, S.; Wan, H. Spatial-temporal synchronous graph convolutional networks: A new framework for spatial-temporal network data forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 914–921. [Google Scholar] [CrossRef]

- Guo, Y.; Li, W.; Xiao, L.; Allaoui, H. A prediction-based iterative Kuhn-Munkres approach for service vehicle reallocation in ride-hailing. Int. J. Prod. Res. 2023, 1–26. [Google Scholar] [CrossRef]

- Ardiles, L.G.; Tadano, Y.S.; Costa, S.; Urbina, V.; Capucim, M.N.; da Silva, I.; Braga, A.; Martins, J.A.; Martins, L.D. Negative Binomial regression model for analysis of the relationship between hospitalization and air pollution. Atmos. Pollut. Res. 2018, 9, 333–341. [Google Scholar] [CrossRef]

- Dong, X.; Wang, Y.; Li, X.; Zhong, Z.; Shen, X.; Sun, H.; Hu, B. Understanding the influencing factors of taxi ride-sharing: A case study of Chengdu, China. Transp. Res. Part A Policy Pract. 2023, 176, 103819. [Google Scholar] [CrossRef]

- Brahimi, N.; Zhang, H.; Dai, L.; Zhang, J.; Benito, R.M. Modelling on Car-Sharing Serial Prediction Based on Machine Learning and Deep Learning. Complexity 2022, 2022, 8843000. [Google Scholar] [CrossRef]

- Zamani Joharestani, M.; Cao, C.; Ni, X.; Bashir, B.; Talebiesfandarani, S. PM2.5 Prediction Based on Random Forest, XGBoost, and Deep Learning Using Multisource Remote Sensing Data. Atmosphere 2019, 10, 373. [Google Scholar] [CrossRef]

- Zhang, P.; Li, X.; Chen, J. Prediction Method for Mine Earthquake in Time Sequence Based on Clustering Analysis. Appl. Sci. 2022, 12, 11101. [Google Scholar] [CrossRef]

- Sunthornnapha, T. Utilization of MLP and Linear Regression Methods to Build a Reliable Energy Baseline for Self-benchmarking Evaluation. Energy Procedia 2017, 141, 189–193. [Google Scholar] [CrossRef]

- Hansun, S. LQ45 Stock Index Prediction using k-Nearest Neighbors Regression. Int. J. Recent Technol. Eng. 2019, 8, 2277–3878. [Google Scholar] [CrossRef]

- Segal, M.R. UCSF Recent Work Title Machine Learning Benchmarks and Random Forest Regression Publication Date Machine Learning Benchmarks and Random Forest Regression; University of California, San Francisco: San Francisco, CA, USA, 2004. [Google Scholar]

- Singh, B.; Sihag, P.; Singh, K. Modelling of impact of water quality on infiltration rate of soil by random forest regression. Model. Earth Syst. Environ. 2017, 3, 999–1004. [Google Scholar] [CrossRef]

- Chen, T.; He, T. xgboost: eXtreme Gradient Boosting. 2024. Available online: https://cran.ms.unimelb.edu.au/web/packages/xgboost/vignettes/xgboost.pdf (accessed on 5 February 2024).

- Brownlee, J. A Gentle Introduction to XGBoost for Applied Machine Learning. Available online: https://machinelearningmastery.com/gentle-introduction-xgboost-applied-machine-learning/ (accessed on 8 June 2020).

- Li, T.; Hua, M.; Wu, X. A Hybrid CNN-LSTM Model for Forecasting Particulate Matter (PM2.5). IEEE Access 2020, 8, 26933–26940. [Google Scholar] [CrossRef]

- Livieris, I.E.; Pintelas, E.; Pintelas, P. A CNN–LSTM model for gold price time-series forecasting. Neural Comput. Appl. 2020, 32, 17351–17360. [Google Scholar] [CrossRef]

- Tandon, S.; Tripathi, S.; Saraswat, P.; Dabas, C. Bitcoin Price Forecasting using LSTM and 10-Fold Cross validation. In Proceedings of the 2019 International Conference on Signal Processing and Communication (ICSC), Noida, India, 7–9 March 2019. [Google Scholar] [CrossRef]

- Chen, C.; Twycross, J.; Garibaldi, J.M. A new accuracy measure based on bounded relative error for time series forecasting. PLoS ONE 2017, 12, e0174202. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).