A Comprehensive Review of Vision-Based 3D Reconstruction Methods

Abstract

1. Introduction

1.1. Explicit Expression

1.2. Implicit Expression

2. Traditional Static 3D Reconstruction Methods

2.1. Active 3D Reconstruction Methods

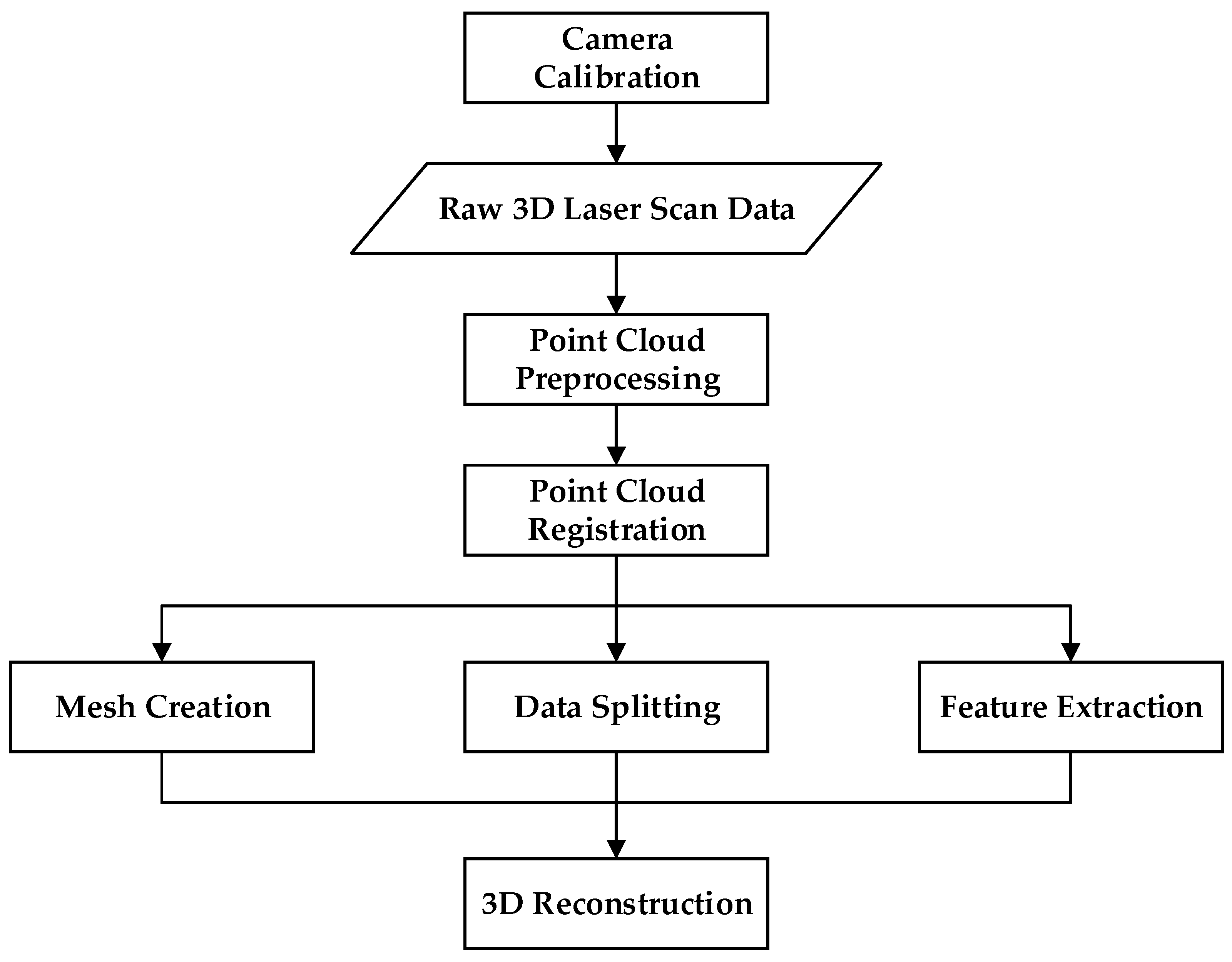

2.1.1. Laser Scanning

2.1.2. CT Scanning

2.1.3. Structured Light

2.1.4. TOF

2.1.5. Photometric Stereo

2.1.6. Multi-Sensor Fusion

2.2. Passive 3D Reconstruction Methods

2.2.1. Texture Mapping

2.2.2. Shape from Focus

2.2.3. Binocular Stereo Vision

2.2.4. Structure from Motion (SFM)

- (1) Factorization methods recover 3D structure by decomposing a matrix of image measurements [97]. Feature points are first extracted from images captured at different viewing angles and then matched. These can be corner points, edge points, or any other points with correspondences across views. The matched feature points are assembled into an observation matrix that holds the image coordinates of every feature point under each viewing angle. The observation matrix is then factorized into two factors: one containing the camera motion information, such as rotation and translation parameters, and one containing the 3D structure of the scene, that is, the spatial coordinates of each feature point. Nonlinear optimization is typically applied afterwards to refine the reconstruction and improve its accuracy. The advantage of the factorization method is that it estimates the 3D structure and the camera motion simultaneously without prior knowledge of the camera’s internal parameters. However, it is sensitive to noise and outliers, so suitable optimization methods are needed to enhance robustness. Gay et al. [98] used the assumption that points lie on object surfaces as a geometric prior for 3D point reconstruction, estimating these quadratic surfaces under affine and perspective camera models and recovering the 3D structure in closed form. Dal Cin et al. [99] estimated the fundamental matrix by performing motion segmentation on unstructured images to encode the rigid motions in the scene. Depth maps are used to resolve scale ambiguity, and a multi-body plane-sweep algorithm is integrated into the multi-view depth and camera pose estimation network to generate the depth map.

- (2) Multi-view 3D reconstruction (multi-view stereo, MVS) reconstructs the 3D structure of a scene from images or video that observe it from multiple perspectives or cameras [100], as illustrated in Figure 5. MVS places high demands on image quality and viewing-angle coverage, and it must cope with insufficient overlap between views and with shadows. The accuracy of the image matching algorithm also strongly affects the reconstruction. The primary objective of image registration is to compensate for differences in viewing angle, pose, scale, and acquisition time so that the geometric information is consistent across images, which improves the reliability and accuracy of the subsequent 3D reconstruction. The method also requires accurate camera calibration.
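The factorization step described in item (1) can be illustrated with a minimal sketch. It assumes an affine camera model, complete and noise-free tracks, and an observation matrix that stacks two rows (x and y) per view; the function name `factorize_affine` and the synthetic data are illustrative only and are not taken from [97]. The result is determined only up to an invertible 3 × 3 transform, and the metric upgrade and nonlinear refinement mentioned above are omitted.

```python
import numpy as np


def factorize_affine(W):
    """Rank-3 factorization of a (2F, P) observation matrix.

    W stacks the x and y image coordinates of P tracked points over F views.
    Returns motion M (2F, 3), structure S (3, P) and per-row translation t (2F, 1)
    such that W is approximately M @ S + t. The factors are only defined up to
    an invertible 3x3 transform (affine ambiguity).
    """
    # Subtracting each row's mean removes the per-view translation.
    t = W.mean(axis=1, keepdims=True)
    W_centered = W - t

    # Under an affine camera the centered matrix has rank at most 3,
    # so the three largest singular triplets carry motion and structure.
    U, s, Vt = np.linalg.svd(W_centered, full_matrices=False)
    root = np.sqrt(s[:3])
    M = U[:, :3] * root            # camera motion factor
    S = root[:, None] * Vt[:3]     # 3D structure factor
    return M, S, t


# Synthetic check: 20 random 3D points seen by 5 random affine cameras.
rng = np.random.default_rng(0)
X_true = rng.normal(size=(3, 20))
views = [rng.normal(size=(2, 3)) @ X_true + rng.normal(size=(2, 1)) for _ in range(5)]
W = np.vstack(views)               # shape (10, 20)

M, S, t = factorize_affine(W)
print("max reprojection error:", np.abs(M @ S + t - W).max())
```

With noisy or incomplete tracks the rank-3 truncation becomes a least-squares approximation, which is one reason the text notes the method's sensitivity to noise and outliers.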

2.3. Introduction to 3D Reconstruction Technology

2.3.1. Camera Calibration

2.3.2. Image Local Feature Point Detection

2.3.3. Image Segmentation

2.3.4. Rendering

- (1) Rasterization is a pixel-based rendering method that projects the triangles of a 3D model onto the image plane, converts them into two-dimensional pixel fragments, and then colors each pixel; scanline rendering is one example [159]. It offers good real-time performance but struggles with transparency and reflection, and it may be less accurate than other methods for complex effects.

- (2) Ray tracing simulates the propagation of light in a scene. It computes lighting and shadows by tracing light paths and modeling the interaction between light and objects, accounting for reflection, refraction, and shadowing [160]. Ray tracing produces high-quality images but is computationally expensive. Monte Carlo rendering estimates the rendering equation through random sampling [161], using Monte Carlo integration to simulate realistic lighting effects [162]. To improve efficiency, Monte Carlo rendering uses importance sampling to select the directions of light paths.

- (3) The radiosity algorithm simulates global illumination in a scene [163]. It accounts for the mutual exchange of radiation between surfaces and achieves realistic lighting by iteratively computing the radiosity of each surface.

- (4) Shadow rendering generates shadows in real time. The scene is first rendered from the viewpoint of the light source and the resulting depth values are stored in a shadow map; during regular rendering, the shadow map is used to determine whether a point is in shadow. In this way, the occlusion relationship between the light and the objects is used to produce realistic shadows [164]. Shadows are divided into hard shadows, which have sharp boundaries, and soft shadows, whose boundaries blur gradually and produce a more natural effect.

- (5) Ambient occlusion is a local lighting effect that considers the occlusion relationship between objects in the scene. It deepens the shadows in recesses on object surfaces, thereby improving the realism of the image [165].

- (6) Non-photorealistic rendering (NPR) aims to imitate painting styles and produce non-realistic images, such as cartoon styles and brush effects [166].

- (7) Volume rendering is used to visualize volume data. It represents the data as 3D textures and uses methods such as ray tracing to visualize the structure and features within the volume. The direct volume renderer [167] maps each sample value to an opacity and a color. Volume ray casting can be derived directly from the rendering equation; it is classified as an image-based volume rendering technique because the computation proceeds from the output image rather than from the input volume data, as in object-based techniques. The shear-warp method of volume rendering was developed by Cameron and Undrill and popularized by Philippe Lacroute and Marc Levoy [168]. Texture-based volume rendering utilizes texture mapping to apply images or textures to geometric objects.

- (8) Splatting spreads each point of the point cloud over its surrounding area and transfers the point's attributes (such as color, intensity, and normal vector) to that area using a specific weighting method. Where adjacent splats overlap, their colors and intensities are superimposed to obtain the final rendering result [169].

3. Dynamic 3D Reconstruction Methods

3.1. Introduction to Multi-View Dynamic 3D Reconstruction

3.2. Dynamic 3D Reconstruction Based on RGB-D Camera

3.3. 3D Gaussian Splatting (3DGS)

3.4. Simultaneous Localization and Mapping (SLAM)

4. 3D Reconstruction Methods Based on Machine Learning

4.1. Statistical Learning Methods

4.2. 3D Semantic Occupancy Prediction Methods

4.3. Deep Learning Methods

4.3.1. Depth Map

4.3.2. Point Cloud

4.3.3. Neural Radiance Field (NeRF)

5. Datasets

6. Outlook and Challenges

6.1. Outlook

6.2. Challenges

7. Summary

Author Contributions

Funding

Conflicts of Interest

References

- Scopigno, R.; Cignoni, P.; Pietroni, N.; Callieri, M.; Dellepiane, M. Digital fabrication techniques for cultural heritage: A survey. Comput. Graph. Forum 2017, 36, 6–21. [Google Scholar] [CrossRef]

- Mortara, M.; Catalano, C.E.; Bellotti, F.; Fiucci, G.; Houry-Panchetti, M.; Petridis, P. Learning cultural heritage by serious games. J. Cult. Herit. 2014, 15, 318–325. [Google Scholar] [CrossRef]

- Hosseinian, S.; Arefi, H. 3D Reconstruction from Multi-View Medical X-ray images–review and evaluation of existing methods. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 319–326. [Google Scholar] [CrossRef]

- Laporte, S.; Skalli, W.; De Guise, J.A.; Lavaste, F.; Mitton, D. A biplanar reconstruction method based on 2D and 3D contours: Application to the distal femur. Comput. Methods Biomech. Biomed. Eng. 2003, 6, 1–6. [Google Scholar] [CrossRef] [PubMed]

- Zheng, L.; Li, G.; Sha, J. The survey of medical image 3D reconstruction. In Proceedings of the SPIE 6534, Fifth International Conference on Photonics and Imaging in Biology and Medicine, Wuhan, China, 1 May 2007. [Google Scholar]

- Thrun, S. Robotic mapping: A survey. In Exploring Artificial Intelligence in the New Millennium; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2003; pp. 1–35. [Google Scholar]

- Keskin, C.; Erkan, A.; Akarun, L. Real time hand tracking and 3d gesture recognition for interactive interfaces using hmm. In Proceedings of the ICANN/ICONIPP 2003, Istanbul, Turkey, 26–29 June 2003; pp. 26–29. [Google Scholar]

- Moeslund, T.B.; Granum, E. A survey of computer vision-based human motion capture. Comput. Vis. Image Underst. 2001, 81, 231–268. [Google Scholar] [CrossRef]

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. Kinectfusion: Real-time 3d reconstruction and interaction using a moving depth camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011. [Google Scholar]

- Remondino, F.; Nocerino, E.; Toschi, I.; Menna, F. A critical review of automated photogrammetric processing of large datasets. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 591–599. [Google Scholar] [CrossRef]

- Roberts, L.G. Machine Perception of 3D Solids. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1963. [Google Scholar]

- Marr, D.; Nishihara, H.K. Representation and recognition of the spatial organization of 3D shapes. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1978, 200, 269–294. [Google Scholar]

- Grimson, W.E.L. A computer implementation of a theory of human stereo vision. Philos. Trans. R. Soc. Lond. B Biol. Sci. 1981, 292, 217–253. [Google Scholar] [PubMed]

- Zlatanova, S.; Painsil, J.; Tempfli, K. 3D object reconstruction from aerial stereo images. In Proceedings of the 6th International Conference in Central Europe on Computer Graphics and Visualization’98, Plzen, Czech Republic, 9–13 February 1998; Volume III, pp. 472–478. [Google Scholar]

- Niemeyer, M.; Mescheder, L.; Oechsle, M.; Geiger, A. A Differentiable volumetric rendering: Learning implicit 3d representations without 3d supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 3504–3515. [Google Scholar]

- Varady, T.; Martin, R.R.; Cox, J. Reverse engineering of geometric models—An introduction. Comput.-Aided Des. 1997, 29, 255–268. [Google Scholar] [CrossRef]

- Williams, C.G.; Edwards, M.A.; Colley, A.L.; Macpherson, J.V.; Unwin, P.R. Scanning micropipet contact method for high-resolution imaging of electrode surface redox activity. Anal. Chem. 2009, 81, 2486–2495. [Google Scholar] [CrossRef] [PubMed]

- Zheng, T.X.; Huang, S.; Li, Y.F.; Feng, M.C. Key techniques for vision based 3D reconstruction: A review. Acta Autom. Sin. 2020, 46, 631–652. [Google Scholar]

- Isgro, F.; Odone, F.; Verri, A. An open system for 3D data acquisition from multiple sensors. In Proceedings of the Seventh International Workshop on Computer Architecture for Machine Perception (CAMP’05), Palermo, Italy, 4–6 July 2005. [Google Scholar]

- Kraus, K.; Pfeifer, N. Determination of terrain models in wooded areas with airborne laser scanner data. ISPRS J. Photogramm. Remote Sens. 1998, 53, 193–203. [Google Scholar] [CrossRef]

- Göbel, W.; Kampa, B.M.; Helmchen, F. Imaging cellular network dynamics in three dimensions using fast 3D laser scanning. Nat. Methods 2007, 4, 73–79. [Google Scholar] [CrossRef] [PubMed]

- Flisch, A.; Wirth, J.; Zanini, R.; Breitenstein, M.; Rudin, A.; Wendt, F.; Mnich, F.; Golz, R. Industrial computed tomography in reverse engineering applications. DGZfP-Proc. BB 1999, 4, 45–53. [Google Scholar]

- Rocchini, C.M.P.P.C.; Cignoni, P.; Montani, C.; Pingi, P.; Scopigno, R. A low cost 3D scanner based on structured light. In Computer Graphics Forum; Blackwell Publishers Ltd.: Oxford, UK; Boston, MA, USA, 2001; Volume 20. [Google Scholar]

- Park, J.; Kim, H.; Tai, Y.W.; Brown, M.S.; Kweon, I. High quality depth map upsampling for 3D-TOF cameras. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011. [Google Scholar]

- Al-Najdawi, N.; Bez, H.E.; Singhai, J.; Edirisinghe, E.A. A survey of cast shadow detection algorithms. Pattern Recognit. Lett. 2012, 33, 752–764. [Google Scholar] [CrossRef]

- Schwarz, B. Mapping the world in 3D. Nat. Photonics 2010, 4, 429–430. [Google Scholar] [CrossRef]

- Arayici, Y. An approach for real world data modeling with the 3D terrestrial laser scanner for built environment. Autom. Constr. 2007, 16, 816–829. [Google Scholar] [CrossRef]

- Dassot, M.; Constant, T.; Fournier, M. The use of terrestrial LiDAR technology in forest science: Application fields, benefits and challenges. Ann. For. Sci. 2011, 68, 959–974. [Google Scholar] [CrossRef]

- Yang, Y.; Shi, R.; Yu, X. Laser scanning triangulation for large profile measurement. J.-Xian Jiaotong Univ. 1999, 33, 15–18. [Google Scholar]

- França, J.G.D.; Gazziro, M.A.; Ide, A.N.; Saito, J.H. A 3D scanning system based on laser triangulation and variable field of view. In Proceedings of the IEEE International Conference on Image Processing 2005, Genova, Italy, 11–14 September 2005; Volume 1. [Google Scholar]

- Boehler, W.; Vicent, M.B.; Marbs, A. Investigating laser scanner accuracy. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2003, 34 Pt 5, 696–701. [Google Scholar]

- Voisin, S.; Foufou, S.; Truchetet, F.; Page, D.; Abidi, M. Study of ambient light influence for 3D scanners based on structured light. Opt. Eng. 2007, 46, 030502. [Google Scholar] [CrossRef]

- Tachella, J.; Altmann, Y.; Mellado, N.; McCarthy, A.; Tobin, R.; Buller, G.S.; Tourneret, J.Y.; McLaughlin, S. Real-time 3D reconstruction from single-photon lidar data using plug-and-play point cloud denoisers. Nat. Commun. 2019, 10, 4984. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Sui, C.; Huang, T.; Zhang, Y.; Zhou, W.; Chen, X.; Liu, Y.H. 3D surface reconstruction of transparent objects using laser scanning with a four-layers refinement process. Opt. Express 2022, 30, 8571–8591. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Xu, D.; Hyyppä, J.; Liang, Y. A survey of applications with combined BIM and 3D laser scanning in the life cycle of buildings. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5627–5637. [Google Scholar] [CrossRef]

- Dong, J.; Li, Z.; Liu, X.; Zhong, W.; Wang, G.; Liu, Q.; Song, X. High-speed real 3D scene acquisition and 3D holographic reconstruction system based on ultrafast optical axial scanning. Opt. Express 2023, 31, 21721–21730. [Google Scholar] [CrossRef] [PubMed]

- Mitton, D.; Zhao, K.; Bertrand, S.; Zhao, C.; Laporte, S.; Yang, C.; An, K.N.; Skalli, W. 3D reconstruction of the ribs from lateral and frontal X-rays in comparison to 3D CT-scan reconstruction. J. Biomech. 2008, 41, 706–710. [Google Scholar] [CrossRef] [PubMed]

- Reyneke, C.J.F.; Lüthi, M.; Burdin, V.; Douglas, T.S.; Vetter, T.; Mutsvangwa, T.E. Review of 2-D/3-D reconstruction using statistical shape and intensity models and X-ray image synthesis: Toward a unified framework. IEEE Rev. Biomed. Eng. 2018, 12, 269–286. [Google Scholar] [CrossRef] [PubMed]

- Wang, J.; Ye, M.; Liu, Z.; Wang, C. Precision of cortical bone reconstruction based on 3D CT scans. Comput. Med. Imaging Graph. 2009, 33, 235–241. [Google Scholar] [CrossRef] [PubMed]

- Yu, B.; Fan, W.; Fan, J.H.; Dijkstra, T.A.; Wei, Y.N.; Wei, T.T. X-ray micro-computed tomography (μ-CT) for 3D characterization of particle kinematics representing water-induced loess micro-fabric collapse. Eng. Geol. 2020, 279, 105895. [Google Scholar] [CrossRef]

- Lorensen, W.E.; Cline, H.E. Marching cubes: A high resolution 3D surface construction algorithm. Semin. Graph. Pioneer. Efforts That Shaped Field 1998, 1, 347–353. [Google Scholar]

- Evans, L.M.; Margetts, L.; Casalegno, V.; Lever, L.M.; Bushell, J.; Lowe, T.; Wallwork, A.; Young, P.; Lindemann, A.; Schmidt, M.; et al. Transient thermal finite element analysis of CFC–Cu ITER monoblock using X-ray tomography data. Fusion Eng. Des. 2015, 100, 100–111. [Google Scholar] [CrossRef]

- Uhm, K.H.; Shin, H.K.; Cho, H.J.; Jung, S.W.; Ko, S.J. 3D Reconstruction Based on Multi-Phase CT for Kidney Cancer Surgery. In Proceedings of the 2023 International Technical Conference on Circuits/Systems, Computers, and Communications (ITC-CSCC), Grand Hyatt Jeju, Republic of Korea, 25–28 June 2023. [Google Scholar]

- Kowarschik, R.M.; Kuehmstedt, P.; Gerber, J.; Schreiber, W.; Notni, G. Adaptive optical 3-D-measurement with structured light. Opt. Eng. 2000, 39, 150–158. [Google Scholar]

- Zhang, S.; Yau, S.-T. High dynamic range scanning technique. Opt. Eng. 2009, 48, 033604. [Google Scholar]

- Ekstrand, L.; Zhang, S. Autoexposure for 3D shape measurement using a digital-light-processing projector. Opt. Eng. 2011, 50, 123603. [Google Scholar] [CrossRef]

- Yang, Z.; Wang, P.; Li, X.; Sun, C. 3D laser scanner system using high dynamic range imaging. Opt. Lasers Eng. 2014, 54, 31–41. [Google Scholar]

- Jiang, Y.; Jiang, K.; Lin, J. Extraction method for sub-pixel center of linear structured light stripe. Laser Optoelectron. Prog. 2015, 7, 179–185. [Google Scholar]

- Santolaria, J.; Guillomía, D.; Cajal, C.; Albajez, J.A.; Aguilar, J.J. Modelling and calibration technique of laser triangulation sensors for integration in robot arms and articulated arm coordinate measuring machines. Sensors 2009, 9, 7374–7396. [Google Scholar] [CrossRef] [PubMed]

- Hyun, J.-S.; Chiu, G.T.-C.; Zhang, S. High-speed and high-accuracy 3D surface measurement using a mechanical projector. Opt. Express 2018, 26, 1474–1487. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y.; Zhang, Q.; Liu, Y.; Yu, X.; Hou, Y.; Chen, W. High-speed 3D shape measurement using a rotary mechanical projector. Opt. Express 2021, 29, 7885–7903. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Luo, B.; Su, X.; Li, L.; Li, B.; Zhang, S.; Wang, Y. A convenient 3D reconstruction model based on parallel-axis structured light system. Opt. Lasers Eng. 2021, 138, 106366. [Google Scholar] [CrossRef]

- Stipes, J.A.; Cole, J.G.P.; Humphreys, J. 4D scan registration with the SR-3000 LIDAR. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008. [Google Scholar]

- Chua, S.Y.; Wang, X.; Guo, N.; Tan, C.S.; Chai, T.Y.; Seet, G.L. Improving three-dimensional (3D) range gated reconstruction through time-of-flight (TOF) imaging analysis. J. Eur. Opt. Soc.-Rapid Publ. 2016, 11, 16015. [Google Scholar] [CrossRef]

- Woodham, R.J. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980, 19, 139–144. [Google Scholar] [CrossRef]

- Horn, B.K.P. Obtaining shape from shading information. In Shape from Shading; MIT Press: Cambridge, MA, USA, 1989; pp. 123–171. [Google Scholar]

- Shi, B.; Wu, Z.; Mo, Z.; Duan, D.; Yeung, S.K.; Tan, P. A benchmark dataset and evaluation for non-lambertian and uncalibrated photometric stereo. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Wu, L.; Ganesh, A.; Shi, B.; Matsushita, Y.; Wang, Y.; Ma, Y. Robust photometric stereo via low-rank matrix completion and recovery. In Proceedings of the Computer Vision–ACCV 2010: 10th Asian Conference on Computer Vision, Queenstown, New Zealand, 8–12 November 2010; Revised Selected Papers, Part III 10. Springer: Berlin/Heidelberg, Germany, 2011. [Google Scholar]

- Ikehata, S.; Wipf, D.; Matsushita, Y.; Aizawa, K. Robust photometric stereo using sparse regression. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012. [Google Scholar]

- Alldrin, N.G.; Mallick, S.P.; Kriegman, D.J. Resolving the generalized bas-relief ambiguity by entropy minimization. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007. [Google Scholar]

- Karami, A.; Menna, F.; Remondino, F. Investigating 3D reconstruction of non-collaborative surfaces through photogrammetry and photometric stereo. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 519–526. [Google Scholar] [CrossRef]

- Ju, Y.; Jian, M.; Wang, C.; Zhang, C.; Dong, J.; Lam, K.M. Estimating high-resolution surface normals via low-resolution photometric stereo images. IEEE Trans. Circuits Syst. Video Technol. 2023. [Google Scholar] [CrossRef]

- Daum, M.; Dudek, G. On 3-D surface reconstruction using shape from shadows. In Proceedings of the 1998 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No. 98CB36231), Santa Barnara, CA, USA, 23–25 June 1998. [Google Scholar]

- McCool, M.D. Shadow volume reconstruction from depth maps. ACM Trans. Graph. (TOG) 2000, 19, 1–26. [Google Scholar] [CrossRef]

- Liu, W.C.; Wu, B. An integrated photogrammetric and photoclinometric approach for illumination-invariant pixel-resolution 3D mapping of the lunar surface. ISPRS J. Photogramm. Remote Sens. 2020, 159, 153–168. [Google Scholar] [CrossRef]

- Li, Z.; Ji, S.; Fan, D.; Yan, Z.; Wang, F.; Wang, R. Reconstruction of 3D Information of Buildings from Single-View Images Based on Shadow Information. ISPRS Int. J. Geo-Inf. 2024, 13, 62. [Google Scholar] [CrossRef]

- Wang, M.; Wei, S.; Liang, J.; Liu, S.; Shi, J.; Zhang, X. Lightweight FISTA-inspired sparse reconstruction network for mmW 3-D holography. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–20. [Google Scholar] [CrossRef]

- Schramm, S.; Osterhold, P.; Schmoll, R.; Kroll, A. Combining modern 3D reconstruction and thermal imaging: Generation of large-scale 3D thermograms in real-time. Quant. InfraRed Thermogr. J. 2022, 19, 295–311. [Google Scholar] [CrossRef]

- Geiger, A.; Ziegler, J.; Stiller, C. Stereoscan: Dense 3d reconstruction in real-time. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011. [Google Scholar]

- Costa, A.L.; Yasuda, C.L.; Appenzeller, S.; Lopes, S.L.; Cendes, F. Comparison of conventional MRI and 3D reconstruction model for evaluation of temporomandibular joint. Surg. Radiol. Anat. 2008, 30, 663–667. [Google Scholar] [CrossRef]

- Wang, Z.; Wu, Y.; Niu, Q. Multi-sensor fusion in automated driving: A survey. IEEE Access 2019, 8, 2847–2868. [Google Scholar] [CrossRef]

- Yu, H.; Oh, J. Anytime 3D object reconstruction using multi-modal variational autoencoder. IEEE Robot. Autom. Lett. 2022, 7, 2162–2169. [Google Scholar] [CrossRef]

- Buelthoff, H.H. Shape from X: Psychophysics and computation. In Computational Models of Visual Processing, Proceedings of the Sensor Fusion III: 3-D Perception and Recognition, Boston, MA, USA, 4–9 November 1990; Society of Photo-Optical Instrumentation Engineers: Bellingham, WA, USA, 1991; pp. 235–246. [Google Scholar]

- Yemez, Y.; Schmitt, F. 3D reconstruction of real objects with high resolution shape and texture. Image Vis. Comput. 2004, 22, 1137–1153. [Google Scholar] [CrossRef]

- Alexiadis, D.S.; Zarpalas, D.; Daras, P. Real-time, realistic full-body 3D reconstruction and texture mapping from multiple Kinects. In Proceedings of the IVMSP 2013, Seoul, Republic of Korea, 10–12 June 2013. [Google Scholar]

- Lee, J.H.; Ha, H.; Dong, Y.; Tong, X.; Kim, M.H. Texturefusion: High-quality texture acquisition for real-time rgb-d scanning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020. [Google Scholar]

- Xu, K.; Wang, M.; Wang, M.; Feng, L.; Zhang, T.; Liu, X. Enhancing Texture Generation with High-Fidelity Using Advanced Texture Priors. arXiv 2024, arXiv:2403.05102. [Google Scholar]

- Qin, K.; Wang, Z. 3D Reconstruction of metal parts based on depth from focus. In Proceedings of the 2011 International Conference on Electronic and Mechanical Engineering and Information Technology, Harbin, China, 12–14 August 2011; Volume 2. [Google Scholar]

- Martišek, D. Fast Shape-From-Focus method for 3D object reconstruction. Optik 2018, 169, 16–26. [Google Scholar] [CrossRef]

- Lee, I.-H.; Shim, S.-O.; Choi, T.-S. Improving focus measurement via variable window shape on surface radiance distribution for 3D shape reconstruction. Opt. Lasers Eng. 2013, 51, 520–526. [Google Scholar] [CrossRef]

- Li, M.; Mutahira, H.; Ahmad, B.; Muhammad, M.S. Analyzing image focus using deep neural network for 3d shape recovery. In Proceedings of the 2019 Second International Conference on Latest Trends in Electrical Engineering and Computing Technologies (INTELLECT), Karachi, Pakistan, 13–14 November 2019. [Google Scholar]

- Ali, U.; Mahmood, M.T. Combining depth maps through 3D weighted least squares in shape from focus. In Proceedings of the 2019 International Conference on Electronics, Information, and Communication (ICEIC), Auckland, New Zealand, 22–25 January 2019. [Google Scholar]

- Yan, T.; Hu, Z.; Qian, Y.; Qiao, Z.; Zhang, L. 3D shape reconstruction from multifocus image fusion using a multidirectional modified Laplacian operator. Pattern Recognit. 2020, 98, 107065. [Google Scholar] [CrossRef]

- Shang, M.; Kuang, T.; Zhou, H.; Yu, F. Monocular Microscopic Image 3D Reconstruction Algorithm based on Depth from Defocus with Adaptive Window Selection. In Proceedings of the 2020 12th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 22–23 August 2020; Volume 2. [Google Scholar]

- Julesz, B. Binocular depth perception of computer-generated patterns. Bell Syst. Tech. J. 1960, 39, 1125–1162. [Google Scholar] [CrossRef]

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2000. [Google Scholar]

- Whelan, T.; Kaess, M.; Fallon, M.; Johannsson, H.; Leonard, J.; McDonald, J. Kintinuous: Spatially extended kinectfusion. In Proceedings of the RSS’12 Workshop on RGB-D: Advanced Reasoning with Depth Cameras, Sydney, Australia, 9–10 July 2012. [Google Scholar]

- Whelan, T.; Leutenegger, S.; Salas-Moreno, R.F.; Glocker, B.; Davison, A.J. ElasticFusion: Dense SLAM without a pose graph. Robot. Sci. Syst. 2015, 11, 3. [Google Scholar]

- Choi, S.; Zhou, Q.-Y.; Koltun, V. Robust reconstruction of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Tian, X.; Liu, R.; Wang, Z.; Ma, J. High quality 3D reconstruction based on fusion of polarization imaging and binocular stereo vision. Inf. Fusion 2022, 77, 19–28. [Google Scholar] [CrossRef]

- Wang, D.; Sun, H.; Lu, W.; Zhao, W.; Liu, Y.; Chai, P.; Han, Y. A novel binocular vision system for accurate 3-D reconstruction in large-scale scene based on improved calibration and stereo matching methods. Multimed. Tools Appl. 2022, 81, 26265–26281. [Google Scholar] [CrossRef]

- Ullman, S. The interpretation of structure from motion. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1979, 203, 405–426. [Google Scholar]

- Wu, C. Towards linear-time incremental structure from motion. In Proceedings of the 2013 International Conference on 3D Vision-3DV 2013, Seattle, WA, USA, 29 June–1 July 2013. [Google Scholar]

- Cui, H.; Shen, S.; Gao, W.; Hu, Z. Efficient large-scale structure from motion by fusing auxiliary imaging information. IEEE Trans. Image Process. 2015, 24, 3561–3573. [Google Scholar] [PubMed]

- Cui, H.; Gao, X.; Shen, S.; Hu, Z. HSfM: Hybrid structure-from-motion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Xu, B.; Zhang, L.; Liu, Y.; Ai, H.; Wang, B.; Sun, Y.; Fan, Z. Robust hierarchical structure from motion for large-scale unstructured image sets. ISPRS J. Photogramm. Remote Sens. 2021, 181, 367–384. [Google Scholar] [CrossRef]

- Kanade, T.; Morris, D.D. Factorization methods for structure from motion. Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 1998, 356, 1153–1173. [Google Scholar] [CrossRef]

- Gay, P.; Rubino, C.; Crocco, M.; Del Bue, A. Factorization based structure from motion with object priors. Comput. Vis. Image Underst. 2018, 172, 124–137. [Google Scholar] [CrossRef]

- Cin, A.P.D.; Boracchi, G.; Magri, L. Multi-body Depth and Camera Pose Estimation from Multiple Views. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023. [Google Scholar]

- Seitz, S.M.; Curless, B.; Diebel, J.; Scharstein, D.; Szeliski, R. A comparison and evaluation of multi-view stereo reconstruction algorithms. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 1. [Google Scholar]

- Moulon, P.; Monasse, P.; Marlet, R. Global fusion of relative motions for robust, accurate and scalable structure from motion. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013. [Google Scholar]

- Hepp, B.; Nießner, M.; Hilliges, O. Plan3d: Viewpoint and trajectory optimization for aerial multi-view stereo reconstruction. ACM Trans. Graph. TOG 2018, 38, 1–17. [Google Scholar] [CrossRef]

- Zhu, Q.; Wang, Z.; Hu, H.; Xie, L.; Ge, X.; Zhang, Y. Leveraging photogrammetric mesh models for aerial-ground feature point matching toward integrated 3D reconstruction. ISPRS J. Photogramm. Remote Sens. 2020, 166, 26–40. [Google Scholar] [CrossRef]

- Shen, S. Accurate multiple view 3d reconstruction using patch-based stereo for large-scale scenes. IEEE Trans. Image Process. 2013, 22, 1901–1914. [Google Scholar] [CrossRef] [PubMed]

- Stereopsis, R.M. Accurate, dense, and robust multiview stereopsis. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1362–1376. [Google Scholar]

- Vu, H.H.; Labatut, P.; Pons, J.P.; Keriven, R. High accuracy and visibility-consistent dense multiview stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 889–901. [Google Scholar] [CrossRef] [PubMed]

- Liu, B.; Yang, F.; Huang, Y.; Zhang, Y.; Wu, G. Single-shot 3D reconstruction using grid pattern-based structured-light vision method. Appl. Sci. 2022, 12, 10602. [Google Scholar] [CrossRef]

- Ye, Z.; Bao, C.; Zhou, X.; Liu, H.; Bao, H.; Zhang, G. Ec-sfm: Efficient covisibility -based structure-from-motion for both sequential and unordered images. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 110–123. [Google Scholar] [CrossRef]

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334. [Google Scholar] [CrossRef]

- Tsai, R. A versatile camera calibration technique for high-accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE J. Robot. Autom. 1987, 3, 323–344. [Google Scholar] [CrossRef]

- Fu, Y.; Rong, S.; Liu, E.; Bao, Q. Calibration method and regulation algorithm of binocular distance measurement in the large scene of image monitoring for overhead transmission lines. High Volt. Eng. 2019, 45, 377–385. [Google Scholar]

- Selby, B.P.; Sakas, G.; Groch, W.D.; Stilla, U. Patient positioning with X-ray detector self-calibration for image guided therapy. Australas. Phys. Eng. Sci. Med. 2011, 34, 391–400. [Google Scholar] [CrossRef] [PubMed]

- Maybank, S.J.; Faugeras, O.D. A theory of self-calibration of a moving camera. Int. J. Comput. Vis. 1992, 8, 123–151. [Google Scholar] [CrossRef]

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698. [Google Scholar] [CrossRef]

- Smith, S.M.; Brady, J.M. SUSAN—A new approach to low level image processing. Int. J. Comput. Vis. 1997, 23, 45–78. [Google Scholar] [CrossRef]

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the 1994 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994. [Google Scholar]

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Proceedings, Part I 9. Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Lindeberg, T. Detecting salient blob-like image structures and their scales with a scale-space primal sketch: A method for focus-of-attention. Int. J. Comput. Vis. 1993, 11, 283–318. [Google Scholar] [CrossRef]

- Lindeberg, T. Edge detection and ridge detection with automatic scale selection. Int. J. Comput. Vis. 1998, 30, 117–156. [Google Scholar] [CrossRef]

- Cho, Y.; Kim, D.; Saeed, S.; Kakli, M.U.; Jung, S.H.; Seo, J.; Park, U. Keypoint detection using higher order Laplacian of Gaussian. IEEE Access 2020, 8, 10416–10425. [Google Scholar] [CrossRef]

- Peng, K.; Chen, X.; Zhou, D.; Liu, Y. 3D reconstruction based on SIFT and Harris feature points. In Proceedings of the 2009 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guilin, China, 19–23 December 2009. [Google Scholar]

- Shaker, S.H.; Hamza, N.A. 3D Face Reconstruction Using Structure from Motion Technique. Iraqi J. Inf. Technol. V 2019, 9, 2018. [Google Scholar] [CrossRef]

- Yamada, K.; Kimura, A. A performance evaluation of keypoints detection methods SIFT and AKAZE for 3D reconstruction. In Proceedings of the 2018 International Workshop on Advanced Image Technology (IWAIT), Chiang Mai, Thailand, 7–9 January 2018. [Google Scholar]

- Wu, S.; Feng, B. Parallel SURF Algorithm for 3D Reconstruction. In Proceedings of the 2019 International Conference on Modeling, Simulation, Optimization and Numerical Techniques (SMONT 2019), Shenzhen, China, 27–28 February 2019; Atlantis Press: Dordrecht, The Netherlands, 2019. [Google Scholar]

- Dawood, M.; Cappelle, C.; El Najjar, M.E.; Khalil, M.; Pomorski, D. Harris, SIFT and SURF features comparison for vehicle localization based on virtual 3D model and camera. In Proceedings of the 2012 3rd International Conference on Image Processing Theory, Tools and Applications (IPTA), Istanbul, Turkey, 15–18 October 2012. [Google Scholar]

- Hafiz, D.A.; Youssef, B.A.; Sheta, W.M.; Hassan, H.A. Interest point detection in 3D point cloud data using 3D Sobel-Harris operator. Int. J. Pattern Recognit. Artif. Intell. 2015, 29, 1555014. [Google Scholar] [CrossRef]

- Schmid, B.J.; Adhami, R. Building descriptors from local feature neighborhoods for applications in semi-dense 3D reconstruction. IEEE Trans. Image Process. 2018, 27, 5491–5500. [Google Scholar] [CrossRef] [PubMed]

- Cao, M.; Jia, W.; Li, S.; Li, Y.; Zheng, L.; Liu, X. GPU-accelerated feature tracking for 3D reconstruction. Opt. Laser Technol. 2019, 110, 165–175. [Google Scholar] [CrossRef]

- Fan, B.; Kong, Q.; Wang, X.; Wang, Z.; Xiang, S.; Pan, C.; Fua, P. A performance evaluation of local features for image-based 3D reconstruction. IEEE Trans. Image Process. 2019, 28, 4774–4789. [Google Scholar] [CrossRef] [PubMed]

- Lv, Q.; Lin, H.; Wang, G.; Wei, H.; Wang, Y. ORB-SLAM-based tracing and 3D reconstruction for robot using Kinect 2.0. In Proceedings of the 2017 29th Chinese Control and Decision Conference (CCDC), Chongqing, China, 28–30 May 2017. [Google Scholar]

- Ali, S.G.; Chen, Y.; Sheng, B.; Li, H.; Wu, Q.; Yang, P.; Muhammad, K.; Yang, G. Cost-effective broad learning-based ultrasound biomicroscopy with 3D reconstruction for ocular anterior segmentation. Multimed. Tools Appl. 2021, 80, 35105–35122. [Google Scholar] [CrossRef]

- Hane, C.; Zach, C.; Cohen, A.; Angst, R.; Pollefeys, M. Joint 3D scene reconstruction and class segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013. [Google Scholar]

- Yücer, K.; Sorkine-Hornung, A.; Wang, O.; Sorkine-Hornung, O. Efficient 3D object segmentation from densely sampled light fields with applications to 3D reconstruction. ACM Trans. Graph. TOG 2016, 35, 1–15. [Google Scholar] [CrossRef]

- Vargas, R.; Pineda, J.; Marrugo, A.G.; Romero, L.A. Background intensity removal in structured light three-dimensional reconstruction. In Proceedings of the 2016 XXI Symposium on Signal Processing, Images and Artificial Vision (STSIVA), Bucaramanga, Colombia, 31 August–2 September 2016. [Google Scholar]

- Colombo, M.; Bologna, M.; Garbey, M.; Berceli, S.; He, Y.; Matas, J.F.R.; Migliavacca, F.; Chiastra, C. Computing patient-specific hemodynamics in stented femoral artery models obtained from computed tomography using a validated 3D reconstruction method. Med. Eng. Phys. 2020, 75, 23–35. [Google Scholar] [CrossRef] [PubMed]

- Jin, A.; Fu, Q.; Deng, Z. Contour-based 3d modeling through joint embedding of shapes and contours. In Proceedings of the Symposium on Interactive 3D Graphics and Games, San Francisco, CA, USA, 5–7 May 2020. [Google Scholar]

- Xu, Z.; Kang, R.; Lu, R. 3D reconstruction and measurement of surface defects in prefabricated elements using point clouds. J. Comput. Civ. Eng. 2020, 34, 04020033. [Google Scholar] [CrossRef]

- Banerjee, A.; Camps, J.; Zacur, E.; Andrews, C.M.; Rudy, Y.; Choudhury, R.P.; Rodriguez, B.; Grau, V. A completely automated pipeline for 3D reconstruction of human heart from 2D cine magnetic resonance slices. Philos. Trans. R. Soc. A 2021, 379, 20200257. [Google Scholar] [CrossRef]

- Maken, P.; Gupta, A. 2D-to-3D: A review for computational 3D image reconstruction from X-ray images. Arch. Comput. Methods Eng. 2023, 30, 85–114. [Google Scholar] [CrossRef]

- Kundu, A.; Li, Y.; Dellaert, F.; Li, F.; Rehg, J.M. Joint semantic segmentation and 3d reconstruction from monocular video. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part VI 13. Springer International Publishing: Berlin/Heidelberg, Germany, 2014. [Google Scholar]

- Kundu, A.; Yin, X.; Fathi, A.; Ross, D.; Brewington, B.; Funkhouser, T.; Pantofaru, C. Virtual multi-view fusion for 3d semantic segmentation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXIV 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Hu, D.; Gan, V.J.L.; Yin, C. Robot-assisted mobile scanning for automated 3D reconstruction and point cloud semantic segmentation of building interiors. Autom. Constr. 2023, 152, 104949. [Google Scholar] [CrossRef]

- Jiang, C.; Paudel, D.P.; Fougerolle, Y.; Fofi, D.; Demonceaux, C. Static-map and dynamic object reconstruction in outdoor scenes using 3-d motion segmentation. IEEE Robot. Autom. Lett. 2016, 1, 324–331. [Google Scholar] [CrossRef]

- Wang, C.; Luo, B.; Zhang, Y.; Zhao, Q.; Yin, L.; Wang, W.; Su, X.; Wang, Y.; Li, C. DymSLAM: 4D dynamic scene reconstruction based on geometrical motion segmentation. IEEE Robot. Autom. Lett. 2020, 6, 550–557. [Google Scholar] [CrossRef]

- Ingale, A.K. Real-time 3D reconstruction techniques applied in dynamic scenes: A systematic literature review. Comput. Sci. Rev. 2021, 39, 100338. [Google Scholar] [CrossRef]

- Li, Z.; Wang, Q.; Cole, F.; Tucker, R.; Snavely, N. Dynibar: Neural dynamic image-based rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023. [Google Scholar]

- Yang, L.; Cai, H. Enhanced visual SLAM for construction robots by efficient integration of dynamic object segmentation and scene semantics. Adv. Eng. Inform. 2024, 59, 102313. [Google Scholar] [CrossRef]

- Pathegama, M.P.; Göl, Ö. Edge-end pixel extraction for edge-based image segmentation. Int. J. Comput. Inf. Eng. 2007, 1, 453–456. [Google Scholar]

- Phan, T.B.; Trinh, D.H.; Wolf, D.; Daul, C. Optical flow-based structure-from-motion for the reconstruction of epithelial surfaces. Pattern Recognit. 2020, 105, 107391. [Google Scholar] [CrossRef]

- Weng, N.; Yang, Y.H.; Pierson, R. 3D surface reconstruction using optical flow for medical imaging. IEEE Trans. Med. Imaging 1997, 16, 630–641. [Google Scholar] [CrossRef]

- Barghout, L.; Sheynin, J. Real-world scene perception and perceptual organization: Lessons from Computer Vision. J. Vis. 2013, 13, 709. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer International Publishing: Berlin/Heidelberg, Germany, 2015. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Zhou, J.; Hao, M.; Zhang, D.; Zou, P.; Zhang, W. Fusion PSPnet image segmentation based method for multi-focus image fusion. IEEE Photonics J. 2019, 11, 1–12. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017. [Google Scholar]

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023. [Google Scholar]

- Sun, J.; Wang, X.; Wang, L.; Li, X.; Zhang, Y.; Zhang, H.; Liu, Y. Next3d: Generative neural texture rasterization for 3d-aware head avatars. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 20991–21002. [Google Scholar]

- Dib, A.; Bharaj, G.; Ahn, J.; Thébault, C.; Gosselin, P.; Romeo, M.; Chevallier, L. Practical face reconstruction via differentiable ray tracing. Comput. Graph. Forum 2021, 40, 153–164. [Google Scholar] [CrossRef]

- Zwicker, M.; Jarosz, W.; Lehtinen, J.; Moon, B.; Ramamoorthi, R.; Rousselle, F.; Sen, P.; Soler, C.; Yoon, S.E. Recent advances in adaptive sampling and reconstruction for Monte Carlo rendering. Comput. Graph. Forum 2015, 34, 667–681. [Google Scholar] [CrossRef]

- Azinovic, D.; Li, T.M.; Kaplanyan, A.; Nießner, M. Inverse path tracing for joint material and lighting estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2447–2456. [Google Scholar]

- Khadka, A.R.; Remagnino, P.; Argyriou, V. Object 3D reconstruction based on photometric stereo and inverted rendering. In Proceedings of the 2018 14th International Conference on Signal-Image Technology and Internet-Based Systems (SITIS), Las Palmas de Gran Canaria, Spain, 26–29 November 2018; pp. 208–215. [Google Scholar]

- Savarese, S.; Andreetto, M.; Rushmeier, H.; Bernardini, F.; Perona, P. 3d reconstruction by shadow carving: Theory and practical evaluation. Int. J. Comput. Vis. 2007, 71, 305–336. [Google Scholar] [CrossRef]

- Beeler, T.; Bradley, D.; Zimmer, H.; Gross, M. Improved reconstruction of deforming surfaces by cancelling ambient occlusion. In Proceedings of the Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part I 12. Springer: Berlin/Heidelberg, Germany, 2012; pp. 30–43. [Google Scholar]

- Buchholz, H.; Döllner, J.; Nienhaus, M.; Kirsch, F. Realtime non-photorealistic rendering of 3D city models. In Proceedings of the 1st International Workshop on Next Generation 3D City Models, Bonn, Germany, 21–22 June 2005; pp. 83–88. [Google Scholar]

- Levoy, M. Display of surfaces from volume data. IEEE Comput. Graph. Appl. 1988, 8, 29–37. [Google Scholar] [CrossRef]

- Lacroute, P.; Levoy, M. Fast volume rendering using a shear-warp factorization of the viewing transformation. In Proceedings of the 21st Annual Conference on Computer Graphics and Interactive Techniques, New York, NY, USA, 24–29 July 1994; pp. 451–458. [Google Scholar]

- Zwicker, M.; Pfister, H.; Van Baar, J.; Gross, M. EWA splatting. IEEE Trans. Vis. Comput. Graph. 2002, 8, 223–238. [Google Scholar] [CrossRef]

- Yu, T.; Guo, K.; Xu, F.; Dong, Y.; Su, Z.; Zhao, J.; Li, J.; Dai, Q.; Liu, Y. Bodyfusion: Real-time capture of human motion and surface geometry using a single depth camera. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 910–919. [Google Scholar]

- Shao, R.; Zheng, Z.; Tu, H.; Liu, B.; Zhang, H.; Liu, Y. Tensor4d: Efficient neural 4d decomposition for high-fidelity dynamic reconstruction and rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16632–16642. [Google Scholar]

- Sun, K.; Zhang, J.; Liu, J.; Yu, R.; Song, Z. DRCNN: Dynamic routing convolutional neural network for multi-view 3D object recognition. IEEE Trans. Image Process. 2020, 30, 868–877. [Google Scholar] [CrossRef] [PubMed]

- Schmied, A.; Fischer, T.; Danelljan, M.; Pollefeys, M.; Yu, F. R3d3: Dense 3d reconstruction of dynamic scenes from multiple cameras. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 3216–3226. [Google Scholar]

- Loper, M.; Mahmood, N.; Romero, J.; Pons-Moll, G.; Black, M.J. SMPL: A skinned multi-person linear model. In Seminal Graphics Papers: Pushing the Boundaries; Association for Computing Machinery: New York, NY, USA, 2023; Volume 2, pp. 851–866. [Google Scholar]

- Romero, J.; Tzionas, D.; Black, M.J. Embodied hands: Modeling and capturing hands and bodies together. arXiv 2022, arXiv:2201.02610. [Google Scholar] [CrossRef]

- Matsuyama, T.; Wu, X.; Takai, T.; Wada, T. Real-time dynamic 3-D object shape reconstruction and high-fidelity texture mapping for 3-D video. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 357–369. [Google Scholar] [CrossRef]

- Newcombe, R.A.; Fox, D.; Seitz, S.M. Dynamicfusion: Reconstruction and tracking of non-rigid scenes in real-time. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 343–352. [Google Scholar]

- Innmann, M.; Zollhöfer, M.; Nießner, M.; Theobalt, C.; Stamminger, M. Volumedeform: Real-time volumetric non-rigid reconstruction. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VIII 14. Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 362–379. [Google Scholar]

- Yu, T.; Zheng, Z.; Guo, K.; Zhao, J.; Dai, Q.; Li, H.; Pons-Moll, G.; Liu, Y. Doublefusion: Real-time capture of human performances with inner body shapes from a single depth sensor. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7287–7296. [Google Scholar]

- Dou, M.; Khamis, S.; Degtyarev, Y.; Davidson, P.; Fanello, S.R.; Kowdle, A.; Escolano, S.O.; Rhemann, C.; Kim, D.; Taylor, J.; et al. Fusion4d: Real-time performance capture of challenging scenes. ACM Trans. Graph. TOG 2016, 35, 1–13. [Google Scholar] [CrossRef]

- Lin, W.; Zheng, C.; Yong, J.H.; Xu, F. Occlusionfusion: Occlusion-aware motion estimation for real-time dynamic 3d reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1736–1745. [Google Scholar]

- Pan, Z.; Hou, J.; Yu, L. Optimization RGB-D 3-D reconstruction algorithm based on dynamic SLAM. IEEE Trans. Instrum. Meas. 2023, 72, 1–13. [Google Scholar] [CrossRef]

- Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3d gaussian splatting for real-time radiance field rendering. ACM Trans. Graph. 2023, 42, 1–14. [Google Scholar] [CrossRef]

- Yan, Y.; Lin, H.; Zhou, C.; Wang, W.; Sun, H.; Zhan, K.; Lang, X.; Zhou, X.; Peng, S. Street gaussians for modeling dynamic urban scenes. arXiv 2024, arXiv:2401.01339. [Google Scholar]

- Guédon, A.; Lepetit, V. Sugar: Surface-aligned gaussian splatting for efficient 3d mesh reconstruction and high-quality mesh rendering. arXiv 2023, arXiv:2311.12775. [Google Scholar]

- Xie, T.; Zong, Z.; Qiu, Y.; Li, X.; Feng, Y.; Yang, Y.; Jiang, C. Physgaussian: Physics-integrated 3d gaussians for generative dynamics. arXiv 2023, arXiv:2311.12198. [Google Scholar]

- Chen, G.; Wang, W. A survey on 3d gaussian splatting. arXiv 2024, arXiv:2401.03890. [Google Scholar]

- Meyer, L.; Erich, F.; Yoshiyasu, Y.; Stamminger, M.; Ando, N.; Domae, Y. PEGASUS: Physically Enhanced Gaussian Splatting Simulation System for 6DOF Object Pose Dataset Generation. arXiv 2024, arXiv:2401.02281. [Google Scholar]

- Chung, J.; Oh, J.; Lee, K.M. Depth-regularized optimization for 3d gaussian splatting in few-shot images. arXiv 2023, arXiv:2311.13398. [Google Scholar]

- Wu, G.; Yi, T.; Fang, J.; Xie, L.; Zhang, X.; Wei, W.; Liu, W.; Tian, Q.; Wang, X. 4d gaussian splatting for real-time dynamic scene rendering. arXiv 2023, arXiv:2310.08528. [Google Scholar]

- Liu, Y.; Li, C.; Yang, C.; Yuan, Y. EndoGaussian: Gaussian Splatting for Deformable Surgical Scene Reconstruction. arXiv 2024, arXiv:2401.12561. [Google Scholar]

- Lin, J.; Li, Z.; Tang, X.; Liu, J.; Liu, S.; Liu, J.; Lu, Y.; Wu, X.; Xu, S.; Yan, Y.; et al. VastGaussian: Vast 3D Gaussians for Large Scene Reconstruction. arXiv 2024, arXiv:2402.17427. [Google Scholar]

- Jiang, Z.; Rahmani, H.; Black, S.; Williams, B.M. 3D Points Splatting for Real-Time Dynamic Hand Reconstruction. arXiv 2023, arXiv:2312.13770. [Google Scholar]

- Chen, H.; Li, C.; Lee, G.H. Neusg: Neural implicit surface reconstruction with 3d gaussian splatting guidance. arXiv 2023, arXiv:2312.00846. [Google Scholar]

- Gao, L.; Yang, J.; Zhang, B.T.; Sun, J.M.; Yuan, Y.J.; Fu, H.; Lai, Y.K. Mesh-based Gaussian Splatting for Real-time Large-scale Deformation. arXiv 2024, arXiv:2402.04796. [Google Scholar]

- Magnabosco, M.; Breckon, T.P. Cross-spectral visual simultaneous localization and mapping (SLAM) with sensor handover. Robot. Auton. Syst. 2013, 61, 195–208. [Google Scholar] [CrossRef]

- Li, M.; He, J.; Jiang, G.; Wang, H. DDN-SLAM: Real-time Dense Dynamic Neural Implicit SLAM with Joint Semantic Encoding. arXiv 2024, arXiv:2401.01545. [Google Scholar]

- Bloesch, M.; Czarnowski, J.; Clark, R.; Leutenegger, S.; Davison, A.J. Codeslam—Learning a compact, optimisable representation for dense visual slam. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2560–2568. [Google Scholar]

- Rosinol, A.; Leonard, J.J.; Carlone, L. Nerf-slam: Real-time dense monocular slam with neural radiance fields. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 3437–3444. [Google Scholar]

- Weingarten, J.; Siegwart, R. EKF-based 3D SLAM for structured environment reconstruction. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 3834–3839. [Google Scholar]

- Li, T.; Hailes, S.; Julier, S.; Liu, M. UAV-based SLAM and 3D reconstruction system. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macau, China, 5–8 December 2017; pp. 2496–2501. [Google Scholar]

- Zhang, Y.; Tosi, F.; Mattoccia, S.; Poggi, M. Go-slam: Global optimization for consistent 3d instant reconstruction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 3727–3737. [Google Scholar]

- Yan, C.; Qu, D.; Wang, D.; Xu, D.; Wang, Z.; Zhao, B.; Li, X. Gs-slam: Dense visual slam with 3d gaussian splatting. arXiv 2023, arXiv:2311.11700. [Google Scholar]

- Matsuki, H.; Murai, R.; Kelly, P.H.; Davison, A.J. Gaussian splatting slam. arXiv 2023, arXiv:2312.06741. [Google Scholar]

- Blanz, V.; Mehl, A.; Vetter, T.; Seidel, H.P. A statistical method for robust 3D surface reconstruction from sparse data. In Proceedings of the 2nd International Symposium on 3D Data Processing, Visualization and Transmission, 3DPVT 2004, Thessaloniki, Greece, 6–9 September 2004; pp. 293–300. [Google Scholar]

- Zuffi, S.; Kanazawa, A.; Jacobs, D.W.; Black, M.J. 3D menagerie: Modeling the 3D shape and pose of animals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6365–6373. [Google Scholar]

- Fernando, K.R.M.; Tsokos, C.P. Deep and statistical learning in biomedical imaging: State of the art in 3D MRI brain tumor segmentation. Inf. Fusion 2023, 92, 450–465. [Google Scholar] [CrossRef]

- Huang, Y.; Zheng, W.; Zhang, Y.; Zhou, J.; Lu, J. Tri-perspective view for vision-based 3d semantic occupancy prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9223–9232. [Google Scholar]

- Ming, Z.; Berrio, J.S.; Shan, M.; Worrall, S. InverseMatrixVT3D: An Efficient Projection Matrix-Based Approach for 3D Occupancy Prediction. arXiv 2024, arXiv:2401.12422. [Google Scholar]

- Li, X.; Liu, S.; Kim, K.; De Mello, S.; Jampani, V.; Yang, M.H.; Kautz, J. Self-supervised single-view 3d reconstruction via semantic consistency. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIV 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Lin, J.; Yuan, Y.; Shao, T.; Zhou, K. Towards high-fidelity 3d face reconstruction from in-the-wild images using graph convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 5891–5900. [Google Scholar]

- Wang, S.; Clark, R.; Wen, H.; Trigoni, N. Deepvo: Towards end-to-end visual odometry with deep recurrent convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 2043–2050. [Google Scholar]

- Jackson, A.S.; Bulat, A.; Argyriou, V.; Tzimiropoulos, G. Large pose 3D face reconstruction from a single image via direct volumetric CNN regression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1031–1039. [Google Scholar]

- Dou, P.; Shah, S.K.; Kakadiaris, I.A. End-to-end 3D face reconstruction with deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5908–5917. [Google Scholar]

- Yao, Y.; Luo, Z.; Li, S.; Fang, T.; Quan, L. Mvsnet: Depth inference for unstructured multi-view stereo. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 767–783. [Google Scholar]

- Gu, X.; Fan, Z.; Zhu, S.; Dai, Z.; Tan, F.; Tan, P. Cascade cost volume for high-resolution multi-view stereo and stereo matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2495–2504. [Google Scholar]

- Sun, J.; Xie, Y.; Chen, L.; Zhou, X.; Bao, H. Neuralrecon: Real-time coherent 3d reconstruction from monocular video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15598–15607. [Google Scholar]

- Ju, Y.; Lam, K.M.; Xie, W.; Zhou, H.; Dong, J.; Shi, B. Deep learning methods for calibrated photometric stereo and beyond: A survey. arXiv 2022, arXiv:2212.08414. [Google Scholar]

- Santo, H.; Samejima, M.; Sugano, Y.; Shi, B.; Matsushita, Y. Deep photometric stereo network. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017. [Google Scholar]

- Wang, X.; Jian, Z.; Ren, M. Non-lambertian photometric stereo network based on inverse reflectance model with collocated light. IEEE Trans. Image Process. 2020, 29, 6032–6042. [Google Scholar] [CrossRef] [PubMed]

- Chen, G.; Han, K.; Shi, B.; Matsushita, Y.; Wong, K.Y.K. Deep photometric stereo for non-lambertian surfaces. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 129–142. [Google Scholar] [CrossRef] [PubMed]

- Ju, Y.; Shi, B.; Chen, Y.; Zhou, H.; Dong, J.; Lam, K.M. GR-PSN: Learning to estimate surface normal and reconstruct photometric stereo images. IEEE Trans. Vis. Comput. Graph. 2023, 1–16. [Google Scholar] [CrossRef] [PubMed]

- Ikehata, S. CNN-PS: CNN-based photometric stereo for general non-convex surfaces. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Ju, Y.; Shi, B.; Jian, M.; Qi, L.; Dong, J.; Lam, K.M. Normattention-psn: A high-frequency region enhanced photometric stereo network with normalized attention. Int. J. Comput. Vis. 2022, 130, 3014–3034. [Google Scholar] [CrossRef]

- Ju, Y.; Dong, J.; Chen, S. Recovering surface normal and arbitrary images: A dual regression network for photometric stereo. IEEE Trans. Image Process. 2021, 30, 3676–3690. [Google Scholar] [CrossRef] [PubMed]

- Ikehata, S. Scalable, detailed and mask-free universal photometric stereo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023. [Google Scholar]

- Maturana, D.; Scherer, S. Voxnet: A 3d convolutional neural network for real-time object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015. [Google Scholar]

- Engelcke, M.; Rao, D.; Wang, D.Z.; Tong, C.H.; Posner, I. Vote3deep: Fast object detection in 3d point clouds using efficient convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017. [Google Scholar]

- Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015. [Google Scholar]

- Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. Std: Sparse-to-dense 3d object detector for point cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 5105–5114. [Google Scholar]

- Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Chen, Y.; Fu, M.; Shen, K. Point-BLS: 3D Point Cloud Classification Combining Deep Learning and Broad Learning System. In Proceedings of the 2022 34th Chinese Control and Decision Conference (CCDC), Hefei, China, 21–23 May 2022. [Google Scholar]

- Zhou, Z.; Jin, X.; Liu, L.; Zhou, F. Three-Dimensional Geometry Reconstruction Method from Multi-View ISAR Images Utilizing Deep Learning. Remote Sens. 2023, 15, 1882. [Google Scholar] [CrossRef]

- Xiao, A.; Huang, J.; Guan, D.; Zhang, X.; Lu, S.; Shao, L. Unsupervised point cloud representation learning with deep neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 11321–11339. [Google Scholar] [CrossRef] [PubMed]

- Kramer, M.A. Nonlinear principal component analysis using autoassociative neural networks. AIChE J. 1991, 37, 233–243. [Google Scholar] [CrossRef]

- Wu, J.; Zhang, C.; Xue, T.; Freeman, B.; Tenenbaum, J. Learning a probabilistic latent space of object shapes via 3d generative-adversarial modeling. Adv. Neural Inf. Process. Syst. 2016, 29, 82–90. [Google Scholar]

- Achlioptas, P.; Diamanti, O.; Mitliagkas, I.; Guibas, L. Learning representations and generative models for 3d point clouds. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018. [Google Scholar]

- Li, R.; Li, X.; Fu, C.W.; Cohen-Or, D.; Heng, P.A. Pu-gan: A point cloud upsampling adversarial network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Wen, X.; Li, T.; Han, Z.; Liu, Y.S. Point cloud completion by skip-attention network with hierarchical folding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020. [Google Scholar]

- Yu, X.; Tang, L.; Rao, Y.; Huang, T.; Zhou, J.; Lu, J. Point-bert: Pre-training 3d point cloud transformers with masked point modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Sanghi, A. Info3d: Representation learning on 3d objects using mutual information maximization and contrastive learning. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXIX 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Poursaeed, O.; Jiang, T.; Qiao, H.; Xu, N.; Kim, V.G. Self-supervised learning of point clouds via orientation estimation. In Proceedings of the 2020 International Conference on 3D Vision (3DV), Fukuoka, Japan, 25–28 November 2020. [Google Scholar]

- Chen, Y.; Liu, J.; Ni, B.; Wang, H.; Yang, J.; Liu, N.; Li, T.; Tian, Q. Shape self-correction for unsupervised point cloud understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021. [Google Scholar]

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. Nerf: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106. [Google Scholar] [CrossRef]

- Barron, J.T.; Mildenhall, B.; Tancik, M.; Hedman, P.; Martin-Brualla, R.; Srinivasan, P.P. Mip-nerf: A multiscale representation for anti-aliasing neural radiance fields. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 5855–5864. [Google Scholar]

- Wang, P.; Liu, L.; Liu, Y.; Theobalt, C.; Komura, T.; Wang, W. NeuS: Learning neural implicit surfaces by volume rendering for multi-view reconstruction. arXiv 2021, arXiv:2106.10689. [Google Scholar]

- Tancik, M.; Casser, V.; Yan, X.; Pradhan, S.; Mildenhall, B.; Srinivasan, P.P.; Barron, J.T.; Kretzschmar, H. Block-NeRF: Scalable large scene neural view synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8248–8258. [Google Scholar]

- Turki, H.; Ramanan, D.; Satyanarayanan, M. Mega-NeRF: Scalable construction of large-scale NeRFs for virtual fly-throughs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12922–12931. [Google Scholar]

- Müller, T.; Evans, A.; Schied, C.; Keller, A. Instant neural graphics primitives with a multiresolution hash encoding. ACM Trans. Graph. 2022, 41, 1–15. [Google Scholar] [CrossRef]

- Vora, S.; Radwan, N.; Greff, K.; Meyer, H.; Genova, K.; Sajjadi, M.S.; Pot, E.; Tagliasacchi, A.; Duckworth, D. NeSF: Neural semantic fields for generalizable semantic segmentation of 3D scenes. arXiv 2021, arXiv:2111.13260. [Google Scholar]

- Barron, J.T.; Mildenhall, B.; Verbin, D.; Srinivasan, P.P.; Hedman, P. Mip-NeRF 360: Unbounded anti-aliased neural radiance fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Fu, Q.; Xu, Q.; Ong, Y.S.; Tao, W. Geo-NeuS: Geometry-consistent neural implicit surfaces learning for multi-view reconstruction. Adv. Neural Inf. Process. Syst. 2022, 35, 3403–3416. [Google Scholar]

- Vinod, V.; Shah, T.; Lagun, D. TEGLO: High Fidelity Canonical Texture Mapping from Single-View Images. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023. [Google Scholar]

- Dai, P.; Tan, F.; Yu, X.; Zhang, Y.; Qi, X. GO-NeRF: Generating Virtual Objects in Neural Radiance Fields. arXiv 2024, arXiv:2401.05750. [Google Scholar]

- Li, M.; Lu, M.; Li, X.; Zhang, S. RustNeRF: Robust Neural Radiance Field with Low-Quality Images. arXiv 2024, arXiv:2401.03257. [Google Scholar]

- Chen, H.; Gu, J.; Chen, A.; Tian, W.; Tu, Z.; Liu, L.; Su, H. Single-stage diffusion NeRF: A unified approach to 3D generation and reconstruction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 2416–2425. [Google Scholar]

- Kania, K.; Yi, K.M.; Kowalski, M.; Trzciński, T.; Tagliasacchi, A. CoNeRF: Controllable neural radiance fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18623–18632. [Google Scholar]

- Ramazzina, A.; Bijelic, M.; Walz, S.; Sanvito, A.; Scheuble, D.; Heide, F. ScatterNeRF: Seeing Through Fog with Physically-Based Inverse Neural Rendering. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 17957–17968. [Google Scholar]

- Zhu, Y.; Mottaghi, R.; Kolve, E.; Lim, J.J.; Gupta, A.; Fei-Fei, L.; Farhadi, A. Target-driven visual navigation in indoor scenes using deep reinforcement learning. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017. [Google Scholar]

- Han, X.; Zhang, Z.; Du, D.; Yang, M.; Yu, J.; Pan, P.; Yang, X.; Liu, L.; Xiong, Z.; Cui, S. Deep reinforcement learning of volume-guided progressive view inpainting for 3D point scene completion from a single depth image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Song, H.; Huang, J.; Cao, Y.P.; Mu, T.J. HDR-Net-Fusion: Real-time 3D dynamic scene reconstruction with a hierarchical deep reinforcement network. Comput. Vis. Media 2021, 7, 419–435. [Google Scholar] [CrossRef]

- Li, K.; Li, A.; Xu, Y.; Xiong, H.; Meng, M.Q.H. RL-TEE: Autonomous probe guidance for transesophageal echocardiography based on attention-augmented deep reinforcement learning. IEEE Trans. Autom. Sci. Eng. 2023, 1–13. [Google Scholar] [CrossRef]

- Li, L.; He, F.; Fan, R.; Fan, B.; Yan, X. 3D reconstruction based on hierarchical reinforcement learning with transferability. Integr. Comput.-Aided Eng. 2023, 30, 327–339. [Google Scholar]

- Ze, Y.; Hansen, N.; Chen, Y.; Jain, M.; Wang, X. Visual reinforcement learning with self-supervised 3D representations. IEEE Robot. Autom. Lett. 2023, 8, 2890–2897. [Google Scholar] [CrossRef]

- Gao, Y.; Wu, J.; Yang, X.; Ji, Z. Efficient hierarchical reinforcement learning for mapless navigation with predictive neighbouring space scoring. IEEE Trans. Autom. Sci. Eng. 2023, 1–16. [Google Scholar] [CrossRef]

- Yang, X.; Ji, Z.; Wu, J.; Lai, Y.K.; Wei, C.; Liu, G.; Setchi, R. Hierarchical reinforcement learning with universal policies for multistep robotic manipulation. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 4727–4741. [Google Scholar] [CrossRef] [PubMed]

- Wu, C.Y.; Johnson, J.; Malik, J.; Feichtenhofer, C.; Gkioxari, G. Multiview compressive coding for 3D reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9065–9075. [Google Scholar]

- Pontes, J.K.; Kong, C.; Sridharan, S.; Lucey, S.; Eriksson, A.; Fookes, C. Image2Mesh: A learning framework for single image 3D reconstruction. In Proceedings of the Computer Vision–ACCV 2018: 14th Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Revised Selected Papers, Part I. Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 365–381. [Google Scholar]

- Feng, Y.; Wu, F.; Shao, X.; Wang, Y.; Zhou, X. Joint 3D face reconstruction and dense alignment with position map regression network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 534–551. [Google Scholar]

- Favalli, M.; Fornaciai, A.; Isola, I.; Tarquini, S.; Nannipieri, L. Multiview 3D reconstruction in geosciences. Comput. Geosci. 2012, 44, 168–176. [Google Scholar] [CrossRef]

- Yang, B.; Wen, H.; Wang, S.; Clark, R.; Markham, A.; Trigoni, N. 3D object reconstruction from a single depth view with adversarial learning. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 679–688. [Google Scholar]

- Wang, L.; Fang, Y. Unsupervised 3D reconstruction from a single image via adversarial learning. arXiv 2017, arXiv:1711.09312. [Google Scholar]

- Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1325–1339. [Google Scholar] [CrossRef] [PubMed]

- Pishchulin, L.; Insafutdinov, E.; Tang, S.; Andres, B.; Andriluka, M.; Gehler, P.V.; Schiele, B. DeepCut: Joint subset partition and labeling for multi person pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4929–4937. [Google Scholar]

- Zhang, C.; Pujades, S.; Black, M.J.; Pons-Moll, G. Detailed, accurate, human shape estimation from clothed 3D scan sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Lassner, C.; Romero, J.; Kiefel, M.; Bogo, F.; Black, M.J.; Gehler, P.V. Unite the people: Closing the loop between 3D and 2D human representations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Chen, Q.; Zhang, C.; Liu, W.; Wang, D. SHPD: Surveillance human pose dataset and performance evaluation for coarse-grained pose estimation. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018. [Google Scholar]

- Pavlakos, G.; Choutas, V.; Ghorbani, N.; Bolkart, T.; Osman, A.A.; Tzionas, D.; Black, M.J. Expressive body capture: 3D hands, face, and body from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019. [Google Scholar]

- Zheng, Z.; Yu, T.; Wei, Y.; Dai, Q.; Liu, Y. DeepHuman: 3D human reconstruction from a single image. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Yu, Z.; Yoon, J.S.; Lee, I.K.; Venkatesh, P.; Park, J.; Yu, J.; Park, H.S. HUMBI: A large multiview dataset of human body expressions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020. [Google Scholar]

- Chatzitofis, A.; Saroglou, L.; Boutis, P.; Drakoulis, P.; Zioulis, N.; Subramanyam, S.; Kevelham, B.; Charbonnier, C.; Cesar, P.; Zarpalas, D.; et al. HUMAN4D: A human-centric multimodal dataset for motions and immersive media. IEEE Access 2020, 8, 176241–176262. [Google Scholar] [CrossRef]

- Taheri, O.; Ghorbani, N.; Black, M.J.; Tzionas, D. GRAB: A dataset of whole-body human grasping of objects. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part IV. Springer International Publishing: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Zhu, X.; Liao, T.; Lyu, J.; Yan, X.; Wang, Y.; Guo, K.; Cao, Q.; Li, Z.S.; Lei, Z. MVP-Human dataset for 3D human avatar reconstruction from unconstrained frames. arXiv 2022, arXiv:2204.11184. [Google Scholar]

- Pumarola, A.; Sanchez-Riera, J.; Choi, G.; Sanfeliu, A.; Moreno-Noguer, F. 3DPeople: Modeling the geometry of dressed humans. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012. [Google Scholar]

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from RGBD images. In Proceedings of the Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part V. Springer: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- Xiao, J.; Owens, A.; Torralba, A. SUN3D: A database of big spaces reconstructed using SfM and object labels. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013. [Google Scholar]

- Couprie, C.; Farabet, C.; Najman, L.; LeCun, Y. Indoor semantic segmentation using depth information. arXiv 2013, arXiv:1301.3572. [Google Scholar]

- Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An information-rich 3D model repository. arXiv 2015, arXiv:1512.03012. [Google Scholar]

- Song, S.; Lichtenberg, S.P.; Xiao, J. SUN RGB-D: A RGB-D scene understanding benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- McCormac, J.; Handa, A.; Leutenegger, S.; Davison, A.J. SceneNet RGB-D: 5M photorealistic images of synthetic indoor trajectories with ground truth. arXiv 2016, arXiv:1612.05079. [Google Scholar]

- Hua, B.S.; Pham, Q.H.; Nguyen, D.T.; Tran, M.K.; Yu, L.F.; Yeung, S.K. SceneNN: A scene meshes dataset with annotations. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016. [Google Scholar]

- Song, S.; Yu, F.; Zeng, A.; Chang, A.X.; Savva, M.; Funkhouser, T. Semantic scene completion from a single depth image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Wasenmüller, O.; Meyer, M.; Stricker, D. CoRBS: Comprehensive RGB-D benchmark for SLAM using Kinect v2. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016. [Google Scholar]

- Chang, A.; Dai, A.; Funkhouser, T.; Halber, M.; Niessner, M.; Savva, M.; Song, S.; Zeng, A.; Zhang, Y. Matterport3D: Learning from RGB-D data in indoor environments. arXiv 2017, arXiv:1709.06158. [Google Scholar]

- Armeni, I.; Sax, S.; Zamir, A.R.; Savarese, S. Joint 2D-3D-semantic data for indoor scene understanding. arXiv 2017, arXiv:1702.01105. [Google Scholar]

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Li, W.; Saeedi, S.; McCormac, J.; Clark, R.; Tzoumanikas, D.; Ye, Q.; Huang, Y.; Tang, R.; Leutenegger, S. InteriorNet: Mega-scale multi-sensor photo-realistic indoor scenes dataset. arXiv 2018, arXiv:1809.00716. [Google Scholar]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012. [Google Scholar]

- Xiang, Y.; Mottaghi, R.; Savarese, S. Beyond PASCAL: A benchmark for 3D object detection in the wild. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Steamboat Springs, CO, USA, 24–26 March 2014. [Google Scholar]