Optimisation and Calibration of Bayesian Neural Network for Probabilistic Prediction of Biogas Performance in an Anaerobic Lagoon

Abstract

1. Introduction

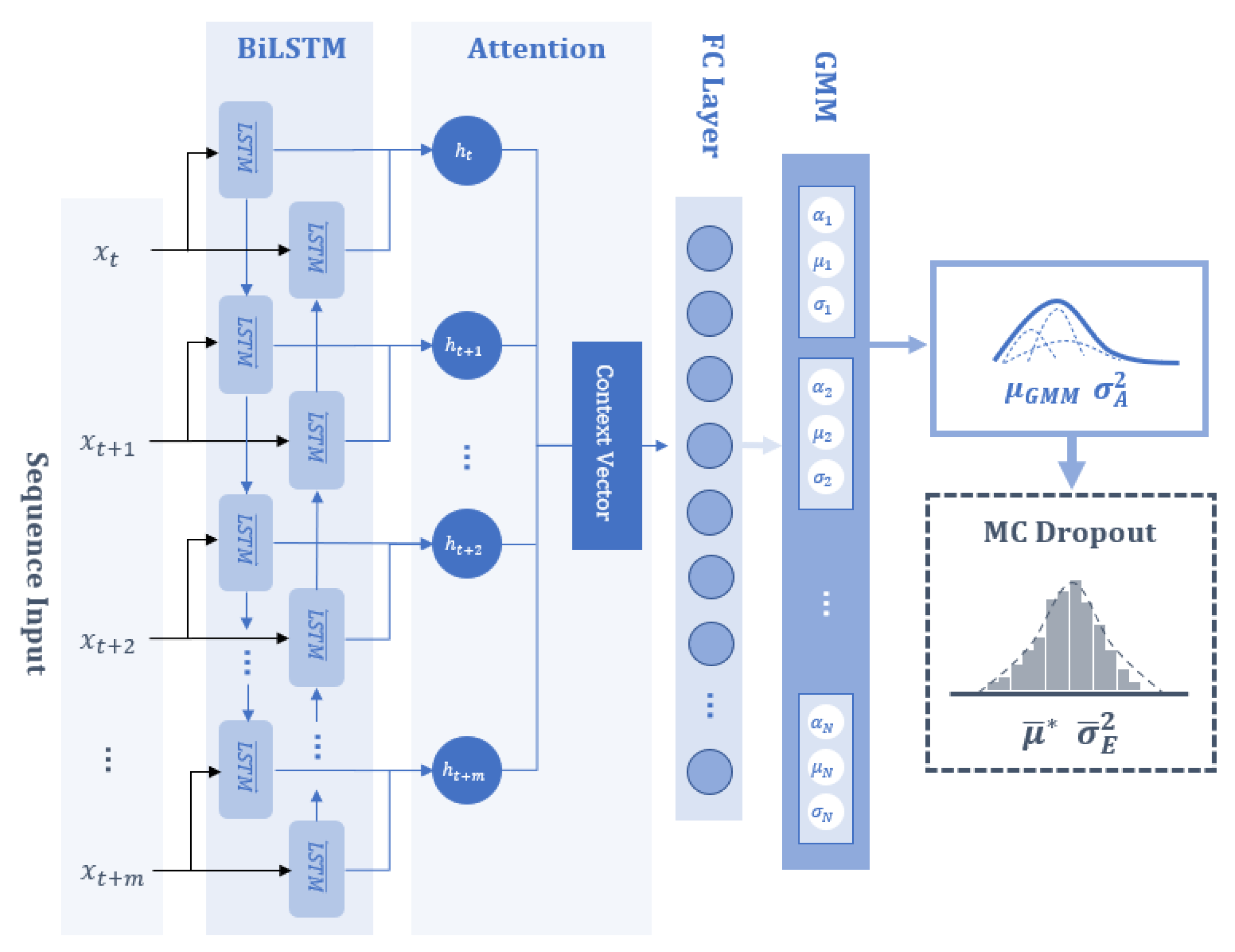

2. Neural Network Architecture

2.1. Recurrent Neural Networks and LSTM

2.2. Bidirectional Long Short-Term Memory

2.3. Attention Mechanism

2.4. Bayesian Neural Networks for Epistemic Uncertainty

2.5. Mixture Density Networks Using Gaussian Mixture Models for Aleatoric Uncertainty

2.6. Proposed Bayesian Mixture Density Neural Network Architecture

2.7. Model Calibration

3. Method

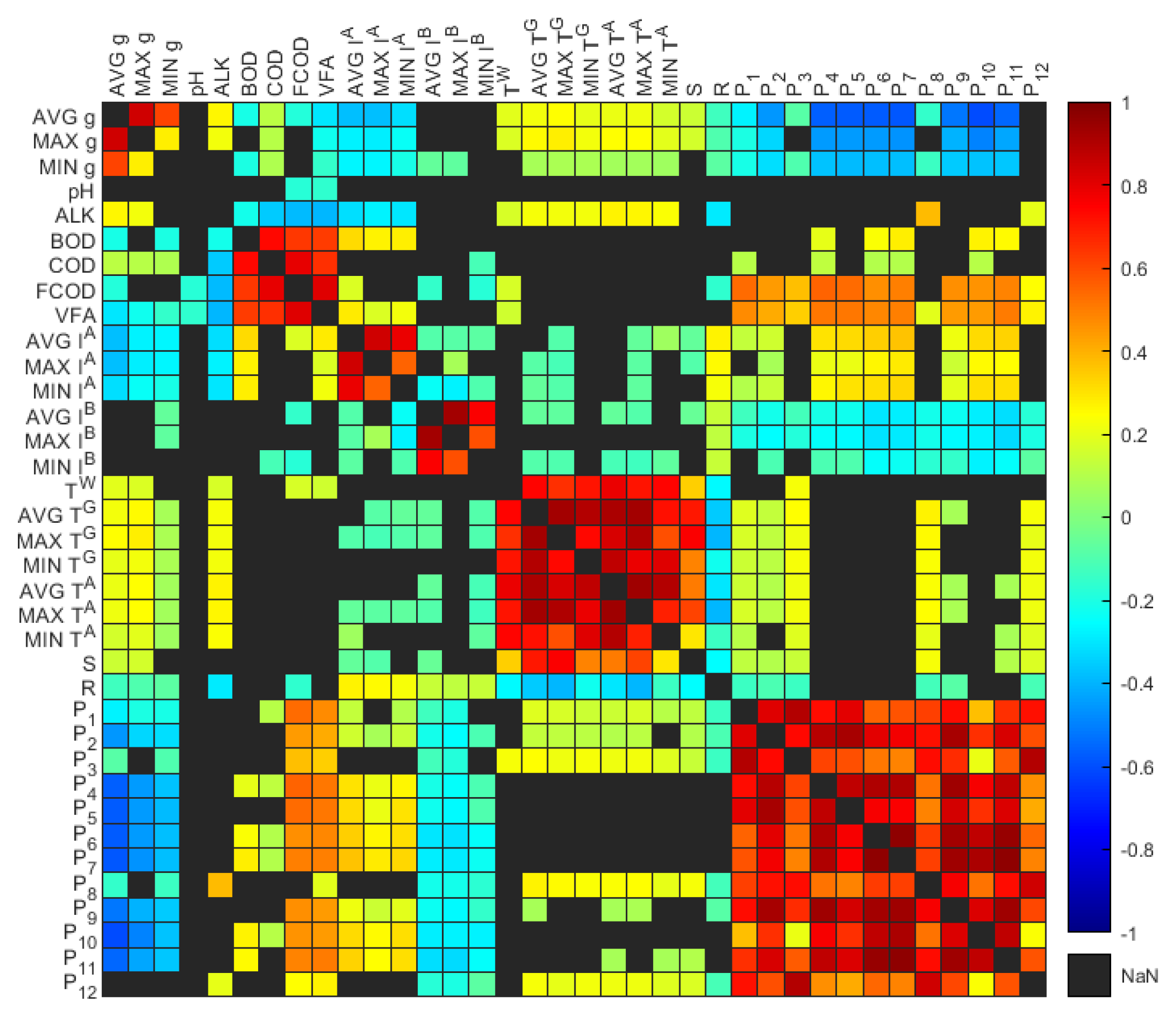

3.1. Data Preparation

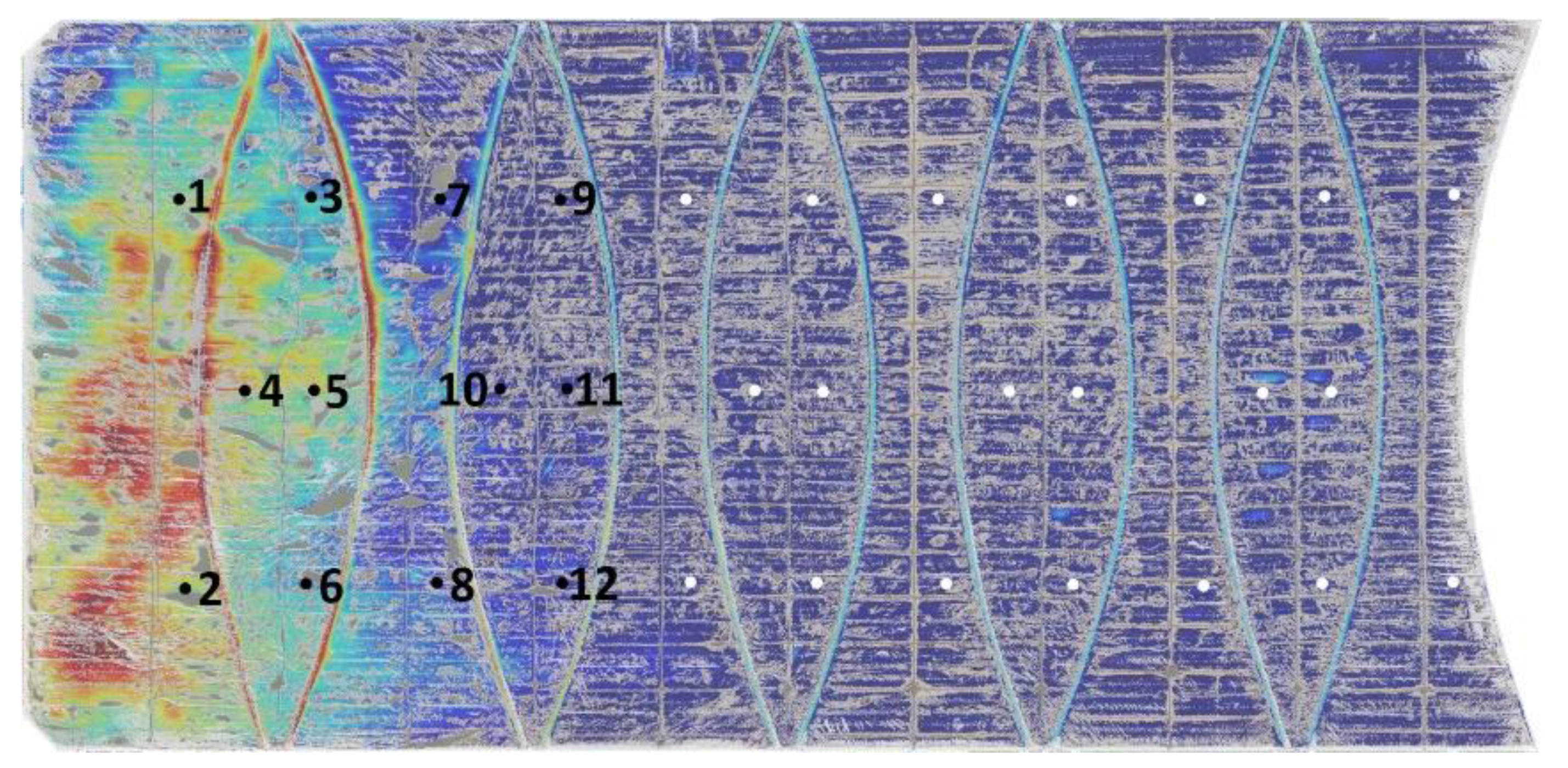

3.2. Inspection Parameters

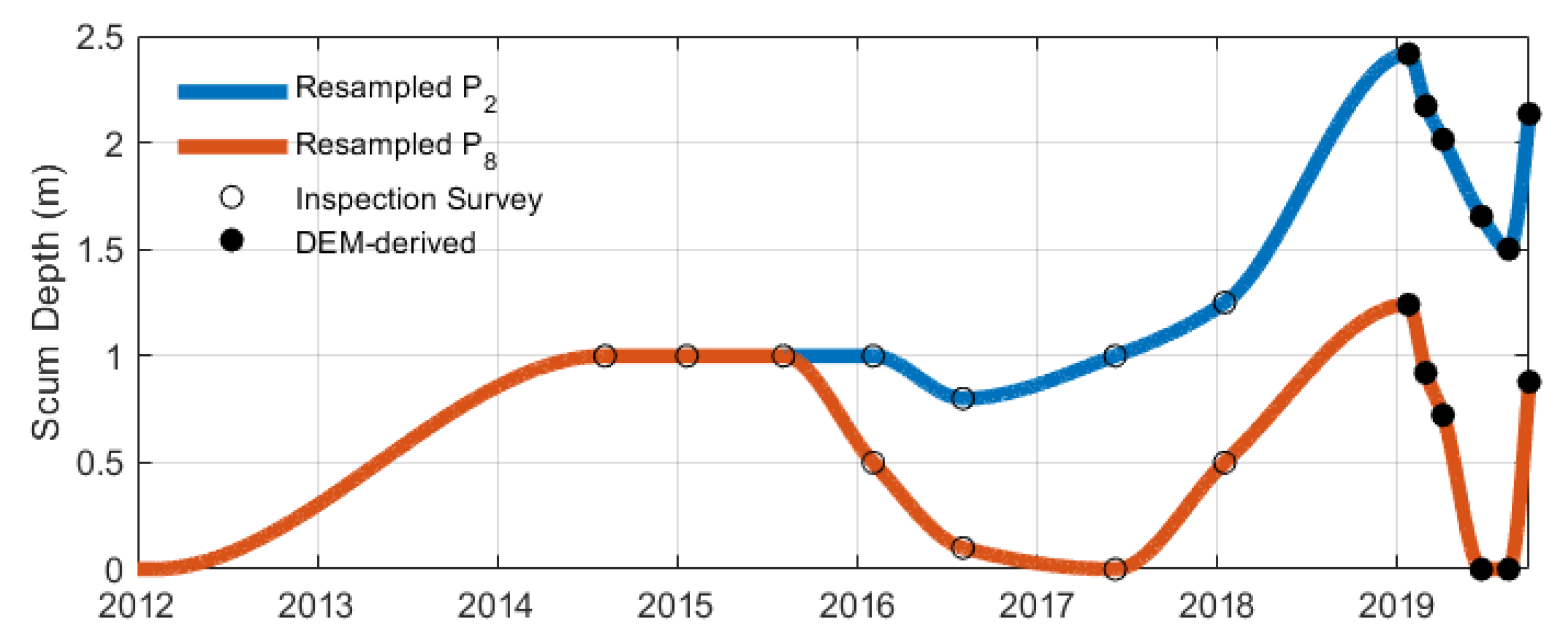

Scum Representative Variable Using Scum Depth Surveys and Digital Elevation Models

- The DEMs and their associated orthomosaics are stacked into a single four-channel array (elevation plus the orthomosaic colour bands) so that the k-means algorithm can cluster pixels with similar features into distinct groups.

- The Calinski–Harabasz (CH) criterion, the ratio of between-cluster dispersion to within-cluster dispersion (each normalised by its degrees of freedom), is then used to determine the optimal number of clusters k. Values of k from 2 to 10 are inspected (the CH index is undefined for fewer than two clusters), and the optimal k is the one giving the highest CH index.

- Clustering is then performed with the optimal k. Clusters containing features not associated with the membrane cover are treated as artefacts and discarded, and the remaining clusters are merged to produce a filtered DEM.
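The three steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses Lloyd's k-means with k-means++ seeding and a fixed random seed, standardises each band before clustering (an assumption), scores k = 2 to 10 with the Calinski–Harabasz index, and leaves the choice of artefact clusters to the analyst through a hypothetical `artefact_ids` argument, because the section does not state how those clusters are identified. All function names are hypothetical.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's k-means with k-means++ seeding on rows of X; returns (labels, centroids)."""
    rng = np.random.default_rng(seed)
    centroids = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        # k-means++: draw the next seed with probability proportional to squared distance.
        d2 = ((X[:, None, :] - np.array(centroids)[None]) ** 2).sum(axis=2).min(axis=1)
        centroids.append(X[rng.choice(len(X), p=d2 / d2.sum())])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        # Assign every pixel to its nearest centroid (squared Euclidean distance).
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return labels, centroids


def calinski_harabasz(X, labels):
    """CH index = [B / (k - 1)] / [W / (n - k)] for between (B) and within (W) dispersion."""
    clusters = np.unique(labels)
    n, k = len(X), len(clusters)
    if k < 2:
        return float("-inf")  # the index is undefined for a single cluster
    overall = X.mean(axis=0)
    between = within = 0.0
    for c in clusters:
        members = X[labels == c]
        centre = members.mean(axis=0)
        between += len(members) * ((centre - overall) ** 2).sum()
        within += ((members - centre) ** 2).sum()
    return (between / (k - 1)) / (within / (n - k))


def cluster_dem(dem, ortho, k_range=range(2, 11)):
    """Stack a DEM (H, W) with its orthomosaic (H, W, C), pick k by the CH index."""
    h, w = dem.shape
    X = np.dstack([dem, ortho]).reshape(h * w, -1).astype(float)
    X = (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-12)  # assumption: standardise each band
    scores = {k: calinski_harabasz(X, kmeans(X, k)[0]) for k in k_range}
    best_k = max(scores, key=scores.get)
    labels, _ = kmeans(X, best_k)  # same seed, so identical to the scored run
    return labels.reshape(h, w), best_k


def filter_dem(dem, labels, artefact_ids):
    """Set pixels in artefact clusters to NaN; the remaining clusters form the filtered DEM."""
    filtered = dem.astype(float).copy()
    filtered[np.isin(labels, list(artefact_ids))] = np.nan
    return filtered
```

In use, one would call `labels, k = cluster_dem(dem, ortho)`, inspect each cluster against the orthomosaic, and then pass the artefact cluster indices to `filter_dem`. The brute-force distance computation keeps the sketch short but holds an (n_pixels × k × channels) array in memory, so a field-scale DEM would need tiling or a library implementation.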

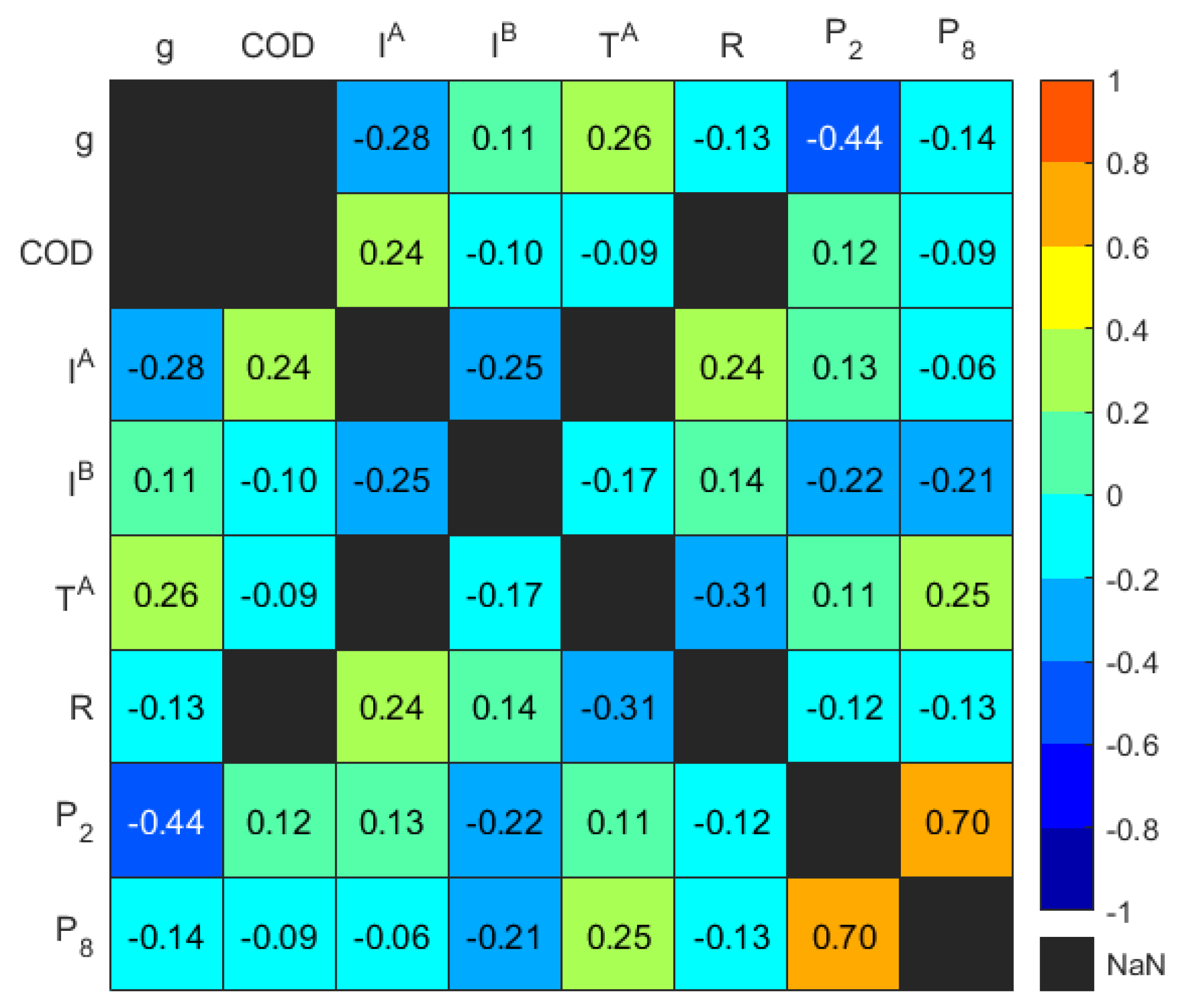

3.3. Reduction of Data Dimensionality

3.4. Resampling of Irregular Representative Variables

4. Results

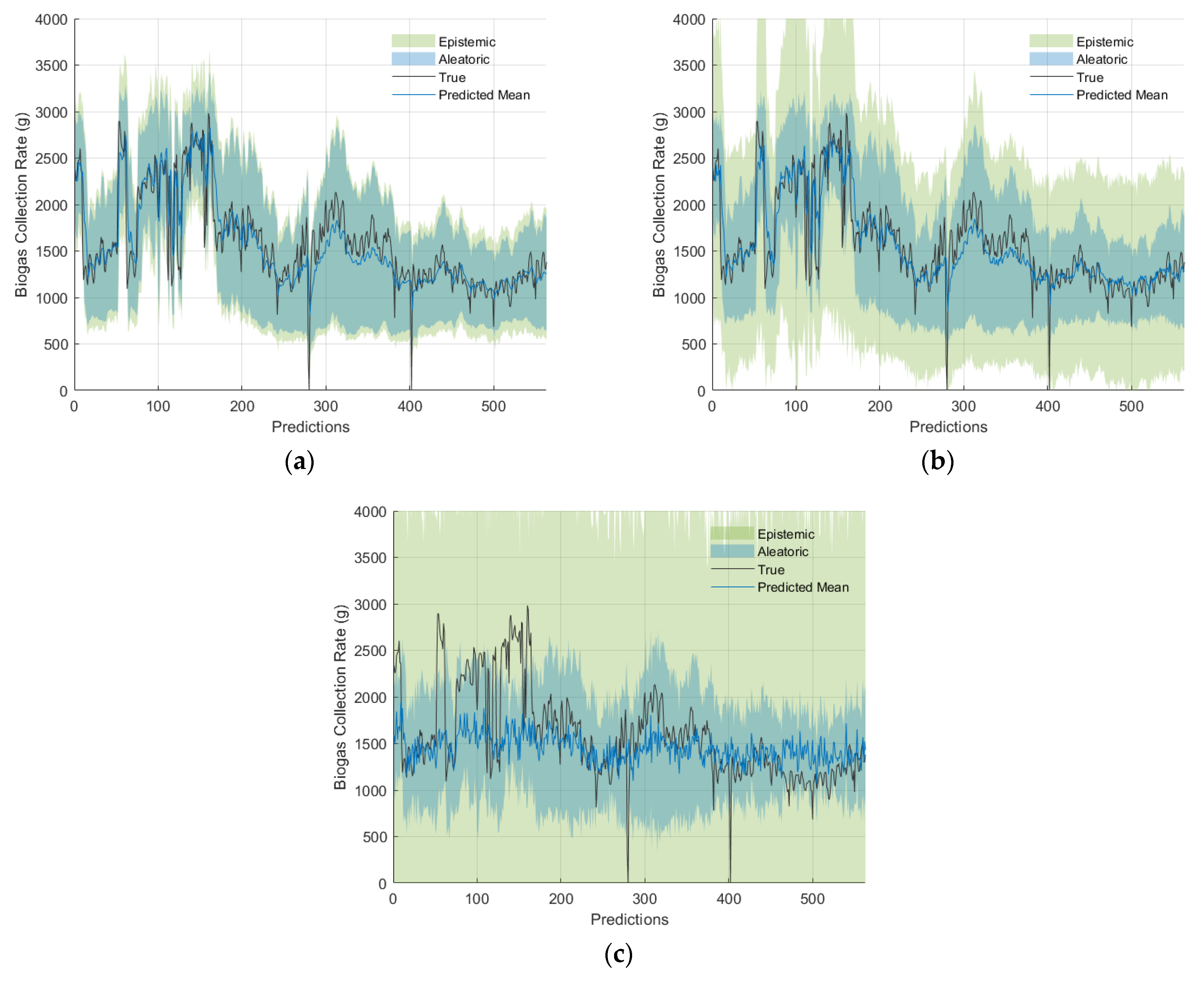

4.1. Effects of Hyperparameters

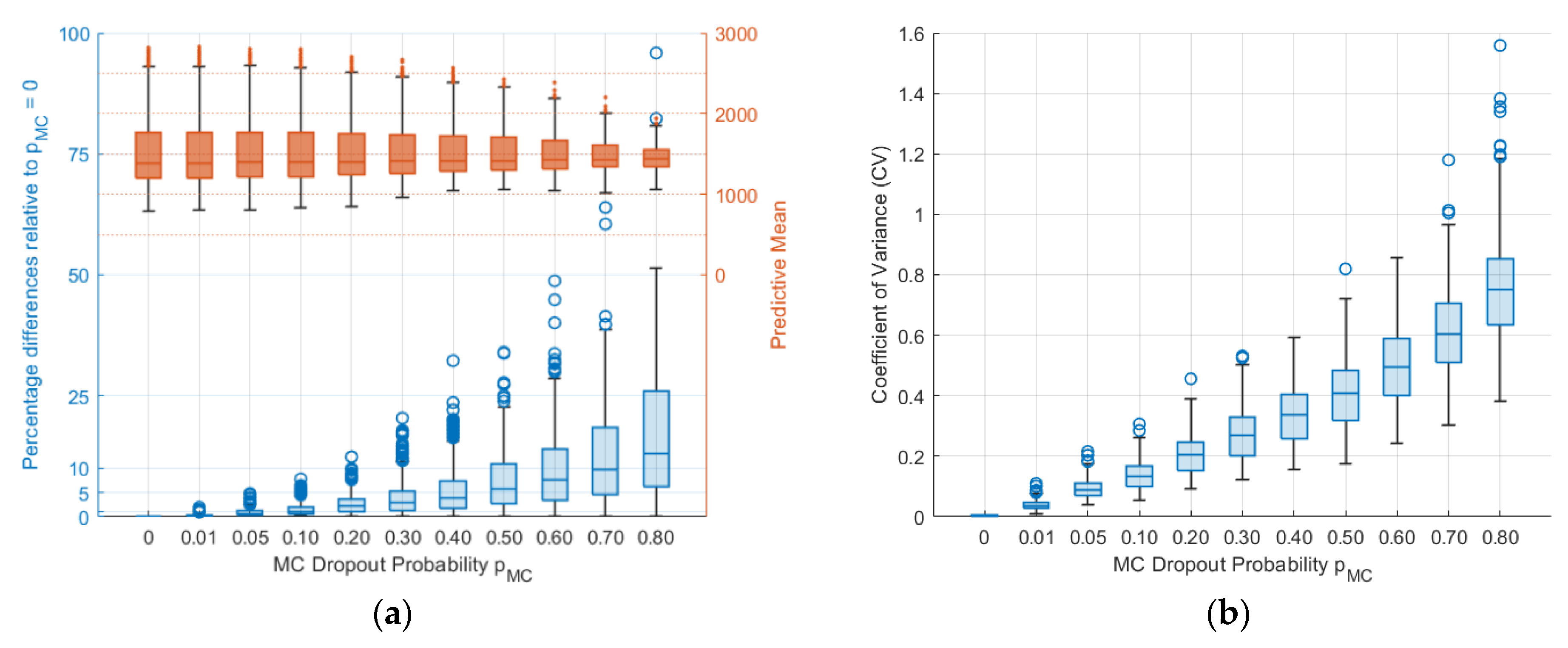

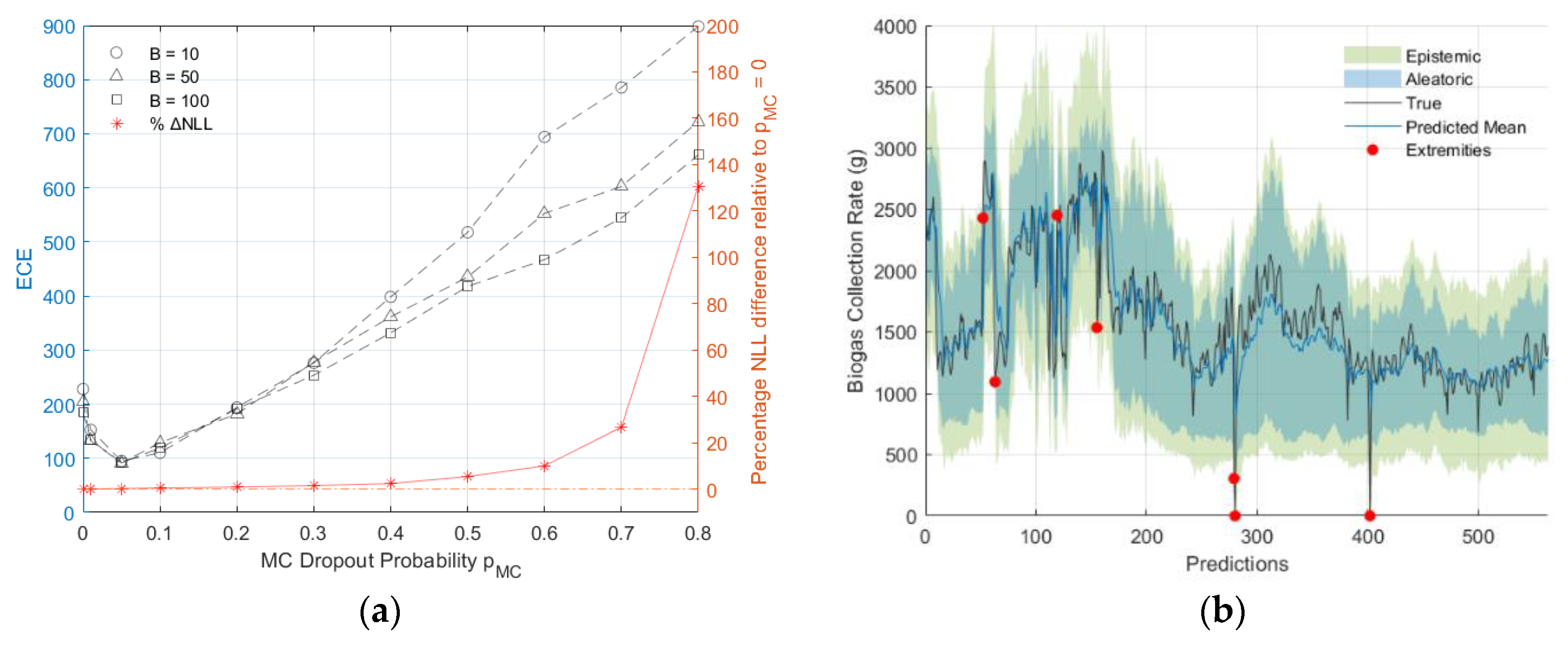

4.2. Epistemic Uncertainty via MC Dropout and Calibration

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Layers | Array/Output Shape |
|---|---|
| Input Shape | (m, ) |
| BiLSTM + Dropout (p) | (None, m, 2h) |
| Attention Layer + Dropout (p) | (None, 2h) |
| FC Layer (tanh) + Dropout (p) | (None, n) |
| GMM Layer: FC Layer (no activation) | (None, 3N) |
| Mixture Normal Distribution | ((None, 1), (None, 1)) |
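The GMM layer in the table is a linear FC layer with 3N outputs feeding a mixture of N normal distributions. A common mixture density network parameterisation, assumed here rather than confirmed by the table, splits those outputs into N mixture logits, N means and N scale parameters, using a softmax for the weights and a softplus for the standard deviations. The NumPy sketch below shows that split, the mixture negative log-likelihood (the "Average NLL" reported in the results tables is presumably of this form), and the mixture mean and standard deviation, which is one possible reading of the final ((None, 1), (None, 1)) row. Function names are hypothetical.

```python
import numpy as np


def split_gmm_output(raw):
    """Split a linear (batch, 3N) output into mixture weights, means and standard deviations."""
    if raw.shape[1] % 3:
        raise ValueError("expected 3N output units")
    logits, mu, s = np.split(raw, 3, axis=1)  # each of shape (batch, N)
    logits = logits - logits.max(axis=1, keepdims=True)  # stabilise the softmax
    weights = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    sigma = np.logaddexp(0.0, s) + 1e-6  # softplus keeps the scales strictly positive
    return weights, mu, sigma


def mixture_nll(y, weights, mu, sigma):
    """Mean negative log-likelihood of targets y, shape (batch,), under the Gaussian mixture."""
    z = (y[:, None] - mu) / sigma
    log_comp = -0.5 * z**2 - np.log(sigma) - 0.5 * np.log(2.0 * np.pi)
    a = np.log(np.clip(weights, 1e-300, None)) + log_comp
    m = a.max(axis=1, keepdims=True)  # log-sum-exp over the N components
    log_lik = m[:, 0] + np.log(np.exp(a - m).sum(axis=1))
    return float(-log_lik.mean())


def mixture_mean_std(weights, mu, sigma):
    """Mixture mean and standard deviation (law of total variance), each of shape (batch, 1)."""
    mean = (weights * mu).sum(axis=1)
    var = (weights * (sigma**2 + mu**2)).sum(axis=1) - mean**2
    return mean[:, None], np.sqrt(np.maximum(var, 0.0))[:, None]
```

With N = 1 this reduces to the mean-variance network of Nix and Weigend; larger N lets the predictive distribution become skewed or multimodal, which is how the GMM layer represents aleatoric uncertainty.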

| Hyperparameter | Search Grid Range |
|---|---|
| Input Window Size m | [3, 7, 14, 30] |
| Dropout Probability p | [0.01, 0.05, 0.10] |
| BiLSTM Layer Hidden Units h | [2, 4, 6, 8, 10, 12, 14, 16] |
| FC Layer Neurons n | [2, 4, 6, 8, 10, 12, 14, 16] |
| GMM Output Layer Gaussian Components N | [1, 2, 3, 4, 5] |
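Read as a full factorial grid, the ranges above give 4 × 3 × 8 × 8 × 5 = 3840 candidate configurations. The sketch below enumerates them with `itertools.product`; the dictionary keys are hypothetical names, and whether every combination was trained (rather than a sampled subset) is an assumption that this section does not confirm.

```python
from itertools import product

# Search grid transcribed from the hyperparameter table; key names are hypothetical.
GRID = {
    "window_size_m": [3, 7, 14, 30],
    "dropout_p": [0.01, 0.05, 0.10],
    "hidden_units_h": list(range(2, 17, 2)),  # 2, 4, ..., 16
    "fc_neurons_n": list(range(2, 17, 2)),    # 2, 4, ..., 16
    "gmm_components_N": list(range(1, 6)),    # 1, 2, ..., 5
}


def grid_configs(grid=GRID):
    """Yield every combination of the grid as a dict of hyperparameter values."""
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


configs = list(grid_configs())
print(len(configs))  # 3840
```

Each yielded dictionary can be passed to a model-building function, and the resulting (configuration, NLL) pairs are the raw material for per-hyperparameter summaries such as those in the results tables.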

| Variable | Units | Mean | Min | Max | Standard Deviation | Data Portion | Time Steps (Days) | Median Time Steps (Days) |
|---|---|---|---|---|---|---|---|---|
| | Nm³/hr | 1693.6 | 0 | 3680.6 | 843.9 | 100% | 1 | 1 |
| | Nm³/hr | 2197.8 | 0 | 5451.0 | 1033.5 | 100% | 1 | 1 |
| | Nm³/hr | 1098.6 | 0 | 3390.0 | 922.3 | 100% | 1 | 1 |
| | pH units | 6.6 | 6 | 7.0 | 0.1 | 30.7% | [0.01–20.95] | 2.92 |
| | mg/L | 378.9 | 210 | 580.0 | 40.8 | 14.2% | [0.38–20.95] | 7 |
| | mg/L | 309.6 | 34 | 830.0 | 92.0 | 32.0% | [0.01–20.95] | 1.07 |
| | mg/L | 690.6 | 170 | 1300.0 | 133.7 | 60.4% | [0.65–14.04] | 1.01 |
| | mg/L | 254.2 | 69 | 540.0 | 62.3 | 31.9% | [0.37–14.07] | 1.02 |
| | mg/L | 98.5 | 10 | 260.0 | 54.8 | 14.2% | [0.38–20.95] | 7 |
| | ML/d | 199.2 | 0 | 428.9 | 51.3 | 100% | 1 | 1 |
| | ML/d | 277.6 | 0 | 750.0 | 79.1 | 100% | 1 | 1 |
| | ML/d | 94.4 | 0 | 342.0 | 43.0 | 100% | 1 | 1 |
| | ML/d | 10.7 | 0 | 257.1 | 36.2 | 100% | 1 | 1 |
| | ML/d | 27.8 | 0 | 595.0 | 73.8 | 100% | 1 | 1 |
| | ML/d | 5.0 | 0 | 183.0 | 18.2 | 100% | 1 | 1 |
| | °C | 20.2 | 12 | 25.0 | 2.0 | 19.3% | [0.01–20.95] | 6.98 |
| | °C | 14.1 | 6.867 | 35.8 | 5.2 | 100% | 1 | 1 |
| | °C | 19.8 | 8.7 | 51.5 | 8.4 | 100% | 1 | 1 |
| | °C | 10.1 | 0 | 27.3 | 3.7 | 100% | 1 | 1 |
| | °C | 15.4 | 4.05 | 35.1 | 5.1 | 100% | 1 | 1 |
| | °C | 20.6 | 8.1 | 44.8 | 6.4 | 100% | 1 | 1 |
| | °C | 10.3 | −2.1 | 28.9 | 4.8 | 100% | 1 | 1 |
| | MJ/m² | 14.8 | 1.3 | 34.3 | 8.1 | 100% | 1 | 1 |
| | mm | 1.2 | 0 | 41.0 | 3.5 | 99.7% | 1 | 1 |

| Porthole | Mean | Min | Max | Standard Deviation |
|---|---|---|---|---|
| P1 | 1.7 | 0 | 2.9 | 0.7 |
| P2 | 1.4 | 0 | 2.4 | 0.6 |
| P3 | 1.0 | 0 | 1.8 | 0.5 |
| P4 | 1.5 | 0 | 3.1 | 1.0 |
| P5 | 1.2 | 0 | 2.4 | 0.9 |
| P6 | 1.2 | 0 | 2.1 | 0.6 |
| P7 | 0.6 | 0 | 1.1 | 0.4 |
| P8 | 0.6 | 0 | 1.2 | 0.4 |
| P9 | 0.5 | 0 | 1.0 | 0.4 |
| P10 | 0.5 | 0 | 1.1 | 0.4 |
| P11 | 0.5 | 0 | 1.0 | 0.3 |
| P12 | 0.5 | 0 | 1.0 | 0.4 |

| Representative Variable | Unit | Mean | Min | Max | Standard Deviation |
|---|---|---|---|---|---|
| | / | 1693.6 | 0 | 3680.6 | 843.9 |
| | mg/L | 686.2 | 170 | 1300.0 | 130.9 |
| | ML/d | 199.2 | 0 | 428.9 | 51.3 |
| | ML/d | 10.7 | 0 | 257.1 | 36.2 |
| | °C | 15.4 | 4.05 | 35.1 | 5.1 |
| | mm | 1.2 | 0 | 41.0 | 3.5 |
| | m | 1 | 0 | 2.4 | 0.6 |
| | m | 0.5 | 0 | 1.2 | 0.4 |

| | Window Size | Dropout Probability | Hidden Units | Neurons | Gaussian Components |
|---|---|---|---|---|---|
| p-value | 1.23 × 10⁻²⁵¹ | 1.50 × 10⁻³⁷ | 7.11 × 10⁻²¹² | 1.40 × 10⁻⁵ | 5.03 × 10⁻²² |

| Hyperparameter Value | Average NLL | Standard Deviation NLL | Top 10% | Bottom 10% |
|---|---|---|---|---|
| Dropout Probability | | | | |
| 0.01 | 0.715 | 0.216 | 30.2% | 56.8% |
| 0.05 | 0.751 | 0.185 | 31.5% | 31.3% |
| 0.1 | 0.793 | 0.163 | 38.3% | 12.0% |
| Gaussian Components N | | | | |
| 1 | 0.795 | 0.198 | 12.5% | 33.9% |
| 2 | 0.765 | 0.186 | 16.9% | 23.4% |
| 3 | 0.751 | 0.192 | 21.4% | 18.0% |
| 4 | 0.738 | 0.195 | 24.7% | 21.1% |
| 5 | 0.716 | 0.179 | 24.5% | 3.6% |
| Window Size | | | | |
| 3 | 0.700 | 0.210 | 47.9% | 10.9% |
| 7 | 0.689 | 0.186 | 38.5% | 13.8% |
| 14 | 0.761 | 0.169 | 12.0% | 26.3% |
| 30 | 0.860 | 0.148 | 1.6% | 49.0% |
| Neurons | | | | |
| 2 | 0.728 | 0.197 | 7.6% | 16.1% |
| 4 | 0.770 | 0.179 | 15.1% | 8.3% |
| 6 | 0.754 | 0.190 | 13.8% | 14.8% |
| 8 | 0.758 | 0.207 | 16.4% | 14.3% |
| 10 | 0.769 | 0.198 | 13.3% | 12.8% |
| 12 | 0.734 | 0.196 | 12.8% | 14.1% |
| 14 | 0.762 | 0.196 | 11.7% | 10.7% |
| 16 | 0.747 | 0.168 | 9.4% | 8.9% |
| Hidden Units | | | | |
| 2 | 0.652 | 0.204 | 29.7% | 4.7% |
| 4 | 0.612 | 0.171 | 30.5% | 0.5% |
| 6 | 0.673 | 0.148 | 13.8% | 3.1% |
| 8 | 0.757 | 0.157 | 7.6% | 5.7% |
| 10 | 0.866 | 0.156 | 2.9% | 22.9% |
| 12 | 0.881 | 0.162 | 1.8% | 29.9% |
| 14 | 0.774 | 0.180 | 8.1% | 16.7% |
| 16 | 0.809 | 0.161 | 5.7% | 16.4% |
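Within each hyperparameter, the Top 10% and Bottom 10% columns each sum to about 100%, which suggests they give the share of the best- and worst-performing 10% of trained models (ranked by NLL) that used each value. Under that reading, the summary could be reproduced from a list of (configuration, NLL) results with the sketch below. The function name, the input format and the use of the population standard deviation are assumptions; the statistical test behind the p-value row is not stated here and is not reproduced.

```python
from collections import defaultdict

import numpy as np


def summarise_by_value(results, key, tail=0.10):
    """Per-value NLL summary for one hyperparameter.

    results: list of (config_dict, nll) pairs, one per trained model.
    Returns {value: (mean_nll, std_nll, share_of_top_tail, share_of_bottom_tail)}.
    """
    nll = np.array([score for _, score in results], dtype=float)
    order = np.argsort(nll)  # ascending, so the lowest (best) NLL comes first
    n_tail = max(1, int(round(tail * len(results))))
    top = set(order[:n_tail].tolist())
    bottom = set(order[-n_tail:].tolist())
    groups = defaultdict(list)
    for i, (cfg, _) in enumerate(results):
        groups[cfg[key]].append(i)
    summary = {}
    for value, idx in sorted(groups.items()):
        scores = nll[idx]
        summary[value] = (
            float(scores.mean()),
            float(scores.std()),  # population std (ddof=0); the paper's choice is not stated
            sum(i in top for i in idx) / n_tail,
            sum(i in bottom for i in idx) / n_tail,
        )
    return summary
```

For example, `summarise_by_value(results, "dropout_p")` would return one entry per dropout probability, with the last two fields expressed as fractions rather than percentages.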

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vien, B.S.; Kuen, T.; Rose, L.R.F.; Chiu, W.K. Optimisation and Calibration of Bayesian Neural Network for Probabilistic Prediction of Biogas Performance in an Anaerobic Lagoon. Sensors 2024, 24, 2537. https://doi.org/10.3390/s24082537


Chicago/Turabian Style: Vien, Benjamin Steven, Thomas Kuen, Louis Raymond Francis Rose, and Wing Kong Chiu. 2024. "Optimisation and Calibration of Bayesian Neural Network for Probabilistic Prediction of Biogas Performance in an Anaerobic Lagoon." Sensors 24, no. 8: 2537. https://doi.org/10.3390/s24082537
