Abstract

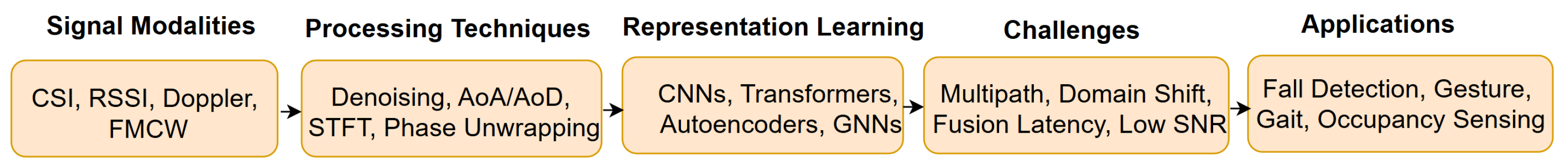

Human Activity Recognition (HAR) systems aim to understand human behavior and assign a label to each action, attracting significant attention in computer vision due to their wide range of applications. HAR can leverage various data modalities, such as RGB images and video, skeleton, depth, infrared, point cloud, event stream, audio, acceleration, and radar signals. Each modality provides unique and complementary information suited to different application scenarios. Consequently, numerous studies have investigated diverse approaches for HAR using these modalities. This survey includes only peer-reviewed research papers published in English to ensure linguistic consistency and academic integrity. This paper presents a comprehensive survey of the latest advancements in HAR from 2014 to 2025, focusing on Machine Learning (ML) and Deep Learning (DL) approaches categorized by input data modalities. We review both single-modality and multi-modality techniques, highlighting fusion-based and co-learning frameworks. Additionally, we cover advancements in hand-crafted action features, methods for recognizing human–object interactions, and activity detection. Our survey includes a detailed dataset description for each modality, as well as a summary of the latest HAR systems, accompanied by a mathematical derivation for evaluating the deep learning model for each modality, and it also provides comparative results on benchmark datasets. Finally, we provide insightful observations and propose effective future research directions in HAR.

1. Introduction

Human Activity Recognition (HAR) has been a very active research topic for the past two decades in the field of computer vision and Artificial Intelligence (AI) that focuses on the automated analysis and understanding of human actions and recognition based on the movements and poses of the entire body.

1.1. Rationale

HAR plays an important role in various applications such as surveillance, healthcare [1,2,3], remote monitoring, intelligent human–machine interfaces, entertainment, storage video and retrieval [4,5], and human–computer interaction [6].

HAR is very important in computer vision and covers many research topics, including HAR in video, human tracking, and analysis and understanding in videos captured with a moving camera, where motion patterns exist due to video objects and the moving camera as well [7]. In such a scenario, it becomes ambiguous to recognize objects. The HAR methods were categorized into three distinct tiers: human action detection, human action tracking, and behavior understanding methods. In recent years, the investigation of interaction [8,9] and human action detection [10,11,12] has emerged as a prominent area of research. Many state-of-the-art techniques deal with action recognition using action frames as images and are only able to detect the presence of an object in them. They cannot properly recognize the object in an image or video. By properly recognizing an action in a video, it is possible to recognize the class of action more accurately. To perform action recognition, there has been an increased interest in this field in recent years due to the increased availability of computing resources as well as new advances in ML [13] and DL. Robust human action modelling and feature representation are essential components for achieving effective HAR. The main issue of representing and selecting features is a well-established problem within the fields of computer vision and ML [13]. Unlike the representation of features in an image domain, the representation of features of human actions in a video not only depicts the visual attributes of the human being(s) within the image domain but must also perform the extraction of alterations in visual attributes and pose. The problem of representation of features has been expanded from a 2D space to a 3D spatio-temporal context. In the past few years, many types of action representation techniques have been proposed. These techniques include various approaches, such as local and global features that rely on temporal and spatial alterations [14,15,16], trajectory features that are based on key point tracking [17], motion changes that are derived from depth information [18,19], and action features that are derived from human pose changes [20,21]. With the performance and successful application of DL to activity recognition and classification, many researchers have used DL for HAR. This facilitates the automatically learned features from the video dataset [22,23]. However, the aforementioned review articles have only examined certain specific facets, such as the Spatial Temporal Interest Point (STIP) and Histogram of Optical Flow (HOF)-found techniques for HAR, as well as approaches for analyzing human walking and DL-based techniques. Numerous novel approaches have been recently developed, primarily regarding the utilization of depth learning techniques for feature learning. Hence, a comprehensive examination of these fresh approaches for recognizing human actions is of significant interest. Additionally, HAR has critical applications in security and surveillance; this survey focuses on general-purpose recognition methods and does not cover security-specific techniques in depth.

1.2. Objective

Many researchers have been working to survey the HAR system article, which is mainly based on ML and DL techniques, with diverse feature extraction techniques. Such HAR literature was summarized by [24] within the framework of three key areas: sensor modality, deep models, and application. Vrigkas et al. [25] also reviewed HAR using RGB static images, covering both single-mode and multi-mode approaches. Vishwakarma et al. [26] summarized classical HAR methods, categorizing them into hierarchical and non-hierarchical methods based on feature representation. The survey by Ke et al. [27] provided a comprehensive overview of handcrafted methods in HAR. Additionally, surveys [28,29,30,31] extensively discuss the strengths and weaknesses of handcrafted versus DL methods, emphasizing the advantages of DL-based approaches. Xing et al. [32] focused on HAR development using 3D skeleton data, reviewing various DL-based techniques and comparing their performance across different dimensions. Presti et al. [33] presented HAR techniques based on 3D skeleton data. Methods for HAR using depth and skeleton data have been thoroughly reviewed by Ye et al. [19]; they also present HAR techniques using depth data.

Although certain review articles discuss data fusion methods, they offer a limited overview of HAR approaches to particular data types. Similarly, Subetha et al. [34] presented the same strategy to review action recognition methods. However, in distinction to those studies, we categorize HAR into five distinct categories: action recognition RGB and handcrafted features, action recognition RGB and DL, action recognition skeleton and handcrafted features, action recognition skeleton-based and DL, action recognition sensor-based methods, and action recognition using a multimodal dataset. The crucial element of the analysis regarding the literature on HAR is that most surveys have focused on the representations of human action features. The data of the image sequences that have been processed are typically well-segmented and consist solely of a single action event. More recently, many researchers have been working to conduct HAR survey studies with a specific point of view. For example, some researchers have surveyed Graph Convolutional Networks (GCNs) structures and data modalities for HAR and the application of GCNs in HAR [35,36]. Gupta et al. [37] explored current and future directions in skeleton-based HAR and introduced the skeleton-152 dataset, marking a significant advancement in the field. Meanwhile, Song et al. [38] reviewed advancements in human pose estimation and its applications in HAR, emphasizing its importance. Additionally, Shaikh et al. [39] focused on data integration and recognition approaches within a visual framework, specifically from an RGB-D perspective. Majumder et al. [40] and Wang et al. [41] provided reviews of popular methods using vision and inertial sensors for HAR. More recently, Wang et al. [42] surveyed HAR by performing two modalities of RGB-based and skeleton-based HAR techniques. Similarly, Sun et al. [43] surveyed HAR with various multi-modality methods.

1.2.1. Research Gaps and New Research Challenges

Also, each survey paper can give us an overall summary of the existing work in this domain. Still, there is a lack of comparative studies of the various modalities, such as RGB, skeleton, sensor, and fusion-based and diverse modality-based HAR systems of recent technologies. From a data perspective, most reviews on HAR are limited to methodologies based on specific data, such as RGB, depth, and fusion data modalities. Moreover, we did not find a HAR survey paper that included diverse modality-based HAR, including their benchmark dataset and latest performance accuracy for 2014–2025. The studies of [8,42] inspired us to complete a survey study with current research trends for HAR.

1.2.2. Our Contribution

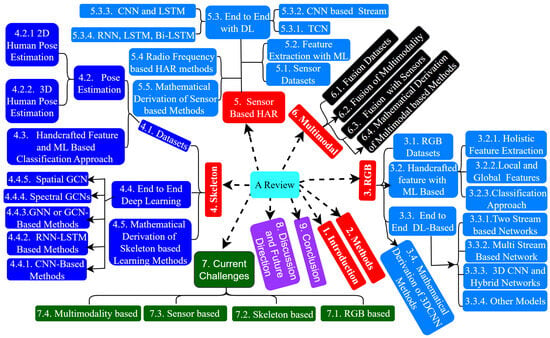

Figure 1 demonstrates the proposed methodology flowchart. In this study, we survey state-of-the-art methods for HAR, addressing their challenges and future directions across vision-, sensor-, and fusion-based data modalities. We also summarize the current two-dimensions and three-dimensions pose estimation algorithms before discussing skeleton-based feature representation methods. Additionally, we categorize action recognition techniques into handcrafted feature-based ML and end-to-end DL-based methods. Our main contributions are as follows:

- Comprehensive Review with Diverse Modality: We conduct a thorough survey of RGB-based, skeleton-based, sensor-based, and fusion HAR-based methods, focusing on the evolution of data acquisition, environments, and human activity portrayals from 2014 to 2025.

- Dataset Description: We provide a detailed overview of benchmark public datasets for RGB, skeleton, sensor, and fusion data, highlighting their latest performance accuracy with references.

- Unique Process: Our study covers feature representation methods, common datasets, challenges, and future directions, emphasizing the extraction of distinguishable action features from video data despite environmental and hardware limitations. We also included the mathematical derivation for the evaluation of the deep learning model for each modality, such as from 3D CNN to Multi-View Transformer and GCN to EMS-TAGCN for pixel video and sequence of the skeleton dataset, respectively.

- Identification of Gaps and Future Directions: We identify significant gaps in the current research and propose future research directions supported by the latest performance data for each modality.

- Evaluation of System Efficacy: We assess existing HAR systems by analyzing their recognition accuracy and providing benchmark datasets for future development.

- Guidance for Practitioners: Our review offers practical guidance for developing robust and accurate HAR systems, providing insights into current techniques, highlighting challenges, and suggesting future research directions to advance HAR system development.

1.2.3. Research Questions

This research addresses the following major questions:

- What are the main difficulties faced in human activity recognition?

- What are the major open challenges faced in human activity recognition?

- What are the major algorithms involved in human activity recognition?

1.3. Organization of the Work

The paper is categorized as follows. The benchmark datasets are provided in Section 3.1. The action recognition RGB-data modality methods and skeleton data modality-based methods are discussed in Section 3 and Section 4, respectively. In Section 5, Section 6 and Section 7, we introduce sensor modality-based HAR, multimodal fusion modality-based, and current challenges, including four data modalities, respectively. We discuss future research trends and direction in Section 8. Finally, in Section 9, we summarized the conclusions. The detailed structure of this paper is shown in Figure 1.

Figure 1.

Diverse modality, including the structure of this paper.

Figure 1.

Diverse modality, including the structure of this paper.

2. Methods

This section outlines the methodology for conducting a comprehensive review of HAR research, focusing on studies published between 2014 and 2025 across diverse data modalities. The methodology comprises the article search protocol, eligibility criteria, article selection, quality appraisal, and data charting strategy.

2.1. Article Search Protocol

We performed an extensive search using databases such as IEEE Xplore, Scopus, Web of Science, SpringerLink, and ACM Digital Library. Boolean keyword combinations included the following:

- “Human Activity Recognition” OR “Human Action Recognition”

- “Computer Vision”, “RGB”, “Skeleton”, “Sensor”, “Multimodal”, “Deep Learning”, “Machine Learning”

2.2. Eligibility Criteria

To refine and ensure relevance in our initial search results, we applied the following criteria:

Inclusion Criteria:

- Publications from 2014 to 2025;

- Peer-reviewed journals, conference papers, book chapters, and lecture notes;

- Focus on HAR using RGB, skeleton, sensor, fusion HAR methods, or multimodal;

- Emphasis on the evolution of data acquisition, environments, and human activity portrayals.

Exclusion Criteria:

- Exclusion of studies lacking in-depth information about their experimental procedures;

- Exclusion of research articles where the complete text is not accessible, both in physical and digital formats;

- Exclusion of research articles that include opinions, keynote speeches, discussions, editorials, tutorials, remarks, introductions, viewpoints, and slide presentations.

2.3. Article Selection Process

The literature was screened through a multi-step process: title screening, abstract review, and full-text evaluation. We prioritized articles published in prestigious journals and conferences such as:

- IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI);

- IEEE Transactions on Image Processing (TIP);

- International Conference on Computer Vision and Pattern Recognition (CVPR);

- IEEE International Conference on Computer Vision (ICCV);

- Springer, ELSEVIER, MDPI, Frontier, etc.

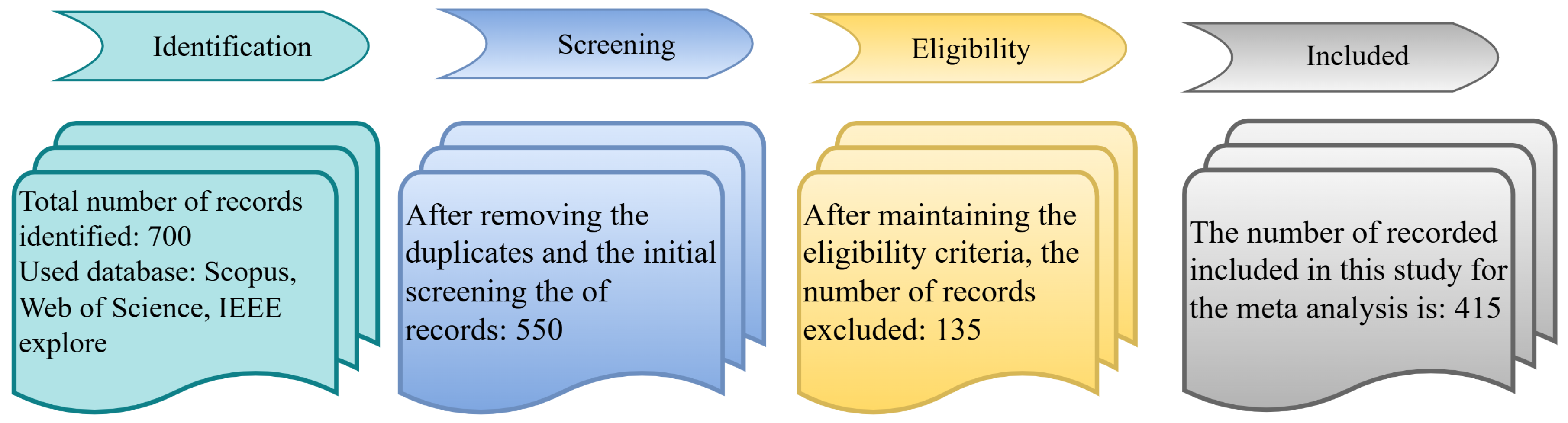

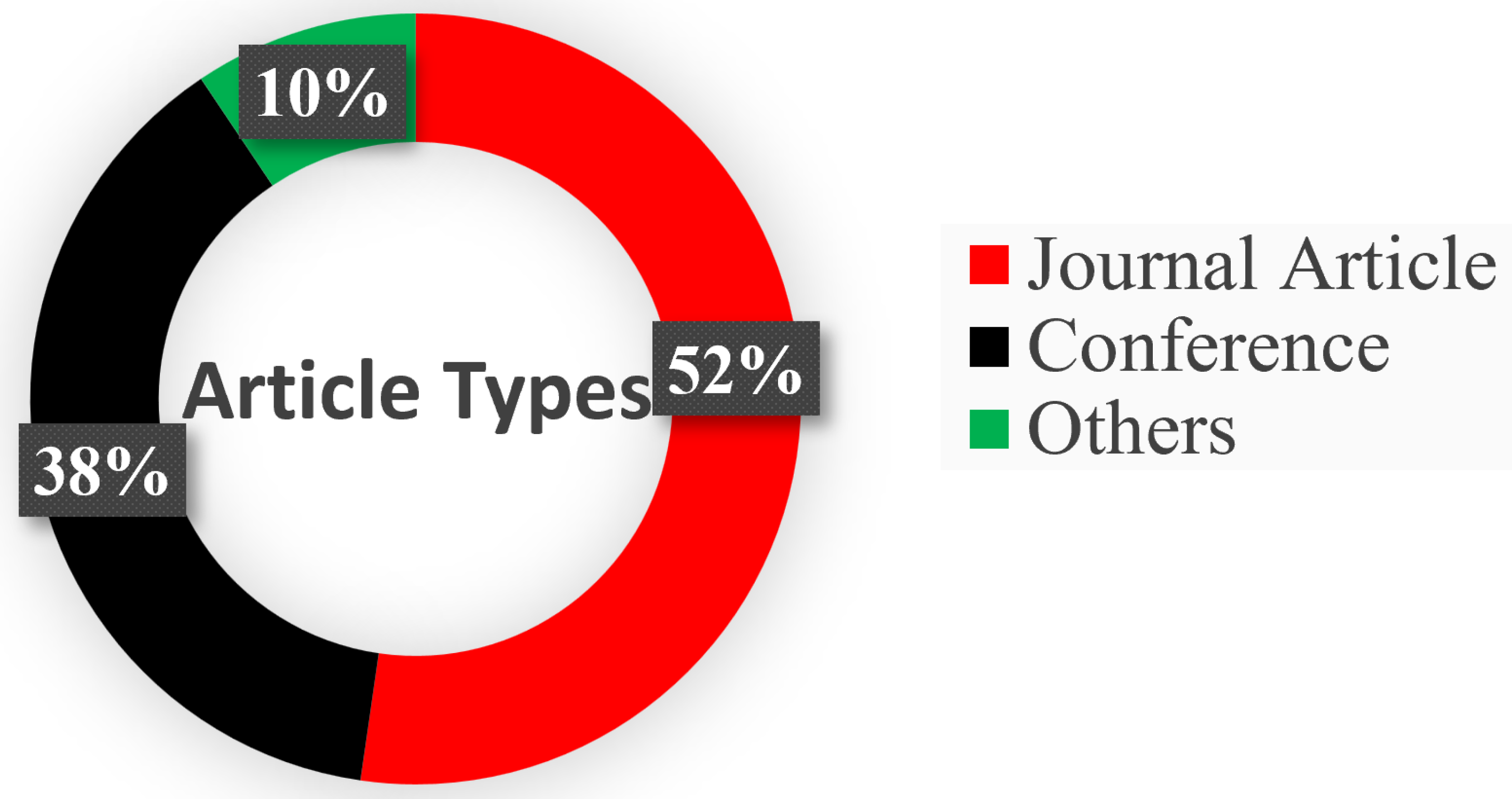

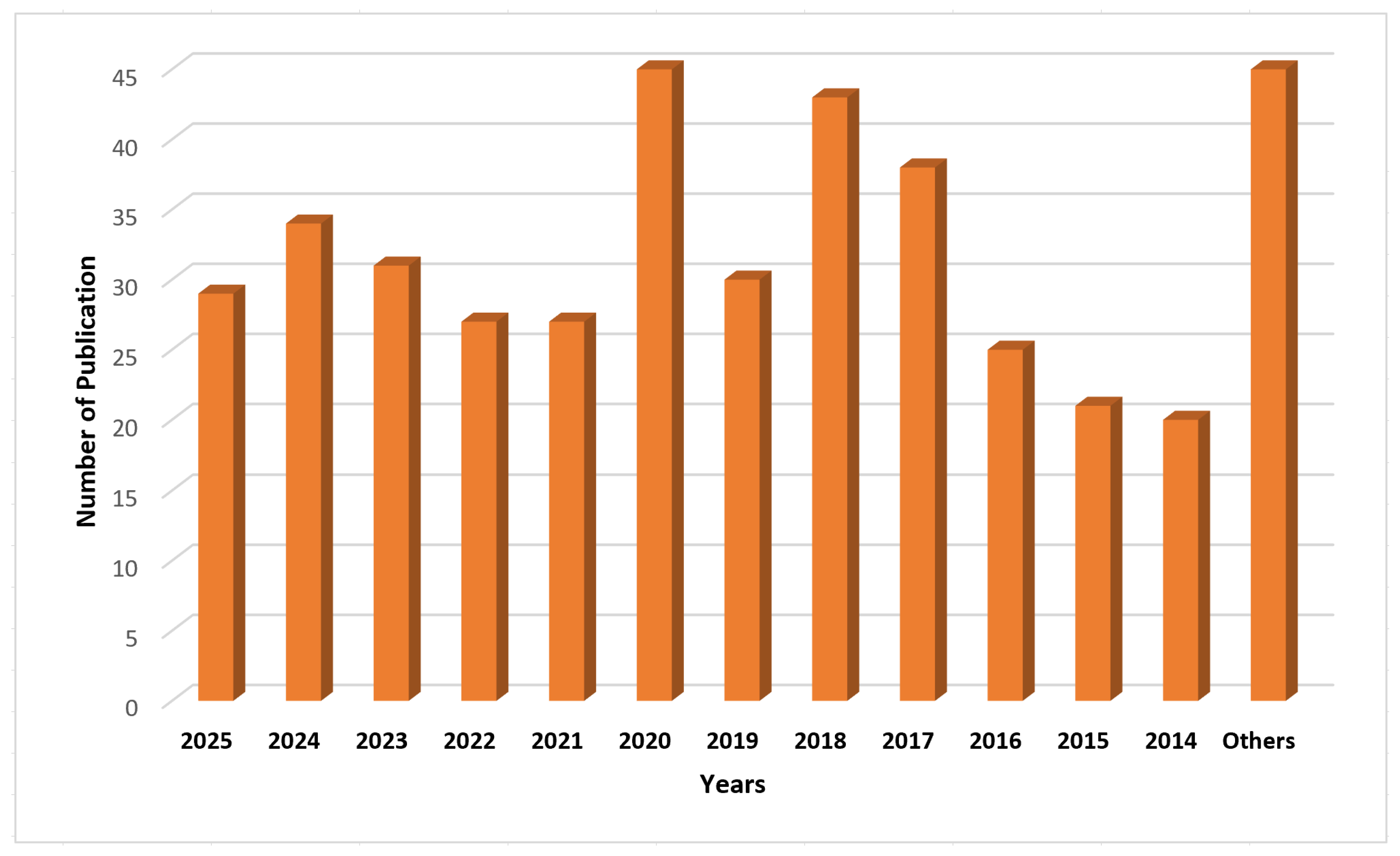

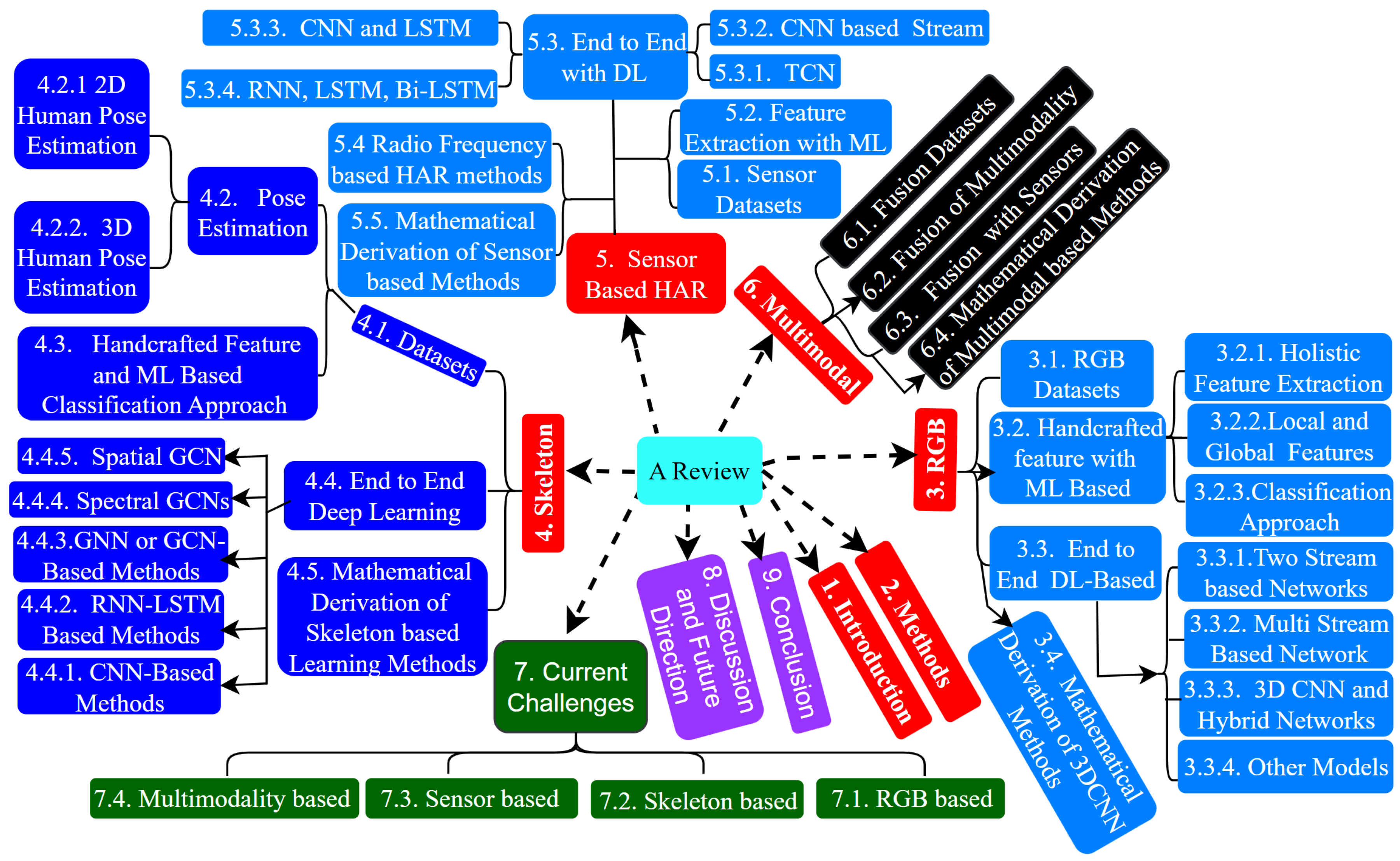

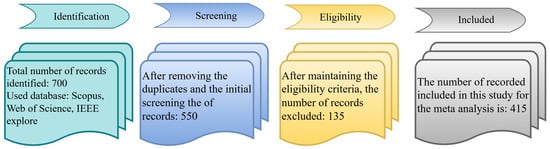

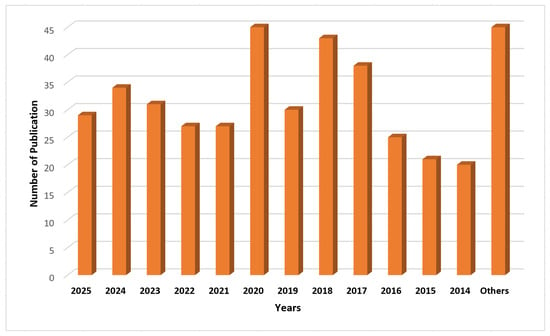

Figure 2 depicts the article selection process, while Figure 3 demonstrates the percentage of journals, conferences, and other ratios. Figure 4 shows the distribution of the year-wise number of references.

Figure 2.

Article selection process block diagram.

Figure 3.

Distribution of article types across journal publications, conference proceedings, and other sources.

Figure 4.

Year-wise distribution of selected HAR publications (2014–2025).

2.4. Critical Appraisal of Individual Sources

We reviewed each article through a structured process involving the following:

- Abstract review;

- Methodology analysis;

- Result evaluations;

- Discussion and conclusions.

We extracted key attributes from the selected studies using a structured data charting form. The extracted data included the following:

- Bibliographic information (author(s), publication year, and venue);

- Dataset characteristics and associated HAR modality (e.g., RGB, skeleton, sensor, fusion);

- Feature extraction techniques and classification models employed;

- Evaluation metrics and reported benchmark performance.

These data were synthesized and analyzed comparatively across modalities to identify trends, strengths, and methodological gaps in the literature.

3. RGB-Data Modality-Based Action Recognition Methods

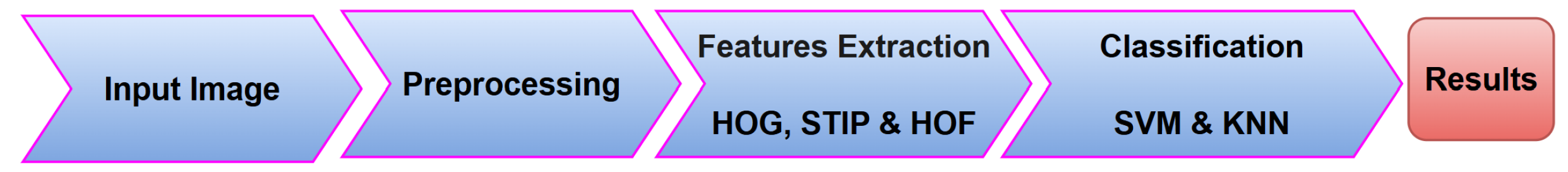

Figure 5 demonstrates a common workflow diagram of the RGB-based action recognition methods. The early stages of research regarding HAR were conducted based on the RGB data, and initially feature extraction mostly depended on manual annotation [44,45]. These annotations often relied on existing knowledge and prior assumptions. After this, DL-based architectures were developed to extract the most effective features and the best performance. The following sections describe the dataset, the methodological review of RGB-based handcrafted features with ML, and various ideas for DL-based approaches. Moreover, Table 1 lists detailed information about the RGB data modality, including the datasets, feature extraction methods, classifier, years, and performance accuracy.

Figure 5.

Workflow of RGB-based action recognition methods utilizing handcrafted features.

Table 1.

RGB and deep learning-based existing techniques for action recognition.

3.1. RGB-Based Datasets of HAR

We provided the most popular benchmark HAR datasets, which come from the RGB skeleton, which is demonstrated in Table 2. The dataset table demonstrated the details of the datasets, including modalities, creation year, number of classes, number of subjects who participated in recording the dataset, number of samples, and the latest performance accuracy of the dataset with citations.

The RGB dataset encompasses several prominent benchmarks for HAR. Notably, the Activity Net dataset, introduced in 2015, comprises 203 activity classes and an extensive 27,208 samples, achieving an impressive accuracy of 94.7% in recent evaluations [67,68]. The Kinetics-400 and Kinetics-700 datasets, from 2017 and 2019, respectively, include 400 and 700 classes with approximately 306,245 and 650,317 samples. These datasets are notable for their high accuracy rates of 92.1% and 85.9% [69,70,71]. The AVA dataset, also from 2017, contains 80 classes and 437 samples, with a recorded accuracy of 83.0% [72,73]. Datasets such as Kinetics and AVA are collected from YouTube videos. These datasets are affected by content availability issues due to reliance on external links, which may expire over time. The EPIC Kitchen 55 dataset from 2018 offers a comprehensive view with 149 classes and 39,596 samples. The Moments in Time dataset, released in 2019, is one of the largest, with 339 classes and around 1,000,000 samples, although it has a relatively lower accuracy of 51.2% [71,74]. Each dataset is instrumental for training and evaluating HAR models, providing diverse scenarios and activities.

Table 2.

Benchmark datasets for HAR RGB and Skeleton.

Table 2.

Benchmark datasets for HAR RGB and Skeleton.

| Dataset | Data Set Modalities | Year | Class | Subject | Sample | Latest Accuracy |

|---|---|---|---|---|---|---|

| UPCV [75] | Skeleton | 2014 | 10 | 20 | 400 | 99.20% [76] |

| Activity Net [67] | RGB | 2015 | 203 | - | 27,208 | 94.7% [68] |

| Kinetics-400 [69] | RGB | 2017 | 400 | - | 306,245 | 92.1% [71] |

| AVA [72] | RGB | 2017 | 80 | - | 437 | 83.0% [73] |

| EPIC Kitchen 55 [77] | RGB | 2018 | 149 | 32 | 39,596 | - |

| AVE [78] | RGB | 2018 | 28 | - | 4143 | - |

| Moments in Times [74] | RGB | 2019 | 339 | - | 1,000,000 | 51.2% [71] |

| Kinetics-700 [70] | RGB | 2019 | 700 | - | 650,317 | 85.9% [71] |

| RareAct [79] | RGB | 2020 | 122 | 905 | 2024 | 60.80% [80] |

| HiEve [81] | RGB, Skeleton | 2020 | - | - | - | 95.5% [82] |

| MSRDaily Activity3D [83] | RGB, Skeleton | 2012 | 16 | 10 | 320 | 97.50% [84] |

| N-UCLA [85] | RGB, Skeleton | 2014 | 10 | 10 | 1475 | 99.10% [86] |

| Multi-View TJU [87] | RGB, Skeleton | 2014 | 20 | 22 | 7040 | - |

| UTD-MHAD [88] | RGB, Skeleton | 2015 | 27 | 8 | 861 | 95.0% [89] |

| UWA3D Multiview II [90] | RGB, Skeleton | 2015 | 30 | 10 | 1075 | - |

| NTU RGB+D 60 [91] | RGB, Skeleton | 2016 | 60 | 40 | 56,880 | 97.40% [86] |

| PKU-MMD [92] | RGB, Skeleton | 2017 | 51 | 66 | 10,076 | 94.40% [93] |

| NEU-UB [94] | RGB | 2017 | 6 | 20 | 600 | - |

| Kinetics-600 [95] | RGB, Skeleton | 2018 | 600 | - | 595,445 | 91.90% [71] |

| RGB-D Varing-View [96] | RGB, Skeleton | 2018 | 40 | 118 | 25,600 | - |

| NTU RGB+D 120 [97] | RGB, Skeleton | 2019 | 120 | 106 | 114,480 | 95.60% [86] |

| Drive&Act [98] | RGB, Skeleton | 2019 | 83 | 15 | - | 77.61% [99] |

| MMAct [100] | RGB, Skeleton | 2019 | 37 | 20 | 36,764 | 98.60% [101] |

| Toyota-SH [102] | RGB, Skeleton | 2019 | 31 | 18 | 16,115 | - |

| IKEA ASM [103] | RGB, Skeleton | 2020 | 33 | 48 | 16,764 | - |

| ETRI-Activity3D [104] | RGB, Skeleton | 2020 | 55 | 100 | 112,620 | 95.09% [105] |

| UAV-Human [106] | RGB, Skeleton | 2021 | 155 | 119 | 27,428 | 55.00% [107] |

3.2. Handcrafted Features with ML-Based Approach

Researchers employed handcrafted feature extraction with ML-based systems at an early age to develop HAR systems [108]. In the action representation step, the RGB data are utilized to transform into the feature vector, and these feature vectors are fed into the classifier [109,110] to obtain the desired results of the action classification step. Table 3 shows the analysis of the handcrafted-based approach, including the datasets, methods of feature extraction, classifier, years, and performance accuracy. Handcrafted features are designed to capture the physical motions performed by humans and the spatial and temporal variations depicted in videos that portray actions. These variations include methods that utilize the spatio-temporal volume-based representation of actions, methods based on Spatio-temporal Interest Points (STIPs), methods that rely on the trajectory of skeleton joints for action representation, and methods that utilize human image sequences for action representation. Chen et al. [111] demonstrate this by employing Depth Motion Map (DMM)-based gestures for motion information extraction, while Local Binary Pattern (LBP) feature encoding enhances discriminative power for action recognition. Meanwhile, Patel et al. [108] fuse various features, including Histogram of Oriented Gradients (HOG) and LBP, to improve network performance in recognizing human activities. The handcrafted features can be categorized as below:

Table 3.

Action recognition methods based on handcrafted feature extraction techniques.

3.2.1. Holistic Feature Extraction

Holistic representation aims to capture the motion information of the entire human subject. Spatio-temporal action recognition often uses template-matching techniques, with key methods focusing on creating effective action templates. Bobick et al. introduced two approaches, Motion Energy Image (MEI) and Motion History Image (MHI), to perform action representation [126]. Meanwhile, Zhang et al. utilized polar coordinates in MHI and developed a Motion Context Descriptor (MCD) based on the Scale-Invariant Feature Transform (SIFT) [127]. Somasundaram et al. applied sparse representation and dictionary learning to calculate video self-similarity in both time and space [128]. In scenarios with a stationary camera, these approaches effectively capture shape-related information like human silhouettes and contours through background subtraction. However, accurately capturing silhouettes and contours in complex scenes or with camera movements remains challenging, especially when the human body is partially obscured. Many methods employ a sliding window approach to detect multiple actions within the same scene, which can be computationally expensive. These approaches transform dynamic human motion into a holistic representation in a single image. While they capture relevant foreground information, they are sensitive to background noise, including irrelevant information.

3.2.2. Local and Global Representation

Holistic feature extraction techniques for HAR face several limitations, including sensitivity to background noise, reliance on stationary cameras, difficulty in complex scenes, occlusion issues, high computational cost, limited robustness to variations, and neglect of contextual information, making them less effective in dynamic, real-world scenarios.

Combining local and global representations can effectively address HAR’s holistic feature extraction limitations. Local features reduce background noise sensitivity and handle occlusions, while global features ensure comprehensive activity recognition. This combination enhances robustness to variations, manages complex scenes, and optimizes computational efficiency, improving HAR accuracy and reliability. The local presentation means identifying a specific region, while the global representation means identifying the whole region with significant motion information. These methods [14,15,16] contain local and global features based on spatial–temporal change trajectory attributes that are founded on key point tracking [17,129], motion changes that are derived from depth information [18,19], and action-based features that are predicated on human pose changes [20,21]. The HoG and HON4D [130] is one of the feature-based techniques that calculates features on the base orientation of gradients in an image or video sequence. The HoG features are then used to encode local and global texture information, aiming to recognize different actions. Some of the presented approaches exploit the HoG in action recognition, including [131,132,133,134], in various ways. HOF is a feature extraction method used in action recognition [135,136]. It involves building histograms to present different actions over the spatio-temporal domain in a video. However, in this method, the number of bins needs to be set in advance. The challenge addresses cluttered backgrounds and camera movement by performing a physical feature-driven approach to HOF.

3.2.3. Classification Approach

Once we have the feature representation, we feed it into classifiers such as Support Vector Machine (SVM) [121], Random Forest, and K-Nearest Neighbor (KNN) [137,138] to predict the activity label. Some classification methods, such as Hidden Markov Models (HMMs), Condition Random Fields (CRFs) [139,140,141], Structured Support Vector Machine (SSVM) [110,142,143], and Global Gaussian Mixture Models (GGMMs) [7], perform sequentially for classification tasks. Additionally, Luo et al. utilized the features of fusion-based methods, Maximum Margin Distance Learning (MMDL) [144], and Multi-task Spare Learning Model (MTSLM) [145]. These methods perform the classification task based on combining various characteristics to enhance the classification task.

3.3. End-to-End Deep Learning Approach

The holistic, local, and global features reported promising results in the HAR task, but these handcrafted features need much specific knowledge to define relevant parameters. Additionally, they do not generalize sizable datasets well. In recent years, significant focus has been placed on utilizing DL in computer vision. Numerous approaches have used deep neural network-based methods to recognize human activity [22,23,47,146,147,148,149,150,151,152].

For example, Donahue et al. explored Long Short-Term Memory (LSTM) and developed Long-Term Recurrent Convolutional Networks (LRCNs) [153] to model CNN-generated spatial features across temporal sequences. Another significant HAR technique involves the use of LSTM with Convolution Neural Networks (CNNs) [154,155]. Ng et al. [155] introduced a Recurrent Neural Network (RNN) model to identify and classify the action, which performs a connection between the LSTM cell and the output of the underlying CNN. Donahue et al. [153] proposed a method of using long-term RNNs to map video frames of varying lengths to outputs of varying lengths, such as action descriptive text, rather than simply assigning them to a specific action category. Song et al. [156] introduced a model using RNNs with LSTM that employed multiple attention levels to discern key joints in the skeleton across each input frame.

Recently, researchers have utilized different ideas for spatio-temporal feature extraction, divided into four categories: two-stream networks, multi-stream networks, 3D CNN, and Hybrid Networks.

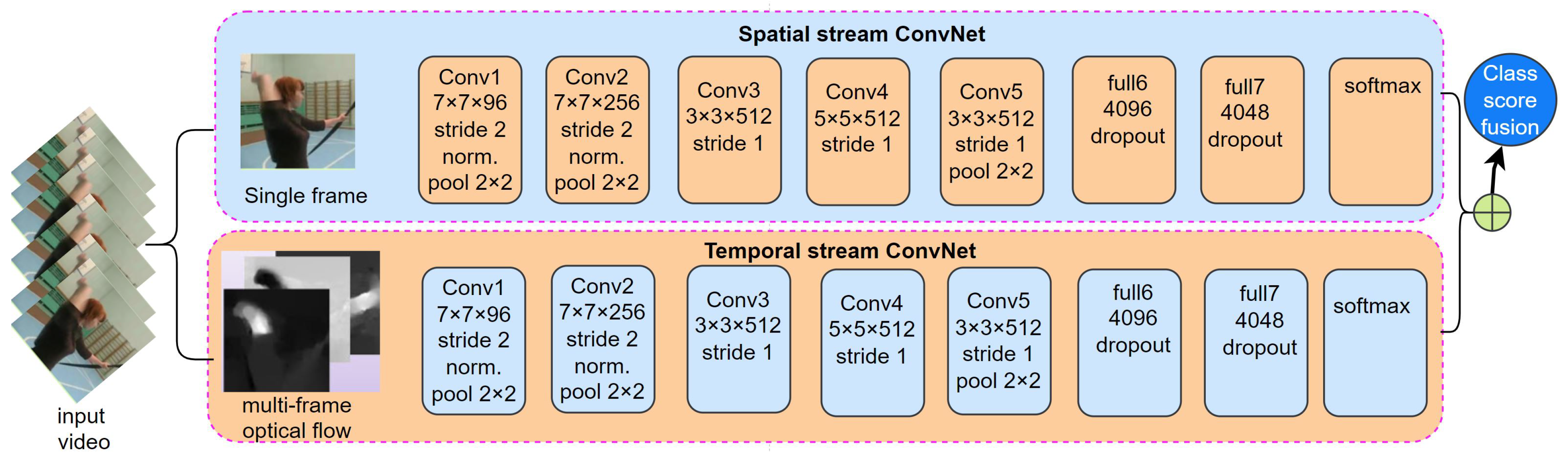

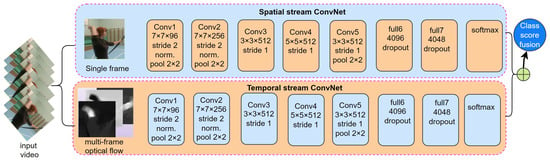

3.3.1. Two Stream-Based Network

The motion of an object can be represented based on the optical flow [157]. Simonyan et al. proposed a two-stream convolutional network to recognize human activity [22], as depicted in Figure 6. In a convolutional network with two streams, the optical flow information is computed from the sequence of images. Two separate CNNs process image and optical flow sequences as inputs during model training. Fusion of these inputs occurs at the final classification layer. The two-stream network handles a single-frame image and a stack of optical flow frames using 2D convolution. In contrast, a 3D convolutional network treats the video as a space–time structure and employs 3D convolution to capture human action features.

Figure 6.

RGB-based two-stream architecture HAR [22].

Numerous research endeavors have been conducted to enhance the efficacy of these two network architectures. Noteworthy advancements in the two-stream CNNs have been made by Zhang et al. [151], who substituted the optical flow sequence with the motion vector in the video stream. This substitution resulted in improved calculation speed and facilitated real-time implementation of the aforementioned HAR technique. The process of merging spatial and temporal information has been modified by Feichtenhofer et al. [50], shifting it from the initial final classification layer to an intermediate position within the network. As a result, the accuracy of action recognition has been further enhanced [51]. Moreover, an additional enhancement to the performance of the two-stream convolutional network was introduced through the proposal of a Temporal Segment Network (TSN). Moreover, the recognition results of TSN were further improved by the contributions of both Lan et al. [158] and Zhou et al. [159].

3.3.2. Multi-Stream Based Network

RGB data paired with CNNs offers powerful action recognition capabilities. Liu et al. [160] leverage a multi-stream convolutional network to enhance recognition performance by incorporating manually crafted skeleton joint information with CNN-derived features. Shi et al. [53] employ transfer learning techniques in a three-stream network, incorporating dense trajectories to characterize long-term motion effectively. Attention mechanisms with RGB data focus on relevant regions for better action recognition. Shah et al. [66] propose a Generative Adversarial Network (GAN)-based knowledge distillation framework combining spatial attention-augmented EfficientNetB7 and multi-layer Gated Recurrent Units (GRUs) with handcrafted hybrid LBP features for robust HAR, while acknowledging limitations in model generalization and noise sensitivity in complex real-world environments.

3.3.3. 3D CNN and Hybrid Networks

Traditional two-stream techniques often separate spatial and temporal information, which can render them less suitable for real-time deployment. These 3D approaches aim to address the limitations of the earlier two-stream networks. For example, Ji et al. [46] utilized the 3D CNN model for the action recognition task. This model extracts features from both the spatial and the temporal dimensions. Tran et al. [23] used C3D to extract spatio-temporal features for a large dataset to train the model, which is the extension of the 3DCNN model [46]. Carreira et al. [154] developed I3D, extending the network to extract spatio-temporal features along with the temporal dimension. They proposed image classification models to create 3D CNNs by transferring weights from 2D models pre-trained on ImageNet to align with the weights in the 3D model. P3D [161] and R(2+1)D [162] streamlined 3D network training using factorization, combining 2D spatial convolutions (1 × 3) with 1D temporal convolutions (3 × 1 × 1) instead of traditional 3D convolutions (3 × 3). For improved motion analysis, trajectory convolution [163] employed deformable convolutions in the temporal domain. Other approaches simplify 3D CNNs by integrating 2D and 3D convolutions within single networks to enhance feature maps, exemplified by models like MiCTNet [56], ARTNet [164], and S3D [165]. To enhance the performance of 3DCNN, CSN [166] has demonstrated the effectiveness of decomposing 3D convolution by separating channel interactions from spatio-temporal interactions, leading to state-of-the-art performance improvements. This technique can achieve speeds that are two to three times faster than previous methods. Feichtenhofer et al. developed the X3D method [167] that included both spatial and temporal dimensions with enhanced spatial, input resolution, and channel dimensions. Yang et al. [168] proposed that morphologically similar actions like walking, jogging, and running require discrimination assisted by visual speed. They proposed a Temporal Pyramid Network (TPN) similar to X3D. This approach enables the extraction of effective features at various temporal rates, reducing computational complexity while enhancing efficiency performances. Zhang et al. [169] proposed a 4D CNN with 4D convolution to capture the evolution of distant spatio-temporal representations.

Similarly, numerous researchers have made efforts to expand various 2D CNNs to 3D spatio-temporal structures to acquire knowledge about and identify human action features. Carreira et al. [154] expanded the network architecture of inception-V1 to incorporate 3D and introduced the two-stream inflated 3D ConvNet for HAR. Qin et al. [170] propose a fusion scheme combining classical descriptors with 3D CNN-learned features, achieving robustness against geometric and optical deformations. Diba et al. [171] extended DenseNet and introduced a temporal 3D ConvNet for HAR. Zhu et al. [172] expanded pooling operations across spatial and temporal dimensions, transforming the two-stream convolution network into a three-dimensional structure. Carreira et al. [154] conducted a comparison of five architectures: LSTM with CNN, 3D ConvNet, two-stream network, two-stream inflated 3D ConvNet, and 3D-fused two-stream network. In essence, 3D CNNs establish relationships between temporal and spatial features in various ways, complementing rather than replacing two-stream networks.

3.3.4. Other Models

Hassan et al. [64] created a deep bidirectional LSTM model, which effectively integrates the advantages of temporal effective features extraction through Bi-LSTM and spatial feature extraction via CNN. Use of the LSTM architecture is not feasible for supporting parallel computing, which can limit its efficiency. To overcome this problem, the transformer architecture [173] has become popular in DL to address this limitation. Girdhar et al. [174] used the transformer-based architecture to add context features and developed an attention mechanism to improve performance. Khan et al. [65] present two end-to-end DL models—ConvLSTM and LRCN—for HAR from raw video inputs, leveraging time distributed layers for spatio-temporal encoding, while facing limitations in computational efficiency and real-time deployment on resource-constrained devices.

3.4. Mathematical Derivation of the Benchmark RGB-Based 3DCNN Method

In RGB-based HAR, each dataset sample can be represented as:

where is the i-th video example and is its corresponding ground truth label. Each video is typically represented as a tensor with dimensions , where f is the number of frames per video, h and w denote the height and width of each frame, and is the number of color channels. CNNs are commonly used to process RGB data. They apply multiple convolutional layers to extract features from the input tensor. The feature at spatial location in the k-th feature map at the l-th layer is calculated as [175]:

where is the learned convolutional kernel for the k-th feature map at layer l, is the input patch at position in layer l, is the bias term for the k-th feature map at layer l, and is the pre-activation value at position in the k-th feature map. An activation function introduces non-linearity as flow:

where is the activated feature value at position in the k-th feature map at layer l and is typically a ReLU, tanh, or sigmoid function. Pooling layers then reduce the resolution of the feature maps, enhancing shift-invariance and robustness as follows:

where is the pooled feature at position in the k-th feature map at layer l, denotes the local pooling region around position , and is typically a max or average pooling operation. After stacking several convolutional and pooling layers, fully connected layers are added to perform higher-level reasoning. The final output is often passed through a Softmax operator to produce class probabilities. The CNN model is trained by minimizing a task-specific loss function that measures the difference between predicted and true labels. Given N training samples , where , the overall loss function is given by [175]:

where denotes the chosen loss function (e.g., cross-entropy), represents all learnable model parameters (weights and biases), and is the model’s output prediction for the n-th sample. Training on the RGB modality can be expressed as:

where is the CNN-based feature extractor for modality m with parameters , is the classifier network with parameters , denotes the loss function (e.g., cross-entropy), and is the ground truth label for the i-th sample.

3.4.1. Three-Dimensional CNN

The 3D CNN processes spatio-temporal features by applying 3D convolutions across both spatial (height, width) and temporal (frames) dimensions. For a 3D convolution operation at a certain location in the feature map [46] and also C3D [23] and P3D [161], we have:

where is the 3D convolutional kernel; are the spatial and temporal filter sizes; is the input feature map from the previous layer; and is the bias term.

3.4.2. C3D [23]

C3D, also called a deep 3D ConvNet model, is similar to the VGG model [176], which is constructed with eight convolution layers, five max-pooling layers, and two fully connected layers, where all 3D convolution kernels are and have a stride of 1 for both domains: spatial and temporal, whereas most pooling kernes are and pooling 1 layers are . According to the experiment using the UCF101 test split-1 with different kernels for the temporal depth setting, 3D ConvNet achieves high accuracy, with kernels, compared to the 2D ConvNet [23]. C3D achieved 85.2%, 85.2%, 78.3%, 98.1%, 87.7%, and 22.3% accuracy for the Sport1M, UCF101, ASLAN, YUPENN, UMD, and Object datasets, respectively [23].

3.4.3. I3D (Inflated 3D ConvNet)

It is difficult to identify good video architectures due to the small video benchmarks (UCF101, HMDB51), and most methods obtain similar performance on this dataset. They then proposed the Kinetics Dataset and proposed I3D models. The I3D architecture followed the InceptionV1 (GoogleNet) architecture. I3D inflates 2D filters from a pre-trained 2D CNN (e.g., from ImageNet) into 3D convolutions to capture temporal relationships. For the convolution at position , the equation is:

where is the inflated 3D kernel and represents the input features across the spatial and temporal dimensions. The model design is based on Inception V1, so that when training I3D, the I3D weights can be initialized with the InceptionV1 weights, which are pretrained in ImageNet [154].

3.4.4. S3D

The S3D model was developed to overcome the computational challenges of I3D. While I3D delivers strong performance, it is very computationally expensive. This leads to some important questions: Is 3D convolution truly necessary? If so, which layers should use 3D convolution, and which could use 2D convolution instead? These choices might depend on the nature of the dataset and the specific task. Additionally, is it crucial to apply convolution over both time and space together, or would it be enough to handle these dimensions separately? To address these issues, Xie et al. introduced the S3D model [165]. This model replaces traditional 3D convolutions with spatial and temporal separable convolutions, helping reduce computational costs while still effectively capturing both spatial and temporal features in video data. The convolution at position in S3D is given by the following equation:

where represents the 3D kernel and is the input feature map from the previous layer. This method improves efficiency, accuracy, and speed by reducing unnecessary computation.

3.4.5. R3D, R(2+1)D

Despite ResNet152 having only a 2D convolutional layer, it outperforms C3D on the video dataset. The development of a very deep 3D CNN from scratch results in expensive computational cost and memory demand. Three-dimensional convolution vs. (2+1)D convolution, which one is better? It is difficult to say, but we can explain as follows. Full 3D convolution used filter size , where d represents the height and width of the RGB image and t represents the temporal extent. On the other hand, (2+1)D splits the total operation into two sections, 2D convolution and 1D convolution, to reduce the computational complexity. R(2+1)D [162] uses a factorized 3D convolution: 2D convolutions in the spatial domain and 1D convolutions in the temporal domain. The update rule is:

where is the 2D spatial kernel and is the 1D temporal kernel.

3.4.6. P3D ResNet

Qiu et al. [161] proposed a novel architectural design termed Pseudo-3D ResNet (P3D ResNet), wherein each block is assembled in a distinct ResNet configuration. P3D is a pseudo 3D Convolutional Neural Network (3DCNN) constructed by combining C3D and ResNet architectures. For the Sports-1M dataset, P3D-ResNet achieves a performance accuracy of 66.4%, outperforming individual ResNet and C3D models, which achieve accuracies of 64.6% and 61.1%, respectively [161]. P3D can be categorized into three versions: P3D-A, which feeds spatial features into the temporal module and then concatenates with the residual unit. P3D-B is constructed by parallel concatenation of spatial, temporal, and residual units. P3D-C, which concatenates spatial, spatial–temporal, and residual units.

3.4.7. SlowFast Networks

SlowFast Networks use two streams, where a slow stream processes fewer frames per second (capturing long-term dependencies) and a fast stream processes more frames per second (capturing fast motion). The model was proposed by the Facebook AI Research Team and published in 2019 [177]. This model is inspired by biological studies on retinal ganglion cells. The outputs from both streams are fused. This network addresses low frame rate issues, where the slow pathway has low temporal resolution, and the fast pathway has high frame rate and temporal resolution. However, the fast pathway remains lightweight in terms of computational complexity due to the use of or of the channels, and it fuses the latest connections. The equations for the combined feature map are:

where and are the weights for slow and fast streams and is the input feature map. The slow and fast streams are then fused together to generate the final output. The Slow pathway uses a large temporal stride on input frames to focus on spatial information. It captures fine details and spatial patterns, similar to the parvocellular (P cell) function in retinal ganglion cells. This helps understand long-term data structure. The Fast pathway, with a smaller temporal stride, focuses on capturing temporal information. It has lower channel capacity compared to the Slow pathway and resembles the magnocellular (M cell) function in retinal ganglion cells. It specializes in detecting rapid visual changes, making it ideal for processing fast motion. What sets the Fast pathway apart is its ability to maintain good accuracy with a much lower channel capacity, making it efficient and lightweight in the SlowFast model.

3.4.8. X3D

This model was also proposed by Facebook AI Research and published in 2020 [167]. The basic network architecture is designed according to the ResNet and Fast pathway design for SlowFast networks. They have six expansion factors: , , , , , and . X-Fast increases the temporal activation size, , and frame-rate, , to improve temporal resolution while keeping the clip duration constant. X-Temporal extends both the duration and temporal resolution by sampling longer clips and increasing the frame-rate, . X-Spatial enhances the spatial resolution, , by improving the spatial sampling resolution of the input video. X-Depth increases the network’s depth by adding more layers per residual stage by . X-Width expands the number of channels uniformly across all layers using a global width factor, . Lastly, X-Bottleneck increases the inner channel width, , of the center convolutional filter in each residual block to improve processing efficiency. This paper presents X3D, a family of efficient video networks that progressively expand a tiny 2D image classification architecture along multiple network axes, in space, time, width, and depth. X3D [167] is a scalable architecture for video recognition. It adjusts the depth, width, and resolution of the 3D convolutions based on the computational resources. To expand the Basis Network to X3D, the model’s complexity, C, is first determined, where represents the initial complexity and denotes the desired increase. Each parameter (for ) is incrementally expanded until the model reaches the target complexity , defined as:

Six models, , are created, corresponding to each expanded parameter. The models are trained, and their performance is evaluated using a performance metric . The best-performing model, , is selected:

This best model, , becomes the new basis network, and the process can be repeated if further refinement is needed [167].

3.4.9. Vision Transformer (ViT)

Vision Transformer (ViT) is one of the crucial models designed for image classification tasks, utilizing a Transformer architecture originally developed for Natural Language Processing (NLP) [178]. ViT processes images by dividing them into non-overlapping patches and treating these patches as input tokens for a Transformer model, similar to how words are treated in text. Given that we are processing a batch of 100 videos, each with 32 frames, the input tensor V will have the shape:

where H and W are the height and width of each frame and C is the number of channels (e.g., 3 for RGB). For each video, V, we will extract patches from each frame. For each frame , we need to divide it into N non-overlapping patches, and we embed them into a patch embedding space:

Each patch is linearly projected to a fixed-dimensional vector using a convolutional layer:

Now, for each frame t, the patch embeddings will result in:

The full video sequence V now becomes a sequence of spatial tokens, with patches from all 32 frames:

Thus, the shape of the final tokenized video sequence will be:

where D is the dimensionality of each patch embedding. Then, multi-head self-attention is applied to the tokenized sequence of video frames. The attention mechanism computes the output sequence of tokens by considering the relationships between the tokens across both time and space:

where are the query, key, and value matrices, and is the dimension of the key vectors. After the self-attention operation, the model aggregates the output tokens (using global pooling, class token, or other aggregation methods) and projects it into the final class prediction:

where z is the final token representation after the Transformer encoder layers and W is the classification weight matrix.

3.4.10. Video Vision Transformer (ViViT)

ViViT [179] adapts the Vision Transformer (ViT) architecture for video data by extracting N non-overlapping spatio-temporal “tubes” from the input video, denoted as . Here, N is determined by the following expression:

where T, H, and W represent the temporal and spatial dimensions (time, height, and width) of the video and t, h, and w are the corresponding dimensions of each tube.

Each tube is then transformed into a token by a linear mapping operator E, as follows:

These tokens are concatenated together to form a sequence. A learnable class token, , is prepended to this sequence. Since transformers are permutation-invariant, a positional embedding is added to the sequence. Consequently, the tokenization process can be represented as:

Here, represent the token where z can be the sequence of the tokents, represent the learnable class token, x is the input data with position and E represent the mapping operator, and p represent the positional embedding. It is important to note that the linear projection E can be interpreted as a 3D convolution with a kernel size of and a stride of in the time, height, and width dimensions, respectively.

The resulting sequence of tokens, z, is then passed through a transformer encoder consisting of L layers. At each layer ℓ, the following operations are applied sequentially:

where MSA represents multi-head self-attention [173], LN refers to layer normalization, and MLP consists of two linear layers with a GeLU non-linearity in between.

Finally, a linear classifier, , maps the output of the encoded classification token, , to one of C possible classes.

The experimental results demonstrate the effectiveness of ViViT, with the ViViT-B model backbone and a tubelet size of 16 × 216 × 2 achieving high performance. The model is evaluated on two well-known benchmarks: Top-1 accuracy on Kinetics 400 (K400) and action accuracy on Epic Kitchens (EK). The runtime is measured during inference on a TPU-v3, showcasing the model’s efficiency and scalability [179].

3.4.11. Multiview Transformers for Video Recognition (MVT)

MVT [180] introduces a novel approach to video recognition by applying transformers to multiple views of the same video. By leveraging information from different perspectives, MVT improves recognition performance and robustness across various viewpoints. This approach enhances the model’s ability to capture diverse spatial and temporal patterns, making it particularly effective in complex video recognition tasks. MVT combines the strengths of two well-established models: SlowFast and ViViT. The SlowFast model, known for its ability to handle both fast and slow motions in video data, is integrated with ViViT, a transformer-based model that efficiently captures spatial and temporal dependencies in video. The MVT for video recognition based on the scenario in Equation (15) extracts multiple sets of tokens, , from the input video, where V is the number of views. Each view’s tokens are processed independently by separate transformer encoders with lateral connections for cross-view fusion. The tokens from all views are concatenated to form a unified sequence:

Due to the prohibitive computational cost of performing self-attention on all tokens from all views, a more efficient method is introduced by fusing tokens between adjacent views using Cross-View Attention (CVA). This is performed by applying attention where queries come from the larger view and keys and values from the smaller view, after projecting them to the same dimension [180]:

The attention is computed as [180]:

3.4.12. UniFormer-Based 3DCNN

UniFormer [181] is a new model that combines the advantages of CNNs and Vision Transformers (ViTs) to solve the challenges of learning representations from images and videos. CNNs reduce local redundancy but struggle to capture global dependencies due to their limited receptive fields. ViTs, on the other hand, capture long-range dependencies but suffer from high redundancy from comparing all tokens. UniFormer solves this by introducing a unique block structure with local and global token affinity, addressing both redundancy and dependency. A UniFormer block consists of three modules: Dynamic Position Embedding (DPE), Multi-Head Relation Aggregator (MHRA), and Feed Forward Network (FFN). This design allows UniFormer to handle both image and video inputs efficiently. UniFormer is highly versatile, working well for various vision tasks, from image classification to video understanding. It achieves state-of-the-art results across several benchmarks and outperforms traditional models like 3D CNNs without needing extra training data. UniFormer achieves 86.3% top-1 accuracy on ImageNet-1K classification and demonstrates state-of-the-art performance in various tasks, including 82.9%/84.8% top-1 accuracy on Kinetics-400/600, 60.9%/71.2% top-1 accuracy on Sth-Sth V1/V2, 53.8 box AP and 46.4 mask AP on COCO object detection, 50.8 mIoU on ADE20K semantic segmentation, and 77.4 AP on COCO pose estimation.

3.4.13. VideoMAE Based 3DCNN

VideoMAE (Video Masked Autoencoders) [182] is a self-supervised pre-training method designed to learn video representations efficiently, particularly on small datasets. Inspired by ImageMAE, VideoMAE uses a high masking ratio (90–95%) for video tube masking, which makes the reconstruction task more challenging. This higher masking ratio is possible due to the temporally redundant nature of videos, allowing VideoMAE to learn effective representations by focusing on the most informative content. VideoMAE shows that it can achieve impressive performance with a small number of training videos (around 3–4 k) without relying on additional data, unlike traditional models that require large-scale datasets. The model also demonstrates that the quality of data is more important than the quantity when it comes to self-supervised video pre-training. With this approach, VideoMAE achieves remarkable results on benchmarks like Kinetics-400 (87.4%), Something-Something V2 (75.4%), and UCF101 (91.3%) without any extra data.

3.4.14. InternVideo 3DCNN Model

InternVideo [183] is a general video foundation model that combines generative and discriminative self-supervised learning to improve video understanding. It utilizes two main pretraining objectives: masked video modeling and video-language contrastive learning. By selectively coordinating these complementary frameworks, InternVideo efficiently learns video representations that boost performance in various video applications. This approach allows InternVideo to achieve state-of-the-art results across 39 video datasets, covering tasks like action recognition, video-language alignment, and open-world video tasks. Notably, it achieves impressive top-1 accuracies of 91.1% on Kinetics-400 and 77.2% on Something-Something V2, demonstrating its effectiveness and general applicability for video understanding.

4. Skeleton Data Modality-Based Action Recognition Method

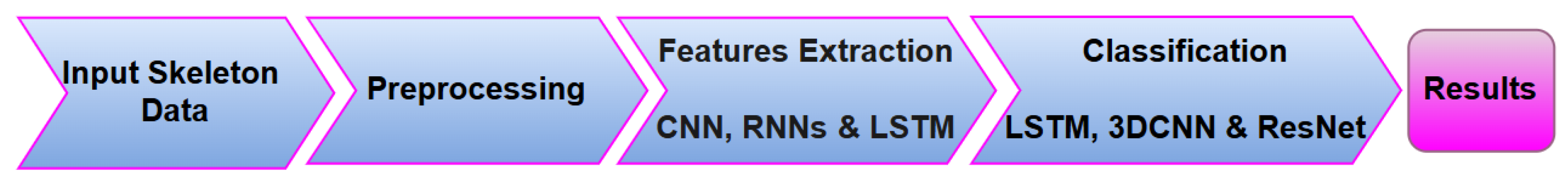

The main challenges of the RGB-based data modality-based HAR system are redundant background and computational complexity issues, and the skeleton-based data modality helps us overcome these challenges. In addition, coupling with joint coordinate estimation algorithms such as OpenPose and SDK [184] has improved the performance of accuracy and reliability of the skeleton data. Skeleton data obtained from the joint position offer several benefits over the RGB data, such as illumination variations, viewing angles, and background occlusions, making it less susceptible to noise interference. The research prefers to perform HAR by using skeleton data because they provide more focused information and reduce redundancy. Based on the feature extraction methods for HAR, the skeleton data can be divided into DL-based methods, relying on learned features, and ML-based methods, which use handcrafted features. In addition, the skeleton data depend on the precise joint position and pose estimation techniques.

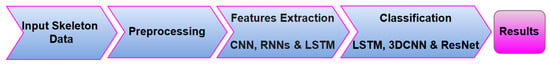

Figure 7 shows the framework of skeleton-based approaches. Table 4 describes key information regarding the skeleton-based data modality on the existing model, including datasets, classification methods, years, and performance accuracy. We describe the well-known pose estimation algorithms in the following section.

Figure 7.

Workflow of skeleton-based action recognition.

4.1. Skeleton-Based HAR Dataset

We provided the most popular benchmark HAR datasets, which come from the skeleton, which is demonstrated in Table 2. The dataset table demonstrates details of the datasets, including modalities, creation year, number of classes, number of subjects who participated in recording the dataset, number of samples, and the latest performance accuracy of the dataset with citations. The Skeleton dataset includes a variety of notable benchmarks essential for HAR. The UPCV dataset from 2014 features 10 classes, 20 subjects, and 400 samples, achieving an outstanding accuracy of 99.2% [75,76]. The NTU RGB+D dataset, introduced in 2016 and expanded in 2019, is one of the most comprehensive, with 60 and 120 classes, 40 and 106 subjects, and 56,880 and 114,480 samples, respectively, both versions recording an accuracy of 97.4% [86,91,97]. The MSRDailyActivity3D dataset from 2012 includes 16 classes, 10 subjects, and 320 samples, with an accuracy of 97.5% [83,84]. The PKU-MMD dataset from 2017 contains 51 classes, 66 subjects, and 10,076 samples, with a notable accuracy of 94.4% [92,93]. The Multi-View TJU dataset from 2014 offers 20 classes, 22 subjects, and 7040 samples. These datasets are crucial for training and testing HAR models, offering diverse activities and scenarios to enhance model robustness and accuracy.

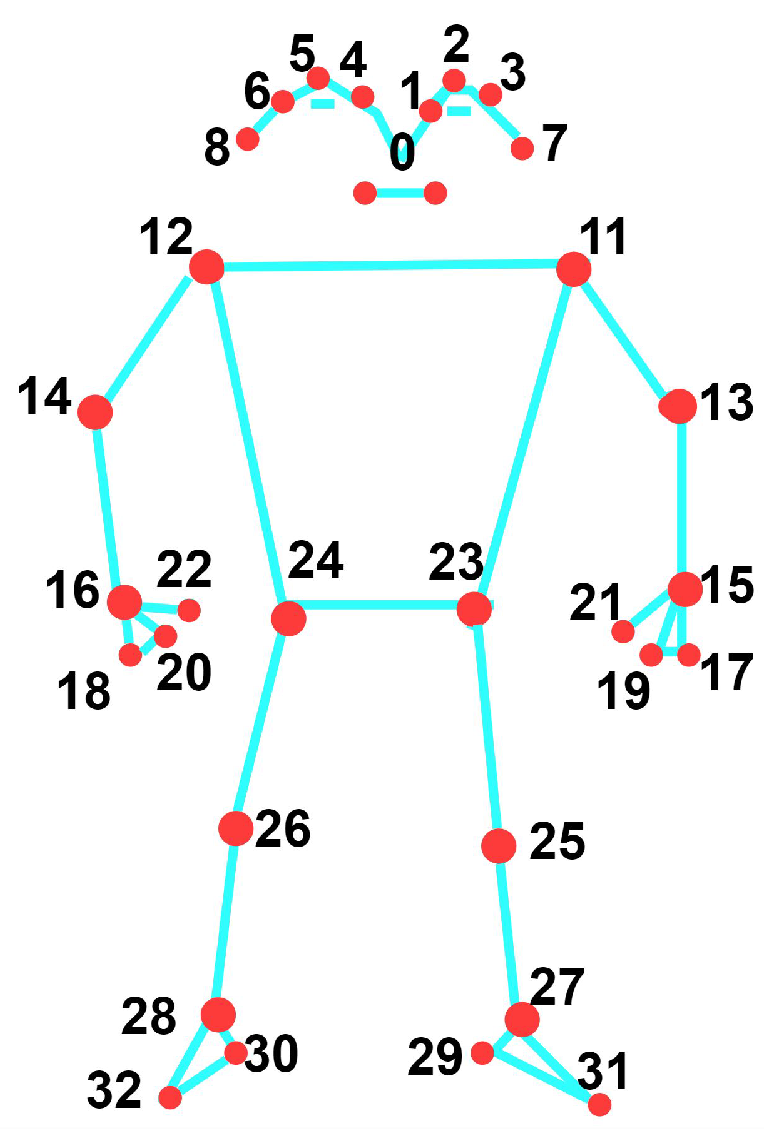

4.2. Pose Estimation

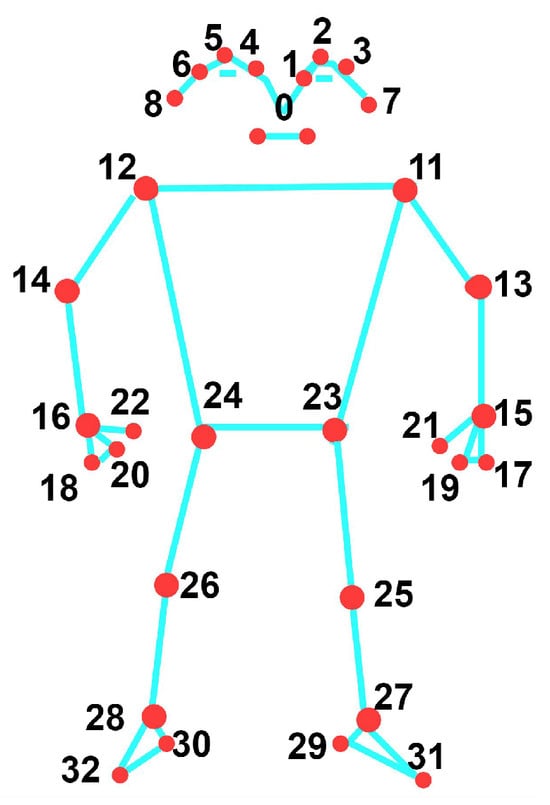

We can extract human joint skeleton points from the RGB video using media pipe, openpose, AlphaPose [185,186], MMPose, etc. Using a media pipe, Figure 8 demonstrates the 33 joint skeleton points for the whole body. Human limb trunk reconstruction includes estimating human pose by detecting joint positions in the skeleton and establishing their connections. Traditional methods, relying on manual feature labeling and regression for joint coordinate retrieval, suffer from low accuracy and are highly sensitive to occlusion. DL-based methods, including 2D and 3D pose estimation, have become pivotal in this research domain.

Figure 8.

Positions of the 33 key landmarks on the human body. (0) Nose, (1) Left eye inner, (2) Left eye, (3) Left eye outer, (4) Right eye inner, (5) Right eye, (6) Right eye outer, (7) Left ear, (8) Right ear, (9) Mouth left, (10) Mouth right, (11) Left shoulder, (12) Right shoulder, (13) Left elbow, (14) Right elbow, (15) Left wrist, (16) Right wrist, (17) Left pinky, (18) Right pinky, (19) Left index, (20) Right index, (21) Left thumb, (22) Right thumb, (23) Left hip, (24) Right hip, (25) Left knee, (26) Right knee, (27) Left ankle, (28) Right ankle, (29) Left heel, (30) Right heel, (31) Left foot index, (32) Right foot index.

4.2.1. Two-Dimensional Human Pose Estimation-Based Methods

The objective of 2D human pose estimation is to identify significant body parts in an image and connect them sequentially to form a human skeleton graph. Research commonly addresses the classification of single and multiple human subjects. In single-person pose estimation, the goal is to detect a solitary individual in an image. This involves first recognizing all joints of the person’s body and subsequently generating a bounding box around them. Two main categories of models exist for single-person pose estimation. The first utilizes a direct regression approach, where key points are directly predicted from extracted features. In 2D pose estimation, one can employ deformable part models to recognize the object by matching a set of templates. Nevertheless, these deformable part models exhibit limited expressiveness and fail to consider the global context. Yan et al. [187] proposed a pose-based approach and performed two main methods: detection-based and regression-based approaches. Detection-based methods utilize powerful part detectors based on CNNs, which can be integrated using graphical models, as described by Yuille et al. [188]. For solving the detection problem, pose estimation can be represented as a heat map where each pixel indicates the detection confidence of a joint, as outlined by Bulat et al. [189]. However, detection approaches do not directly provide joint coordinates. A post-processing step is applied to recover poses where (x, y) coordinates are obtained by utilizing the max function. Toshev et al. [190] proposed a cascade of regressor methods to estimate poses; they employ the regression-based approach with a nonlinear function that maps the joint coordinates and refines pose estimates. Carreira et al. [191] proposed the Iterative Error Feedback (IEF) approach, where iterative prediction is performed to correct the current estimates. Instead of predicting outputs in a single step, a self-correcting model is employed, which modifies an initial solution by incorporating error predictions, also called IEF. However, the sub-optimal nature of the regression function leads to lower performance than detection-based techniques.

4.2.2. Three-Dimensional Human Pose Estimation-Based Methods

Conversely, when presented with an image containing an individual, the objective of 3D pose estimation is to generate a 3D pose that accurately aligns with the spatial location of the person depicted. The accurate reconstruction of 3D poses from real-life images holds significant potential in various fields of HAR, such as entertainment and human–computer interaction, particularly indoors and outdoors. Earlier approaches relied on feature engineering techniques, whereas the most advanced techniques are based on deep neural networks, as proposed by Zhou et al. [192] Three-dimensional pose estimation is acknowledged to be more complex than its 2D handle due to its management of a larger 3D pose space and an increased number of ambiguities. Nunes et al. [193] presented skeleton extraction through depth images, wherein skeleton joints are inferred frame by frame. A manually selected set of 15 skeleton joints, as determined by Gan et al. [112], are used to form an APJ3D representation, which is based on relative positions and local spherical angles. These 15 joints, which have been deliberately selected, play a crucial role in the development of a concise representation of human posture. Spatial features are encoded using diverse metrics, including joint distances, orientations, vectors, distances between joints and lines, and angles between lines. These measures collectively contribute to a comprehensive texture feature set, as suggested by Chen et al. [194]. Additionally, a CNN-based network is trained to recognize corresponding actions.

Table 4.

Skeleton-based action recognition methods using handcrafted and deep learning approaches.

Table 4.

Skeleton-based action recognition methods using handcrafted and deep learning approaches.

| Author | Year | Dataset Name | Modality | Method | Classifier | Accuracy [%] |

|---|---|---|---|---|---|---|

| Veeriah et al. [195] | 2015 | MSRAction3D KTH-1 (CV) KTH-2 (CV) | Skeleton | Differential RNN | Softmax |

92.03 93.96, 92.12 |

| Xu et al. [116] | 2016 | MSRAction3D UTKinect Florence3D action | Skeleton | SVM with PSO | SVM | 93.75 97.45, 91.20 |

| Zhu et al. [196] | 2016 | SBU Kinect HDM05, CMU | Skeleton | Stacked LSTM | Softmax | 90.41 97.25, 81.04 |

| Li et al. [197] | 2017 | UTD-MHAD NTU-RGBD (CV) NTU-RGBD (CS) | Skeleton | CNN | Maximum Score | 88.10 82.3 76.2 |

| Soo et al. [198] | 2017 | NTU-RGBD (CV) NTU-RGBD (CS) | Skeleton | Temporal CNN | Softmax | 83.1 74.3 |

| Liu et al. [160] | 2017 | NTU-RGBD (CS) NTU-RGBD (CV) MSRC-12 (CS) Northwestern-UCLA | Skeleton | Multi-stream CNN | Softmax | 80.03, 87.21 96.62, 92.61 |

| Das et al. [199] | 2018 | MSRDailyActivity3D NTU-RGBD (CS) CAD-60 | Skeleton | Stacked LSTM | Softmax | 91.56 64.49, 67.64 |

| Si et al. [200] | 2019 | NTU-RGBD (CS) NTU-RGBD (CV) UCLA | Skeleton | AGCN-LSTM | Sigmoid | 89.2, 95.0 93.3 |

| Shi et al. [201] | 2019 | NTU-RGBD (CS) NTU-RGBD (CV) Kinetics | Skeleton | AGCN | Softmax | 88.5 95.1 58.7 |

| Trelinski et al. [202] | 2019 | UTD-MHAD MSR-Action3D | Skeleton | CNN-based | Softmax | 95.8, 77.44 80.36 |

| Li et al. [203] | 2019 | NTU-RGBD (CS) Kinetics (CV) | Skeleton | Actional graph based CNN | Softmax | 86.8 56.5 |

| Huynh et al. [204] | 2019 | MSRAction3D UTKinect-3D SBU-Kinect Interaction | Skeleton | ConvNets | Softmax | 97.9 98.5, 96.2 |

| Huynh et al. [205] | 2020 | NTU-RGB+D UTKinect-Action3D | Skeleton | PoT2I with CNN | Softmax | 83.85, 98.5 |

| Naveenkumar et al. [206] | 2020 | UTKinect-Action3D NTU-RGB+D | Skeleton | Deep ensemble | Softmax | 98.9, 84.2 |

| Plizzari et al. [207] | 2021 | NTU-RGBD 60 NTU-RGBD 120 Kinetics Skeleton-400 | Skeleton | ST-GCN | Softmax | 96.3, 87.1 60.5 |

| Snoun et al. [208] | 2021 | RGBD-HuDact, KTH | Skeleton | VGG16 | Softmax | 95.7, 93.5 |

| Duan et al. [209] | 2022 | NTU-RGBD UCF101 | Skeleton | PYSKL | - | 97.4, 86.9 |

| Song et al. [210] | 2022 | NTU-RGBD | Skeleton | GCN | Softmax | 96.1 |

| Zhu et al. [211] | 2023 | UESTC NTU-60 (CS) | Skeleton | RSA-Net | Softmax | 93.9, 91.8 |

| Zhang et al. [212] | 2023 | NTU-RGBD Kinetics-Skeleton | Skeleton | Multilayer LSTM | Softmax | 83.3 27.8 (Top-1) 50.2 (Top-5) |

| Liu et al. [213] | 2023 | NTU-RGBD 60 (CV)NTU-RGBD 120 (CS) | Skeleton | LKJ-GSN | Softmax | 96.1 86.3 |

| Liang et al. [214] | 2024 | NTU-RGBD (CV) NTU-RGBD 120 (CS) FineGYM | Skeleton | MTCF | Softmax | 96.9, 86.6 94.1 |

| Karthika et al. [215] | 2025 | NTU-RGBD 60 NTU-RGBD 120 Kinetics-700 Micro- Action-52 | Skeleton | Stacked Ensemble | Logistic Regression |

97.87 98.0 97.50 95.20 |

| Sun et al. [216] | 2025 | Self collected KTH UTD-MHAD | Skeleton | Multi channel fussion | Logistic Regression | 98.16 92.85 84.98 |

| Mehmood et al. [217] | 2025 | NTU-RGB+D (CS/CV) Kinetics UCF-101 HMDB-51 | Skeleton | EMS-TAGCN | Logistic Regression | 91.3/97.5 62.3 51.24 72.7 |

4.3. Handcrafted Feature and ML-Based Classification Approach

Researchers determine handcrafted features using statistical features extracted from action data. These features describe the dynamics or statistical properties of the action analyzed. Yang et al. [18] proposed a method to extract the super vector features to determine the action based on the depth information. Shao et al. [218] combined shape and motion information for HAR through temporal segmentation, utilizing MHI and Predicted Gradients (PCOG) as feature descriptors. Yang et al. [219] introduced the Depth Motion Map (DMM) technique, which allows for the projection and compression of the spatio-temporal depth structure from different viewpoints, including the side, front, and upper views. This process results in the formation of three distinct motion history maps. To represent these motion history maps, the authors employed the HOG feature. Instead of using HOG, Chen et al. [111] employed local features to describe human activities based on Dynamic Motion Models (DMMs). Additionally, Chen et al. [220] introduced a spatio-temporal depth layout across frontal, lateral, and upper orientations. Departing from depth compression methods, they extracted motion trajectory shapes and boundary histogram features from spatio-temporal interest points, leveraging dense sampling and joint points in each perspective to depict actions. Moreover, Miao et al. [221] applied the discrete cosine variation technique for the effective compression of depth maps. Simultaneously, they generated action features by utilizing transform coefficients. From the available depth data, it is possible to estimate the structure of the human skeleton promptly and precisely. Shotton et al. [222] proposed a method for the real-time estimation of body postures from depth images, thereby facilitating the rapid segmentation of humans based on depth. Within this context, the problem of detecting joints has been simplified to a per-pixel classification task. Additionally, there is ongoing research in the field of HAR that employs depth data and focuses on methods utilizing the human skeleton. These approaches analyze changes in the joint points of the human body across consecutive video frames to characterize actions, encompassing alterations in both the position and appearance of the joint points. Xia et al. [223] proposed a three-dimensional joint point histogram as a means to depict the human pose and subsequently formulated the action using a discrete hidden Markov model. Keceli et al. [224] captured depth and human skeleton information via employment of the Kinect sensor, and subsequently derived human action features by assessing angle and displacement information regarding the skeleton joint points. Similarly, Yang et al. [21] developed a method based on the EigenJoints, which leverages an Accumulative Motion Energy (AME) function to identify video frames and joint points that offer richer information for action modeling. Pazhoumand et al. [225] utilized the longest common subsequence method to select distinctive features with high discriminatory power from the skeleton’s relative motion trajectories, thereby providing a comprehensive description of the corresponding action.

Handcrafted features offer high interpretability and simplicity and are straight-forward to use. However, the handcrafted feature-based method requires prior knowledge, which is difficult to generalize.

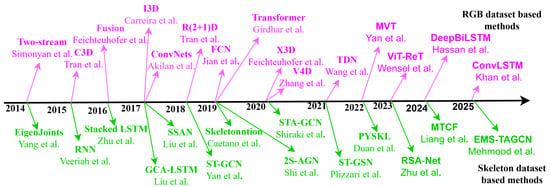

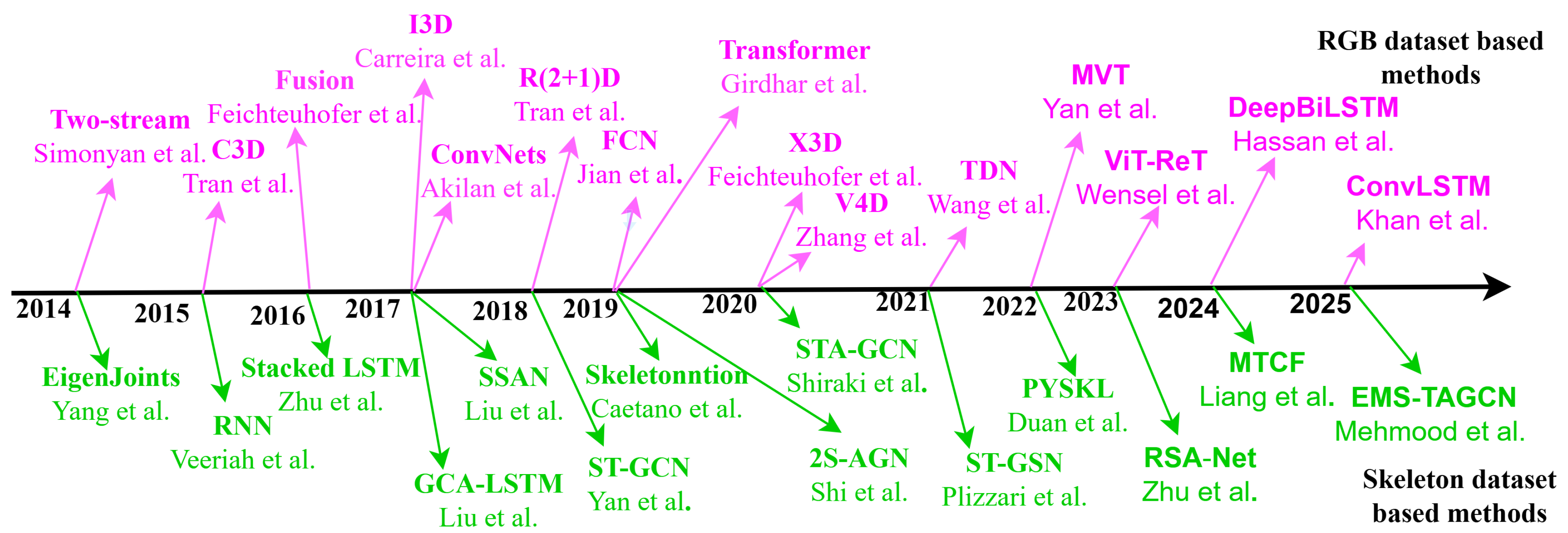

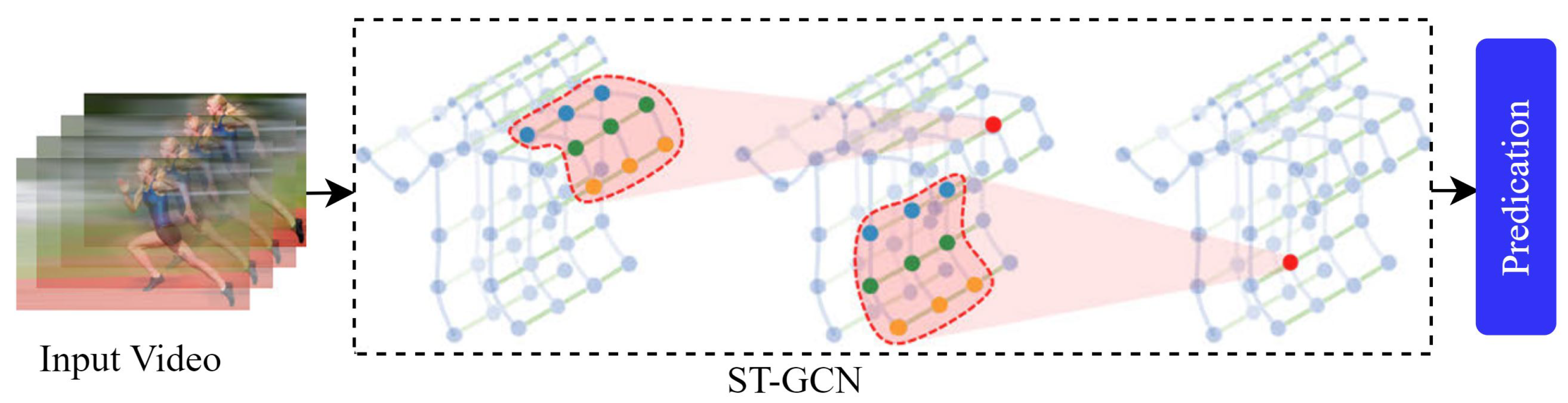

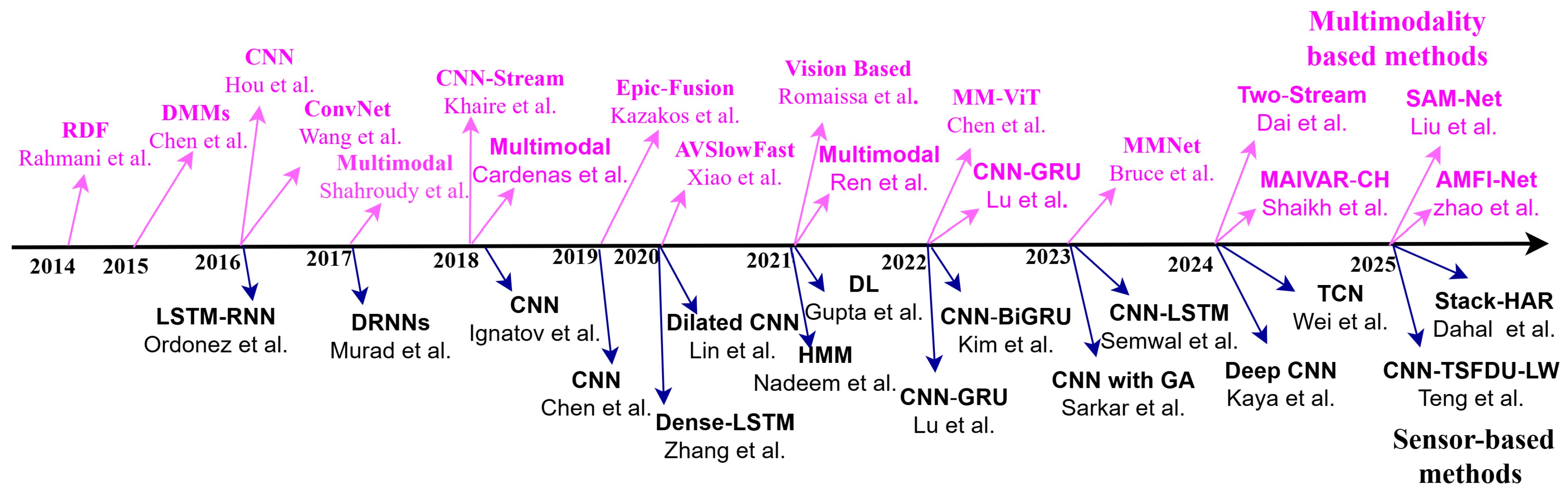

4.4. End-to-End Deep Learning-Based Approach

Recently, there has been a growing acknowledgment in HAR of the advantages of integrating skeleton data with DL-based techniques. The handcrafted features have reduced discriminative capability for HAR; conversely, to extract features efficiently, the utilization of methods based on DL necessitates a substantial quantity of training data. Figure 9 demonstrates the year-wise end-to-end DL-based method developed by various researchers for RGB and skeleton-based HAR systems. As shown, several notable models leveraging Recurrent Neural Networks (RNNs), CNN, and GCN have been developed.

4.4.1. CNN-Based Methods

Skeleton data combined with ML methods provide efficient action recognition capabilities. Zhang et al. [226] utilized the Kinect sensor to capture skeletal representations, enabling the recognition of actions based on body part movements. Skeleton data paired with CNNs offer robust action recognition. As a result, in the work of Wang et al. [47], an advantage is found in combining handcrafted and DL-based features through the use of an enhanced trajectory. Additionally, the Trajectory-pooled Deep-Convolutional Descriptor (TpDD), also referred to as Two-stream ConvNets, is employed. The construction of an effective descriptor is achieved through the learning of multi-scale convolutional feature maps within a deep architecture. Ding et al. [227] developed a CNN-based model to extract high-level effective semantic features from RGB textured images obtained from using skeletal data. However, these methodologies have a significant amount of preprocessing steps and a chance to miss some effective information. Caetano et al. suggested SkeleMotion [228], which offers a novel skeleton image representation as an alternative input for neural networks to address these issues. Researchers have explored solutions to the challenge of long-time dependence, especially considering that CNNs do not extract long-distance motion information. To overcome this issue, Liu et al. [229] suggested a Subsequence Attention Network (SSAN) to improve the capture of long-term features. This network, combined with 3DCNN, uses skeleton data to record long-term features more effectively. Sun et al. [216] proposed a network for encoding 3D skeletal joint data into grayscale images and classifying human activities using a three-channel ResNet34-based fusion network, while noting potential limitations in handling occlusions and multi-person scenarios due to reliance on unobstructed single-person video inputs.

4.4.2. RNN-LSTM-Based Methods

Approaches relying on Recurrent Neural Networks (RNNs) with LSTM [230,231] have garnered considerable popularity as a predominant DL methodology for skeleton-based action recognition. Moreover, these approaches have demonstrated exceptional proficiency in accomplishing video-based action recognition tasks [91,149,195,196,232,233]. The spatio-temporal patterns of skeletons exhibit temporal evolutions. Consequently, these patterns can be effectively represented by memory cells within the structure of RNN-LSTM models, as proposed by [230]. In a similar way, Du et al. [232] introduced a hierarchical RNN approach to capture the long-term contextual information of skeletal data. This involved dividing the human skeleton into five distinct parts based on its physical structure. Subsequently, each lower-level part was represented using an RNN, and these representations were then integrated to form the final representation of higher-level parts, which facilitated action classification. The problem related to gradient explosion and vanishing gradients occurs if the sequences are too long for actual training. To overcome this issue, Li et al. [234] suggested an Independent Recurrent Neural Network (IndRNN) to regulate gradient backpropagation over time, allowing the network to capture long-term dependencies. Shahroudy et al. [91] introduced a model for human action learning using a part-aware LSTM. This model involves splitting the long-term memory of the entire motion into part-based cells and independently learning the long-term context of each body part. The network’s output is then formed by combining the independent body part context information. Liu et al. [149] presented a spatio-temporal LSTM network named ST-LSTM, aimed at 3D action recognition from skeletal data. They proposed a technique called skeleton-based tree traversal to feed the structure of the skeletal data into a sequential LSTM network and improved the performance of ST-LSTM by incorporating additional trust gates. In their recent work, Liu et al. [233] directed their attention towards the selection of the most informative joints in the skeleton by employing a novel type of LSTM network called Global Context-Aware Attention (GCA-LSTM) to recognize actions based on 3D skeleton data. Two layers of LSTM were utilized in his study. The initial layer encoded the input sequences and produced a global context memory for these sequences. Simultaneously, the second layer carried out attention mechanisms over the input sequences with the support of the acquired global context memory. The resulting attention representation was subsequently employed to refine the global context. Numerous iterations of attention mechanisms were conducted, and the final global contextual information was employed in the task of action classification. Compared to the methodologies based on hand-crafted designed local features, the RNN-LSTM methodologies and their variations have demonstrated superior performance in the recognition of actions. Nevertheless, these methodologies tend to excessively emphasize the temporal information while neglecting the spatial information of skeletons [91,149,195,215,232,233]. RNN-LSTM methodologies continue to face difficulties in dealing with the intricate spatio-temporal variations of skeletal movements due to multiple issues, such as jitters and variability in movement speed. Another drawback of the RNN-LSTM networks [230,231] is their sole focus on modeling the overall temporal dynamics of actions, disregarding the detailed temporal dynamics. To address these limitations, in this investigation a CNN-based methodology can extract discriminative characteristics of actions and model the various temporal dynamics of skeleton sequences via the suggested Enhanced-SPMF representation, encompassing short-term, medium-term, and long-term actions.

4.4.3. GNN or GCN-Based Methods

Graph Convolutional Neural Networks (GCNNs) are powerful DL-based methods designed to perform with non-Euclidean data. Unlike traditional CNNs and RNNs, which perform well with Euclidean data (such as images, text, and speech), they are unable to perform with non-Euclidean data [235,236,237,238,239,240]. The GCN was first introduced by Gori et al. [241] in 2005 to handle graph data. GCNNs with skeleton data enable spatial dependencies to be captured for accurate action recognition. The human skeleton data, consisting of joint points and skeletal lines, can be viewed as non-Euclidean graph data. Therefore, GCNs are particularly suited for learning from such data. There are two main branches of GCNs: Spectral GCN and Spatial GCN.

4.4.4. Spectral GCN-Based Methods

Using and leveraging both eigenvalues and eigenvectors of the Graph Laplacian Matrix (GLM) to convert graph data from the temporal to the spatial domain [242], this model is not computationally efficient. To address this issue, Kipf et al. [243] enhanced the spectral GCN approach by allowing the filter operation of only one neighbor node to reduce the computational cost. While spectral GCNs have shown effectiveness in HAR tasks, their computational cost poses challenges when dealing with graphs.

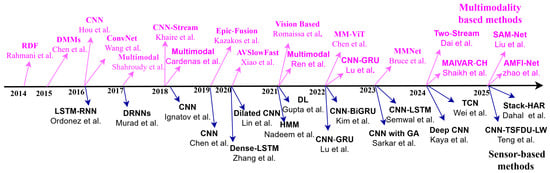

4.4.5. Spatial GCN-Based Methods