Enhancing Data Collection Through Optimization of Laser Line Triangulation Sensor Settings and Positioning

Abstract

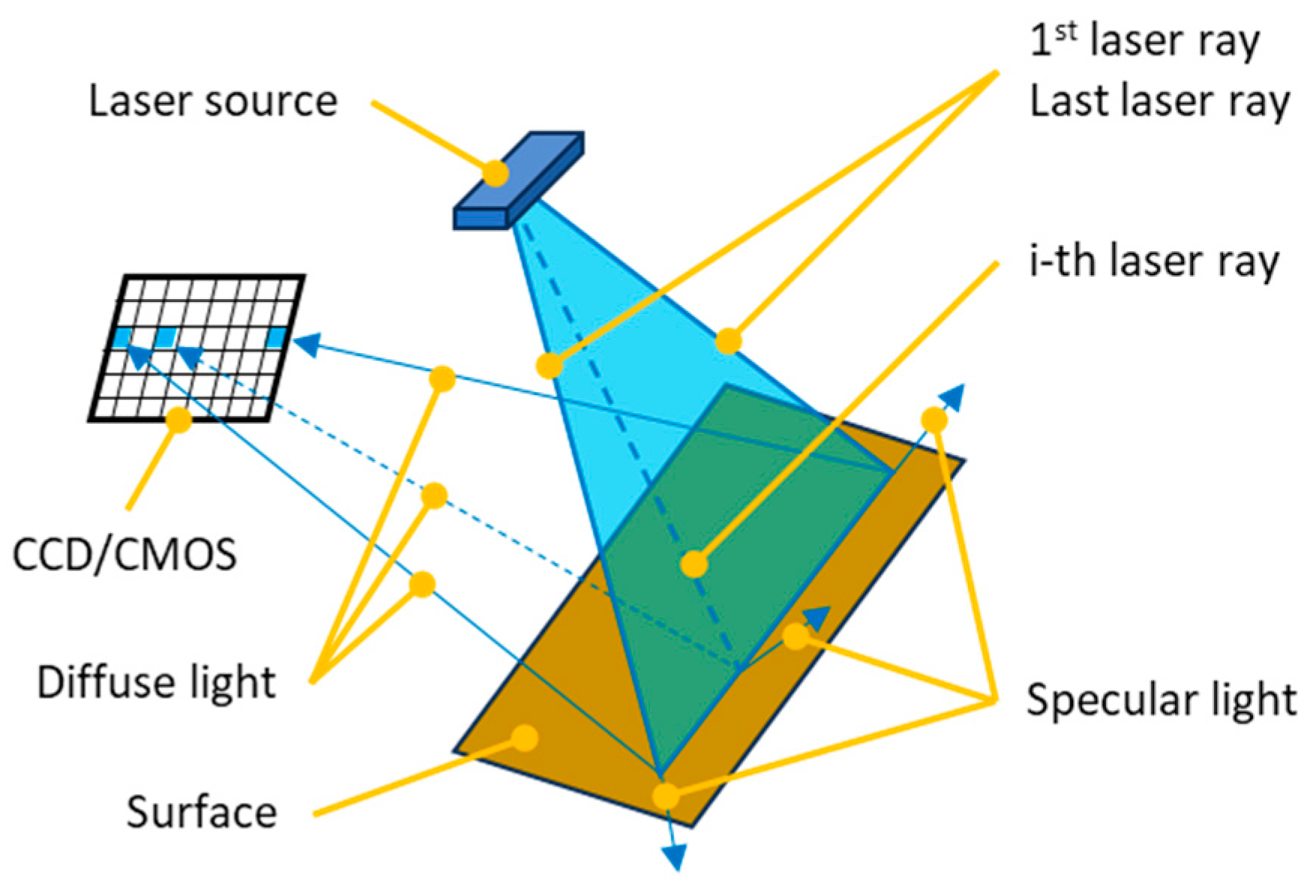

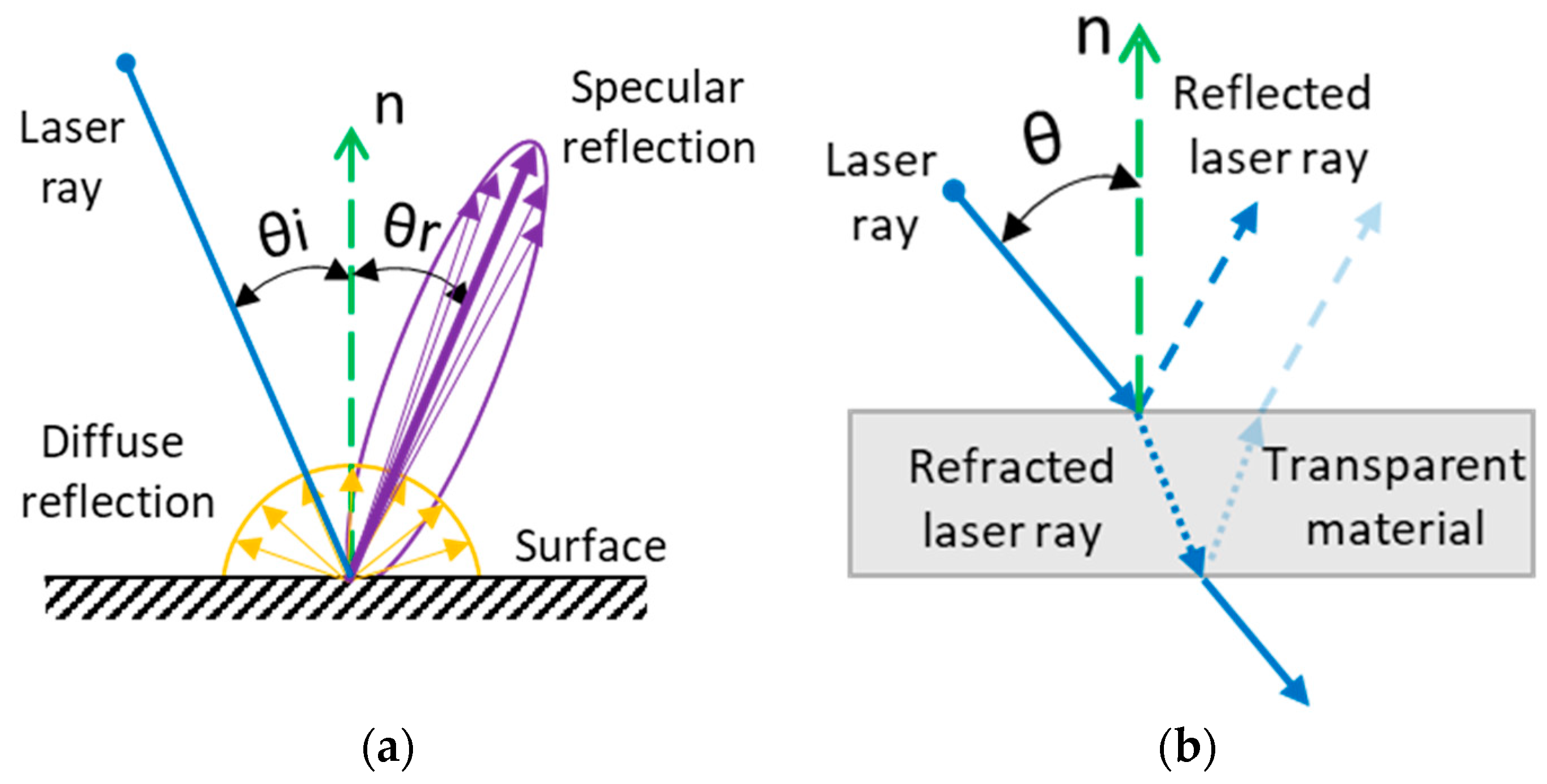

1. Introduction

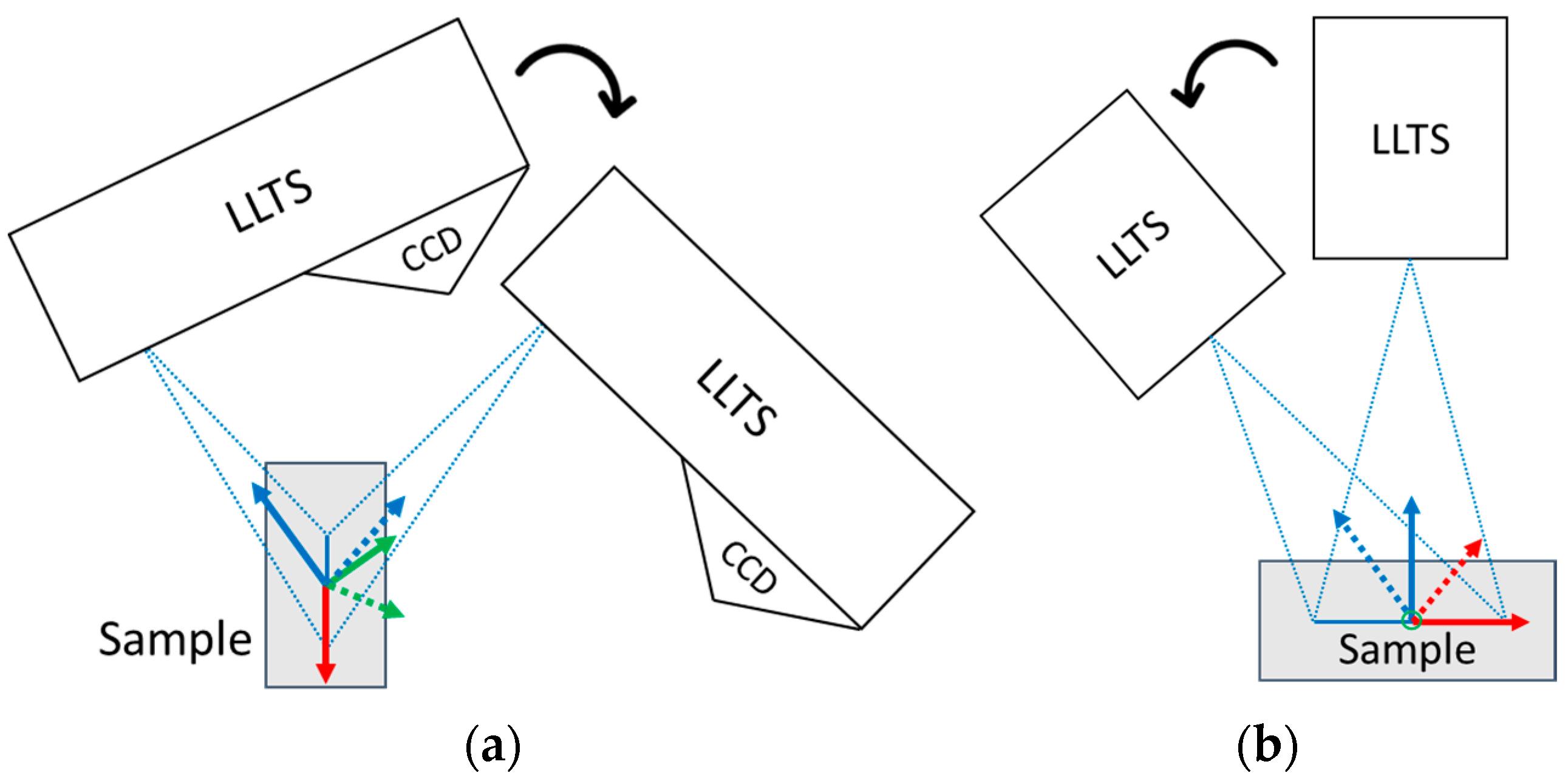

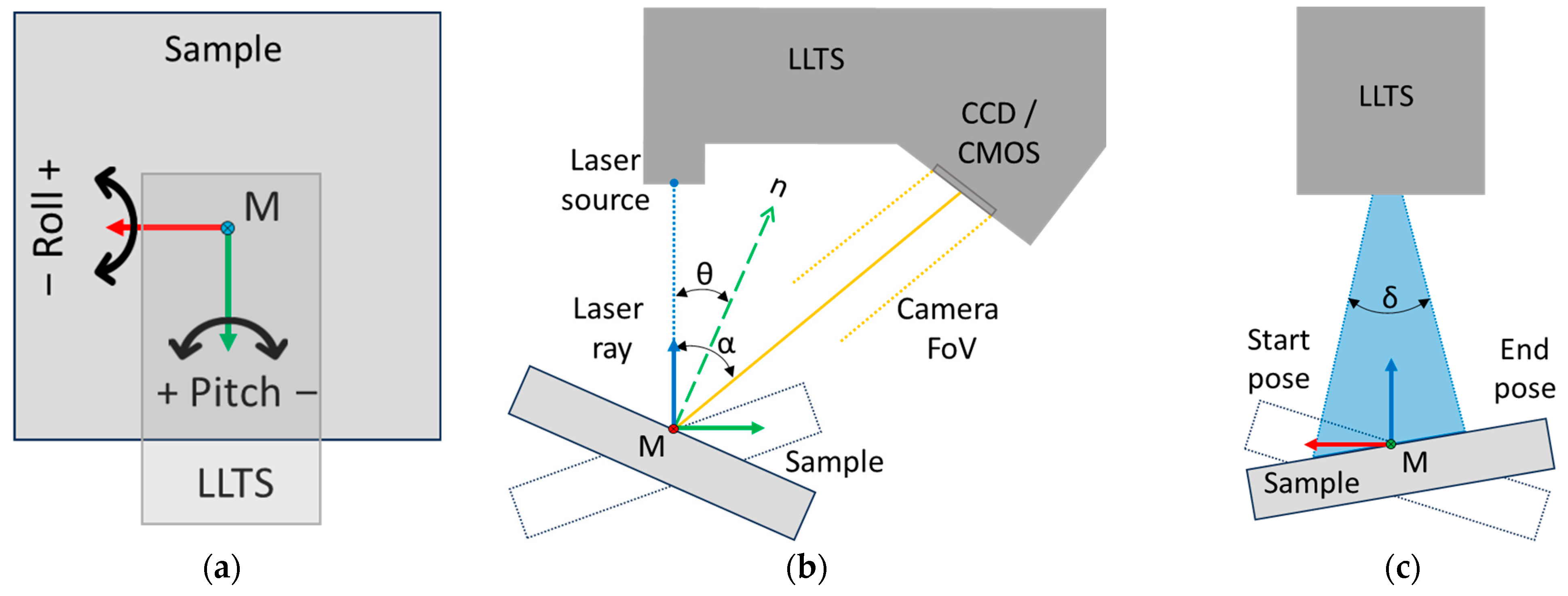

2. Methodology

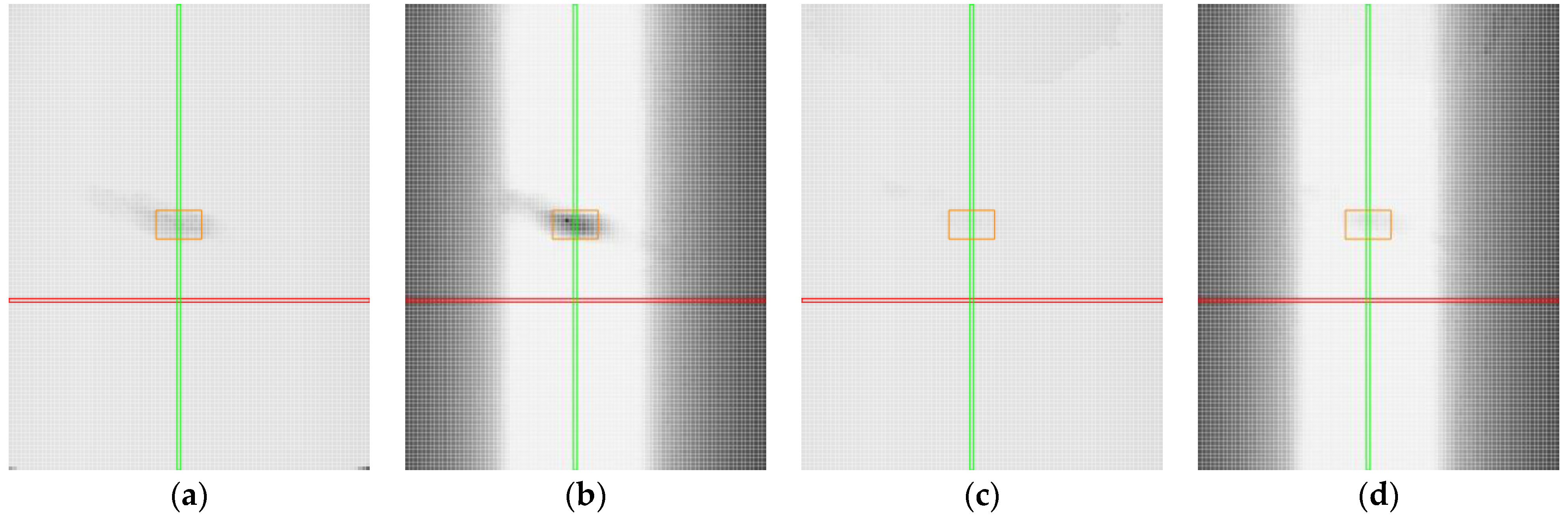

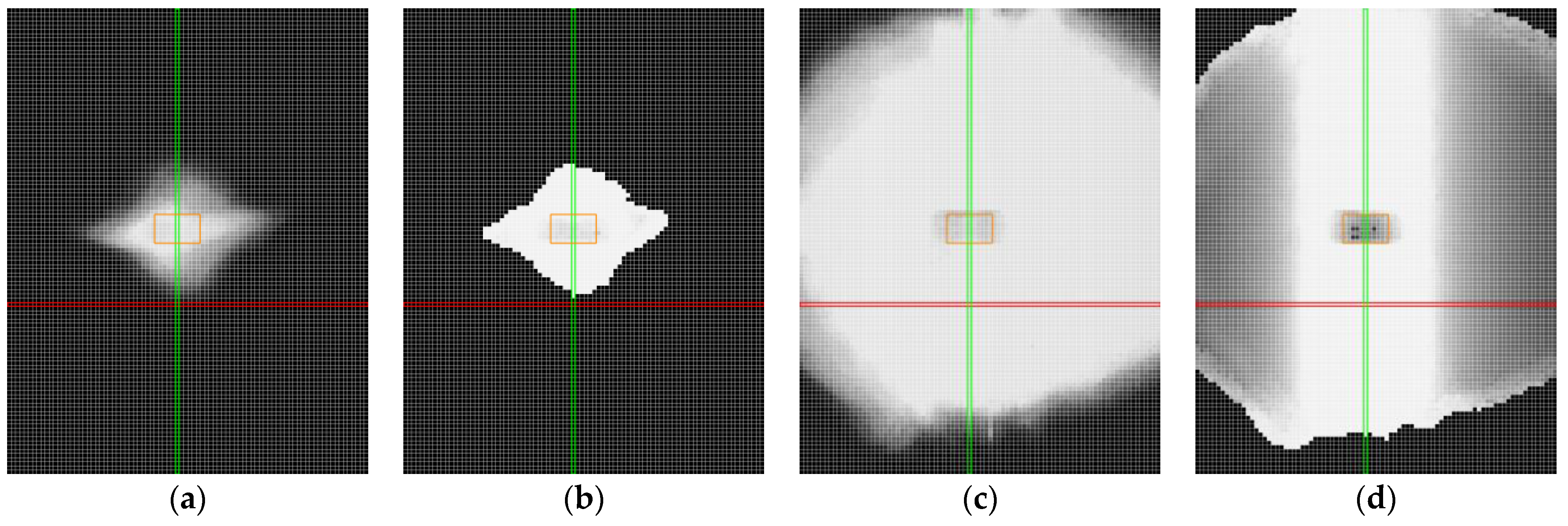

3. Experiment Setup and Data Collection

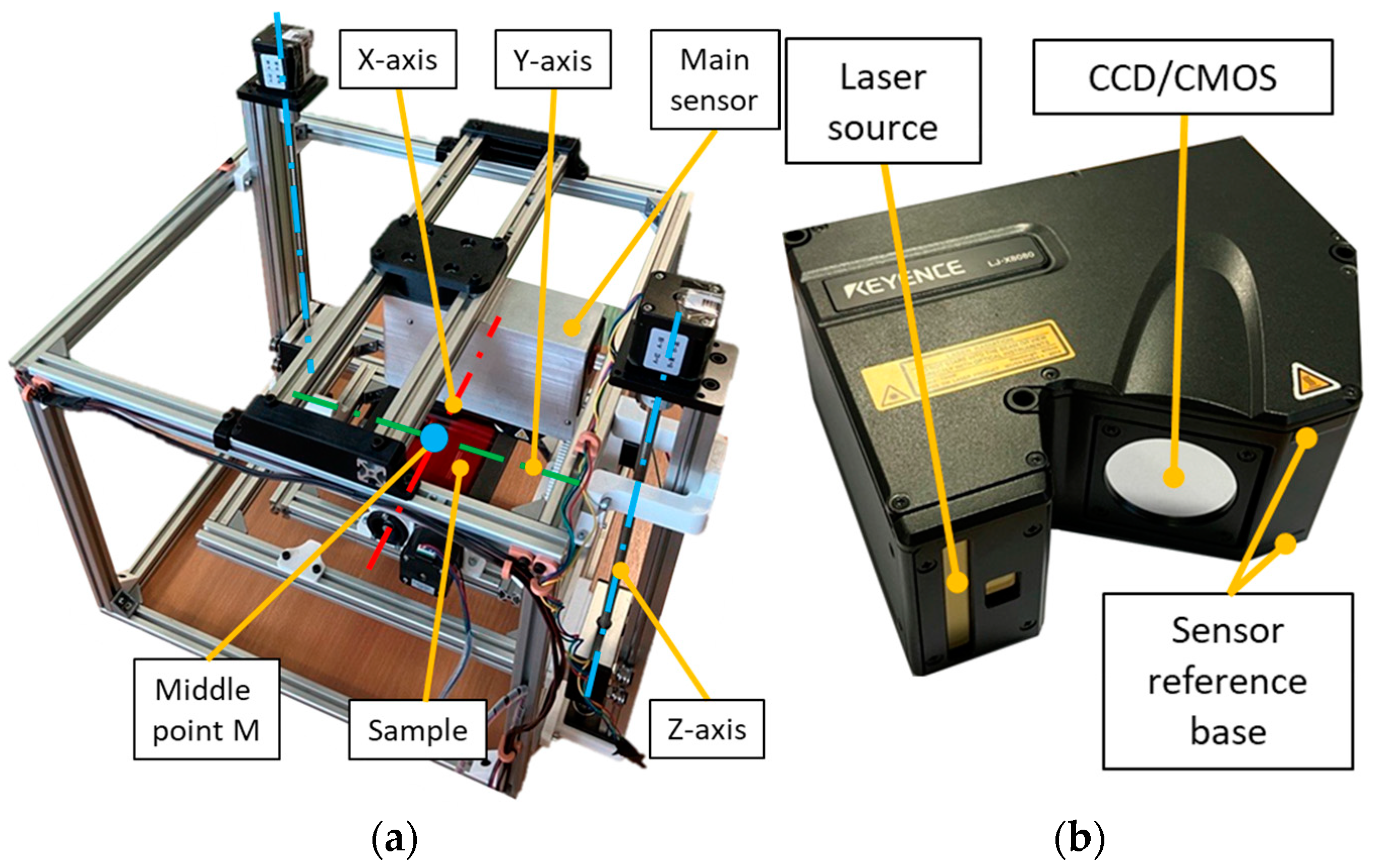

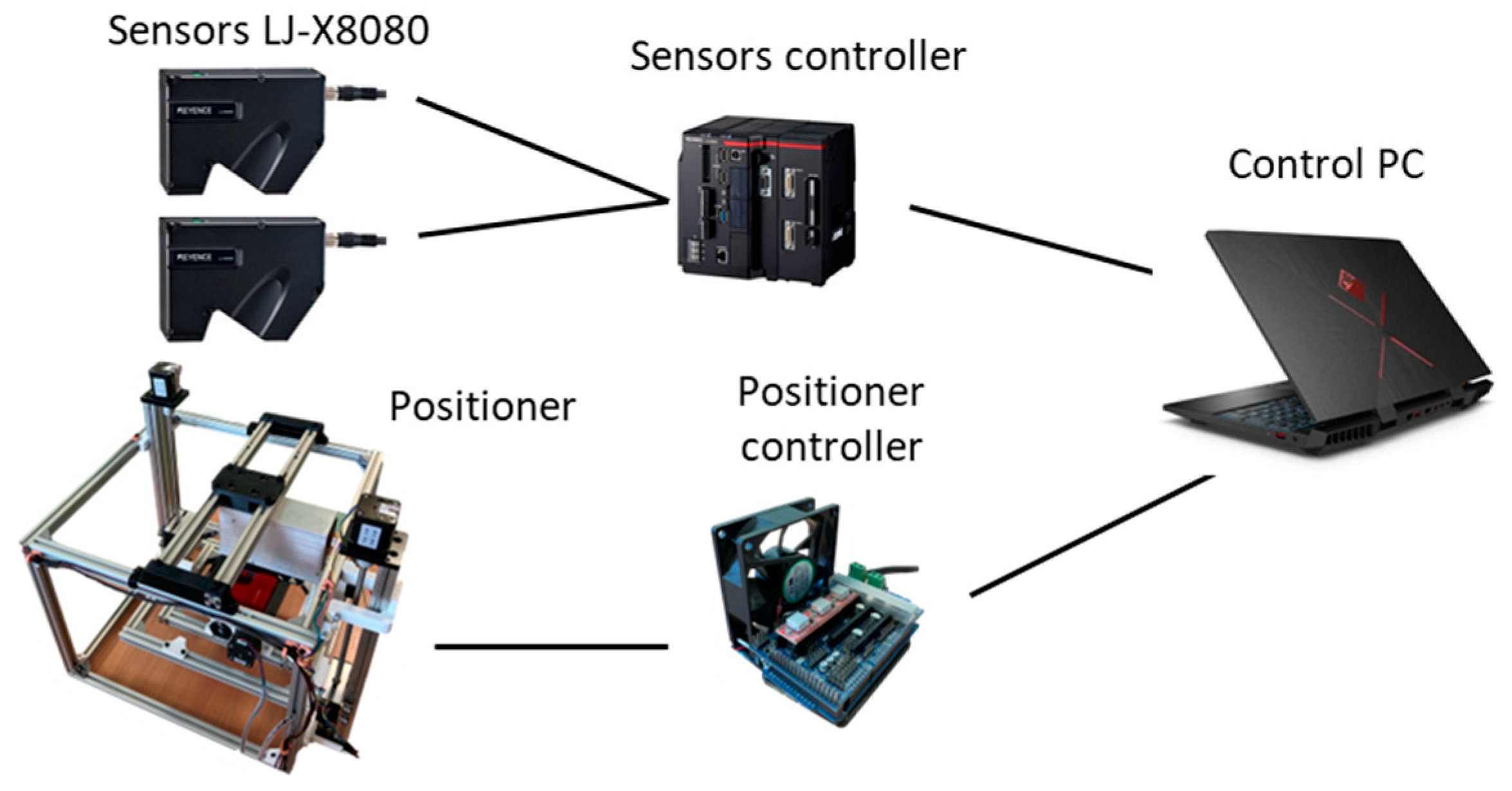

3.1. Experimental Workplace

3.2. Data Collection

Algorithm 1. The measurement process.

    for i = −40 to 45          // i represents the pitch angle (rotation around the Y-axis)
        SetPitchPose(i)
        for j = −70 to 40      // j represents the roll angle (rotation around the X-axis)
            SetRollPose(j)
            WaitForStabilization()
            CollectProfileData()
        end for
    end for
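The nested sweep in Algorithm 1 can be sketched in Python as follows. This is only an illustration: the four callables stand in for the robot and sensor interfaces, which are not described here, and the 1° step is an assumption, since Algorithm 1 states only the start and end angles.

```python
from typing import Callable, List, Tuple

PITCH_RANGE = (-40, 45)  # degrees, rotation around the Y-axis (from Algorithm 1)
ROLL_RANGE = (-70, 40)   # degrees, rotation around the X-axis (from Algorithm 1)


def run_measurement(
    set_pitch_pose: Callable[[int], None],      # hypothetical robot call
    set_roll_pose: Callable[[int], None],       # hypothetical robot call
    wait_for_stabilization: Callable[[], None],  # hypothetical settle delay
    collect_profile_data: Callable[[], object],  # hypothetical sensor read
    step_deg: int = 1,  # assumption: the step size is not stated in Algorithm 1
) -> List[Tuple[int, int, object]]:
    """Visit every (pitch, roll) pose and record one profile per pose."""
    results = []
    for pitch in range(PITCH_RANGE[0], PITCH_RANGE[1] + 1, step_deg):
        set_pitch_pose(pitch)
        for roll in range(ROLL_RANGE[0], ROLL_RANGE[1] + 1, step_deg):
            set_roll_pose(roll)
            wait_for_stabilization()
            results.append((pitch, roll, collect_profile_data()))
    return results


if __name__ == "__main__":
    # Dry run with stand-in callables; no hardware is touched.
    poses = run_measurement(lambda p: None, lambda r: None, lambda: None, lambda: None)
    print(len(poses))  # number of poses visited with the assumed 1-degree step
```

Passing the hardware calls in as parameters keeps the loop testable without a robot or sensor attached.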

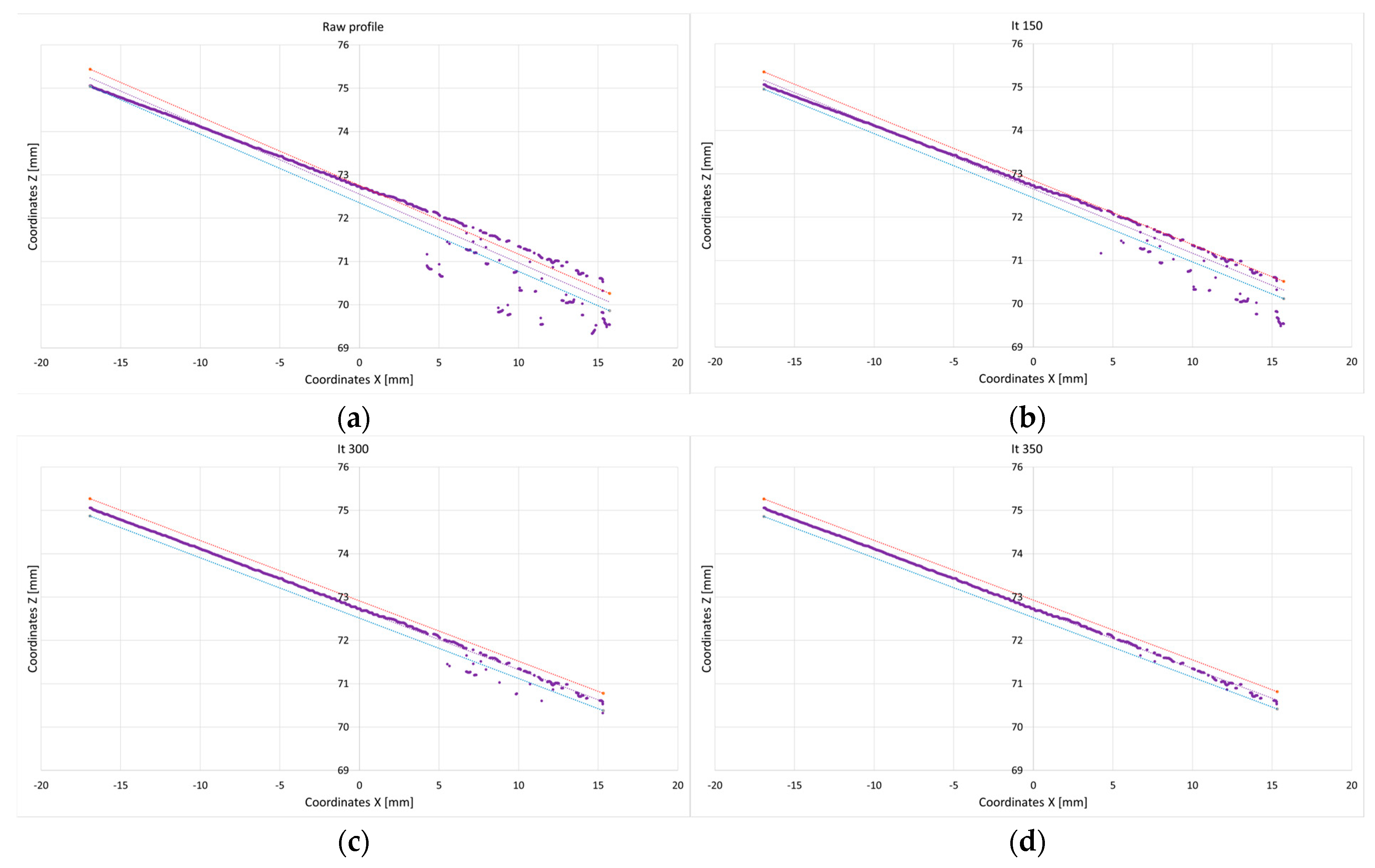

4. Experimental Results

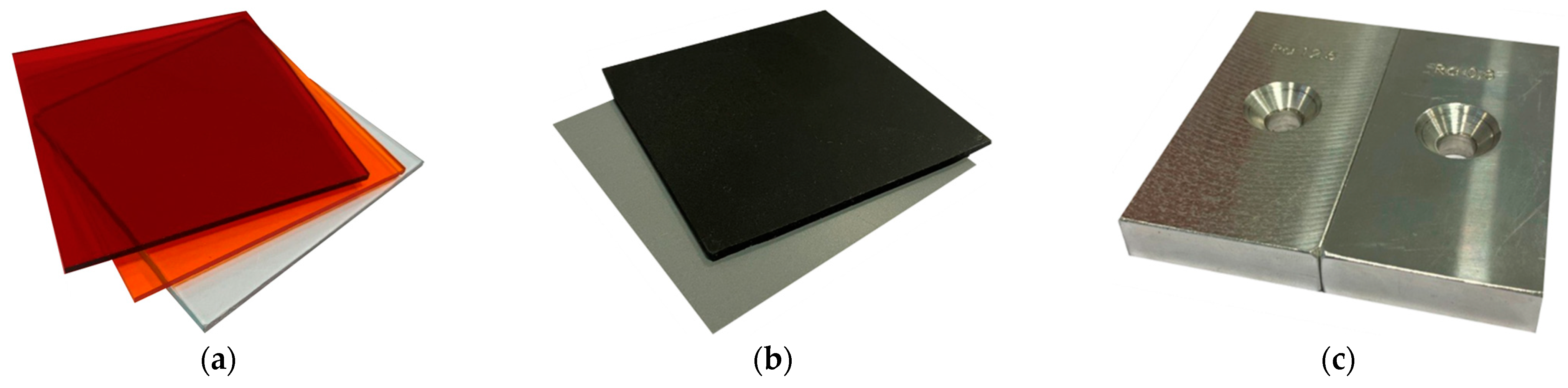

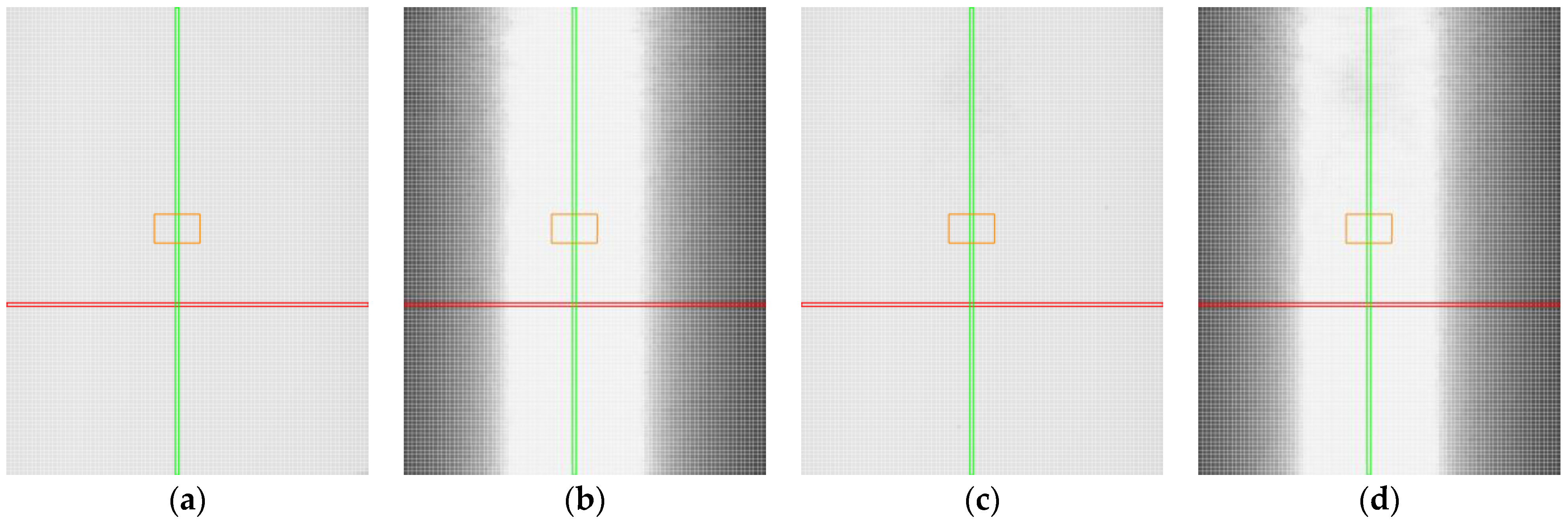

4.1. Non-Transparent Plastics

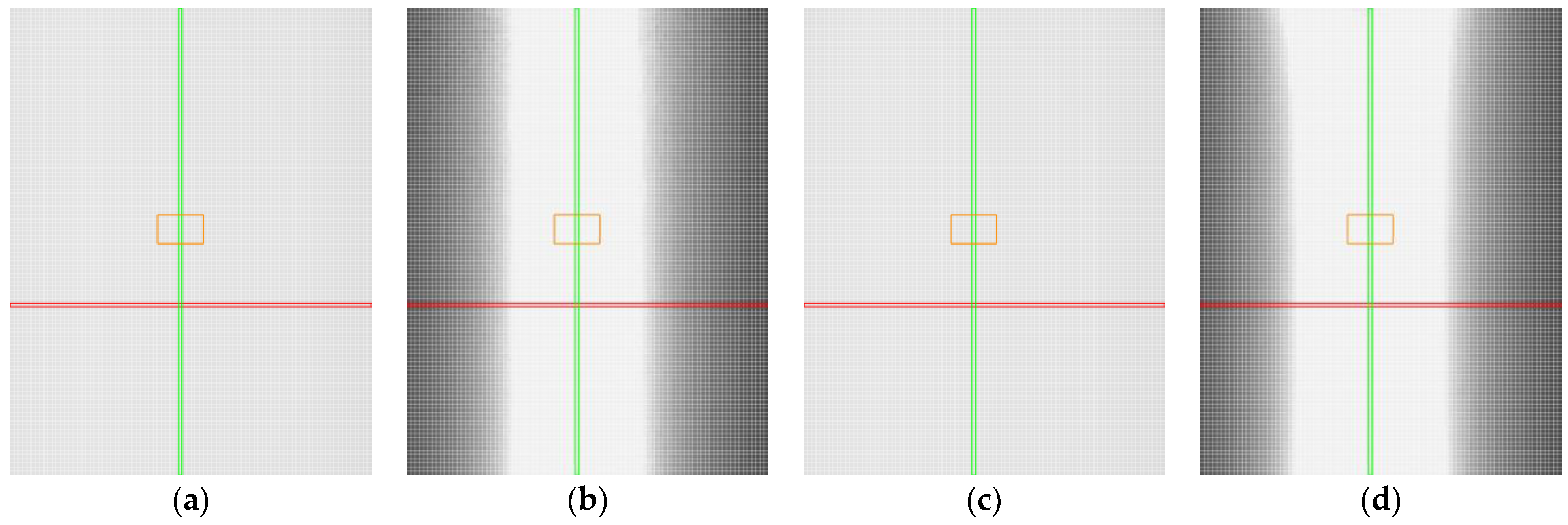

4.2. Aluminium Alloys

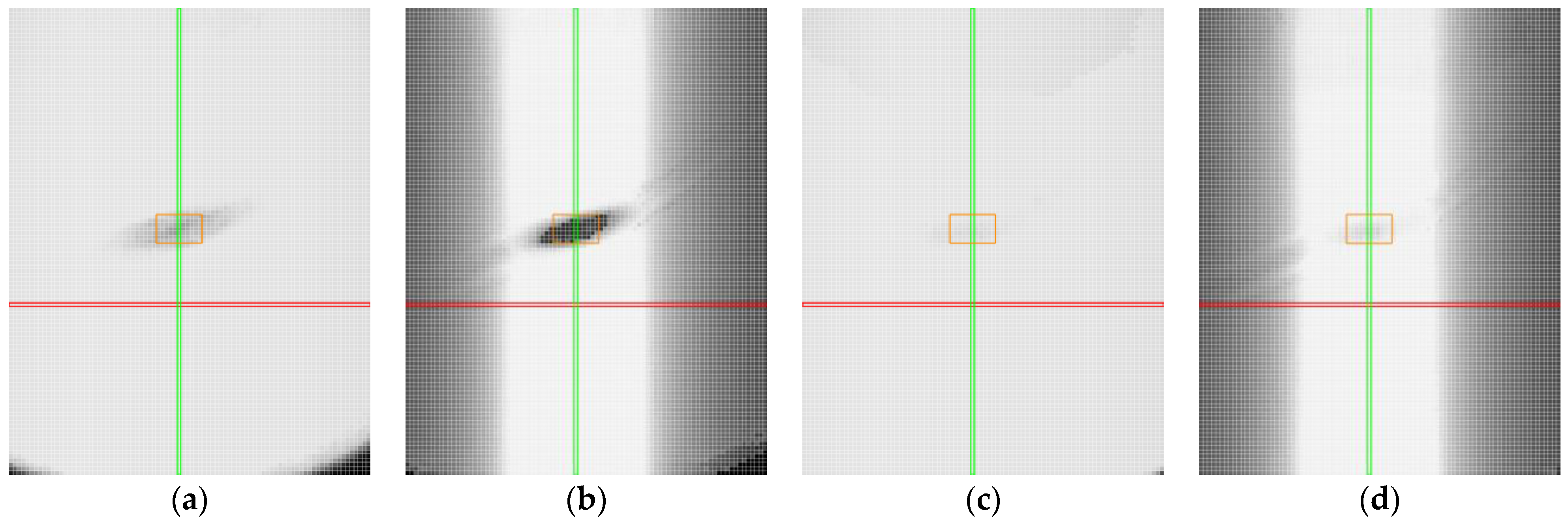

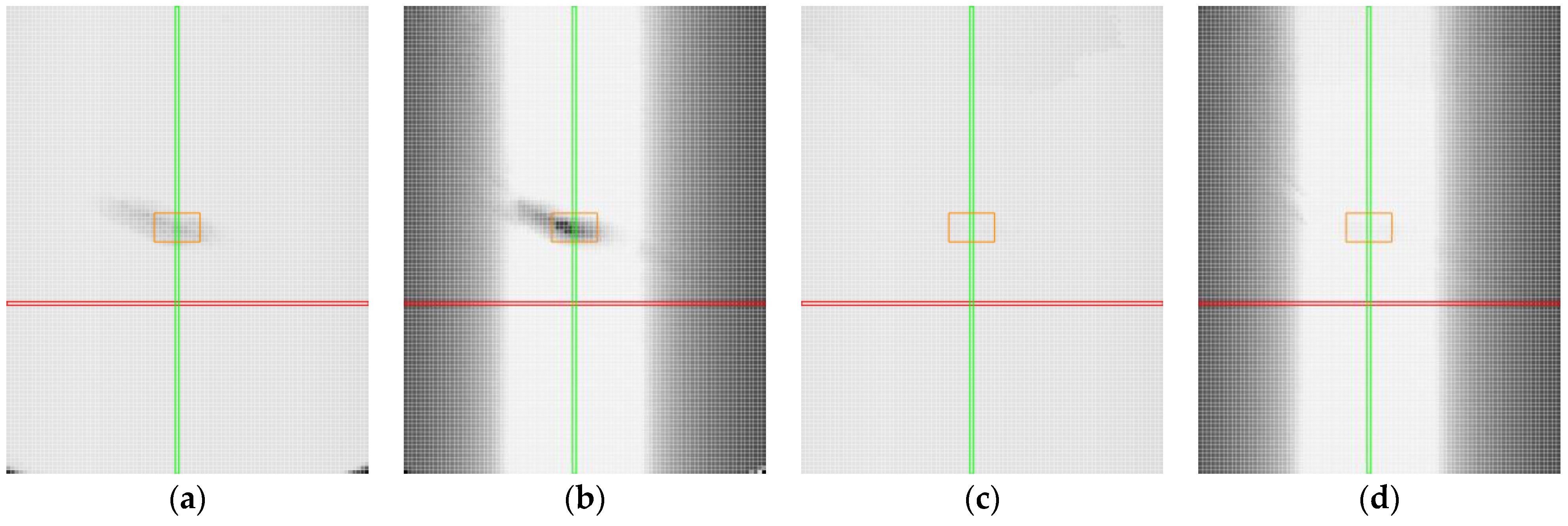

4.3. Transparent Plastics

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Specification |
|---|---|
| Reference distance (Z-axis) | 73 mm |
| Measuring range (Z-axis) | ±20.5 mm (full scale = 41 mm) |
| Measuring range (X-axis) | 30 mm (near side); 35 mm (reference distance); 39 mm (far side) |
| Linearity (Z-axis) | ±0.03% of the full scale |
| Profile data count | 3200 points |
| Laser type | Blue laser |
| Laser source | 10 mW |
| Laser wavelength | 405 nm (visible light) |
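To make the specification values more concrete, the short sketch below converts the relative linearity into millimetres and estimates a nominal point spacing along the X-axis. The spacing figure assumes that the 3200 profile points are spread evenly over the stated X-axis range, which the specification table does not confirm; the function names are illustrative only.

```python
FULL_SCALE_Z_MM = 41.0            # Z-axis full scale from the specification table
LINEARITY_FRACTION = 0.03 / 100   # ±0.03% of the full scale
PROFILE_POINTS = 3200             # profile data count
X_RANGE_MM = {"near": 30.0, "reference": 35.0, "far": 39.0}


def linearity_band_mm() -> float:
    """Half-width of the Z-axis linearity band in millimetres."""
    return FULL_SCALE_Z_MM * LINEARITY_FRACTION


def nominal_x_spacing_mm(x_range_mm: float) -> float:
    """Nominal spacing between profile points.

    Assumption: points are evenly distributed over the X-axis range.
    """
    return x_range_mm / PROFILE_POINTS


if __name__ == "__main__":
    print(f"Z linearity: ±{linearity_band_mm():.4f} mm")
    for position, width in X_RANGE_MM.items():
        print(f"{position}: {nominal_x_spacing_mm(width) * 1000:.2f} µm per point")
```

Under these assumptions the linearity band is about ±0.0123 mm and the nominal spacing at the reference distance is about 0.011 mm.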

| Material | Width [mm] | Height [mm] | Thickness [mm] | Roughness |
|---|---|---|---|---|
| Non-transparent plastics | 100 | 100 | 3 | Ra 1.2 |
| Coloured transparent plastics | 100 | 100 | 3 | Ra 0.01–0.04 |
| Pure transparent plastic | 100 | 100 | 5 | Ra 0.01–0.04 |
| Aluminium alloy | 40 | 80 | 12 | Ra 0.8; Ra 1.6; Ra 3.2; Ra 6.3; Ra 12.5 |

| Parameter | Description | Value |
|---|---|---|
| Dynamic range | It specifies the light-receiving sensitivity range of the capture element in the sensor unit. For high precision, the dynamic range is lowered, and the peak is measured at high sensitivity. This is used for target objects that have a small difference in reflectance. | 1 to 9 |
| Exposure time | It sets the exposure time of the capture element in the sensor unit. It is the length of time in which the camera collects light from the sample. | 15 μs, 30 μs, 60 μs, 120 μs, 240 μs, 480 μs, 960 μs, 1700 μs, 9.6 ms |
| Detection sensitivity | It sets the threshold value for the received light quantity to be detected. Increasing this value makes it easier for a received light quantity to be detected. Reduce this value to prevent mis-detection due to ambient light or multiple reflected lights. | 1 to 5 |
| Laser power | Laser power in percentage. The maximum power is 10 mW. | 1–100% |
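The parameter ranges above lend themselves to a small validation helper. The following is a minimal sketch, not the sensor vendor's API: the class and field names are hypothetical, and only the numeric ranges and the 10 mW maximum come from the table.

```python
from dataclasses import dataclass

# Exposure times from the table, expressed in microseconds (9.6 ms = 9600 µs).
ALLOWED_EXPOSURE_US = (15, 30, 60, 120, 240, 480, 960, 1700, 9600)
MAX_LASER_POWER_MW = 10.0  # maximum laser power from the table


@dataclass(frozen=True)
class SensorSettings:
    """Hypothetical container for the four tunable parameters."""

    dynamic_range: int          # 1 to 9
    exposure_time_us: int       # one of ALLOWED_EXPOSURE_US
    detection_sensitivity: int  # 1 to 5
    laser_power_pct: int        # 1 to 100

    def __post_init__(self) -> None:
        if not 1 <= self.dynamic_range <= 9:
            raise ValueError("dynamic_range must be between 1 and 9")
        if self.exposure_time_us not in ALLOWED_EXPOSURE_US:
            raise ValueError("exposure_time_us is not a listed exposure time")
        if not 1 <= self.detection_sensitivity <= 5:
            raise ValueError("detection_sensitivity must be between 1 and 5")
        if not 1 <= self.laser_power_pct <= 100:
            raise ValueError("laser_power_pct must be between 1 and 100")

    @property
    def laser_power_mw(self) -> float:
        """Laser power in milliwatts, derived from the percentage setting."""
        return MAX_LASER_POWER_MW * self.laser_power_pct / 100


if __name__ == "__main__":
    settings = SensorSettings(
        dynamic_range=1, exposure_time_us=480,
        detection_sensitivity=3, laser_power_pct=40,
    )
    print(settings.laser_power_mw)
```

Rejecting out-of-range values at construction time means an invalid combination cannot reach a measurement run.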

| Parameter | Manual Settings | Automatic Settings: Aluminium Alloys and Grey Plastic | Automatic Settings: Black Plastic | Automatic Settings: Transparent Plastics |
|---|---|---|---|---|
| Dynamic range | 1 | 7 | 9 | 1 |
| Exposure time | 120 μs (transparent, roll, and pitch); 240 μs (transparent and roll); 480 μs (other materials) | 480 μs | 9.6 ms | 9.6 ms |
| Detection sensitivity | 3 | 3 | 3 | 3 |
| Laser power [%] | 100 (black plastic); 40 (aluminium alloys); 20 (grey and transparent plastics) | 1–100 (auto) | 1–100 (auto) | 1–100 (auto) |
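The automatic-settings columns of the table can be expressed as a simple lookup, sketched below. The dictionary keys and the function name are hypothetical labels chosen for this illustration; the values are copied from the table, with 9.6 ms written as 9600 µs. Laser power is omitted because it is regulated automatically in the range 1–100%.

```python
# Automatic-settings presets per material group (values taken from the table).
AUTO_PRESETS = {
    "aluminium_alloys_and_grey_plastic": {
        "dynamic_range": 7, "exposure_time_us": 480, "detection_sensitivity": 3,
    },
    "black_plastic": {
        "dynamic_range": 9, "exposure_time_us": 9600, "detection_sensitivity": 3,
    },
    "transparent_plastics": {
        "dynamic_range": 1, "exposure_time_us": 9600, "detection_sensitivity": 3,
    },
}


def auto_preset(material_group: str) -> dict:
    """Return a copy of the preset for a material group (hypothetical helper)."""
    try:
        return dict(AUTO_PRESETS[material_group])
    except KeyError:
        known = ", ".join(sorted(AUTO_PRESETS))
        raise ValueError(f"unknown material group; expected one of: {known}") from None


if __name__ == "__main__":
    print(auto_preset("black_plastic"))
```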

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Heczko, D.; Chlebek, J.; Mlotek, J.; Kot, T.; Scalera, L.; Dekan, M.; Zeman, Z.; Bobovský, Z. Enhancing Data Collection Through Optimization of Laser Line Triangulation Sensor Settings and Positioning. Sensors 2025, 25, 1772. https://doi.org/10.3390/s25061772