YOLO-BCD: A Lightweight Multi-Module Fusion Network for Real-Time Sheep Pose Estimation

Abstract

1. Introduction

2. Materials and Methods

2.1. Animal Pose Estimation

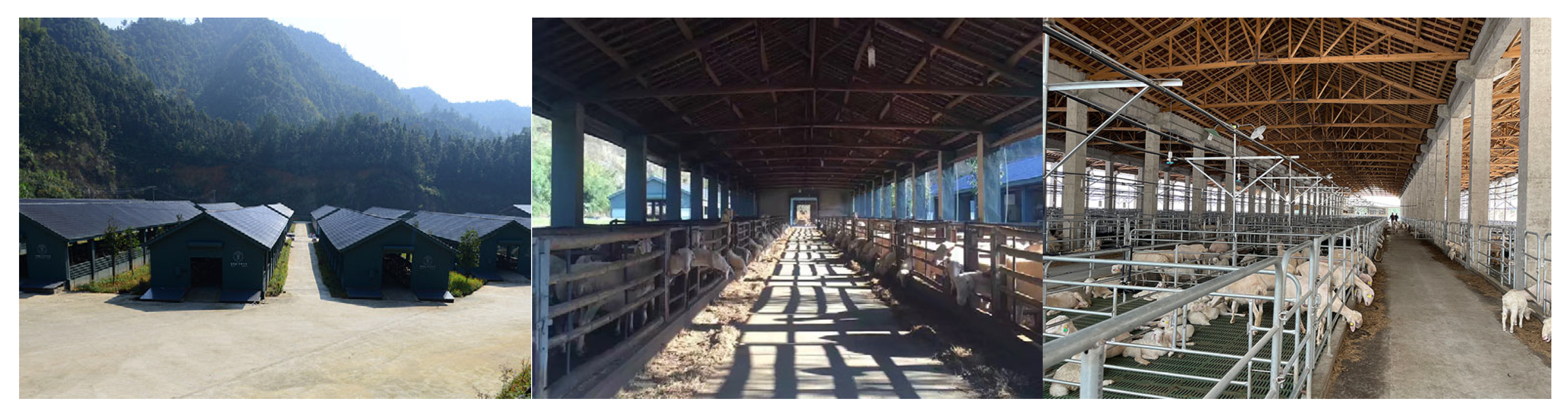

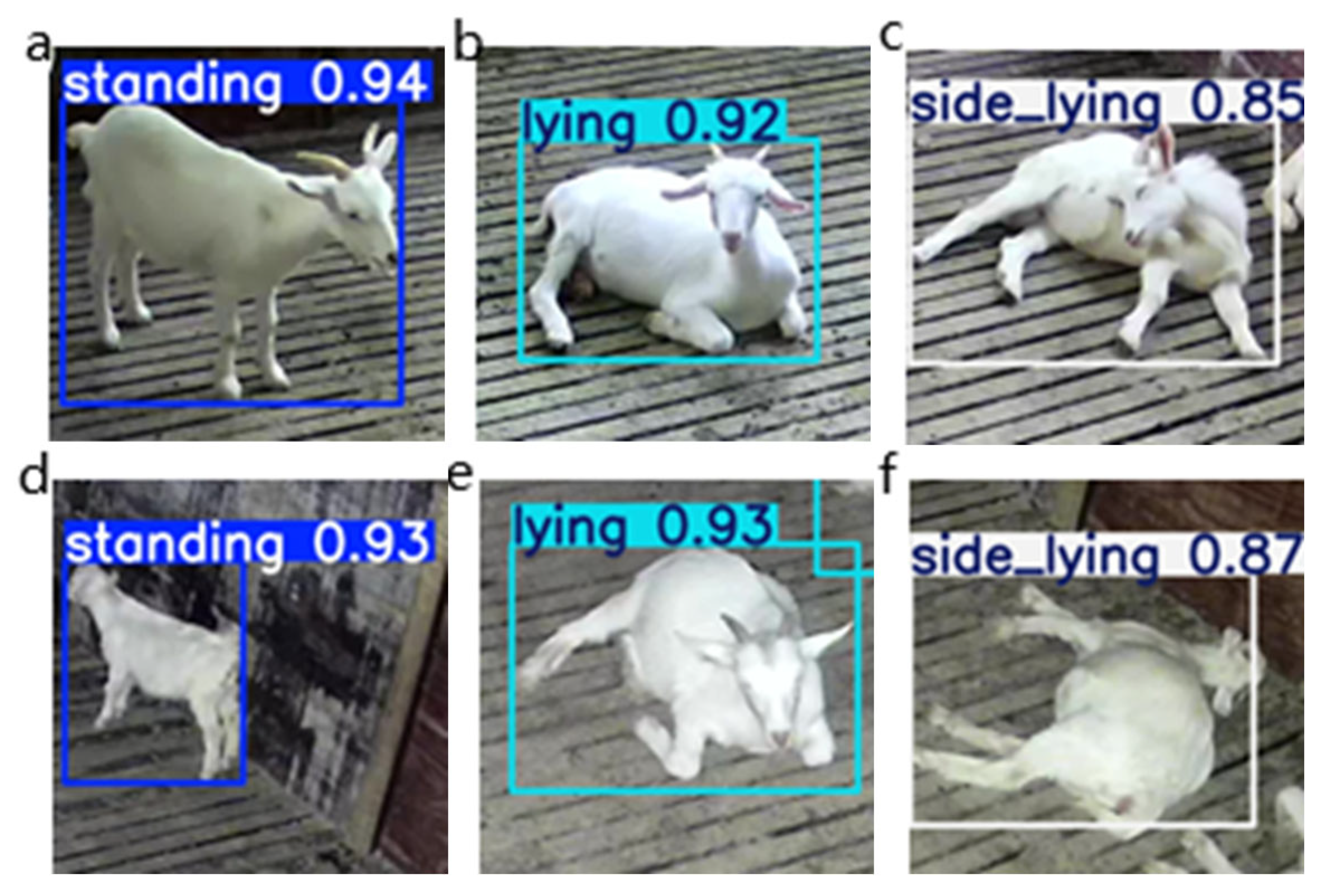

2.2. Dataset Construction

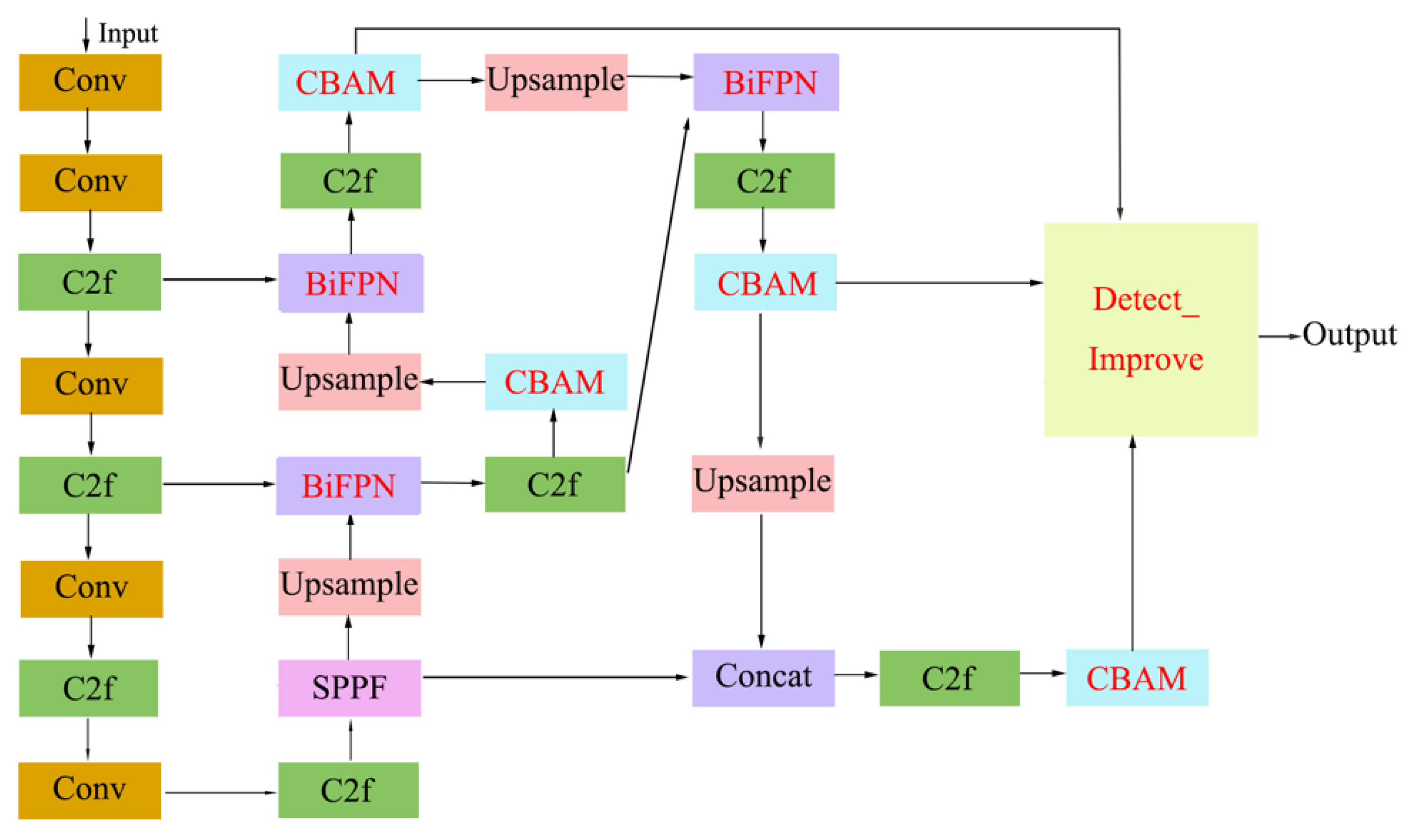

2.3. Detection Network

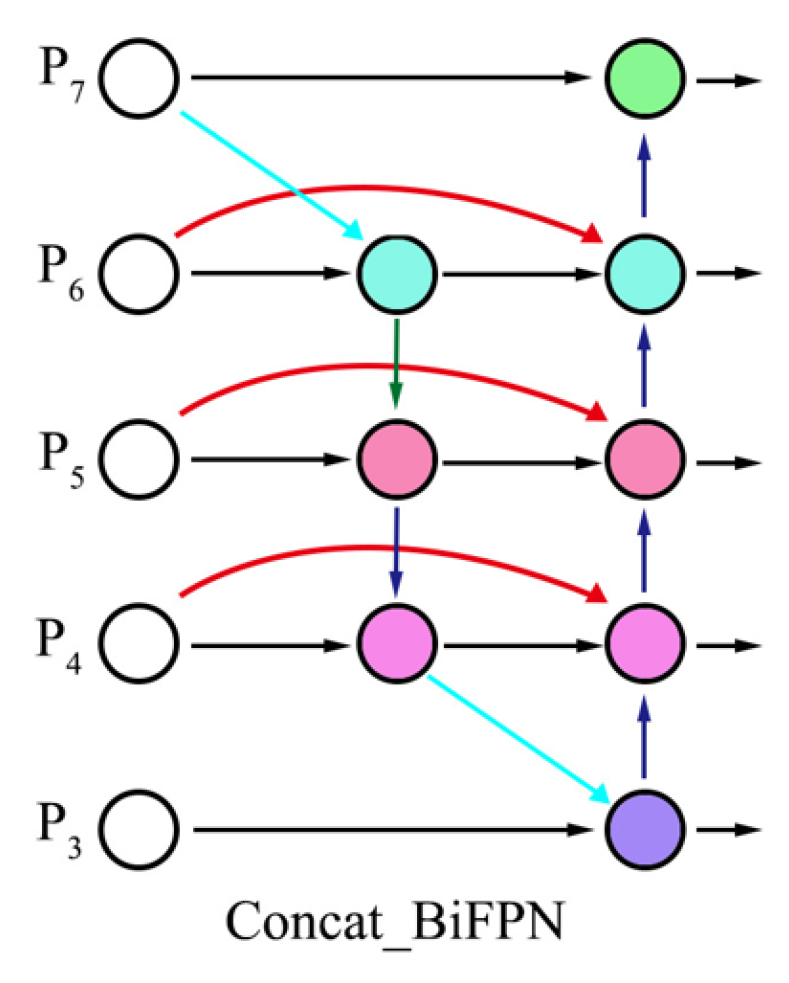

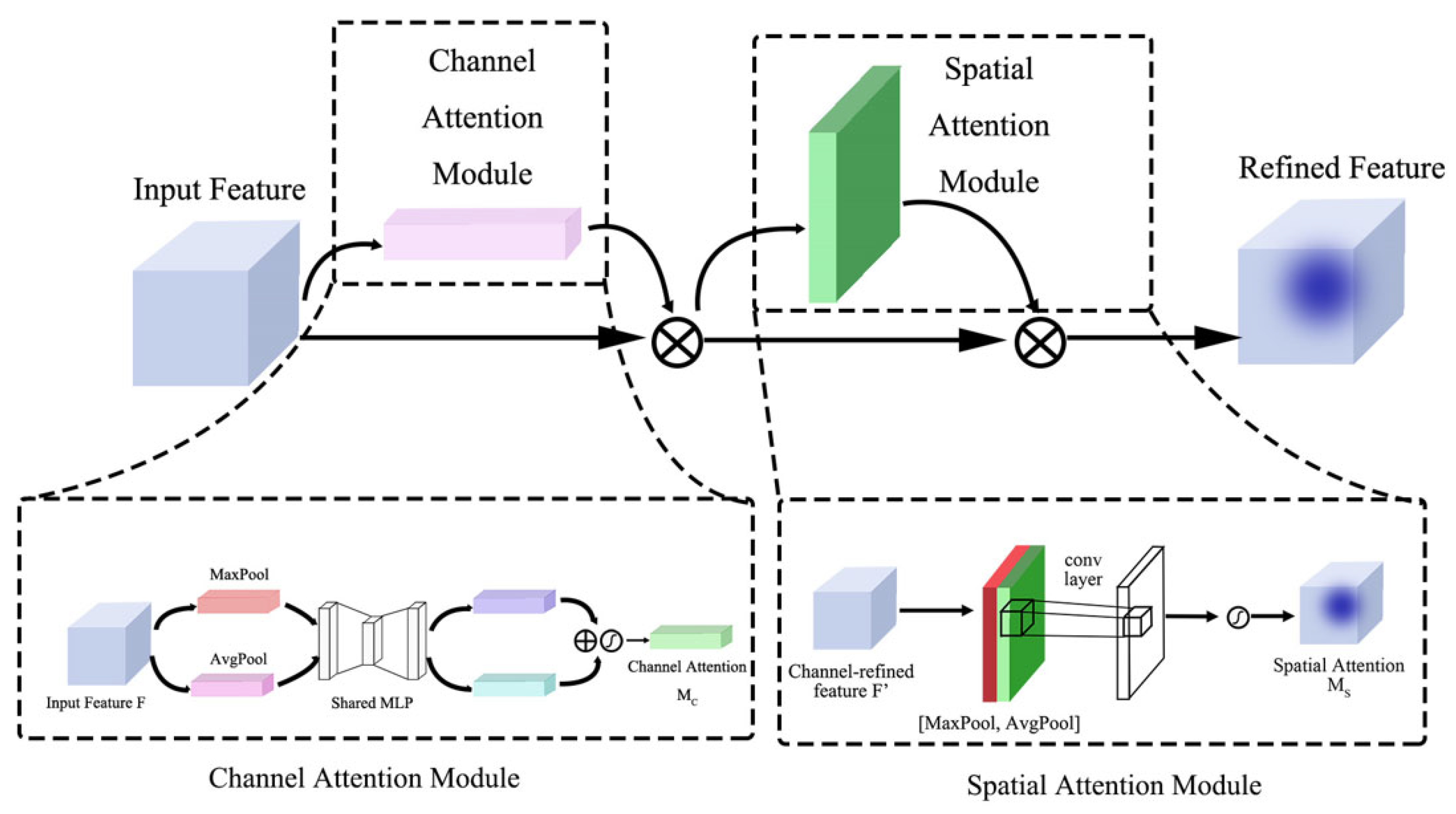

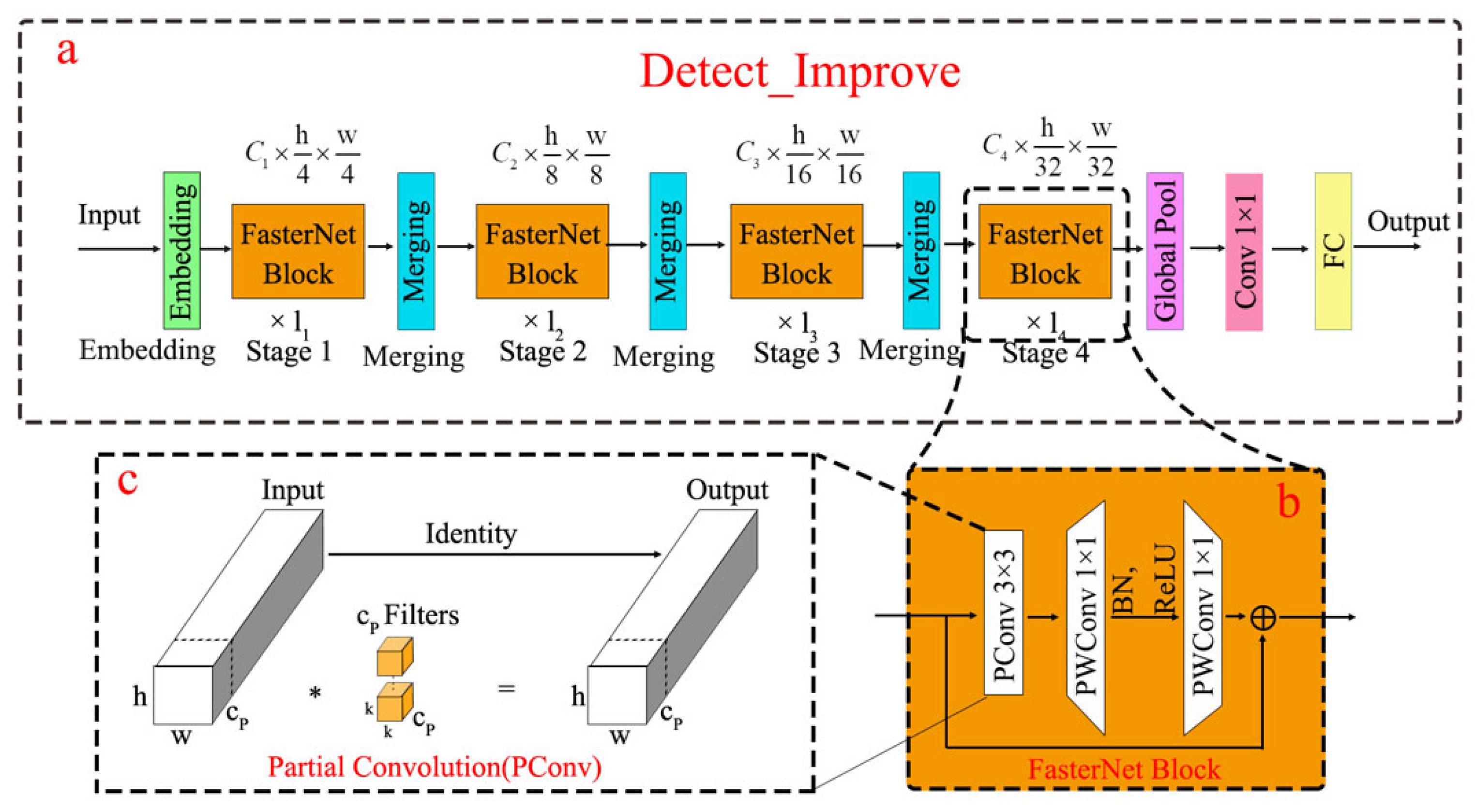

2.4. Key Improvements

3. Results

3.1. Experimental Settings

3.2. Experimental Details and Evaluation Metrics

3.3. Comprehensive Comparison of Different Models

3.4. Comparison Under Different Lighting Conditions

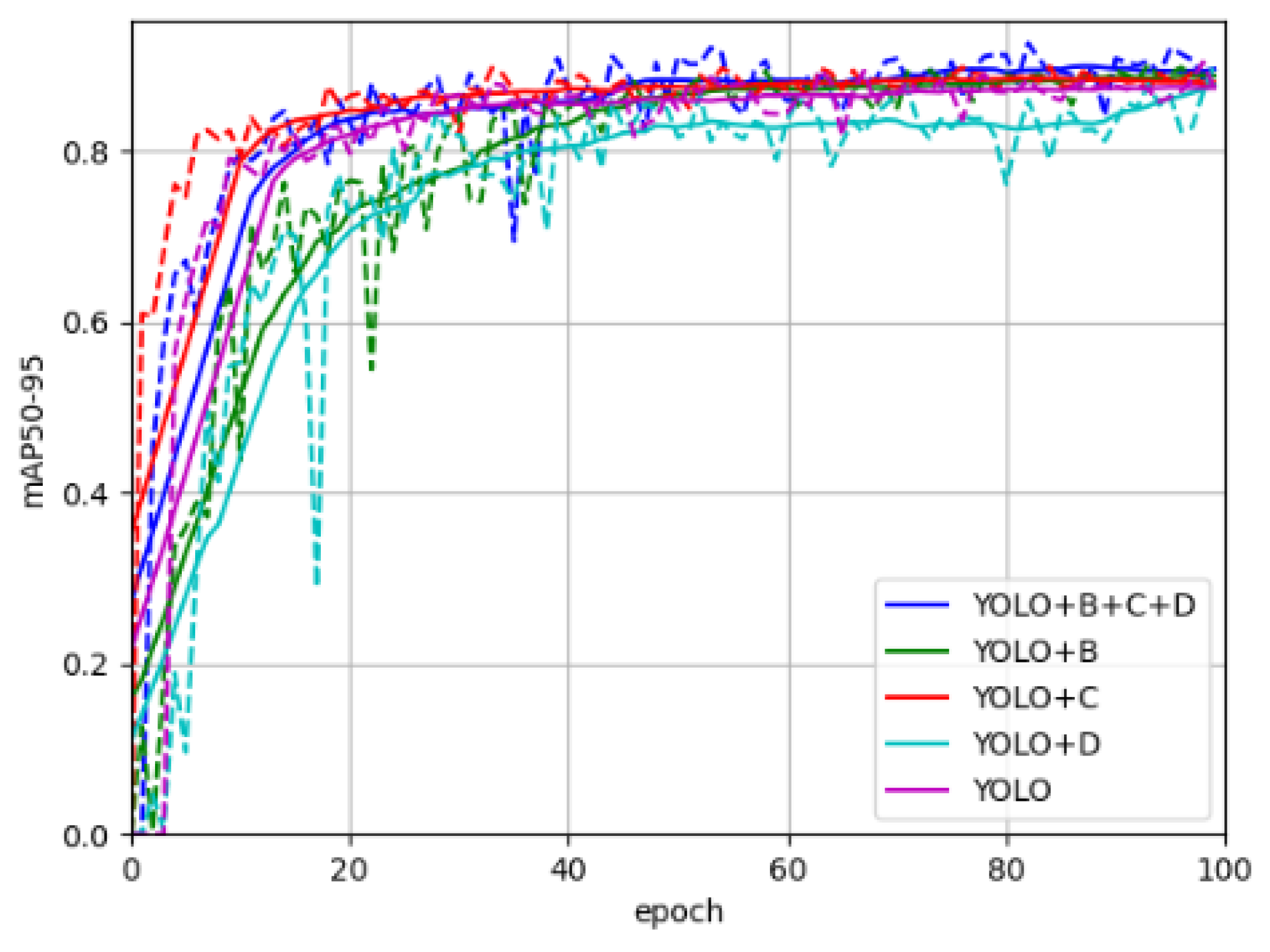

3.5. Ablation Study

3.6. Heatmap Visualization Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Precision (%) | Recall (%) | Standing AP50 (%) | Lying AP50 (%) | Side Lying AP50 (%) | mAP50 (%) | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | 74.52 | 92.49 | 97.82 | 88.14 | 89.51 | 91.82 | 28.480 | 941.169 |
| SSD | 82.76 | 81.29 | 96.49 | 86.13 | 82.22 | 88.28 | 26.285 | 62.747 |
| DETR | 73.25 | 91.74 | 97.18 | 85.12 | 85.05 | 89.12 | 36.762 | 114.249 |
| YOLO v5 | 87.21 | 82.68 | 95.30 | 85.30 | 85.70 | 88.80 | 2.504 | 7.1 |
| YOLO v8 | 85.23 | 85.42 | 94.30 | 84.30 | 86.70 | 88.50 | 3.006 | 8.1 |
| YOLO v10 | 81.73 | 83.22 | 94.60 | 86.20 | 86.30 | 89.00 | 2.696 | 8.2 |
| YOLO v11 | 87.91 | 77.83 | 94.00 | 86.30 | 88.00 | 89.40 | 2.583 | 6.3 |
| YOLO v8-BCD | 91.84 | 81.33 | 96.90 | 86.40 | 91.90 | 91.70 | 2.433 | 5.5 |
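As a consistency check on the model comparison table, the mAP50 column can be recomputed as the unweighted mean of the three posture columns (standing, lying, side lying). This assumes those columns are per-class AP50 values. The sketch below is illustrative and is not the authors' evaluation code. It also computes the relative reduction in parameters and GFLOPs of YOLO v8-BCD against the YOLO v8 baseline. The helper names `map50` and `relative_reduction` are hypothetical.

```python
"""Recompute mAP50 and model-size reductions from the comparison table (sketch)."""

# Per-class AP50 values (%) for standing, lying, side lying, copied from the table.
ROWS = {
    "Faster R-CNN": (97.82, 88.14, 89.51),
    "SSD": (96.49, 86.13, 82.22),
    "DETR": (97.18, 85.12, 85.05),
    "YOLO v5": (95.30, 85.30, 85.70),
    "YOLO v8": (94.30, 84.30, 86.70),
    "YOLO v10": (94.60, 86.20, 86.30),
    "YOLO v11": (94.00, 86.30, 88.00),
    "YOLO v8-BCD": (96.90, 86.40, 91.90),
}


def map50(per_class_ap):
    """Unweighted mean of per-class AP50 values (assumed definition of mAP50)."""
    return sum(per_class_ap) / len(per_class_ap)


def relative_reduction(baseline, improved):
    """Percentage decrease of `improved` relative to `baseline`."""
    return 100.0 * (baseline - improved) / baseline


if __name__ == "__main__":
    for name, aps in ROWS.items():
        print(f"{name:14s} mAP50 = {map50(aps):.2f}")
    # Params (M) and GFLOPs of YOLO v8 vs YOLO v8-BCD, copied from the table.
    print(f"Params reduction: {relative_reduction(3.006, 2.433):.1f}%")
    print(f"GFLOPs reduction: {relative_reduction(8.1, 5.5):.1f}%")
```

When run as a script, the recomputed means agree with the reported mAP50 column to within about 0.1 percentage point. The largest gap is YOLO v8, at 88.43 versus 88.50, presumably because the per-class values are themselves rounded. Relative to YOLO v8, YOLO v8-BCD uses about 19.1% fewer parameters (3.006 M to 2.433 M) and about 32.1% fewer GFLOPs (8.1 to 5.5).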

| Model | Precision (%) | Recall (%) | Standing AP50 (%) | Lying AP50 (%) | Side Lying AP50 (%) | mAP50 (%) |
|---|---|---|---|---|---|---|
| YOLO v8 | 84.61 | 83.83 | 95.20 | 96.10 | 82.20 | 91.20 |
| YOLO v8-BCD | 86.22 | 86.56 | 96.50 | 93.70 | 87.90 | 92.70 |

| Model | Precision (%) | Recall (%) | Standing AP50 (%) | Lying AP50 (%) | Side Lying AP50 (%) | mAP50 (%) |
|---|---|---|---|---|---|---|
| YOLO v8 | 83.84 | 78.51 | 88.60 | 84.40 | 89.80 | 87.60 |
| YOLO v8-BCD | 84.63 | 82.61 | 95.80 | 85.80 | 88.20 | 89.90 |

| Model | BiFPN | CBAM | Detect_Improve | Precision (%) | Recall (%) | F1 (%) | mAP50 (%) | FPS | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLO v8 | no | no | no | 85.23 | 85.42 | 85.32 | 88.5 | 312.33 | 3.006 | 8.1 |
| YOLO v8 | add | no | no | 88.74 | 79.75 | 84.01 | 91.1 | 265.78 | 3.006 | 8.1 |
| YOLO v8 | no | add | no | 86.33 | 84.60 | 85.46 | 91.5 | 327.45 | 3.019 | 8.1 |
| YOLO v8 | no | no | add | 91.20 | 73.78 | 81.57 | 88.2 | 378.21 | 2.420 | 5.5 |
| YOLO v8 | add | add | no | 87.05 | 81.32 | 84.09 | 92.6 | 342.96 | 3.019 | 8.1 |
| YOLO v8 | add | no | add | 88.46 | 81.12 | 84.63 | 90.9 | 356.89 | 2.420 | 5.5 |
| YOLO v8 | no | add | add | 93.07 | 69.13 | 79.33 | 91.2 | 395.67 | 2.433 | 5.5 |
| YOLO v8 | add | add | add | 91.84 | 81.33 | 86.27 | 91.7 | 389.12 | 2.433 | 5.5 |
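The F1 column of the ablation table can be checked against the standard definition F1 = 2PR/(P + R), which is the harmonic mean of precision and recall. The sketch below recomputes it for every configuration. It also converts the speed column into per-frame latency. This treats the speed column as frames per second, so latency in ms = 1000 / FPS. That reading is an assumption, and the hardware and batch size are not stated. The configuration labels and the helper names `f1_score` and `latency_ms` are hypothetical.

```python
"""Recompute F1 and per-frame latency from the ablation table (sketch)."""

# (configuration, precision %, recall %, reported F1, FPS), copied from the table.
ABLATION = [
    ("baseline", 85.23, 85.42, 85.32, 312.33),
    ("+BiFPN", 88.74, 79.75, 84.01, 265.78),
    ("+CBAM", 86.33, 84.60, 85.46, 327.45),
    ("+Detect_Improve", 91.20, 73.78, 81.57, 378.21),
    ("+BiFPN+CBAM", 87.05, 81.32, 84.09, 342.96),
    ("+BiFPN+Detect_Improve", 88.46, 81.12, 84.63, 356.89),
    ("+CBAM+Detect_Improve", 93.07, 69.13, 79.33, 395.67),
    ("+all (YOLO-BCD)", 91.84, 81.33, 86.27, 389.12),
]


def f1_score(precision, recall):
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


def latency_ms(fps):
    """Per-frame time in milliseconds, assuming `fps` is frames per second."""
    return 1000.0 / fps


if __name__ == "__main__":
    for name, p, r, f1_reported, fps in ABLATION:
        print(f"{name:24s} F1 = {f1_score(p, r):6.2f} "
              f"(reported {f1_reported:.2f}), {latency_ms(fps):.2f} ms/frame")
```

The recomputed F1 values match the reported column to two decimals. This supports reading that column as the harmonic mean of the listed precision and recall. Under the frames-per-second reading, the full model's 389.12 FPS corresponds to roughly 2.57 ms per frame, compared with about 3.20 ms for the YOLO v8 baseline at 312.33 FPS.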

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, C.; Hu, J.; Wang, Q.; Zhu, C.; Chen, L.; Shi, C. YOLO-BCD: A Lightweight Multi-Module Fusion Network for Real-Time Sheep Pose Estimation. Sensors 2025, 25, 2687. https://doi.org/10.3390/s25092687
