MUF-Net: A Novel Self-Attention Based Dual-Task Learning Approach for Automatic Left Ventricle Segmentation in Echocardiography †

Abstract

1. Introduction

- We develop a dual-task network comprising a supervised semantic segmentation branch and an unsupervised optical flow learning branch to capture the coherence between consecutive frames.

- We propose a multi-scale edge-attention U-Net segmentation model, which significantly enhances the model’s ability to segment fuzzy boundaries of the left ventricle.

- We employ a temporal consistency constraint to jointly train the two branches, enabling the network to learn spatio-temporal features from echocardiograms.

- The proposed model achieves the highest Dice score among the compared methods on the EchoNet-Dynamic dataset (92.71% under the 75/12.5/12.5 train/validation/test split) and demonstrates higher consistency on transition frames than existing methods.
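The dual-task idea above pairs an unsupervised optical flow branch with the segmentation branch through a temporal consistency constraint. The NumPy sketch below illustrates one common formulation from the joint video segmentation and optical flow literature. It is not necessarily the exact MUF-Net loss. Frame t+1 and its predicted mask are backward-warped onto frame t with the estimated flow. A photometric L1 term and a first-order smoothness term supervise the flow without labels, and an L1 term between the mask at t and the warped mask at t+1 enforces temporal consistency. All function names and the weights `w_photo`, `w_smooth` and `w_tc` are hypothetical. A trainable version would use a differentiable framework such as PyTorch so that gradients reach both branches.

```python
import numpy as np


def warp(image: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Backward-warp an (H, W) array with bilinear sampling.

    Assumption: flow[y, x] = (dx, dy) maps pixel (x, y) of frame t to
    (x + dx, y + dy) in frame t+1, so the result is frame t+1 resampled onto
    the pixel grid of frame t. Out-of-range samples are clamped to the border.
    """
    h, w = image.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom


def photometric_loss(frame_t, frame_t1, flow) -> float:
    """Unsupervised flow term: mean L1 error between frame t and warped frame t+1."""
    return float(np.abs(frame_t - warp(frame_t1, flow)).mean())


def smoothness_loss(flow) -> float:
    """First-order smoothness prior: mean absolute spatial gradient of the flow."""
    return float(np.abs(np.diff(flow, axis=1)).mean() + np.abs(np.diff(flow, axis=0)).mean())


def temporal_consistency_loss(mask_t, mask_t1, flow) -> float:
    """Mean L1 error between the mask at t and the flow-warped mask at t+1."""
    return float(np.abs(mask_t - warp(mask_t1, flow)).mean())


def joint_unsupervised_loss(frame_t, frame_t1, mask_t, mask_t1, flow,
                            w_photo=1.0, w_smooth=0.1, w_tc=1.0) -> float:
    """Hypothetical weighted sum; a supervised segmentation term on labelled
    frames would be added to this separately."""
    return (w_photo * photometric_loss(frame_t, frame_t1, flow)
            + w_smooth * smoothness_loss(flow)
            + w_tc * temporal_consistency_loss(mask_t, mask_t1, flow))


if __name__ == "__main__":
    # Toy check: a bright square moves 2 pixels to the right between frames,
    # and the "masks" are simply the frames themselves.
    f0 = np.zeros((16, 16)); f0[4:8, 3:6] = 1.0
    f1 = np.roll(f0, 2, axis=1)
    flow = np.zeros((16, 16, 2)); flow[..., 0] = 2.0
    print(joint_unsupervised_loss(f0, f1, f0, f1, flow))                 # 0.0 (correct flow)
    print(joint_unsupervised_loss(f0, f1, f0, f1, np.zeros_like(flow)))  # 0.125 (zero flow)
```

With the correct flow, the warped frame and the warped mask both match frame t exactly, so every term vanishes. With zero flow, the misaligned pixels are penalised.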

2. Materials and Methods

2.1. Methods

2.1.1. Overview of Framework Workflow

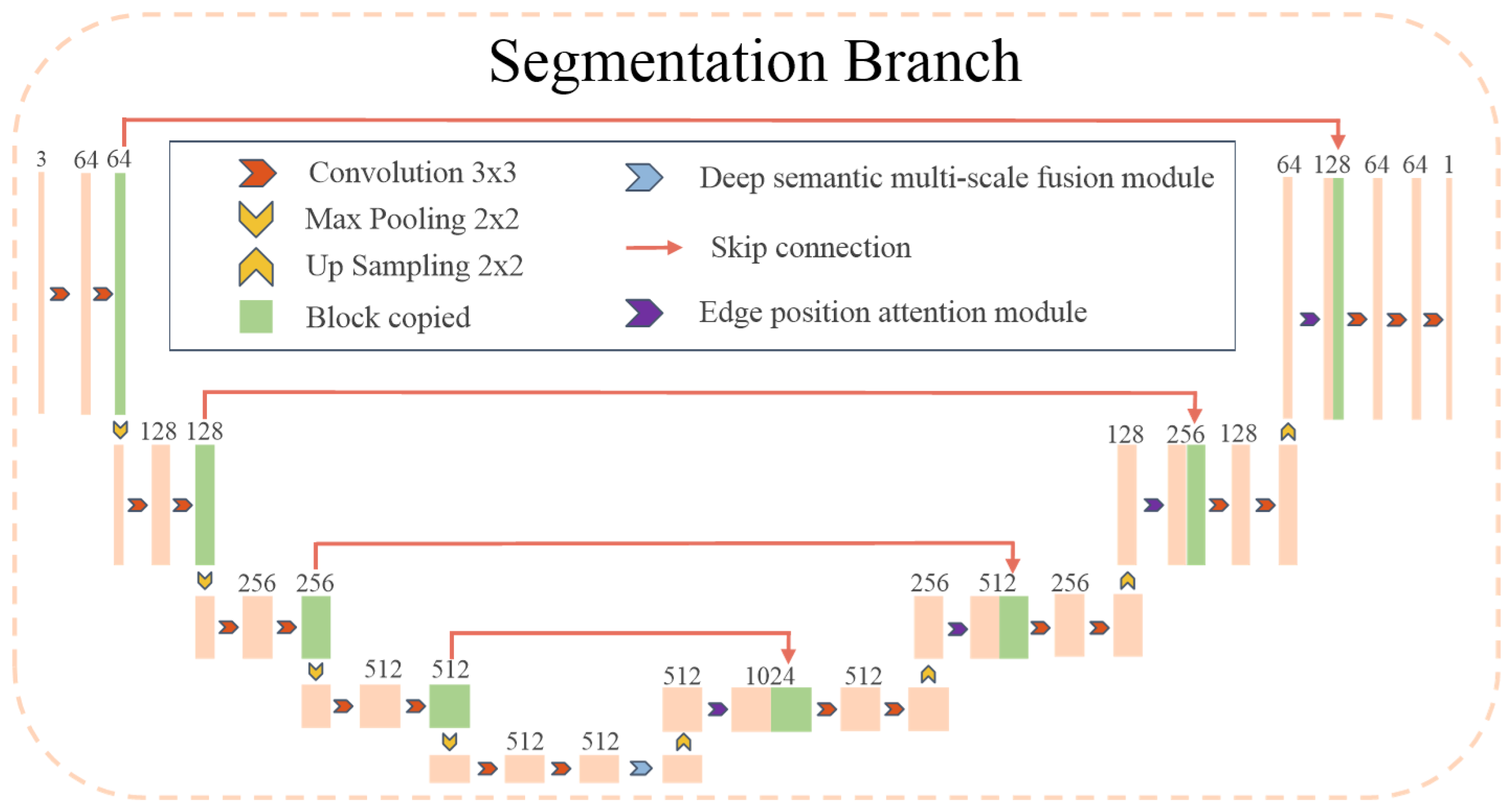

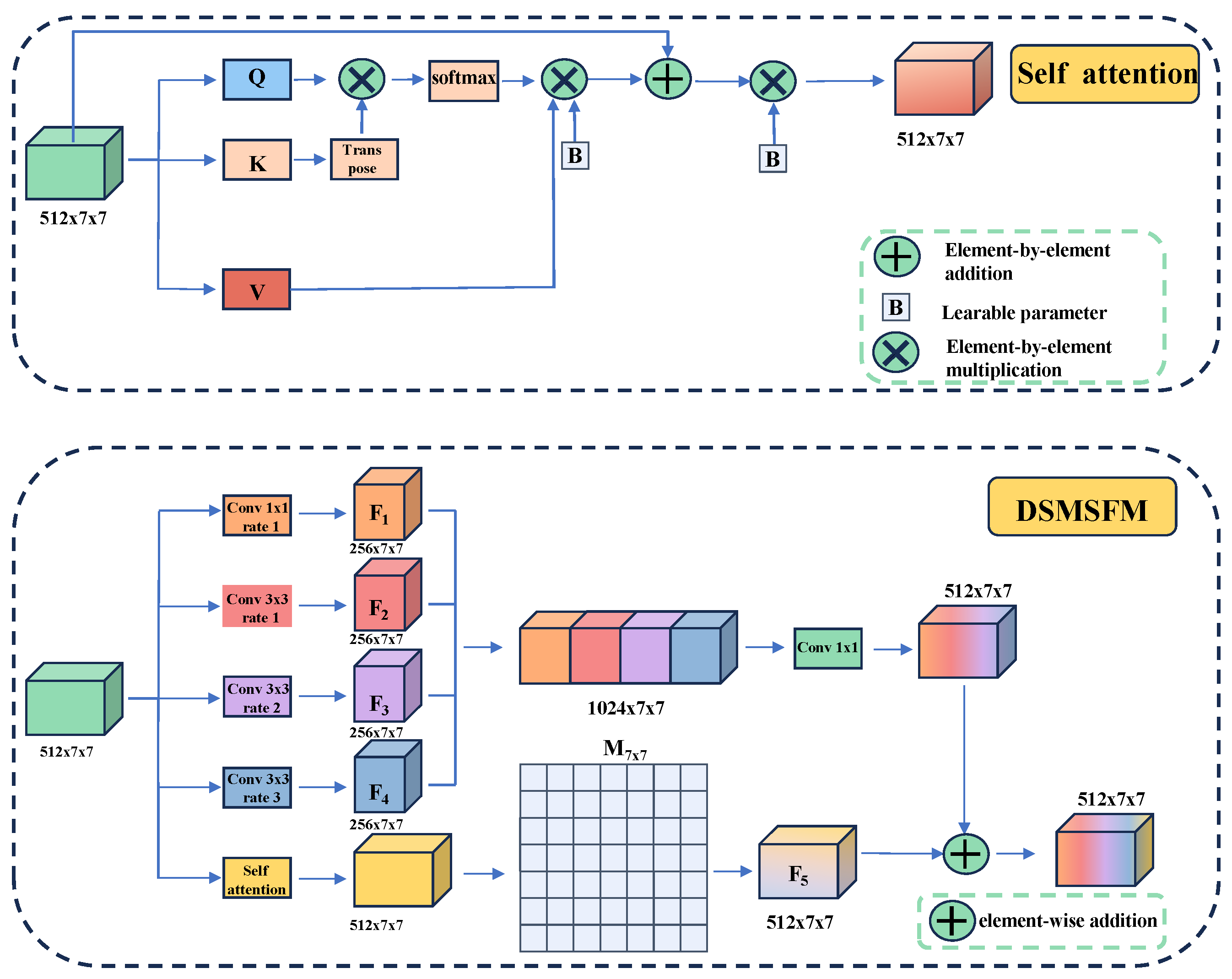

2.1.2. Segmentation Learning

- (1) MSEA-U-Net encoder

- (2) MSEA-U-Net decoder

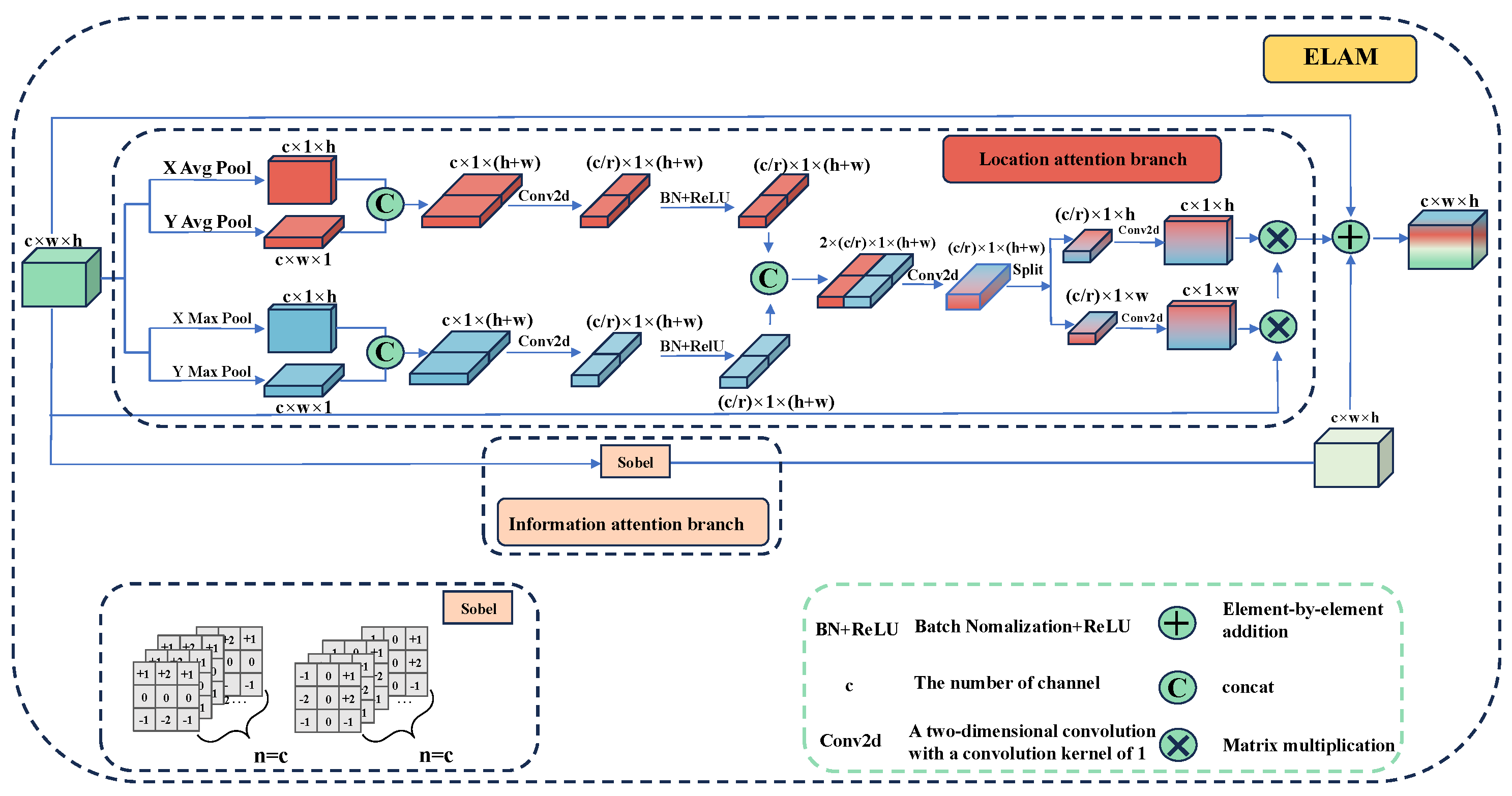

- a. Location Attention Branch

- b. Information Attention Branch

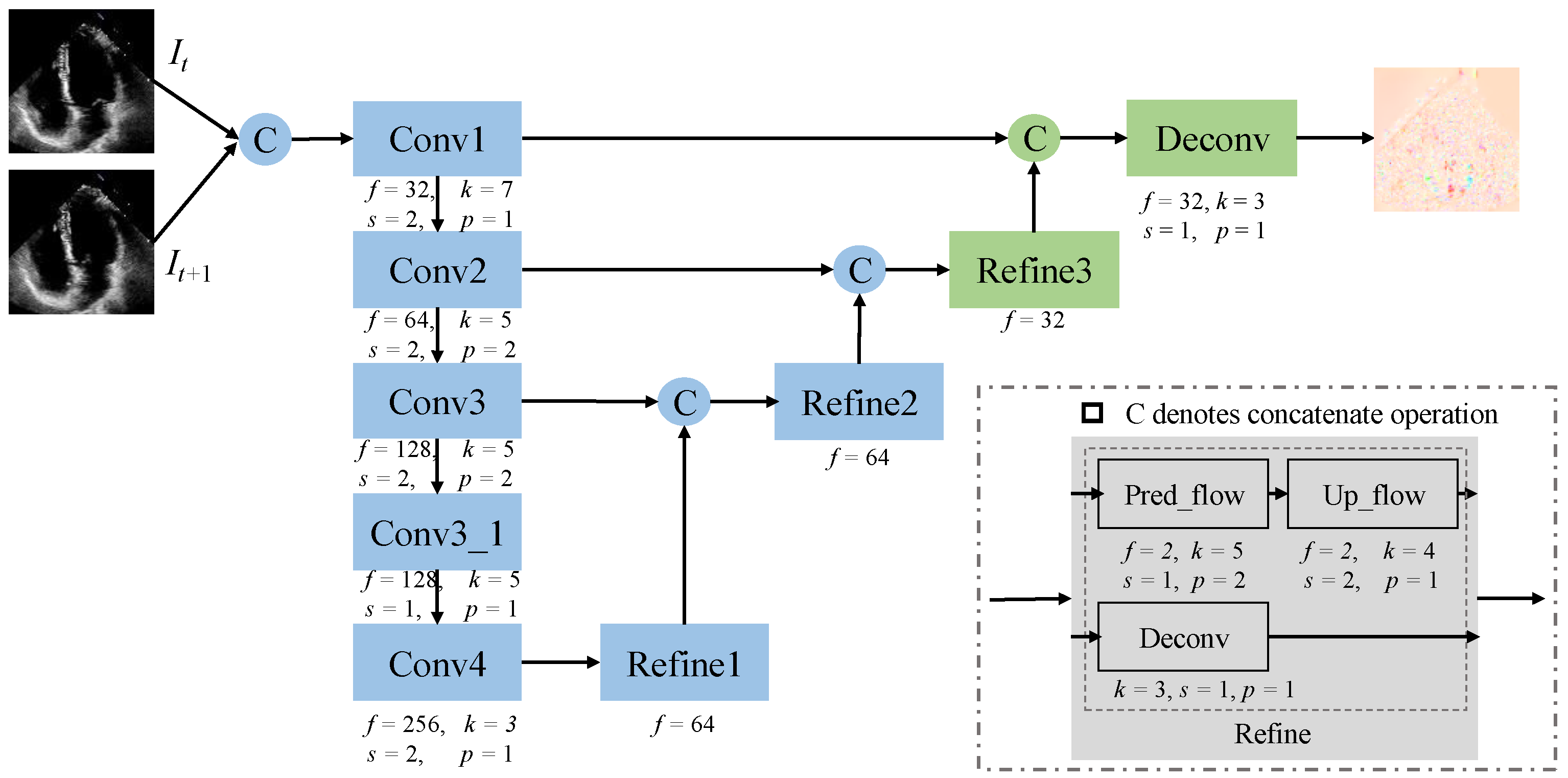

2.1.3. Optical Flow Learning

2.1.4. Cooperation Mechanism and Joint Learning

2.2. Materials

3. Experiment Results

3.1. Ablation Experiment

3.1.1. Evaluation of Introducing Optical Flow Branch

3.1.2. Evaluation of the Proposed MSEA-U-Net Semantic Segmentation Model

3.2. Comparison with Existing Methods

- For 2D segmentation of end-systolic (ES) and end-diastolic (ED) frames, we compare our method with the original algorithm proposed by Ouyang et al., EchoNet-Dynamic [30], and with three recent models that incorporate transformer modules or attention mechanisms: TransBridge [8] (available in TransBridge-B and TransBridge-L variants), PLANet [31], and Bi-DCNet [12].

3.2.1. Quantitative Analysis

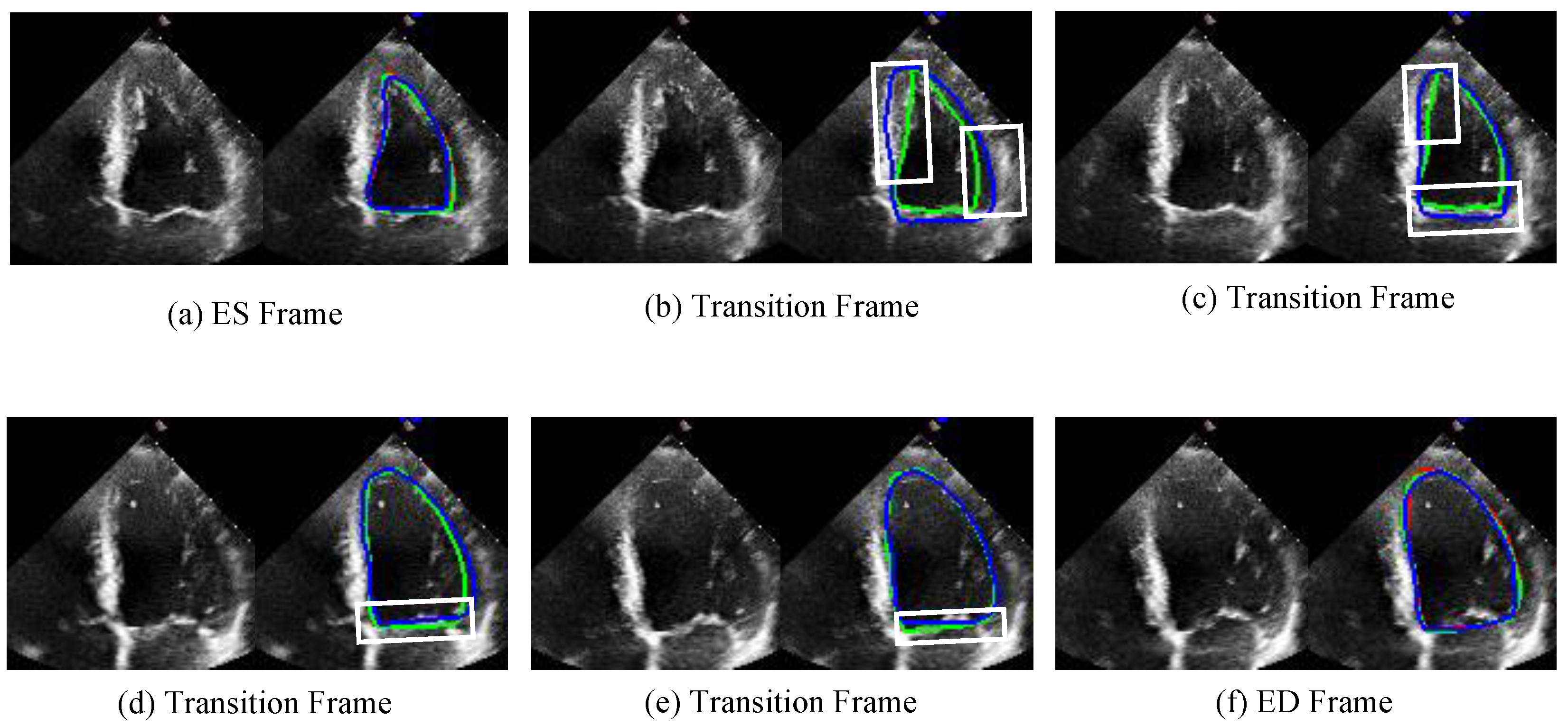

3.2.2. Qualitative Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Jagannathan, R.; Patel, S.A.; Ali, M.K.; Narayan, K.V. Global updates on cardiovascular disease mortality trends and attribution of traditional risk factors. Curr. Diabetes Rep. 2019, 19, 1–12.

2. Cikes, M.; Solomon, S.D. Beyond ejection fraction: An integrative approach for assessment of cardiac structure and function in heart failure. Eur. Heart J. 2016, 37, 1642–1650.

3. Hung, J.; Lang, R.; Flachskampf, F.; Shernan, S.K.; McCulloch, M.L.; Adams, D.B.; Thomas, J.; Vannan, M.; Ryan, T. 3D echocardiography: A review of the current status and future directions. J. Am. Soc. Echocardiogr. 2007, 20, 213–233.

4. Ali, Y.; Janabi-Sharifi, F.; Beheshti, S. Echocardiographic image segmentation using deep Res-U network. Biomed. Signal Process. Control 2021, 64, 102248.

5. Puyol-Antón, E.; Ruijsink, B.; Sidhu, B.S.; Gould, J.; Porter, B.; Elliott, M.K.; Mehta, V.; Gu, H.; Rinaldi, C.A.; Cowie, M.; et al. AI-Enabled Assessment of Cardiac Systolic and Diastolic Function from Echocardiography. In Proceedings of the International Workshop on Advances in Simplifying Medical Ultrasound, Singapore, 18 September 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 75–85.

6. Li, M.; Dong, S.; Gao, Z.; Feng, C.; Xiong, H.; Zheng, W.; Ghista, D.; Zhang, H.; de Albuquerque, V.H.C. Unified model for interpreting multi-view echocardiographic sequences without temporal information. Appl. Soft Comput. 2020, 88, 106049.

7. Zeng, Y.; Tsui, P.H.; Pang, K.; Bin, G.; Li, J.; Lv, K.; Wu, X.; Wu, S.; Zhou, Z. MAEF-Net: Multi-attention efficient feature fusion network for left ventricular segmentation and quantitative analysis in two-dimensional echocardiography. Ultrasonics 2023, 127, 106855.

8. Deng, K.; Meng, Y.; Gao, D.; Bridge, J.; Shen, Y.; Lip, G.; Zhao, Y.; Zheng, Y. Transbridge: A lightweight transformer for left ventricle segmentation in echocardiography. In Proceedings of the Simplifying Medical Ultrasound: Second International Workshop, ASMUS 2021, Held in Conjunction with MICCAI 2021, Strasbourg, France, 27 September 2021; Proceedings 2. Springer: Berlin/Heidelberg, Germany, 2021; pp. 63–72.

9. Shi, S.; Alimu, P.; Mahemuti, P.; Chen, Q.; Wu, H. The Study of Echocardiography of Left-Ventricle Segmentation Combining Transformer and CNN. 2022. Available online: https://ssrn.com/abstract=4184447 (accessed on 8 August 2022).

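10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017.

11. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.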

12. Ye, Z.; Kumar, Y.J.; Song, F.; Li, G.; Zhang, S. Bi-DCNet: Bilateral Network with Dilated Convolutions for Left Ventricle Segmentation. Life 2023, 13, 1040.

13. Wei, H.; Cao, H.; Cao, Y.; Zhou, Y.; Xue, W.; Ni, D.; Li, S. Temporal-consistent segmentation of echocardiography with co-learning from appearance and shape. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part II 23. Springer: Berlin/Heidelberg, Germany, 2020; pp. 623–632.

14. Chen, Y.; Zhang, X.; Haggerty, C.M.; Stough, J.V. Assessing the generalizability of temporally coherent echocardiography video segmentation. In Proceedings of the Medical Imaging 2021: Image Processing, Online, 15–20 February 2021; SPIE: Bellingham, WA, USA, 2021; Volume 11596, pp. 463–469.

15. Li, M.; Wang, C.; Zhang, H.; Yang, G. MV-RAN: Multiview recurrent aggregation network for echocardiographic sequences segmentation and full cardiac cycle analysis. Comput. Biol. Med. 2020, 120, 103728.

16. Sirjani, N.; Moradi, S.; Oghli, M.G.; Hosseinsabet, A.; Alizadehasl, A.; Yadollahi, M.; Shiri, I.; Shabanzadeh, A. Automatic cardiac evaluations using a deep video object segmentation network. Insights Imaging 2022, 13, 69.

17. Painchaud, N.; Duchateau, N.; Bernard, O.; Jodoin, P.M. Echocardiography segmentation with enforced temporal consistency. IEEE Trans. Med. Imaging 2022, 41, 2867–2878.

18. Wu, H.; Liu, J.; Xiao, F.; Wen, Z.; Cheng, L.; Qin, J. Semi-supervised segmentation of echocardiography videos via noise-resilient spatiotemporal semantic calibration and fusion. Med. Image Anal. 2022, 78, 102397.

19. Ouyang, D.; He, B.; Ghorbani, A.; Lungren, M.P.; Ashley, E.A.; Liang, D.H.; Zou, J.Y. Echonet-dynamic: A large new cardiac motion video data resource for medical machine learning. In Proceedings of the NeurIPS ML4H Workshop, Vancouver, BC, Canada, 13 December 2019; pp. 1–11.

20. Lyu, J.; Meng, J.; Zhang, Y.; Ling, S.H.; Sun, L. Joint semantic feature and optical flow learning for automatic echocardiography segmentation. In Proceedings of the International Conference on Intelligent Computing, Tianjin, China, 5–8 August 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 160–171.

21. Deng, X.; Wu, H.; Zeng, R.; Qin, J. MemSAM: Taming Segment Anything Model for Echocardiography Video Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 9622–9631.

22. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

23. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

24. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

25. Sobel, I.; Feldman, G. A 3 × 3 isotropic gradient operator for image processing. A Talk Stanf. Artif. Proj. 1968, 1968, 271–272.

26. Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazirbas, C.; Golkov, V.; Van Der Smagt, P.; Cremers, D.; Brox, T. Flownet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2758–2766.

27. Godard, C.; Mac Aodha, O.; Brostow, G.J. Unsupervised monocular depth estimation with left-right consistency. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 270–279.

28. Yin, Z.; Shi, J. Geonet: Unsupervised learning of dense depth, optical flow and camera pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1983–1992.

29. Ding, M.; Wang, Z.; Zhou, B.; Shi, J.; Lu, Z.; Luo, P. Every frame counts: Joint learning of video segmentation and optical flow. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 10713–10720.

30. Ouyang, D.; He, B.; Ghorbani, A.; Yuan, N.; Ebinger, J.; Langlotz, C.P.; Heidenreich, P.A.; Harrington, R.A.; Liang, D.H.; Ashley, E.A.; et al. Video-based AI for beat-to-beat assessment of cardiac function. Nature 2020, 580, 252–256.

31. Liu, F.; Wang, K.; Liu, D.; Yang, X.; Tian, J. Deep pyramid local attention neural network for cardiac structure segmentation in two-dimensional echocardiography. Med. Image Anal. 2021, 67, 101873.

32. Ta, K.; Ahn, S.S.; Stendahl, J.C.; Sinusas, A.J.; Duncan, J.S. A semi-supervised joint network for simultaneous left ventricular motion tracking and segmentation in 4D echocardiography. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part VI 23. Springer: Berlin/Heidelberg, Germany, 2020; pp. 468–477.

33. Ding, W.; Zhang, H.; Liu, X.; Zhang, Z.; Zhuang, S.; Gao, Z.; Xu, L. Multiple token rearrangement Transformer network with explicit superpixel constraint for segmentation of echocardiography. Med. Image Anal. 2025, 101, 103470.

34. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

35. Lin, J.; Xie, W.; Kang, L.; Wu, H. Dynamic-guided Spatiotemporal Attention for Echocardiography Video Segmentation. IEEE Trans. Med. Imaging 2024, 43, 3843–3855.

| Structure | Dice Score (%) |
|---|---|
| U-Net | 88.76 |
| U-Net + FlowNetSimple | 92.50 |
| U-Net + mFlowNet | 92.64 |
| U-Net + mFlowNet + MECAM (this work) | 92.71 |

| Method | Year | Dice Score (%) (Train/Val/Test: 75/12.5/12.5) | HD (mm) (Train/Val/Test: 75/12.5/12.5) | Dice Score (%), Mean ± STD (Train/Val/Test: 80/-/20) | HD (mm), Mean ± STD (Train/Val/Test: 80/-/20) |
|---|---|---|---|---|---|
| EchoNet-Dynamic | 2020 | 91.97 | 2.32 | 93.79 ± 0.22 | 2.27 ± 0.47 |
| Joint-net | 2020 | - | - | 90.91 ± 0.36 | 3.85 ± 0.92 |
| TransBridge-B | 2021 | 91.39 | 4.41 | - | - |
| TransBridge-L | 2021 | 91.64 | 4.19 | - | - |
| PLANet | 2021 | - | - | 91.92 ± 0.34 | 3.42 ± 0.67 |
| BSSF-Net | 2022 | - | - | 92.87 ± 0.16 | 2.93 ± 0.72 |
| Bi-DCNet | 2023 | 92.25 | - | - | - |
| JSAO-Net | 2024 | 92.64 | 2.23 | 96.99 ± 0.12 | 1.76 ± 0.47 |
| Ours | 2025 | 92.71 | 3.85 | - | - |
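The two metrics in the comparison table can be made concrete with a short NumPy sketch using their standard definitions. For a predicted mask A and a reference mask B, the Dice score is 2|A∩B|/(|A|+|B|). The Hausdorff distance (HD) is the largest distance from a boundary point of one contour to the nearest boundary point of the other. Two choices are assumptions for illustration: the 4-neighbourhood boundary extraction, and the isotropic `spacing_mm` factor that converts pixels to millimetres. The exact HD variant and pixel spacing used in the experiments are not given in this section.

```python
import numpy as np


def dice_score(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks; 1.0 if both are empty."""
    a, b = pred.astype(bool), ref.astype(bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0
    return float(2.0 * np.logical_and(a, b).sum() / denom)


def boundary(mask: np.ndarray) -> np.ndarray:
    """Foreground pixels with at least one 4-connected background neighbour."""
    m = mask.astype(bool)
    p = np.pad(m, 1, mode="constant", constant_values=False)
    interior = (p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1]
                & p[1:-1, :-2] & p[1:-1, 2:])
    return m & ~interior


def hausdorff_distance(pred, ref, spacing_mm=1.0) -> float:
    """Symmetric Hausdorff distance between mask boundaries.

    Assumes non-empty masks and isotropic pixels of size spacing_mm.
    """
    pa = np.argwhere(boundary(pred)).astype(float)
    pb = np.argwhere(boundary(ref)).astype(float)
    d = np.sqrt(((pa[:, None, :] - pb[None, :, :]) ** 2).sum(axis=-1))
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()) * spacing_mm)


ref = np.zeros((10, 10), dtype=np.uint8); ref[2:6, 2:6] = 1
pred = np.roll(ref, 1, axis=1)  # same 4 x 4 square shifted one pixel to the right
print(dice_score(pred, ref))          # 0.75
print(hausdorff_distance(pred, ref))  # 1.0
```

The two metrics are complementary. Dice rewards overall overlap, while HD is driven by the single worst boundary error. This is why a method can rank first on Dice yet not on HD.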

| Method | Parameters (M) | Speed (ms/frame) | FLOPs (G) |
|---|---|---|---|
| EchoNet-Dynamic | 39.60 | 14 | 7.85 |
| Joint-net | 117.27 | 62 | 108.32 |
| TransBridge-B | 3.49 | - | - |
| TransBridge-L | 11.30 | - | - |
| PLANet | 20.75 | 34 | 74.95 |
| BSSF-Net | 74.79 | 32 | 56.36 |
| JSAO-Net | 17.27 | 9 | 7.69 |
| Ours | 24.70 | 8 | 68.65 |
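The efficiency columns follow standard accounting, shown here for a single 2D convolution. A layer with a k × k kernel, C_in input channels and C_out output channels has k²·C_in·C_out weights plus C_out biases. It performs k²·C_in·C_out multiply-accumulates (MACs) per output pixel. This section does not state whether the FLOPs (G) column counts MACs or 2 × MACs. The hypothetical helper below therefore reports MACs, and its 64-channel, 112 × 112 layer is an arbitrary example, not a MUF-Net layer. Converting ms/frame to frames per second is plain arithmetic.

```python
def conv2d_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
                bias: bool = True) -> tuple[int, int]:
    """Return (parameters, MACs) for a standard k x k convolution (groups=1)."""
    params = k * k * c_in * c_out + (c_out if bias else 0)
    macs = k * k * c_in * c_out * h_out * w_out  # one MAC per weight per output pixel
    return params, macs


def ms_per_frame_to_fps(ms: float) -> float:
    """Convert latency in milliseconds per frame to throughput in frames per second."""
    return 1000.0 / ms


params, macs = conv2d_cost(64, 64, 3, 112, 112)  # hypothetical layer size
print(params, macs)            # 36928 462422016
print(ms_per_frame_to_fps(8))  # 125.0, i.e. the 8 ms/frame reported for "Ours"
```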

| Method | Dice Score (%) | p-Value | Cohen’s d |
|---|---|---|---|
| EchoNet-Dynamic | 91.97 | 1.073 | |
| JSAO-Net | 92.64 | 1.041 | |
| Ours | 92.71 | 1.044 | |
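Cohen's d expresses a difference in mean Dice as a multiple of the pooled standard deviation. The sketch below uses the common two-sample definition d = (m1 - m2) / s_p, where s_p² = ((n1 - 1)·s1² + (n2 - 1)·s2²) / (n1 + n2 - 2). This section does not state whether per-case or per-fold Dice scores were compared, or which test produced the p-values. The inputs below are therefore toy numbers, not experimental data.

```python
import numpy as np


def cohens_d(x, y) -> float:
    """Two-sample Cohen's d using a pooled standard deviation (sample variances, ddof=1)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    nx, ny = x.size, y.size
    pooled_var = ((nx - 1) * x.var(ddof=1) + (ny - 1) * y.var(ddof=1)) / (nx + ny - 2)
    return float((x.mean() - y.mean()) / np.sqrt(pooled_var))


# Hypothetical per-case Dice scores (%) for two methods, for illustration only.
method_a = [92.0, 93.0, 94.0, 95.0, 96.0]
method_b = [91.0, 92.0, 93.0, 94.0, 95.0]
print(round(cohens_d(method_a, method_b), 4))  # 0.6325
```

A 1-point gap in mean Dice yields d ≈ 0.63 here because the per-case spread (SD ≈ 1.58) is larger than the gap itself. Effect size depends on variability, not only on the difference in means.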

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lyu, J.; Meng, J.; Zhang, Y.; Ling, S.H. MUF-Net: A Novel Self-Attention Based Dual-Task Learning Approach for Automatic Left Ventricle Segmentation in Echocardiography. Sensors 2025, 25, 2704. https://doi.org/10.3390/s25092704

Lyu J, Meng J, Zhang Y, Ling SH. MUF-Net: A Novel Self-Attention Based Dual-Task Learning Approach for Automatic Left Ventricle Segmentation in Echocardiography. Sensors. 2025; 25(9):2704. https://doi.org/10.3390/s25092704

Chicago/Turabian Style: Lyu, Juan, Jinpeng Meng, Yu Zhang, and Sai Ho Ling. 2025. "MUF-Net: A Novel Self-Attention Based Dual-Task Learning Approach for Automatic Left Ventricle Segmentation in Echocardiography" Sensors 25, no. 9: 2704. https://doi.org/10.3390/s25092704

APA Style: Lyu, J., Meng, J., Zhang, Y., & Ling, S. H. (2025). MUF-Net: A Novel Self-Attention Based Dual-Task Learning Approach for Automatic Left Ventricle Segmentation in Echocardiography. Sensors, 25(9), 2704. https://doi.org/10.3390/s25092704