Artificial Intelligence Accurately Detects Traumatic Thoracolumbar Fractures on Sagittal Radiographs

Abstract

1. Introduction

2. Materials and Methods

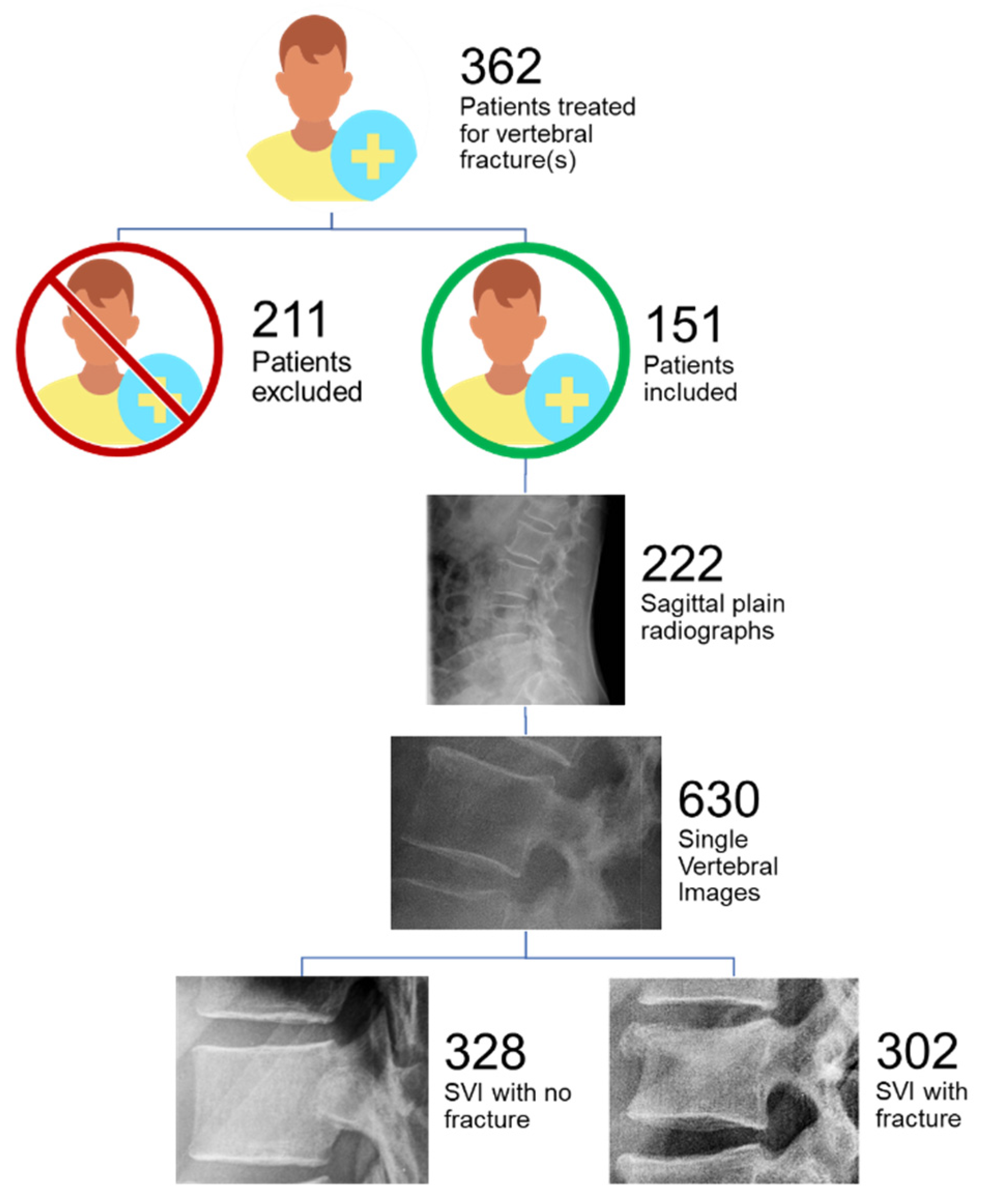

2.1. Patient Selection and Image Acquisition

2.2. Standard of Reference

2.3. Image Processing and Annotation

2.4. Adapting the Deep Learning Model

2.5. Training and Test Sets

2.6. Model’s Performance Parameters

2.7. Understanding the Model’s Prediction

3. Results

3.1. Epidemiological Distribution of TL Fractures

3.2. Deep Learning Model Performance

3.3. Heatmap Analysis

4. Discussion

4.1. Heatmap Analysis

4.2. Choice of DL Model for Fracture Classification Task

4.3. Clinical Relevance of AI for Automated Traumatic Lesion Detection in Radiographs

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Disease Codes | Procedure Codes |
|---|---|
| Fracture of thoracic spine | Thoracolumbar instrumentation |
| Fracture of thoracolumbar spine | Instrumentation lumbar spine |
| Fracture of lumbar spine | Instrumentation thoracic spine |
| Vertebra fracture | Osteosynthesis of the spine |
| Vertebra injury | Spinopelvic fixation |
| | Kyphoplasty |
| | Spinal fixation |

| Model | Sensitivity | Specificity | Negative Predictive Value | Accuracy |
|---|---|---|---|---|
| ResNet 18 | 0.91 | 0.89 | 0.89 | 0.88 |
| VGG16 | 0.90 | 0.83 | 0.89 | 0.86 |
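For readers who want to reproduce this kind of table, the four performance parameters can be derived from the counts of a binary confusion matrix, with "fracture" as the positive class. The sketch below is illustrative only: the class and function names (`ConfusionCounts`, `classification_metrics`) are hypothetical, and the example counts are invented for demonstration, not taken from the study's test set.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion-matrix counts, with 'fracture' as the positive class."""
    tp: int  # fractured vertebra, predicted fractured
    fp: int  # non-fractured vertebra, predicted fractured
    tn: int  # non-fractured vertebra, predicted non-fractured
    fn: int  # fractured vertebra, predicted non-fractured


def classification_metrics(c: ConfusionCounts) -> Dict[str, float]:
    """Return sensitivity, specificity, NPV and accuracy for the given counts."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        # Share of true fractures that the model detects
        "sensitivity": c.tp / (c.tp + c.fn),
        # Share of non-fractured cases that the model correctly clears
        "specificity": c.tn / (c.tn + c.fp),
        # Probability that a negative prediction is truly negative
        "npv": c.tn / (c.tn + c.fn),
        # Share of all predictions that are correct
        "accuracy": (c.tp + c.tn) / total,
    }


# Hypothetical counts for illustration only (NOT the study's data)
example = ConfusionCounts(tp=90, fp=20, tn=80, fn=10)
metrics = classification_metrics(example)

for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Note that the negative predictive value, unlike sensitivity and specificity, depends on the proportion of fractured cases in the test set, so it may not transfer directly to populations with a different fracture prevalence.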

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rosenberg, G.S.; Cina, A.; Schiró, G.R.; Giorgi, P.D.; Gueorguiev, B.; Alini, M.; Varga, P.; Galbusera, F.; Gallazzi, E. Artificial Intelligence Accurately Detects Traumatic Thoracolumbar Fractures on Sagittal Radiographs. Medicina 2022, 58, 998. https://doi.org/10.3390/medicina58080998
