Validity and Reliability of Vis-Screen Application: A Smartphone-Based Distance Vision Testing for Visual Impairment and Blindness Vision Screening

Abstract

1. Introduction

2. Materials and Methods

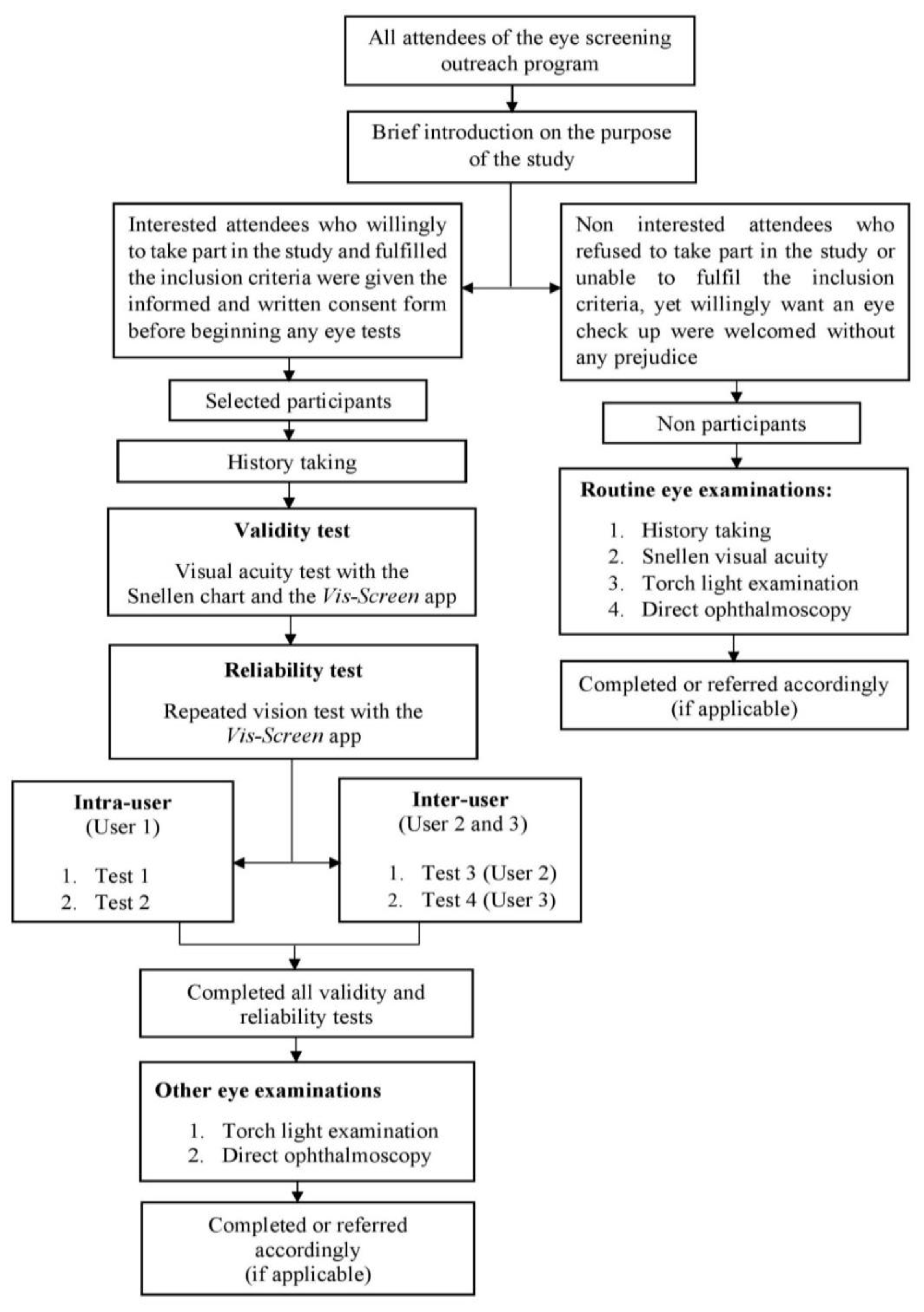

2.1. Study Design and Participants

2.2. Sampling Process

2.3. Application Description and Test Algorithm

2.4. Visual Acuity Testing

2.5. Sample Size and Statistical Analysis

3. Results

4. Discussion

Limitation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Category | Definition: WHO Criteria | Definition: Vis-Screen Criteria | Cut-Off Point | Diagnostic Term Applied: No Disease | Diagnostic Term Applied: Disease |
|---|---|---|---|---|---|
| Normal | Distance VA 6/12 or better | Can see 6/12 | NA | NA | NA |
| Mild VI | Distance VA worse than 6/12 but equal to or better than 6/18 | Can see 6/18 but cannot see 6/12 | 6/12 | Normal | Mild VI or worse |
| Moderate VI | Distance VA worse than 6/18 but equal to or better than 6/60 | Can see 6/60 but cannot see 6/18 | 6/18 | Mild VI or better | Moderate VI or worse |
| Severe VI | Distance VA worse than 6/60 but equal to or better than 3/60 | Can see 3/60 but cannot see 6/60 | 6/60 | Moderate VI or better | Severe VI or worse |
| Blindness | Distance VA worse than 3/60 up till no light perception | Cannot see 3/60 | 3/60 | Severe VI or better | Blindness |
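The category and cut-off rules in the table above can be sketched in a few lines of Python. This is only an illustrative reading of the table, not the application's actual algorithm: the names `LEVELS`, `CATEGORIES`, `classify`, and `is_disease` are hypothetical, and the input is assumed to be the smallest optotype level the participant could see (or `None` if even 3/60 was not seen).

```python
# Optotype levels from the table, ordered from best to worst acuity.
LEVELS = ["6/12", "6/18", "6/60", "3/60"]

# Categories in the same order; index i means "can see LEVELS[i] but nothing better".
CATEGORIES = ["Normal", "Mild VI", "Moderate VI", "Severe VI", "Blindness"]


def classify(smallest_seen):
    """Map the best level seen (None if 3/60 is not seen) to a category."""
    if smallest_seen is None:
        return "Blindness"
    return CATEGORIES[LEVELS.index(smallest_seen)]


def is_disease(category, cutoff):
    """Return True when the category falls on the 'Disease' side of a cut-off.

    At cut-off 6/12, for example, anything worse than Normal counts as disease.
    """
    return CATEGORIES.index(category) > LEVELS.index(cutoff)


category = classify("6/18")
print(category, is_disease(category, "6/12"), is_disease(category, "6/18"))
```

Under this reading, a participant who can see 6/18 but not 6/12 is classed as Mild VI, which counts as disease at the 6/12 cut-off and as no disease at the 6/18 cut-off.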

| Cut-Off Point | Vision Test | Sensitivity (95% CI) | Specificity (95% CI) | PPV (95% CI) | NPV (95% CI) | Accuracy (95% CI) |
|---|---|---|---|---|---|---|
| 6/12 | PVR | 88.4% (85.3, 91.5) | 94.7% (92.6, 96.9) | 81.7% (78.0, 85.5) | 96.8% (95.1, 98.5) | 93.4% (90.5, 95.6) |
| 6/12 | CVR | 85.4% (81.9, 88.8) | 95.6% (93.7, 97.6) | 68.6% (64.1, 73.1) | 98.3% (97.1, 99.6) | 94.6% (91.9, 96.6) |
| 6/18 | PVR | 82.0% (78.2, 85.7) | 95.7% (93.7, 97.7) | 76.9% (72.8, 81.0) | 96.8% (95.1, 98.5) | 93.6% (90.8, 95.8) |
| 6/18 | CVR | 73.9% (69.7, 78.2) | 96.9% (95.2, 98.6) | 58.6% (53.8, 63.4) | 98.4% (97.2, 99.6) | 95.6% (93.1, 97.4) |
| 6/60 | PVR | 64.7% (60.1, 69.3) | 98.0% (96.6, 99.3) | 57.9% (53.1, 62.7) | 98.5% (97.3, 99.7) | 96.6% (94.3, 98.1) |
| 6/60 | CVR | 60.0% (55.3, 64.8) | 98.8% (97.7, 99.8) | 37.5% (32.8, 42.2) | 99.5% (98.8, 100.0) | 98.3% (96.5, 99.3) |
| 3/60 | PVR | 55.6% (50.7, 60.4) | 99.3% (98.4, 100.0) | 62.5% (57.8, 67.2) | 99.0% (98.0, 100.0) | 98.3% (96.5, 99.3) |
| 3/60 | CVR | 66.7% (62.1, 71.2) | 99.0% (98.1, 100.0) | 33.3% (28.8, 37.9) | 99.8% (99.3, 100.0) | 98.8% (97.2, 99.6) |
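For readers less familiar with the measures in the table above, the following minimal sketch shows how sensitivity, specificity, PPV, NPV, and accuracy follow from a 2×2 table of screening result against reference standard. The counts used here are hypothetical and chosen only for illustration; they are not the study's data, and `diagnostic_metrics` is an illustrative name.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute standard diagnostic accuracy measures from 2x2 counts.

    tp: screen positive, reference positive
    fp: screen positive, reference negative
    fn: screen negative, reference positive
    tn: screen negative, reference negative
    """
    total = tp + fp + fn + tn
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / total,
    }


# Hypothetical counts, NOT taken from the study.
metrics = diagnostic_metrics(tp=40, fp=10, fn=10, tn=140)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Because PPV and NPV depend on how common the condition is in the sample, they tend to shift across cut-offs even when sensitivity and specificity change little.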

| Cut-Off Point | Vision Test | AUC (95% CI) | Correctly Classified (%) | LR+ | LR− |
|---|---|---|---|---|---|
| 6/12 | PVR | 0.93 (0.89, 0.97) | 93.4 | 16.73 | 0.12 |
| 6/12 | CVR | 0.91 (0.86, 0.97) | 94.6 | 19.58 | 0.15 |
| 6/18 | PVR | 0.95 (0.92, 0.98) | 93.6 | 18.96 | 0.19 |
| 6/18 | CVR | 0.98 (0.96, 0.99) | 95.6 | 23.71 | 0.27 |
| 6/60 | PVR | 0.96 (0.94, 0.99) | 96.6 | 31.63 | 0.36 |
| 6/60 | CVR | 0.97 (0.94, 1.00) | 98.3 | 48.36 | 0.41 |
| 3/60 | PVR | 0.97 (0.95, 1.00) | 98.3 | 73.89 | 0.45 |
| 3/60 | CVR | 0.98 (0.96, 1.00) | 98.8 | 67.50 | 0.34 |
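The likelihood ratios in the table above are related to sensitivity and specificity by the standard definitions LR+ = sensitivity / (1 − specificity) and LR− = (1 − sensitivity) / specificity. The sketch below applies them to the rounded PVR values reported at the 6/12 cut-off (sensitivity 88.4%, specificity 94.7%). The function name is illustrative, and small differences from the tabulated figures are to be expected because the inputs are rounded percentages.

```python
def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) from sensitivity and specificity given as proportions."""
    lr_pos = sensitivity / (1 - specificity)
    lr_neg = (1 - sensitivity) / specificity
    return lr_pos, lr_neg


# Rounded PVR values at the 6/12 cut-off, as reported in the accuracy table.
lr_pos, lr_neg = likelihood_ratios(0.884, 0.947)
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")
```

The result is close to the tabulated 16.73 and 0.12 for that row.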

| Category | Vision Test | Kappa Value (95% CI) | Z | p-Value |
|---|---|---|---|---|
| Intra-user | PVR | 0.85 (0.80, 0.91) | 24.77 | <0.001 |
| Intra-user | CVR | 0.79 (0.70, 0.89) | 22.28 | <0.001 |
| Inter-user | PVR | 0.72 (0.66, 0.77) | 36.31 | <0.001 |
| Inter-user | CVR | 0.67 (0.60, 0.75) | 32.79 | <0.001 |
| Vis-Screen vs. Snellen chart | PVR | 0.61 (0.53, 0.68) | 17.96 | <0.001 |
| Vis-Screen vs. Snellen chart | CVR | 0.52 (0.42, 0.62) | 14.89 | <0.001 |
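One common way to read kappa values such as those in the table above is the set of descriptive bands proposed by Landis and Koch (1977). The sketch below maps a kappa value to those bands. Whether the authors applied exactly this scale is an assumption here, and `landis_koch_label` is an illustrative name.

```python
def landis_koch_label(kappa):
    """Return the Landis and Koch (1977) descriptive label for a kappa value."""
    if kappa < 0:
        return "poor"
    # Upper bound of each band, paired with its label.
    bands = [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label
    raise ValueError("kappa cannot exceed 1")


# Point estimates copied from the agreement table.
for name, kappa in [("Intra-user PVR", 0.85), ("Inter-user CVR", 0.67),
                    ("Vis-Screen vs. Snellen CVR", 0.52)]:
    print(name, kappa, landis_koch_label(kappa))
```

The labels describe the point estimates only; the confidence intervals in the table can straddle two bands.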

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abdul Rahman, S.N.A.; Naing, N.N.; Othman, A.M.; Mohamad, Z.; Ibrahim, T.M.M.; Hashim, S.E.; Baig, A.A.; Wong, L.S.; Dewadas, H.D.; Selvaraj, S. Validity and Reliability of Vis-Screen Application: A Smartphone-Based Distance Vision Testing for Visual Impairment and Blindness Vision Screening. Medicina 2023, 59, 912. https://doi.org/10.3390/medicina59050912