Modeling Spatiotemporal Pattern of Depressive Symptoms Caused by COVID-19 Using Social Media Data Mining

Abstract

:1. Introduction

2. Material and Methods

2.1. Data Collection and Preprocessing

2.2. Analytical Approach

2.2.1. Bootstrapping the Initial Keywords

2.2.2. Identify Stressed or Non-Stressed Tweets Using Words Obtained from Basilisk Algorithm

2.2.3. Generate Word Embeddings and Train the Classifier

2.2.4. Generate Labels Using the Trained Classifier

2.2.5. CorExQ9 Algorithm

2.2.6. Define the PHQ Category and Uncertainty Analysis

- Score 1: Understandable: the answer is understandable but may contain high levels of uncertainty;

- Score 2: Reasonable: maybe not the best possible answer but acceptable;

- Score 3: Good: would be happy to find this answer given on the map;

- Score 4: Absolutely right: no doubt about the match. It is a perfect prediction.

2.3. Baseline Evaluation

3. Results

3.1. Overall Experimental Procedures

3.2. Fuzzy Accuracy Assessment Results

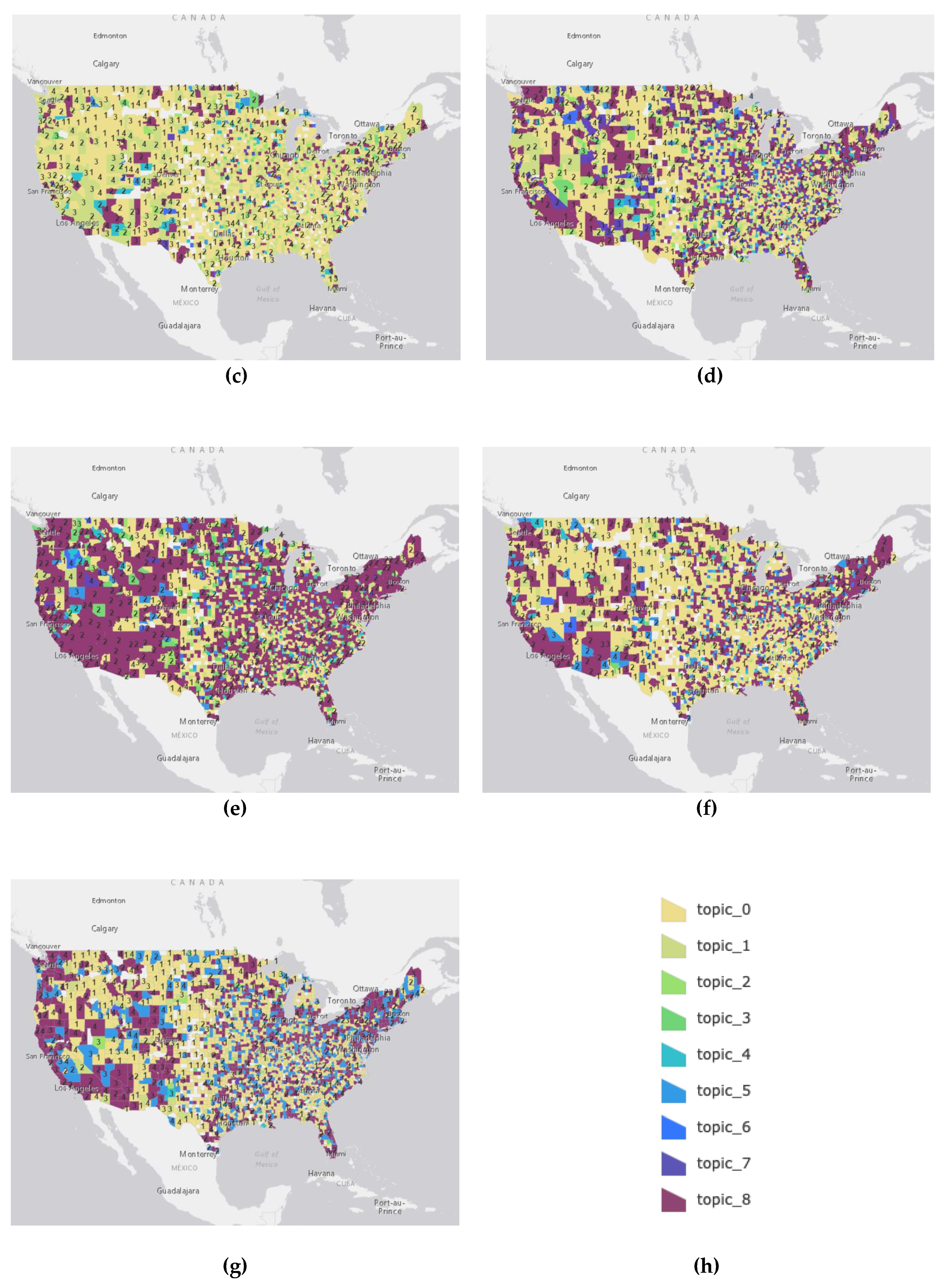

3.3. Spatiotemporal Patterns and Detected Topics

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Notation | Description |

|---|---|

| A subset of the extraction patterns that tend to extract the seed words | |

| The candidate nouns extracted by are placed in | |

| Total correlation, also called multi-information, it quantifies the redundancy or dependency among a set of random variables. | |

| Kullback–Leibler divergence, also called relative entropy, is a measure of how probability distribution is different from a second, reference probability distribution [50]. | |

| Probability densities of | |

| The mutual information between two random variables | |

| ’s dependence on can be written in terms of a linear number of parameters which are just the estimate marginals | |

| The Kronecker delta, a function of two variables. The function is 1 if the variables are equal, and 0 otherwise. | |

| A constant used to ensure the normalization of for each . It can be calculated by summing , an initial parameter. |

References

- World Health Organization. Coronavirus Disease (COVID-19): Situation Report 110. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/situation-reports/ (accessed on 29 May 2020).

- Centers for Disease Control and Prevention Mental Health and Coping During COVID-19|CDC. Available online: https://www.cdc.gov/coronavirus/2019-ncov/daily-life-coping/managing-stress-anxiety.html (accessed on 29 May 2020).

- Kirzinger, A.; Kearney, A.; Hamel, L.; Brodie, M. KFF Health Tracking Poll-Early April 2020: The Impact of Coronavirus on Life in America; KFF: Oakland, CA, USA, 2020; pp. 1–30. [Google Scholar]

- Kroenke, K.; Spitzer, R.L. The PHQ-9: A new depression diagnostic and severity measure. Psychiatr. Ann. 2002, 32, 509–515. [Google Scholar] [CrossRef] [Green Version]

- Haselton, M.G.; Nettle, D.; Murray, D.R. The Evolution of Cognitive Bias. In The Handbook of Evolutionary Psychology; John Wiley & Sons Inc.: Hoboken, NJ, USA, 2015; pp. 1–20. [Google Scholar]

- Zhou, C.; Su, F.; Pei, T.; Zhang, A.; Du, Y.; Lu, B.; Cao, Z.; Wang, J.; Yuan, W.; Zhu, Y.; et al. COVID-19: Challenges to GIS with Big Data. Geogr. Sustain. 2020, 1, 77–87. Available online: https://www.sciencedirect.com/science/article/pii/S2666683920300092 (accessed on 29 May 2020). [CrossRef]

- Mollalo, A.; Vahedi, B.; Rivera, K.M. GIS-based spatial modeling of COVID-19 incidence rate in the continental United States. Sci. Total Environ. 2020, 728, 138884. [Google Scholar] [CrossRef] [PubMed]

- Jahanbin, K.; Rahmanian, V. Using twitter and web news mining to predict COVID-19 outbreak. Asian Pac. J. Trop. Med. 2020, 26–28. [Google Scholar] [CrossRef]

- Coppersmith, G.; Dredze, M.; Harman, C. Quantifying Mental Health Signals in Twitter. In Proceedings of the Workshop on Computational Linguistics and Clinical Psychology: From Linguistic Signal to Clinical Reality. Association for Computational Linguistics, Baltimore, MA, USA, 27 June 2014; pp. 51–60. Available online: http://aclweb.org/anthology/W14-3207 (accessed on 29 May 2020).

- De Choudhury, M.; Counts, S.; Horvitz, E. Predicting postpartum changes in emotion and behavior via social media. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013. [Google Scholar]

- Go, A.; Bhayani, R.; Huang, L. Twitter Sentiment Classification using Distant Supervision. Processing 2009, 1, 2009. [Google Scholar]

- Feldman, R. Techniques and applications for sentiment analysis. Commun. ACM 2013, 56, 89. [Google Scholar] [CrossRef]

- Yu, Y.; Duan, W.; Cao, Q. The impact of social and conventional media on firm equity value: A sentiment analysis approach. Decis. Support Syst. 2013, 55, 919–926. [Google Scholar] [CrossRef]

- Zhou, X.; Tao, X.; Yong, J.; Yang, Z. Sentiment analysis on tweets for social events. In Proceedings of the 17th International Conference on Computer Supported Cooperative Work in Design CSCWD, Whistler, BC, Canada, 27–29 June 2013; pp. 557–562. [Google Scholar]

- Pratama, B.Y.; Sarno, R. Personality classification based on Twitter text using Naive Bayes, KNN and SVM. In Proceedings of the 2015 International Conference on Data and Software Engineering (ICoDSE), Yogyakarta, Indonesia, 25–26 November 2015; pp. 170–174. Available online: https://www.semanticscholar.org/paper/Personality-classification-based-on-Twitter-text-Pratama-Sarno/6d8bf96e65b9425686bde3405b8601cc8c4f2779#references (accessed on 14 May 2020).

- Chen, Y.; Yuan, J.; You, Q.; Luo, J. Twitter Sentiment Analysis via Bi-sense Emoji Embedding and Attention-based LSTM. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018; ACM Press: New York, NY, USA, 2018; pp. 117–125. [Google Scholar]

- Barbosa, L.; Feng, J. Robust sentiment detection on twitter from biased and noisy data. In Proceedings of the Coling 2010 23rd International Conference on Computational Linguistics, Beijing, China, 23–27 August 2010. [Google Scholar]

- Pak, A.; Paroubek, P. Twitter as a Corpus for Sentiment Analysis and Opinion Mining. IJARCCE 2016, 5, 320–322. [Google Scholar] [CrossRef]

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. Available online: https://arxiv.org/abs/1603.04467 (accessed on 14 May 2020).

- Asghar, M.Z.; Ahmad, S.; Qasim, M.; Zahra, S.R.; Kundi, F.M. SentiHealth: Creating health-related sentiment lexicon using hybrid approach. SpringerPlus 2016, 5. [Google Scholar] [CrossRef] [Green Version]

- Bhosale, S.; Sheikh, I.; Dumpala, S.H.; Kopparapu, S.K. End-to-End Spoken Language Understanding: Bootstrapping in Low Resource Scenarios. Proc. Interspeech 2019, 1188–1192. [Google Scholar] [CrossRef] [Green Version]

- Jin, C.; Zhang, S. Micro-blog Short Text Clustering Algorithm Based on Bootstrapping. In Proceedings of the 12th International Symposium on Computational Intelligence and Design, Hangzhou, China, 14–15 December 2019; pp. 264–266. [Google Scholar]

- Mihalcea, R.; Banea, C.; Wiebe, J. Learning Multilingual Subjective Language via Cross-Lingual Projections. In Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, Prague, Czech Republic, 23–30 June 2007; pp. 976–983. [Google Scholar]

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022. [Google Scholar]

- Ramage, D.; Manning, C.D.; Dumais, S. Partially labeled topic models for interpretable text mining. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 21–24 August 2011. [Google Scholar]

- Passos, A.; Wallach, H.M.; Mccallum, A. Correlations and Anticorrelations in LDA Inference. In Proceedings of the Challenges in Learning Hierarchical Models: Transfer Learning and Optimization NIPS Workshop, Granada, Spain, 16–17 December 2011; pp. 1–5. [Google Scholar]

- Yazdavar, A.H.; Al-Olimat, H.S.; Ebrahimi, M.; Bajaj, G.; Banerjee, T.; Thirunarayan, K.; Pathak, J.; Sheth, A. Semi-Supervised Approach to Monitoring Clinical Depressive Symptoms in Social Media. In Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Sydney, Australia, 31 July–3 August 2017; pp. 1191–1198. [Google Scholar] [CrossRef] [Green Version]

- Loper, E.; Bird, S. NLTK: The Natural Language Toolkit. arxiv.org. 2002. Available online: http://portal.acm.org/citation.cfm?doid=1118108.1118117 (accessed on 29 May 2020).

- API Reference—TextBlob 0.16.0 Documentation. Available online: https://textblob.readthedocs.io/en/dev/api_reference.html#textblob.blob.TextBlob.sentiment (accessed on 22 June 2020).

- Ver Steeg, G.; Galstyan, A. Discovering structure in high-dimensional data through correlation explanation. Adv. Neural Inf. Process Syst. 2014, 1, 577–585. [Google Scholar]

- ver Steeg, G.; Galstyan, A. Maximally informative hierarchical representations of high-dimensional data. J. Mach. Learn. Res. 2015, 38, 1004–1012. [Google Scholar]

- Gallagher, R.J.; Reing, K.; Kale, D.; ver Steeg, G. Anchored correlation explanation: Topic modeling with minimal domain knowledge. Trans. Assoc. Comput. Linguist. 2017, 5, 529–542. [Google Scholar] [CrossRef] [Green Version]

- Loper, E.; Bird, S. NLTK: The Natural Language Toolkit. In Proceedings of the ACL-02 Workshop on Effective Tools and Methodologies for Teaching Natural Language Processing and Computational linguistics, Philadelphia, PA, USA, 12 July 2002. [Google Scholar] [CrossRef]

- Ji, S.; Li, G.; Li, C.; Feng, J. Efficient interactive fuzzy keyword search. In Proceedings of the 18th International Conference World Wide Web. Association for Computing Machinery, New York, NY, USA, 20–24 April 2009; pp. 371–380. [Google Scholar]

- A Fast, Offline Reverse Geocoder in Python. Available online: https://github.com/thampiman/reverse-geocoder (accessed on 29 May 2020).

- Full List of US States and Cities. Available online: https://github.com/grammakov/USA-cities-and-states (accessed on 4 May 2020).

- Thelen, M.; Riloff, E. A Bootstrapping Method for Learning Semantic Lexicons Using Extraction Pattern Contexts. In Proceedings of the 2002 Conference on Empirical Methods in Natural Language Processing, Philadelphia, PA, USA, 6–7 July 2002; pp. 214–221. [Google Scholar]

- Keywords: Stress. Available online: http://www.stresscure.com/hrn/keywrds.html (accessed on 1 June 2020).

- Chen, D.; Manning, C.D. A fast and accurate dependency parser using neural networks. In Proceedings of the EMNLP 2014 Conference on Empirical Methods in Natural Language, Doha, Qatar, 25–29 October 2014; pp. 740–750. Available online: https://www.emnlp2014.org/papers/pdf/EMNLP2014082.pdf (accessed on 20 June 2020).

- Contributors Universal Dependencies CoNLL-U Format. Available online: https://universaldependencies.org/format.html (accessed on 20 June 2020).

- Cer, D.; Yang, Y.; Kong, S.; Hua, N.; Limtiaco, N.; St. John, R.; Constant, N.; Guajardo-Cespedes, M.; Yuan, S.; Tar, C.; et al. Universal Sentence Encoder for English. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Brussels, Belgium, 31 October–4 November 2018; pp. 169–174. [Google Scholar]

- Nelder, J.A.; Wedderburn, R.W.M. Generalized Linear Models. J. R. Stat. Soc. Ser. A. Stat. Soc. 1972, 135, 370. [Google Scholar] [CrossRef]

- Keating, K.A.; Cherry, S. Use and Interpretation of Logistic Regression in Habitat-Selection Studies. J. Wildl. Manag. 2004, 68, 774–789. [Google Scholar] [CrossRef]

- Honnibal, M.; Montani, I. spaCy2: Natural Language Understanding with Bloom Embeddings, Convolutional Neural Networks and Incremental Parsing. 2017. Available online: https://spacy.io/ (accessed on 29 May 2020).

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543. [Google Scholar]

- Lewis Patient Health Questionnaire (PHQ-9) NAME: Over the Last 2 Weeks, How Often Have You Been Date: Several More than Nearly Half the Every Day. Depression. 2005, pp. 9–10. Available online: https://www.mcpapformoms.org/Docs/PHQ-9.pdf (accessed on 14 May 2020).

- Rehurek, R.; Sojka, P. Software Framework for Topic Modelling with Large Corpora. Gait Recognition from Motion Capture Data View Project Math Information Retrieval View Project. 2010. Available online: https://radimrehurek.com/lrec2010_final.pdf (accessed on 20 May 2020). [CrossRef]

- Sklearn.Feature_Extraction.Text.TfidfVectorizer—Scikit-Learn 0.23.1 Documentation. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.TfidfVectorizer.html (accessed on 20 June 2020).

- Watanabe, S. Information Theoretical Analysis of Multivariate Correlation. IBM J. Res. Dev. 2010, 4, 66–82. [Google Scholar] [CrossRef]

- Kullback, S.; Leibler, R.A. On Information and Sufficiency. Ann. Math. Stat. 1951, 22, 79–86. [Google Scholar] [CrossRef]

- Aizawa, A. An Information-Theoretic Perspective of tf-idf Measures. Available online: https://www.elsevier.com/locate/infoproman (accessed on 29 May 2020).

- Schneidman, E.; Bialek, W.; Berry, M.J. Synergy, Redundancy, and Independence in Population Codes. J. Neurosci. 2003, 23, 11539–11553. [Google Scholar] [CrossRef]

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232. [Google Scholar] [CrossRef]

- Tishby, N.; Pereira, F.C.; Bialek, W. The Information Bottleneck Method. Available online: http://arxiv.org/abs/physics/0004057 (accessed on 17 May 2020).

- Gopal, S.; Woodcock, C. Theory and methods for accuracy assessment of thematic maps using fuzzy sets. Photogramm. Eng. Remote Sens. 1994, 60, 181–188. [Google Scholar]

- Zhang, Z.; Demšar, U.; Rantala, J.; Virrantaus, K. A Fuzzy Multiple-Attribute Decision-Making Modelling for vulnerability analysis on the basis of population information for disaster management. Int. J. Geogr. Inf. Sci. 2014, 28, 1922–1939. [Google Scholar] [CrossRef]

- Lee, D.D.; Seung, H.S. Algorithms for Non-Negative Matrix Factorization. Available online: http://papers.nips.cc/paper/1861-algorithms-for-non-negative-matrix-factorization (accessed on 29 May 2020).

- Newman, D.; Lau, J.H.; Grieser, K.; Baldwin, T. Automatic evaluation of topic coherence. In Human Language Technologies, Proceedings of the 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics, Los Angeles, CA, USA, 2–4 June 2010; Association for Computational Linguistics: Los Angeles, CA, USA, 2010; pp. 100–108. [Google Scholar]

- Mimno, D.; Wallach, H.M.; Talley, E.; Leenders, M.; McCallum, A. Optimizing semantic coherence in topic models. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing, Edinburgh, UK, 27–31 July 2011; pp. 262–272. [Google Scholar]

- McDonell, S. Li Wenliang: Coronavirus Kills Chinese Whistleblower Doctor. BBC News. 2020. Available online: https://www.bbc.com/news/world-asia-china-51403795 (accessed on 1 June 2020).

- John Hopkins University and Medicine COVID-19 Map—Johns Hopkins Coronavirus Resource Center. Available online: https://coronavirus.jhu.edu/map.html (accessed on 1 June 2020).

- Centers for Disease Control and Prevention COVIDView Summary Ending on March 28, 2020|CDC. Available online: https://www.cdc.gov/coronavirus/2019-ncov/covid-data/covidview/past-reports/04-03-2020.html (accessed on 29 May 2020).

- Atkeson, A. What Will Be the Economic Impact of COVID-19 in the US? Rough Estimates of Disease Scenarios. NBER Work. Pap. Ser. 2020, 25. [Google Scholar] [CrossRef]

- Baker, S.; Bloom, N.; Davis, S.; Terry, S. COVID-Induced Economic Uncertainty; NBER: Cambridge, MA, USA, 2020. [Google Scholar]

- Courthouse News Service. Burdeau Boris Johnson’s Talk of ‘Herd Immunity’ Raises Alarms. Available online: https://www.courthousenews.com/boris-johnsons-talk-of-herd-immunity-raises-alarms/ (accessed on 29 May 2020).

- Wojcik, S.; Hughes, A. How Twitter Users Compare to the General Public|Pew Research Center. Available online: https://www.pewresearch.org/internet/2019/04/24/sizing-up-twitter-users/ (accessed on 19 June 2020).

- Sloan, L.; Morgan, J.; Burnap, P.; Williams, M. Who tweets? Deriving the demographic characteristics of age, occupation and social class from Twitter user meta-data. PLoS ONE 2015, 10, e0115545. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Mislove, A.; Lehmann, S.; Ahn, Y.-Y.; Onnela, J.-P.; Rosenquist, J.N. Understanding the Demographics of Twitter Users. In Proceedings of the the International Conference on Weblogs and Social Media (ICWSM), Barcelona, Spain, 17–21 July 2011; Available online: https://www.semanticscholar.org/paper/Understanding-the-Demographics-of-Twitter-Users-Mislove-Lehmann/06b50b893dd4b142a4059f3250bb876d61dd205e (accessed on 29 May 2020).

- Zhenkui, M.; Redmond, R.L. Tau coefficients for accuracy assessment of classification of remote sensing data. Photogramm. Eng. Remote Sens. 1994, 61, 435–439. [Google Scholar]

- Næsset, E. Conditional tau coefficient for assessment of producer’s accuracy of classified remotely sensed data. ISPRS J. Photogramm. Remote Sens. 1996, 51, 91–98. [Google Scholar] [CrossRef]

- Congalton, R.G.; Oderwald, R.G.; Mead, R.A. Assessing Landsat classification accuracy using discrete multivariate analysis statistical techniques. Photogramm. Eng. Remote Sens. 1983, 49, 1671–1678. [Google Scholar]

- Rossiter, D.G. Technical Note: Statistical methods for accuracy assessment of classified thematic maps. Geogr. Inf. Sci. 2004, 1–46. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.77.247&rep=rep1&type=pdf (accessed on 19 May 2020).

- Mosammam, A.M. Geostatistics: Modeling spatial uncertainty, second edition. J. Appl. Stat. 2013, 40, 923. [Google Scholar] [CrossRef] [Green Version]

- Verstegen, J.A.; Karssenberg, D.; van der Hilst, F.; Faaij, A. Spatio-temporal uncertainty in Spatial Decision Support Systems: A case study of changing land availability for bioenergy crops in Mozambique. Comput. Environ. Urban 2012, 36, 30–42. [Google Scholar] [CrossRef] [Green Version]

- Lukasiewicz, T.; Straccia, U. Managing uncertainty and vagueness in description logics for the Semantic Web. J. Web Seman. 2008, 6, 291–308. [Google Scholar] [CrossRef] [Green Version]

- Card, D.H. Using known map category marginal frequencies to improve estimates of thematic map accuracy. Photogramm. Eng. Remote Sens. 1982, 48, 431–439. [Google Scholar]

- Woodcock, C.E.; Gopal, S. Fuzzy set theory and thematic maps: Accuracy assessment and area estimation. Int. J. Geogr. Inf. Sci. 2000, 14, 153–172. [Google Scholar] [CrossRef]

| Initial Stressed Seed Words: | ||||||

| addiction | boredom | dissatisfaction | grief | insecure | fear | stress |

| tense | burnout | meditation | guilt | irritable | panic | alcoholism |

| anger | conflict | embarrassment | headache | irritated | pressure | tension |

| anxiety | criticism | communication | tired | loneliness | problem | impatience |

| backaches | deadline | frustration | impatient | nervous | sadness | worry |

| Initial Non-Stressed Seed Words: | ||||||

| chill | satisfaction | self-confidence | cure | perfection | heart | prevention |

| enjoy | happiness | self-improvement | distress | overwork | right | self-talk |

| love | productivity | empowerment | wedding | perfectionism | change | tension |

| relax | perfection | self-image | marriage | self-help | family | tired |

| relaxation | well-being | commitment | relax | control | joy | empower |

| Information in Text | Description |

|---|---|

| Index | Index of the word in the sentence |

| Text | Text of the word at the particular index |

| Lemma | Lemmatized value of the word |

| Xpos | Treebank-specific part-of-speech of the word. Example: “NNP” |

| Feats | Morphological features of the word. Example: “Gender = Ferm” |

| Governor | The index of governor of the word, which is 0 for root |

| Dependency relation | Dependency relation of the word with the governor word which is root if governor = 0. Example: “nmod” |

| Input:Extraction Patterns in the Unannotated Corpus and their Extractions, Seed Lists Output:Updated List of Seeds |

| Procedure: for 1. Score all extraction patterns with RlogF 2. = top ranked patterns 3. = extractions of patterns in 4. Score candidate words in 5. Add top five candidate words to 6. 7. Go to Step 1. |

| Model | Validation Accuracy |

|---|---|

| Support Vector Machine (SVM) (Radial basis function kernel) | 0.8218 |

| SVM (Linear kernel) | 0.8698 |

| Logistic Regression | 0.8620 |

| Naïve Bayes | 0.8076 |

| Simple Neural Network | 0.8690 |

| Input:phq_lexicon, Stressed Tweets (geotagged) Output:topic sparse matrix S where row: tweetid and columns: PHQ Stress Level Index (1 to 9) |

| Procedure: 1. Shallow parsing each tweet into using 2. For each in do 3. Calculate average vector of and using GloVe 4. Match with set using cosine similarity measure 5. Append each matched to 6. Calculate Tf-Idf vector for all the tweets and transform the calculated value to a sparse matrix 7. Iteratively run CorEx function with initial random variables 8. Estimate marginals; calculate total correlation; update 9. For each in 10. Compare and with bottleneck function 11. Until convergence |

| PHQ-9 Category | Description | Lexicon Examples |

|---|---|---|

| PHQ1 | Little interest or pleasure in doing things | Acedia, anhedonia, bored, boring, ca not be bothered |

| PHQ2 | Feeling down, depressed | Abject, affliction, agony, all torn up, bad day |

| PHQ3 | Trouble falling or staying asleep | Active at night, all nightery, awake, bad sleep |

| PHQ4 | Feeling tired or having little energy | Bushed, debilitate, did nothing, dog tired |

| PHQ5 | Poor appetite or overeating | Abdominals, anorectic, anorexia, as big as a mountain |

| PHQ6 | Feeling bad about yourself | I am a burden, abhorrence, forgotten, give up |

| PHQ7 | Trouble concentrating on things | Absent minded, absorbed, abstracted, addled |

| PHQ8 | Moving or speaking so slowly that other people could have noticed | Adagio, agitated, angry, annoyed, disconcert, furious |

| PHQ9 | Thoughts that you would be better off dead | Belt down, benumb, better be dead, blade, bleed |

| Model | Average UMass | Average UCI |

|---|---|---|

| CorExQ9 | –3.77 | –2.61 |

| LDA | –4.22 | –2.76 |

| NMF-LK | –3.97 | –2.58 |

| NMF-F | –4.03 | –2.36 |

| PHQ-9 Category and Description | Top Symptoms and Topics |

|---|---|

| PHQ0: Little interest or pleasure in doing things | Feb.: Chinese journalist, koalas, snakes, Melinda gates, South Korea, World Health Organization (WHO) declared outbreak, send hell, airways suspended, etc. Mar.: Prime Minister Boris, Dr. Anthony Fauci, moved intensively, attending mega rally, Tom Hanks, Rita Wilson, etc. Apr.: stay home, bored at home, masks arrived pos, sign petition UK change, uninformed act, etc. |

| PHQ1: Feeling down, depressed | Feb.: Wenliang Li, whistleblower, South Korea confirms, suffering eye darkness, China breaking, global health emergency, Nancy Messonnier, grave situation, etc. Mar.: abject, despair, Kelly Loeffler, Jim, stock, Richard Burr, feeling sorry, Gavin Newsom, cynical, nazi paedophile, destroyed, etc. Apr.: social isolation, ha island, suffering, bus driver, coverings, cloth face, etc. |

| PHQ2: Trouble falling or staying asleep | Feb.: sneezing, coughing, avoid nonessential travel, diamond princess cruise, San Lazaro hospital, RepRooney, Dean Koontz, gun, arranging flight, etc. Mar.: calls grow quarantine, secret meetings, donates quarterly, task force, sleepy, cutting pandemic, nitrogen dioxide, aquarium closed, Elba tested, etc. Apr.: workers, healthcare, basic income, Bronx zoo, tiger, keep awaking, coughing, concealed, etc. |

| PHQ3: Feeling tired or having little energy | Feb.: test positive, tired dropping flies, horror, clinical features patients, national health commission, governors, flown CDC advice, weakness, etc. Mar.: blocking bill limits, drugmakers, Elizabeth fault, CPAC attendee tested, overruled health, collapses, front lines, practicing social distancing, etc. Apr.: exhausted, Boris Johnson admitted hospital, Brooke Baldwin, etc. |

| PHQ4: Poor appetite or overeating | Feb.: food market, Harvard chemistry, citizen plainly, Commerce Secretary Wilbur, White House asks, scientists investigate, etc. Mar.: obesity, anemia, Iran temporarily releases, CDC issued warning, blood pressure, Obama holdover call fly, etc. Apr.: White House, force, Crozier, roosevelt, Peter Navarro, confirmed cases, etc. |

| PHQ5: Feeling bad about yourself | Feb.: worst treating, accelerate return jobs, tendency, investigating suspected cases, unwanted rolls, mistakenly released, vaccine, predicted kill, etc. Mar.: testing January aid, executive order medical, VP secazar, risking, embarrassment ugly, unnecessarily injured, etc. Apr.: invisible, house press, gross, insidious, irresponsible, shame, trump, worst, obvious consequences, etc. |

| PHQ6: Trouble concentrating on things | Feb.: dangerous pathogens, distracted, ignorant attacks, funding, camps, travel advisor, let alone watching, etc. Mar.: dogs, Fox news cloth, institute allergy, hands soap water, self-quarantined, Christ redeemer, valves, etc. Apr.: Theodore Roosevelt, confused, Dalglish, economy shrinks, U.S. commerce, etc. |

| PHQ7: Moving or speaking so slowly that other people could have noticed | Feb.: panic, Santa Clara, furious, wall street journal reports, pencedemic bus, dead birds, Tencent accidentally, unhinged disease control, etc. Mar.: Theodore, federal reserve, panic buy, councilwoman, anxiety, USS Theodore, frantic, avian swine, etc. Apr.: chief medical officer, social distancing, NHS lives, rallies jan, CDC issued warning, enrollment, Ron Desantis, etc. |

| PHQ8: Thoughts that you would be better off dead | Feb.: death people, China death, death toll rises, cut, China deadly outbreak, Hubei, lunar year, laboratories linked, first death, etc. Mar.: Washington state, dead, prevent, causing, worse, kill, death camps, increasing, etc. Apr.: death, patient, living expenses, abused, uninsured, treatment, death camps, etc. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, D.; Chaudhary, H.; Zhang, Z. Modeling Spatiotemporal Pattern of Depressive Symptoms Caused by COVID-19 Using Social Media Data Mining. Int. J. Environ. Res. Public Health 2020, 17, 4988. https://doi.org/10.3390/ijerph17144988

Li D, Chaudhary H, Zhang Z. Modeling Spatiotemporal Pattern of Depressive Symptoms Caused by COVID-19 Using Social Media Data Mining. International Journal of Environmental Research and Public Health. 2020; 17(14):4988. https://doi.org/10.3390/ijerph17144988

Chicago/Turabian StyleLi, Diya, Harshita Chaudhary, and Zhe Zhang. 2020. "Modeling Spatiotemporal Pattern of Depressive Symptoms Caused by COVID-19 Using Social Media Data Mining" International Journal of Environmental Research and Public Health 17, no. 14: 4988. https://doi.org/10.3390/ijerph17144988

APA StyleLi, D., Chaudhary, H., & Zhang, Z. (2020). Modeling Spatiotemporal Pattern of Depressive Symptoms Caused by COVID-19 Using Social Media Data Mining. International Journal of Environmental Research and Public Health, 17(14), 4988. https://doi.org/10.3390/ijerph17144988