SEEP-CI: A Structured Economic Evaluation Process for Complex Health System Interventions

Abstract

1. Introduction

2. Materials and Methods—Development and Outline of the SEEP-CI

2.1. Description of Case Study

2.2. Description of Challenges for the Economic Evaluation of Complex Health System Interventions Such as REACHOUT

2.2.1. Dispersed Impacts

2.2.2. Non-Standardized Interventions

2.2.3. Complex Causal Relationship between Intervention and Outcome

2.2.4. Impact Conditional on Health System Functioning

3. Results—Development of the SEEP-CI and Illustrative Application to an Evaluation of Embedding QI in Community Health Programs

3.1. Development of the SEEP-CI

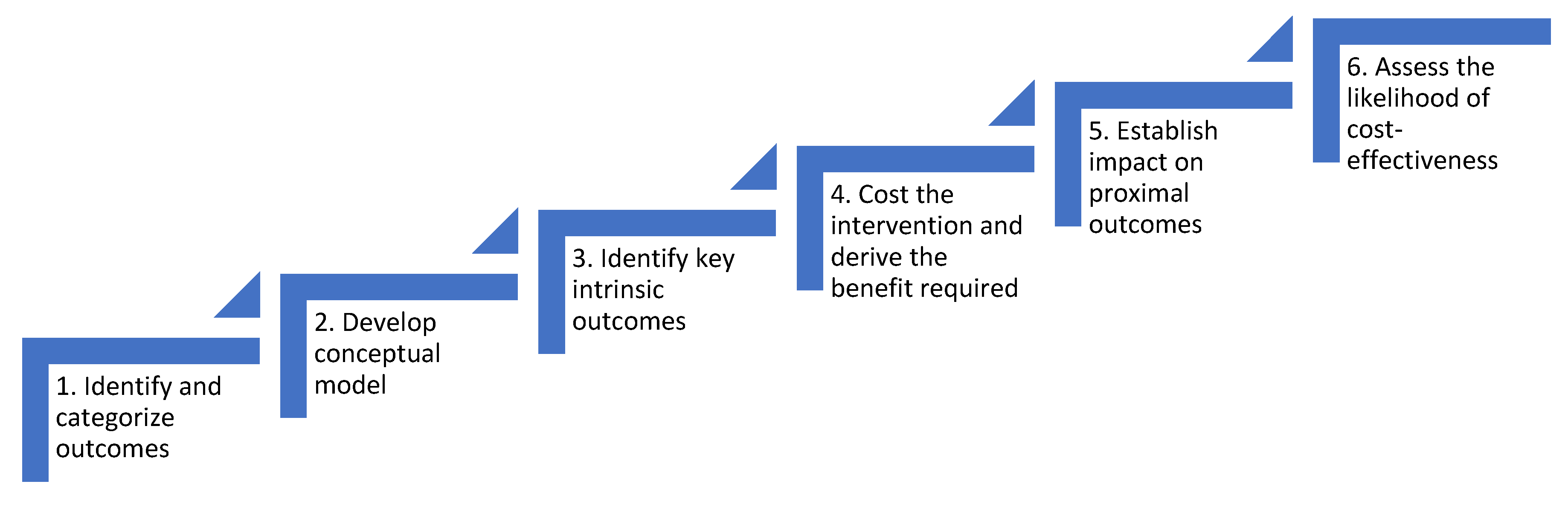

3.2. Description of the SEEP-CI

3.2.1. Identify and Categorize Outcomes of the Intervention

3.2.2. Develop a Conceptual Model Outlining the Causal Relationship between the Intervention and the Outcomes Identified in Step One

- (i) understand what the proximal goal of the intervention is, such as delivering a training package to a specific cadre of health workers;

- (ii) specify the relationship between this goal and health care delivery, e.g., better-trained workers might miss fewer cases that require referral to specialists;

- (iii) determine the consequences of changing health care delivery, e.g., whether more appropriate referrals might improve survival rates;

- (iv) identify potential feedback loops, e.g., improved survival might increase patients' willingness to engage with the health system, giving health workers more opportunities to learn through experience.
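The four steps above describe a directed causal graph, and step (iv) adds a cycle to it. As a minimal sketch, assuming each causal link is recorded as a cause-effect pair, the Python below stores the hypothetical training example as an adjacency list and flags feedback loops as back edges found during a depth-first search. The node labels and the names `CAUSAL_LINKS`, `build_graph`, and `find_feedback_loops` are illustrative only and are not part of the SEEP-CI itself.

```python
from collections import defaultdict

# Hypothetical causal links for the training example in steps (i)-(iv);
# the node labels are illustrative, not taken from any real evaluation.
CAUSAL_LINKS = [
    ("training delivered", "fewer missed referrals"),        # (i) -> (ii)
    ("fewer missed referrals", "improved survival"),         # (ii) -> (iii)
    ("improved survival", "patient engagement"),             # (iv) feedback path
    ("patient engagement", "health worker experience"),
    ("health worker experience", "fewer missed referrals"),  # closes the loop
]


def build_graph(links):
    """Return an adjacency list mapping each cause to its direct effects."""
    graph = defaultdict(list)
    for cause, effect in links:
        graph[cause].append(effect)
    return graph


def find_feedback_loops(graph):
    """Detect feedback loops as back edges in a depth-first search.

    Each back edge yields one cycle, returned as a list of nodes that starts
    and ends at the same node.
    """
    loops, path, on_path, visited = [], [], set(), set()

    def visit(node):
        path.append(node)
        on_path.add(node)
        for nxt in graph.get(node, []):
            if nxt in on_path:
                # An edge back to a node on the current path closes a loop.
                loops.append(path[path.index(nxt):] + [nxt])
            elif nxt not in visited:
                visit(nxt)
        on_path.discard(node)
        visited.add(node)
        path.pop()

    for start in list(graph):
        if start not in visited:
            visit(start)
    return loops


loops = find_feedback_loops(build_graph(CAUSAL_LINKS))
print(loops)
```

For these links the search returns a single loop that runs from fewer missed referrals, through improved survival, patient engagement and health worker experience, and back to fewer missed referrals.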

3.2.3. Identify Key Intrinsic Outcomes That Will Drive the Adoption Decision

3.2.4. Estimate the Cost of Delivering the Intervention, and the Threshold Change in the Key Intrinsic Outcome(s) Required for the Intervention to Be Cost-Effective
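Step four can be read as a standard threshold analysis. If λ is the cost-effectiveness threshold (cost per unit of the key intrinsic outcome, e.g., per DALY averted) and ΔC is the incremental cost of delivering the intervention, the intervention is cost-effective when ΔC/ΔE ≤ λ, so the smallest gain in the intrinsic outcome that makes it cost-effective is ΔE* = ΔC/λ. The sketch below assumes this simple ratio rule; the function names and all figures are hypothetical. The decision rule is written in net monetary benefit form (λ·ΔE - ΔC ≥ 0), which is equivalent to the ratio rule for positive ΔE and avoids dividing by a zero effect.

```python
def threshold_effect(incremental_cost: float, threshold: float) -> float:
    """Smallest intrinsic-outcome gain (e.g. DALYs averted) at which the
    incremental cost-effectiveness ratio equals the threshold."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    return incremental_cost / threshold


def is_cost_effective(incremental_cost: float, effect: float, threshold: float) -> bool:
    """Decision rule in net monetary benefit form: threshold * effect - cost >= 0."""
    return threshold * effect - incremental_cost >= 0


# Hypothetical figures: incremental cost of 50,000 currency units and a
# threshold of 500 per DALY averted.
print(threshold_effect(50_000, 500))  # 100.0
```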

3.2.5. Identify Proximal Instrumental Outcomes That Are Likely to Cause Changes in the Key Intrinsic Outcome(s) Identified in Step Three, and Estimate the Effectiveness of the Intervention in Terms of These Proximal Outcomes
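One way to connect steps four and five, assuming a linear and constant link between a proximal instrumental outcome and the key intrinsic outcome, is to divide the threshold gain ΔE* by β, the gain in the intrinsic outcome per unit change in the proximal outcome. This gives the required proximal change ΔP* = ΔE*/β. Both the linearity and the value of β are assumptions made for illustration; in practice β would have to be informed by the conceptual model and external evidence. The function name below is hypothetical.

```python
def required_proximal_change(threshold_gain: float, gain_per_unit: float) -> float:
    """Proximal change needed to deliver threshold_gain in the intrinsic outcome,
    assuming a hypothetical linear link of gain_per_unit per unit of proximal change."""
    if gain_per_unit <= 0:
        raise ValueError("gain_per_unit must be positive")
    return threshold_gain / gain_per_unit


# Hypothetical: 100 DALYs averted are needed, and each one-point rise in a
# proximal score is assumed to avert 10 DALYs.
print(required_proximal_change(100.0, 10.0))  # 10.0
```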

3.2.6. Assess the Strength of the Case for Investing in the Intervention
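To gauge the strength of the case, one option (not necessarily the authors' procedure) is to ask how likely it is that the true effect of the intervention on the proximal outcome exceeds the required change ΔP*. Assuming the effect estimate is approximately normal with mean m and standard error s, this probability is 1 - Φ((ΔP* - m)/s), where Φ is the standard normal cumulative distribution function, computed here from Python's `math.erf`. The function names and inputs are hypothetical.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def prob_exceeds_threshold(estimate: float, std_error: float, required_change: float) -> float:
    """Probability that the true proximal effect exceeds required_change,
    assuming (as a simplification) a normally distributed effect estimate."""
    if std_error <= 0:
        raise ValueError("std_error must be positive")
    z = (required_change - estimate) / std_error
    return 1.0 - normal_cdf(z)


# Hypothetical result: estimated rise of 12 points (SE 2) against a
# required rise of 10 points.
print(round(prob_exceeds_threshold(12.0, 2.0, 10.0), 3))  # 0.841
```

A probability near 1 suggests a strong case at the chosen threshold, while a value near 0.5 indicates that the estimate sits close to the break-even point and further evidence may be worth collecting.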

3.3. Application of the SEEP-CI to Evaluation of Embedding QI in Community Health Programmes

3.3.1. Identify and Categorize Outcomes

3.3.2. Develop a Conceptual Model of Causal Links between Outcomes

3.3.3. Identify Key Intrinsic Outcomes

3.3.4. Estimate the Costs of Delivering the Intervention, and the Threshold Change Required for the Intervention to Be Cost-Effective

3.3.5. Identify the Key Proximal Instrumental Outcomes for the Intervention, Estimate the Effectiveness of the Intervention on These Outcomes, and Assess the Strength of the Case for Investing in the Intervention

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | PROXIMAL | DISTAL |
|---|---|---|
| INSTRUMENTAL | Supervisory support for CTC providers; CTC provider motivation; Number of quality improvement (QI) meetings; Number of QI initiatives proposed; Number of QI teams trained in the global curriculum | Utilization of health clinics; Community satisfaction with CTC providers; CTC provider turnover; Uptake of vaccinations |
| INTRINSIC | Costs of training; Staff wellbeing | Maternal mortality; DALYs; Deaths due to diarrhea |
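The table crosses two dimensions: timing (proximal or distal) and role (instrumental or intrinsic). A minimal Python sketch, with hypothetical type and function names, stores each outcome from the table under its quadrant so that the intrinsic outcomes (candidates for step three) and the proximal instrumental outcomes (candidates for step five) can be selected directly.

```python
from enum import Enum


class Timing(Enum):
    PROXIMAL = "proximal"
    DISTAL = "distal"


class Role(Enum):
    INSTRUMENTAL = "instrumental"
    INTRINSIC = "intrinsic"


# Outcomes copied from the table, each keyed to its (timing, role) quadrant.
OUTCOMES = {
    "Supervisory support for CTC providers": (Timing.PROXIMAL, Role.INSTRUMENTAL),
    "CTC provider motivation": (Timing.PROXIMAL, Role.INSTRUMENTAL),
    "Number of QI meetings": (Timing.PROXIMAL, Role.INSTRUMENTAL),
    "Number of QI initiatives proposed": (Timing.PROXIMAL, Role.INSTRUMENTAL),
    "Number of QI teams trained in the global curriculum": (Timing.PROXIMAL, Role.INSTRUMENTAL),
    "Utilization of health clinics": (Timing.DISTAL, Role.INSTRUMENTAL),
    "Community satisfaction with CTC providers": (Timing.DISTAL, Role.INSTRUMENTAL),
    "CTC provider turnover": (Timing.DISTAL, Role.INSTRUMENTAL),
    "Uptake of vaccinations": (Timing.DISTAL, Role.INSTRUMENTAL),
    "Costs of training": (Timing.PROXIMAL, Role.INTRINSIC),
    "Staff wellbeing": (Timing.PROXIMAL, Role.INTRINSIC),
    "Maternal mortality": (Timing.DISTAL, Role.INTRINSIC),
    "DALYs": (Timing.DISTAL, Role.INTRINSIC),
    "Deaths due to diarrhea": (Timing.DISTAL, Role.INTRINSIC),
}


def outcomes_in(timing=None, role=None):
    """List outcomes matching the given timing and/or role (None matches any)."""
    return [
        name
        for name, (t, r) in OUTCOMES.items()
        if (timing is None or t is timing) and (role is None or r is role)
    ]


# Candidates for step three (key intrinsic outcomes) and step five
# (proximal instrumental outcomes).
intrinsic_candidates = outcomes_in(role=Role.INTRINSIC)
proximal_instrumental = outcomes_in(Timing.PROXIMAL, Role.INSTRUMENTAL)
print(intrinsic_candidates)
print(proximal_instrumental)
```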

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Madan, J.; Bruce Kumar, M.; Taegtmeyer, M.; Barasa, E.; Singh, S.P. SEEP-CI: A Structured Economic Evaluation Process for Complex Health System Interventions. Int. J. Environ. Res. Public Health 2020, 17, 6780. https://doi.org/10.3390/ijerph17186780