Towards Child-Appropriate Virtual Acoustic Environments: A Database of High-Resolution HRTF Measurements and 3D-Scans of Children

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

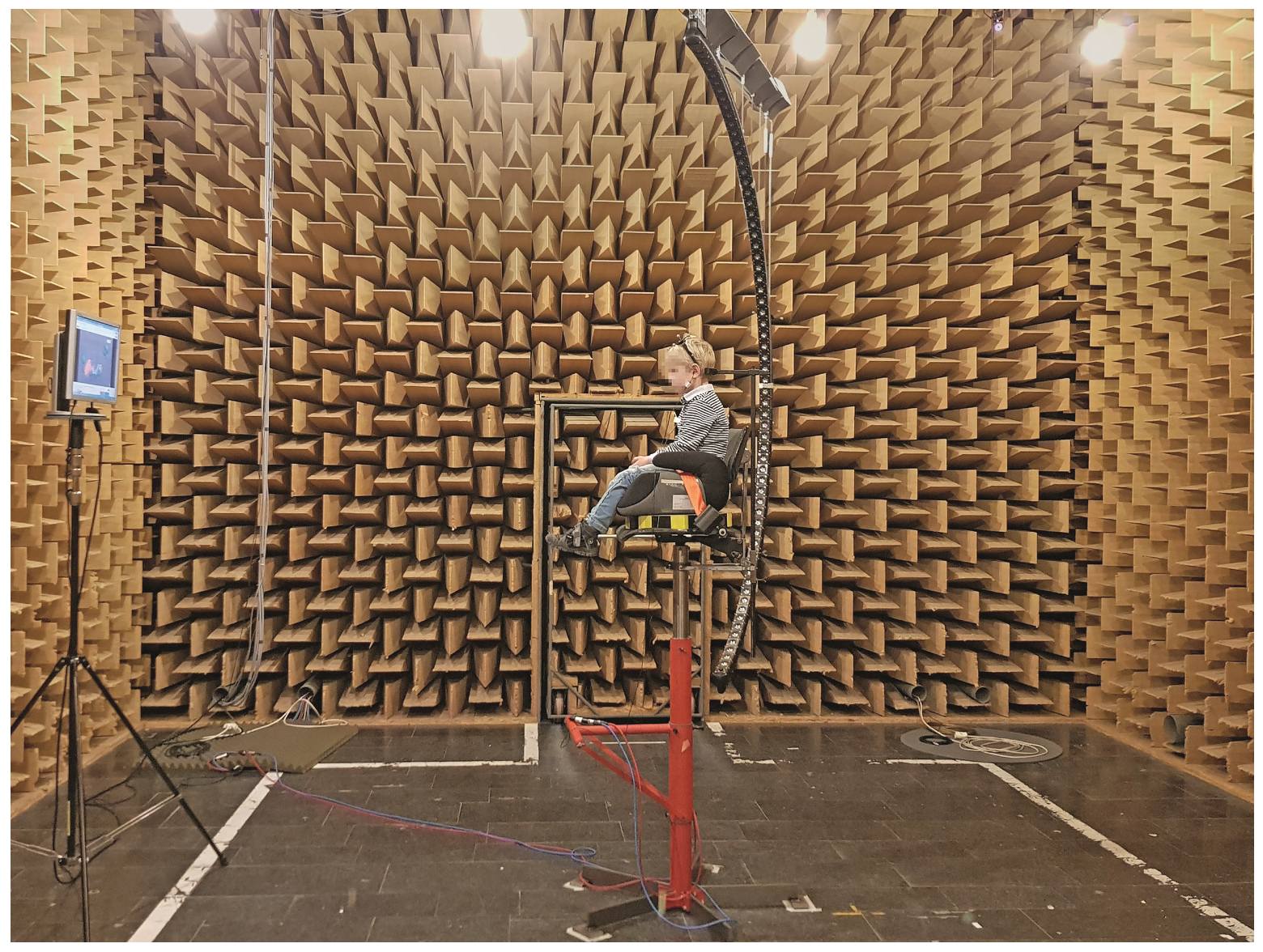

2.2. HRTF Measurement

2.3. HRTF Processing

2.4. 3D-Scan

2.5. Mesh Processing

2.6. HRTF Simulation

2.7. HRTF Distance Metrics

3. Results

3.1. 3D-Models: Mesh Resolution

3.2. 3D-Models: Anthropometric Measurements

3.3. HRTF Measurement: Head Movement

3.4. HRTF Measurements: Comparison to the KEMAR Artificial Head

3.5. HRTF Comparison: Measurement and Simulation

3.6. HRTF Simulation: Effect of the Anonymization of the 3D-Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dimension | Min | P5 | Mean | P95 | Max |
|---|---|---|---|---|---|
| x1: head width (mm) | 114.4 | 133.5 | 142.6 | 150.7 | 153.0 |
| d3: cavum concha height (mm) | 14.1 | 14.8 | 17.25 | 18.8 | 20.9 |
| d5: pinna height (mm) | 51.8 | 52.0 | 55.6 | 60.1 | 63.6 |
| d6: pinna width (mm) | 26.7 | 27.2 | 30.8 | 35.8 | 36.3 |
| d8: cavum concha depth (mm) | 12.9 | 13.7 | 16.0 | 19.0 | 19.6 |
| x12: shoulder width (mm) | 274.2 | 283.3 | 322.7 | 365.4 | 371.8 |
| x14: height (cm) | 119 | 120.3 | 133.2 | 145.8 | 160.0 |

P5 and P95 denote the 5th and 95th percentiles across participants.
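Each row of the table summarizes one dimension across participants by its minimum, 5th percentile, mean, 95th percentile, and maximum. The following minimal Python sketch shows how one such row can be computed from per-participant measurements. The percentile method (linear interpolation between order statistics) is an assumption, since the text does not state which method was used. The function name `summarize` and the three head widths in the usage line are hypothetical and are not data from the database.

```python
import statistics


def summarize(values):
    """Return (min, p5, mean, p95, max) for one anthropometric dimension.

    Assumption: percentiles use linear interpolation between order
    statistics (statistics.quantiles with method="inclusive"); this is
    not necessarily the method behind the published table.
    """
    if len(values) < 2:
        raise ValueError("need measurements from at least two participants")
    # n=20 returns the 19 cut points at 5 %, 10 %, ..., 95 % of the sorted
    # data: the first is the 5th percentile, the last the 95th.
    cuts = statistics.quantiles(values, n=20, method="inclusive")
    return (min(values), cuts[0], statistics.fmean(values), cuts[-1], max(values))


# Hypothetical head widths in mm (illustrative only, not from the database).
head_widths_mm = [150.0, 130.0, 140.0]
print(summarize(head_widths_mm))  # (130.0, 131.0, 140.0, 149.0, 150.0)
```

The standard-library `statistics` module keeps the sketch dependency-free. `numpy.percentile` with its default linear method returns the same 5th and 95th percentile values.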

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Braren, H.S.; Fels, J. Towards Child-Appropriate Virtual Acoustic Environments: A Database of High-Resolution HRTF Measurements and 3D-Scans of Children. Int. J. Environ. Res. Public Health 2022, 19, 324. https://doi.org/10.3390/ijerph19010324
