From Survey Results to a Decision-Making Matrix for Strategic Planning in Healthcare: The Case of Clinical Pathways

Abstract

1. Introduction

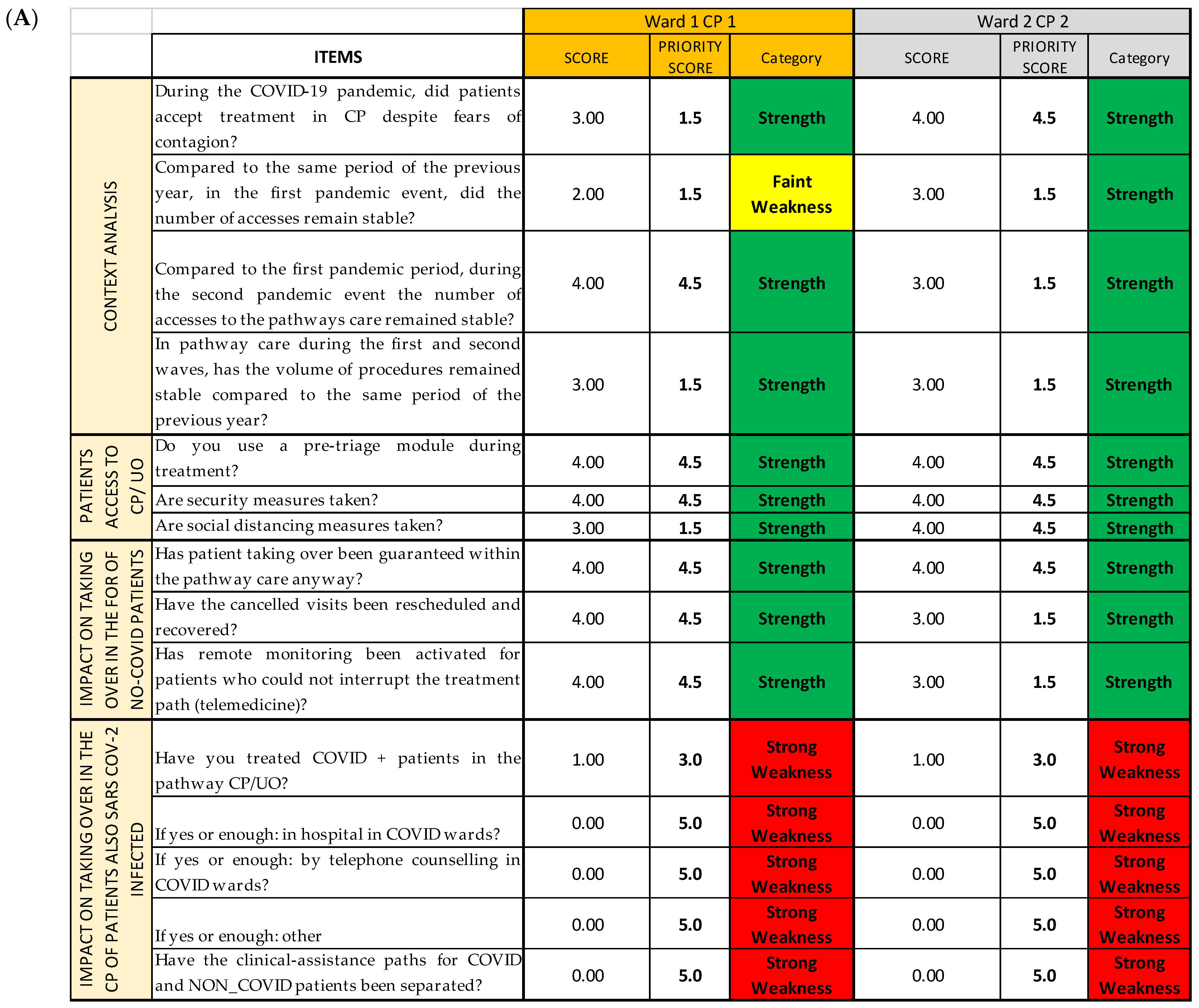

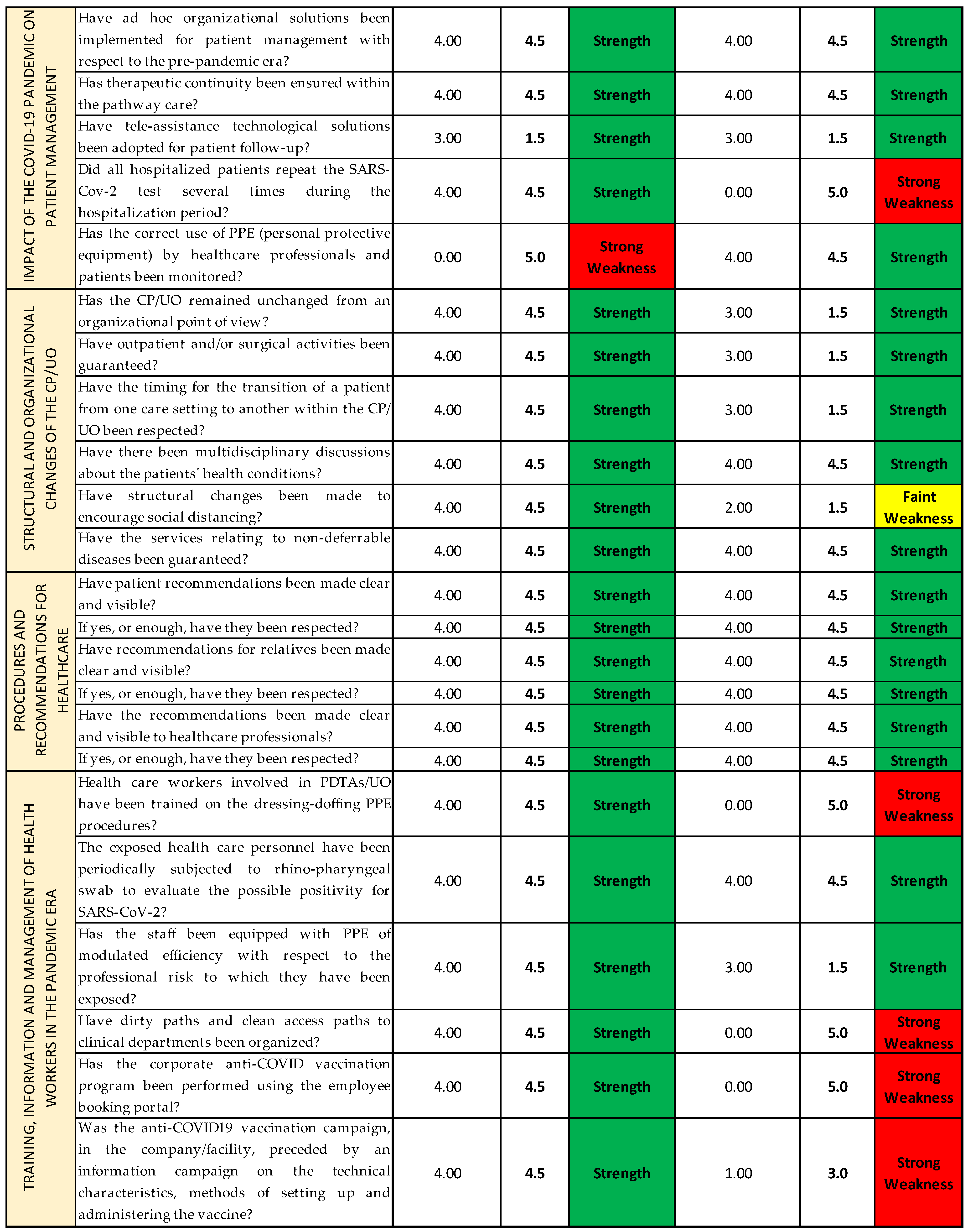

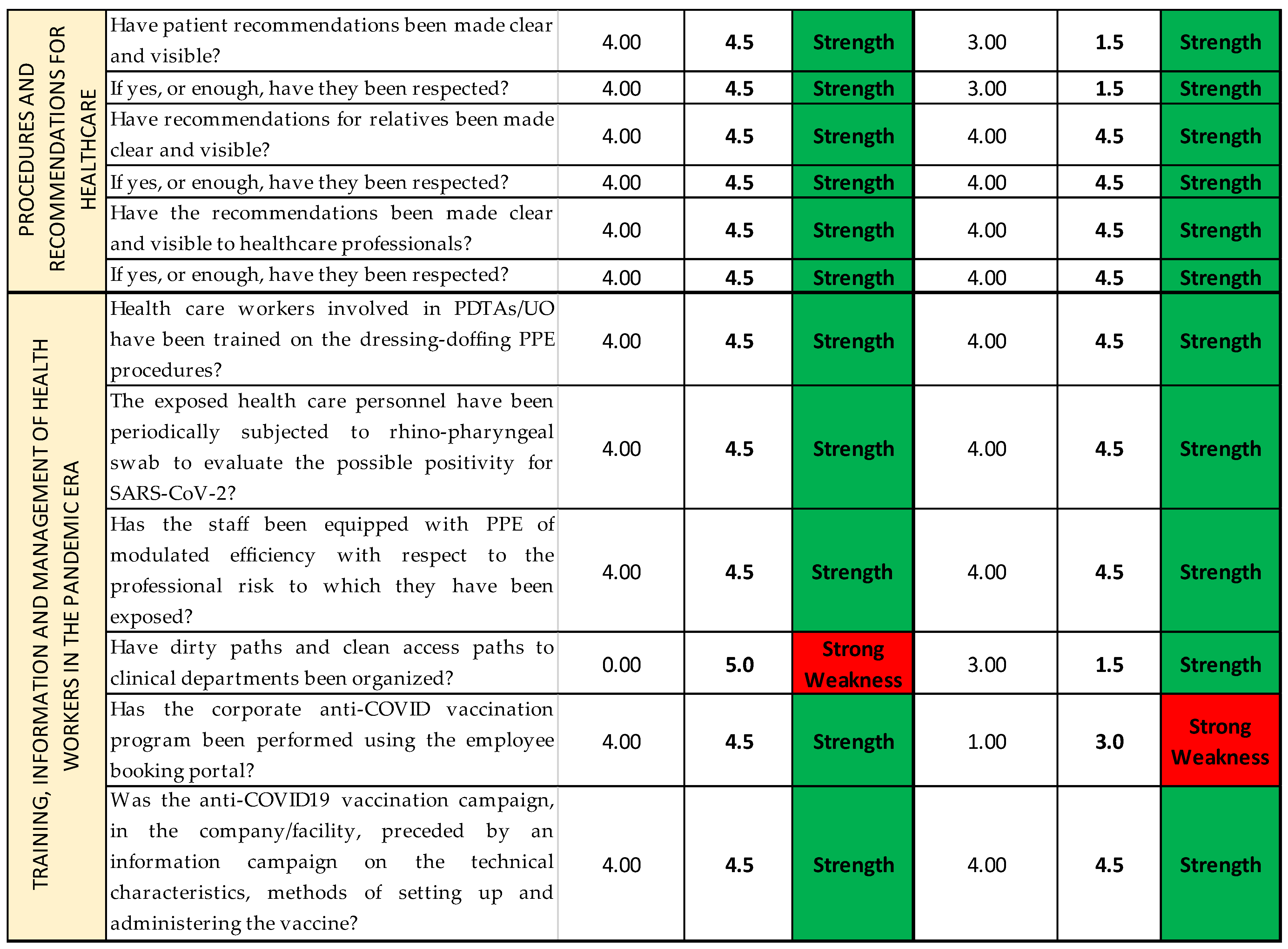

Aim of the Current Study

2. Materials and Methods

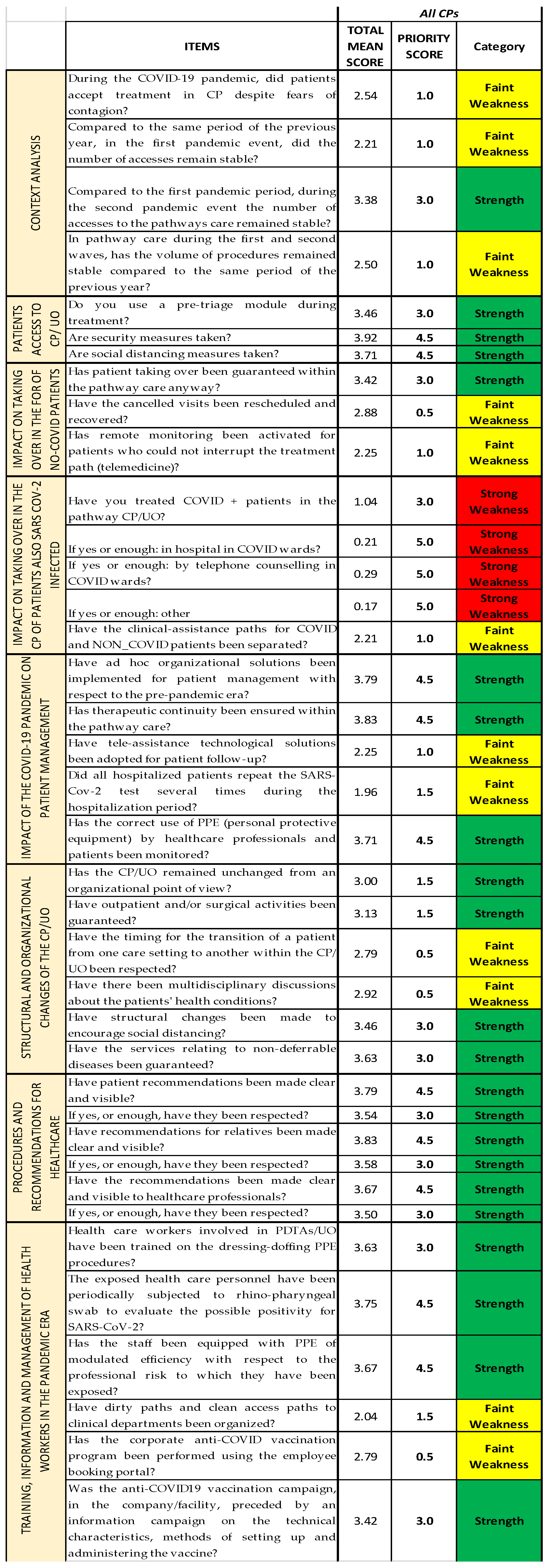

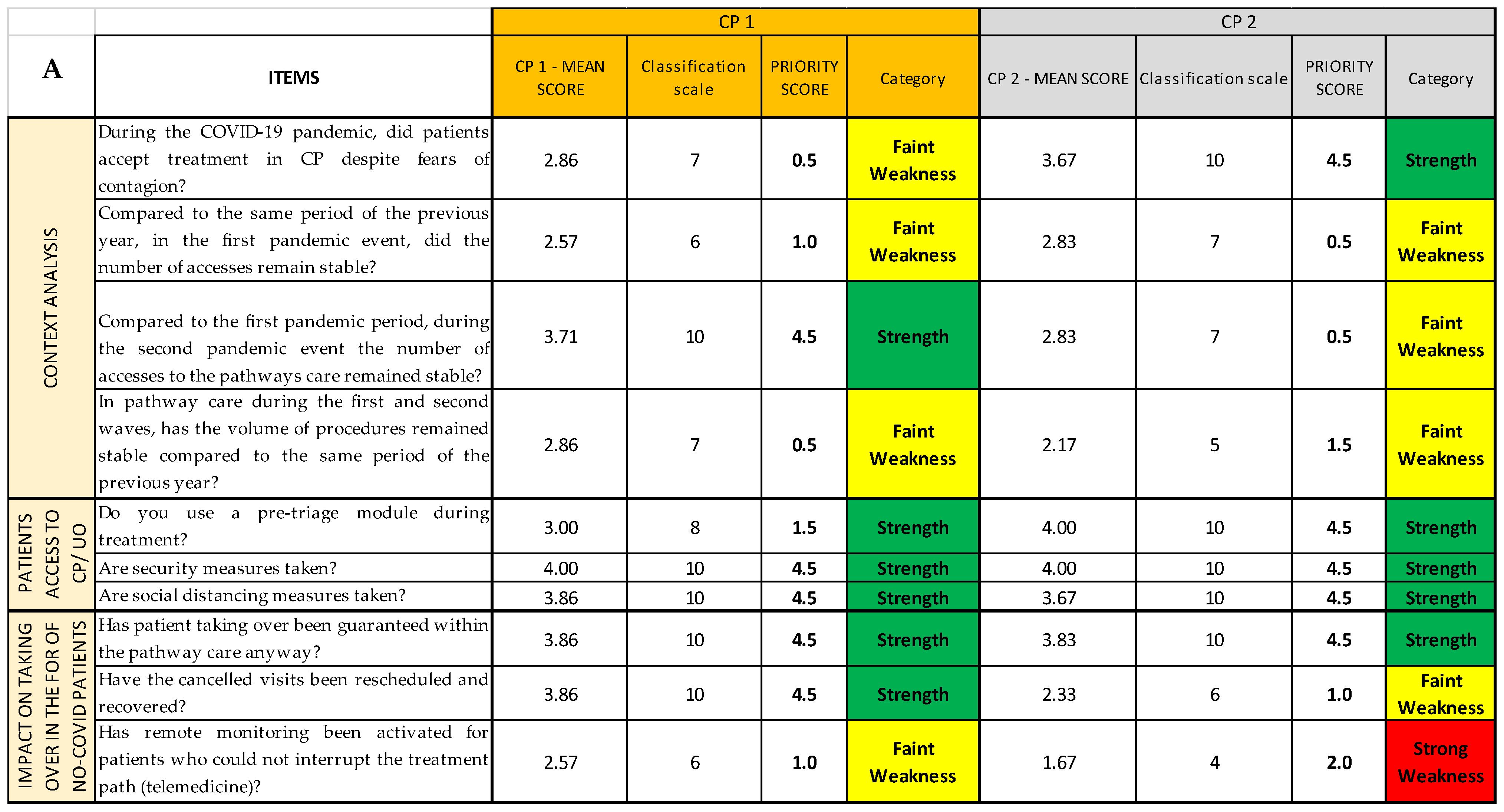

- Context analysis;

- Patients’ access to Care Pathways (CPs)/Operational Units (OUs);

- Impact on the treatment of non-COVID patients in the CPs;

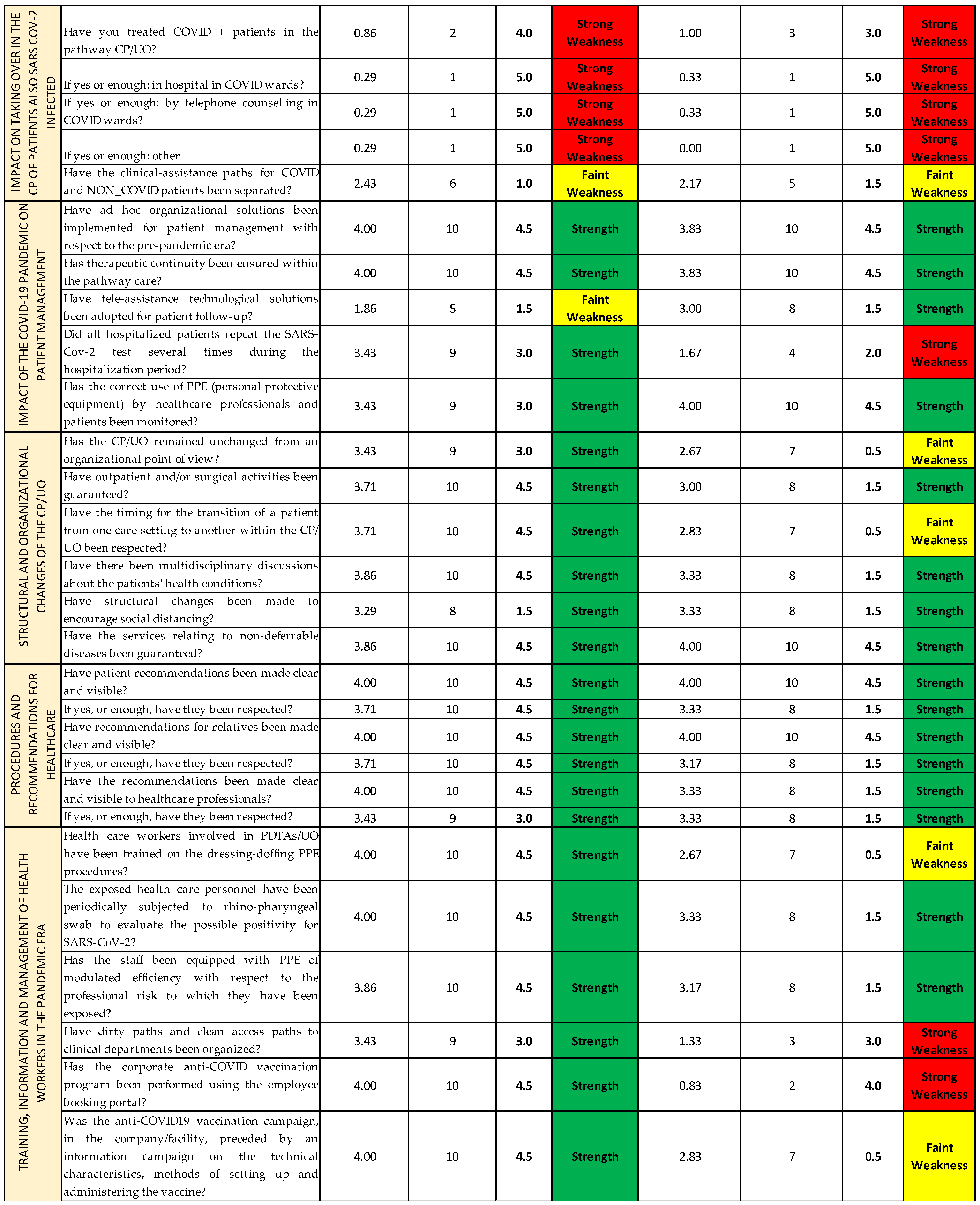

- Impact on the treatment of patients also SARS-CoV-2 infected in the CPs;

- Impact of the COVID-19 pandemic on patient management;

- Structural and organizational changes of the CPs/OUs;

- Procedures and recommendations for healthcare professionals/users;

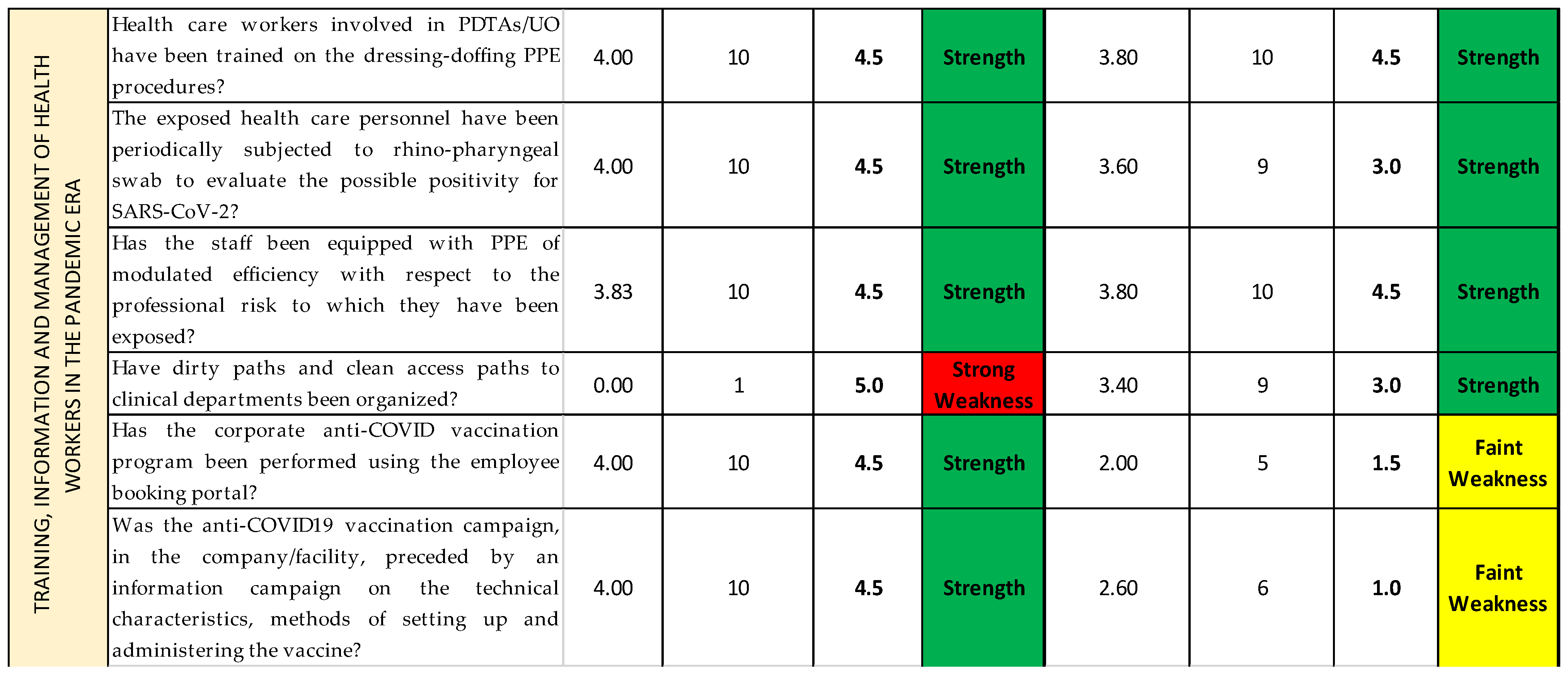

- Training, information, and management of health workers in the pandemic era.

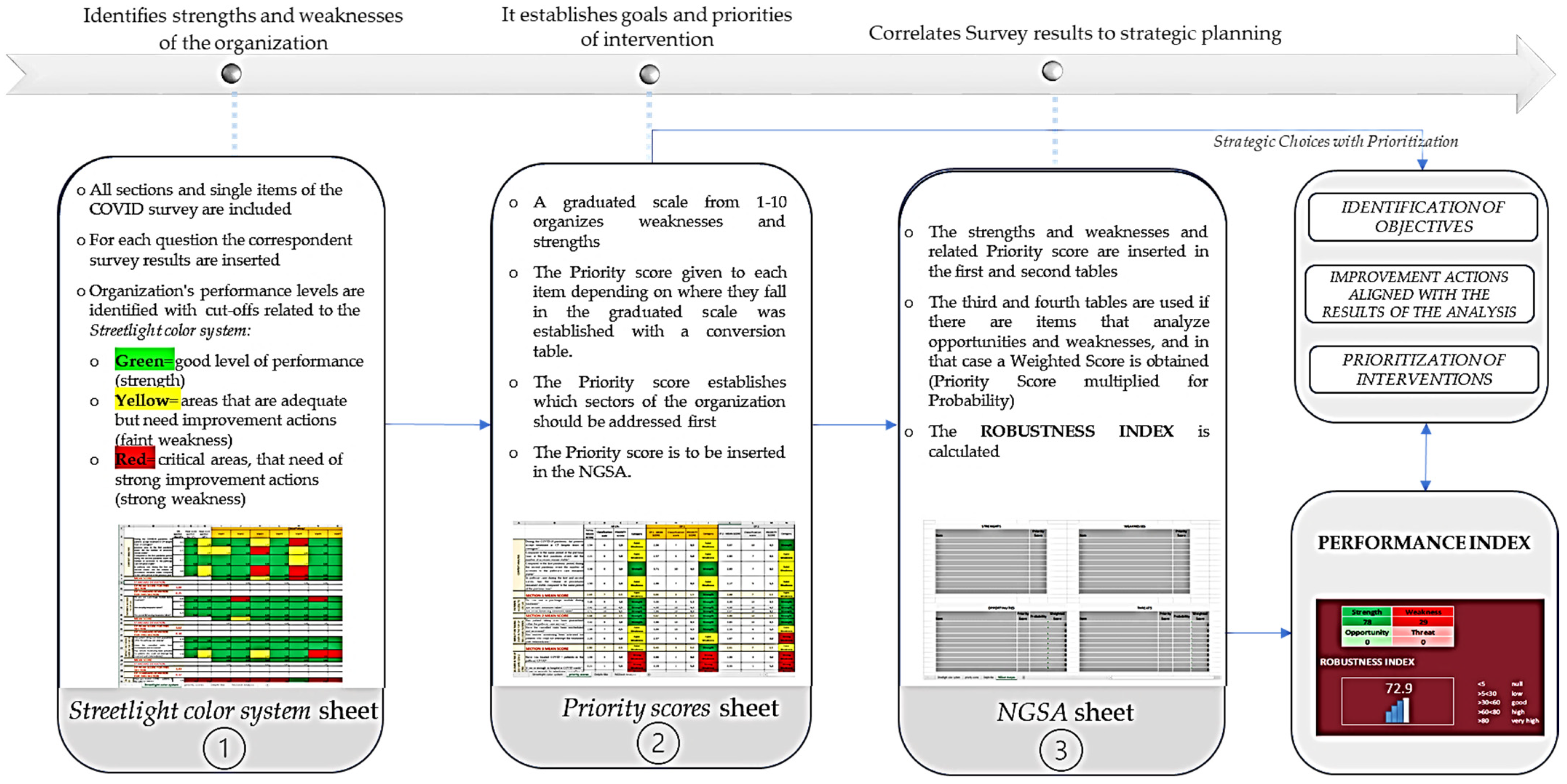

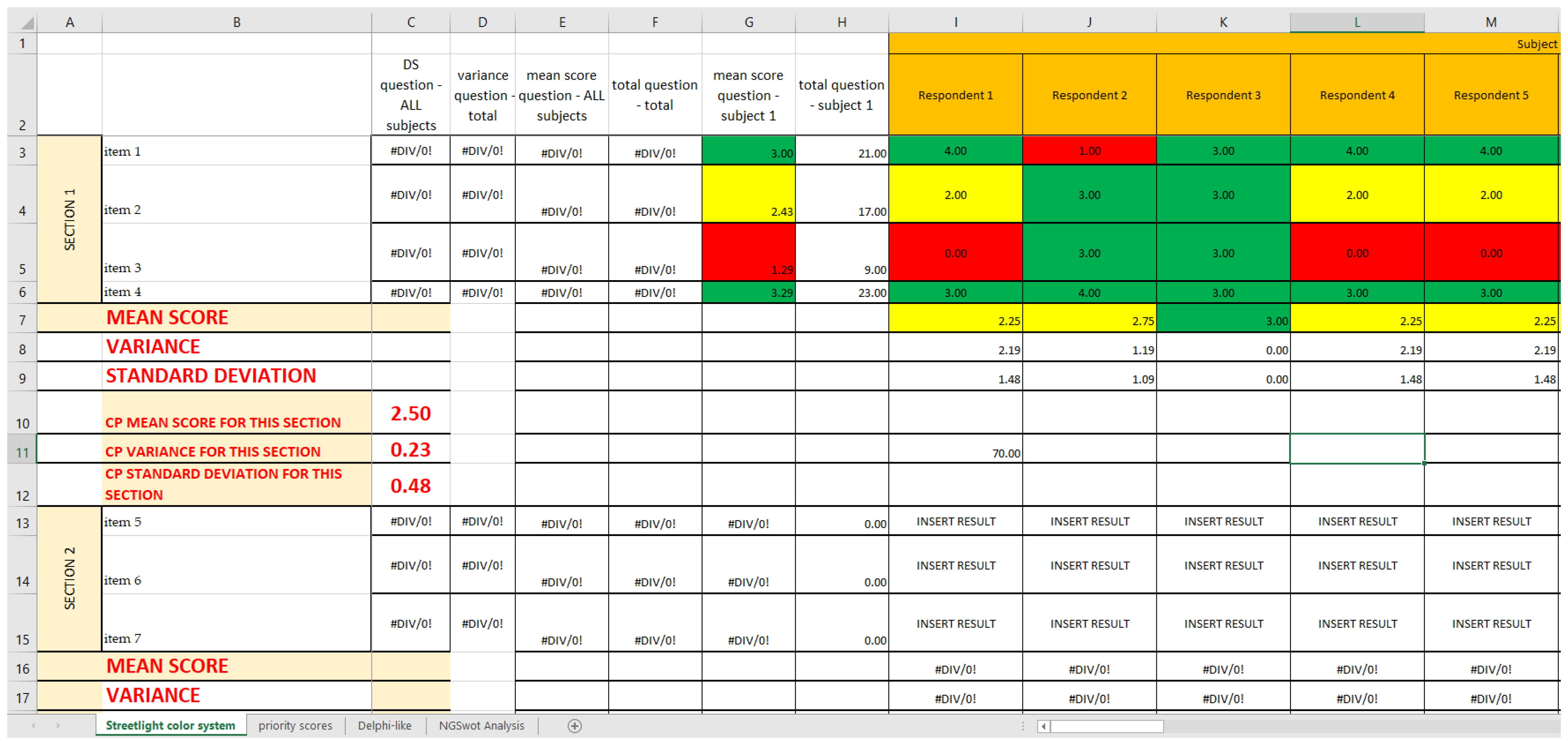

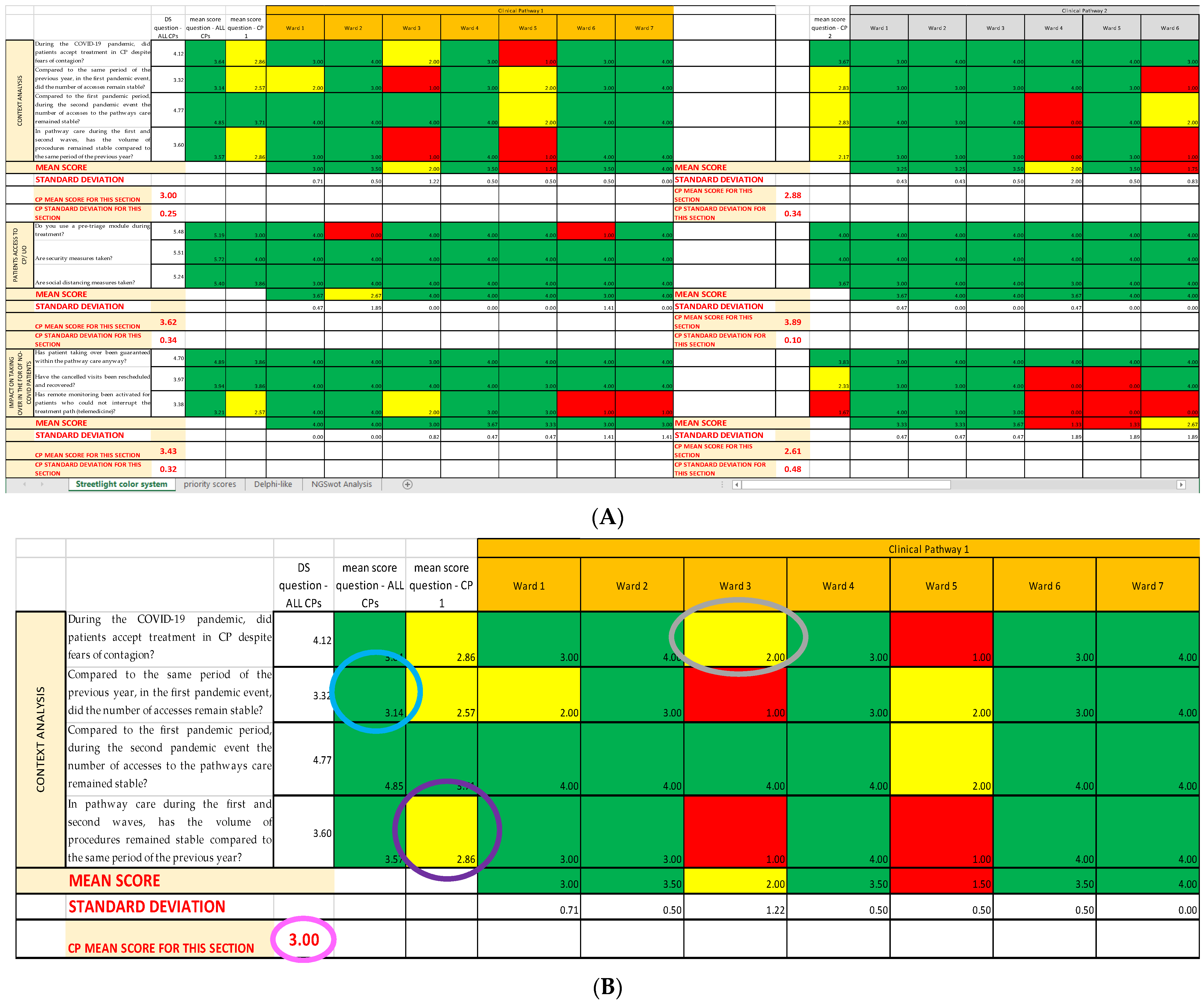

2.1. First Sheet: Streetlight Color System

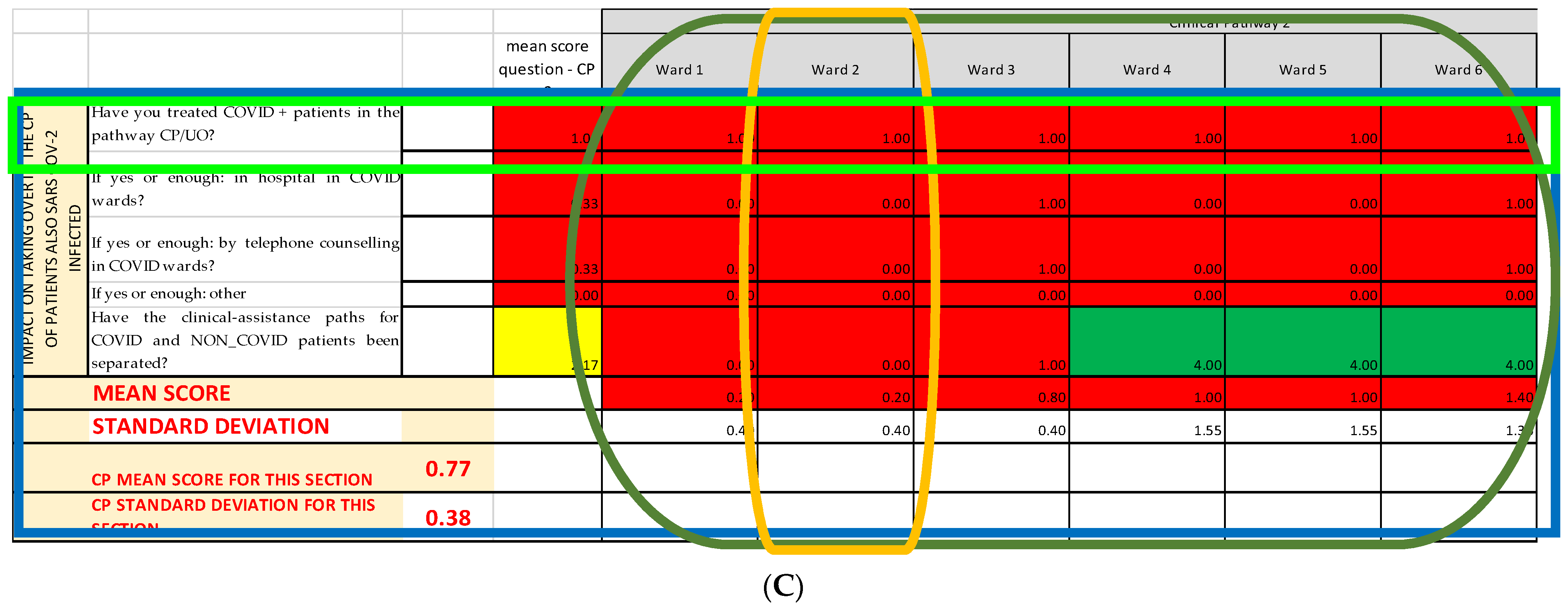

2.2. Second Sheet: Priority Scores

2.2.1. Classification Scale

2.2.2. Conversion Scale

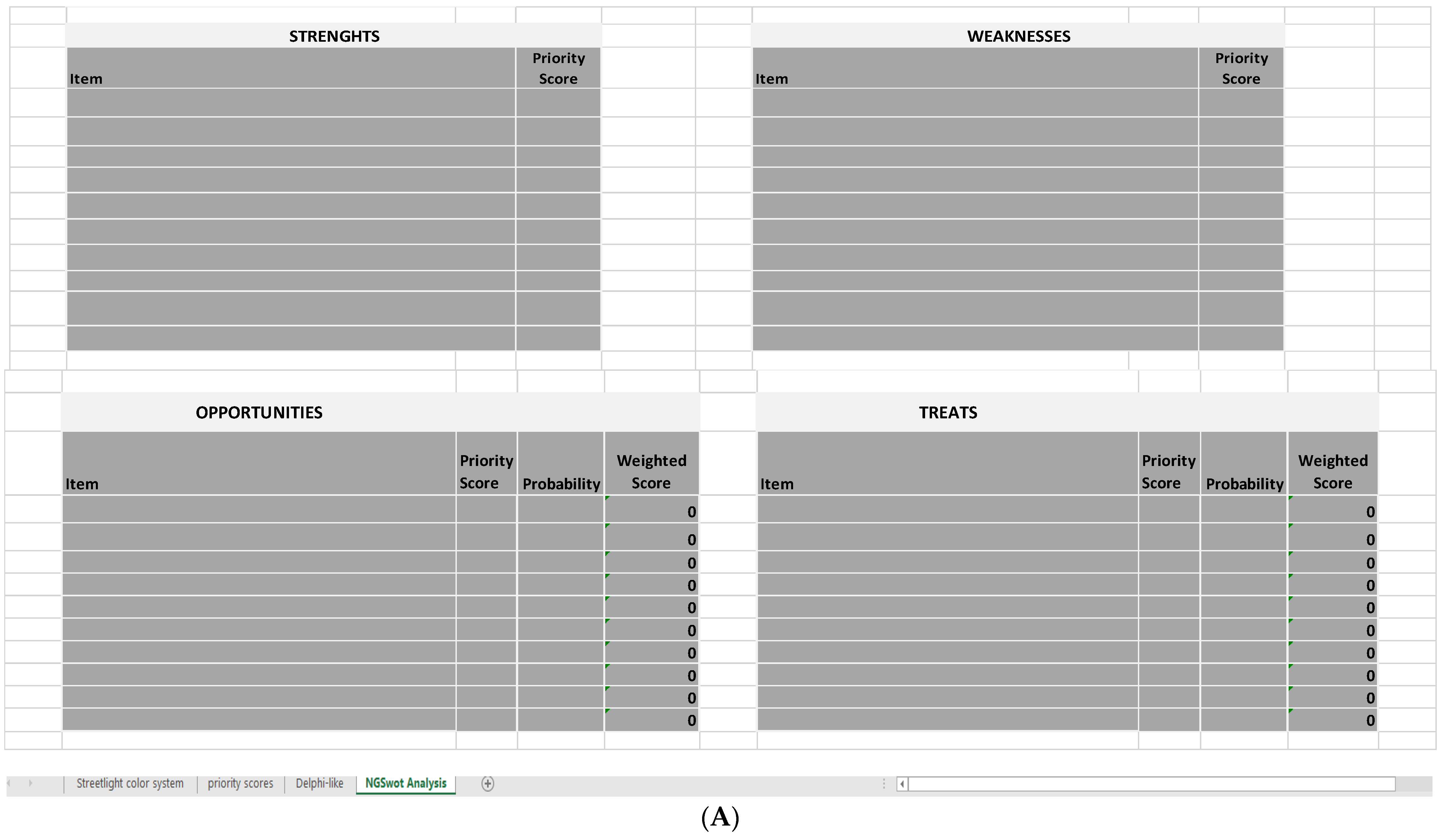

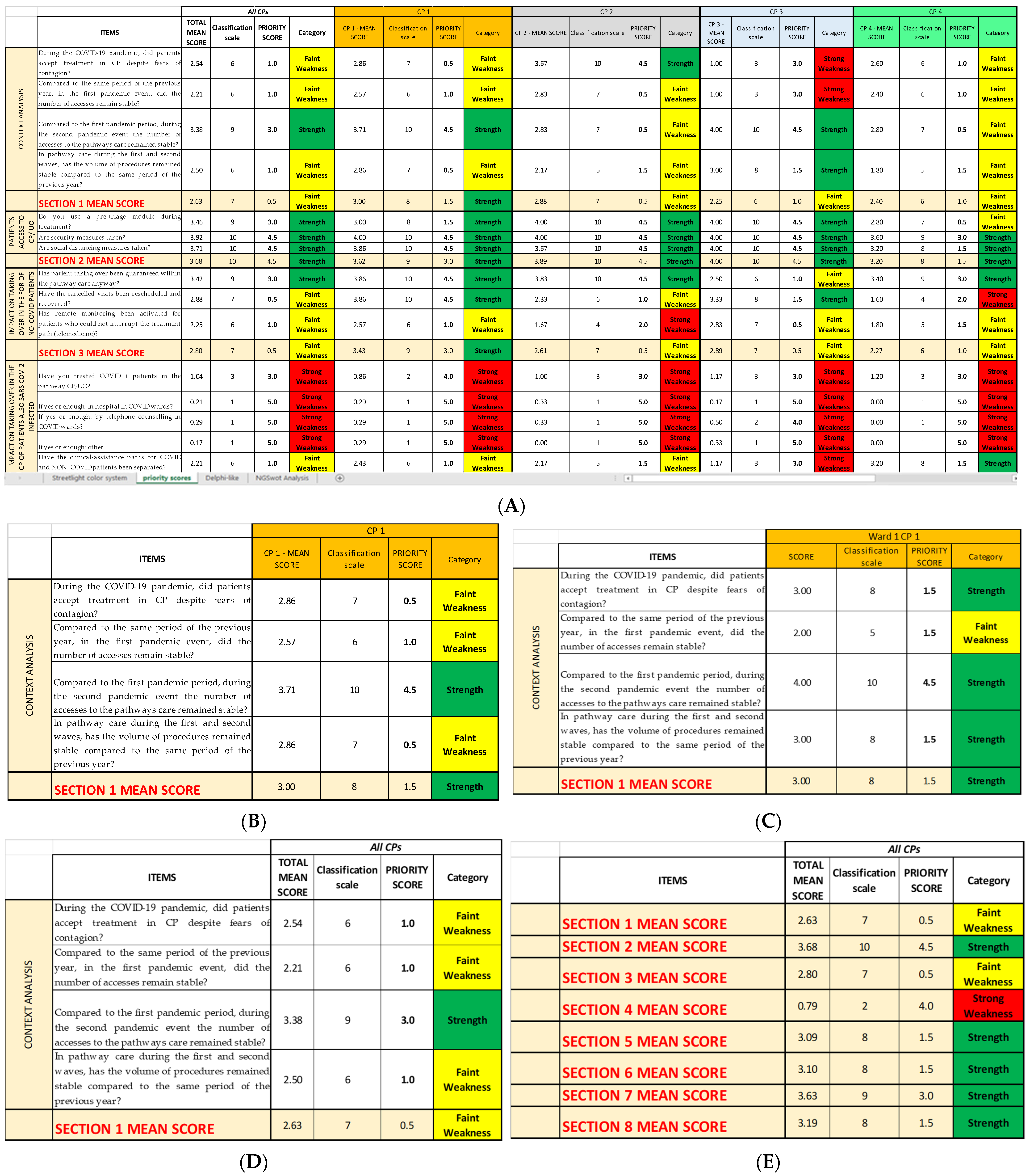

2.3. Last Sheet: Next-Generation SWOT Analysis

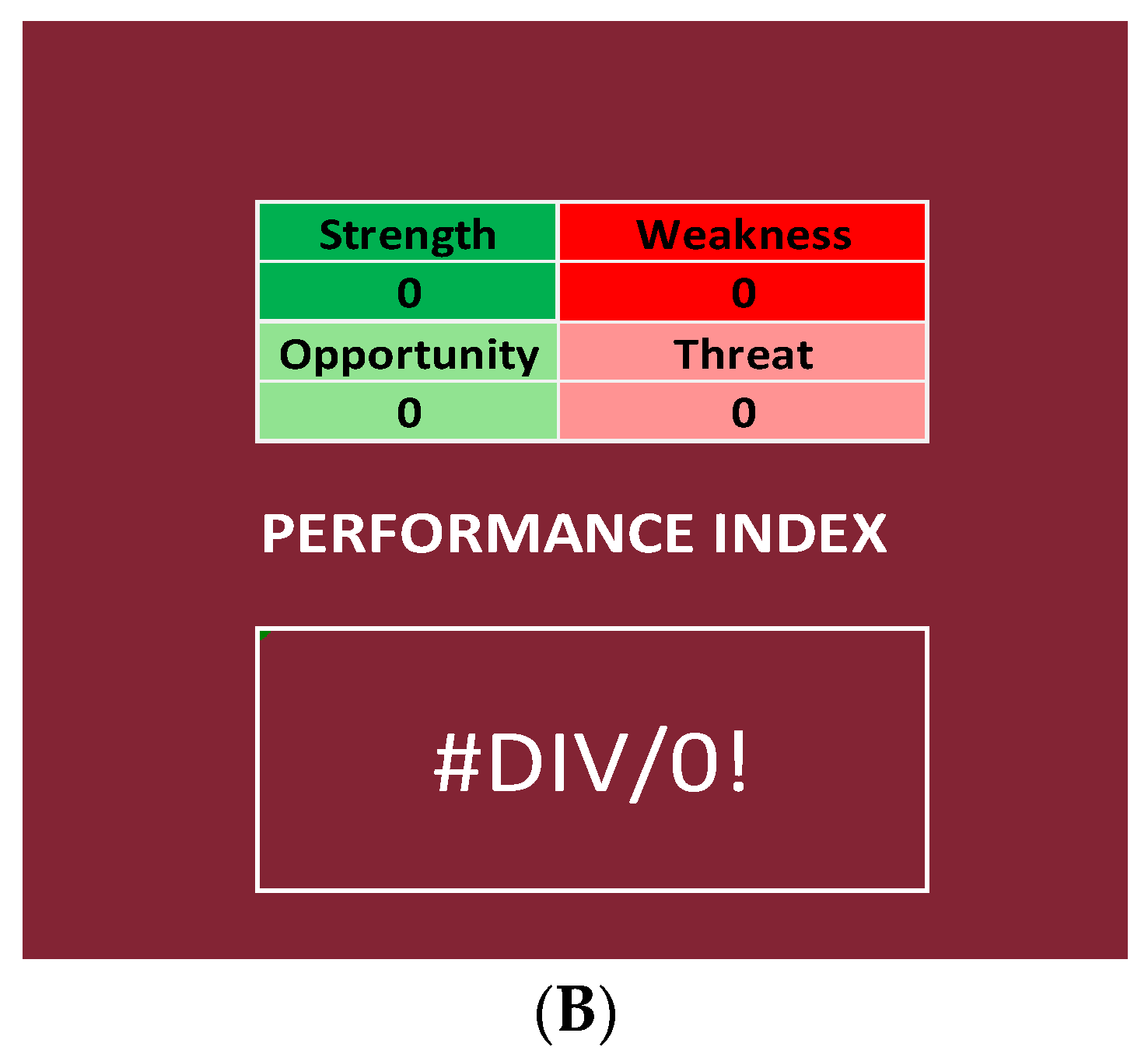

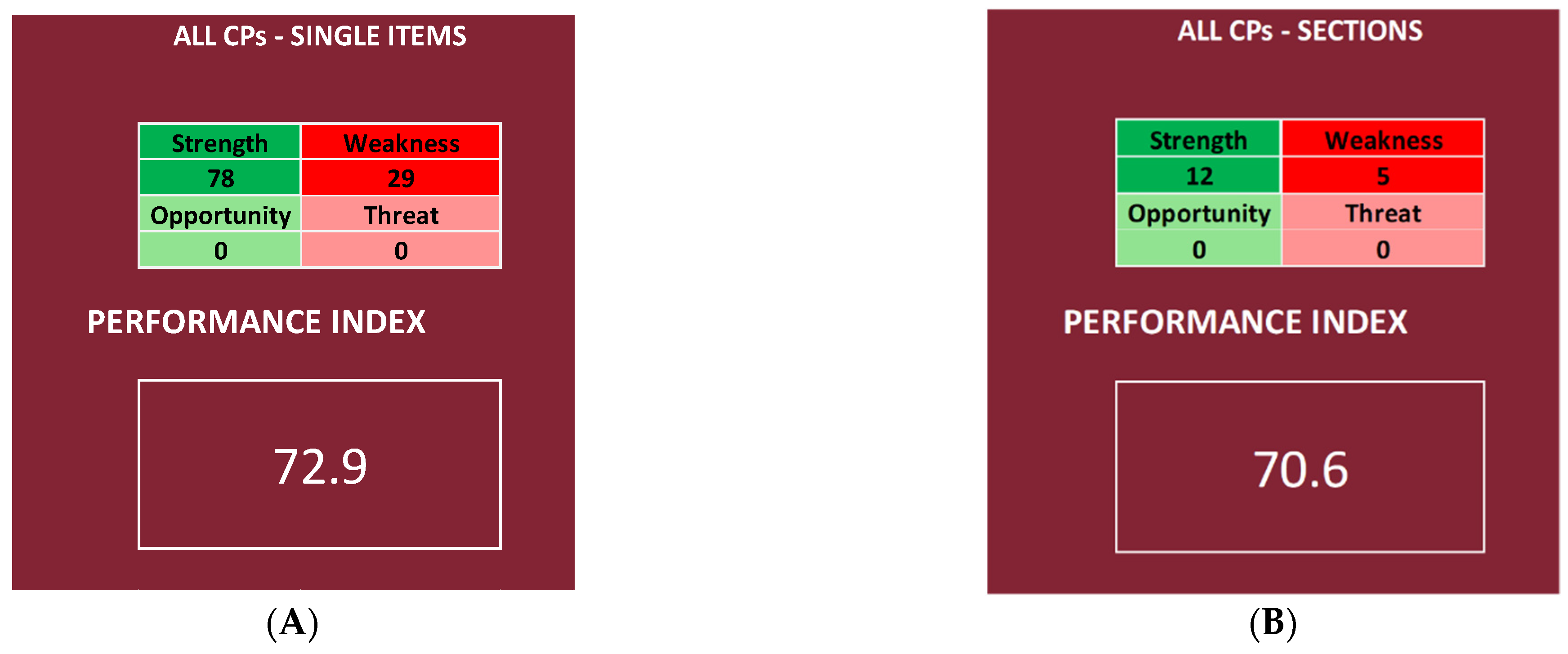

2.3.1. Performance Index

2.3.2. Range of the Performance Index

- Null if below the tenth percentile;
- Low if between the tenth percentile and the first quartile;
- Good if between the first and the second quartile;
- High if between the second and the third quartile;
- Very high if between the third and the fourth quartile.
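A minimal sketch of this banding rule in Python, assuming the reference distribution is simply a list of performance-index values and that percentiles are computed with the standard library's `statistics.quantiles` (default "exclusive" method). The study does not say which percentile estimator it used, and placing a value that falls exactly on a cut-off in the higher band is likewise an assumption. The names `range_cutoffs` and `performance_range` are hypothetical.

```python
from statistics import quantiles


def range_cutoffs(values):
    """Return (P10, Q1, Q2, Q3) for a reference distribution of performance-index values.

    Assumption: statistics.quantiles with its default "exclusive" method;
    the original study does not state its percentile estimator.
    """
    p10 = quantiles(values, n=10)[0]      # first decile = tenth percentile
    q1, q2, q3 = quantiles(values, n=4)   # the three quartile cut points
    return p10, q1, q2, q3


def performance_range(value, cutoffs):
    """Map a performance-index value onto the five qualitative bands.

    Assumption: a value equal to a cut-off is placed in the higher band.
    """
    p10, q1, q2, q3 = cutoffs
    if value < p10:
        return "Null"
    if value < q1:
        return "Low"
    if value < q2:
        return "Good"
    if value < q3:
        return "High"
    return "Very high"  # from the third quartile up to the maximum
```

For example, with the hypothetical reference values 1 to 100 the cut-offs are 10.1, 25.25, 50.5 and 75.75, so `performance_range(60, range_cutoffs(list(range(1, 101))))` returns "High".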

2.4. COVID Survey and SPRISS

3. Results

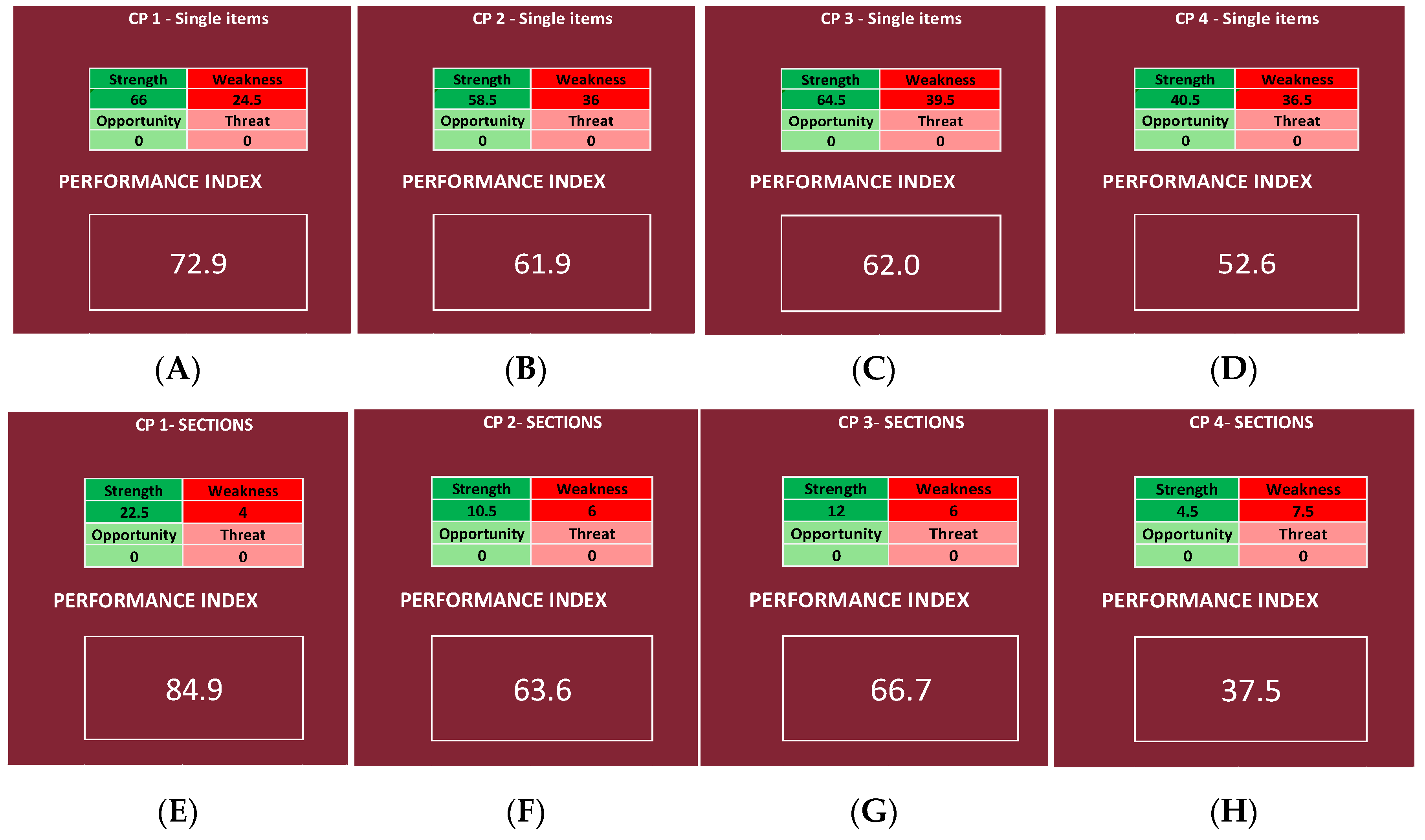

- For the items:
    - Null if below 52.79% (tenth percentile);
    - Low if between 52.80% and 57.55% (between the tenth percentile and the first quartile);
    - Good if between 57.56% and 62.83% (between the first and the second quartile);
    - High if between 62.84% and 68.12% (between the second and the third quartile);
    - Very high if between 68.13% and 100% (between the third and the fourth quartile).
- For the Questionnaire Sections:
    - Null if below 51.20% (tenth percentile);
    - Low if between 51.21% and 59.92% (between the tenth percentile and the first quartile);
    - Good if between 59.93% and 69.61% (between the first and the second quartile);
    - High if between 69.62% and 79.30% (between the second and the third quartile);
    - Very high if between 79.31% and 100% (between the third and the fourth quartile).
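The published cut-offs can be applied directly. The sketch below encodes both threshold sets, assuming each band starts at the lower bound listed above and that anything under the "Low" lower bound counts as "Null"; `classify_index` and its `kind` argument are hypothetical names. Applied to the reported performance indices, it reproduces the Range column of the performance-index table (for example, 63.6% for the Ward 4 items is "High", while 65.2% for the Ward 2 sections is "Good").

```python
# Lower bound (in %) of each band, highest first, as reported in the Results.
ITEM_BANDS = [(68.13, "Very high"), (62.84, "High"), (57.56, "Good"), (52.80, "Low")]
SECTION_BANDS = [(79.31, "Very high"), (69.62, "High"), (59.93, "Good"), (51.21, "Low")]


def classify_index(value, kind):
    """Return the qualitative range of a performance index given in percent.

    kind is "items" or "sections" (hypothetical labels for the two threshold sets).
    Values below the "Low" lower bound are treated as "Null" (assumption).
    """
    if kind == "items":
        bands = ITEM_BANDS
    elif kind == "sections":
        bands = SECTION_BANDS
    else:
        raise ValueError("kind must be 'items' or 'sections'")
    if not 0.0 <= value <= 100.0:
        raise ValueError("value must be a percentage between 0 and 100")
    # Walk the bands from the highest lower bound down; the first match wins.
    for lower, label in bands:
        if value >= lower:
            return label
    return "Null"
```

Because the bands are stored as data, a different set of percentile cut-offs can be swapped in without touching the lookup logic.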

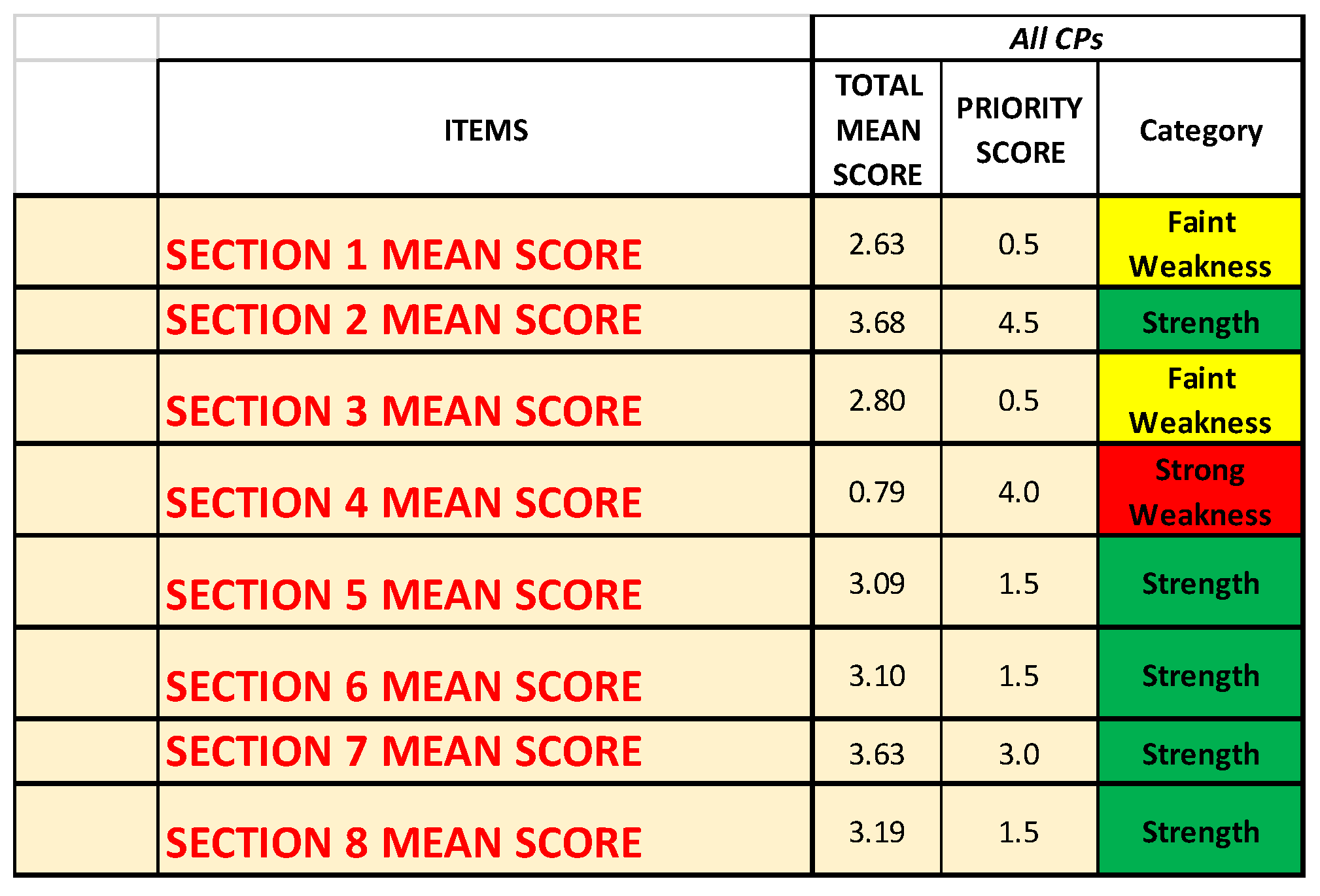

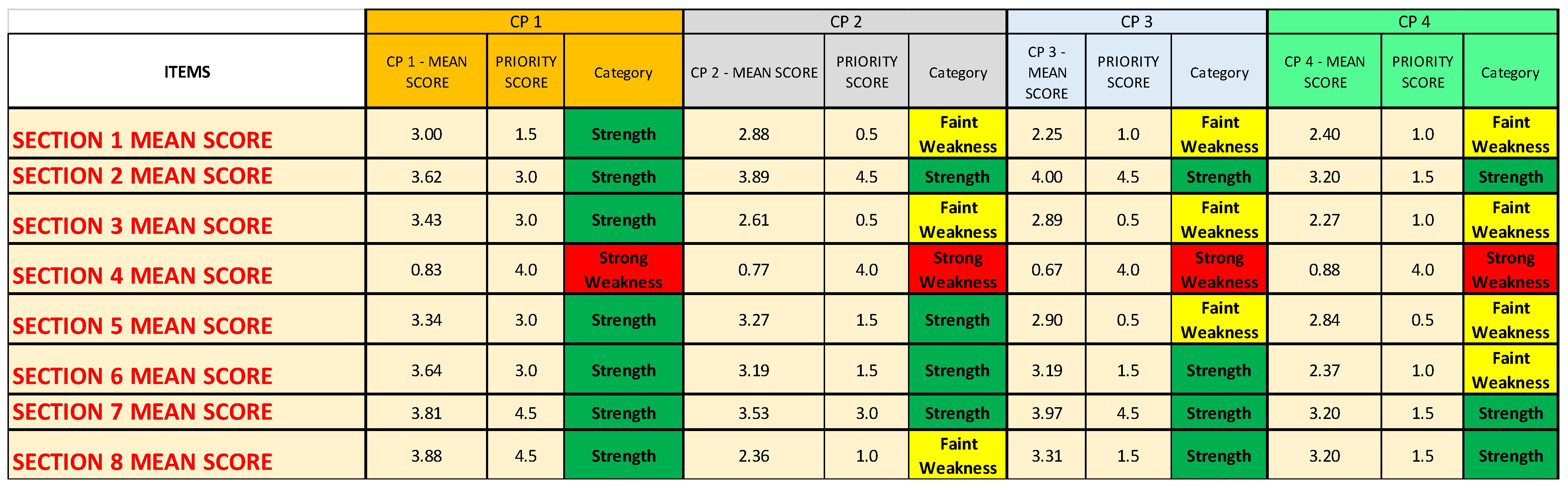

3.1. All CPs

3.2. Single CPs

- For all four analyzed CPs, Questionnaire Section 4 (“Impact on the treatment of patients also SARS-CoV-2 infected in the CPs”) is identified as a strong weakness;

- Questionnaire Section 5 (“Impact of the COVID-19 pandemic on patient management”) is identified as a faint weakness for CP3 and CP4;

- Questionnaire Section 6 (“Structural and organizational changes of the CP/OU”) is identified as a faint weakness only for CP4;

- Questionnaire Section 8 (“Training, information, and management of health workers in the pandemic era”) is identified as a faint weakness only for CP2.

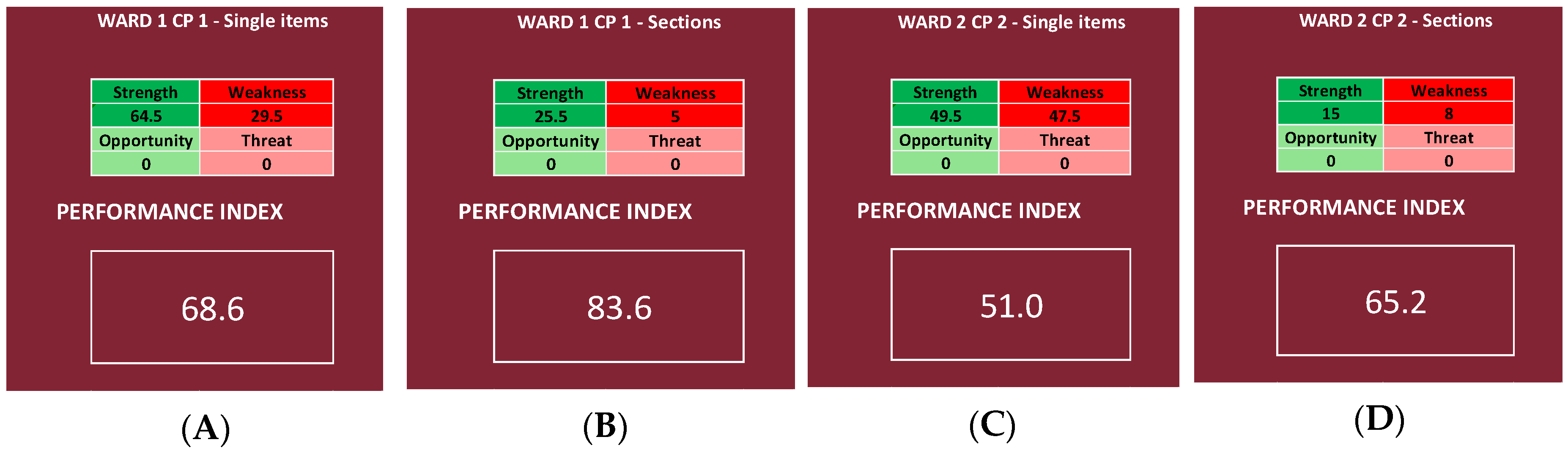

3.3. Single Wards

4. Discussion

4.1. Limits of the Study

4.2. Further Prospective Work

4.3. Application

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hailemariam, M.; Bustos, T.; Montgomery, B.; Barajas, R.; Evans, L.B.; Drahota, A. Evidence-based intervention sustainability strategies: A systematic review. Implement. Sci. 2019, 14, 57.

- Rao, G.N.; Philips, A.P.; Benegal, V. Costs, Prohibition, and Need for Responsive Public Health Evidence. Indian J. Community Med. 2020, 45, 381.

- Scholte, M.; Van Dulmen, S.A.; Neeleman-Van der Steen, C.W.M.; Van der Wees, P.J.; Nijhuis-van der Sanden, M.W.G.; Braspenning, J. Data extraction from electronic health records (EHRs) for quality measurement of the physical therapy process: Comparison between EHR data and survey data. BMC Med. Inform. Decis. Mak. 2016, 16, 141.

- Donabedian, A. Evaluating the quality of medical care. Milbank Q. 2005, 83, 691–729.

- Orton, L.; Lloyd-Williams, F.; Taylor-Robinson, D.; O’Flaherty, M.; Capewell, S. The Use of Research Evidence in Public Health Decision Making Processes: Systematic Review. PLoS ONE 2011, 6, e21704.

- Klein, R. Evidence and policy: Interpreting the Delphic oracle. J. R. Soc. Med. 2003, 96, 429–431.

- Gallè, F.; Sabella, E.A.; Roma, P.; Ferracuti, S.; Da Molin, G.; Diella, G.; Montagna, M.T.; Orsi, G.B.; Liguori, G.; Napoli, C. Knowledge and Lifestyle Behaviors Related to COVID-19 Pandemic in People over 65 Years Old from Southern Italy. Int. J. Environ. Res. Public Health 2021, 18, 10872.

- Li, A.H.; Thomas, S.M.; Farag, A.; Duffett, M.; Garg, A.X.; Naylor, K.L. Quality of Survey Reporting in Nephrology Journals: A Methodologic Review. Clin. J. Am. Soc. Nephrol. 2014, 9, 2089–2094.

- Giraldi, G.; Montesano, M.; Napoli, C.; Frati, P.; La Russa, R.; Santurro, A.; Scopetti, M.; Orsi, G.B. Healthcare-Associated Infections Due to Multidrug-Resistant Organisms: A Surveillance Study on Extra Hospital Stay and Direct Costs. Curr. Pharm. Biotechnol. 2019, 20, 643–652.

- Gupta, B.; Ahluwalia, P. Need for a central survey-based registry-for effective conduct of survey-based medical research. Indian J. Anaesth. 2021, 65, 768–770.

- Puhan, M.A.; Soesilo, I.; Guyatt, G.H.; Schünemann, H.J. Combining scores from different patient reported outcome measures in meta-analyses: When is it justified? Health Qual. Life Outcomes 2006, 4, 94.

- Anoke, S.C.; Mwai, P.; Jeffery, C.; Valadez, J.J.; Pagano, M. Comparing two survey methods of measuring health-related indicators: Lot Quality Assurance Sampling and Demographic Health Surveys. Trop. Med. Int. Health 2015, 20, 1756–1770.

- Chan, M.; Kazatchkine, M.; Lob-Levyt, J.; Obaid, T.; Schweizer, J.; Sidibe, M.; Veneman, A.; Yamada, T. Meeting the Demand for Results and Accountability: A Call for Action on Health Data from Eight Global Health Agencies. PLoS Med. 2010, 7, e1000223.

- Piane, M.; Bianco, L.; Mancini, R.; Fornelli, P.; Gabriele, A.; Medici, F.; Battista, C.; Greco, S.; Croce, G.; Franceschetti, L.; et al. Impact of the COVID-19 Pandemic on Clinical Pathways for Non-SARS-CoV-2 Related Diseases in the Lazio Region, Italy. Int. J. Environ. Res. Public Health 2022, 19, 635.

- Drinkwater, B.L. A comparison of the direction-of-perception technique with the Likert method in the measurement of attitudes. J. Soc. Psychol. 1965, 67, 189–196.

- Beran, D.; Perone, S.A.; Perolini, M.C.; Francois, C.; Chopard, P.; Haller, D.M.; Bausch, F.J.; Maisonneuve, H.; Perone, N.; Gastaldi, G. Beyond the virus: Ensuring continuity of care for people with diabetes during COVID-19. Prim. Care Diabetes 2021, 15, 16–17.

- Capalbo, C.; Aceti, A.; Simmaco, M.; Bonfini, R.; Rocco, M.; Ricci, A.; Napoli, C.; Rocco, M.; Alfonsi, V.; Teggi, A.; et al. The Exponential Phase of the COVID-19 Pandemic in Central Italy: An Integrated Care Pathway. Int. J. Environ. Res. Public Health 2020, 17, 3792.

- Indini, A.; Aschele, C.; Cavanna, L.; Clerico, M.; Daniele, B.; Fiorentini, G.; Fioretto, L.; Giordano, M.; Montesarchio, V.; Ortega, C.; et al. Reorganisation of medical oncology departments during the novel coronavirus disease-19 pandemic: A nationwide Italian survey. Eur. J. Cancer 2020, 132, 17–23.

- Indini, A.; Pinotti, G.; Artioli, F.; Aschele, C.; Bernardi, D.; Butera, A.; Defraia, E.; Fasola, G.; Gamucci, T.; Giordano, M.; et al. Management of patients with cancer during the COVID-19 pandemic: The Italian perspective on the second wave. Eur. J. Cancer 2021, 148, 112–116.

- Murris, F.; Huchon, C.; Zilberman, S.; Dabi, Y.; Phalippou, J.; Canlorbe, G.; Ballester, M.; Gauthier, T.; Avigdor, S.; Cirier, J.; et al. Impact of the first lockdown for coronavirus 19 on breast cancer management in France: A multicentre survey. J. Gynecol. Obstet. Hum. Reprod. 2021, 50, 102166.

- Fersia, O.; Bryant, S.; Nicholson, R.; McMeeken, K.; Brown, C.; Donaldson, B.; Jardine, A.; Grierson, V.; Whalen, V.; Mackay, A. The impact of the COVID-19 pandemic on cardiology services. Open Heart 2020, 7, e001359.

- García-Olmos, L.; Rodríguez-Salvanés, F.; Batlle-Pérez, M.; Aguilar-Torres, R.; Porro-Fernández, C.; García-Cabello, A.; Carmona, M.; Ruiz-Alonso, S.; Ruiz-Alonso, S.; Garrido-Elustondo, S.; et al. Development and validation of a risk stratification model for prediction of disability and hospitalisation in patients with heart failure: A study protocol. BMJ Open 2017, 7, e014840.

- Chen, I.H.; Kuo, S.F.; Lin, Y.K.; Huang, T.W. Knowledge of and Barriers to Palliative Care Perceived by Healthcare Providers before and after Promotion of the Patient Autonomy Act: A Cross-Sectional Study. Int. J. Environ. Res. Public Health 2022, 19, 3884.

- Vincerževskiene, I.; Jasilionis, D.; Austys, D.; Stukas, R.; Kaceniene, A.; Smailyte, G. Education predicts cervical cancer survival: A Lithuanian cohort study. Eur. J. Public Health 2017, 27, 421–424.

- Trimarchi, L.; Caruso, R.; Magon, G.; Odone, A.; Arrigoni, C. Clinical pathways and patient-related outcomes in hospital-based settings: A systematic review and meta-analysis of randomized controlled trials. Acta Biomed. 2021, 92, e2021093.

- Centre for Policy on Ageing (CPA). The Effectiveness of Care Pathways in Health and Social Care. 2014. Available online: http://www.cpa.org.uk/information/reviews/CPA-Rapid-Review-Effectiveness-of-care-pathways.pdf (accessed on 18 June 2022).

- Banerjee, M.; Chakraborty, S.; Pal, R. Diabetes self-management amid COVID-19 pandemic. Diabetes Metab. Syndr. 2020, 14, 351–354.

- Peric, S.; Stulnig, T.M. Diabetes and COVID-19: Disease-Management-People. Wien Klin. Wochenschr. 2020, 132, 356–361.

- Caggiano, G.; Napoli, C.; Coretti, C.; Lovero, G.; Scarafile, G.; De Giglio, O.; Montagna, M.T. Mold contamination in a controlled hospital environment: A 3-year surveillance in southern Italy. BMC Infect. Dis. 2014, 14, 595.

- Pasquarella, C.; Veronesi, L.; Castiglia, P.; Liguori, G.; Montagna, M.T.; Napoli, C.; Rizzetto, R.; Torre, I.; Masia, M.D.; Di Onofrio, V. Italian multicentre study on microbial environmental contamination in dental clinics: A pilot study. Sci. Total Environ. 2010, 408, 4045–4051.

- Iatta, R.; Napoli, C.; Borghi, E.; Montagna, M.T. Rare mycoses of the oral cavity: A literature epidemiologic review. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endod. 2009, 108, 647–655.

- Masia, M.D.; Dettori, M.; Deriu, G.M.; Soddu, S.; Deriu, M.; Arghittu, A.; Azara, A.; Castiglia, P. Microbial Monitoring as a Tool for Preventing Infectious Risk in the Operating Room: Results of 10 Years of Activity. Atmosphere 2021, 12, 19.

- Macinko, J.; Silver, D. Improving State Health Policy Assessment: An Agenda for Measurement and Analysis. Am. J. Public Health 2012, 102, 1697–1705.

- Bosa, I.; Castelli, A.; Castelli, M.; Ciani, O.; Compagni, A.; Galizzi, M.M.; Garofano, M.; Ghislandi, S.; Giannoni, M.; Marini, G.; et al. Corona-regionalism? Differences in regional responses to COVID-19 in Italy. Health Policy 2021, 125, 1179–1187.

- Arghittu, A.; Dettori, M.; Dempsey, E.; Deiana, G.; Angelini, C.; Bechini, A.; Bertoni, C.; Boccalini, S.; Bonanni, P.; Cinquetti, S.; et al. Health Communication in COVID-19 Era: Experiences from the Italian VaccinarSì Network Websites. Int. J. Environ. Res. Public Health 2021, 18, 5642.

- Phadermrod, B.; Crowder, R.M.; Wills, G.B. Importance-Performance Analysis based SWOT analysis. Int. J. Inf. Manag. 2019, 44, 194–203.

- Dalkey, N.C. An experimental study of group opinion: The Delphi method. Futures 1969, 1, 408–426.

- Niederberger, M.; Spranger, J. Delphi Technique in Health Sciences: A Map. Front. Public Health 2020, 8, 457.

- Escoffery, C.; Lebow-Skelley, E.; Udelson, H.; Böing, E.A.; Wood, R.; Fernandez, M.E.; Mullen, P.D. A scoping study of frameworks for adapting public health evidence-based interventions. Transl. Behav. Med. 2019, 9, 1–10.

| Established Cutoffs [1] | Sub-Intervals | Color | Category | Classification Scale |
|---|---|---|---|---|
| Not Acceptable < 1.80 | <0.45 | Red | Strong Weakness | 1 |
| | 0.46–0.89 | Red | Strong Weakness | 2 |
| | 0.90–1.35 | Red | Strong Weakness | 3 |
| | 1.36–1.79 | Red | Strong Weakness | 4 |
| 1.80 ≤ Acceptable ≤ 2.98 | 1.80–2.20 | Yellow | Faint Weakness | 5 |
| | 2.21–2.60 | Yellow | Faint Weakness | 6 |
| | 2.61–2.98 | Yellow | Faint Weakness | 7 |
| Good ≥ 2.99 | 2.99–3.33 | Green | Strength | 8 |
| | 3.34–3.66 | Green | Strength | 9 |
| | 3.67–4.00 | Green | Strength | 10 |
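The lookup in this table can be sketched as a function that turns a mean score into its classification-scale value, color and category. The sketch assumes scores lie on a 0 to 4 scale (the sub-intervals end at 4.00) and that a score falling in the small gaps between published sub-intervals (for example 2.985) belongs to the lower sub-interval; `streetlight` and `LOWER_BOUNDS` are hypothetical names.

```python
from bisect import bisect_right

# Lower bounds of sub-intervals 2..10 from the streetlight table (assumed 0-4 scale).
LOWER_BOUNDS = [0.46, 0.90, 1.36, 1.80, 2.21, 2.61, 2.99, 3.34, 3.67]


def streetlight(score):
    """Return (classification scale, color, category) for a mean score."""
    if not 0.0 <= score <= 4.0:
        raise ValueError("score must lie between 0 and 4")
    # Number of lower bounds <= score, plus one, gives the classification value 1..10.
    cls = bisect_right(LOWER_BOUNDS, score) + 1
    if cls <= 4:
        return cls, "Red", "Strong Weakness"
    if cls <= 7:
        return cls, "Yellow", "Faint Weakness"
    return cls, "Green", "Strength"
```

Keeping only the sorted lower bounds and using a binary search means the ten sub-intervals stay in one list that mirrors the table, rather than in a chain of ten comparisons.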

| Classification Scale | Priority Score | Category |
|---|---|---|
| 1 | 5 | Strong Weakness |
| 2 | 4 | Strong Weakness |
| 3 | 3 | Strong Weakness |
| 4 | 2 | Strong Weakness |
| 5 | 1.5 | Faint Weakness |
| 6 | 1 | Faint Weakness |
| 7 | 0.5 | Faint Weakness |
| 8 | 1.5 | Strength |
| 9 | 3 | Strength |
| 10 | 4.5 | Strength |
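The conversion table can be held as a plain dictionary. The sketch below also groups classified items by category and orders each group by descending priority score; that ordering is an illustrative assumption about how the scores might be used, not a procedure described in the study, and `PRIORITY`, `category`, `rank` and the item names in the test are hypothetical.

```python
# Classification scale (1-10) -> priority score, copied from the conversion table.
PRIORITY = {1: 5, 2: 4, 3: 3, 4: 2, 5: 1.5, 6: 1, 7: 0.5, 8: 1.5, 9: 3, 10: 4.5}

# Display order of the three categories (assumption: weaknesses first).
CATEGORY_ORDER = {"Strong Weakness": 0, "Faint Weakness": 1, "Strength": 2}


def category(cls):
    """Return the category for a classification-scale value."""
    if cls not in PRIORITY:
        raise ValueError("classification must be an integer from 1 to 10")
    if cls <= 4:
        return "Strong Weakness"
    if cls <= 7:
        return "Faint Weakness"
    return "Strength"


def rank(classified):
    """Turn (name, classification) pairs into (name, classification, priority, category)
    rows, sorted by category and then by descending priority score."""
    rows = []
    for name, cls in classified:
        cat = category(cls)  # validates cls before the dictionary lookup
        rows.append((name, cls, PRIORITY[cls], cat))
    return sorted(rows, key=lambda row: (CATEGORY_ORDER[row[3]], -row[2]))
```

Among weaknesses the score grows the further an item sits below the acceptable threshold (class 1 scores 5, class 7 scores 0.5), while among strengths it grows with the class; sorting within each category first keeps a high-scoring strength from being interleaved with the weaknesses.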

| Performance Index | Subject Analyzed | Subject Name in SPRISS | Kind of Data | Range |
|---|---|---|---|---|
| 72.9% | All CPs | All CPs | Items | Very high |
| 70.6% | All CPs | All CPs | Questionnaire Sections | High |
| 72.9% | Hereditary breast-ovarian cancers CP | CP 1 | Items | Very high |
| 84.9% | Hereditary breast-ovarian cancers CP | CP 1 | Questionnaire Sections | Very high |
| 61.9% | Autism spectrum disorders (DSA) CP | CP 2 | Items | Good |
| 63.6% | Autism spectrum disorders (DSA) CP | CP 2 | Questionnaire Sections | Good |
| 62.0% | Diabetes CP | CP 3 | Items | Good |
| 66.7% | Diabetes CP | CP 3 | Questionnaire Sections | Good |
| 52.6% | Heart failure CP | CP 4 | Items | Null |
| 37.5% | Heart failure CP | CP 4 | Questionnaire Sections | Null |
| 68.6% | UOC Medical Genetics | Ward 1 CP 1 | Items | Very high |
| 83.6% | UOC Medical Genetics | Ward 1 CP 1 | Questionnaire Sections | Very high |
| 51.0% | UOC Mental Health Center H1-H3 | Ward 2 CP 2 | Items | Null |
| 65.2% | UOC Mental Health Center H1-H3 | Ward 2 CP 2 | Questionnaire Sections | Good |
| 60.0% | UOS Primary Care District C and D | Ward 3 CP 3 | Items | Good |
| 75.0% | UOS Primary Care District C and D | Ward 3 CP 3 | Questionnaire Sections | High |
| 63.6% | UOD Shock and Trauma | Ward 4 CP 4 | Items | High |
| 79.4% | UOD Shock and Trauma | Ward 4 CP 4 | Questionnaire Sections | Very high |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bianco, L.; Raffa, S.; Fornelli, P.; Mancini, R.; Gabriele, A.; Medici, F.; Battista, C.; Greco, S.; Croce, G.; Germani, A.; et al. From Survey Results to a Decision-Making Matrix for Strategic Planning in Healthcare: The Case of Clinical Pathways. Int. J. Environ. Res. Public Health 2022, 19, 7806. https://doi.org/10.3390/ijerph19137806

Bianco L, Raffa S, Fornelli P, Mancini R, Gabriele A, Medici F, Battista C, Greco S, Croce G, Germani A, et al. From Survey Results to a Decision-Making Matrix for Strategic Planning in Healthcare: The Case of Clinical Pathways. International Journal of Environmental Research and Public Health. 2022; 19(13):7806. https://doi.org/10.3390/ijerph19137806

Chicago/Turabian Style: Bianco, Lavinia, Salvatore Raffa, Paolo Fornelli, Rita Mancini, Angela Gabriele, Francesco Medici, Claudia Battista, Stefania Greco, Giuseppe Croce, Aldo Germani, et al. 2022. "From Survey Results to a Decision-Making Matrix for Strategic Planning in Healthcare: The Case of Clinical Pathways" International Journal of Environmental Research and Public Health 19, no. 13: 7806. https://doi.org/10.3390/ijerph19137806

APA Style: Bianco, L., Raffa, S., Fornelli, P., Mancini, R., Gabriele, A., Medici, F., Battista, C., Greco, S., Croce, G., Germani, A., Petrucci, S., Anibaldi, P., Bianco, V., Ronchetti, M., Banchieri, G., Napoli, C., & Piane, M. (2022). From Survey Results to a Decision-Making Matrix for Strategic Planning in Healthcare: The Case of Clinical Pathways. International Journal of Environmental Research and Public Health, 19(13), 7806. https://doi.org/10.3390/ijerph19137806