Author Contributions

Conceptualization, X.W. and Y.L.; methodology, X.W. and Y.L.; software, Y.L. and L.C.; validation, X.W. and Y.L.; formal analysis, Y.L., S.L. and L.C.; investigation, Y.L., S.L. and J.H.; resources, X.W. and Y.L.; data curation, Y.L., L.C. and J.H.; writing—original draft preparation, Y.L.; writing—review and editing, X.W., Y.L. and H.S.; visualization, Y.L., H.S. and F.Z.; supervision, X.W.; project administration, X.W.; funding acquisition, X.W. All authors have read and agreed to the published version of the manuscript.

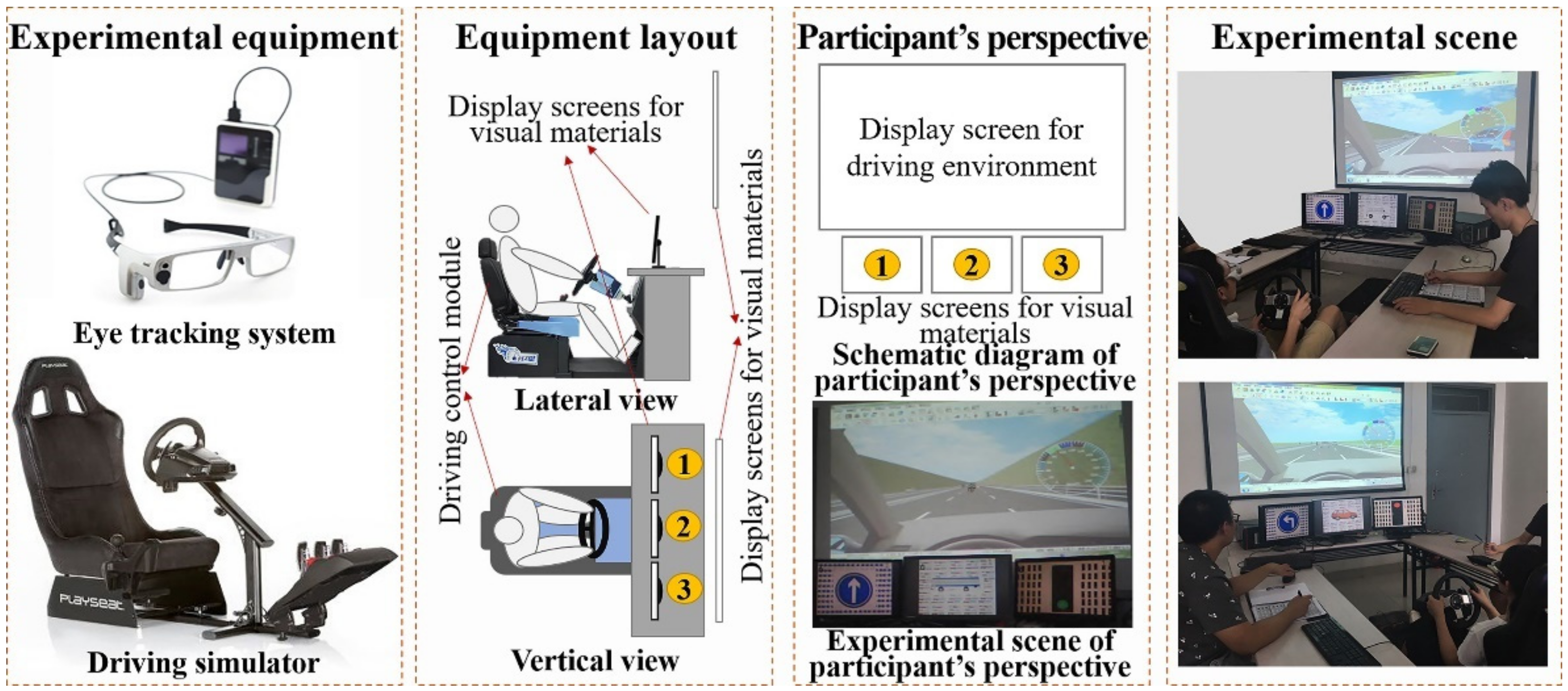

Figure 1.

Design idea of visual attention characteristic data collection.

Figure 2.

Visual cognitive materials: (a) Schematic diagram of vehicle types; (b) Schematic diagram of traffic signs and traffic lights.

Figure 3.

Display timeline of visual cognitive materials in VIT.

Figure 4.

Display timeline of visual cognitive materials in VWMT.

Figure 5.

Display timeline of visual cognitive materials in MVIT.

Figure 6.

Fixation point distribution of a participant. Note: In the “Distribution of visual fixation points” panel, each dot represents a fixation point, the numbers give the temporal order of the fixations, and temporally consecutive fixation points are connected by straight lines.

Figure 7.

Drivers’ visual attention characteristics in VIT: (a) Fixation time for visual tasks; (b) Fixation time per basic information unit; (c) Identification accuracy for visual tasks.

Figure 8.

Drivers’ visual attention characteristics in VIMT: (a) Fixation time per basic information unit; (b) Identification accuracy for visual tasks.

Figure 9.

Drivers’ visual attention characteristics in VIRT: (a) Fixation time per basic information unit; (b) Identification accuracy for visual tasks.

Figure 10.

Drivers’ visual attention characteristics in MVIT: (a) Fixation time per basic information unit; (b) Identification accuracy for visual tasks.

Figure 11.

Display timeline of the visual materials in each visual attention characteristic data collection task.

Figure 12.

Proportion of activation efficacy levels of eight typical emotions.

Figure 13.

Average activation efficacy of eight typical emotions.

Figure 14.

Visual attention characteristics of participants with different emotional states in VIT: (a) fixation time on visual materials; (b) identification accuracy of visual materials.

Figure 15.

Visual attention characteristics of participants with different emotional states in VWMT: (a) fixation time on visual materials; (b) identification accuracy of visual materials.

Figure 16.

Visual attention characteristics of participants with different emotional states in MVIT: (a) fixation time on visual materials; (b) identification accuracy of visual materials.

Table 1.

Description of the different visual cognitive tasks.

| Tasks | Description | Visual Materials | Display Screen |
|---|---|---|---|
| VIT | To identify and report the proportion of vehicle types in the visual materials while completing the virtual driving. | Ternary pictures/quintuple pictures/seven-element pictures | Screen 2 |
| VIMT | To identify, report and memorize the proportions of vehicle types in the previous picture. | Seven-element pictures | Screen 2 |
| VIRT | To identify and report whether the proportion of vehicle types in the subsequent picture is the same as in the previous picture. | Seven-element pictures | Screen 2 |
| MVIT | To identify and report, in order from left to right, the unary pictures on the three screens while completing the virtual driving. | Unary pictures | Screen 1/Screen 2/Screen 3 |

Table 2.

Explanatory notes of the relevant parameters (symbols) obtained from the experiments.

| Collective Name | Symbols | Description |
|---|---|---|
| VI | VI3, VI5, VI7 | Average fixation time of each participant on each ternary, quintuple and seven-element picture in the VIT |
| AVI | AVI3, AVI5, AVI7 | Average identification accuracy of each participant for ternary, quintuple and seven-element pictures in the VIT |
| SVI | SVI3, SVI5, SVI7 | Fixation time to identify a basic information unit in a ternary, quintuple and seven-element picture in the VIT |
| VIM | VIM10, VIM20, VIM30 | Average fixation time of each participant on each previous picture in the MTU with a display interval of 10, 20 and 30 s |
| AVIM | AVIM10, AVIM20, AVIM30 | Average identification accuracy of each participant for the previous pictures in the MTU with a display interval of 10, 20 and 30 s |
| SVIM | SVIM10, SVIM20, SVIM30 | Fixation time to identify a basic information unit in the previous picture in the MTU with a display interval of 10, 20 and 30 s |
| VIR | VIR10, VIR20, VIR30 | Average fixation time of each participant on each subsequent picture in the MTU with a display interval of 10, 20 and 30 s |
| AVIR | AVIR10, AVIR20, AVIR30 | Average identification accuracy of each participant for the subsequent pictures in the MTU with a display interval of 10, 20 and 30 s |
| SVIR | SVIR10, SVIR20, SVIR30 | Fixation time to identify a basic information unit in the subsequent picture in the MTU with a display interval of 10, 20 and 30 s |
| MulVI | MulVI1, MulVI2, MulVI3 | Average fixation time (s) of each participant on the pictures on screens 1, 2 and 3 in the MVIT |
| AMulVI | AMulVI1, AMulVI2, AMulVI3 | Average identification accuracy of each participant for the five unary pictures on screens 1, 2 and 3 in the MVIT |

Table 3.

One-way ANOVA results for SVI.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.086 | 2 | 0.043 | 45.172 | 0.000 |
| Within groups | 0.188 | 198 | 0.001 | | |
| Total | 0.274 | 200 | | | |
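As a cross-check on how the one-way ANOVA tables are assembled, the sketch below computes the between-groups and within-groups sums of squares, degrees of freedom and F statistic from raw samples, and rederives F from the rounded summary columns of Table 3 (Mean Square = Sum of Squares / df, F = between-groups MS / within-groups MS). It is a minimal standard-library sketch: `one_way_anova` and `f_from_summary` are hypothetical helper names, not the authors' analysis code, and the Sig. column is left out because it needs the F distribution, which the Python standard library does not provide.

```python
from statistics import fmean


def one_way_anova(groups):
    """One-way ANOVA summary for a list of samples (each a list of numbers).

    Returns (ss_between, df_between, ss_within, df_within, f_stat).
    """
    all_values = [x for g in groups for x in g]
    grand_mean = fmean(all_values)
    means = [fmean(g) for g in groups]
    # Between-groups sum of squares: group means around the grand mean, weighted by group size
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-groups sum of squares: observations around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, df_between, ss_within, df_within, f_stat


def f_from_summary(ss_between, df_between, ss_within, df_within):
    """Rederive F from the Sum of Squares and df columns of a reported ANOVA table."""
    ms_between = ss_between / df_between  # Mean Square, between groups
    ms_within = ss_within / df_within     # Mean Square, within groups
    return ms_between / ms_within


# Table 3 (SVI): three groups, 201 observations in total, so df = 2 and 198
print(round(f_from_summary(0.086, 2, 0.188, 198), 1))
```

With the rounded Table 3 values this gives about 45.3, close to the reported 45.172; the small gap comes from the sums of squares being rounded to three decimals.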

Table 4.

Multiple comparison results for SVI.

| (I) | (J) | Mean Difference (I−J) | SE | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|
| SVI7 | SVIR10 | −0.020 * | 0.004 | 0.000 | −0.031 | −0.008 |
| | SVIR20 | −0.019 * | 0.005 | 0.000 | −0.031 | −0.007 |
| | SVIR30 | −0.019 * | 0.005 | 0.000 | −0.031 | −0.007 |
| SVIR10 | SVI7 | 0.020 * | 0.004 | 0.000 | 0.008 | 0.031 |
| | SVIR20 | 0.001 | 0.005 | 1.000 | −0.012 | 0.014 |
| | SVIR30 | 0.000 | 0.005 | 1.000 | −0.012 | 0.013 |
| SVIR20 | SVI7 | 0.019 * | 0.005 | 0.000 | 0.007 | 0.031 |
| | SVIR10 | −0.001 | 0.005 | 1.000 | −0.014 | 0.012 |
| | SVIR30 | 0.000 | 0.005 | 1.000 | −0.014 | 0.013 |
| SVIR30 | SVI7 | 0.019 * | 0.005 | 0.000 | 0.007 | 0.031 |
| | SVIR10 | 0.000 | 0.005 | 1.000 | −0.013 | 0.012 |
| | SVIR20 | 0.000 | 0.005 | 1.000 | −0.013 | 0.014 |
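The source does not name the post-hoc procedure behind the multiple comparison tables. As one common option, and purely as an illustration rather than a reproduction of these results, the sketch below computes pairwise mean differences with a pooled standard error taken from the ANOVA within-groups mean square and applies a Bonferroni adjustment (p multiplied by the number of pairs, capped at 1). `bonferroni_pairwise` is a hypothetical helper, and its p-values use a normal approximation to the t distribution, which is only reasonable when the within-groups df is large (198 to 264 in the tables here).

```python
from itertools import combinations
from math import sqrt
from statistics import NormalDist, fmean


def bonferroni_pairwise(groups, ms_within):
    """Pairwise comparisons with a pooled SE and Bonferroni-adjusted p-values.

    groups: dict mapping a group name (e.g. "SVI7") to its observations.
    ms_within: within-groups mean square from the one-way ANOVA.
    Returns a list of (I, J, mean_difference, se, adjusted_p) tuples.
    """
    pairs = list(combinations(groups, 2))
    results = []
    for i, j in pairs:
        mean_diff = fmean(groups[i]) - fmean(groups[j])  # Mean Difference (I - J)
        se = sqrt(ms_within * (1 / len(groups[i]) + 1 / len(groups[j])))
        z = mean_diff / se
        # Two-sided p-value from a normal approximation to t (large df only)
        p = 2 * (1 - NormalDist().cdf(abs(z)))
        # Bonferroni: multiply by the number of comparisons and cap at 1
        results.append((i, j, mean_diff, se, min(1.0, p * len(pairs))))
    return results


# Hypothetical toy data, not taken from the study
for row in bonferroni_pairwise({"A": [1, 2, 3], "B": [4, 5, 6], "C": [4, 5, 6]}, ms_within=1.0):
    print(row)
```

Each unordered pair is listed once; the reversed (J, I) rows in the tables above only flip the sign of the mean difference and the confidence bounds.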

Table 5.

One-way ANOVA results for AVI.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.067 | 2 | 0.034 | 0.886 | 0.414 |
| Within groups | 7.532 | 198 | 0.038 | | |
| Total | 7.600 | 200 | | | |

Table 6.

One-way ANOVA results for SVIM.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.000 | 2 | 0.000 | 0.166 | 0.847 |
| Within groups | 0.152 | 198 | 0.001 | | |
| Total | 0.152 | 200 | | | |

Table 7.

One-way ANOVA results for AVIM.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.031 | 2 | 0.015 | 0.288 | 0.750 |
| Within groups | 10.630 | 198 | 0.054 | | |
| Total | 10.661 | 200 | | | |

Table 8.

One-way ANOVA results for SVI7 and SVIM.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.017 | 3 | 0.006 | 8.159 | 0.000 |
| Within groups | 0.186 | 264 | 0.001 | | |
| Total | 0.204 | 267 | | | |

Table 9.

Multiple comparison results for SVI7 and SVIM.

| (I) | (J) | Mean Difference (I−J) | SE | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|
| SVI7 | SVIM10 | −0.018 * | 0.004 | 0.001 | −0.030 | −0.006 |
| | SVIM20 | −0.017 * | 0.004 | 0.001 | −0.029 | −0.006 |
| | SVIM30 | −0.020 * | 0.004 | 0.000 | −0.032 | −0.008 |
| SVIM10 | SVI7 | 0.018 * | 0.004 | 0.001 | 0.006 | 0.030 |
| | SVIM20 | 0.000 | 0.005 | 1.000 | −0.012 | 0.013 |
| | SVIM30 | −0.002 | 0.005 | 0.998 | −0.015 | 0.011 |
| SVIM20 | SVI7 | 0.017 * | 0.004 | 0.001 | 0.006 | 0.029 |
| | SVIM10 | 0.000 | 0.005 | 1.000 | −0.013 | 0.012 |
| | SVIM30 | −0.003 | 0.005 | 0.996 | −0.015 | 0.010 |
| SVIM30 | SVI7 | 0.020 * | 0.004 | 0.000 | 0.008 | 0.032 |
| | SVIM10 | 0.002 | 0.005 | 0.998 | −0.011 | 0.015 |
| | SVIM20 | 0.003 | 0.005 | 0.996 | −0.010 | 0.015 |

Table 10.

One-way ANOVA results for AVI7 and AVIM.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.045 | 3 | 0.015 | 0.284 | 0.837 |
| Within groups | 13.864 | 264 | 0.053 | | |
| Total | 13.909 | 267 | | | |

Table 11.

One-way ANOVA results for SVIR.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.000 | 2 | 0.000 | 0.016 | 0.984 |
| Within groups | 0.161 | 198 | 0.001 | | |
| Total | 0.161 | 200 | | | |

Table 12.

One-way ANOVA results for AVIR.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 1.991 | 2 | 0.996 | 14.107 | 0.000 |
| Within groups | 13.973 | 198 | 0.071 | | |
| Total | 15.965 | 200 | | | |

Table 13.

Multiple comparison results for AVIR.

| (I) | (J) | Mean Difference (I−J) | SE | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|
| AVIR10 | AVIR20 | 0.119 * | 0.043 | 0.021 | 0.014 | 0.225 |
| | AVIR30 | 0.244 * | 0.044 | 0.000 | 0.137 | 0.351 |
| AVIR20 | AVIR10 | −0.119 * | 0.043 | 0.021 | −0.225 | −0.014 |
| | AVIR30 | 0.124 * | 0.050 | 0.041 | 0.004 | 0.245 |
| AVIR30 | AVIR10 | −0.244 * | 0.044 | 0.000 | −0.351 | −0.137 |
| | AVIR20 | −0.124 * | 0.050 | 0.041 | −0.245 | −0.004 |

Table 14.

One-way ANOVA results for SVI7 and SVIR.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.019 | 3 | 0.006 | 8.414 | 0.000 |
| Within groups | 0.196 | 264 | 0.001 | | |
| Total | 0.215 | 267 | | | |

Table 15.

Multiple comparison results for SVI7 and SVIR.

| (I) | (J) | Mean Difference (I−J) | SE | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|
| SVI7 | SVIR10 | −0.020 * | 0.004 | 0.000 | −0.031 | −0.008 |
| | SVIR20 | −0.019 * | 0.005 | 0.000 | −0.031 | −0.007 |
| | SVIR30 | −0.019 * | 0.005 | 0.000 | −0.031 | −0.007 |
| SVIR10 | SVI7 | 0.020 * | 0.004 | 0.000 | 0.008 | 0.031 |
| | SVIR20 | 0.001 | 0.005 | 1.000 | −0.012 | 0.014 |
| | SVIR30 | 0.000 | 0.005 | 1.000 | −0.012 | 0.013 |
| SVIR20 | SVI7 | 0.019 * | 0.005 | 0.000 | 0.007 | 0.031 |
| | SVIR10 | −0.001 | 0.005 | 1.000 | −0.014 | 0.012 |
| | SVIR30 | 0.000 | 0.005 | 1.000 | −0.014 | 0.013 |
| SVIR30 | SVI7 | 0.019 * | 0.005 | 0.000 | 0.007 | 0.031 |
| | SVIR10 | 0.000 | 0.005 | 1.000 | −0.013 | 0.012 |
| | SVIR20 | 0.000 | 0.005 | 1.000 | −0.013 | 0.014 |

Table 16.

Multiple comparison results for AVI7 and AVIR.

| (I) | (J) | Mean Difference (I−J) | SE | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|
| AVI7 | AVIR10 | 0.000 | 0.038 | 1.000 | −0.100 | 0.100 |
| | AVIR20 | 0.119 * | 0.044 | 0.045 | 0.002 | 0.237 |
| | AVIR30 | 0.254 * | 0.045 | 0.000 | 0.134 | 0.373 |
| AVIR10 | AVI7 | 0.000 | 0.038 | 1.000 | −0.100 | 0.100 |
| | AVIR20 | 0.119 * | 0.043 | 0.041 | 0.003 | 0.236 |
| | AVIR30 | 0.254 * | 0.044 | 0.000 | 0.136 | 0.372 |
| AVIR20 | AVI7 | −0.119 * | 0.044 | 0.045 | −0.237 | −0.002 |
| | AVIR10 | −0.119 * | 0.043 | 0.041 | −0.236 | −0.003 |
| | AVIR30 | 0.134 * | 0.050 | 0.046 | 0.002 | 0.267 |
| AVIR30 | AVI7 | −0.254 * | 0.045 | 0.000 | −0.373 | −0.134 |
| | AVIR10 | −0.254 * | 0.044 | 0.000 | −0.372 | −0.136 |
| | AVIR20 | −0.134 * | 0.050 | 0.046 | −0.267 | −0.002 |

Table 17.

Paired-samples t-test results for SVIM and SVIR.

| Pair | Mean | SD | SE | 95% CI Lower Bound | 95% CI Upper Bound | t | df | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|---|
| SVIM10-SVIR10 | −0.002 | 0.009 | 0.001 | −0.004 | 0.000 | −1.721 | 66 | 0.090 |
| SVIM20-SVIR20 | −0.001 | 0.010 | 0.001 | −0.004 | 0.001 | −1.167 | 66 | 0.247 |
| SVIM30-SVIR30 | 0.001 | 0.010 | 0.001 | −0.002 | 0.003 | 0.590 | 66 | 0.557 |
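In the paired-samples tables, each row summarizes the differences d_i = x_i − y_i between matched measurements: Mean is their average, SD their sample standard deviation, SE = SD/√n, t = Mean/SE and df = n − 1, so the df of 66 in Table 17 corresponds to 67 pairs. The sketch below implements this arithmetic; `paired_t_test` is a hypothetical helper, and it assumes the caller supplies the two-tailed critical t value for the confidence interval, because the Python standard library has no t quantile function.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t_test(x, y, t_critical):
    """Paired-samples t-test on matched observations x[i] and y[i].

    t_critical: two-tailed critical t value for df = n - 1 (e.g. from a table).
    Returns the columns reported in the paired-samples tables.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean_diff = fmean(diffs)
    sd = stdev(diffs)   # sample SD of the differences (n - 1 denominator)
    se = sd / sqrt(n)   # standard error of the mean difference
    return {
        "Mean": mean_diff,
        "SD": sd,
        "SE": se,
        "LB": mean_diff - t_critical * se,  # 95% CI lower bound
        "UB": mean_diff + t_critical * se,  # 95% CI upper bound
        "t": mean_diff / se,
        "df": n - 1,
    }


# Hypothetical toy data; 3.182 is the two-tailed 5% critical t value for df = 3
print(paired_t_test([1, 2, 3, 4], [0, 1, 1, 2], t_critical=3.182))
```

For the toy data the differences are 1, 1, 2 and 2, giving Mean = 1.5, t ≈ 5.196 and df = 3.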

Table 18.

One-way ANOVA results for MulVI.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.314 | 2 | 0.157 | 26.168 | 0.000 |
| Within groups | 1.189 | 198 | 0.006 | | |
| Total | 1.503 | 200 | | | |

Table 19.

Multiple comparison results for MulVI.

| (I) | (J) | Mean Difference (I−J) | SE | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|
| MulVI1 | MulVI2 | 0.018 | 0.013 | 0.172 | −0.008 | 0.045 |
| | MulVI3 | 0.092 * | 0.013 | 0.000 | 0.065 | 0.118 |
| MulVI2 | MulVI1 | −0.018 | 0.013 | 0.172 | −0.045 | 0.008 |
| | MulVI3 | 0.073 * | 0.013 | 0.000 | 0.047 | 0.100 |
| MulVI3 | MulVI1 | −0.092 * | 0.013 | 0.000 | −0.118 | −0.065 |
| | MulVI2 | −0.073 * | 0.013 | 0.000 | −0.100 | −0.047 |

Table 20.

One-way ANOVA results for AMulVI.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between groups | 0.002 | 2 | 0.001 | 0.090 | 0.914 |
| Within groups | 1.742 | 198 | 0.009 | | |
| Total | 1.744 | 200 | | | |

Table 21.

Paired-samples t-test results comparing VI’ in the neutral state with VI’ under each of the eight emotions.

| VI’ | Mean | SD | SE | 95% CI Lower Bound | 95% CI Upper Bound | t | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|
| neutral-anger | 0.029 | 0.043 | 0.007 | 0.016 | 0.042 | 4.416 | 0.000 |
| neutral-surprise | 0.011 | 0.068 | 0.010 | −0.010 | 0.032 | 1.045 | 0.302 |
| neutral-fear | −0.173 | 0.178 | 0.027 | −0.227 | −0.118 | −6.363 | 0.000 |
| neutral-anxiety | −0.207 | 0.183 | 0.028 | −0.264 | −0.151 | −7.449 | 0.000 |
| neutral-helplessness | −0.025 | 0.051 | 0.008 | −0.041 | −0.010 | −3.235 | 0.002 |
| neutral-contempt | 0.030 | 0.053 | 0.008 | 0.014 | 0.046 | 3.695 | 0.001 |
| neutral-relief | −0.015 | 0.065 | 0.010 | −0.035 | 0.005 | −1.481 | 0.146 |
| neutral-pleasure | −0.004 | 0.064 | 0.010 | −0.024 | 0.016 | −0.429 | 0.670 |
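Each of the emotion tables reports eight paired comparisons against the neutral state, and the source does not state whether the Sig. values were adjusted for multiple comparisons. If family-wise error control across the eight emotions is wanted, Holm's step-down procedure is one standard option: sort the p-values, compare the k-th smallest with α/(m − k + 1), and stop at the first one that fails. The sketch below applies it to the Sig. values of the VI’ table, treating the reported 0.000 as 0; `holm_reject` is a hypothetical helper name.

```python
def holm_reject(p_values, alpha=0.05):
    """Holm step-down procedure.

    p_values: dict mapping a comparison label to its unadjusted p-value.
    Returns the set of labels whose null hypothesis is rejected.
    """
    ordered = sorted(p_values.items(), key=lambda item: item[1])
    m = len(ordered)
    rejected = set()
    for rank, (label, p) in enumerate(ordered):
        # Threshold tightens for smaller p-values: alpha/m, alpha/(m-1), ...
        if p > alpha / (m - rank):
            break  # step-down: stop at the first comparison that fails
        rejected.add(label)
    return rejected


# Sig. (2-tailed) values for VI' (neutral vs. each emotion); 0.000 treated as 0
vi_sig = {
    "anger": 0.000, "surprise": 0.302, "fear": 0.000, "anxiety": 0.000,
    "helplessness": 0.002, "contempt": 0.001, "relief": 0.146, "pleasure": 0.670,
}
print(sorted(holm_reject(vi_sig)))
```

On these values Holm's procedure at α = 0.05 rejects the same five comparisons (anger, anxiety, contempt, fear and helplessness) that fall below 0.05 without adjustment.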

Table 22.

Paired-samples t-test results comparing AVI’ in the neutral state with AVI’ under each of the eight emotions.

| AVI’ | Mean | SD | SE | 95% CI Lower Bound | 95% CI Upper Bound | t | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|
| neutral-anger | −0.016 | 0.071 | 0.011 | −0.037 | 0.006 | −1.431 | 0.160 |
| neutral-surprise | 0.008 | 0.170 | 0.026 | −0.045 | 0.060 | 0.298 | 0.767 |
| neutral-fear | 0.116 | 0.161 | 0.025 | 0.067 | 0.166 | 4.743 | 0.000 |
| neutral-anxiety | 0.039 | 0.149 | 0.023 | −0.007 | 0.085 | 1.703 | 0.096 |
| neutral-helplessness | 0.047 | 0.117 | 0.018 | 0.011 | 0.082 | 2.610 | 0.013 |
| neutral-contempt | −0.008 | 0.154 | 0.024 | −0.055 | 0.040 | −0.330 | 0.743 |
| neutral-relief | 0.031 | 0.142 | 0.022 | −0.013 | 0.075 | 1.431 | 0.160 |
| neutral-pleasure | 0.008 | 0.185 | 0.028 | −0.049 | 0.065 | 0.274 | 0.785 |

Table 23.

Paired-samples t-test results comparing VIR’ in the neutral state with VIR’ under each of the eight emotions.

| VIR’ | Mean | SD | SE | 95% CI Lower Bound | 95% CI Upper Bound | t | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|
| neutral-anger | 0.035 | 0.045 | 0.007 | 0.021 | 0.049 | 5.072 | 0.000 |
| neutral-surprise | −0.019 | 0.045 | 0.007 | −0.033 | −0.006 | −2.864 | 0.007 |
| neutral-fear | −0.207 | 0.185 | 0.028 | −0.264 | −0.150 | −7.321 | 0.000 |
| neutral-anxiety | −0.191 | 0.197 | 0.030 | −0.252 | −0.131 | −6.361 | 0.000 |
| neutral-helplessness | −0.019 | 0.056 | 0.009 | −0.036 | −0.001 | −2.185 | 0.035 |
| neutral-contempt | 0.010 | 0.060 | 0.009 | −0.009 | 0.028 | 1.070 | 0.291 |
| neutral-relief | −0.001 | 0.061 | 0.009 | −0.020 | 0.017 | −0.146 | 0.885 |
| neutral-pleasure | −0.037 | 0.047 | 0.007 | −0.051 | −0.022 | −5.126 | 0.000 |

Table 24.

Paired-samples t-test results comparing AVIR’ in the neutral state with AVIR’ under each of the eight emotions.

| AVIR’ | Mean | SD | SE | 95% CI Lower Bound | 95% CI Upper Bound | t | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|
| neutral-anger | −0.070 | 0.137 | 0.021 | −0.112 | −0.028 | −3.334 | 0.002 |
| neutral-surprise | 0.155 | 0.168 | 0.026 | 0.103 | 0.207 | 6.043 | 0.000 |
| neutral-fear | 0.155 | 0.183 | 0.028 | 0.099 | 0.211 | 5.547 | 0.000 |
| neutral-anxiety | 0.078 | 0.190 | 0.029 | 0.019 | 0.136 | 2.673 | 0.011 |
| neutral-helplessness | 0.070 | 0.137 | 0.021 | 0.028 | 0.112 | 3.334 | 0.002 |
| neutral-contempt | 0.008 | 0.212 | 0.032 | −0.057 | 0.073 | 0.240 | 0.812 |
| neutral-relief | −0.031 | 0.216 | 0.033 | −0.097 | 0.035 | −0.942 | 0.352 |
| neutral-pleasure | 0.016 | 0.205 | 0.031 | −0.048 | 0.079 | 0.496 | 0.623 |

Table 25.

Paired-samples t-test results comparing MulVI’ in the neutral state with MulVI’ under each of the eight emotions.

| MulVI’ | Mean | SD | SE | 95% CI Lower Bound | 95% CI Upper Bound | t | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|
| neutral-anger | 0.004 | 0.008 | 0.001 | 0.002 | 0.007 | 3.398 | 0.001 |
| neutral-surprise | −0.012 | 0.016 | 0.002 | −0.017 | −0.008 | −5.267 | 0.000 |
| neutral-fear | 0.001 | 0.009 | 0.001 | −0.002 | 0.004 | 0.916 | 0.365 |
| neutral-anxiety | −0.038 | 0.036 | 0.006 | −0.049 | −0.027 | −6.902 | 0.000 |
| neutral-helplessness | −0.004 | 0.011 | 0.002 | −0.007 | −0.001 | −2.327 | 0.025 |
| neutral-contempt | 0.004 | 0.010 | 0.002 | 0.000 | 0.007 | 2.333 | 0.025 |
| neutral-relief | −0.001 | 0.013 | 0.002 | −0.005 | 0.003 | −0.722 | 0.474 |
| neutral-pleasure | 0.000 | 0.013 | 0.002 | −0.004 | 0.004 | 0.114 | 0.910 |

Table 26.

Paired-samples t-test results comparing AMulVI’ in the neutral state with AMulVI’ under each of the eight emotions.

| AMulVI’ | Mean | SD | SE | 95% CI Lower Bound | 95% CI Upper Bound | t | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|
| neutral-anger | 0.005 | 0.068 | 0.010 | −0.016 | 0.026 | 0.496 | 0.623 |
| neutral-surprise | 0.008 | 0.089 | 0.014 | −0.020 | 0.035 | 0.573 | 0.570 |
| neutral-fear | −0.008 | 0.070 | 0.011 | −0.029 | 0.014 | −0.724 | 0.473 |
| neutral-anxiety | 0.021 | 0.074 | 0.011 | −0.002 | 0.043 | 1.838 | 0.073 |
| neutral-helplessness | 0.026 | 0.053 | 0.008 | 0.009 | 0.042 | 3.177 | 0.003 |
| neutral-contempt | 0.016 | 0.062 | 0.009 | −0.004 | 0.035 | 1.634 | 0.110 |
| neutral-relief | 0.016 | 0.071 | 0.011 | −0.006 | 0.037 | 1.431 | 0.160 |
| neutral-pleasure | 0.028 | 0.065 | 0.010 | 0.009 | 0.048 | 2.886 | 0.006 |