Abstract

Measure-valued differentiation (MVD) is a relatively new method for computing Monte Carlo sensitivities, relying on a decomposition of the derivative of transition densities of the underlying process into a linear combination of probability measures. In computing the sensitivities, additional paths are generated for each constituent distribution and the payoffs from these paths are combined to produce sample estimates. The method generally produces sensitivity estimates with lower variance than the finite difference and likelihood ratio methods, and can be applied to discontinuous payoffs in contrast to the pathwise differentiation method. However, these benefits come at the expense of an additional computational burden. In this paper, we propose an alternative approach, called the absolute measure-valued differentiation (AMVD) method, which expresses the derivative of the transition density at each simulation step as a single density rather than a linear combination. It is computationally more efficient than the MVD method and can result in sensitivity estimates with lower variance. Analytic and numerical examples are provided to compare the variance in the sensitivity estimates of the AMVD method against alternative methods.

JEL Classification:

D52; D81; G11

1. Introduction

Sensitivities play a key role in the assessment and management of the risks associated with financial derivatives and must be computed along with prices as part of sound risk management practice. Given that the prices, let alone the sensitivities, of many financial derivatives cannot be computed analytically, they are usually computed numerically, with Monte Carlo simulation being the most widely used technique. The associated sensitivities are then computed by the finite difference, pathwise differentiation, or likelihood ratio methods, each with its own set of limitations. In particular, the finite difference method is biased and its sensitivity estimates have relatively large variances, the pathwise differentiation method cannot be applied to products with discontinuous payoffs such as digitals, and the likelihood ratio method can suffer from unbounded variance when the associated score function is not absolutely continuous with respect to the sensitivity parameter. For a detailed discussion of these methods and their limitations, refer to (), Chapter 7.

There has been extensive work on regularizing the pathwise method to enable it to work with discontinuities. Such work tends either to involve differentiating the discontinuity directly, which yields a delta distribution, or to utilize smoothing to avoid this differentiation. (), (), and () explore the explicit integration of the delta distribution, which requires at least one extra simulation that must be handcrafted to the payoff. () and () reduce the degree of handcrafting by employing kernel smoothing and importance sampling, respectively. () modify the simulation scheme as a function of the perturbation variable so that the pathwise payoff, as a function of perturbation size, is smooth. It is then possible to apply the pathwise method at the cost of gaining a small likelihood ratio term. All these methods have the drawback that the methodology is adapted to the singularities of the payoff. This renders them much harder to implement generically than the likelihood ratio and pathwise methods.

Despite their importance, there is a relative absence of new techniques for computing Monte Carlo sensitivities, and recent advances have focused instead on improving the computational efficiency of existing techniques. For example, () apply adjoint algorithmic differentiation to speed up pathwise sensitivity calculations, while () apply low discrepancy sequences to achieve variance reduction for American option sensitivities.

A notable exception is the measure-valued differentiation (MVD) method considered, for example, in () and (). The MVD method does not differentiate the payoff, so discontinuities do not cause difficulties, and it has been shown to produce sensitivity estimates with lower variance than those computed using the finite difference and the likelihood ratio (LR) methods. It is also generic in the sense that it is not adapted to the payoff in any way, and so the same paths can be used to compute the sensitivities of many derivatives on the same underlying. In the MVD approach, the derivative of the transition density is recognized as a signed measure and decomposed as the difference of two proper measures. The simulation at each step is then branched according to the two constituent measures to produce multiple MVD paths, and the sensitivity estimates from these branched paths are recombined to produce one MVD sensitivity sample. This approach has been shown to generally yield sensitivity estimates with lower variance than alternative methods, albeit at the expense of a greater computational burden due to the branching of the paths. Further variance reduction is possible by coupling, or correlating, each pair of branched paths.

In this paper, we introduce an alternative to the MVD method, called the absolute measure-valued differentiation (AMVD) method, which decomposes the density derivative as the product of its sign and its absolute value. This method shares many of the properties of the MVD method, such as the ability to handle discontinuous payoffs, but instead of initiating two additional paths at each simulation step of the original path, it initiates only one additional subpath. It is found that the AMVD method gives vega estimates with lower variance than the MVD method for vanilla and digital calls in the Black–Scholes model. Moreover, the AMVD vega estimates have lower variance than the pathwise differentiation method in certain situations, such as the case of short-dated deep in-the-money vanilla calls. A consideration of vegas for the more exotic double-barrier options revealed that the relative performances of the LR, MVD, and AMVD methods depend, in general, on the positions of the barriers and the way each method distributes densities relative to the barriers.

Applying the AMVD method requires the generation of nonstandard random variates,¹ and for the examples considered in this paper, we provide a highly efficient implementation of the inverse transform method for this purpose using Newton’s method with a careful selection of the initial point. The time taken to generate each vega subpath for the AMVD method is approximately equal to the time taken to generate the corresponding MVD subpath using the efficient generation of the double-sided Maxwell–Boltzmann distribution introduced in ().

The remainder of the paper is structured as follows: the measure-valued differentiation method is briefly reviewed in Section 2, and the absolute measure-valued differentiation method is presented in Section 3. This is followed by Section 4 in which the notation and key identities for the remainder of the paper are presented. Sensitivities for vanilla calls and digital calls are computed analytically in Section 5 and Section 6, respectively. A more complex example of the double knock-out barrier option is investigated numerically in Section 7, and the paper concludes with Section 8.

2. Brief Review of Measure-Valued Differentiation

In this section, we provide a heuristic overview of the MVD method and refer the interested reader to () and () for the details.

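Let $X$ be an $\mathbb{R}$-valued random variable, and let $p(x;\theta)$ be the probability density function of $X$ with $\theta$ as one of its parameters. Then, given a payoff, $f$, associated with a financial derivative on $X$, the likelihood ratio (LR) sensitivity with respect to the parameter $\theta$ is given by

$$\frac{\partial}{\partial\theta}\mathbb{E}\big[f(X)\big] = \int_{\mathbb{R}} f(x)\,\frac{\partial p(x;\theta)}{\partial\theta}\,dx, \tag{1}$$

ignoring discounting. At this point, the likelihood ratio method rewrites the above expression as

$$\frac{\partial}{\partial\theta}\mathbb{E}\big[f(X)\big] = \int_{\mathbb{R}} f(x)\,\frac{\partial \log p(x;\theta)}{\partial\theta}\,p(x;\theta)\,dx, \tag{2}$$

and computes the sensitivity as the expectation of the modified payoff obtained by scaling $f$ by the score function $\partial \log p(x;\theta)/\partial\theta$.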
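To make the LR recipe concrete, the following is a minimal sketch on a toy model that is our own assumption rather than an example from this paper: $X \sim N(\theta, 1)$, whose score function is $x - \theta$, with a digital payoff $f(x) = \mathbf{1}_{\{x > K\}}$, for which the exact sensitivity is $\varphi(K - \theta)$. The function name `lr_sensitivity` is hypothetical.

```python
import math
import numpy as np

def lr_sensitivity(payoff, theta, n_samples, seed=0):
    """LR estimate of d/dtheta E[f(X)] for the toy model X ~ N(theta, 1)."""
    rng = np.random.default_rng(seed)
    x = theta + rng.standard_normal(n_samples)
    score = x - theta  # d/dtheta log p(x; theta) for a unit-variance Gaussian
    return float(np.mean(payoff(x) * score))  # payoff scaled by the score function

# Usage: a digital payoff, whose exact sensitivity is phi(K - theta).
K, theta = 0.5, 0.0
digital = lambda x: (x > K).astype(float)
exact = math.exp(-0.5 * (K - theta) ** 2) / math.sqrt(2.0 * math.pi)
print(lr_sensitivity(digital, theta, 1_000_000), exact)
```

Only one path per sample is needed, and no derivative of the payoff is taken, which is why the discontinuous digital poses no difficulty.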

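Now, suppose $\partial p(\cdot;\theta)/\partial\theta$ is a signed measure on $\mathbb{R}$, and that it decomposes as the difference

$$\frac{\partial p(x;\theta)}{\partial\theta} = \mu_\theta^{+}(x) - \mu_\theta^{-}(x), \tag{3}$$

where $\mu_\theta^{+}$ and $\mu_\theta^{-}$ are proper measures on $\mathbb{R}$. Then, under the MVD method, the sensitivity with respect to $\theta$ is computed as the difference

$$\frac{\partial}{\partial\theta}\mathbb{E}\big[f(X)\big] = c_\theta\int_{\mathbb{R}} f(x)\,p_\theta^{+}(x)\,dx - c_\theta\int_{\mathbb{R}} f(x)\,p_\theta^{-}(x)\,dx, \tag{4}$$

where $c_\theta = \mu_\theta^{+}(\mathbb{R}) = \mu_\theta^{-}(\mathbb{R})$ and $p_\theta^{\pm} = \mu_\theta^{\pm}/c_\theta$; the two masses agree because $\int_{\mathbb{R}} \partial p(x;\theta)/\partial\theta\,dx = \partial(1)/\partial\theta = 0$. The two summands are computed separately, although they may be coupled to reduce the total variance. It should be noted that $p_\theta^{\pm}$ are probability measures on $\mathbb{R}$, and so the decomposition in (4) provides the basis for a numerical approximation of the sensitivity using, for example, a Monte Carlo simulation in which the integrals in (4) are computed numerically using random draws from the distributions determined by $p_\theta^{\pm}$.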
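For the same hypothetical toy model $X \sim N(\theta, 1)$, the derivative $\partial p/\partial\theta = (x-\theta)\,\varphi(x-\theta)$ splits into two shifted Rayleigh densities, the laws of $\theta + R$ and $\theta - R$ with $R$ standard Rayleigh, each with mass $c_\theta = 1/\sqrt{2\pi}$. A minimal uncoupled sketch of (4), with the hypothetical name `mvd_sensitivity`, is:

```python
import math
import numpy as np

def mvd_sensitivity(payoff, theta, n_samples, seed=0):
    """Uncoupled MVD estimate of d/dtheta E[f(X)] for the toy model X ~ N(theta, 1)."""
    rng = np.random.default_rng(seed)
    c = 1.0 / math.sqrt(2.0 * math.pi)              # mass of each component measure
    x_plus = theta + rng.rayleigh(1.0, n_samples)   # draws from p^+
    x_minus = theta - rng.rayleigh(1.0, n_samples)  # independent draws from p^-
    return float(c * (payoff(x_plus).mean() - payoff(x_minus).mean()))

# Usage: the same digital payoff as in the LR sketch.
K, theta = 0.5, 0.0
digital = lambda x: (x > K).astype(float)
exact = math.exp(-0.5 * (K - theta) ** 2) / math.sqrt(2.0 * math.pi)
print(mvd_sensitivity(digital, theta, 1_000_000), exact)
```

Each MVD sample consumes two draws, one per component; coupling them (for example, reusing one Rayleigh draw for both) is the variance-reduction option mentioned above.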

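The above description of the MVD method applies to path-independent European derivatives. For the more general case, suppose that a Monte Carlo simulation, with simulation times $0 = t_0 < t_1 < \cdots < t_n = T$, is used to value a given derivative with expiry $T$. If the underlying process is assumed to be Markovian, then the probability density of a simulated path $x = (x_1, \ldots, x_n)$ is given by the product

$$p(x;\theta) = \prod_{i=1}^{n} p_i(x_i \mid x_{i-1};\theta), \tag{5}$$

where $p_i(\cdot \mid x_{i-1};\theta)$ is the transition density from $t_{i-1}$ to $t_i$ conditional on starting from $x_{i-1}$ at $t_{i-1}$. For notational convenience, write $p_i = p_i(x_i \mid x_{i-1};\theta)$, let $x_0$ denote the fixed initial state, and define

$$p^{(i)}(x;\theta) = \frac{\partial p_i}{\partial\theta}\prod_{j\neq i} p_j. \tag{6}$$

The likelihood ratio sensitivity of a general payoff, $f$, with respect to the parameter, $\theta$, is then given by

$$\frac{\partial}{\partial\theta}\mathbb{E}\big[f(X)\big] = \sum_{i=1}^{n}\int f(x)\,p^{(i)}(x;\theta)\,dx = \mathbb{E}\Big[f(X)\sum_{i=1}^{n}\frac{\partial \log p_i}{\partial\theta}\Big]. \tag{7}$$

Once again, if it is assumed that the derivatives

$$\frac{\partial p_i(\cdot \mid x_{i-1};\theta)}{\partial\theta}, \qquad i = 1, \ldots, n, \tag{8}$$

are signed measures on $\mathbb{R}$ and decompose as differences

$$\frac{\partial p_i}{\partial\theta} = \mu_i^{+} - \mu_i^{-}, \tag{9}$$

where $\mu_i^{\pm}$ are proper measures, then the MVD estimate of the sensitivity is given by

$$\frac{\partial}{\partial\theta}\mathbb{E}\big[f(X)\big] = \sum_{i=1}^{n}\Big(\int f(x)\,p^{(i),+}(x;\theta)\,dx - \int f(x)\,p^{(i),-}(x;\theta)\,dx\Big), \tag{10}$$

where $p^{(i),\pm}$ is obtained from $p^{(i)}$ by replacing the derivative term, $\partial p_i/\partial\theta$, in (6) with the corresponding component, $c_i\,p_i^{\pm}$, of its decomposition

$$\frac{\partial p_i}{\partial\theta} = c_i\big(p_i^{+} - p_i^{-}\big), \qquad p_i^{\pm} = \mu_i^{\pm}/c_i, \tag{11}$$

where $c_i = \mu_i^{+}(\mathbb{R}) = \mu_i^{-}(\mathbb{R})$, which in general depends on $x_{i-1}$.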

The implementation of the MVD method begins with the simulation, in the usual manner, of the underlying asset price path. Then, for each $i = 1, \ldots, n$, the $i$-th step is branched into two by drawing from the two component distributions of the decomposition in (9). The remaining steps in the two branched paths are then resimulated by drawing from the original distributions, $p_j$ for $j > i$. Thus, the full MVD method generates a set of $2n$ branched paths for each regular path and is consequently more computationally intensive than, for example, the likelihood ratio method.² In (), less computationally demanding approximations to the full MVD method are proposed in which branching is performed only in a small subset of the simulation steps.
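The branching procedure can be sketched for a Black–Scholes vega, under several assumptions that are ours rather than the paper's: the exact log-Euler scheme, uncoupled branches, and, in the standardized variable $z$, the vega decomposition $\big((z^2-1)/\sigma - z\sqrt{\Delta t_i}\big)\varphi(z) = \tfrac{1}{\sigma}z^2\varphi(z) - \tfrac{1}{\sigma}\varphi(z) - \sqrt{\Delta t_i/(2\pi)}\,\big(\rho(z) - \rho(-z)\big)$, where $\rho$ is the standard Rayleigh density. Because this decomposition has four components, each step is branched four ways here (two pairs). Double-sided Maxwell–Boltzmann draws are obtained as a random sign times $\sqrt{\chi^2_3}$, which is a swapped-in method, not the acceptance–rejection algorithm used in the paper. The function `mvd_vega` is hypothetical.

```python
import math
import numpy as np

def mvd_vega(payoff, s0, r, sigma, times, n_paths, seed=0):
    """Full-branching, uncoupled MVD vega under Black-Scholes (illustrative sketch).

    payoff maps an (n_paths, n_steps) array of simulated prices to payoffs.
    Returns the estimate and its standard error.
    """
    rng = np.random.default_rng(seed)
    times = np.asarray(times, dtype=float)
    dt = np.diff(times, prepend=0.0)  # Delta t_i, with t_0 = 0
    n_steps = dt.size

    def prices(z):
        # Exact log-Euler Black-Scholes path driven by standardized draws z.
        log_increments = (r - 0.5 * sigma**2) * dt + sigma * np.sqrt(dt) * z
        return s0 * np.exp(np.cumsum(log_increments, axis=1))

    base = rng.standard_normal((n_paths, n_steps))  # draws of the regular path
    total = np.zeros(n_paths)
    for i in range(n_steps):
        def branch(z_i):
            # Keep steps before i, replace step i, and resimulate later steps afresh.
            z = base.copy()
            z[:, i] = z_i
            z[:, i + 1:] = rng.standard_normal((n_paths, n_steps - i - 1))
            return payoff(prices(z))

        sign = np.where(rng.random(n_paths) < 0.5, -1.0, 1.0)
        maxwell = sign * np.sqrt(rng.chisquare(3, n_paths))  # density z^2 phi(z)
        total += (branch(maxwell) - branch(rng.standard_normal(n_paths))) / sigma
        rayleigh_diff = (branch(rng.rayleigh(1.0, n_paths))
                         - branch(-rng.rayleigh(1.0, n_paths)))
        total -= math.sqrt(dt[i] / (2.0 * math.pi)) * rayleigh_diff
    samples = math.exp(-r * times[-1]) * total
    return samples.mean(), samples.std(ddof=1) / math.sqrt(n_paths)

# Usage: vanilla call, four steps to T = 1, compared with the Black-Scholes vega.
call = lambda paths: np.maximum(paths[:, -1] - 100.0, 0.0)
estimate, std_err = mvd_vega(call, 100.0, 0.05, 0.2, [0.25, 0.5, 0.75, 1.0],
                             400_000, seed=1)
d1 = (0.05 + 0.5 * 0.2**2) / 0.2
exact = 100.0 * math.exp(-0.5 * d1 * d1) / math.sqrt(2.0 * math.pi)
print(estimate, std_err, exact)
```

The payoff is evaluated on the full simulated path, so the same routine applies unchanged to path-dependent payoffs; the cost grows with the square of the number of steps, which is the computational burden the text refers to.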

3. Absolute Measure-Valued Differentiation

An important consideration when choosing the form of the decomposition of the transition density derivative in (9) is the additional computational burden associated with generating random variates from the component distributions. The decomposition chosen in () and () is motivated, at least in part, by ensuring that one of the components coincides with the distribution already used to generate the underlying process. The remaining component, however, will generally follow a different distribution.

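The main motivation behind the absolute measure-valued differentiation (AMVD) method is to improve the computational efficiency of the MVD method by introducing only one additional distribution and branching off only one path at each simulation step, while retaining the key features of the MVD method, namely, not requiring the payoff derivative and producing sensitivity estimates with low variance. Using the notation from the previous section, the AMVD estimate for the sensitivity of a payoff $f$ with respect to the parameter $\theta$ is defined by

$$\frac{\partial}{\partial\theta}\mathbb{E}\big[f(X)\big] = \sum_{i=1}^{n}\int \bar c_i\,\operatorname{sgn}\Big(\frac{\partial p_i}{\partial\theta}\Big)\,f(x)\,\tilde p^{(i)}(x;\theta)\,dx, \tag{12}$$

where $\operatorname{sgn}$ is the sign function, $\bar c_i = \int_{\mathbb{R}}\big|\partial p_i(y \mid x_{i-1};\theta)/\partial\theta\big|\,dy$, $\bar p_i = \big|\partial p_i/\partial\theta\big|/\bar c_i$, and

$$\tilde p^{(i)}(x;\theta) = \bar p_i\prod_{j\neq i} p_j. \tag{13}$$

Since $\partial p_i/\partial\theta = \bar c_i\,\operatorname{sgn}(\partial p_i/\partial\theta)\,\bar p_i$, each summand in (12) equals $\int f(x)\,p^{(i)}(x;\theta)\,dx$ from (6), so the Monte Carlo estimator based on (12), which draws the $i$-th step from $\bar p_i$ and all other steps from $p_j$, is an unbiased estimator for $\partial\,\mathbb{E}[f(X)]/\partial\theta$. Moreover, although the AMVD method introduces what appears to be a problematic term, namely the discontinuous factor $\operatorname{sgn}(\partial p_i/\partial\theta)$, its square equals one wherever $\partial p_i/\partial\theta \neq 0$; since these terms are squared when computing the associated variance, they do not adversely affect the AMVD variance.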
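On the same hypothetical toy model $X \sim N(\theta, 1)$, the AMVD construction needs only one draw per sample: $|\partial p/\partial\theta| = |x-\theta|\,\varphi(x-\theta)$ has total mass $\sqrt{2/\pi}$, and its normalized version is the law of $\theta + Y$, where $Y$ has the absolute Rayleigh density $|y|e^{-y^2/2}/2$ (a Rayleigh magnitude with a fair random sign). The name `amvd_sensitivity` is our own.

```python
import math
import numpy as np

def amvd_sensitivity(payoff, theta, n_samples, seed=0):
    """AMVD estimate of d/dtheta E[f(X)] for the toy model X ~ N(theta, 1)."""
    rng = np.random.default_rng(seed)
    c = math.sqrt(2.0 / math.pi)  # integral over R of |(x - theta) phi(x - theta)|
    # Absolute Rayleigh draws: Rayleigh magnitude with an independent fair sign.
    sign = np.where(rng.random(n_samples) < 0.5, -1.0, 1.0)
    y = sign * rng.rayleigh(1.0, n_samples)
    # One path per sample, weighted by c times the sign of the density derivative.
    return float(np.mean(c * np.sign(y) * payoff(theta + y)))

K, theta = 0.5, 0.0
digital = lambda x: (x > K).astype(float)
exact = math.exp(-0.5 * (K - theta) ** 2) / math.sqrt(2.0 * math.pi)
print(amvd_sensitivity(digital, theta, 1_000_000), exact)
```

Because the weight is $\pm c$, each squared sample is $c^2 f^2$, which illustrates why the sign factor does not inflate the variance.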

4. Notation, Model, and Useful Identities

In situations where it applies, the pathwise differentiation (PW) method usually gives sensitivity estimates with lower variances than other methods, and it was shown in () that MVD sensitivities have lower variance than the corresponding finite difference estimates. In subsequent sections, we compare the variances of sensitivity estimates computed using the LR, MVD, AMVD, and PW methods. Although the analysis could be performed for any sensitivity and with any underlying model, we focus on delta and vega under the Black–Scholes () model. This allows us to obtain the variances of deltas and vegas for vanillas and digitals analytically, and to avoid more complex models that would not contribute in any substantial way to the analysis of the relative performance of the various approaches for computing sensitivities.

4.1. Black–Scholes Delta and Vega

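Let $r$ be the risk-free rate, $S_t$ the price of the underlying asset at time $t$, and $\sigma$ the asset volatility. Moreover, let $0 = t_0 < t_1 < \cdots < t_n = T$ be the sequence of Monte Carlo simulation times, and, for notational convenience, define

$$S_i = S_{t_i}, \qquad \Delta t_i = t_i - t_{i-1}, \tag{14}$$

for $i = 1, \ldots, n$. Applying the Euler–Maruyama scheme to $\log S_t$ under the Black–Scholes model, $dS_t = rS_t\,dt + \sigma S_t\,dW_t$, gives

$$S_i = S_{i-1}\exp\Big(\big(r - \tfrac{1}{2}\sigma^2\big)\Delta t_i + \sigma\sqrt{\Delta t_i}\,Z_i\Big), \tag{15}$$

which is exact, and hence unbiased, at the simulation times, where $Z_i$ is a standard normal random variable. If we define

$$z_i(x) = \frac{\log(x/S_{i-1}) - \big(r - \tfrac{1}{2}\sigma^2\big)\Delta t_i}{\sigma\sqrt{\Delta t_i}} \tag{16}$$

for $x > 0$, then the transition density, $p_i(x) = p_i(x \mid S_{i-1})$, is given by

$$p_i(x) = \frac{\varphi\big(z_i(x)\big)}{\sigma x\sqrt{\Delta t_i}}, \tag{17}$$

so that $z_i(S_i) = Z_i$. Straightforward calculations give

$$\frac{\partial p_i}{\partial S_{i-1}} = p_i\,\frac{z_i}{S_{i-1}\,\sigma\sqrt{\Delta t_i}}, \tag{18}$$

$$\frac{\partial z_i}{\partial\sigma} = \sqrt{\Delta t_i} - \frac{z_i}{\sigma}, \tag{19}$$

$$\frac{\partial p_i}{\partial\sigma} = p_i\Big(\frac{z_i^2 - 1}{\sigma} - z_i\sqrt{\Delta t_i}\Big), \tag{20}$$

where $z_i = z_i(x)$ takes the value $Z_i$, the standard normal random variate in (15), along a simulated path, and $\varphi$ is the standard normal density. In terms of the standardized variable $z = z_i(x)$, for which $p_i(x)\,dx = \varphi(z)\,dz$, the decompositions chosen for the MVD method in () are

$$z\,\varphi(z) = \frac{1}{\sqrt{2\pi}}\big(\rho(z) - \rho(-z)\big), \tag{21}$$

$$\Big(\frac{z^2 - 1}{\sigma} - z\sqrt{\Delta t_i}\Big)\varphi(z) = \frac{1}{\sigma}\,z^2\varphi(z) - \sqrt{\frac{\Delta t_i}{2\pi}}\,\rho(z) + \sqrt{\frac{\Delta t_i}{2\pi}}\,\rho(-z) - \frac{1}{\sigma}\,\varphi(z), \tag{22}$$

where $\rho(z) = z\,e^{-z^2/2}\,\mathbf{1}_{\{z \ge 0\}}$. Both terms in (21) correspond to the density of the Rayleigh distribution, while in (22), the first term corresponds to the double-sided Maxwell–Boltzmann distribution, the second and third terms correspond to the Rayleigh distribution, and the last term is the standard normal density. Note that the linear terms in (18) and (20) were decomposed into two Rayleigh density terms since they define signed measures rather than proper measures on $\mathbb{R}$. The decompositions that will be used in the AMVD method are

$$z\,\varphi(z) = \operatorname{sgn}(z)\,|z|\,\varphi(z), \tag{23}$$

$$\Big(\frac{z^2 - 1}{\sigma} - z\sqrt{\Delta t_i}\Big)\varphi(z) = \frac{1}{\sigma}\,\operatorname{sgn}\big(g_i(z)\big)\,\big|g_i(z)\big|\,\varphi(z), \qquad g_i(z) = z^2 - \sigma\sqrt{\Delta t_i}\,z - 1. \tag{24}$$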

4.2. Notation and Identities

For any , define polynomials, , by and for ,

Moreover, given any polynomial, , of degree n, and , let be the coefficients in the decomposition

which are well defined and unique since  is a basis for the space of polynomials of degree at most n. For the purposes of computing the sensitivity variances, we note that

so that

If for any given , we define the quadratic , by

then

and so

Next, squaring gives

and so , , and

Finally, for any , define

and given , , and a polynomial of degree n, define

In (37), we agree to set and . Note that the definition of was motivated by the identity

It will be seen that the variances of deltas and vegas for vanilla and digital options can be obtained analytically and expressed succinctly in terms of .

5. Vanilla Calls

For vanilla options, it is possible to derive analytic expressions for the variances of deltas and vegas for the LR, MVD, AMVD, and PW methods. We only provide the details for European calls, since the corresponding calculations for puts are analogous. Throughout this section, let K be the strike for a European call with expiry T, and let .

5.1. Vanilla Call Delta

Recall from (18) that if , then the derivative of the transition density from to with respect to is given by

where , and for notational clarity, we have written , and . The likelihood ratio estimator for the call delta is unbiased and computed using the expression

where

The variance of the likelihood ratio call delta is given by

The MVD delta is unbiased and is computed using the expression

where the terms are computed independently. The coefficient, , preceding the terms , is introduced to ensure that the product is a probability density function. The variance of the MVD delta is given by

where

The AMVD delta is unbiased and is computed using the expression

and its variance is given by

It should be noted that although  is approximately half the value of , implementing MVD using a Monte Carlo simulation requires two paths for each sample, while the AMVD method requires only one. Finally, the PW delta is given by

with the associated variance

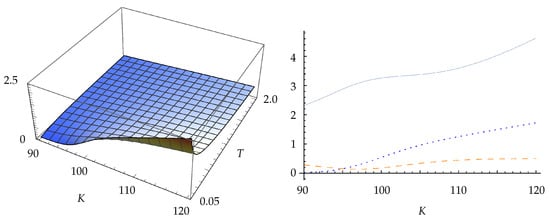

The ratios of variances for the vanilla call delta are shown in Figure 1 and Figure 2. It can be seen that the AMVD method gives lower variances than the LR method, but higher variances than the MVD method. Somewhat unexpectedly, the AMVD method results in lower variances than the PW method for short-dated deep in-the-money calls.

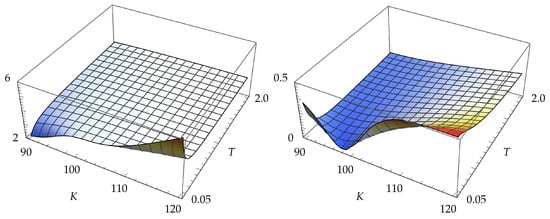

Figure 1.

On the left, there is the ratio and, on the right, there is the ratio .

Figure 2.

On the left, there is the ratio . On the right, there are variance ratios at . The solid curve is , the dashed curve is , and the dotted curve is .
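The four delta estimators compared in Figures 1 and 2 can be sketched for a single exact Black–Scholes step, so that only the first transition density depends on $S_0$. The sketch assumes illustrative parameters ($S_0 = K = 100$, $r = 0.05$, $\sigma = 0.2$, $T = 1$, not taken from the figures), the Rayleigh split of $z\varphi(z)$ for MVD, and the absolute Rayleigh law for AMVD; the function `delta_samples` is hypothetical. It prints per-sample variances relative to LR at one parameter point only, and the MVD figure should be weighed against its two paths per sample.

```python
import math
import numpy as np

def delta_samples(s0, k, r, sigma, t, n, seed=0):
    """Per-sample LR, MVD, AMVD and PW call deltas for one exact Black-Scholes step."""
    rng = np.random.default_rng(seed)
    disc, vol = math.exp(-r * t), sigma * math.sqrt(t)

    def terminal(z):
        # S_T as a function of the standardized draw z, holding S_0 fixed.
        return s0 * np.exp((r - 0.5 * sigma**2) * t + vol * z)

    def call(s):
        return np.maximum(s - k, 0.0)

    z = rng.standard_normal(n)
    s = terminal(z)
    lr = disc * call(s) * z / (s0 * vol)  # payoff times the delta score
    pw = disc * (s > k) * s / s0          # payoff derivative times dS_T/dS_0
    # MVD: z*phi(z) = (rho(z) - rho(-z)) / sqrt(2*pi); two paths per sample.
    mvd = disc / (s0 * vol * math.sqrt(2.0 * math.pi)) * (
        call(terminal(rng.rayleigh(1.0, n))) - call(terminal(-rng.rayleigh(1.0, n))))
    # AMVD: sign-weighted payoff, with y from the absolute Rayleigh law; one path.
    y = rng.rayleigh(1.0, n) * np.where(rng.random(n) < 0.5, -1.0, 1.0)
    amvd = disc * math.sqrt(2.0 / math.pi) / (s0 * vol) * np.sign(y) * call(terminal(y))
    return {"LR": lr, "MVD": mvd, "AMVD": amvd, "PW": pw}

samples = delta_samples(100.0, 100.0, 0.05, 0.2, 1.0, 200_000, seed=42)
for name, values in samples.items():
    print(name, values.mean(), values.var(ddof=1) / samples["LR"].var(ddof=1))
```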

5.2. Vanilla Call Vega

The derivative of the transition density, , with respect to is given by

where is as defined previously. The likelihood ratio vega for the vanilla call is unbiased and is given by

so that . The associated variance is

The MVD vanilla call vega is unbiased and is computed using the decomposition

where it is assumed that the components are uncoupled. It should be noted that the first and last terms are coupled in () and () to reduce the resulting variance. The variance associated with the uncoupled MVD vanilla call vega is

The AMVD vanilla call vega is computed using the expression

where

and the associated variance is

Finally, the pathwise vega for the vanilla call is given by

with the associated variance

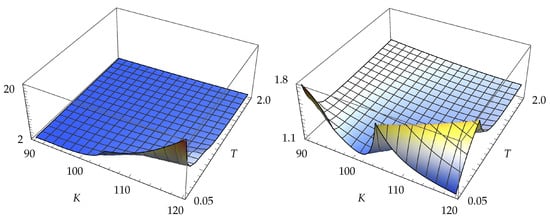

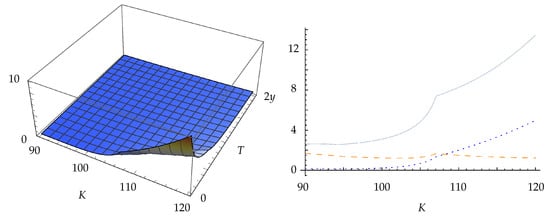

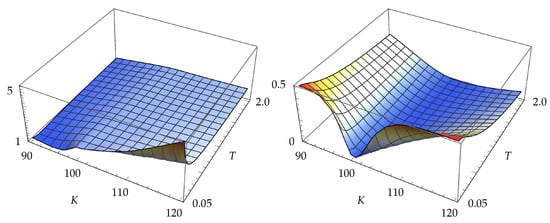

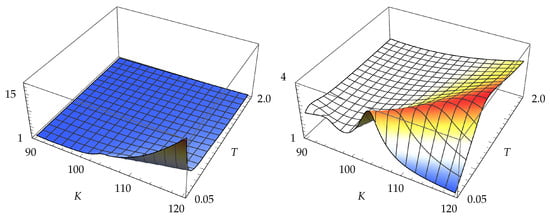

The ratios of variances for vanilla call vegas are shown in Figure 3 and Figure 4. As was the case with the deltas, the AMVD method gives lower variances than the LR method, but, in contrast to the deltas, it also produces lower variances than the MVD method. The AMVD method also produces lower variances than the PW method for in-the-money calls.

Figure 3.

On the left, there is the ratio and, on the right, there is the ratio .

Figure 4.

On the left, there is the ratio . On the right, there are variance ratios at . The solid curve is , the dashed curve is , and the dotted curve is .
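For reference, the two classical vega estimators against which AMVD is compared in Figures 3 and 4 can be sketched for a single exact Black–Scholes step. The LR score $(z^2-1)/\sigma - z\sqrt{T}$ follows from differentiating the lognormal transition density with respect to $\sigma$, and the PW weight uses $\partial S_T/\partial\sigma = S_T\big(\log(S_T/S_0) - (r + \sigma^2/2)T\big)/\sigma$. The function `vega_samples` and the parameter values are illustrative assumptions.

```python
import math
import numpy as np

def vega_samples(s0, k, r, sigma, t, n, seed=0):
    """Per-sample LR and PW call vegas for one exact Black-Scholes step."""
    rng = np.random.default_rng(seed)
    disc, sqrt_t = math.exp(-r * t), math.sqrt(t)
    z = rng.standard_normal(n)
    s = s0 * np.exp((r - 0.5 * sigma**2) * t + sigma * sqrt_t * z)
    # LR: payoff times the score d/dsigma log p = (z^2 - 1)/sigma - z*sqrt(t).
    lr = disc * np.maximum(s - k, 0.0) * ((z * z - 1.0) / sigma - z * sqrt_t)
    # PW: indicator times dS_T/dsigma = S_T * (log(S_T/S_0) - (r + sigma^2/2) t) / sigma.
    pw = disc * (s > k) * s * (np.log(s / s0) - (r + 0.5 * sigma**2) * t) / sigma
    return {"LR": lr, "PW": pw}

samples = vega_samples(100.0, 100.0, 0.05, 0.2, 1.0, 2_000_000, seed=7)
d1 = (math.log(100.0 / 100.0) + (0.05 + 0.5 * 0.2**2) * 1.0) / (0.2 * 1.0)
exact = 100.0 * math.sqrt(1.0) * math.exp(-0.5 * d1 * d1) / math.sqrt(2.0 * math.pi)
for name, values in samples.items():
    print(name, values.mean(), values.var(ddof=1), exact)
```

The PW vega needs the payoff derivative, so, unlike the LR, MVD, and AMVD estimators, it does not carry over to the digital calls of the next section.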

6. Digital Calls

In this section, we compute the LR, MVD, and AMVD variances for digital call deltas and vegas. Once again, we only provide details for calls, since the calculations for digital puts are analogous; let K be the strike for a digital call with expiry T.

6.1. Digital Call Delta

The likelihood ratio delta for the digital call is computed using the expression

and the associated variance is

The MVD delta for the digital call is computed using the expression

and the variance is given by

Finally, the AMVD delta for the digital call is given by

with the associated variance

We note that the PW method cannot be applied to digital options, since their payoff is discontinuous.

The ratios of variances for digital call delta are shown in Figure 5. The AMVD method gives lower variances than the LR method, particularly for short-dated deep in-the-money digitals. The MVD method gives lower variances than the AMVD method, most noticeably for at-the-money digitals.

Figure 5.

On the left, there is the ratio and, on the right, there is the ratio .

6.2. Digital Call Vega

The likelihood ratio vega for the digital call is computed using the expression

and the associated variance is

The MVD vega for the digital call is computed using the expression

and the associated variance is given by

Finally, the AMVD vega for the digital call is computed using the expression

with the associated variance

The ratios of variances for digital call vegas are shown in Figure 6, where it can be seen that the AMVD method gives lower variance than both the LR and the MVD methods.

Figure 6.

On the left, there is the ratio and, on the right, there is the ratio .

7. Double-Barrier Option

In this section, we consider the delta and vega for a double-barrier option that knocks out if the closing price of the underlying asset lies outside the range, , on at least three days prior to expiry, where , and pays the constant rebate otherwise. We take , , , and subdivide the interval into 90 equal subintervals. This is the example considered in () Subsection 5.3.
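The knock-out rule described above can be expressed as a payoff on simulated daily closes. In this sketch, the barrier levels and the rebate are placeholders, since their values are not reproduced here; `double_barrier_payoff` is a hypothetical name, and closes exactly at a barrier are treated as inside the range, which is our assumption.

```python
import numpy as np

def double_barrier_payoff(closes, lower, upper, rebate, max_breaches=3):
    """Hypothetical payoff: 0 if the close lies outside [lower, upper] on at least
    `max_breaches` monitoring days, otherwise the constant `rebate`.

    closes: array of shape (n_paths, n_days) of simulated daily closing prices.
    """
    closes = np.asarray(closes, dtype=float)
    outside = (closes < lower) | (closes > upper)  # breach indicator per day
    breaches = outside.sum(axis=1)                 # number of breach days per path
    return np.where(breaches >= max_breaches, 0.0, rebate)

# Usage with made-up levels and three short paths.
closes = np.array([[100.0, 101.0, 99.0, 100.0],
                   [85.0, 84.0, 100.0, 83.0],
                   [85.0, 125.0, 100.0, 100.0]])
print(double_barrier_payoff(closes, lower=90.0, upper=120.0, rebate=1.0))  # [1. 0. 1.]
```

Since the payoff is a discontinuous function of the path, the PW method does not apply, which is why only the LR, MVD, and AMVD methods are compared in this section.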

7.1. Generation of Non-Normal Random Variates

In order to implement the AMVD method, and the MVD method for comparison purposes, we need to generate random variates from the Rayleigh distribution, the double-sided Maxwell–Boltzmann distribution, and the distributions corresponding to the absolute value densities appearing in (23) and (24).

7.1.1. Rayleigh Random Variate

The standard Rayleigh distribution is defined for $x \ge 0$ and has the probability density function

$$\rho(x) = x\,e^{-x^2/2}$$

and the cumulative distribution function

$$F_\rho(x) = 1 - e^{-x^2/2}.$$

Since the inverse of the cumulative distribution function is given by

$$F_\rho^{-1}(u) = \sqrt{-2\log(1-u)}$$

for $u \in [0, 1)$, a variate from the Rayleigh distribution can be generated using the following algorithm:

- R1. Generate a uniform variate $u \sim U[0, 1)$.

- R2. Set $x = F_\rho^{-1}(u) = \sqrt{-2\log(1-u)}$, which is then a Rayleigh random variate.
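Steps R1 and R2 translate directly into a vectorized inverse transform. This is a sketch; `rayleigh_variates` is a hypothetical name, and `numpy.log1p(-u)` is used to evaluate $\log(1-u)$ accurately for small $u$.

```python
import math
import numpy as np

def rayleigh_variates(n, rng):
    """Standard Rayleigh variates via steps R1-R2 (inverse transform)."""
    u = rng.random(n)                    # R1: u ~ U[0, 1)
    return np.sqrt(-2.0 * np.log1p(-u))  # R2: x = sqrt(-2 log(1 - u))

rng = np.random.default_rng(0)
x = rayleigh_variates(1_000_000, rng)
print(x.mean(), math.sqrt(math.pi / 2.0))  # sample mean vs. the Rayleigh mean
```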

7.1.2. Double-Sided Maxwell–Boltzmann Random Variate

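The standard Maxwell–Boltzmann distribution is defined for $x \ge 0$ and has probability density function

$$m(x) = \sqrt{\frac{2}{\pi}}\,x^2 e^{-x^2/2}.$$

Reflecting $m$ about the vertical axis and halving it gives the density $x^2\varphi(x)$ on $\mathbb{R}$ of the double-sided Maxwell–Boltzmann distribution.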

An algorithm for generating variates from the Maxwell–Boltzmann distribution based on the acceptance–rejection method, and introduced in (), is as follows:

- MB1. Generate independent uniform variates . Set .

- MB2. If , then go back to step MB1.³

- MB3. Generate a uniform variate , independent of and .

- MB4. If , set , and set otherwise. Then, x is a double-sided Maxwell–Boltzmann random variate.
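As a cross-check for an implementation of MB1–MB4, double-sided Maxwell–Boltzmann variates can also be produced by a different, well-known construction: the Euclidean norm of a three-dimensional standard normal vector has the Maxwell–Boltzmann density $m$, and an independent fair sign makes it double-sided. This is a swapped-in method, not the acceptance–rejection algorithm above, and it is typically slower because it consumes three normal draws per variate; `double_sided_maxwell_variates` is a hypothetical name.

```python
import numpy as np

def double_sided_maxwell_variates(n, rng):
    """Variates with density x^2 phi(x) on the real line (not the MB1-MB4 algorithm).

    The norm of a 3-D standard normal vector is Maxwell-Boltzmann distributed;
    an independent fair sign makes it double-sided.
    """
    magnitude = np.linalg.norm(rng.standard_normal((n, 3)), axis=1)
    sign = np.where(rng.random(n) < 0.5, -1.0, 1.0)
    return sign * magnitude

rng = np.random.default_rng(0)
x = double_sided_maxwell_variates(1_000_000, rng)
print(np.mean(x**2))  # should be close to 3, the second moment of x^2 phi(x)
```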

7.1.3. Absolute Rayleigh Distribution

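Consider the random variable with domain $\mathbb{R}$ and the probability density function

$$\rho_a(x) = \tfrac{1}{2}\,|x|\,e^{-x^2/2}.$$

Note that $\rho_a$ is obtained by extending $\rho$ to $\mathbb{R}$ using symmetry about the vertical axis and halving it so that it integrates to one. Its cumulative distribution function is $\tfrac{1}{2}e^{-x^2/2}$ for $x < 0$ and $1 - \tfrac{1}{2}e^{-x^2/2}$ for $x \ge 0$. Hence, an algorithm for generating a variate from the absolute Rayleigh distribution is as follows:

- AR1. Generate a uniform variate $u \sim U(0, 1)$.

- AR2. Then, an absolute Rayleigh random variate, $x$, is given by

$$x = \begin{cases} -\sqrt{-2\log(2u)}, & u < \tfrac{1}{2}, \\[2pt] \sqrt{-2\log\big(2(1-u)\big)}, & u \ge \tfrac{1}{2}. \end{cases}$$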
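A vectorized sketch of AR1 and AR2 follows; `absolute_rayleigh_variates` is a hypothetical name. Because a pseudo-random generator returning values in $[0, 1)$ can produce $u = 0$, the argument of the logarithm is clipped away from zero, which is our own safeguard.

```python
import math
import numpy as np

def absolute_rayleigh_variates(n, rng):
    """Variates with density |x| exp(-x^2/2) / 2 via AR1-AR2 (inverse transform)."""
    u = rng.random(n)  # AR1
    # AR2: invert F(x) = exp(-x^2/2)/2 for x < 0 and F(x) = 1 - exp(-x^2/2)/2 for x >= 0.
    tail = np.where(u < 0.5, 2.0 * u, 2.0 * (1.0 - u))
    tail = np.clip(tail, np.finfo(float).tiny, 1.0)  # guard against log(0) at u = 0
    magnitude = np.sqrt(-2.0 * np.log(tail))
    return np.where(u < 0.5, -magnitude, magnitude)

rng = np.random.default_rng(0)
x = absolute_rayleigh_variates(1_000_000, rng)
print(np.abs(x).mean(), math.sqrt(math.pi / 2.0))  # |X| is standard Rayleigh
```

Only one uniform and one logarithm are needed per variate, the same cost as the Rayleigh sampler.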

7.1.4. Absolute Quadratic Normal (AQN) Distribution

Assume , and let , , and be as defined in (29), (49), and (50), respectively. Consider the random variable with domain , and the probability density function

Note that  are the roots of , and  for . If we denote by  the corresponding cumulative distribution function, then a straightforward calculation gives

Note that, in each of the three regions, has the form

where and . Thus, given a uniform random variate u, to compute the corresponding variate with density , we need to compute the root of a function of the form

with and appropriately chosen according to the value of u. For this purpose, we apply Newton’s method noting that

Thus, given an initial point , we can apply the recursion

until the desired degree of precision is achieved.

However, since the derivative of the objective function is the AQN density, which vanishes at the roots of the quadratic, Newton’s method can be highly sensitive to the initial point . In fact, a poor initial value can cause the method to fail to converge to the correct root. Thus, it is crucial that the initial point be chosen carefully, and, for this, we take inspiration from (), where the Weibull distribution is used in the acceptance–rejection method to generate double-sided Maxwell–Boltzmann variates.
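The Newton inversion can be sketched for a hypothetical stand-in for the AQN density, since the exact parameters from (29), (49), and (50) are not reproduced here: assume a density proportional to $|z^2 - \beta z - 1|\,\varphi(z)$. Integration by parts gives $\int_{-\infty}^{z}(t^2 - \beta t - 1)\varphi(t)\,dt = (\beta - z)\varphi(z)$, so in each of the three regions the distribution function involves a single exponential. Instead of the Weibull and quadratic initial points described next, this sketch swaps in a simpler safeguard: it starts at zero and falls back to bisection whenever a Newton step leaves the current bracket. The name `aqn_inverse_cdf` is our own.

```python
import math

def aqn_inverse_cdf(u, beta, tol=1e-12, max_iter=200):
    """Hypothetical inverse CDF for the density |z^2 - beta*z - 1| * phi(z) / C."""
    sqrt_2pi = math.sqrt(2.0 * math.pi)

    def antiderivative(z):
        # Integral of (t^2 - beta*t - 1) phi(t) over (-inf, z]; one exponential per call.
        return (beta - z) * math.exp(-0.5 * z * z) / sqrt_2pi

    disc = math.sqrt(beta * beta + 4.0)
    z_lo, z_hi = 0.5 * (beta - disc), 0.5 * (beta + disc)  # roots of the quadratic
    a_lo, a_hi = antiderivative(z_lo), antiderivative(z_hi)
    total = 2.0 * (a_lo - a_hi)  # normalizing constant C

    def cdf(z):
        # The sign of the quadratic flips at each root, giving three regions.
        if z <= z_lo:
            return antiderivative(z) / total
        if z <= z_hi:
            return (2.0 * a_lo - antiderivative(z)) / total
        return (2.0 * a_lo - 2.0 * a_hi + antiderivative(z)) / total

    def pdf(z):
        return abs(z * z - beta * z - 1.0) * math.exp(-0.5 * z * z) / (sqrt_2pi * total)

    lo, hi = -40.0, 40.0  # bracket that contains the solution of cdf(z) = u
    z = 0.0
    for _ in range(max_iter):
        h = cdf(z) - u
        if abs(h) < tol:
            break
        if h > 0.0:
            hi = z
        else:
            lo = z
        slope = pdf(z)
        candidate = z - h / slope if slope > 0.0 else math.nan
        # Accept the Newton step only if it stays inside the bracket; otherwise bisect.
        z = candidate if lo < candidate < hi else 0.5 * (lo + hi)
    return z

print([aqn_inverse_cdf(u, 0.3) for u in (0.1, 0.5, 0.9)])
```

Near the roots of the quadratic the density, and hence the Newton slope, is close to zero, which is exactly where an unguarded Newton step can overshoot; the bracket keeps the iteration convergent at the cost of occasional bisection steps.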

For the two regions, and , we approximate the AQN density using Weibull distributions

with the cumulative distribution function

The parameters, , are determined by matching the variances of the approximating Weibull distributions to the variances of the AQN variate restricted to the intervals ] and ], respectively. This gives

where are variances of the AQN variate restricted to ] and [. For the details, refer to Appendix A.

For the region , we approximate the AQN cumulative distribution function on  and , where , using quadratics

with determined by setting , which gives

The objective function, h, and the initial point, , for the four regions determined by the uniform random variate are shown in Table 1.

Table 1.

Objective functions and the initial values for generating the AQN random variate using Newton’s method, where .

Using the initial values given in Table 1, Newton’s method converges within four iterations in most cases. It should be noted that each Newton iteration only involves elementary operations and one exponential, and so the method is quite computationally efficient.

7.2. Double-Barrier Vega

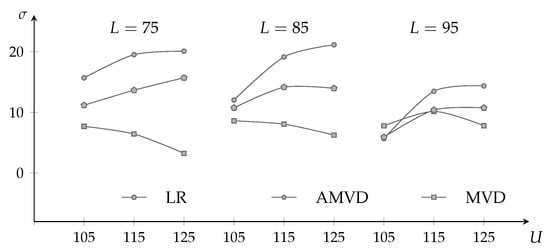

Vegas for the double knock-out barrier option were computed using a Monte Carlo simulation implemented in C++, with uniform random variates generated by the Mersenne Twister from the Boost library.⁴ For each method, 100 independent valuations were performed, each using 50,000 paths for the MVD method and 100,000 paths for the LR and AMVD methods. Standard deviations were then computed from these 100 samples. The lower and upper barriers were set to  and  for . The resulting means and standard deviations of vegas for a subset of  pairs are shown in Table 2.

Table 2.

Means and standard deviations of vegas for double-barrier options with varying lower and upper barriers using the LR, MVD, and AMVD methods.

It is evident from Figure 7 that MVD vegas generally have the lowest standard deviations for double-barrier options, followed by those of the AMVD method and then the LR method. It is worth noting that the standard deviation of the AMVD vegas is lowest, in relative terms, when the upper barrier is close to the lower barrier. In fact, the AMVD method has the lowest standard deviation among the three methods when .

Figure 7.

Comparison of double-barrier option vega standard deviations for the LR, MVD, and AMVD methods.

This is consistent with an observation made in previous sections that the method giving sensitivities with the smallest variance will not only depend on the derivative being considered but also on the details such as strikes and barrier levels, and the way each method distributes densities relative to the strikes and barriers. The implementer should experiment and choose the method that is most appropriate for each application.

8. Conclusions

In this paper, we introduced an alternative to the measure-valued differentiation (MVD) method for computing Monte Carlo sensitivities, called the absolute measure-valued differentiation (AMVD) method, which decomposes the density derivative as the product of its sign and its absolute value. This method shares many of the properties of the MVD method, but instead of initiating two additional paths in each simulation step of the original path, it initiates only one additional subpath. It was shown that the AMVD method gives vega estimates with lower variance than the MVD method for vanilla and digital calls in the Black–Scholes model. Moreover, the AMVD vega estimates have lower variance than the pathwise differentiation method in certain situations, such as the case of short-dated deep in-the-money vanilla calls. A consideration of the vegas for the more exotic double-barrier options revealed that the relative performances of the likelihood ratio, MVD, and AMVD methods depend, in general, on the positions of the barriers, and the way each method distributes densities relative to the barriers.

As is the case with the MVD method, applying the AMVD method requires the generation of nonstandard random variates. For the examples considered in this paper, we provided a highly efficient implementation of the inverse transform method for this purpose using Newton’s method with a careful selection of the initial point.

Author Contributions

Conceptualization, M.J. and O.K.K.; methodology, M.J. and O.K.K.; software, O.K.K.; formal analysis, M.J., O.K.K. and S.S.; writing—original draft preparation, M.J. and O.K.K.; writing—review and editing, O.K.K. and S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data and software used in this article are available on request from the corresponding author (O.K.).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Initial Values for Newton’s Method

For the initial point in Newton’s method to invert the AQN cumulative distribution function, we approximate the cumulative distribution function using a Weibull distribution with matching variance. For this purpose, note that the mean, , and the second moment, , for the AQN distribution over the region  are given by

where , and

The variance is given by , and the corresponding mean and second moment for the approximating Weibull distribution are

and

The variance of the approximating Weibull distribution is given by

and similar calculations for the region  give

where . Equating the corresponding variances gives

Appendix B. Vanilla Call Variances

Appendix B.1. Likelihood Ratio Call Delta Variance

The second moment associated with the likelihood ratio delta for the vanilla call is given by

Computing the three terms, we obtain

Appendix B.2. MVD Call Delta Variance

The second moment for the MVD delta estimate is given by

Computing the first term, we obtain

and the three subterms are given by

Similarly, computing the second term, we obtain

and the three subterms are given by

Appendix B.3. AMVD Call Delta Variance

The second moment for the AMVD delta estimate is given by

Appendix B.4. Likelihood Ratio Call Vega Variance

The second moment associated with the likelihood ratio vega for the vanilla call is given by

where is as defined in (30). Expanding out the first term, we obtain

Computing the three terms gives

Appendix B.5. MVD Call Vega Variance

The second moment associated with the MVD vega is given by

The four terms are as follows:

Appendix B.6. AMVD Call Vega Variance

The second moment associated with the AMVD vega is given by

The three terms are as follows:

Appendix C. Digital Call Variances

Appendix C.1. Likelihood Ratio Digital Call Delta Variance

The second moment associated with the likelihood ratio delta for the digital call is

Appendix C.2. MVD Digital Call Delta Variance

The second moment associated with the MVD delta for the digital call is

Appendix C.3. AMVD Digital Call Delta Variance

The second moment associated with the AMVD delta for the digital call is

Appendix C.4. Likelihood Ratio Digital Call Vega Variance

The second moment associated with the likelihood ratio vega for the digital call is

Appendix C.5. MVD Digital Call Vega Variance

The second moment associated with the MVD vega for the digital call is

Appendix C.6. AMVD Digital Call Vega Variance

The second moment associated with the AMVD vega for the digital call is

Notes

| 1 | This is also the case for the MVD method. |

| 2 | It should be noted, however, that this higher computational burden is partially offset by the MVD sensitivity estimates having lower variance. |

| 3 | We note that the inequality involving in () is in the opposite direction, which is most likely a typographical error. |

| 4 | Refer to www.boost.org. Accessed 3 June 2022. |

References

- Black, Fischer, and Myron Scholes. 1973. The pricing of options and corporate liabilities. Journal of Political Economy 81: 637–54.

- Brace, Alan. 2007. Engineering BGM. Sydney: Chapman and Hall.

- Capriotti, Luca, and Mike Giles. 2018. Fast Correlation Greeks by Adjoint Algorithmic Differentiation. arXiv:1004.1855v1.

- Chan, Jiun Hong, and Mark Joshi. 2015. Optimal limit methods for computing sensitivities of discontinuous integrals including triggerable derivative securities. IIE Transactions 47: 978–97.

- Glasserman, Paul. 2004. Monte Carlo Methods in Financial Engineering. New York: Springer.

- Heidergott, Bernd, Felisa J. Vázquez-Abad, and Warren Volk-Makarewicz. 2008. Sensitivity estimation for Gaussian systems. European Journal of Operational Research 187: 193–207.

- Joshi, Mark S., and Dherminder Kainth. 2004. Rapid and accurate development of prices and Greeks for n-th to default credit swaps in the Li model. Quantitative Finance 4: 266–75.

- Liu, Guangwu, and L. Jeff Hong. 2011. Kernel estimation of the Greeks for options with discontinuous payoffs. Operations Research 59: 96–108.

- Lyuu, Yuh-Dauh, and Huei-Wen Teng. 2011. Unbiased and efficient Greeks of financial options. Finance and Stochastics 15: 141–81.

- Pflug, Georg Ch., and Philipp Thoma. 2016. Efficient calculation of the Greeks for exponential Lévy processes: An application of measure valued differentiation. Quantitative Finance 16: 247–57.

- Rott, Marius G., and Christian P. Fries. 2005. Fast and Robust Monte Carlo CDO Sensitivities and Their Efficient Object Oriented Implementation. SSRN, May 31.

- Xiang, Jiangming, and Xiaoqun Wang. 2022. Quasi-Monte Carlo simulation for American option sensitivities. Journal of Computational and Applied Mathematics 413: 114268.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).