Professional Forecasters vs. Shallow Neural Network Ensembles: Assessing Inflation Prediction Accuracy

Abstract

1. Introduction

2. Materials and Methods

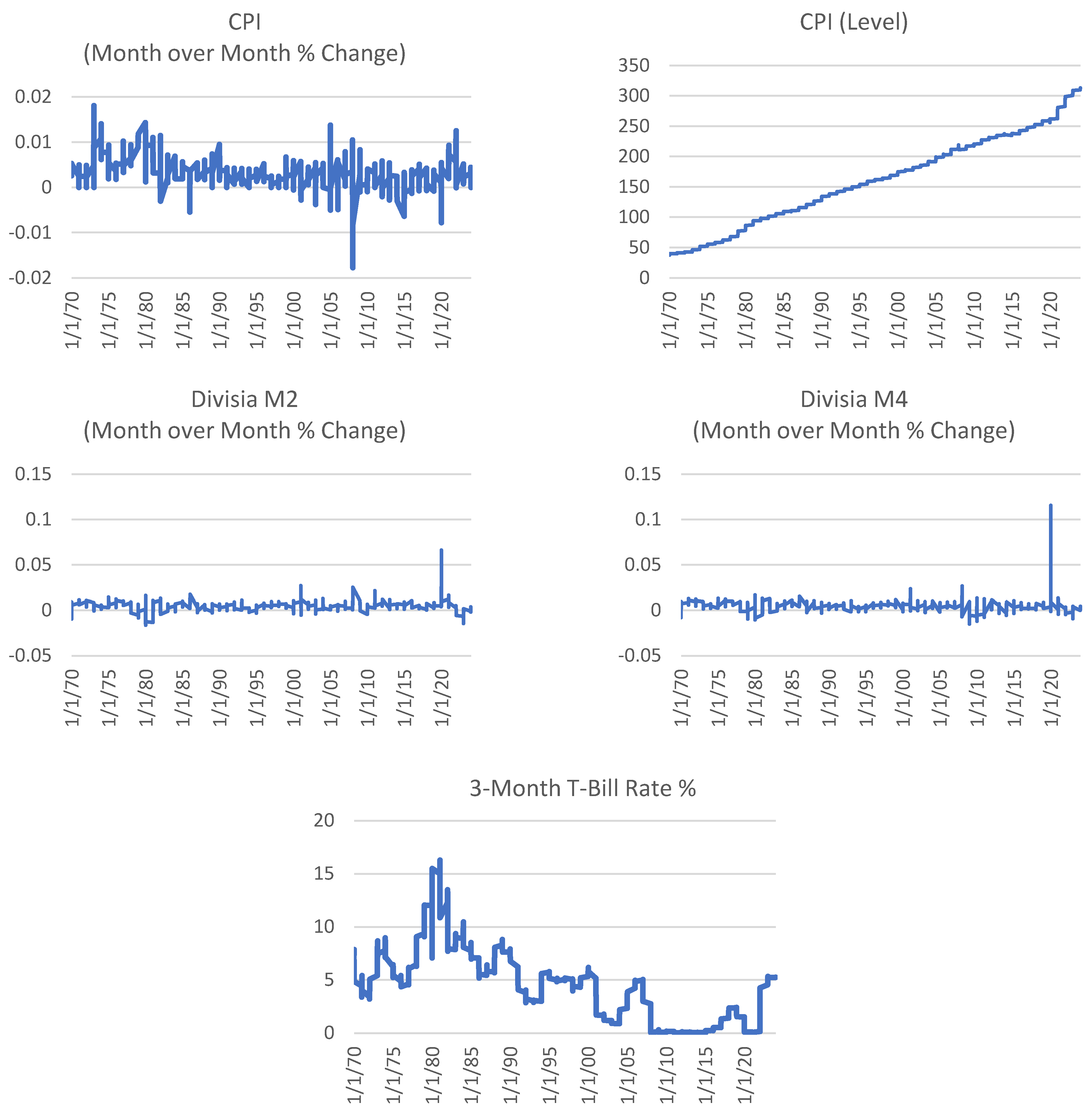

2.1. Data

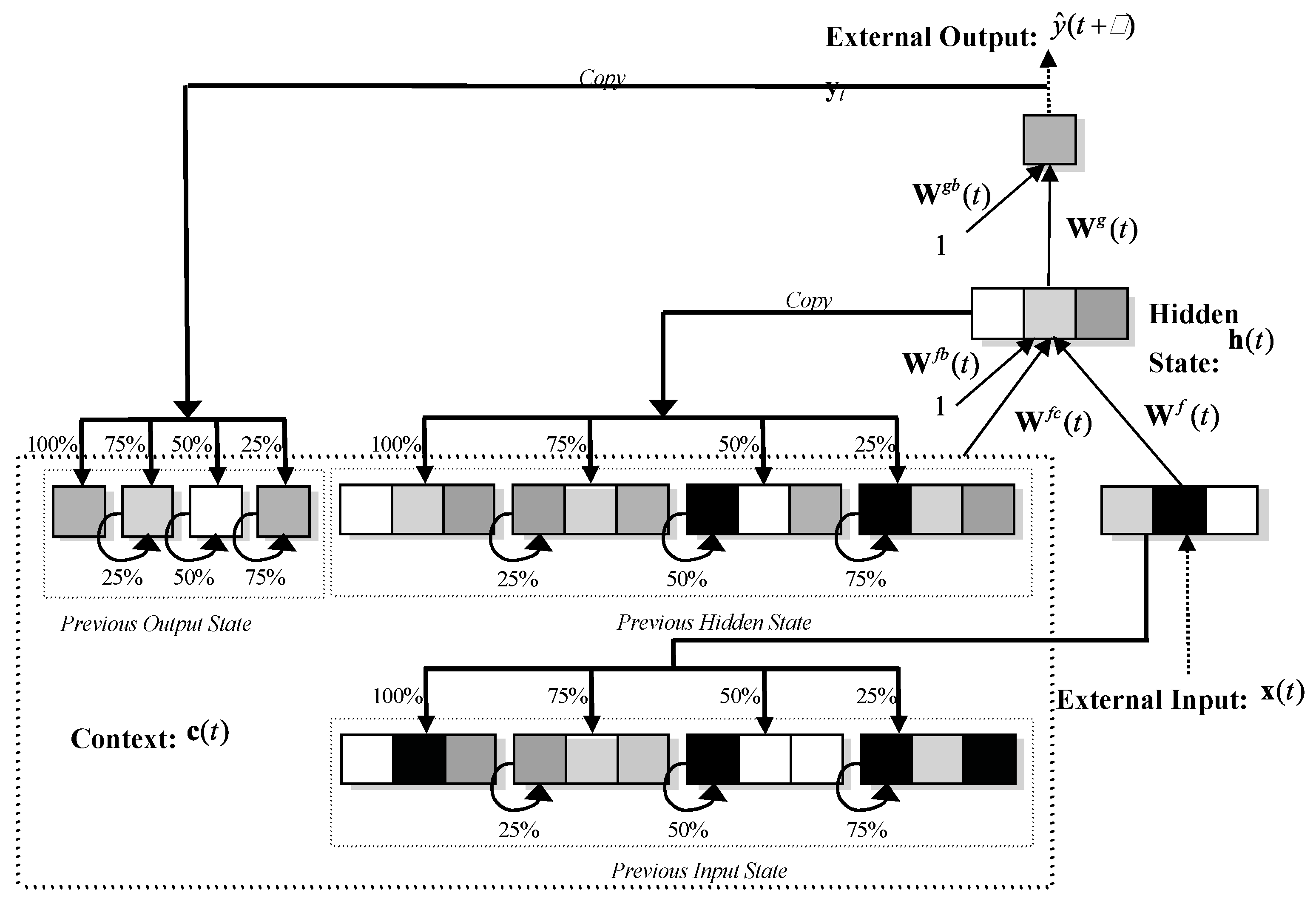

2.2. The Multi-Recurrent Network Methodology

- (a) Simple MRN, consisting of three input variables: the month-on-month percentage change in inflation (cpi_percmom, the auto-regressive term), the natural log of the price level (ln_cpi_level), and the 3-Month Treasury Bill Secondary Market Rate (dtb3).

- (b) Intermediate MRN, which includes the inputs of (a) plus the month-on-month growth rate (%) of the Divisia monetary measure DM4 (dm4_percmom).

- (c) Complex MRN, which includes the inputs of (b) plus the month-on-month growth rate (%) of the Divisia monetary measure DM2 (dm2_percmom).
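The nesting of the three input sets can be sketched as follows. This is a minimal illustration, not the authors' code: the variable names come from the list above, while the helper functions `perc_mom` and `ln_level` are hypothetical, and the sketch assumes that the `_percmom` series are month-on-month percentage changes of the underlying level series, as their names suggest.

```python
import math

# Input sets for the three MRN variants, nested as described in (a)-(c).
SIMPLE_INPUTS = ["cpi_percmom", "ln_cpi_level", "dtb3"]
INTERMEDIATE_INPUTS = SIMPLE_INPUTS + ["dm4_percmom"]
COMPLEX_INPUTS = INTERMEDIATE_INPUTS + ["dm2_percmom"]


def perc_mom(levels):
    """Month-on-month percentage change of a level series (hypothetical helper).

    Returns one value fewer than the input, since the first month has no
    predecessor.
    """
    return [100.0 * (curr / prev - 1.0) for prev, curr in zip(levels, levels[1:])]


def ln_level(levels):
    """Natural log of a strictly positive level series (hypothetical helper)."""
    return [math.log(x) for x in levels]
```

Each larger model only appends one series to the previous input list, so any difference in forecast accuracy between variants can be attributed to the added Divisia growth rate rather than to a change in the shared inputs.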

2.3. Survey of Professional Forecasters (SPF)

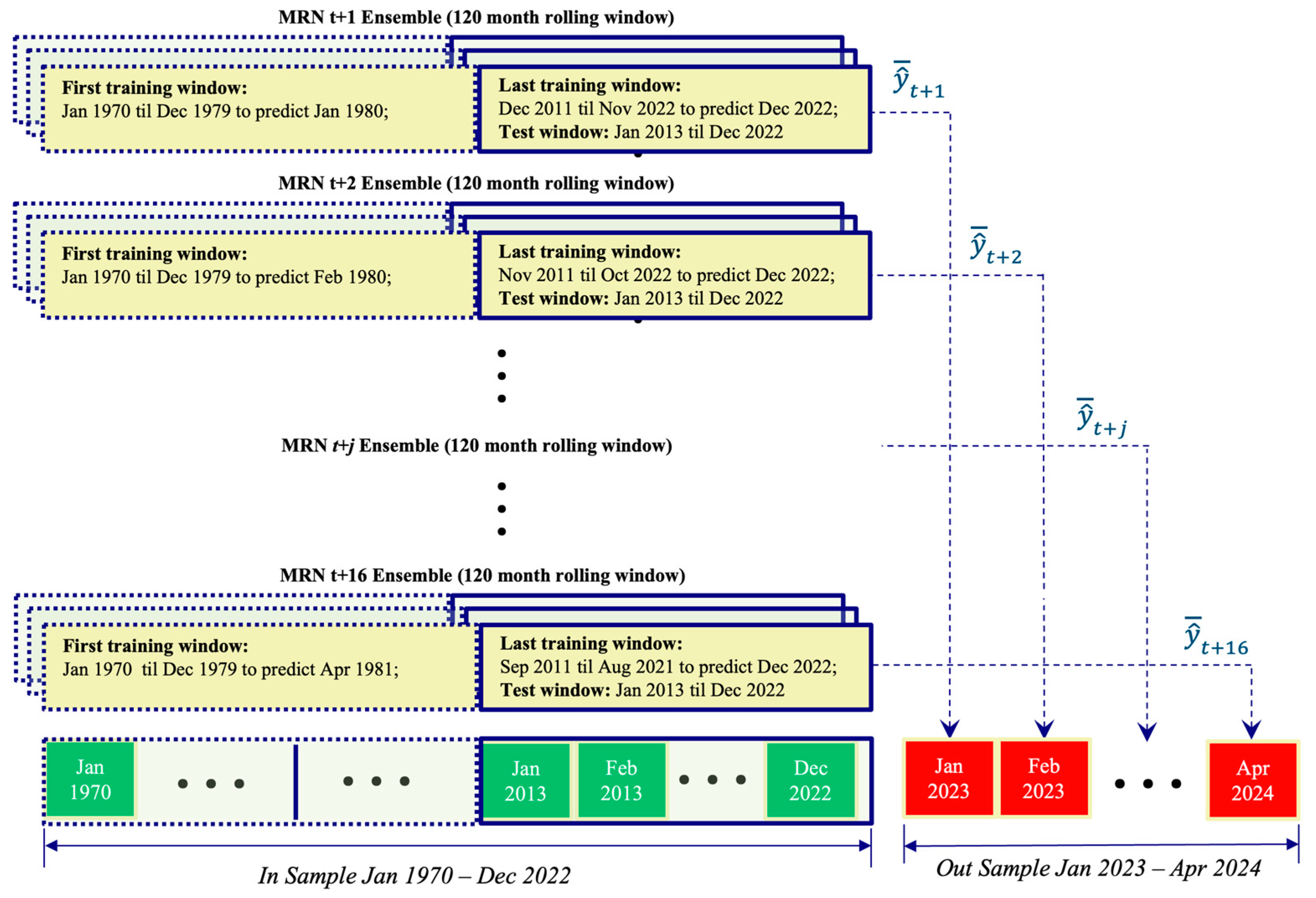

2.4. Forecast Evaluation Procedure

- Root Mean Squared Error (RMSE), which measures the typical size of forecast errors and penalizes large deviations more heavily than small ones.

- Symmetric Mean Absolute Percentage Error (sMAPE), which expresses errors as a percentage, simplifying comparisons across different scales.

- Theil’s U Statistic, which compares model performance to a naïve forecast, with lower values indicating better accuracy.

- Improvement Over Random Walk, which quantifies how much better a forecasting model performs than a random walk benchmark, expressed as a proportion.
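The four measures above can be sketched in a few lines of Python. The exact formulas are not given in this section, so the definitions below are assumptions: sMAPE uses the common form with |actual| + |forecast| in the denominator, Theil's U is taken as the ratio of the model's RMSE to the RMSE of a no-change (random walk) forecast, and the improvement is taken as one minus that ratio. All function names are hypothetical.

```python
import math


def rmse(actual, forecast):
    """Root mean squared error between two equal-length sequences."""
    errors = [f - a for a, f in zip(actual, forecast)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def smape(actual, forecast):
    """Symmetric MAPE in percent (assumed form: 2|f - a| / (|a| + |f|))."""
    terms = []
    for a, f in zip(actual, forecast):
        denom = abs(a) + abs(f)
        # Treat a 0/0 term as zero error rather than dividing by zero.
        terms.append(0.0 if denom == 0 else 2.0 * abs(f - a) / denom)
    return 100.0 * sum(terms) / len(terms)


def theil_u(actual, forecast):
    """Assumed form: model RMSE divided by the RMSE of a no-change forecast.

    The naive forecast for period t is the actual value at t - 1, so the
    first observation is dropped from both RMSEs. Raises ZeroDivisionError
    if the actual series never changes.
    """
    naive = actual[:-1]
    return rmse(actual[1:], forecast[1:]) / rmse(actual[1:], naive)


def improvement_over_random_walk(actual, forecast):
    """Assumed form: proportional RMSE reduction relative to the random walk."""
    return 1.0 - theil_u(actual, forecast)
```

For example, with `actual = [2.0, 3.0, 5.0]` and `forecast = [2.0, 3.5, 4.0]`, the model errors over the last two periods are half the size of the no-change errors, so `theil_u` returns 0.5 and the improvement over the random walk is 0.5.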

3. Results

3.1. Forecast Evaluation

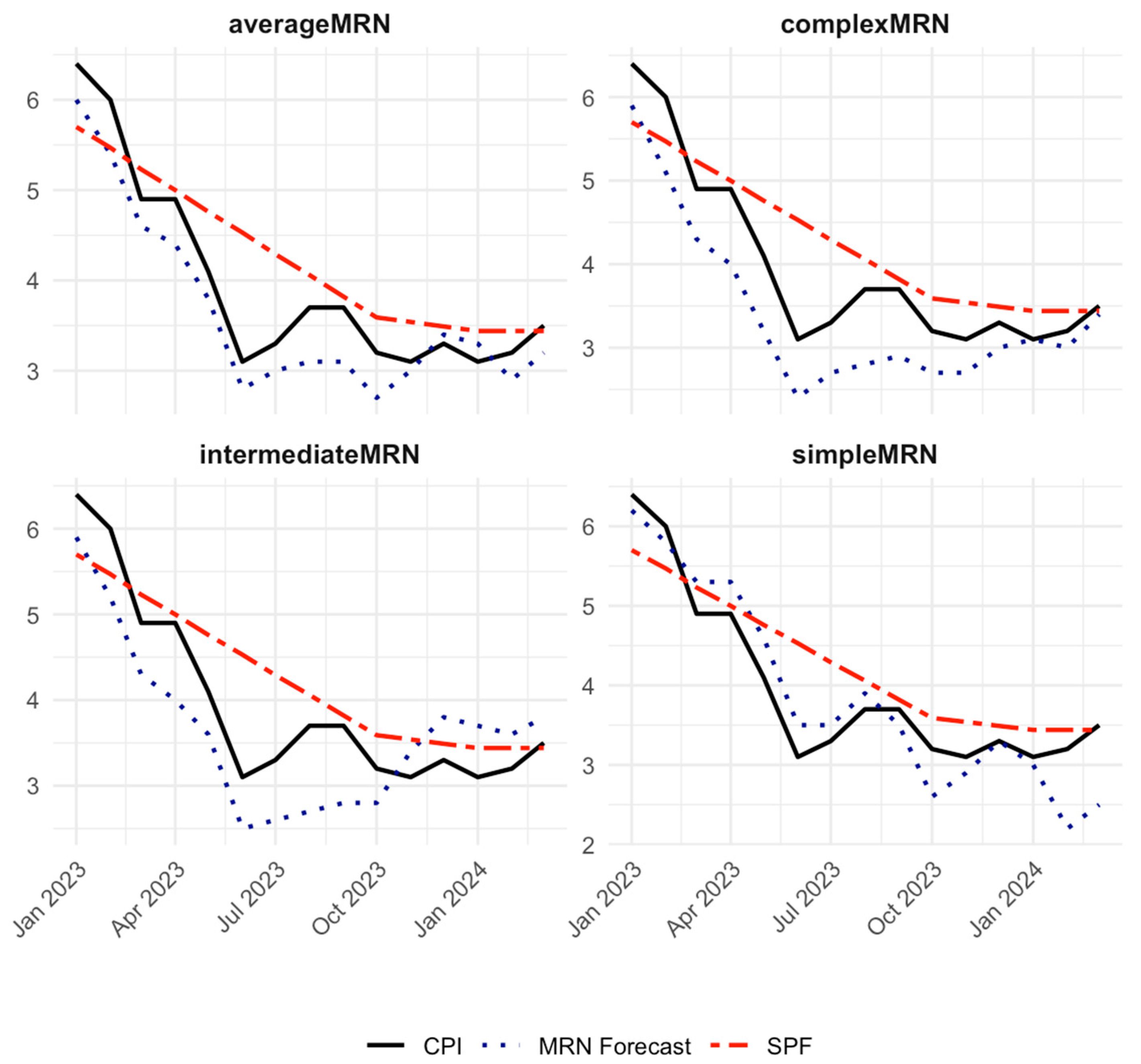

3.2. Comparison of CPI and Forecasts over Time

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Forecast Method | RMSE | sMAPE | Theil U Statistic | Improvement Over Random Walk |
|---|---|---|---|---|
| simpleMRN | 0.472 | 10.91% | 0.124 | 0.876 |
| intermediateMRN | 0.637 | 16.47% | 0.167 | 0.833 |
| complexMRN | 0.627 | 15.29% | 0.164 | 0.836 |
| averageMRN | 0.395 | 9.66% | 0.104 | 0.896 |
| SPF | 0.580 | 11.33% | 0.152 | 0.848 |
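The reported figures can be cross-checked with simple arithmetic. The sketch below copies the RMSE, Theil U, and improvement columns from the table and tests two relationships that the numbers appear to satisfy: the improvement equals one minus Theil's U, and dividing each RMSE by its Theil U gives roughly the same implied benchmark RMSE. Both relationships are inferences from the table, not definitions stated in this section, and the function names are hypothetical.

```python
# Rows copied from the table: (RMSE, Theil U, improvement over random walk).
TABLE = {
    "simpleMRN": (0.472, 0.124, 0.876),
    "intermediateMRN": (0.637, 0.167, 0.833),
    "complexMRN": (0.627, 0.164, 0.836),
    "averageMRN": (0.395, 0.104, 0.896),
    "SPF": (0.580, 0.152, 0.848),
}


def improvement_matches_theil(table, tol=5e-4):
    """True if improvement == 1 - Theil U for every row, up to rounding."""
    return all(abs((1.0 - u) - imp) <= tol for _, u, imp in table.values())


def implied_benchmark_rmse(table):
    """RMSE / Theil U per row: the benchmark RMSE if U is an RMSE ratio."""
    return {name: r / u for name, (r, u, _) in table.items()}


def rmse_reduction(table, model, reference):
    """Proportional RMSE reduction of `model` relative to `reference`."""
    return 1.0 - table[model][0] / table[reference][0]


if __name__ == "__main__":
    print(improvement_matches_theil(TABLE))
    for name, value in implied_benchmark_rmse(TABLE).items():
        print(f"{name}: implied benchmark RMSE = {value:.2f}")
    print(f"averageMRN vs SPF: {rmse_reduction(TABLE, 'averageMRN', 'SPF'):.1%}")
```

On the table's rounded values, the implied benchmark RMSE lies between about 3.80 and 3.82 for every row, and the averageMRN ensemble's RMSE is roughly 32% lower than that of the SPF.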

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Binner, J.M.; Kelly, L.J.; Tepper, J.A. Professional Forecasters vs. Shallow Neural Network Ensembles: Assessing Inflation Prediction Accuracy. J. Risk Financial Manag. 2025, 18, 173. https://doi.org/10.3390/jrfm18040173
