Thermal Performance Evaluation of a Data Center Cooling System under Fault Conditions

Abstract

1. Introduction

2. Literature Review

3. Major Issues in Data Center Planning

3.1. Root Causes and Scale of Fault Conditions

3.2. Temperature and IT Reliability

4. Case Study: Data Center and Modeling

4.1. Data Center Cooling System

4.2. IT Server Room Thermal Model

4.3. Simulation Results

5. Temperature Control Approaches

5.1. Temperature Increase over Time After a Cooling System Outage

5.2. System Response to IT Environment under Fault Conditions

6. Conclusions

- (1) Up to a chilled water supply temperature of 17 °C and a CRAH unit air supply temperature of 24 °C, the temperature of the air entering the IT servers remained within the range specified in the ASHRAE standard (18–27 °C). With a CRAH unit coil capacity of 85%, allowable operation could be maintained for approximately 320 s after a cooling system outage.

- (2) From a CRAH unit chilled water supply temperature of 18 °C and an air supply temperature of 25 °C upward, the coil capacity fell below the cooling load and the temperature rose rapidly, which is a serious cause of IT equipment failure.

- (3) Cold aisle containment and designs with relatively high chilled water and air supply temperatures are increasingly common. With such designs, a cooling system outage is highly likely to cause a rapid temperature increase inside the IT server room, so backup systems must be activated within 300 s. This value is the maximum allowable time before the cooling system resumes operation.

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Nomenclature | |
|---|---|
| C | cooling coil capacity of CRAH unit (kW) |
| Q | chilled water flow rate (m³/h) |
| V | water volume in chilled water pipes (m³) |
| T | chilled water temperature (°C) |
| ΔT | chilled water temperature differential (°C) |
| Cp | specific heat (J/(kg·°C)) |
| ρ | density (kg/m³) |
| t | operating time (s) |
| Subscripts and Superscripts | |
| CRAH | computer room air handling |
| CHS | chilled water supply (in CRAH unit) |
| CHR | chilled water return (in CRAH unit) |
| n | number of data points |

| Range | Class | Dry Bulb (°C) | Relative Humidity (%) | Dew Point (°C) | Change Rate (°C/h) |
|---|---|---|---|---|---|
| Recommended | A1 to A4 | 18 to 27 | 5.5 °C (DP) to 60 (RH) | N/A | N/A |
| Allowable | A1 | 15 to 32 | 20 to 80 | 17 | 5/20 |
| Allowable | A2 | 10 to 35 | 20 to 80 | 21 | 5/20 |
| Allowable | A3 | 5 to 40 | 8 to 85 | 24 | 5/20 |
| Allowable | A4 | 5 to 45 | 8 to 90 | 24 | 5/20 |
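
The dry-bulb limits in the table above can be expressed as a small lookup. The following is a minimal sketch, not part of the original study: the function name `classify_inlet` and the return labels are hypothetical, and humidity, dew point, and change-rate limits are deliberately ignored.

```python
# Dry-bulb limits (°C) transcribed from the table above.
RECOMMENDED = (18.0, 27.0)
ALLOWABLE = {
    "A1": (15.0, 32.0),
    "A2": (10.0, 35.0),
    "A3": (5.0, 40.0),
    "A4": (5.0, 45.0),
}


def classify_inlet(temp_c, equipment_class="A1"):
    """Classify a server inlet dry-bulb temperature (hypothetical helper).

    Only the dry-bulb temperature is checked; humidity and rate-of-change
    limits from the table are not considered in this sketch.
    """
    low, high = RECOMMENDED
    if low <= temp_c <= high:
        return "recommended"
    low, high = ALLOWABLE[equipment_class]
    if low <= temp_c <= high:
        return "allowable"
    return "out of range"
```

For example, an inlet temperature of 24 °C falls in the recommended range for every class, whereas 33 °C is out of range for class A1 but still allowable for class A2.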

| Item | CRAH-1 | CRAH-2 |
|---|---|---|
| Type | water-side economizer | with indirect air-side economizer |
| Cooling capacity (usRT) | 43 | 15 |
| Air volume (m³/h) | 31,000 | 8,500 |
| Supply air (SA) temperature (°C) | 20.0 | 20.0 |
| Return air (RA) temperature (°C) | 34.5 | 34.5 |
| Chilled water flow rate (LPM) | 391 | 144 |
| Chilled water supply (CWS) temperature (°C) | 10–14 | 10 |
| Chilled water return (CWR) temperature (°C) | 15.5–19.5 | 15.5 |
| Number of systems (EA/floor) | 12 | 31 |
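
The CRAH-1 figures in the table above can be cross-checked with a steady-state energy balance, C = ρ·Q·Cp·ΔT, applied to both the water side and the air side. The sketch below is illustrative only: the fluid properties (water at 1000 kg/m³ and 4.186 kJ/(kg·°C), air at 1.2 kg/m³ and 1.005 kJ/(kg·°C)) are assumed round values rather than figures from the paper, and the function names are hypothetical.

```python
# Assumed fluid properties (not taken from the paper).
RHO_WATER = 1000.0   # kg/m3
CP_WATER = 4.186     # kJ/(kg.°C)
RHO_AIR = 1.2        # kg/m3
CP_AIR = 1.005       # kJ/(kg.°C)
KW_PER_USRT = 3.517  # 1 usRT = 12,000 BTU/h, about 3.517 kW


def water_side_kw(flow_lpm, delta_t_c):
    """Heat absorbed by the chilled water, in kW."""
    mass_flow = flow_lpm / 1000.0 / 60.0 * RHO_WATER  # L/min -> kg/s
    return mass_flow * CP_WATER * delta_t_c


def air_side_kw(air_m3_per_h, delta_t_c):
    """Heat removed from the air stream, in kW."""
    mass_flow = air_m3_per_h / 3600.0 * RHO_AIR  # m3/h -> kg/s
    return mass_flow * CP_AIR * delta_t_c


# CRAH-1 values transcribed from the table above.
crah1_water = water_side_kw(391, 15.5 - 10.0)
crah1_air = air_side_kw(31000, 34.5 - 20.0)
crah1_rated = 43 * KW_PER_USRT
```

Under these assumptions all three CRAH-1 values come out at roughly 150 kW, which suggests the listed flow rates, temperature differentials, and rated capacity are mutually consistent.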

| Size (mm) | Horizontal length (m) | Riser length (m) | Total length (m) | Volume (m³) | Total water content (m³) |
|---|---|---|---|---|---|
| 200 | 1850 (typical floor) | - | 1850 | 58.0 | 234.3 |
| 250 | - | 82 | 82 | 4.0 | |
| 350 | - | 82 | 82 | 7.8 | |
| 400 | - | 82 | 82 | 10.2 | |
| 450 | - | 82 | 82 | 13.0 | |
| 500 | 440 (mechanical room) | 280 | 720 | 141.3 | |
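
The volumes in the table above follow from the cylinder formula V = π·(d/2)²·L. The sketch below recomputes them, assuming the nominal pipe size equals the inner diameter (an assumption made here for illustration; actual inner diameters depend on the pipe schedule). The names `PIPE_SEGMENTS` and `pipe_volume_m3` are hypothetical.

```python
import math

# (nominal size in mm, total length in m), transcribed from the table above.
PIPE_SEGMENTS = [
    (200, 1850),
    (250, 82),
    (350, 82),
    (400, 82),
    (450, 82),
    (500, 720),
]


def pipe_volume_m3(diameter_mm, length_m):
    """Internal volume of a pipe run, treating the nominal size as the inner diameter."""
    radius_m = diameter_mm / 1000.0 / 2.0
    return math.pi * radius_m ** 2 * length_m


volumes = [pipe_volume_m3(d, length) for d, length in PIPE_SEGMENTS]
total_volume = sum(volumes)

for (d, length), v in zip(PIPE_SEGMENTS, volumes):
    print(f"{d} mm x {length} m: {v:.1f} m3")
print(f"total: {total_volume:.1f} m3")
```

The recomputed values agree with the tabulated volumes to within rounding, and the total lands within about 1 m³ of the listed 234.3 m³.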

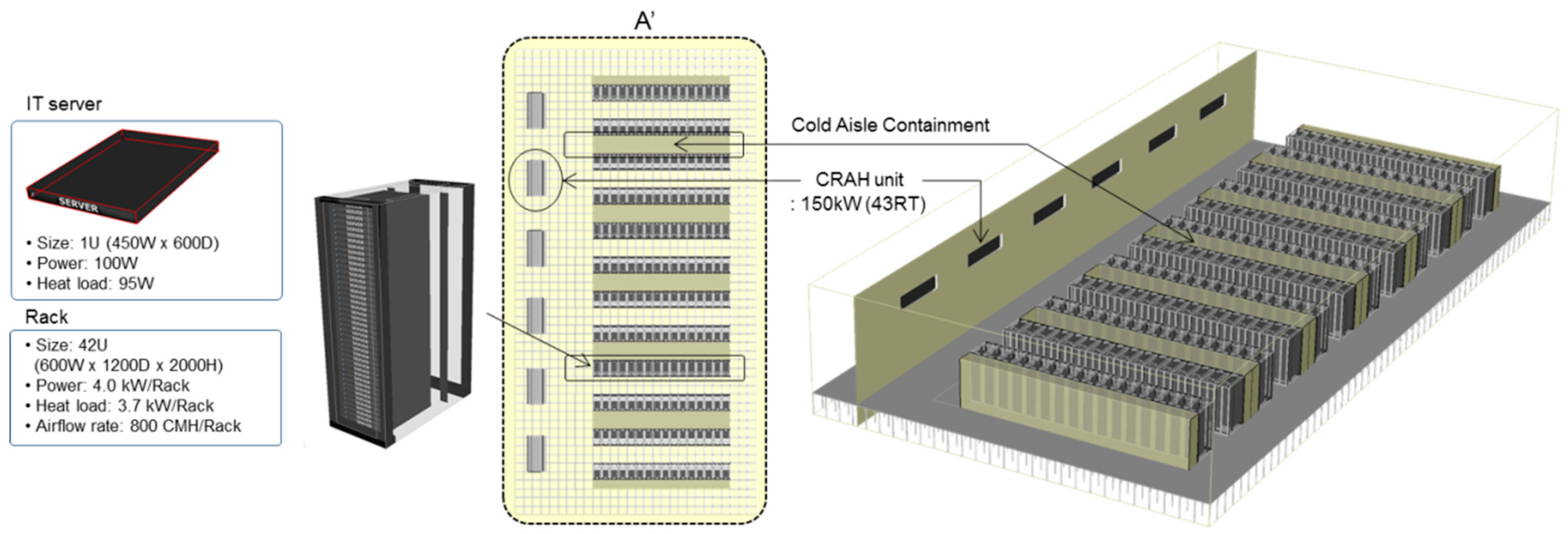

| Item | Value | Item | Value |
|---|---|---|---|
| Room size (m²) | 544.3 | Raised floor height (m) | 0.8 |
| Number of cabinets | 192 EA | Number of CRAH units | 6 EA |
| Air flow rate (m³/h/CRAH) | 31,000 | Supply air temperature (°C) | 20–25 |
| Room height (m) | 5.1 | False ceiling height (m) | 3.0 |
| Rack IT limit (kW/rack) | 4.0 | Cooling capacity (kW/CRAH) | 150 (43 usRT) |
| Cold aisle/hot aisle | Cold aisle containment | Chilled water temperature (°C) | 10–18 |

| Item | CRAH-1 | CRAH-2 | Total |
|---|---|---|---|
| Cooling capacity (kW) | 150 × (11 EA) | 50 × (17 EA) | 2500 |
| Chilled water flow rate (m³/min) | 0.391 × (11 EA) | 0.144 × (17 EA) | 6.75 |
| Chilled water content in pipes (m³) | - | 45 | |
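
To give a feel for how the chilled water held in the pipes buffers a chiller outage, the sketch below estimates how long that water can absorb the full cooling load before warming by a given amount, using t = ρ·V·Cp·ΔT / C. This is a lumped back-of-the-envelope estimate and not the simulation model used in the study: it assumes the pumps keep circulating, the water is perfectly mixed, the load stays at the total capacity from the table above, and water properties are round assumed values. It is not expected to reproduce the times reported in the conclusions. The function name is hypothetical.

```python
# Assumed water properties (not taken from the paper).
RHO_WATER = 1000.0  # kg/m3
CP_WATER = 4186.0   # J/(kg.°C)


def ride_through_seconds(water_volume_m3, cooling_load_kw, allowable_rise_c):
    """Time for the stored water to warm by allowable_rise_c under a constant load."""
    stored_joules = RHO_WATER * water_volume_m3 * CP_WATER * allowable_rise_c
    return stored_joules / (cooling_load_kw * 1000.0)


# 45 m3 of water and a 2500 kW load, transcribed from the table above.
seconds_per_degree = ride_through_seconds(45.0, 2500.0, 1.0)
print(f"{seconds_per_degree:.1f} s per °C of chilled water temperature rise")
```

Under these assumptions the stored water buys roughly 75 s for each 1 °C of permitted chilled water temperature rise, so the available time scales directly with how far the supply temperature is allowed to drift.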

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cho, J.; Park, B.; Jeong, Y. Thermal Performance Evaluation of a Data Center Cooling System under Fault Conditions. Energies 2019, 12, 2996. https://doi.org/10.3390/en12152996
