2. Optimization and Control Framework Technical Development

2.1. Generic Framework

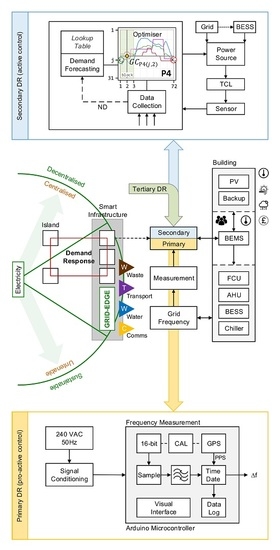

A generic decentralized optimization and control framework can be used as part of an evolving demand response service; this covers both curtailment and generation. This general arrangement supports primary and secondary DR services through frequency regulation and optimal control mechanisms, respectively, as well as tertiary DR events (Figure 1). Here, optimal performance might be described in terms of energy cost (ec), thermal comfort (tc), and predicted future energy demands (dv). A multi-objective cost function, formulated using a weight-based routing algorithm, automatically regulates the control of heating to create a meaningful energy demand reduction by shifting energy consumption out of peak demand periods. Thermostatically controlled loads (TCLs) can provide auxiliary services [53]. In this approach, the proposed scheme offers a pro-active control mechanism that changes the TCL operating setpoint in proportion to the measured grid frequency. Following this approach avoids synchronization problems that bound the coupling between frequency excursions and load dynamics that switch when prescribed frequency thresholds are exceeded [40]. An optimization algorithm that responds to the real thermal needs of the building occupants is proposed. To achieve this, individual occupants can report their thermal comfort needs using smartphone technology. The feedback reports are processed and a consensus is determined, which is in turn used to influence the room temperature.

The inclusion of building occupant feedback is crucial. Recent research has illustrated that engineers tend to assume occupants will not feel small changes in temperature [44]. This oversight can cause a performance gap between the expected and actual results from technologies intended to reduce or shift energy consumption in buildings. Including occupant feedback helps avoid this issue in the solution presented in this paper.

This work provides a reference basis for further DR applications in decentralized community-based environments. It is particularly relevant to microgrids that are isolated from the grid, as it offers potential for reducing the amount of energy storage required to balance the power fluctuation on those isolated microgrids. Current research has shown that even in the case of a single consumer, a microgrid option could be more economical than network renovation (e.g., provision of underground cabling) to increase the grid reliability [54]. Therefore, the ability to reduce the costs further by utilizing the approach described in this paper could offer real potential for the development of islanded and semi-islanded microgrids in many contexts.

2.2. General Description

The proposed decentralized, informatics, optimization, and control simulation model has been developed to optimize space heating, schedule utility of energy storage assets, and provide pro-active/active control for primary and secondary DR services. Two groups define the simulation model data that aims to replicate the trajectory of the physical systems under consideration so that system configuration parameters can be differentiated from local preferences. Ultimately, the simulation model is designed to assess our understanding of the optimizer and control components in the context of decentralized energy management. The applicability of the optimizer and control component is further demonstrated in hardware-in-the-loop simulation.

The following outline is provided as an overview of the proposed optimization and control strategy. The approach is based on the idea that when the demand for electricity on the distribution network is high, the system attempts to reduce the local rate of energy consumption by reducing the space heating temperature setpoint. Similarly, during periods of low electricity demand, the constraints that govern the temperature setpoint are relaxed, which in turn allows, but does not mandate, an increase in energy consumption by increasing the space heating temperature setpoint.

When we add a measured response from occupants that describes their collective relative thermal comfort, the rate of energy consumption shifts towards being self-regulatory. For example, if the demand for electricity increases, the system attempts to reduce the local energy consumption at a rate that is inversely proportional to the predicted demand. If the space remains unoccupied, this approach is satisfactory and local settings ensure a minimum space temperature is maintained. However, during periods of occupancy, individuals become eligible participants in the optimization algorithm. Subsequently, when individuals report they are feeling cold, and their collective measured response satisfies a set threshold, the resultant action is to issue a command that counters the instruction to reduce the space temperature further. This self-regulatory behavior works equally well during periods of low demand. Consider now introducing a third data type. Incentivizing energy reduction through financial gain aims to reduce or shift energy consumption during periods of high demand [55].

Including information about the cost of energy into the mix introduces an interesting dynamic to the optimization and control strategy. Given a time of use tariff that increases at times when demand is known to peak, the net contribution to the optimizer is to automatically adjust the energy consumption when the cost of electricity exceeds a user-defined threshold. Furthermore, the system can be configured to automatically switch to an alternative power source if demand exceeds a set limit or during periods when the cost of energy makes utilizing an alternative power source more attractive, e.g., energy storage assets.

The immediate outcome attributed to the interaction between the three data types becomes even more attractive if their behaviors can be predicted over a finite time horizon. The opportunity to participate in tertiary DR services by readying the system in response to a network operator DR instruction becomes feasible. The proposed control algorithm alters the demand profile trajectory such that it adds bias to the tri-data mix in a way that promotes a rise in space temperature. The net effect is to provide optimal space pre-heating in advance of the scheduled DR event. Furthermore, a switching mechanism denies use of a local energy storage asset for a period leading up to the DR event. Instead, resources ensure the energy storage asset is set to recharge. Thus, when the DR event period commences, the system power source automatically switches to the energy storage asset. Previous interventions ensure the energy storage asset is sufficiently charged to enable it to remain the primary source for the duration of the event or until the asset can no longer meet the power demand for continued operation. In this instance, the grid becomes the system’s primary power source, and recharging of the energy storage asset is initiated.

The remainder of the section describes the development of individual systems that contribute to the optimization and control framework. Real-time computer simulations that aim to model the behavior of physical systems and the mathematical model of the proposed optimization and control algorithms are performed using the MATLAB/Simulink® environment. Level-2 MATLAB System functions have been used extensively during the design and implementation, providing access to create custom blocks that support multiple input and output ports. Furthermore, this section describes how desktop simulations are reconfigured to validate the optimization and control algorithm using hardware-in-the-loop (HIL) simulation techniques.

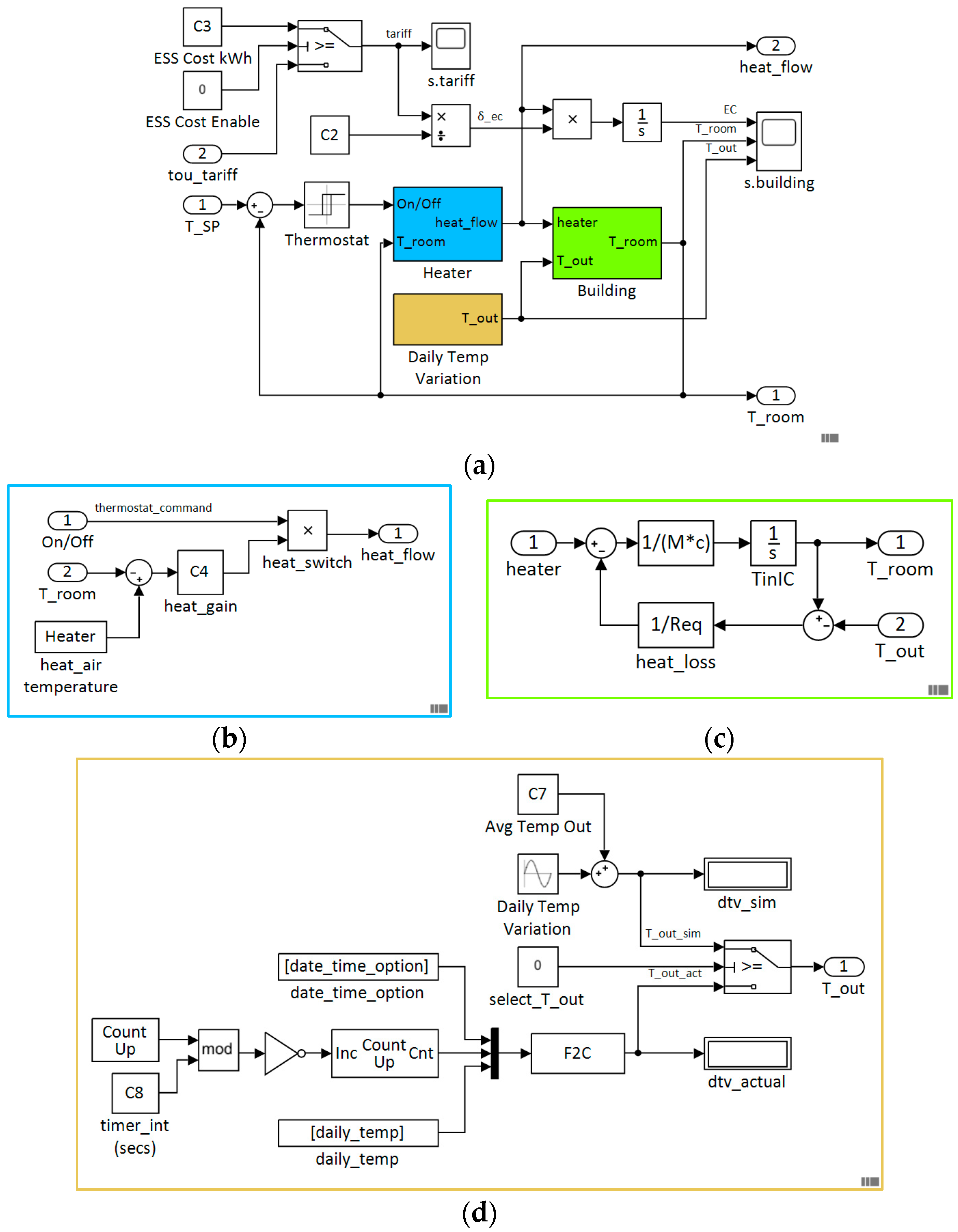

The desktop simulation model is shown in Figure 2. In addition to the optimization and control block, the model is composed of a catalogue of supporting subsystems: energy, building, scheduler, date-time (dt), and demand event signal (des).

2.3. Technical Development

The simulation optimizer is constructed in a piecemeal fashion, progressing sequentially by solving problems centered on three data types: (1) thermal comfort, (2) electricity demand forecast, and (3) cost (tariff). In brief, during periods when the system is not responding to a tertiary DR activity, the active control process begins by calculating a predicted or actual value for each data type over a 4-h horizon window at 10 min intervals. Values are mapped onto a multi-dimensional array with a fixed number of rows (magnitude) and columns (time). Dijkstra’s algorithm is then used to project the predicted values over the 4-h horizon window [56,57]. The contribution of each data type is then combined before k-means clustering (see References [58,59]) is applied iteratively at each 10 min interval. The result yields a new path that follows the optimal temperature setpoint trajectory over the 4-h horizon window. For demand response applications, a model for building design can be successfully implemented using a simplified first-order plus dead time model [60]. Time constants of 10 to 30 min and dead times of 0 to 5 min are typical [61]. Complex calculations are avoided by taking a pragmatic approach when determining model control actions. For example, the proposed optimizer has been configured to update the control action at a sample time of 10 min.

Since the control objective is to minimize the deviations from a temperature setpoint, according to the system and user-defined rules, at discrete points in time, the optimal cost (shortest path) can be obtained by formulating a Dynamic Programming algorithm that proceeds backwards in time. The algorithm takes a sequence of k-means centroid points, where each centroid represents a value that minimizes the total intra-cluster variance of all objects in each cluster. In simple terms, a time horizon of 240 min equates to 24 stages, each separated by a 10-min interval. At each stage, there are 11 objects. A k-means algorithm is applied to find the centroid of the 11 objects at each stage. These calculations result in a series of 24 centroids that contribute to formulating the shortest path.
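The stage arithmetic can be illustrated with a short Python sketch (the paper's own implementation is in MATLAB/Simulink; Python is used here purely for illustration). It assumes a single cluster per stage, in which case the centroid that minimizes the intra-cluster variance is simply the arithmetic mean of the 11 objects. The function name `stage_centroids` and the random object values are hypothetical.

```python
import random

HORIZON_MIN = 240        # forecast horizon quoted in the text
STEP_MIN = 10            # interval between stages
OBJECTS_PER_STAGE = 11   # objects per stage quoted in the text


def stage_centroids(objects_by_stage):
    """Return one centroid per stage.

    Assumption: one cluster per stage, so the variance-minimizing
    centroid is the arithmetic mean of that stage's objects.
    """
    return [sum(objs) / len(objs) for objs in objects_by_stage]


n_stages = HORIZON_MIN // STEP_MIN  # 240 / 10 = 24 stages

# Hypothetical object values, for illustration only.
rng = random.Random(0)
objects = [
    [rng.uniform(0.0, 1.0) for _ in range(OBJECTS_PER_STAGE)]
    for _ in range(n_stages)
]
centroids = stage_centroids(objects)
print(len(centroids))
```

The resulting list holds one value per stage, which is the sequence a shortest-path step would then consume.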

The objects that belong to each cluster are derived from a series of functions that calculate occupants’ relative thermal comfort cost (), rate of energy consumption (demand forecast value) cost (), and energy cost (). Given this, a deterministic problem can be formulated in a finite space , which can be equivalently represented by a gridmap of fixed dimension; the problem starts from a source node , where , proceeds to , and progresses to the final node . An important characteristic of this activity is highlighted. In solving the shortest path problem, the source node and target node are revealed to the optimizer just before the first transition from begins. The trajectory of the shortest path from will follow a series of weighted edges that interconnect successive pairs of nodes, i.e., .

In the framework of the fundamental problem, minimizing the cost in a finite state space

can be translated into mathematical terms:

where the cost of transition at

is the centroid in a cluster of objects at stage

from node

to node

.

For the problem to have a solution, each object centroid is constructed with the k-means++ algorithm. Here, after initially assigning a random object within a cluster as the first centroid, we compute the distance from each remaining object to it. Based on the square of these distances, a new centroid is defined. The process repeats until k centroids are chosen. We formulate the objects in the following sections.
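The seeding step described above can be sketched in Python as follows. This is a generic one-dimensional k-means++ initialization under stated assumptions, not the authors' MATLAB code: the function name `kmeans_pp_seeds` and the sample points are hypothetical, and each new centroid is drawn with probability proportional to the squared distance to its nearest already-chosen centroid.

```python
import random


def kmeans_pp_seeds(points, k, rng=None):
    """Choose k initial centroids from 1-D points using k-means++ seeding."""
    rng = rng or random.Random()
    # Step 1: the first centroid is a uniformly random object.
    centroids = [rng.choice(points)]
    while len(centroids) < k:
        # Step 2: squared distance from each object to its nearest centroid.
        d2 = [min((p - c) ** 2 for c in centroids) for p in points]
        if sum(d2) == 0.0:
            # All objects coincide with a centroid; fall back to a uniform pick.
            centroids.append(rng.choice(points))
            continue
        # Step 3: sample the next centroid with probability proportional to d2.
        centroids.append(rng.choices(points, weights=d2, k=1)[0])
    return centroids


# Hypothetical objects, for illustration only.
points = [0.0, 0.1, 5.0, 5.1, 10.0]
seeds = kmeans_pp_seeds(points, 3, random.Random(1))
print(seeds)
```

Objects that are already centroids have zero weight, so they are not selected again while other objects remain at a non-zero distance.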

In addition, when the network operator issues an explicit DR instruction, the optimizer initiates a pre-programmed control strategy that changes the trajectory of subsequent control actions in a period leading up to and during the event window. However, it remains useful if the control actions continue to respond to facility or occupant needs during this mode of operation.

2.4. Optimize and Control Subsystem

The optimize and control subsystem (optimize_control) is a user-defined block written using the MATLAB S-Function application programming interface (API). The proposed optimization algorithm calculates the optimal space heating temperature according to the rate at which electricity is consumed (demand) and cost (tariff). Furthermore, the final temperature value is impacted by the occupants’ thermal responses to the combined thermal effect of the environment and physiological variables that influence the relative thermal comfort.

Figure 2 shows that the Simulink® optimize and control block includes three input signals: (1) room temperature (temp_room), (2) current date and time (S0_date), and (3) a demand event signal that indicates the status of a tertiary DR service (des_mode). The block output signals provide: (1) a control signal (ctrl_action) that will alter the space heating temperature setpoint, (2) the current cost of energy usage (tou_tariff), and (3) an indication of the tertiary DR event duration (des_duration). The internal architecture of the optimize and control subsystem is shown in Figure 3.

The design presented in this article is configured to operate within a custom-built temperature range between and . Exception handling ensures temperature values measured outside this range are mapped to either or . Default system configuration parameters set the forecast horizon window to 4 h, a demand response temperature step () that instructs the control action to increase the space temperature by over the duration of the forecast horizon window, and the duration of a demand event to 40 min. Additional system parameters specific to thermal comfort, electricity demand forecasting and cost (tariff) are described in the corresponding subsections that follow.

2.4.1. Thermal Comfort

The energy demand of buildings is influenced by the presence and behavioral patterns of occupants [62]. The thermal comfort element impacts the temperature setpoint by analyzing the measured room temperature (

) and occupants’ feedback collated at a sample time of 10 min. Weekdays are divided into 7 time intervals

, configured to mirror a typical teaching timetable, whereas a weekend day consists of only 1 time interval. Changing the weekend day interval pattern to replicate a weekday is straightforward. By considering occupant presence is inhomogeneous, for each

we choose an algorithm for the simulation of occupants to be used as an input for current occupant level,

. In practice, not all individuals will report their relative thermal comfort; therefore, the model automatically creates several feedback reports

, where

. An individual’s response is measured using a unipolar Likert scale [63,64]. The question has a five-point response scale: too warm, warm, okay, cold, too cold; this is scored using the scale {−2, −1, 0, 1, 2}. In order to imitate perceived behavior patterns, for each time interval, the following model parameters are defined:

,

and response threshold

. The thermal model weekday parameters are reported in Table 1.
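As a rough illustration of how scored feedback reports might be reduced to a consensus, the following Python sketch maps the five responses onto the {−2, −1, 0, 1, 2} scale and compares the mean score with a response threshold. It is not the paper's thermal comfort expression: the averaging rule, the function name `consensus`, and the threshold value used in the example are all assumptions made for illustration.

```python
# Scores follow the five-point scale given in the text.
LIKERT = {"too warm": -2, "warm": -1, "okay": 0, "cold": 1, "too cold": 2}


def consensus(reports, threshold):
    """Return +1 (raise temperature), -1 (lower it) or 0 (no change).

    Assumption: the consensus is the mean score, and it only triggers an
    action when its magnitude reaches the response threshold.
    """
    if not reports:
        return 0  # no feedback reports, so no occupant-driven action
    mean_score = sum(LIKERT[r] for r in reports) / len(reports)
    if mean_score >= threshold:
        return 1
    if mean_score <= -threshold:
        return -1
    return 0


# Hypothetical reports and threshold.
print(consensus(["cold", "too cold", "okay"], threshold=1.0))
```

A threshold of this kind keeps a single outlying report from overriding the demand-driven setpoint on its own.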

For any given weekday time, the thermal comfort model output is calculated by the following expression:

with respect to:

s.t. constraints:

For weekend days, we assume ; hence, the model returns a value . The variation in represents a bias that is configured to reflect a change in outside temperature over a 24-h period.

It is noted that the seven-time intervals

are bounded by a start and stop clock time

such that

,

; and terminating at

. In practice, if a date and time are specified (e.g., S0_date = Fri, 05-February-2020 07:23:14), then the task to determine if the date-time element occurs on a weekday or weekend day is straightforward. Given a date-time S0_date, it is possible to formulate an algorithm that returns a

array

, where

represents a thermal comfort value over a 4-h period at 10

min. It should be noted that because the optimizer is designed to take into consideration occupants’ feedback in real-time at a sample time of 10 min

. However, if during the 4-h horizon window the system identifies a time interval where

, i.e., there are no planned occupants, the model starts a pre-programmed sequence that sets the thermal comfort on a downward trajectory reducing at a rate of

per 10 min interval until a minimum temperature threshold value

is reached. By defining the nodemap, we have completed the data preparation of thermal comfort shown in Figure 3. It must be remembered that the thermal comfort model is prepared for operation within the simulated environment only. In practice, the implementation proposes that occupants report their thermal comfort to the system using a smartphone app. This concept is elaborated further in Section 3.

2.4.2. Electricity Demand Forecasting

A data-driven methodology for modeling electricity demand forecasting is proposed [65]. This novel semi-autonomous simplified lumped model has the potential to offer decentralized electricity network operators knowledge of the more extensive aggregated rate of future energy consumption, thus enabling decentralized energy management systems to proactively reduce load demand on small island electricity grids or distributed grid-edge systems as part of an evolving DR service. In this paper, we integrate the electricity demand forecasting model as part of the optimize and control framework. Initially, analysis of a chronological sequence of 245,424 discrete observations reveals that the one-dimensional time series is characterized by three seasonal patterns: weekday, weekend day, and month. These findings motivate an effort to reduce the dimensionality using piecewise aggregate approximation (PAA). Subsequently, calculating a cubic polynomial that interpolates points of interest yields a

multi-dimensional array, which in turn helps restore the shape of the original demand forecast profile. The polynomial coefficient structure for weekday and weekend day are listed in the array page 1 and 2, respectively. Given both weekday and weekend day demand profiles recur every 24 h, it turns out using Equation (6) a normalized demand forecast value

can be tagged to a specific time in any 24-h period.

where

and

correspond to the minimum and maximum data points of each PAA 2h segment, respectively, and the cubic polynomial coefficient parameters are

,

,

, and

. Moreover, we will show how the demand forecasting model can be used to compute a credible demand forecast value for any given date and time.

There are 12 equidistant segments, which equates to 13 periods () bounded by minimum and maximum points and , i.e., where the number of periods . In the first period, , after that ; and terminating at . If we adopt the convention that makes 13-time intervals bounded by a start and stop clock time then , after that ; and terminating at . Thus, it can be seen, given a date-time S0_date it is possible to formulate an algorithm that returns a array where represents a normalized demand forecast value over a 4-h period at min starting from the specified date-time. This approach works equally well for both weekdays and weekend days.
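The PAA step can be sketched in Python as follows. The sketch assumes a 24-h profile sampled every 10 min (144 samples) reduced to 12 segments of 2 h each; the function names `paa` and `segment_extrema` and the synthetic profile are hypothetical, and the cubic interpolation stage is not reproduced here.

```python
def paa(series, n_segments):
    """Piecewise aggregate approximation: the mean of each equal-length segment."""
    if len(series) % n_segments != 0:
        raise ValueError("series length must be a multiple of n_segments")
    width = len(series) // n_segments
    return [
        sum(series[i:i + width]) / width
        for i in range(0, len(series), width)
    ]


def segment_extrema(series, n_segments):
    """Return the (minimum, maximum) data point of each segment."""
    if len(series) % n_segments != 0:
        raise ValueError("series length must be a multiple of n_segments")
    width = len(series) // n_segments
    return [
        (min(series[i:i + width]), max(series[i:i + width]))
        for i in range(0, len(series), width)
    ]


# Hypothetical 24-h profile at 10 min resolution: 144 samples.
profile = [float(i) for i in range(144)]
means = paa(profile, 12)               # 12 segments of 2 h each
bounds = segment_extrema(profile, 12)
print(means[0], bounds[0])
```

Each 2-h segment is summarized by 12 consecutive samples, so the 144-point daily profile collapses to 12 values plus their per-segment bounds.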

The normalized demand forecast value

is defined as:

where

,

,

,

, noting that a nodemap

is a

two-dimensional array.

2.4.3. Cost (Tariff) Model

A key consideration in any predefined energy reduction strategy is to empower customers to use energy in the lowest-price period available, while at the same time offering participation in DR initiatives. The cost (tariff) model is configured to integrate a typical static time-of-use (TOU) tariff [66]. As shown in Figure 4, these tariffs charge lower rates when demand is low and higher rates for electricity consumed at peak times.

Given a date-time S0_date, the cost (tariff) model returns a array where represents a normalized cost (tariff) value over a 4-h period at min starting from the specific date-time.
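A minimal Python sketch of such a lookup is given below. It returns the raw tariff, before any normalization, at 10 min steps over a 4-h window, assuming the window includes both end points (25 samples). The peak and mid-peak prices and the 16:00 to 19:00 peak band are the values quoted in the computational study; the off-peak price and the remaining band boundaries are hypothetical placeholders, as are the function names.

```python
from datetime import datetime, timedelta

# Prices in p/kWh. PEAK and MID are quoted in the computational study;
# OFF and every band boundary other than 16:00-19:00 are hypothetical.
PEAK, MID, OFF = 24.99, 11.99, 5.00


def tou_price(t):
    """Return the static time-of-use price in force at datetime t."""
    if 16 <= t.hour < 19:
        return PEAK
    if 7 <= t.hour < 16 or 19 <= t.hour < 23:
        return MID
    return OFF


def tariff_horizon(start, hours=4, step_min=10):
    """Return tariff values from `start` over the horizon, end points included."""
    n = hours * 60 // step_min + 1
    return [tou_price(start + timedelta(minutes=step_min * i)) for i in range(n)]


horizon = tariff_horizon(datetime(2020, 2, 5, 16, 0))
print(len(horizon), horizon[0], horizon[18])
```

Starting at 16:00, the first 18 samples fall in the peak band and the sample at 19:00 drops to the mid-peak price.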

The normalized cost (tariff) value is defined:

where

is the cost (tariff) at

min,

,

,

, and

. The scaling factors are set by design to position

values in the subsequent optimize stage such that a change in price to either off-peak or peak has maximum influence during the optimization outcome. Furthermore, it will be shown

impacts the operation of system assets managed by the scheduler subsystem.

2.4.4. Optimization

The optimization cycle (Figure 3) starts on receipt of the input signal S0_date. Subsequent cycles commence at a block sample time of 10 min (600 s). Previously, data preparation for occupants’ thermal comfort, electricity demand forecast, and cost (tariff) each returned a

array

where

represents a normalized data type (

and

) value over a 4-h period at

min intervals starting from a specific date-time (S0_date). Before each data type array can be processed, it must be homogenized in a way that makes it accessible to the optimizer. The data is transformed into a

two-dimensional nodemap

such that

. Accordingly

represents a temperature

and

defines 25 stages

) each separated by a 10 min time interval for the duration of the 4-h forecast horizon window, e.g.,

and

is linked to the 10 min time interval

. The

nodemap

is then transformed to a

gridmap

by the following function:

where:

s.t. constraints:

when constraint (13) is not satisfied

.

The temperature from , where s.t. ; however, if , then ; furthermore, if , then . Based on this information, this equates to 31 permissible temperature changes between and . If we continue to record the change in temperature from using blocks of three columns for each cycle, then it is clear a gridmap of size is created. We refer to the three columns in each block as the source node , target node , and edge-weight , respectively.

Dijkstra’s algorithm computes the shortest path between a specified temperature point given at

and

. This deterministic problem follows the principle of optimality, which states that if the path taken from one legitimate node to the next minimizes the cost-to-go from

to

, then the transition between the collective nodes must be optimal [67]. For Dijkstra’s algorithm to solve the shortest-path problem, the

gridmap is first subjected to a series of simple transformations. The first instruction reshapes the gridmap into a

matrix referred to as the edgelist. Here, following the same convention to identify columns in the gridmap, the edgelist provides a listed description of all source nodes

, legitimate target nodes

and their respective connecting edge-weights

, i.e., its associated cost. A second instruction creates a digraph object that generates an

Edges variable (

table) based on the number of source and target nodes extracted from the

edgelist, and a

Nodes variable (

table). The 275 value represents the total number of nodes (

) in the fixed

nodemap. Finally, an equivalent sparse adjacency matrix representation of the digraph, which includes the edge-weights, is created. Since the graph object we have constructed is a directed graph, the sparse adjacency matrix is not symmetric. However, we can overcome this by converting the sparse adjacency matrix to a full storage matrix. In this instance, the conversion generates a

full storage matrix.

The data type shape is now in a format required by the Dijkstra’s algorithm. Executing the Dijkstra’s algorithm will compute the optimal cost which is equivalent to the summation of all edge weights on the shortest path from to between time and .
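The edge-list and shortest-path steps can be sketched in Python as follows. This is an illustrative reading rather than the authors' MATLAB digraph code: it assumes a nodemap of 11 rows by 25 stages (275 nodes), that a transition may change the row by at most one per stage (which gives 31 permissible transitions per stage), and that the edge weight is the cost stored at the target node. The function names and the example cost surface are hypothetical.

```python
import heapq

ROWS, STAGES = 11, 25  # 11 x 25 = 275 nodes, matching the node count in the text


def build_edges(cost):
    """List (source, target, weight) edges between successive stages.

    Assumptions: the row may change by at most one per stage, and the
    weight of an edge is the (non-negative) cost at its target node.
    """
    edges = []
    for s in range(STAGES - 1):
        for r in range(ROWS):
            for d in (-1, 0, 1):
                t = r + d
                if 0 <= t < ROWS:
                    edges.append(((r, s), (t, s + 1), cost[t][s + 1]))
    return edges


def dijkstra(edges, source, target):
    """Return (total cost, node path) of the shortest path from source to target."""
    adj = {}
    for u, v, w in edges:
        adj.setdefault(u, []).append((v, w))
    dist, prev, heap = {source: 0.0}, {}, [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            break
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry
        for v, w in adj.get(u, []):
            if d + w < dist.get(v, float("inf")):
                dist[v], prev[v] = d + w, u
                heapq.heappush(heap, (d + w, v))
    if target not in dist:
        raise ValueError("target is unreachable from source")
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]


# Hypothetical cost surface: cheapest along row 3.
cost = [[float(abs(r - 3)) for _ in range(STAGES)] for r in range(ROWS)]
edges = build_edges(cost)
total, path = dijkstra(edges, (5, 0), (3, STAGES - 1))
print(len(edges), total, len(path))
```

With this cost surface the path steps down from row 5 to row 3 and then stays there, so the optimal cost equals the single unit incurred while passing through row 4.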

This process is repeated for each data type. At the end of each transformation the results are assigned to a specific page of a multi-dimensional array where page 1 (P1) is reserved for data type comfort, page 2 (P2) demand, and page 3 (P3) cost (tariff). The fourth page (P4) is reserved for the final stage in the optimization process, which combines the contributions assigned to P1 to P3. Here, every third column in the

P4 gridmap is allocated a grid centroid value

, where

and

, and assigned to row index

that is equivalent to the

k-means cluster centroid index that partitions the observations in the corresponding column

on P1 to P3. Note, for each data type

; see Equation (1). The remaining values in each column are incremented by one until the row index

has reached its boundary limit, i.e.,

or

. When the Dijkstra’s algorithm subsequently computes the shortest path between the source node

and target node

where

, the results yield the optimal path that transits from

. The control action

. Simply stated, the control action is a fixed temperature value that is linked to the

nodemap

at row index

, where

, where

. The relationship between the gridmap and nodemap is highlighted in Figure 3. The pseudocode describing the operating principle of the optimize and control algorithm is listed in Appendix A.

2.5. Demand Event Signal Subsystem

The demand event signal subsystem (des_subsystem) simulates actions in response to an instruction instigated by the network operator. These signals are sent to individual customers enrolled in a campaign designed to deliver aggregated tertiary DR. The Simulink® model itself is trivial (Figure 5); however, the subsequent sequence of events requires further explanation. Firstly, the objective shifts to making the system ready for a DR event; this includes setting the control action to increase the room temperature in a measured way by a pre-set value within the 4-h horizon window. Secondly, the battery energy storage system (BESS) must be available with enough charge at the start of the DR event.

The period of pre-heating is regulated by altering the demand forecast profile. By default, . Therefore, the normalized demand forecast value is recast to , where , , where s.t. . This new trajectory increases the last recorded room temperature by at a rate of every 50 min. At the beginning of each subsequent optimization cycle, the trajectory leading up to the DR event is maintained, i.e., it advances closer to the plus temperature at each iteration and towards the DR projected start time. However, before reverts to , the trajectory is modified further, this time by reducing the temperature setpoint less than the temperature recorded immediately before the start of the tertiary DR event. The system reinstates immediately after the DR event terminates.

The des_mode signal triggers the scheduler subsystem to start charging the BESS. The energy storage asset continues to charge until the start of the DR event. From that point, the battery discharges while it provides primary power to the heating system. The heating system continues to be supplied from the battery until a minimum state of charge (SOC) threshold has been reached. The scheduler then switches primary power to the grid and sets the battery to charge.

2.6. Energy Subsystem

Decentralized DR frequency regulation, when used in building stock, can regulate short-term frequency excursions in demanded electrical energy [68]. The contribution of a decentralized frequency regulator has been analyzed [68]. The results suggest that small excursions in measured temperature from a TCL setpoint value will not compromise indoor comfort temperatures but can contribute to the restoration of frequency equilibrium during network stress events. In this paper, we integrate the implied linear power system and frequency regulator as part of the optimize and control framework. The model (energy_subsystem) shown in Figure 6 replicates a power system rated at 300 MVA. Initial conditions assume that supply and demand are balanced, the measured frequency is 50 Hz, and the steady-state frequency error is zero. The energy subsystem model parameters are reported in Table 2.
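The pro-active mechanism outlined in Section 2.1, in which the TCL operating setpoint changes in proportion to the measured grid frequency, can be sketched in Python as a simple proportional rule around the nominal 50 Hz. The gain, the clamp on the offset, and the function name `tcl_setpoint` are hypothetical values chosen for illustration; they are not the parameters of the energy subsystem model.

```python
F_NOMINAL_HZ = 50.0  # nominal frequency stated in the text


def tcl_setpoint(base_setpoint_c, measured_hz, gain_c_per_hz=2.0, max_offset_c=1.0):
    """Shift the heating setpoint in proportion to the frequency deviation.

    Assumptions: a linear gain (degC per Hz) and a symmetric clamp so that
    excursions from the base setpoint stay small. Both values are hypothetical.
    """
    offset = gain_c_per_hz * (measured_hz - F_NOMINAL_HZ)
    offset = max(-max_offset_c, min(max_offset_c, offset))
    return base_setpoint_c + offset


# Under-frequency (demand exceeds supply) lowers the setpoint and sheds load.
print(tcl_setpoint(20.0, 49.8))
```

Because the adjustment is continuous rather than threshold-triggered, loads do not all switch at the same frequency value.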

2.7. Building Subsystem

The building subsystem model (building_subsystem) (Figure 7) is a simplified thermostatically controlled (on/off) space heating system with feedback loops that maintains the air temperature at a set level. The model emulates building thermodynamics (building), calculating variations in temperature based on heat flow and heat losses.

A series of embedded lookup tables representative of seasonal variation is used to model outside air temperature over a 24-h period at a sample rate of 30 min [69]. In practice, the local outdoor temperature is measured using sensors and input into the system. Energy cost [p/kWh] is calculated as a function of time and heat flow and is expressed in the following equation:

where

denotes air mass flow rate through the heater;

specific heat at constant air pressure, and

[p/kWh] is the energy price at time

. The building subsystem model parameters are reported in Table 3.

2.8. Scheduler Subsystem

The scheduler subsystem’s primary job is to monitor several signals and direct the operation of an automatic transfer switch between the grid and an alternative backup source of power. To ensure the appropriate power source is selected, the scheduler requires knowledge of the current cost (tariff) of electrical energy, whether a tertiary DR event is in progress (including the event duration), and the BESS state of charge. The Simulink® model of the scheduler subsystem is shown in Figure 8 and includes three input signals and six output signals. The output signals provide a visual indication of the status of various signals. A simplified BESS element (ess_subsystem) simulates the battery SOC using a first-order transfer function. Locally defined parameters SOC_hi and SOC_lo set maximum and minimum state of charge values (expressed as a percentage), which determine when the BESS is declared available for use. Initial values are defined in Section 3. The model also includes a self-discharge rate (SDR), which reduces the stored charge of the battery naturally over time.

The BESS availability function is represented by Equation (17), where

is a low-level SOC threshold (locally defined parameter).

Control rules that determine when the primary power source is set to grid or BESS are illustrated in Figure A1 (Appendix B). The decision variable t_mode is the cost (tariff) threshold and automatically switches the power source to BESS when the cost (tariff) is high s.t. Equation (17). Furthermore, when signal des_mode = 1 (0 = normal, 1 = tertiary DR event), t_mode = 0, thus preventing a control action that switches the power source to BESS during the period leading up to the start of the DR event (nominally 4 h). Signal CDir (change direction) reports whether the battery is charging or discharging (0 = discharge, 1 = charge); PWR denotes the primary power source (0 = grid, 1 = BESS); SOC_EC denotes the cost (tariff) in use (0 = TOU, 1 = BESS).
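One possible reading of these rules is sketched below in Python. It is not a transcription of Figure A1 or Equation (17): the availability test (SOC above a low threshold), the separate `event_active` flag that distinguishes the DR event itself from its lead-up period, and the function name are assumptions introduced for illustration. The return value follows the PWR convention (0 = grid, 1 = BESS).

```python
def select_power_source(tariff, tariff_threshold, des_mode, event_active, soc, soc_lo):
    """Return the primary power source: 0 = grid, 1 = BESS.

    Assumptions: the BESS is available while soc > soc_lo, and
    `event_active` (hypothetical) marks the DR event window itself.
    """
    bess_available = soc > soc_lo
    if event_active:
        # During the DR event the BESS supplies power until it is depleted.
        return 1 if bess_available else 0
    if des_mode == 1:
        # Lead-up to the DR event: tariff switching is disabled so the
        # BESS stays on charge.
        return 0
    # Normal operation: switch to the BESS only when the tariff is high.
    return 1 if (tariff > tariff_threshold and bess_available) else 0


# Hypothetical values: peak tariff, no DR instruction, battery well charged.
print(select_power_source(24.99, 20.0, des_mode=0, event_active=False, soc=80.0, soc_lo=20.0))
```

Keeping the lead-up branch ahead of the tariff test is what stops a peak-price period from draining the battery shortly before a scheduled event.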

2.9. Date-Time Subsystem

For completeness, the Simulink® model of the date-time subsystem (dt_subsystem) is shown (Figure 9). Its primary function is to provide a date-time element at a sample time of 10 min. The model has been configured to run in real-time during experimental evaluation. By default, dt is set to the current date and time, using the format dd-mmm-yyyy hh:mm:ss, with the option to set it to any date and time during model analysis. The date-time model parameters are reported in Table 4.
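For illustration, the following Python sketch generates date-time elements at a 10 min sample time in the dd-mmm-yyyy hh:mm:ss format. The function name `horizon_timestamps`, the choice of a 4-h span with both end points included, and the start time are assumptions; the month abbreviation produced by `%b` depends on the active locale (English names under the default C locale).

```python
from datetime import datetime, timedelta

DT_FORMAT = "%d-%b-%Y %H:%M:%S"  # dd-mmm-yyyy hh:mm:ss


def horizon_timestamps(start, hours=4, step_min=10):
    """Return formatted date-time strings from `start`, one per sample."""
    n = hours * 60 // step_min + 1  # both end points included
    return [
        (start + timedelta(minutes=step_min * i)).strftime(DT_FORMAT)
        for i in range(n)
    ]


# Hypothetical start time; pass datetime.now() to mirror the default behavior.
stamps = horizon_timestamps(datetime(2020, 2, 5, 7, 20, 0))
print(stamps[0], stamps[-1])
```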

3. Computational Study

In this section, we report the findings from a computational study (desktop simulation). By design, the computational study validates the functionality of critical services. In contrast, the experimental evaluation (Section 4) is directed at proving the interaction of the proposed data types within the optimization subsystem. A simulated tertiary DR event is considered in both scenarios.

The interaction between decision variables and control actions of individual subsystems is complex. Accordingly, the computational study validates the functionality of the following vital services:

To begin, we evaluate the data input models. Individual charts created using nodemap data and the corresponding gridmap data validate the optimization and control behavior. In the second study, the results obtained from a simulated tertiary DR event are discussed. Finally, we monitor the system behavior during an imbalance between supply and demand. Here, the pro-active frequency control reacts to a simulated load disturbance that causes a frequency excursion from the nominal 50 Hz steady-state. The model is initialized using the values reported in

Table 5.

Occupant thermal comfort feedback is shown in

Figure 10a. At 16:40, the model reports the aggregated occupant thermal comfort is “too warm”. This consensus triggers the optimization algorithm to set the comfort level gridmap trajectory on a path that reduces the measured room temperature by

, i.e.,

, where

. In addition, according to local settings, the timetable sets the number of occupants in a space to zero at 19:00. A ‘no occupancy’ status has clearly defined adaptive triggers. Firstly, the comfort signal values (occupants, response, and comfort) are held at a constant zero, while the number of occupants present in a space is zero. Secondly, at 19:00, the optimizer begins to alter the comfort level gridmap trajectory by reducing the temperature to a minimum temperature threshold

(local setting) at a rate of

every 10 min. This behavior is confirmed in the optimizer gridmap visualization and subsequent optimizer nodemap shown in

Figure 11.
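The 'no occupancy' setback described above amounts to a stepwise ramp that stops at the minimum temperature threshold. The Python sketch below illustrates the idea under stated assumptions: the function name and all numerical values (starting temperature, threshold, and step per 10 min sample) are hypothetical placeholders, not the local settings used in the study.

```python
def setback_trajectory(t_start, t_min, step, n_samples):
    """Temperature trajectory (one value per 10 min sample) that falls by
    `step` each sample and never drops below the threshold `t_min`."""
    trajectory = []
    temperature = t_start
    for _ in range(n_samples):
        trajectory.append(temperature)
        temperature = max(t_min, temperature - step)
    return trajectory


# Hypothetical values: start at 21.0, floor at 19.5, 0.5 per 10 min sample.
ramp = setback_trajectory(t_start=21.0, t_min=19.5, step=0.5, n_samples=6)
```

With these placeholder values the trajectory reaches the threshold after three samples and is then held there.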

The price in the three-tier TOU tariff is translated visually in

Figure 10b. Initially, from 16:00 to 19:00 the TOU signal value is set to 9, which represents cost 24.99 p/kWh (peak), reducing to 6 (11.99 p/kWh mid-peak price) at 19:00. The energy cost nodemap data (

) transformation to the optimizer gridmap is shown in

Figure 11. During peak periods, when the cost of energy is highest, the gridmap interpretation is to influence the control variable by reducing the temperature setpoint, which in turn reduces the cost of energy. Similarly, at 19:00 (mid-peak), the gridmap tou signal is set at mid-scale (nominally

). The electricity demand forecast is shown in

Figure 10c. To help interpret the demand signals shown,

Figure 10d illustrates the calculated weekday demand profile over a 24-h period. The red circle marks the start of the 4-h horizon window (shaded area). The dv (gridmap) signal is reconstructed within the optimization algorithm. The results are consistent with the modified layout of corresponding digraph object node coordinates, which describes the relationship between directional edges and connecting nodes shown in

Figure 11a. The optimal temperature path is calculated at a sample rate of 10 min.

Figure 11b highlights the optimal temperature value over a 4-h horizon window commencing 16:40. The control action for the continuing 10 min cycle shown is the temperature value specified at 16:50, that is

. This accords with our earlier occupant thermal comfort feedback report, which registered a consensus to reduce the room temperature by

.
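To make the path calculation concrete, the following Python sketch applies Dijkstra's shortest path algorithm to a small time-by-temperature grid. It is a simplified illustration rather than the optimizer used in this study: the grid of candidate temperatures, the weights (0.7 and 0.3), the comfort target, the normalized tariff values, and the one-level-per-step movement limit are all assumptions, and the demand term (dv) is omitted for brevity.

```python
import heapq


def optimal_temperature_path(temps, start_idx, n_steps, node_cost, max_jump=1):
    """Dijkstra's algorithm over nodes (step, temperature index).

    Each edge advances one time step and changes the temperature by at most
    `max_jump` levels. `node_cost(step, temp)` must be non-negative.
    """
    source = (0, start_idx)
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    end = None
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        step, idx = node
        if step == n_steps:
            end = node  # first final-step node popped is the cheapest
            break
        lo = max(0, idx - max_jump)
        hi = min(len(temps) - 1, idx + max_jump)
        for nxt_idx in range(lo, hi + 1):
            nxt = (step + 1, nxt_idx)
            nd = d + node_cost(step + 1, temps[nxt_idx])
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    nodes = [end]
    while nodes[-1] != source:
        nodes.append(prev[nodes[-1]])
    nodes.reverse()
    return [temps[i] for _, i in nodes], dist[end]


temps = [19.0, 19.5, 20.0, 20.5, 21.0]   # candidate setpoints (hypothetical)
target = 20.0                            # hypothetical comfort consensus
price = [0.0, 1.0, 1.0, 0.5]             # hypothetical normalized tariff per step


def node_cost(step, temp):
    comfort = abs(temp - target)                # thermal comfort term (tc)
    energy = price[step] * (temp - temps[0])    # energy cost term (ec)
    return 0.7 * comfort + 0.3 * energy


path, cost = optimal_temperature_path(temps, start_idx=4, n_steps=3, node_cost=node_cost)
```

In this sketch, the second element of `path` plays the role of the control action for the next 10 min cycle; the remainder of the path is recomputed at the following sample.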

On receipt of a DR event notice (16:40) the normalized demand forecast value

is recast to

. The modified demand profile trajectory is defined by Dijkstra’s shortest path algorithm

, where

. As can be observed in

Figure 12, the demand profile value at 16:50 increases from

(

) to

(

). A sample rate of 600 s accounts for the slight delay from the start of the DR preparatory window to the change in demand profile trajectory. Although the supposed outcome is to promote an increase in temperature equivalent to

leading up to the start of the DR event, the projected value is offset by the continued influence of the thermal comfort (

) and energy cost (

) (tou) decision variables. Consequently, in this instance, the optimization algorithm sets the 4-h ahead optimal temperature value slightly less than the anticipated

. The layout of individual digraph objects and their corresponding nodemap representation, shown in

Figure 11 and

Figure 12, respectively, serve to provide a snapshot of the optimizer outputs over a 4-h horizon window at any given time. The benefit of the optimizer is now translated into

Figure 13, which plots several decision variables and control actions over a 24-h period. Between

Figure 13a,b, we observe the impact of demand and tariff data on the temperature setpoint (TS1). Furthermore, the outside temperature (Tout) has no impact on the measured room temperature during this simulation.

The start of the DR preparatory window is recorded at 16:40 and subsequently sets and holds des_mode = 1 for 4 h and 40 min (the time leading up to and including the DR event). The BESS is seen to start a charge period in readiness for the start of the DR event. A tariff mode signal (t_mode) automatically restricts the use of the BESS until the DR event starts. At 16:40, the power signal (PWR) switches the primary power source from the grid to BESS. If the cost of energy were at peak tariff immediately after the DR event (t_mode = 3), then the BESS would continue as the primary power source. However, as can be observed, the BESS SOC signal (SOC) indicates that the BESS starts a discharge phase at the start of the DR event and continues, in this scenario, to the end of the DR event. At 20:20, the primary power source reverts to the grid, but the BESS remains available.

The rate at which the energy source naturally discharges has been magnified to evaluate control actions when SDR exceeds low and high charge threshold values (local settings). In practice, SDR parameters should be set to reflect the storage device in use. The simulation results show the calculated electricity demand forecast profile (demand). Its impact on the optimization algorithm is clear: when demand is high (06:00 to 22:00), the aggregated effect is to limit the temperature setpoint (reducing the demand for electricity on the distribution network). Conversely, when demand is low (22:00 to 06:00), the constraints that govern the temperature setpoint are relaxed. Here, the optimizer allows, but does not mandate, an increase in energy consumption by increasing the space heating temperature setpoint. This finding, while preliminary, suggests the proposed control strategy has the potential to deliberately lessen peaks in electrical demand and fill periods of low demand.

At 18:20.36, the impact of a simulated load disturbance

(

Table 2) within the power subsystem is highlighted. The large, rapidly decreasing frequency excursion shown in the highlighted box, signifying an imbalance between supply and demand, is observed more clearly in

Figure 14a. The proposed system's immediate response is to lower the temperature setpoint (

), reducing the on-site heat source energy consumption and thus providing a pro-active response to the stability of the electrical distribution network [

68]. As can be observed in

Figure 14b, and in the broader context in

Figure 13a, these immediate interventions have minimal impact on measured room temperature (

), hence minimizing occupant thermal discomfort.
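The pro-active response can be illustrated with a short Python sketch in which the setpoint shifts in proportion to the deviation of the measured frequency from the nominal 50 Hz. The gain and the limit on the shift are hypothetical values chosen for illustration, as is the function name; the sketch does not reproduce the power subsystem model.

```python
NOMINAL_HZ = 50.0


def frequency_adjusted_setpoint(setpoint, freq_hz, gain=4.0, max_shift=2.0):
    """Shift the temperature setpoint proportionally to the frequency deviation.

    gain (degrees per Hz) and max_shift (degrees) are hypothetical parameters.
    A frequency below nominal lowers the setpoint, reducing heating demand.
    """
    deviation = freq_hz - NOMINAL_HZ
    shift = max(-max_shift, min(max_shift, gain * deviation))
    return setpoint + shift


steady = frequency_adjusted_setpoint(20.0, 50.0)       # no excursion
excursion = frequency_adjusted_setpoint(20.0, 49.75)   # under-frequency event
severe = frequency_adjusted_setpoint(20.0, 49.0)       # shift limited by max_shift
```

Because the shift is continuous in the measured frequency, loads do not all switch at one prescribed threshold, which is the synchronization problem the proportional scheme is intended to avoid.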

4. Experimental Evaluation

Testing cannot be expected to catch every error in the software, and system complexity makes it difficult to evaluate every branch. A traditional approach to software testing during earlier development and subsequent simulation testing provided a satisfactory level of acceptance. However, as the hardware-in-the-loop test environment is not entirely under the control of the tester, an element of nondeterminism is introduced into the test. Furthermore, because of observations documented during early simulation testing, new features were added to help eliminate transitions to deadlock states. These situations arise when a stalemate occurs between two or more processes, none of which can proceed because each is waiting for another to respond [

70]. Therefore, it is considered helpful to outline an appropriate test and level of abstraction of the software and hardware devices for testing.

One of the significant objectives of testing is to assess the integration of the optimizer software code by connecting other software and hardware components. Therefore, a test environment was designed to evaluate and report as accurately as possible on the proposed optimization algorithm interaction with real-world data. An image of prototype equipment, including an industrial controller and sensor equipment, is shown in

Figure 15a.

The test environment was composed of the following main components: (1) a revised Simulink® model designed to send/receive serial data, (2) an electronic fan speed controller (EFSC) to regulate the heat transfer through flow, (3) a 240 VAC 3 kW box fan portable heater, (4) an Industruino IND.I/O 32u4 Arduino-compatible industrial controller, which includes a 2 CH 0 to 10 VDC/4-20 mA 12-bit output, and (5) Arduino-compatible remote sensors and communication equipment, including an Android smartphone pre-loaded with an app developed using MIT App Inventor 2 version nb183c. In addition to streaming data into the software environment, the industrial controller's on-board liquid crystal display (LCD) panel was programmed to display the data from remote sensors and user thermal preferences (registered using the smartphone app). These feedback indicators were supplemented by a series of light emitting diodes (LEDs) reporting the status of several decision-making variables.

Dedicated values for energy demand forecasting and price indicators are embedded in the computer model. However, occupant thermal comfort feedback data is input into the system in real-time using Bluetooth technology. This experiment utilizes the thermal comfort feedback data reported by a single occupant. A technology update to manage multiple users is a relatively straightforward task. Images of the app demonstrator designed to capture occupants' thermal comfort reports are shown in

Figure 15b. Remote sensors monitor room temperature data, which is communicated to the optimizer in real-time using low power device 433 MHz (LPD433) equipment. A block diagram of the general arrangement is shown in

Figure 16. The positioning of the optimizer indicates this configuration has the potential to participate in similar energy management schemes with minimal impact on existing infrastructure.

This paper reports the results of an experimental test carried out in real-time. The test started on Monday, 6-April-2020, 16:00. At 16:40, the start of a DR preparatory event triggers a pre-set sequence of control actions designed to prepare the heating services in advance of the 40 min DR event, which started at 20:40. The test was run for 5.5 h, finishing 10 min after the DR event. Comparison of the findings shown in

Figure 17 with those of earlier computational studies confirms the operation of the optimization algorithm is consistent with our mathematical arguments, which posit that the interaction between declared data types can influence space heating in an environment. Increasing the temperature setpoint successively by

at 10-min intervals during the DR preparatory stage increased the space temperature by

from the start of the DR preparatory window.

Figure 18a confirms a temperature value of

was recorded at approximately 19:10. It can be observed that the temperature then decreased to

at 20:40, which is the start time of the DR event. This behavior may be explained by the fact that the thermal comfort profile (dark red color) reduced to an equivalent of

(

) at 19:00, which is consistent with an expected zero occupancy at the same time.

Furthermore, as can be observed in

Figure 18b, the control action signal utilized in the earlier computational study has been modified to regulate the physical heat transfer through flow. Here, the control action signal (Tu), which operates a 0 to 10 VDC EFSC, is proportional to the difference between the calculated optimal temperature setpoint (TS1) and the measured temperature (Tr), i.e.,

, where

. The power switch signal (PWR) shows that the virtual energy storage system is activated at 20:40 and continues to operate as the heating system's primary energy source for the duration of the DR event (shaded area).
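A proportional control action of the kind described for the EFSC can be sketched as follows. The proportional gain is a hypothetical value and the function name is illustrative; only the 0 to 10 VDC output range and the use of the difference between TS1 and Tr are taken from the text.

```python
def control_action(ts1, tr, kp=2.0, v_min=0.0, v_max=10.0):
    """Return the EFSC drive voltage Tu for setpoint ts1 and measured tr.

    Tu is proportional to (ts1 - tr) and saturated to the 0 to 10 VDC range.
    kp (volts per degree) is a hypothetical gain.
    """
    return max(v_min, min(v_max, kp * (ts1 - tr)))


tu_heating = control_action(ts1=21.0, tr=19.0)    # room below setpoint
tu_saturated = control_action(ts1=21.0, tr=10.0)  # large error, output clipped
tu_off = control_action(ts1=19.0, tr=21.0)        # room above setpoint
```

Saturating the output keeps the command within the controller's analogue range, so a large temperature error drives the fan at full speed rather than requesting an out-of-range voltage.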

Overall, these results are very encouraging. The experimental evaluation raises the possibility that the proposed optimization algorithm may support small communities in a decentralized environment with limited access to communication networks. Comparison of the findings with other studies confirms the novelty of the proposed framework for energy management. It is encouraging that elements of this research are consistent with results found in previous work. Eriksson et al. [

71] developed a normalized weighted constrained multi-objective meta-heuristic optimization algorithm to consider technical, economic, socio-political, and environmental objectives. The results emphasized the application of a modified Particle Swarm Optimization (PSO) algorithm to optimize a renewable energy system of any configuration. The implementation of Dijkstra’s algorithm (used in this study) is more prevalent in other applications (e.g., see References [

72,

73,

74]).

Nevertheless, the simplicity of Dijkstra’s algorithm makes it versatile. In one study, a shortest path optimization algorithm was designed to compute an optimal water heating plan based on specific optimality criteria and inputs [

75]. The significant reported feature of that algorithm was its low computational complexity, which opens the possibility of deploying it directly on low-cost embedded controllers. In a further study, a strong relationship between optimization and space heating has been reported [

76]. Here, a neural network algorithm used to build a predictive model for the optimization of an HVAC system is combined with a strength multi-objective PSO algorithm. Although results show satisfactory solutions at hourly time intervals for users with different preferences, demand response mechanisms have not been considered. However, leveraging upon the concepts of Industry 4.0, Short et al. [

77] demonstrated that dispatching HVAC units in the presence of a tertiary DR program, formulated as a distributed optimization problem, could deliver satisfactory performance. Finally, a more inclusive study proposed an optimization model which takes total operational cost and energy efficiencies as objective functions [

78]. Here, a thermal load is adjusted in the knowledge that a managed change in temperature value has no significant impact on user comfort. An integrated demand response mechanism is also considered. Although the results provide a new perspective for integrated energy management and demand side load management, there is no further exploitation in real-time user engagement or perspectives on decentralization.

5. Conclusions

A real-time DR strategy in a decentralized grid has been formulated with both spatial and temporal constraints. A generic framework established regulatory control of space heating through mechanisms that respond automatically to changes in grid frequency and to explicit tertiary DR event signals. Design considerations set decision variables and control actions to illustrate the effectiveness of the novel multi-objective cost function, which is based on a weight-based routing algorithm. A series of embedded lookup tables based on historical operational data calculate an aggregated rate of electricity consumption over a rolling 4-h horizon window. As the techniques for enabling and controlling DR events emerged, extensive simulation studies demonstrated that power consumption could be easily shifted without causing any significant short-term impact on space temperatures. Increasing growth of renewable energy resources could reduce system inertia, which leaves networks more vulnerable to energy security risks. The approach offered may benefit rural decentralized community power systems, including geographical islands, seeking to improve heating services through optimization and collaborative energy management. This operation was validated using experimental testing, which included a response to simulated tertiary DR event signals. The obtained results show the effectiveness of the decentralized, informatic optimization and control framework for evolving DR services. The energy transition offers small communities opportunities to meet decarbonization targets. The gradual shift from centralized fossil fuel power networks to low-carbon, decentralized, sustainable smart energy systems is set to disrupt businesses, policymakers, and system analysts. As energy markets change to meet innovations, the reliance on a single energy source is slowly diminishing. Still, as technology advances and support for an emerging group of consumers that produce energy continues to gather momentum, system operators should embrace a changing market to remain relevant in the future.

The context within which DR operates is important, as related initiatives need to support the objectives of DR itself. These are usually encased within a policy objective in response to concerns relating to the environment. To support the achievement of such policy goals, organizations seeking to participate in the sector need a business model/strategy that will, in the longer term, support and sustain their success. Therefore, the business model is essential to the objective of the policy. Martin et al. [

79] illustrated the dangers of applying a business model/strategy that does not support the overall objective of an organization. Here, the policy goal is the European targets related to energy efficiency and climate change, pursued by consumers reducing or shifting their electricity usage during periods of peak electricity demand in response to time-based tariffs or other forms of financial incentives. The success of such policy objectives, therefore, is dependent on several factors, including end-user participation. The opportunities for realizing DR, however, vary across Europe as they are dependent on the particular regulatory, market, and technical contexts in different European countries [

80]. It is estimated that the distribution networks' share of the overall investment in energy networks will be 80% by 2050 [

81]. Hence, the need for DR solutions to reduce peaks in energy demand is significant. Thus, in recent times, the emphasis is on developing novel solutions which can align the energy demand to the energy supply in real-time [

82]. A decentralized approach will support creativity and tailor-made solutions [

83,

84]. The role of the end-user in successfully delivering the policy objective is essential, and their buying into new usage patterns is critical [

85]. Therefore, a business model that encourages end-user participation becomes crucial. Hence, a business model that understands and appreciates the variables of the regulatory, market, and technical contexts in different European countries, and so enables enhanced end-user engagement, is required to support the achievement of the policy objectives.

Future research will extend the test and validation work, including the integration of scalable communities and other forms of energy storage and distributed renewable energy generators. In addition, a business case that scrutinizes how the proposed optimization and control framework can be mobilized will be investigated in future work.