Ultra-Short-Term Load Demand Forecast Model Framework Based on Deep Learning

Abstract

1. Introduction

2. Literature Review

3. Theoretical Description of the Proposed Model

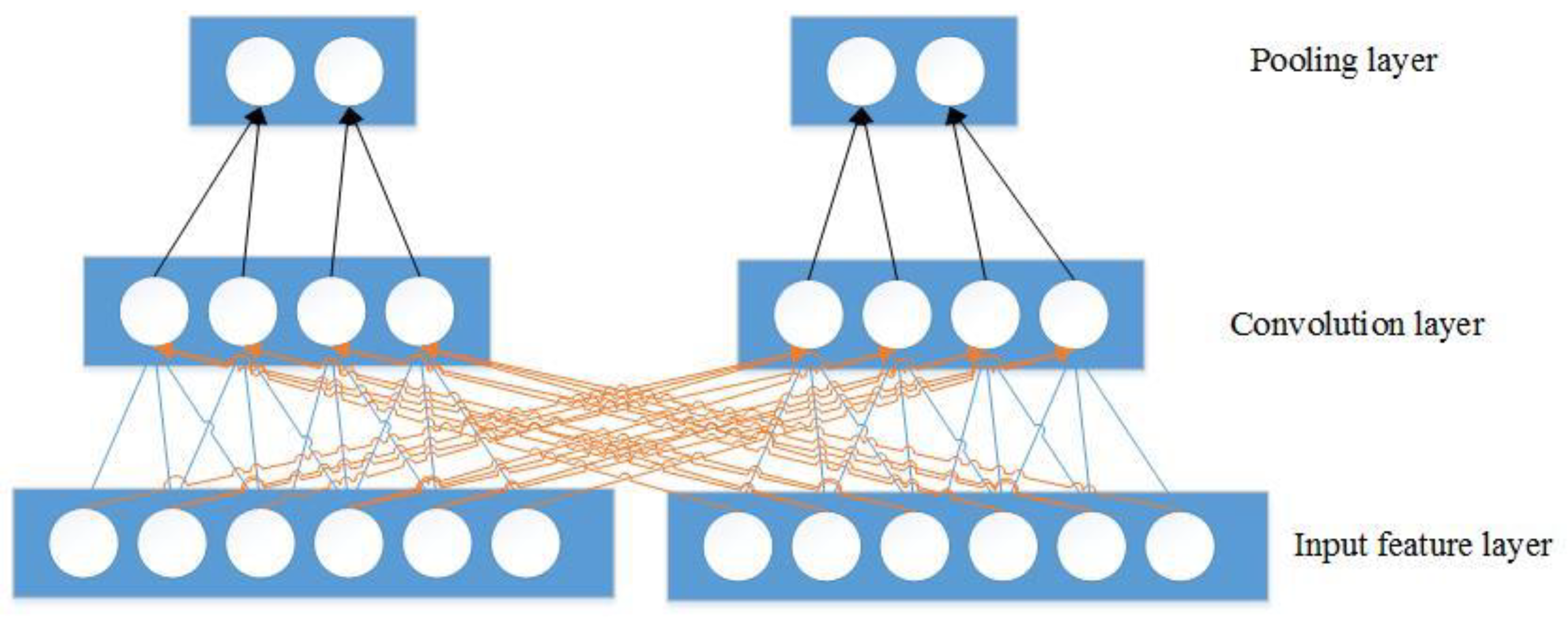

3.1. Convolution

3.2. Long Short-Term Memory

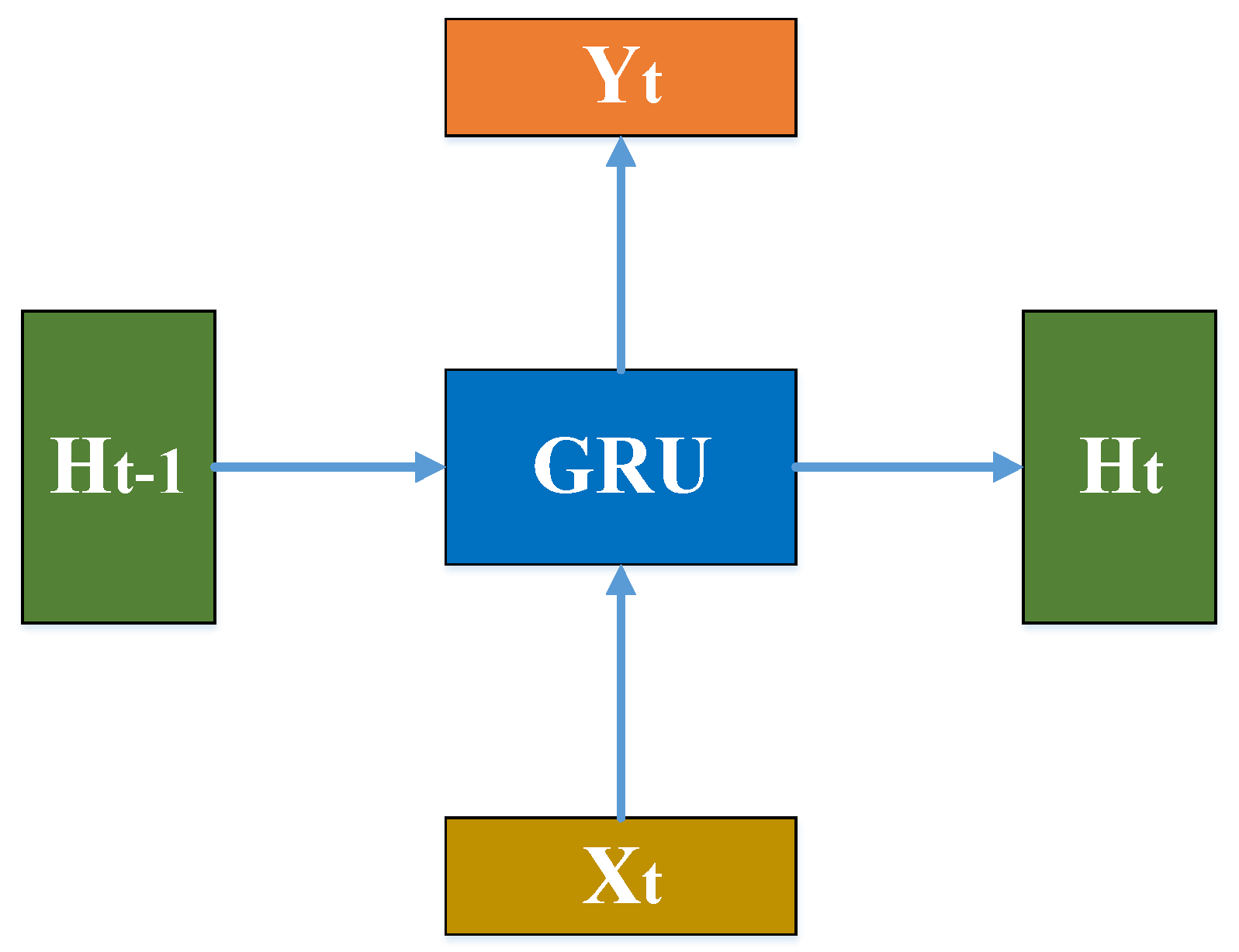

3.3. Gated Recurrent Unit

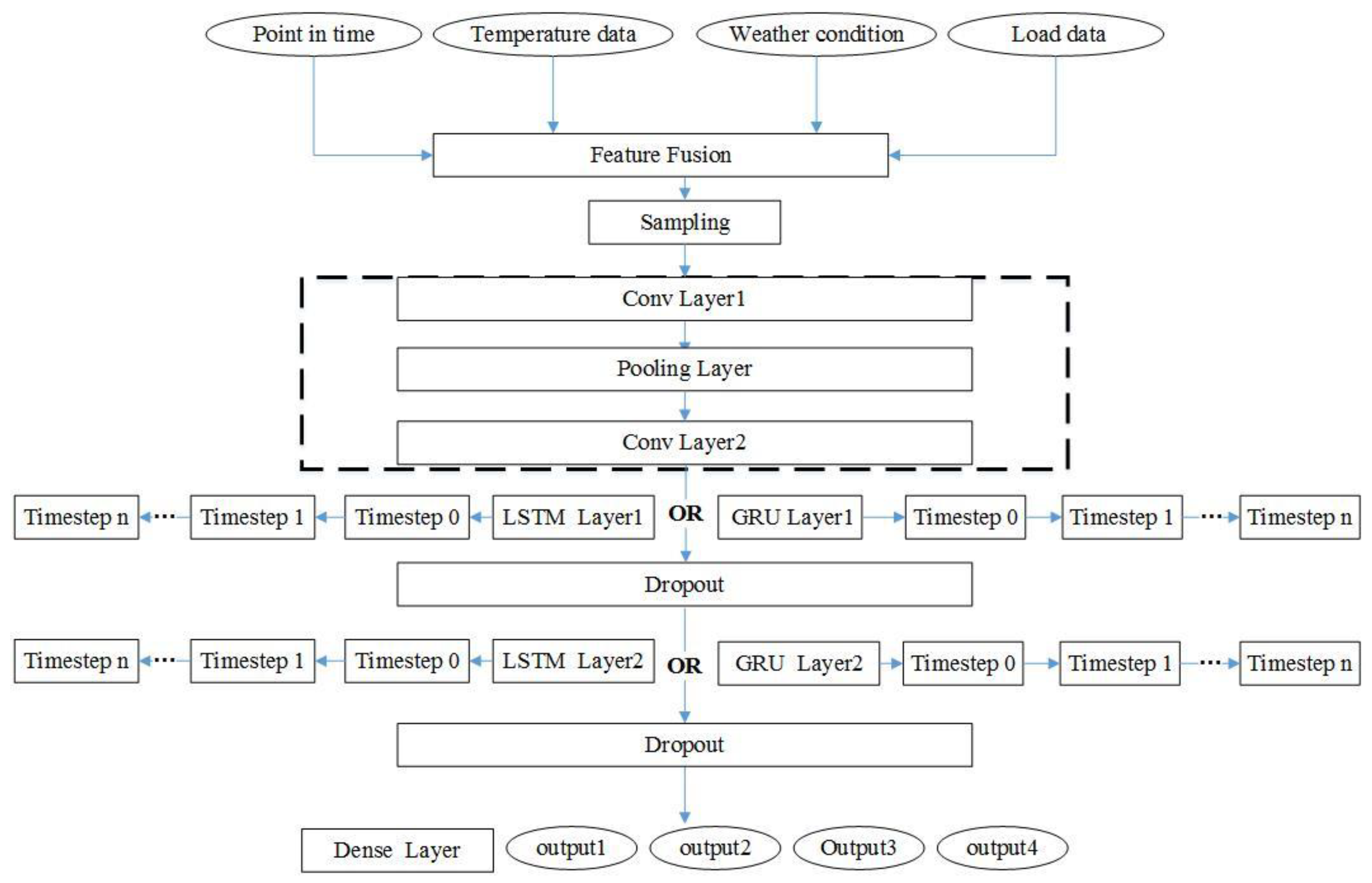

4. Data Description

4.1. Feature Engineering

4.2. Data Preprocessing

5. Deep Learning Model

5.1. Deep Learning Network Prediction Framework

5.2. Hyperparameters of Deep Learning Model

5.3. Evaluation Index

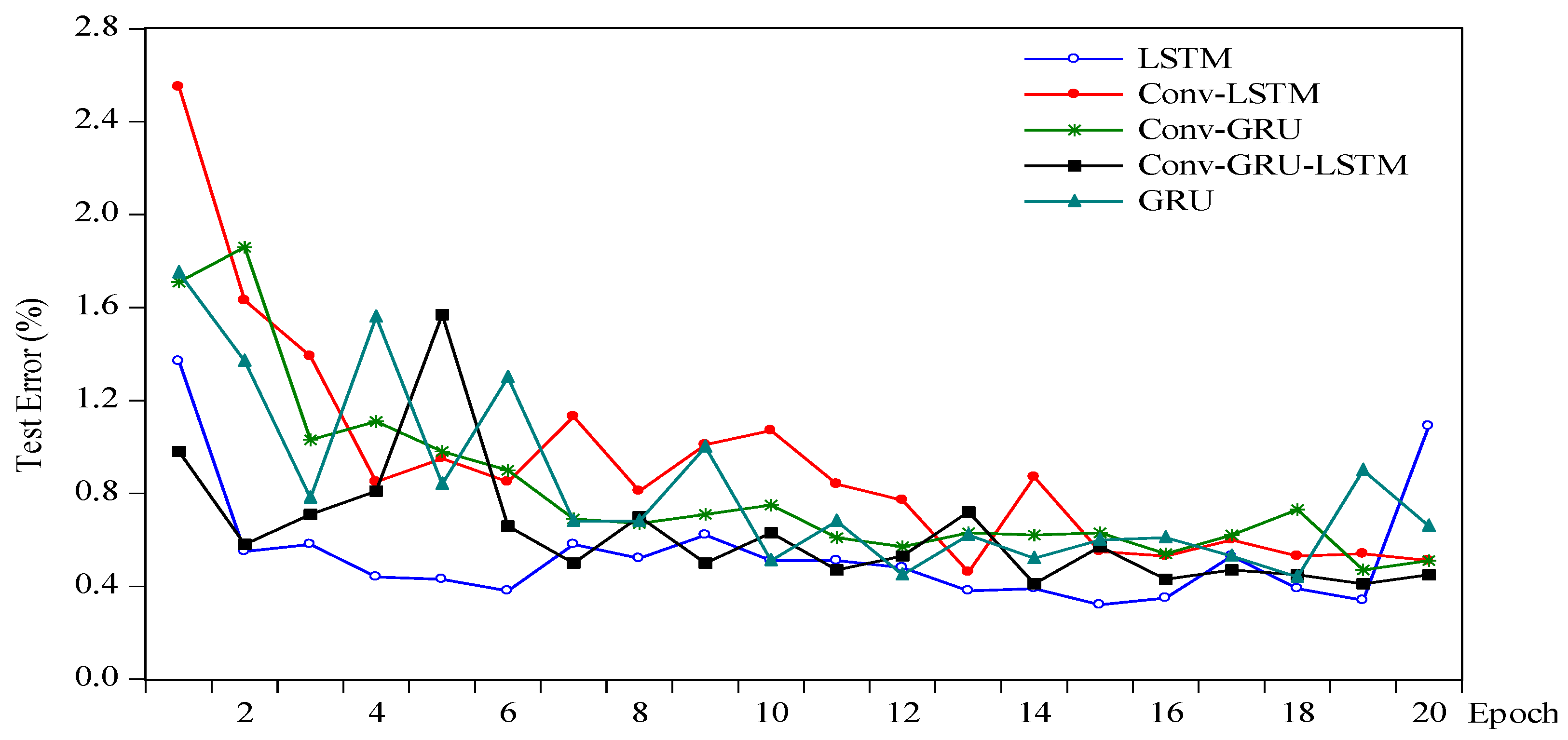

6. Results

6.1. Training Process Analysis

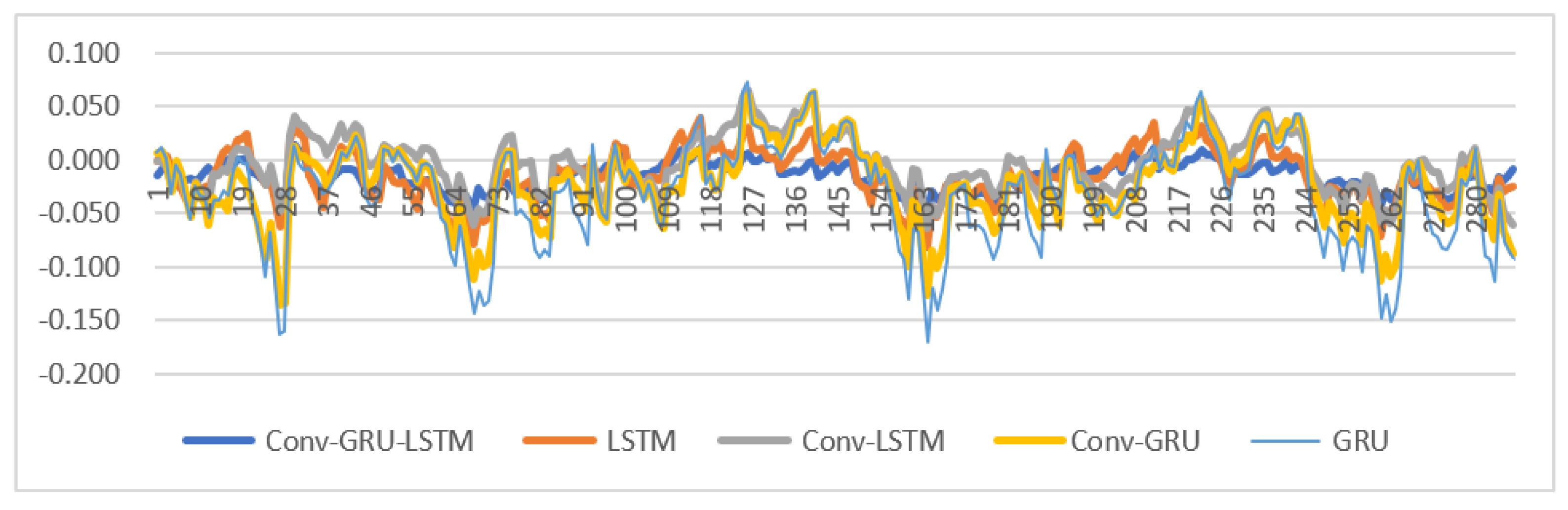

6.2. Forecast Results Display

7. Conclusions and Discussion

7.1. Conclusions

7.2. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Dimension of Input | Feature Description | Dimension of Output | Output |
|---|---|---|---|
| 1 | Time (t), a time point every 15 min | 1 | Load from (t) to (t + 3) |
| 2 | Temperature from (t − time window) to (t − 1) | | |
| 3 | Weather condition from (t − time window) to (t − 1) | | |
| 4 | Load from (t − time window) to (t − 1) | | |
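The input/output layout in the table above can be illustrated with a small sliding-window routine. This is a minimal sketch in plain Python, not the authors' code: the function name `make_windows`, its parameters, and the toy data are assumptions made for illustration, and the time-of-day feature (input dimension 1) is left out for brevity.

```python
def make_windows(temperature, weather, load, time_window, horizon=4):
    """Build (inputs, targets) pairs from aligned 15-minute series.

    Each input holds the previous `time_window` steps of
    (temperature, weather code, load), i.e. t - time_window .. t - 1.
    Each target holds the load for t .. t + horizon - 1
    (horizon=4 corresponds to "t to t + 3" in the table).
    """
    if not (len(temperature) == len(weather) == len(load)):
        raise ValueError("all series must have the same length")
    inputs, targets = [], []
    for t in range(time_window, len(load) - horizon + 1):
        past = range(t - time_window, t)
        inputs.append([(temperature[i], weather[i], load[i]) for i in past])
        targets.append(list(load[t:t + horizon]))
    return inputs, targets


# Toy data (made up): 10 time points, a 4-step window, a 4-step target.
temperature = [float(i) for i in range(10)]
weather = [8] * 10  # hypothetical: the same weather code at every step
load = [100.0 + i for i in range(10)]
X, y = make_windows(temperature, weather, load, time_window=4)
```

With real data, `time_window` would take one of the time-step values listed in the hyperparameter table, and the nested lists would typically be converted to arrays before being fed to a network.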

| Weather Condition | Mapping Results | Weather Condition | Mapping Results | Weather Condition | Mapping Results |
|---|---|---|---|---|---|
| Overcast | 0 | Sand blowing | 6 | Heavy rain | 12 |
| Fog | 1 | Heavy snow | 7 | Floating dust | 13 |
| Medium-to-heavy rain | 2 | Sunny | 8 | Rainstorm | 14 |
| Light rain | 3 | Drizzle | 9 | Small-to-medium rain | 15 |
| Haze | 4 | Sleet and snow | 10 | Thunderstorms | 16 |
| Little Snow | 5 | Cloudy | 11 | Shower | 17 |
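The mapping in the table above amounts to a lookup from weather label to integer code. A minimal sketch follows; the dictionary values are copied from the table, while the names `WEATHER_CODES` and `encode_weather` are hypothetical and chosen only for this example.

```python
# Integer codes copied from the weather-condition mapping table.
WEATHER_CODES = {
    "Overcast": 0, "Fog": 1, "Medium-to-heavy rain": 2, "Light rain": 3,
    "Haze": 4, "Little Snow": 5, "Sand blowing": 6, "Heavy snow": 7,
    "Sunny": 8, "Drizzle": 9, "Sleet and snow": 10, "Cloudy": 11,
    "Heavy rain": 12, "Floating dust": 13, "Rainstorm": 14,
    "Small-to-medium rain": 15, "Thunderstorms": 16, "Shower": 17,
}


def encode_weather(labels):
    """Map weather labels to integer codes; an unknown label raises KeyError."""
    return [WEATHER_CODES[label] for label in labels]


codes = encode_weather(["Sunny", "Fog", "Shower"])
```

Failing loudly on an unknown label is a deliberate choice in this sketch, so that a misspelled or new weather category is noticed rather than silently mapped to a default.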

| Time | Weather Condition | Temperature | Load |
|---|---|---|---|
| 2016-01-01 00:00:00 | 1.738101 | −1.394100 | −0.207188 |
| 2016-01-01 00:15:00 | 1.738101 | −1.410131 | −0.316169 |
| 2016-01-01 00:30:00 | 1.738101 | −1.426163 | −0.402202 |
| 2016-01-01 00:45:00 | 1.738101 | −1.458227 | −0.502655 |
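The values in the table above are centred around zero and include negatives, which is consistent with z-score standardization, although the scaling method is not stated here. Assuming that is the transformation used, a minimal sketch could look like the following; the function name `standardize` and the raw load values are made up for illustration.

```python
from statistics import fmean, pstdev


def standardize(values):
    """Return z-scores: (x - mean) / population standard deviation."""
    mean = fmean(values)
    std = pstdev(values)
    if std == 0:
        raise ValueError("cannot standardize a constant series")
    return [(v - mean) / std for v in values]


# Hypothetical raw load readings at four consecutive 15-minute points.
raw_load = [820.0, 805.0, 790.0, 775.0]
z = standardize(raw_load)
```

In a forecasting setting, the mean and standard deviation would normally be estimated on the training split only and then reused for validation and test data, so that no information leaks from the evaluation period.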

| Type of Hyperparameter | Experimental Scene Setting |
|---|---|
| Number of first layer convolution filters | 8 |
| Kernel size in Conv Layer 1 | 4 × 4 |
| Max pooling size | 4 × 4 |
| Number of second layer convolution filters | 16 |
| Kernel size in Conv Layer 2 | 3 × 3 |
| LSTM or GRU layer 1; hidden layer units | {20, 50, 80} |
| LSTM or GRU layer 2; hidden layer units | {20, 50, 80} |
| Objective function | MSE |
| Dropout rate | 0.2 |
| Time step | {48, 96, 192} |
| Batch size | {32, 64} |
| Epochs | 5 |
| Adam optimizer parameter settings | learning rate = 0.001, β₁ = 0.9, β₂ = 0.999 |
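The set-valued rows in the table above define a small search space. As a sketch of how such candidates could be enumerated, the snippet below lists every combination with `itertools.product`. Whether the original experiments covered the full grid is not stated here, so exhaustive enumeration is an assumption, and the names `SEARCH_SPACE` and `iter_configs` are hypothetical.

```python
from itertools import product

# Candidate values copied from the set-valued rows of the table.
SEARCH_SPACE = {
    "units_layer_1": [20, 50, 80],
    "units_layer_2": [20, 50, 80],
    "time_step": [48, 96, 192],
    "batch_size": [32, 64],
}


def iter_configs(space):
    """Yield one dict per combination of the candidate values."""
    keys = list(space)
    for combo in product(*(space[k] for k in keys)):
        yield dict(zip(keys, combo))


configs = list(iter_configs(SEARCH_SPACE))
print(len(configs))  # 3 * 3 * 3 * 2 = 54 combinations
```

Each resulting dictionary could then be passed to a model-building routine, with the fixed settings (filter counts, kernel sizes, dropout rate, epochs, optimizer parameters) held constant across runs.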

| Type of Hyperparameter | Optimal Experimental Scene Setting |
|---|---|
| LSTM or GRU layer 1; hidden layer unit | 50 |
| LSTM or GRU layer 2; hidden layer unit | 50 |
| Time step | 288 |
| Batch size | 32 |

| Model | R² |
|---|---|
| GRU | 0.9404 |
| LSTM | 0.8735 |
| Conv-LSTM | 0.9705 |
| Conv-GRU | 0.9191 |
| Conv-GRU-LSTM | 0.9636 |
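The scores in the table above can be read against the usual definition of the coefficient of determination, one minus the ratio of the residual sum of squares to the total sum of squares. The sketch below assumes that standard definition, since the exact formula used for the evaluation index is not reproduced here; the function name `r2_score` and the sample values are illustrative only.

```python
def r2_score(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when the actual values are constant")
    return 1 - ss_res / ss_tot


# Made-up values: SS_res is about 0.10 and SS_tot is 5.0.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
score = r2_score(actual, predicted)
```

A value of 1 means the predictions match the observations exactly, while a value near 0 means the model does no better than always predicting the mean load.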

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, H.; Liu, H.; Ji, H.; Zhang, S.; Li, P. Ultra-Short-Term Load Demand Forecast Model Framework Based on Deep Learning. Energies 2020, 13, 4900. https://doi.org/10.3390/en13184900
