Automatic Crack Segmentation for UAV-Assisted Bridge Inspection

Abstract

1. Introduction

2. Proposed Methodology

2.1. Stage 1: Data Collection and Model Training

2.2. Stage 2: 3D Construction—Photogrammetry

2.3. Stage 3: Damage Identification Model

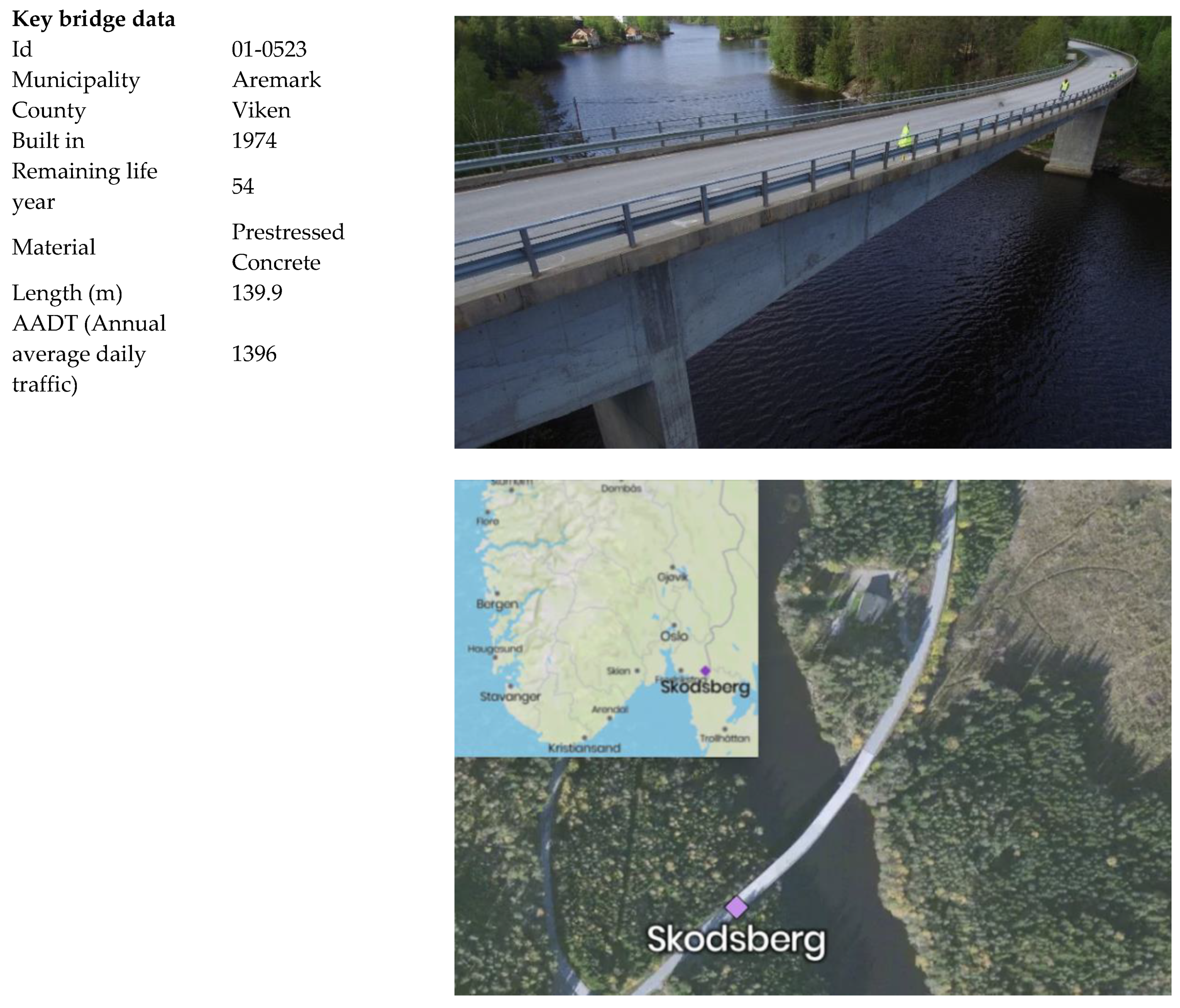

3. An Illustrative Case Study—UAV-Assisted Inspection of Skodsberg Bridge, Norway

3.1. Data Collection

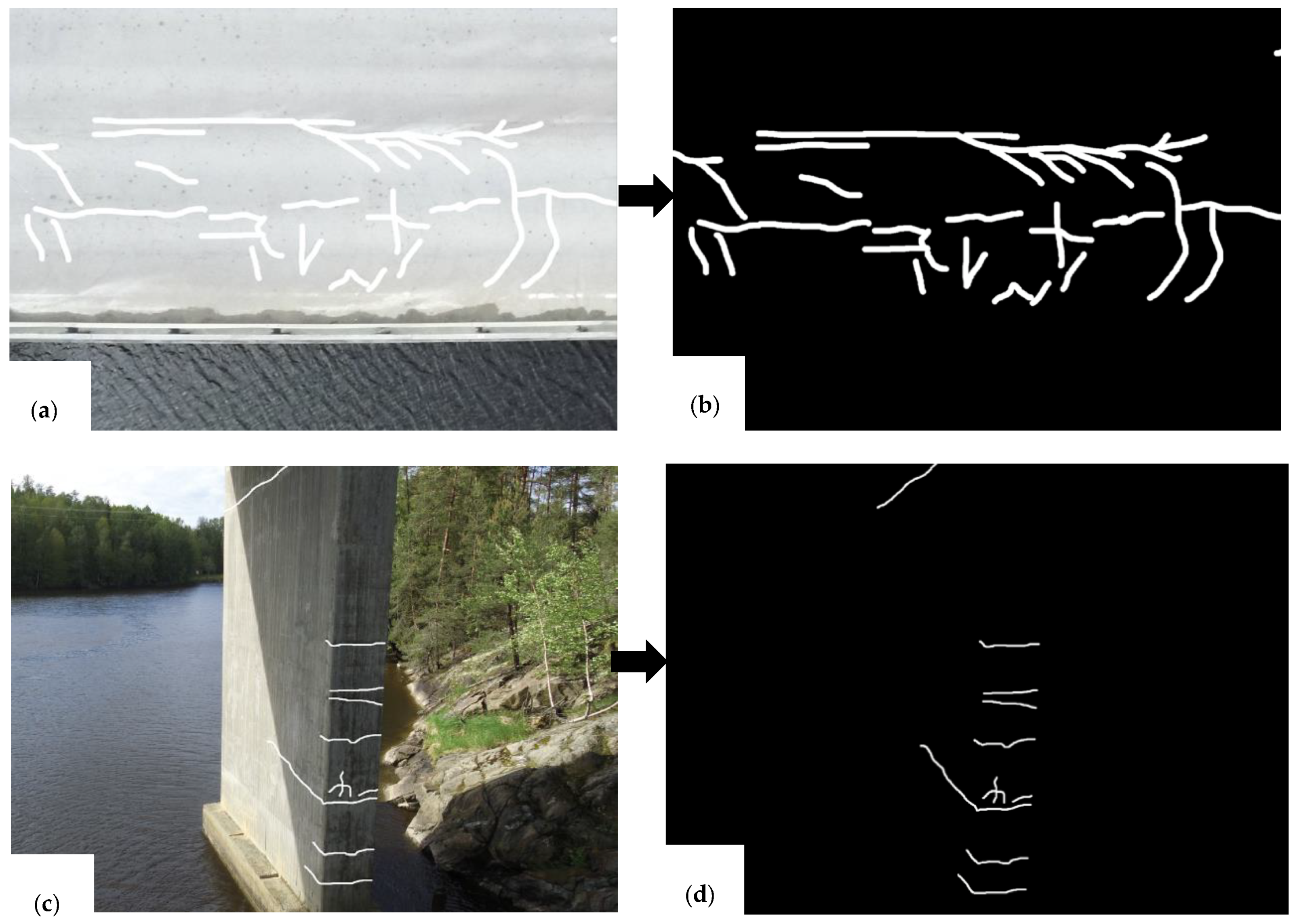

3.2. Model Training

3.3. 3D Construction—Orthorectification

3.4. Crack Segmentation

- The orthomosaic (output from Stage 2) is loaded and tiled into tiles of a predefined size, which should remain consistent across the entire dataset; see Appendix III in the supplemental file for the orthomosaic analysis script.

- Thereafter, each tile is passed into the trained crack segmentation Mask RCNN network.

- The network performs class labelling, creates the bounding box coordinates, and then returns the object mask. The resulting masks are passed through the crack statistics analysis script; see Appendix II in the supplemental file. The statistics are calculated using the OpenCV image processing library. Contour approximation is used to extract individual cracks from the predicted binary mask, and the contour perimeter and area are then calculated directly on the vector points. To estimate the length and width of a crack, the Euclidean distances between the corner pairs (a1, b1) and (a1, c1) are calculated. The maximum distance, i.e., MAX((a1, b1), (a1, c1)), is assumed to be the length, and the minimum is assumed to be the width. This approximation becomes more accurate as the linearity of the crack increases. Figure 9 demonstrates the estimation of crack length and width using Euclidean distance.

- Finally, the results are saved as XML files, including location (real-world coordinates), length, width, area, perimeter, and classification score. The XML files allow simple scripts to return the location and size of all cracks with certain classification scores, based on standards such as the Norwegian Public Roads Administration Handbook V441 (Inspection Handbook for Bridges). Figure 10 displays the Graphical User Interface (GUI) outline of the crack segmentation Mask RCNN analysis for one of the drone images of Skodsberg bridge.
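The tiling and measurement steps above can be sketched as follows. This is a minimal illustration, not the authors' scripts (those are in Appendices II and III of the supplemental file and rely on OpenCV). It uses only the Python standard library and starts from points that have already been extracted; in an OpenCV workflow these would typically come from `cv2.findContours`. The function names, the inward shifting of edge tiles, and the reading of a1, b1 and c1 as three corners of a rectangle fitted around the crack (with b1 and c1 adjacent to a1) are assumptions made for this example.

```python
import math


def tile_windows(width, height, tile_size):
    """Return (x0, y0, x1, y1) pixel windows covering a width x height image.

    Assumption: edge tiles are shifted inwards so that every window has
    the same size. Requires width >= tile_size and height >= tile_size.
    """
    xs = sorted({min(x, width - tile_size) for x in range(0, width, tile_size)})
    ys = sorted({min(y, height - tile_size) for y in range(0, height, tile_size)})
    return [(x0, y0, x0 + tile_size, y0 + tile_size) for y0 in ys for x0 in xs]


def perimeter_and_area(points):
    """Perimeter and area of a closed contour given as (x, y) vertices."""
    closed = list(points) + list(points[:1])  # repeat first vertex to close
    edges = list(zip(closed, closed[1:]))
    perimeter = sum(math.dist(p, q) for p, q in edges)
    # Shoelace formula: signed sum of cross products, halved.
    twice_area = sum(p[0] * q[1] - q[0] * p[1] for p, q in edges)
    return perimeter, abs(twice_area) / 2.0


def length_and_width(a1, b1, c1):
    """Estimate crack length and width from three bounding-box corners.

    The longer of the distances a1-b1 and a1-c1 is taken as the length,
    the shorter as the width, as described in the text.
    """
    d_ab = math.dist(a1, b1)
    d_ac = math.dist(a1, c1)
    return max(d_ab, d_ac), min(d_ab, d_ac)


# Hypothetical crack outline: an axis-aligned 12 x 3 pixel rectangle.
outline = [(0, 0), (12, 0), (12, 3), (0, 3)]
perimeter, area = perimeter_and_area(outline)
length, width = length_and_width(outline[0], outline[1], outline[3])
```

For this rectangular outline the estimate is exact (length 12, width 3). For a curved or branching crack the corner distances only bound the crack, which is why the approximation improves as the crack becomes more linear.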

4. Result Discussion

- A practical methodology, from data collection through to an automated Mask RCNN model/toolkit for bridge crack segmentation, is demonstrated.

- From the case study, it is deduced that, with respect to labelling, the network can achieve optimum performance with up to 90% less manually labelled data, reducing labelling costs by the same percentage.

- From the case study, it is inferred that, by using UAV-assisted bridge inspections coupled with automated crack detection, threats (such as cracks in steel elements, fractures in concrete elements, etc.) can be pinpointed early, high-risk bridge elements can be monitored, and failure rates can be reduced.

- The developed model/toolkit, which is a deep Mask RCNN, achieved up to 90% accuracy in distinguishing threats and anomalies.

- The crack segmentation Mask RCNN viewer provides a simple proof-of-concept visualization and processing tool for automated bridge crack segmentation and analysis.

- It is demonstrated how an online system can be deployed wherein the user requires no programming experience or knowledge of the underlying algorithms.

- It is demonstrated that the 3D model of the bridge can be used as a baseline for maintenance and asset management purposes. The addition of 3D capabilities to bridge management allows navigation through a complex structure, providing visual identification of the area of concern rather than relying solely on reference names or numbers.

5. Concluding Remarks and Future Work Suggestions

Future Work Suggestion

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ayele, Y.Z.; Aliyari, M.; Griffiths, D.; Droguett, E.L. Automatic Crack Segmentation for UAV-Assisted Bridge Inspection. Energies 2020, 13, 6250. https://doi.org/10.3390/en13236250
