A Review on Deep Learning Models for Forecasting Time Series Data of Solar Irradiance and Photovoltaic Power

Abstract

1. Introduction

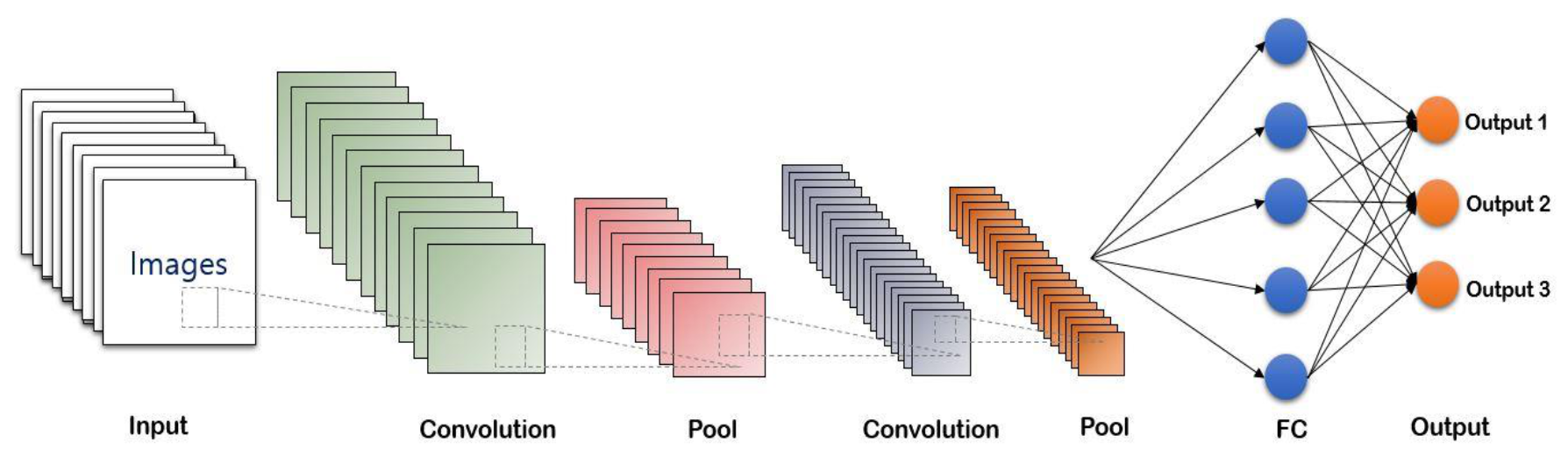

2. Solar Irradiance Variability

3. Deep Learning Models

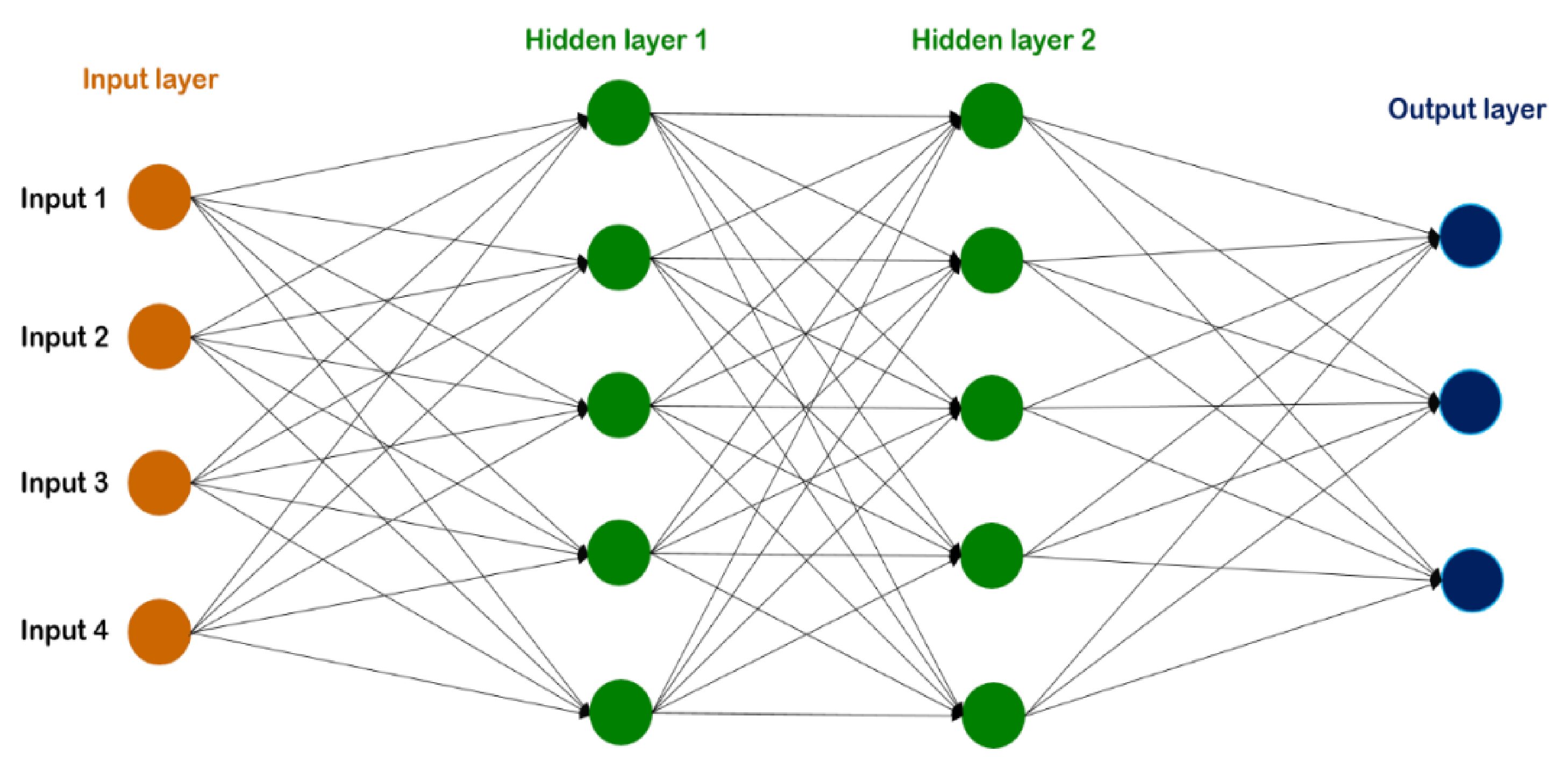

3.1. Neural Network

3.2. RNN

3.3. LSTM

- $X_t$ is the input vector to the memory cell at time $t$.

- $W_i$, $W_f$, $W_c$, $W_o$, $U_i$, $U_f$, $U_c$, $U_o$, and $V_o$ are weight matrices.

- $b_i$, $b_f$, $b_c$, and $b_o$ are bias vectors.

- $h_t$ is the output (hidden state) of the memory cell at time $t$.

- $S_t$ and $C_t$ are the candidate state of the memory cell and the state of the memory cell at time $t$, respectively.

- $\sigma$ (sigmoid) and $\tanh$ (hyperbolic tangent) are the activation functions.

- $i_t$, $f_t$, and $o_t$ are the values of the input gate, the forget gate, and the output gate at time $t$.
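
The gate equations these symbols belong to did not survive extraction. The sketch below assumes the common formulation that matches the list, in which the output gate also reads the new cell state through $V_o$. The plain-Python helpers (`matvec`, `vadd`, `lstm_step`) and the dictionary layout of the weights are illustrative assumptions, not the reviewed authors' code.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def vadd(*vectors):
    """Element-wise sum of equally long vectors."""
    return [sum(vals) for vals in zip(*vectors)]

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step; p maps the symbol names (Wi, Ui, bi, ..., Vo) to lists."""
    # Input gate: i_t = sigma(W_i x_t + U_i h_{t-1} + b_i)
    i_t = [sigmoid(z) for z in vadd(matvec(p["Wi"], x_t), matvec(p["Ui"], h_prev), p["bi"])]
    # Forget gate: f_t = sigma(W_f x_t + U_f h_{t-1} + b_f)
    f_t = [sigmoid(z) for z in vadd(matvec(p["Wf"], x_t), matvec(p["Uf"], h_prev), p["bf"])]
    # Candidate state: S_t = tanh(W_c x_t + U_c h_{t-1} + b_c)
    s_t = [math.tanh(z) for z in vadd(matvec(p["Wc"], x_t), matvec(p["Uc"], h_prev), p["bc"])]
    # Cell state (element-wise): C_t = i_t * S_t + f_t * C_{t-1}
    c_t = [i * s + f * c for i, s, f, c in zip(i_t, s_t, f_t, c_prev)]
    # Output gate, assumed to see C_t via V_o: o_t = sigma(W_o x_t + U_o h_{t-1} + V_o C_t + b_o)
    o_t = [sigmoid(z) for z in vadd(matvec(p["Wo"], x_t), matvec(p["Uo"], h_prev),
                                    matvec(p["Vo"], c_t), p["bo"])]
    # Output (hidden state): h_t = o_t * tanh(C_t)
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t
```

Iterating `lstm_step` over a sequence, feeding each returned $h_t$ and $C_t$ back in, gives the recurrent forward pass. Library layers such as the Keras `LSTM` layer omit the $V_o C_t$ term, so they implement a slightly different variant.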

3.4. GRU

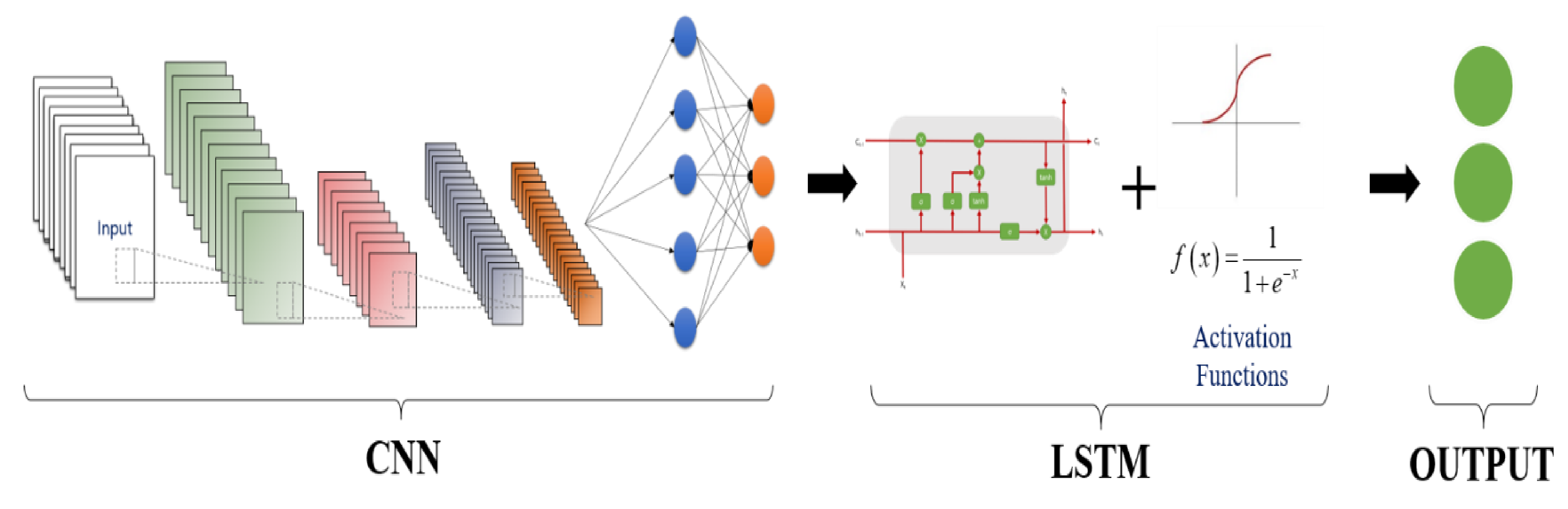

3.5. The Hybrid Model (CNN–LSTM)

4. Evaluation Metrics

5. Analysis of Past Studies

5.1. Accuracy

5.2. Types of Input Data

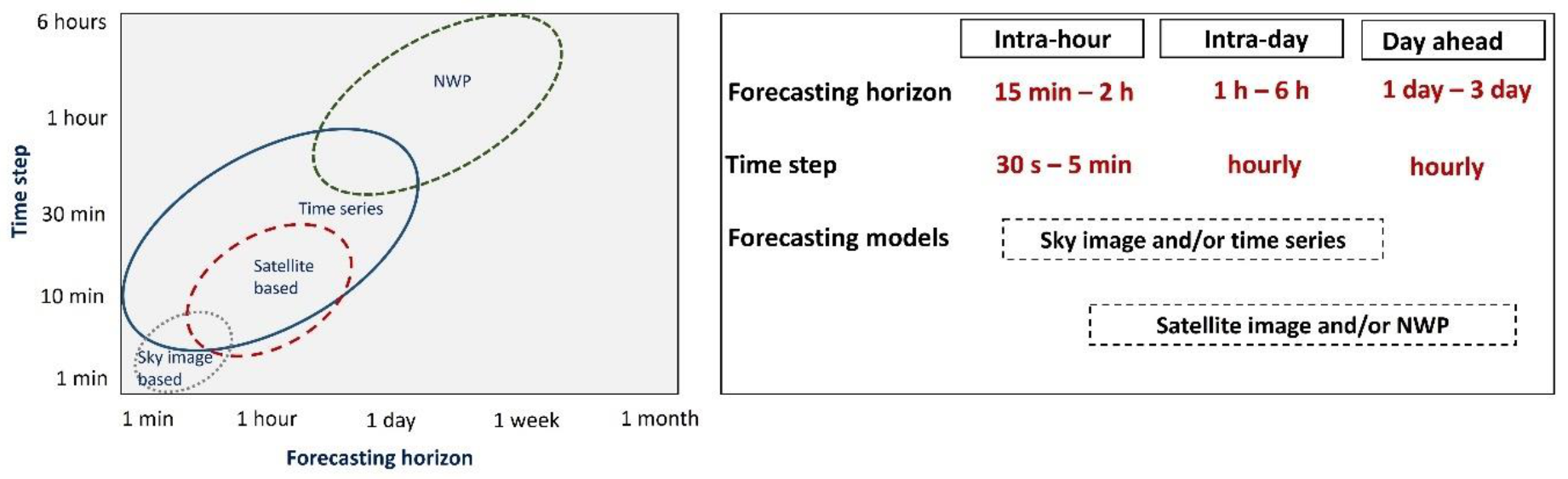

5.3. Forecast Horizon

- Very short-term forecasting (1 min to several minutes ahead).

- Short-term forecasting (1 h or several hours ahead to 1 day or 1 week ahead).

- Medium-term forecasting (1 month to 1 year ahead).

- Long-term forecasting (1 to 10 years ahead).
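
How a horizon from this list becomes a training example depends on each study's setup. One common framing, assumed here for illustration, is a sliding window: each input is a fixed number of past observations, and the target is the value `horizon` steps after the window ends. The function name `make_windows` and its parameters are hypothetical.

```python
def make_windows(series, lookback, horizon):
    """Pair each window of `lookback` past values with the value `horizon` steps after it."""
    if lookback < 1 or horizon < 1:
        raise ValueError("lookback and horizon must be positive")
    X, y = [], []
    # `end` is the index just past the last observed value in the window
    for end in range(lookback, len(series) - horizon + 1):
        X.append(series[end - lookback:end])
        y.append(series[end + horizon - 1])  # target lies `horizon` steps ahead
    return X, y
```

With 1-min data, `horizon=10` gives a very short-term (10 min ahead) task; with hourly data, `horizon=24` gives a day-ahead (short-term) task.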

5.4. Type of Season and Weather

5.5. Training Time

5.6. Comparison with Other Models

6. Conclusions

- Among the single models, most studies report that LSTM and GRU outperform RNN, because the gated internal memory of LSTM and GRU mitigates the vanishing gradient problem that affects the RNN.

- The hybrid model (CNN–LSTM) outperforms the three standalone models (RNN, LSTM, and GRU) in predicting solar irradiance: its error metrics are substantially lower than those of the standalone models. However, because it contains a CNN layer, the CNN–LSTM model requires more complex input data, such as images.

- Training time should also be considered when assessing model performance. This review finds that GRU is more computationally efficient than LSTM, because the average training time of LSTM is longer than that of GRU (Section 5.5). Therefore, considering both training time and forecasting accuracy, the GRU model can produce satisfactory results when forecasting PV power and solar irradiance.

- Comparisons between the deep learning models and other machine learning models (Section 5.6) show that the deep learning models performed better at predicting solar irradiance and PV power. Most studies report that the proposed models are more accurate than other models, such as ANN, FFNN, SVR, RFR, and MLP.
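
The vanishing-gradient argument in the first point can be made concrete with a toy calculation. The sketch below assumes a scalar RNN $h_t = \tanh(w h_{t-1} + u x_t)$ and, for the LSTM, follows only the direct cell-state path, where $\partial C_t / \partial C_{t-1} = f_t$; the indirect paths through the gates are ignored. The weights, inputs, and forget-gate value of 0.99 are arbitrary illustrative choices, not values from the reviewed studies.

```python
import math

def rnn_gradient(w, u, inputs, h0=0.0):
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + u * x_t)."""
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)  # chain rule: dh_t/dh_{t-1} = w * (1 - h_t^2)
    return grad

def lstm_cell_path_gradient(forget_gates):
    """d C_T / d C_0 along the direct cell-state path, where dC_t/dC_{t-1} = f_t."""
    grad = 1.0
    for f in forget_gates:
        grad *= f
    return grad

T = 50
print(rnn_gradient(w=0.9, u=0.5, inputs=[1.0] * T))  # shrinks towards zero
print(lstm_cell_path_gradient([0.99] * T))           # stays of order one
```

Because each RNN step multiplies the gradient by a factor below one, the signal from 50 steps back all but disappears, while an open forget gate lets it pass through the cell state largely intact.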

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial neural network |
| BPNN | Back propagation neural network |
| CNN | Convolutional neural network |
| DHI | Diffuse horizontal irradiance |
| FFNN | Feedforward neural network |
| GHI | Global horizontal irradiance |
| GRU | Gated recurrent unit |
| LSTM | Long short-term memory |
| MAE | Mean absolute error |
| MAPE | Mean absolute percentage error |
| MLP | Multilayer perceptron |
| PV | Photovoltaic |
| RBF | Radial basis function |
| ReLU | Rectified linear unit |
| RFR | Random forest regression |
| RMSE | Root-mean-square error |
| RNN | Recurrent neural network |
| rRMSE | Relative root-mean-square error |
| SVR | Support vector regression |

References

1. Mohanty, S.; Patra, P.K.; Sahoo, S.S.; Mohanty, A. Forecasting of solar energy with application for a growing economy like India: Survey and implication. Renew. Sustain. Energy Rev. 2017, 78, 539–553.

2. Das, U.K.; Tey, K.S.; Seyedmahmoudian, M.; Mekhilef, S.; Idris, M.Y.I.; Van Deventer, W.; Horan, B.; Stojcevski, A. Forecasting of photovoltaic power generation and model optimization: A review. Renew. Sustain. Energy Rev. 2018, 81, 912–928.

3. Husein, M.; Chung, I.-Y. Day-Ahead Solar Irradiance Forecasting for Microgrids Using a Long Short-Term Memory Recurrent Neural Network: A Deep Learning Approach. Energies 2019, 12, 1856.

4. Lappalainen, K.; Wang, G.C.; Kleissl, J. Estimation of the largest expected photovoltaic power ramp rates. Appl. Energy 2020, 278, 115636.

5. Sobri, S.; Koohi-Kamali, S.; Rahim, N.A. Solar photovoltaic generation forecasting methods: A review. Energy Convers. Manag. 2018, 156, 459–497.

6. Ng, A. Machine Learning Yearning: Technical Strategy for AI Engineers, in the Era of Deep Learning. 2018. Available online: https://www.deeplearning.ai/machine-learning-yearning (accessed on 30 November 2020).

7. Zang, H.; Liu, L.; Sun, L.; Cheng, L.; Wei, Z.; Sun, G. Short-term global horizontal irradiance forecasting based on a hybrid CNN-LSTM model with spatiotemporal correlations. Renew. Energy 2020, 160, 26–41.

8. Shuai, Y.; Notton, G.; Kalogirou, S.; Nivet, M.-L.; Paoli, C.; Motte, F.; Fouilloy, A. Machine learning methods for solar radiation forecasting: A review. Renew. Energy 2017, 105, 569–582.

9. Carrera, B.; Kim, K. Comparison Analysis of Machine Learning Techniques for Photovoltaic Prediction Using Weather Sensor Data. Sensors 2020, 20, 3129.

10. Caldas, M.; Alonso-Suárez, R. Very short-term solar irradiance forecast using all-sky imaging and real-time irradiance measurements. Renew. Energy 2019, 143, 1643–1658.

11. Miller, S.D.; Rogers, M.A.; Haynes, J.M.; Sengupta, M.; Heidinger, A.K. Short-term solar irradiance forecasting via satellite/model coupling. Sol. Energy 2018, 168, 102–117.

12. Xie, T.; Zhang, G.; Liu, H.; Liu, F.; Du, P. A Hybrid Forecasting Method for Solar Output Power Based on Variational Mode Decomposition, Deep Belief Networks and Auto-Regressive Moving Average. Appl. Sci. 2018, 8, 1901.

13. Wang, K.; Li, K.; Zhou, L.; Hu, Y.; Cheng, Z.; Liu, J.; Chen, C. Multiple convolutional neural networks for multivariate time series prediction. Neurocomputing 2019, 360, 107–119.

14. Chaouachi, A.; Kamel, R.M.; Nagasaka, K. Neural Network Ensemble-Based Solar Power Generation Short-Term Forecasting. J. Adv. Comput. Intell. Intell. Inform. 2010, 14, 69–75.

15. Yadav, A.K.; Chandel, S. Solar radiation prediction using Artificial Neural Network techniques: A review. Renew. Sustain. Energy Rev. 2014, 33, 772–781.

16. Mellit, A.; Kalogirou, S.A. Artificial intelligence techniques for photovoltaic applications: A review. Prog. Energy Combust. Sci. 2008, 34, 574–632.

17. Cheon, J.; Lee, J.T.; Kim, H.G.; Kang, Y.H.; Yun, C.Y.; Kim, C.K.; Kim, B.Y.; Kim, J.Y.; Park, Y.Y.; Jo, H.N.; et al. Trend Review of Solar Energy Forecasting Technique. J. Korean Sol. Energy Soc. 2019, 39, 41–54.

18. Hameed, W.I.; Sawadi, B.A.; Al-Kamil, S.J.; Al-Radhi, M.S.; Al-Yasir, Y.I.; Saleh, A.L.; Abd-Alhameed, R.A. Prediction of Solar Irradiance Based on Artificial Neural Networks. Inventions 2019, 4, 45.

19. Fan, C.; Wang, J.; Gang, W.; Li, S. Assessment of deep recurrent neural network-based strategies for short-term building energy predictions. Appl. Energy 2019, 236, 700–710.

20. Chollet, F.; Allaire, J. Deep Learning with R; Manning Publications: Shelter Island, NY, USA, 2018.

21. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

22. Liu, Y.; Guan, L.; Hou, C.; Han, H.; Liu, Z.; Sun, Y.; Zheng, M. Wind Power Short-Term Prediction Based on LSTM and Discrete Wavelet Transform. Appl. Sci. 2019, 9, 1108.

23. Kim, H.Y.; Won, C.H. Forecasting the volatility of stock price index: A hybrid model integrating LSTM with multiple GARCH-type models. Expert Syst. Appl. 2018, 103, 25–37.

24. Cho, K.; Van Merrienboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 14–21 October 2014; pp. 1724–1734.

25. Wang, F.; Yu, Y.; Zhang, Z.; Li, J.; Zhen, Z.; Li, K. Wavelet Decomposition and Convolutional LSTM Networks Based Improved Deep Learning Model for Solar Irradiance Forecasting. Appl. Sci. 2018, 8, 1286.

26. LeCun, Y.; Bengio, Y. Convolutional Networks for Images, Speech, and Time-Series. Handb. Brain Theory Neural Netw. 1995, 10, 2571–2575.

27. Rawat, W.; Wang, Z. Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review. Neural Comput. 2017, 29, 2352–2449.

28. Rehman, A.U.; Malik, A.K.; Raza, B.; Ali, W. A Hybrid CNN-LSTM Model for Improving Accuracy of Movie Reviews Sentiment Analysis. Multimed. Tools Appl. 2019, 78, 26597–26613.

29. He, Y.; Liu, Y.; Shao, S.; Zhao, X.; Liu, G.; Kong, X.; Liu, L. Application of CNN-LSTM in Gradual Changing Fault Diagnosis of Rod Pumping System. Math. Probl. Eng. 2019, 2019, 4203821.

30. Huang, C.-J.; Kuo, P.-H. A Deep CNN-LSTM Model for Particulate Matter (PM2.5) Forecasting in Smart Cities. Sensors 2018, 18, 2220.

31. Cao, K.; Kim, H.; Hwang, C.; Jung, H. CNN-LSTM coupled model for prediction of waterworks operation data. J. Inf. Process. Syst. 2018, 14, 1508–1520.

32. Swapna, G.; Soman, K.P.; Vinayakumar, R. Automated detection of diabetes using CNN and CNN-LSTM network and heart rate signals. Procedia Comput. Sci. 2018, 132, 1253–1262.

33. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 2015, 802–810.

34. Zhang, J.; Florita, A.; Hodge, B.-M.; Lu, S.; Hamann, H.F.; Banunarayanan, V.; Brockway, A.M. A suite of metrics for assessing the performance of solar power forecasting. Sol. Energy 2015, 111, 157–175.

35. Lave, M.; Hayes, W.; Pohl, A.; Hansen, C.W. Evaluation of Global Horizontal Irradiance to Plane-of-Array Irradiance Models at Locations across the United States. IEEE J. Photovolt. 2015, 5, 597–606.

36. Ghimire, S.; Deo, R.C.; Raj, N.; Mi, J. Deep solar radiation forecasting with convolutional neural network and long short-term memory network algorithms. Appl. Energy 2019, 253, 113541.

37. Niu, F.; O’Neill, Z. Recurrent Neural Network based Deep Learning for Solar Radiation Prediction. In Proceedings of the 15th IBPSA Conference, San Francisco, CA, USA, 7–9 August 2017; pp. 1890–1897.

38. Cao, S.; Cao, J. Forecast of solar irradiance using recurrent neural networks combined with wavelet analysis. Appl. Therm. Eng. 2005, 25, 161–172.

39. Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by LSTM. Energy 2018, 148, 461–468.

40. Aslam, M.; Lee, J.-M.; Kim, H.-S.; Lee, S.-J.; Hong, S. Deep Learning Models for Long-Term Solar Radiation Forecasting Considering Microgrid Installation: A Comparative Study. Energies 2019, 13, 147.

41. He, H.; Lu, N.; Jie, Y.; Chen, B.; Jiao, R. Probabilistic solar irradiance forecasting via a deep learning-based hybrid approach. IEEJ Trans. Electr. Electron. Eng. 2020, 15, 1604–1612.

42. Jeon, B.-K.; Kim, E.-J. Next-Day Prediction of Hourly Solar Irradiance Using Local Weather Forecasts and LSTM Trained with Non-Local Data. Energies 2020, 13, 5258.

43. Wojtkiewicz, J.; Hosseini, M.; Gottumukkala, R.; Chambers, T. Hour-Ahead Solar Irradiance Forecasting Using Multivariate Gated Recurrent Units. Energies 2019, 12, 4055.

44. Yu, Y.; Cao, J.; Zhu, J. An LSTM Short-Term Solar Irradiance Forecasting Under Complicated Weather Conditions. IEEE Access 2019, 7, 145651–145666.

45. Yan, K.; Shen, H.; Wang, L.; Zhou, H.; Xu, M.; Mo, Y. Short-Term Solar Irradiance Forecasting Based on a Hybrid Deep Learning Methodology. Information 2020, 11, 32.

46. Zhang, J.; Verschae, R.; Nobuhara, S.; LaLonde, J.-F. Deep photovoltaic nowcasting. Sol. Energy 2018, 176, 267–276.

47. Wang, K.; Qi, X.; Liu, H. Photovoltaic power forecasting based LSTM-Convolutional Network. Energy 2019, 189, 116225.

48. Li, P.; Zhou, K.; Lu, X.; Yang, S. A hybrid deep learning model for short-term PV power forecasting. Appl. Energy 2020, 259, 114216.

49. Suresh, V.; Janik, P.; Rezmer, J.; Leonowicz, Z. Forecasting Solar PV Output Using Convolutional Neural Networks with a Sliding Window Algorithm. Energies 2020, 13, 723.

50. Gensler, A.; Henze, J.; Sick, B.; Raabe, N. Deep Learning for solar power forecasting—An approach using AutoEncoder and LSTM Neural Networks. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 002858–002865.

51. Wang, Y.; Liao, W.; Chang, Y. Gated Recurrent Unit Network-Based Short-Term Photovoltaic Forecasting. Energies 2018, 11, 2163.

52. Abdel-Nasser, M.; Mahmoud, K. Accurate photovoltaic power forecasting models using deep LSTM-RNN. Neural Comput. Appl. 2019, 31, 2727–2740.

53. Lee, D.; Kim, K. Recurrent Neural Network-Based Hourly Prediction of Photovoltaic Power Output Using Meteorological Information. Energies 2019, 12, 215.

54. Lee, D.; Jeong, J.; Yoon, S.H.; Chae, Y.T. Improvement of Short-Term BIPV Power Predictions Using Feature Engineering and a Recurrent Neural Network. Energies 2019, 12, 3247.

55. Li, G.; Wang, H.; Zhang, S.; Xin, J.; Liu, H. Recurrent Neural Networks Based Photovoltaic Power Forecasting Approach. Energies 2019, 12, 2538.

56. Wang, K.; Qi, X.; Liu, H. A comparison of day-ahead photovoltaic power forecasting models based on deep learning neural network. Appl. Energy 2019, 251, 113315.

57. Wen, L.; Zhou, K.; Yang, S.; Lu, X. Optimal load dispatch of community microgrid with deep learning based solar power and load forecasting. Energy 2019, 171, 1053–1065.

58. Sharadga, H.; Hajimirza, S.; Balog, R.S. Time series forecasting of solar power generation for large-scale photovoltaic plants. Renew. Energy 2020, 150, 797–807.

59. Raza, M.Q.; Nadarajah, M.; Ekanayake, C. On recent advances in PV output power forecast. Sol. Energy 2016, 136, 125–144.

60. Gupta, S.; Zhang, W.; Wang, F. Model accuracy and runtime tradeoff in distributed deep learning: A systematic study. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016.

61. Pang, Z.; Niu, F.; O’Neill, Z. Solar radiation prediction using recurrent neural network and artificial neural network: A case study with comparisons. Renew. Energy 2020, 156, 279–289.

62. Alzahrani, A.; Shamsi, P.; Dagli, C.; Ferdowsi, M. Solar Irradiance Forecasting Using Deep Neural Networks. Procedia Comput. Sci. 2017, 114, 304–313.

63. Lee, W.; Kim, K.; Park, J.; Kim, J.; Kim, Y. Forecasting Solar Power Using Long-Short Term Memory and Convolutional Neural Networks. IEEE Access 2018, 6, 73068–73080.

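| Activation Function | Equation |
|---|---|
| Linear | $f(x) = x$ |
| ReLU | $f(x) = \max(0, x)$ |
| Leaky ReLU | $f(x) = \max(\alpha x, x)$, with a small constant $0 < \alpha < 1$ |
| Tanh | $f(x) = \tanh(x) = \dfrac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$ |
| Sigmoid | $f(x) = \sigma(x) = \dfrac{1}{1 + e^{-x}}$ |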
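
For reference, the activation functions in the table can be written as plain Python functions. This is a minimal sketch; the Leaky ReLU slope `alpha=0.01` is an assumed default, since the table does not fix its value.

```python
import math

def linear(x):
    return x

def relu(x):
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    # alpha: small slope for negative inputs (0.01 is an assumed default)
    return x if x > 0 else alpha * x

def tanh(x):
    return math.tanh(x)

def sigmoid(x):
    # Numerically stable logistic function: avoids overflow in exp for large |x|
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)
```

Sigmoid and tanh saturate for large $|x|$, which is why they are used for the LSTM and GRU gates, while ReLU-type functions are common in CNN layers.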

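| Evaluation Metric | Equation |
|---|---|
| Error | $e_i = \hat{y}_i - y_i$ |
| Mean absolute error (MAE) | $\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\lvert \hat{y}_i - y_i \rvert$ |
| Mean absolute percentage error (MAPE) | $\mathrm{MAPE} = \frac{100\%}{N}\sum_{i=1}^{N}\left\lvert \frac{\hat{y}_i - y_i}{y_i} \right\rvert$ |
| Mean bias error (MBE) | $\mathrm{MBE} = \frac{1}{N}\sum_{i=1}^{N}(\hat{y}_i - y_i)$ |
| Relative mean bias error (rMBE) | $\mathrm{rMBE} = \frac{\mathrm{MBE}}{\bar{y}} \times 100\%$ |
| Relative root-mean-square error (rRMSE) | $\mathrm{rRMSE} = \frac{\mathrm{RMSE}}{\bar{y}} \times 100\%$ |
| Root-mean-square error (RMSE) | $\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(\hat{y}_i - y_i)^2}$ |
| Forecasting skill | $s = 1 - \frac{\mathrm{RMSE}_{\text{model}}}{\mathrm{RMSE}_{\text{persistence}}}$ |

where $y_i$ is the observed value, $\hat{y}_i$ is the forecast value, $\bar{y}$ is the mean of the observations, and $N$ is the number of samples.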
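
A minimal sketch of these metrics, assuming the conventions most common in solar forecasting: error is forecast minus observation, rMBE and rRMSE are normalized by the mean observation, and forecasting skill compares the model RMSE with that of a reference forecast such as persistence. The function `evaluate` and its arguments are hypothetical names.

```python
import math

def evaluate(y_obs, y_pred, y_ref=None):
    """Return MAE, MBE, rMBE, RMSE, rRMSE, MAPE and, if y_ref is given, forecasting skill."""
    if len(y_obs) != len(y_pred) or not y_obs:
        raise ValueError("y_obs and y_pred must be non-empty and equally long")
    n = len(y_obs)
    errors = [p - o for p, o in zip(y_pred, y_obs)]  # e_i = forecast - observation
    mean_obs = sum(y_obs) / n
    mae = sum(abs(e) for e in errors) / n
    mbe = sum(errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mape = 100.0 * sum(abs(e / o) for e, o in zip(errors, y_obs)) / n
    out = {
        "MAE": mae,
        "MBE": mbe,
        "rMBE": 100.0 * mbe / mean_obs,
        "RMSE": rmse,
        "rRMSE": 100.0 * rmse / mean_obs,
        "MAPE": mape,
    }
    if y_ref is not None:
        # Skill relative to a reference forecast (e.g. persistence)
        rmse_ref = math.sqrt(sum((r - o) ** 2 for r, o in zip(y_ref, y_obs)) / n)
        out["skill"] = 1.0 - rmse / rmse_ref
    return out
```

MAPE is undefined whenever an observation is zero, as with night-time irradiance, so such samples are usually removed before it is computed.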

| Authors and Ref. | Forecast Horizon | Time Interval | Model | Input Parameters | Historical Data | RMSE (W/m²) |
|---|---|---|---|---|---|---|
| Cao et al. [38] | 1 day ahead | Hourly | RNN | Solar irradiance | 1995–2000 (2192 days) | 44.326 |
| Niu et al. [37] | 10 min ahead | Every 10 min | RNN | Global solar radiation, dry bulb temperature, relative humidity, dew point, wind speed | 22–29 May 2016 (7 days) | 118 |
|  | 30 min ahead |  |  |  |  | 121 |
|  | 1 h ahead |  |  |  |  | 195 |
| Qing et al. [39] | 1 day ahead | Hourly | LSTM | Temperature, dew point, humidity, visibility, wind speed | March 2011–August 2012 and January 2013–December 2013 (30 months) | 76.245 |
| Wang et al. [25] | 1 day ahead | Every 15 min | CNN–LSTM | Solar irradiance | 2008–2012 and 2014–2017 (3013 days) | 32.411 |
|  |  |  | LSTM |  |  | 33.294 |
| Aslam et al. [40] | 1 h ahead | Hourly | LSTM | Solar irradiance | 2007–2017 (10 years) | 108.888 |
|  |  |  | GRU |  |  | 99.722 |
|  |  |  | RNN |  |  | 105.277 |
|  | 1 day ahead |  | LSTM |  |  | 55.277 |
|  |  |  | GRU |  |  | 55.821 |
|  |  |  | RNN |  |  | 63.125 |
| Ghimire et al. [36] | 1 day ahead | Every 30 min | CNN–LSTM | Solar irradiance | January 2006–August 2018 | 8.189 |
| Husein et al. [3] | 1 day ahead | Hourly | LSTM | Temperature, humidity, wind speed, wind direction, precipitation, cloud cover | January 2003–December 2017 | 60.310 |
| He et al. [41] | 1 day ahead | Hourly | LSTM | Temperature, relative humidity, cloud cover, wind speed, pressure | 2006–2015 (10 years) | 62.540 |
| Jeon et al. [42] | 1 day ahead | Hourly | LSTM | Temperature, humidity, wind speed, sky cover, precipitation, irradiance | 1825 days | 30.210 |
| Wojtkiewicz et al. [43] | 1 h ahead | Hourly | GRU | GHI, solar zenith angle, relative humidity, air temperature | January 2004–December 2014 | 67.290 |
|  |  |  | LSTM |  |  | 66.570 |
| Yu et al. [44] | 1 h ahead | Hourly | LSTM | GHI, cloud type, dew point, temperature, precipitation, relative humidity, solar zenith angle, wind speed, wind direction | 2013–2017 | 41.370 |
| Yan et al. [45] | 5 min ahead | Every 1 min | LSTM | Solar irradiance | 2014 | 18.850 |
|  |  |  | GRU |  |  | 20.750 |
|  | 10 min ahead |  | LSTM |  |  | 14.200 |
|  |  |  | GRU |  |  | 15.200 |
|  | 20 min ahead |  | LSTM |  |  | 33.860 |
|  |  |  | GRU |  |  | 29.580 |
|  | 30 min ahead |  | LSTM |  |  | 58.000 |
|  |  |  | GRU |  |  | 55.290 |

| Authors and Ref. | Forecast Horizon | Time Interval | Model | Input Variables | Historical Data | RMSE (kW) | PV Size |
|---|---|---|---|---|---|---|---|
| Suresh et al. [49] | 1 h ahead | Hourly | CNN–LSTM | Irradiation, wind speed, temperature | March 2012–December 2018 | 0.053 | N/A |
|  | 1 day ahead |  |  |  |  | 0.051 |  |
|  | 1 week ahead |  |  |  |  | 0.045 |  |
| Gensler et al. [50] | 1 day ahead | Hourly | LSTM | PV power | 990 days | 0.044 | N/A |
| Wang et al. [51] | 1 h ahead | Hourly | GRU | Total column liquid water, total column ice water, surface pressure, relative humidity, total cloud cover, wind speed, temperature, total precipitation, total net solar radiation, surface solar radiation, surface thermal radiation | April 2012–May 2014 | 68.300 | N/A |
| Zhang et al. [46] | 1 min ahead | Every 1 min | LSTM | Sky images, PV power | 2006 | 0.139 | 10 × 6 m² |
| Abdel-Nasser et al. [52] | 1 h ahead | Hourly | LSTM | PV power | 12 months | 82.150 | N/A |
| Lee et al. [53] | 1 h ahead | Hourly | LSTM | PV power | June 2013–August 2016 (39 months) | 0.563 | N/A |
| Lee et al. [54] | 1 h ahead | Hourly | RNN | Temperature, relative humidity, wind speed, wind direction, sky index, precipitation, solar altitude | June 2017–August 2018 | 0.160 | N/A |
| Li et al. [55] | 15 min ahead | N/A | RNN | PV power | January 2015–January 2016 | 6970 | N/A |
|  |  |  | LSTM |  |  | 8700 |  |
|  | 30 min ahead |  | RNN |  |  | 15,290 |  |
|  |  |  | LSTM |  |  | 15,570 |  |
| Li et al. [48] | 1 h ahead | Every 5 min | LSTM | PV power | June 2014–June 2016 (743 days) | 0.885 | 199.16 m² |
|  |  |  | GRU |  |  | 0.847 |  |
|  |  |  | RNN |  |  | 0.888 |  |
| Wang et al. [56] | 5 min ahead | Every 5 min | LSTM | Current phase average, wind speed, temperature, relative humidity, GHI, DHI, wind direction | 2014–2017 (4 years) | 0.398 | 4 × 38.37 m² |
|  |  |  | CNN–LSTM |  |  | 0.343 |  |
| Wen et al. [57] | 1 h ahead | Hourly | LSTM | Temperature, humidity, wind speed, GHI, DHI | 1 January–1 February 2018 | 7.536 | N/A |
| Sharadga et al. [58] | 1 h ahead | Hourly | LSTM | PV power | January–October 2010 | 841 | N/A |
|  | 2 h ahead |  |  |  |  | 1102 |  |
|  | 3 h ahead |  |  |  |  | 1824 |  |

| Input Sequence (Years) | LSTM RMSE (kW) | LSTM MAE (kW) | CNN–LSTM RMSE (kW) | CNN–LSTM MAE (kW) |
|---|---|---|---|---|
| 0.5 | 1.244 | 0.654 | 1.161 | 0.559 |
| 1 | 1.393 | 0.616 | 1.434 | 0.628 |
| 1.5 | 1.533 | 0.599 | 1.248 | 0.529 |
| 2 | 1.320 | 0.457 | 0.941 | 0.397 |
| 2.5 | 0.945 | 0.389 | 0.426 | 0.198 |
| 3 | 0.398 | 0.181 | 0.343 | 0.126 |
| 3.5 | 1.150 | 0.455 | 0.991 | 0.384 |
| 4 | 1.465 | 0.565 | 0.886 | 0.405 |
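
Reading the table row by row is easier with a small script. The sketch below, using only the values shown, finds the input length with the lowest RMSE for each model and lists the lengths at which CNN–LSTM does worse than LSTM. Both models do best with a 3-year input, and CNN–LSTM has the lower RMSE at every length except 1 year.

```python
# (input sequence in years, LSTM RMSE, CNN-LSTM RMSE), values in kW from the table above
rows = [
    (0.5, 1.244, 1.161),
    (1.0, 1.393, 1.434),
    (1.5, 1.533, 1.248),
    (2.0, 1.320, 0.941),
    (2.5, 0.945, 0.426),
    (3.0, 0.398, 0.343),
    (3.5, 1.150, 0.991),
    (4.0, 1.465, 0.886),
]

best_lstm = min(rows, key=lambda r: r[1])[0]    # input length with the lowest LSTM RMSE
best_hybrid = min(rows, key=lambda r: r[2])[0]  # input length with the lowest CNN-LSTM RMSE
hybrid_worse = [years for years, lstm, hybrid in rows if hybrid > lstm]

# Relative RMSE reduction of CNN-LSTM over LSTM at each input length
reduction = {years: (lstm - hybrid) / lstm for years, lstm, hybrid in rows}

print(best_lstm, best_hybrid, hybrid_worse)
print(f"Reduction at 3 years: {reduction[3.0]:.1%}")
```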

| Forecast Horizon (min) | Model | Spring RMSE (W/m²) | Spring MAE (W/m²) | Summer RMSE (W/m²) | Summer MAE (W/m²) | Autumn RMSE (W/m²) | Autumn MAE (W/m²) | Winter RMSE (W/m²) | Winter MAE (W/m²) |
|---|---|---|---|---|---|---|---|---|---|
| 5 | LSTM | 36.67 | 26.95 | 89.91 | 59.20 | 18.85 | 13.13 | 44.24 | 21.58 |
|  | GRU | 36.82 | 27.18 | 89.77 | 59.70 | 20.75 | 16.03 | 43.66 | 23.60 |
| 10 | LSTM | 41.02 | 29.65 | 42.23 | 32.96 | 53.01 | 33.62 | 14.20 | 11.09 |
|  | GRU | 44.71 | 34.42 | 41.08 | 30.92 | 55.00 | 38.35 | 15.20 | 12.83 |
| 20 | LSTM | 56.22 | 49.09 | 46.31 | 40.58 | 33.86 | 28.11 | 43.54 | 39.10 |
|  | GRU | 45.23 | 36.78 | 53.97 | 47.44 | 29.58 | 24.55 | 41.03 | 37.09 |
| 30 | LSTM | 58.77 | 47.54 | 58.00 | 47.82 | 81.75 | 59.08 | 61.68 | 52.29 |
|  | GRU | 60.42 | 49.65 | 55.29 | 50.52 | 82.12 | 60.71 | 62.33 | 54.13 |

| Model | RMSE, 1 Day (W/m²) | RMSE, 1 Week (W/m²) | RMSE, 2 Weeks (W/m²) | RMSE, 1 Month (W/m²) | MAE, 1 Day (W/m²) | MAE, 1 Week (W/m²) | MAE, 2 Weeks (W/m²) | MAE, 1 Month (W/m²) |
|---|---|---|---|---|---|---|---|---|
| CNN–LSTM | 8.189 | 16.011 | 14.295 | 32.872 | 6.666 | 9.804 | 8.238 | 13.131 |
| LSTM | 21.055 | 18.879 | 16.327 | 33.387 | 18.339 | 11.275 | 10.750 | 14.307 |
| RNN | 20.177 | 18.113 | 15.494 | 41.511 | 18.206 | 11.387 | 10.492 | 26.858 |
| GRU | 14.289 | 21.464 | 19.207 | 57.589 | 11.320 | 15.658 | 14.005 | 39.716 |
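
The claim that the hybrid model beats the standalone models can be checked against this table by comparing CNN–LSTM with the best standalone model at each horizon. The sketch uses only the RMSE values above; the advantage is largest at 1 day ahead and shrinks to a small margin at 1 month ahead.

```python
horizons = ["1 day", "1 week", "2 weeks", "1 month"]
rmse = {  # W/m², copied from the table above
    "CNN-LSTM": [8.189, 16.011, 14.295, 32.872],
    "LSTM": [21.055, 18.879, 16.327, 33.387],
    "RNN": [20.177, 18.113, 15.494, 41.511],
    "GRU": [14.289, 21.464, 19.207, 57.589],
}

results = []
for k, horizon in enumerate(horizons):
    standalone = [m for m in rmse if m != "CNN-LSTM"]
    best = min(standalone, key=lambda m: rmse[m][k])        # best single model at this horizon
    gain = 1.0 - rmse["CNN-LSTM"][k] / rmse[best][k]        # relative RMSE reduction
    results.append((horizon, best, gain))

for horizon, best, gain in results:
    print(f"{horizon}: CNN-LSTM vs {best}: {gain:.1%} lower RMSE")
```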

| Season | Type of Weather | LSTM (kW) | GRU (kW) | RNN (kW) |
|---|---|---|---|---|
| Winter | Sunny | 1.2541 | 1.2399 | 1.2468 |
|  | Cloudy | 1.1279 | 0.2206 | 0.2867 |
|  | Rainy | 2.2336 | 2.0876 | 2.1223 |
| Spring | Sunny | 0.1643 | 0.2456 | 0.3431 |
|  | Cloudy | 0.2759 | 0.6452 | 0.4222 |
|  | Rainy | 0.8107 | 1.0036 | 0.8604 |
| Summer | Sunny | 0.9701 | 1.0748 | 0.8514 |
|  | Cloudy | 0.8398 | 0.9323 | 0.8812 |
|  | Rainy | 0.3009 | 0.5805 | 0.4993 |
| Autumn | Sunny | 0.7395 | 0.8029 | 0.7778 |
|  | Cloudy | 1.0540 | 1.2110 | 1.1365 |
|  | Rainy | 2.4216 | 2.3687 | 2.4275 |
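
Counting which model has the lowest error in each of the twelve season and weather combinations gives a compact summary of this table. In the sketch below, using only the values shown, LSTM is lowest in seven cases, GRU in four, and RNN in one (summer, sunny).

```python
# Error (kW) for (season, weather): (LSTM, GRU, RNN), copied from the table above
table = {
    ("Winter", "Sunny"): (1.2541, 1.2399, 1.2468),
    ("Winter", "Cloudy"): (1.1279, 0.2206, 0.2867),
    ("Winter", "Rainy"): (2.2336, 2.0876, 2.1223),
    ("Spring", "Sunny"): (0.1643, 0.2456, 0.3431),
    ("Spring", "Cloudy"): (0.2759, 0.6452, 0.4222),
    ("Spring", "Rainy"): (0.8107, 1.0036, 0.8604),
    ("Summer", "Sunny"): (0.9701, 1.0748, 0.8514),
    ("Summer", "Cloudy"): (0.8398, 0.9323, 0.8812),
    ("Summer", "Rainy"): (0.3009, 0.5805, 0.4993),
    ("Autumn", "Sunny"): (0.7395, 0.8029, 0.7778),
    ("Autumn", "Cloudy"): (1.0540, 1.2110, 1.1365),
    ("Autumn", "Rainy"): (2.4216, 2.3687, 2.4275),
}
models = ("LSTM", "GRU", "RNN")

wins = {m: 0 for m in models}
for errors in table.values():
    best = min(range(len(models)), key=lambda k: errors[k])  # index of the lowest error
    wins[models[best]] += 1

print(wins)
```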

| Model | Best Case (s) | Worst Case (s) | Average Case (s) |
|---|---|---|---|
| LSTM | 393.01 | 400.57 | 396.27 |
| GRU | 354.92 | 379.57 | 365.40 |

| Region | Year | Hourly, LSTM (s) | Hourly, GRU (s) | Daily, LSTM (s) | Daily, GRU (s) |
|---|---|---|---|---|---|
| Seoul | 2017 | 1251.23 | 1004.15 | 88.35 | 72.56 |
|  | 2016 | 1060.82 | 832.63 | 77.98 | 64.12 |
| Busan | 2017 | 1269.21 | 1028.43 | 90.42 | 75.44 |
|  | 2016 | 1023.27 | 830.54 | 75.99 | 64.29 |

| Metric | LSTM | CNN–LSTM |
|---|---|---|
| Training time (s) | 70.490 | 983.701 |
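
The three training-time tables can be summarized as relative costs. The sketch below computes the average saving of GRU over LSTM, the GRU/LSTM time ratio for each region and resolution, and how many times longer CNN–LSTM takes than LSTM. The tables come from different studies, so the ratios are meaningful within a table but should not be compared across tables.

```python
# Average training time (s) from the best/worst/average-case table
lstm_avg, gru_avg = 396.27, 365.40
avg_saving = (lstm_avg - gru_avg) / lstm_avg

# (LSTM s, GRU s) per region, year, and resolution
region_times = {
    ("Seoul", 2017, "hourly"): (1251.23, 1004.15),
    ("Seoul", 2017, "daily"): (88.35, 72.56),
    ("Seoul", 2016, "hourly"): (1060.82, 832.63),
    ("Seoul", 2016, "daily"): (77.98, 64.12),
    ("Busan", 2017, "hourly"): (1269.21, 1028.43),
    ("Busan", 2017, "daily"): (90.42, 75.44),
    ("Busan", 2016, "hourly"): (1023.27, 830.54),
    ("Busan", 2016, "daily"): (75.99, 64.29),
}
gru_to_lstm = {key: gru / lstm for key, (lstm, gru) in region_times.items()}

# CNN-LSTM vs LSTM training time from the last table
hybrid_to_lstm = 983.701 / 70.490

print(f"GRU average saving: {avg_saving:.1%}")
print(f"GRU/LSTM ratio: {min(gru_to_lstm.values()):.2f} to {max(gru_to_lstm.values()):.2f}")
print(f"CNN-LSTM takes {hybrid_to_lstm:.1f} times as long as LSTM")
```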

| Forecast Horizon (min) | ANN RMSE (W/m²) | RNN RMSE (W/m²) |
|---|---|---|
| 10 | 55.7 | 41.2 |
| 30 | 63.3 | 53.3 |
| 60 | 170.9 | 58.1 |

| Metric | FFNN | SVR | LSTM |
|---|---|---|---|
| RMSE (W/m²) | 0.160 | 0.110 | 0.086 |

| Model | RMSE with Weather Data (kW) | RMSE without Weather Data (kW) |
|---|---|---|
| RFR | 0.178 | 0.191 |
| SVR | 0.122 | 0.126 |
| CNN–LSTM | 0.098 | 0.140 |

| Model | Winter RMSE (kW) | Spring RMSE (kW) | Summer RMSE (kW) | Autumn RMSE (kW) | Average RMSE (kW) |
|---|---|---|---|---|---|
| GRU | 847 | 917 | 1238 | 1074 | 1035 |
| MLP | 916 | 1069 | 1263 | 1061 | 1086 |
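
The comparison tables in this subsection can be summarized as relative RMSE reductions of each deep learning model over its baseline (for the FFNN/SVR/LSTM table, the better baseline, SVR, is used). In the sketch below, a negative value means the deep learning model was worse; this happens for CNN–LSTM against SVR without weather data and for GRU against MLP in autumn, so the advantage is not universal.

```python
def improvement(baseline, model):
    """Relative RMSE reduction of `model` versus `baseline` (negative means worse)."""
    return (baseline - model) / baseline

# (baseline RMSE, deep learning model RMSE), copied from the tables above
comparisons = {
    "RNN vs ANN, 10 min": (55.7, 41.2),
    "RNN vs ANN, 30 min": (63.3, 53.3),
    "RNN vs ANN, 60 min": (170.9, 58.1),
    "LSTM vs SVR": (0.110, 0.086),
    "CNN-LSTM vs SVR, with weather data": (0.122, 0.098),
    "CNN-LSTM vs SVR, without weather data": (0.126, 0.140),
    "GRU vs MLP, average": (1086, 1035),
    "GRU vs MLP, autumn": (1061, 1074),
}

for name, (base, model) in comparisons.items():
    print(f"{name}: {improvement(base, model):+.1%}")
```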

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rajagukguk, R.A.; Ramadhan, R.A.A.; Lee, H.-J. A Review on Deep Learning Models for Forecasting Time Series Data of Solar Irradiance and Photovoltaic Power. Energies 2020, 13, 6623. https://doi.org/10.3390/en13246623
