1. Introduction

To date, four international energy crises have occurred: The oil crisis of 1973, caused by the Middle East War; the Iranian oil crisis of 1979, caused by the Islamic Revolution; the 1990 oil crisis, caused by the Gulf War; and the energy crisis in 2008 caused by the financial crisis. In addition to being a subsystem of the national economy, the energy system incorporates the petroleum, coal, natural gas, and power economy. Birol [

1] showed that the 2008 global financial crisis caused the energy demand in most countries to decrease, ultimately leading to the energy crisis. On the other hand, imbalances between energy supply and demand are still the root cause of rising energy prices, and high energy prices were an underlying cause of the financial crisis.

Compared with fossil fuel oil and coal, natural gas is a more environment friendly and green clean energy. As a result, natural gas has gained an increasingly prominent role in the global energy market. As we know, people always use the settlement prices at the Henry Hub as benchmarks for the entire North American natural gas market and parts of the global liquid natural gas (LNG) market. According to the International Energy Agency (IEA), natural gas had a remarkable year in 2018, with a 4.6% increase in consumption, accounting for nearly half of the increase in global energy demand (

https://www.iea.org/fuels-and-technologies/gas). Accurate predictions of crises in the natural gas market make it possible for investors and policymakers to minimize losses.

Figure 1 shows some of the major events that affected US natural gas prices between 1994 and 2019.

An early warning system (EWS) is a chain of information communication systems comprising sensors, event detection, and decision subsystems. By employing such systems to detect crises before they occur, we can reduce false alarms [

2]. An EWS must be able to send a clear signal about whether an economic crisis is impending to complement the expert judgment of decision-makers. Edison [

3] developed an operational EWS that successfully detects financial crises based on a signal approach [

4,

5]. Ideally, an EWS should not ignore any crisis events but should minimize false alarms. However, the cost of not sending a global crisis signal is much higher than the cost of an incorrect alert.

Machine learning refers to a scientific model in which computer systems rely on patterns and inference to perform specific tasks without applying explicit instructions. Machine learning is becoming increasingly useful in finance. As banks and other financial institutions work to strengthen security, streamline processes, and forecast crises, machine learning is becoming their technology of choice. Lin et al. [

6] conducted a survey on machine learning in financial crisis prediction, using a machine learning algorithm to investigate the achievements and limitations of cy-prediction and credit-scoring models.

In this study, we propose a dynamic moving window and expanding window methodology that incorporates extreme gradient boosting (XGboost), a support vector machine (SVM), a logistic regression (LogR), a random forest (RF), and a neural networks (NNs) as machine learning methods to predict US natural gas crises. To the best of our knowledge, this study is the first to combine dynamic methodologies with machine learning to predict such crises. The main contributions of this study are as follows:

We employ dynamic methodologies to define a crisis, thus preventing extreme crisis events.

We use advanced machine learning techniques, including XGboost, neural networks, and other traditional machine learning methods.

We combine dynamic methods with machine learning techniques to increase the prediction accuracy of the model.

We employ novel model evaluation methodologies, and use daily data for the period 1994 to 2019. The long period ensures enough data for the machine learning model, and allows us to check whether US natural gas crises are persistent and clustered.

Our main conclusion is that the combination of XGboost and the dynamic moving window approach performs well in predicting US natural gas crises, particularly in the partial variable case with a moving window. In addition, LogR in the moving window method and NN in the expanding window method do not perform badly in a partial variables situation whereas SVM in the moving window and LogR in the expanding window performance do not perform badly in the all variables situation.

The remainder of the paper proceeds as follows.

Section 2 reviews relevant empirical works. In

Section 3, we present the data and develop the model. In

Section 4, we provide a brief description and technical analysis of the machine learning models employed in this study. In

Section 5, we introduce novel model evaluation approaches. Here, we also present the empirical results for each machine learning model.

Section 6 concludes the paper. The

Appendix A provides the XGboost variable importance plots, the parameter tuning for the postulated SVM plots, the estimated LogR model results, and the RF variable importance plots.

2. Literature Review

Recent financial crises have highlighted their devastating effects on the economy, society, and politics. Therefore, being able to predict crises and disasters, particularly in areas, such as banking, finance, business, medical, and others, means we can implement appropriate measures in advance, and thus minimize losses. Chen et al. [

7] considered value-at-risk (VaR) forecasting using a computational Bayesian framework for a portfolio of four Asia-Pacific stock markets, showing that the Autoregressive Conditional Heteroscedasticity (GARCH) model outperforms stochastic volatility models before and after the crises. Bagheri et al. [

8] proposed a new hybrid intelligence approach to predict financial periods for the foreign exchange market, finding that it performs well for price forecasting. Niemira and Saaty [

9] developed an imbalanced crisis turning point model based on the analytical network process framework to forecast the probability of crises, finding this method to be more flexible and comprehensive than traditional methods. Chiang et al. [

10] showed that traders could generate higher returns by employing their proposed adaptive decision support system model.

An energy crisis refers to an energy shortage caused by rising energy prices, and energy and financial crises have mutual effects. Gorucu [

11] employed an artificial neural network (ANN) to evaluate and predict natural gas consumption in Ankara, Turkey, examining the factors affecting the output, and then training the ANN to determine the optimal parameters to predict gas consumption, achieving a good performance. Xie et al. [

12] used an SVM to forecast crude oil prices, finding that it outperforms both auto regressive integrated moving average and back-propagation neural network models.

Regarding crisis prediction, there are currently three international financial crisis early warning models. First, the discrete choice (probit or logit) model, developed by Frankel and Rose [

13], has been used to model financial contagion by Bae et al. [

14]. Second, Sachs et al. [

15] developed an STV cross-section regression model, finding that the depreciation of the real exchange rate, growth of domestic private loans, and international reserves/M2 (a calculation of the money supply that includes cash and checking deposits as well as near money) are important indicators of whether a country will experience a financial crisis. Third, Kaminsky and Reinhart [

5] developed a signaling approach-KLR model that monitors the evolution of several indicators that approach to show unusual behavior in periods preceding a crisis. Furthermore, Knedlik and Schweinitz [

16] investigated debt crises in Europe by applying a signal approach, finding that a broad composite indicator has the highest predictive power. Numerous works have since extended these three models, thus improving the early warning system. Based on the multinomial logit model, Bussiere and Fratzscher [

17] developed a new early warning system (EWS) model for financial crises. Their results show that employing the proposed EWS substantially improves the ability to forecast financial crises, and that their model would have predicted crises correctly in emerging markets between 1993 and 2001. Xu et al. [

18] combined an RF and a wavelet transform in a new EWS to forecast currency crises, finding that real exchange rate appreciation and overvaluation can be measured over a period of 16 to 32 months. Saleh et al. [

19] applied a new EWS model for systemic financial fragility and near crises in The Organisation for Economic Co-operation and Development (OECD) countries over a 27-year period, finding that the model has significant implications for financial stability and regulation.

Researchers are increasingly combining EWSs with machine learning techniques to predict financial crises. Ahn et al. [

20] extended the EWS classification method to a traditional-type crisis, using an SVM to forecast financial crises. As such, they proved that an SVM is an efficient classifier. Sevim et al. [

21] developed three EWSs, based on an ANN, a decision tree, and a LogR model, respectively, to predict currency crises, finding that the decision tree model can predict crises up to a year ahead with approximately 95% accuracy. In order to predict currency crises, Lin et al. [

22] developed a hybrid causal model, which is integrated by the learning ability of a neural network with the inference mechanism of a fuzzy logit model, showing that this approach is promising in terms of preventing currency crises. Finally, Chatzis et al. [

23] employed a classification tree, SVM, RF, neural network, XGboost, and deep neural network to forecast stock market crises. They show that deep neural networks increase the classification accuracy significantly, and propose an efficient global systemic early warning tool.

Few studies have used machine learning methods with moving windows to predict financial crises. However, studies have focused on other classification methods. Bolbol et al. [

24] combined a moving window method with an SVM to classify Global Positioning System (GPS) data into different transportation modes. Chou and Ngo [

25] combined the time-series sliding window with the machine learning system to predict real-time building energy-consumption data, showing that the model can potentially predict building energy consumption using big data.

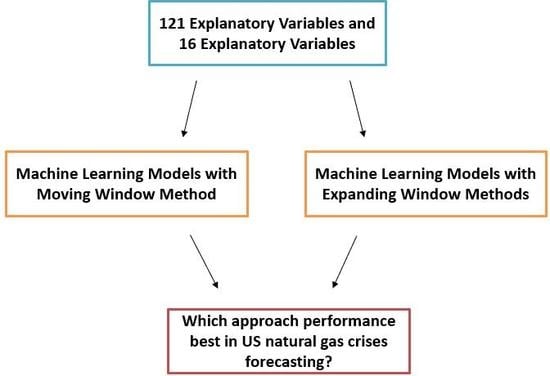

From the aforementioned literature, we can see that the methodologies proposed here offer clear innovations and advantages. Thus, we compared the predictive ability of the following models: XGboost, a SVM, a LogR, a RF, and a NN. In addition, we used a moving window and an expanding window approach. Furthermore, we investigated the effects of explanatory variables on the models’ predictive ability by splitting the variables into two categories—natural gas-related predictors (16 predictors), and all predictors (121 predictors)—to compare their prediction accuracy separately. To the best of our knowledge, our study is the first to analyze US natural gas market crises by combining machine learning techniques with dynamic forecasting methods (the moving window and expanding window method).

3. Data

3.1. Data Collection

We employed daily data to model US natural gas market crises, focusing on the US crude oil, stock, commodity, and agriculture markets, where the transmission of extreme events is stronger. In order to obtain an adequate daily data sample for machine learning, we collected data from many different sources and databases (see

Table 1).

The raw data included the most sensitive market variables from the energy, stock, commodity, and agriculture markets. To remove the effect of the exchange rate on our results, we consolidated the currency units of the variables into a dollar currency unit. Then, because natural gas has the shortest period of daily data (8 June 1990–18 December 2019), we focused on this period for all variables. Finally, we obtained a raw daily price data set with 7152 records. This data set enabled us to model contagion dynamics and financial market interdependence across natural gas-related variables and over time. In order to facilitate the reading of this paper, we named the professional items as exhibited in

Table 2.

3.2. Data Processing

In

Section 3.1, we described the raw data and the prices of the variables. Our goal was to predict next-day crisis events in the US natural gas market using machine learning. Therefore, we needed to define a “crisis event” for natural gas. We also wanted to apply the moving window and expanding window methods in our model. We illustrate these two methods below (see

Table 3) [

26].

We set a 1000-day window, covering five years of daily data. First, we employed the logarithmic difference method to compute the daily return of natural gas. Then, in the daily data set, we calculated the initial empirical distribution of the return on 22 September 1994, based on the daily returns of the first 1000 observations (covering the period 11 June 1990–21 September 1994, which is a 1000-day window). Next, for the moving window method, for each subsequent record, we fixed the number of days (1000 days in the daily data set), and moved the window forward by one day. Then, we recalculated the empirical distribution of the returns based on the new period. Thus, for the last observation in the daily data (18 December 2019), the empirical distribution of the returns was based on the period 23 November 2015 to 17 December 2019 (i.e., 1000 days). In contrast, for the expanding window method, for each subsequent record, we increased the size of the window by one day. Then, we again recalculated the empirical distribution of the returns, including the new observation. Hence, for the last observation in the daily data (18 December 2019), the empirical distribution of the returns was based on the period 11 June 1990, to 17 December 2019 (i.e., 7150 days).

Thus, we obtained the preliminary processed data from which to calculate the “crisis events.” Bussiere and Fratzscher [

17] developed an EWS model based on a multinomial logit model to forecast financial crises, using

to define a currency crisis for each country

i and period

t. Patel and Sarkar [

27], Coudert and Gex [

28], and Li et al. [

29] used

, defined as the ratio of an index level at month

t to the maximum index level for the period up to month

t. Here, we employed the return of natural gas, defined as follows:

where

is the price of natural gas on day

t. Next, as in Bussiere and Fratzscher [

17], we defined

as a crisis event when the natural gas return

is less than two standard deviations below its mean value:

where

and

were calculated based on

Table 3. The former is the mean value calculated by the moving window or expanding window on day

t. Similarly, the latter denotes the standard deviation calculated by the corresponding methods. We plotted the crises processing procedure, as shown in

Figure 2. After processing these data, we obtained two predicted/dependent variables in the daily data set. These constitute binary indicators that take the value of 1 when a crisis occurs in the US natural gas market on the following day, and 0 otherwise. Based on these formulae,

Table 4 and

Figure 3 summarize the crisis events between 22 September 1994 and 18 December 2019.

We have outlined the exploratory binary variables in the daily data set using the moving window and expanding window methods. Next, we constructed the independent variables for our machine learning model. In particular, to capture the subtle dynamics and correlations, we performed the following transformation:

We calculated the average number of crises during the previous five working days, based on the total number of crisis events.

We used the logarithmic difference method to derive the continuously compounded daily return for the prices of natural gas, crude oil, bond yield, gold, canola, cocoa, sugar, rice, corn, and wheat.

We calculated the lagged variables for each crisis indicator, for one to five days. Specifically, we considered these lags (lag1 to lag5) for the crisis itself, the returns on all variables except the VIX index, and the returns on all variables including the VIX index.

After the above processing, we obtained two summary tables of daily data for the period 28 February 1994 to 18 December 2019, yielding 6146 records and 121 predictors. The first table determines crises using the moving window method, and the second uses the expanding window method.

4. Model Development

As already discussed, we combined the moving window method, expanding window method, and machine learning to predict crisis events. In addition, we used a moving window and expanding window in the data processing step. Therefore, we employed corresponding methods to develop our model using machine learning. By doing so, we derived dynamic results and improved the accuracy of the model prediction [

25]. To maintain the initial number of windows, we employed 1000 days as the initial number of days for the moving window (fixed) and the expanding window (increasing by one day). In order to combine machine learning with the two window methods, we inserted loop statements into the machine learning model to perform 5146 iterations. For both methods, we set all data in the window as the training data set, and set the day following the final day in the training data set as the test data set. For example, in the final loop calculation, the training data set for the moving window method covers the period 19 November 2015 to 17 December 2019 (1000 days), and the test data is that of 18 December 2019; for the expanding window method, the training data set covers the period 29 September 1994 to 17 December 2019 (6145 days), and the test data is that of 18 December 2019. Therefore, we derived a confusion matrix for crisis prediction once the loops were complete.

Next, we chose our methods following Chatzis et al. [

23], deciding on the following: XGboost [

30], SVM [

31], LogR [

32], RF [

33,

34,

35], and NN [

36]. We split the explanatory variables into two types to compare the predictions: A natural gas-related variable (the average number of crises during the previous five working days, lags 1 to 5 of crises, and lags 1 to 5 of the prices and returns of natural gas, yielding 16 predictors), and all variables (121 predictors, including natural gas-related variables). In the following section, we describe the development process and the parameter tuning for each model.

4.1. Extreme Gradient Boosting

XGboost is not only an improvement algorithm on the boosting algorithm based on gradient-boosted decision trees, and the internal decision tree uses a regression tree, but also an efficient and prolongable development of the gradient boosting framework [

37]. It supports multiple kinds of objective functions, like regression, classification, ranking, and so on. Furthermore, in terms of model accuracy, efficiency, and scalability, XGboost outperforms RFs and NNs [

23]. In our study, we employed the XGboost R package developed by Chen and He [

30].

In order to increase the accuracy of the model prediction, we employed a (five-fold) cross-validation method to debug a series of vital hyperparameters. In order to build the classification tree, we determined the maximum depth of the tree, minimum number of observations in a terminal node, and size of the subsampling for both window methods. We also tuned the hyperparameter , which controls the minimum reduction in the loss function required to grow a new node in a tree, and reduces overfitting the model. In addition, we tuned (the L1 regulation term on the weights) and (the L2 regulation term on weights). Because of the binary nature of our dependent variable, we applied the LogR objective function for the binary classification to train the model. We also calculated the area under the curve (AUC) of our model, which is equal to the probability that the classifier ranks randomly selected positive instances higher than randomly selected negative instances. In our model training, when we employed the moving window method, the AUC values were above 0.8, regardless of whether we used 16 natural gas-related explanatory variables or 121 explanatory variables, indicating a very good performance. However, we derived an AUC of only 0.67 when applying the expanding window method, indicating a poor performance for the combination of this and the XGboost method.

Because we used the moving window and expanding window methods in our study, we cannot show every XGboost variable importance plot, because there were more than 5000 plots after the loop calculation. Therefore, we present the plots for the last step of the loop in the

Appendix A. Here,

Figure A1 and

Figure A2 show that lag5 of the natural gas price is the most important variable in the moving window method. However, lag2 of the natural gas return is the most important in the expanding window method.

4.2. Support Vector Machines

Erdogan [

38] applied an SVM [

31] to analyze the financial ratio in bankruptcy, finding that the SVM with a Gaussian kernel is capable of predicting bankruptcy crises. This is because the SVM has a regularization parameter, which controls overfitting. Furthermore, using an appropriate kernel function, we can solve many complex problems. An SVM scales relatively well to high-dimensional data. Therefore, it is widely used in financial crisis prediction, handwriting recognition, and so on.

In our study, we used sigmoid, polynomial, radial basis function, and linear kernels to evaluate a soft-margin SVM, finding that the sigmoid function performs optimally. In order to select the optimal hyperparameter (e.g., , the free parameter of the Gaussian radial basis function, and c, the cost hyperparameter of the SVM, which involves a trading error penalty for stability), we applied cross-validation. We selected the optimal values of these hyperparameters using the grid-search methodology. We implemented the SVM model using the e1071 package in R, employing the tuning function in the e1071 package to tune the grid-search hyperparameter.

Unlike the XGboost methodology, we could tune the parameters

and

c of the daily data set before executing the loop calculation. The plots of the parameter tuning for the SVM model are provided in the

Appendix A.

Figure A3 and

Figure A4 show the hyperparameters

(

x-axis) and

c (

y-axis) using the grid-search method in the SVM model. The parameter

c is the cost of a misclassification. As shown, a large

c yields a low bias and a high variance, because it penalizes the cost of a misclassification. In contrast, a small

c yields a high bias and a low variance. The goal is to find a balance between “not too strict” and “not too loose”. For

, the parameter of the Gaussian kernel, low values mean “far” and high values mean “close”. When

is too small, the model will be constrained and will not be able to determine the “shape” of the data. In contrast, an excessively large

will lead to the radius of the area of influence of the support vectors only including the support vector itself. In general, for an SVM, a high value of gamma leads to greater accuracy but biased results, and vice versa. Similarly, a large value of the cost parameter (

c) indicates poor accuracy but low bias, and vice versa.

4.3. Logistic Regression

In statistics, the LogR algorithm is widely applied for modeling the probability of a certain class or event existing, such as crisis/no crisis, healthy/sick or win/lose, and so on. The LogR is a statistical model, which applies a logistic function to model a binary dependent variable in its basic form. Ohlson [

32] employed a LogR to predict corporate bankruptcy using publicly available financial data. Yap et al. [

39] employed a LogR to predict corporate failures in Malaysia over a 10-year period, finding the method to be very effective and reliable. In our study, we employed the glm function in R, following Hothorn and Everitt [

40]. In addition, because the output of a LogR is a probability, we selected optimistic thresholds for the two window methods separately when predicting crises. We also employed a stepwise selection method to identify the statistically significant variables. However, this did not result in a good performance. Therefore, we did not consider the stepwise method further.

As before, we summarize some of the outcomes of our analysis in the

Appendix A. Here,

Table A1 and

Table A2 exhibit some of the outcomes of our analysis and we also present the plot of the final step of the loop calculation, showing only the intentionality of some of the variables. As shown, whether in the partial variables case or the all variables case, the lagged value and the average of five days of crisis are the most intentional.

4.4. Random Forests

An RF [

33,

34,

35] is an advanced learning algorithm employed for both classification and regression created by Ho [

41]. A forest in the RF model is made up of trees, where a greater number of trees denotes a more robust forest. The RF algorithm creates decision trees from data samples, obtains a prediction from each, and selects the best solution by means of voting. Compared with a single decision tree, this is an ensemble method. Because it can reduce overfitting by averaging the results. RFs are known as bagging or bootstrap aggregation methods. By identifying the best segmentation feature from the random subset of available features, further randomness can be introduced.

In RF, a large number of predictors can provide strong generalization; our model contains 121 predictors. In order to improve the accuracy of model prediction, we must obtain an optimal value of m, the number of variables available for splitting at each tree node. If m is relatively low, then both the inter-tree correlation and the strength of each tree will decrease. To find the optimal value, we employed the grid-search method. In addition to m, we tuned the number of trees using the grid-search method. To reduce the computing time, we applied the ranger package in R to construct the prediction model, employing the tuneRF function in the randomForest package.

We provide the RF variable importance plots in the last loop calculation in

Appendix A. In

Figure A5 and

Figure A6, we present the importance of each indicator for the classification outcome. The obtained ranking is based on the mean decrease in the Gini. This is the average value of the total reduction of variables in node impurities, weighted by the proportion of samples that reach the node in each decision tree in RF. Here, a higher mean decrease in the Gini indicates higher variable importance. We found that in the partial variables case, the lags of the natural gas price and the natural gas return are relatively important; however, in the case of all variables, this was not the case.

4.5. Neural Networks

In the field of machine learning and cognitive science, an NN [

34] is a mathematical or computational model, which imitates the structure and function of a biological neural network (animal central nervous system, especially the brain), employed to estimate or approximate functions. Like other machine learning methods, NN have been used to solve a variety of problems, such as credit rating classification problems, speech recognition, and so on. The most usually considered multilayer feedforward network is composed of three types of layers. First, in the input layer, many neurons accept a large number of nonlinear input messages. The input message is called the input vector. Second, the hidden layer, which is the various layers composed of many neurons and links between the input layer and output layer. The hidden layer can have one or more layers. The number of neurons in the hidden layer is indefinite, but the greater the number, the more nonlinear the NN becomes, so that the robustness of the neural network is more significant. Third, in the output layer, messages are transmitted, analyzed, and weighed in the neuron link to form the output. The output message is called the output vector.

In order to increase the accuracy of the model prediction, we employed the grid-search method to find the best parameter size and decay. The parameter size is the number of units in the hidden layer and parameter decay is the regularization parameter to avoid over-fitting. We used nnet package in R to develop the model prediction.

5. Model Evaluation

In this section, we evaluate the robustness of our methods by performing a comprehensive experimental evaluation procedure. Specifically, we use several criteria to evaluate the aforementioned models in terms of crisis event prediction.

5.1. Verification Methods

Accuracy is measured by the discriminating power of the rating power of the rating system. It is the most commonly used indicator for classification, and it estimates the overall effectiveness of an approach by estimating the probability of the true value of the class label. In the following section, we introduce a series of indicators that are widely employed to quantitatively estimate the discriminatory power of each model.

In machine learning, we can derive a square 2 × 2 matrix, as shown in

Table 5, which we used as the basis of our research.

Bekkar et al. [

42] state that imbalanced data learning is one of the challenging problems in data mining, and consider that skewed classes in the level distribution can lead to misleading assessment methods and, thus, bias in the classification. To resolve this problem, they present a series of alternatives for imbalanced data learning assessment, which we introduce below.

Bekkar et al. [

42] defined sensitivity and specificity as follows:

Sensitivity and specificity assess the effectiveness of an algorithm on a single class for positive and negative outcomes, respectively. The evaluation measures are as follows:

G-mean: The geometric mean is the product of the sensitivity and specificity. This metric can indicate the valance between the classification performance of the majority and minority classes. In addition, the G-mean measures the degree to which we avoid overfitting on the negative class, and the degree to which the positive class is marginalized. The formula is as follows:

Here, a low G-mean value means the prediction performance of the positive cases is poor, even if the negative cases are correctly classified by the algorithm.

LP: The positive likelihood ratio is that between the probability of predicting an example as positive when it is really positive, and the probability of a positive prediction when it is not positive. The formula is as follows:

LN: The negative likelihood ratio is that between the probability of predicting an example as negative when it is really positive, and the probability of predicting a case as negative when it is actually negative. The formula is as follows:

DP: The discriminant power, a measure that summarizes sensitivity and specificity. The formula is as follows:

DP values can evaluate the degree to which the algorithm differentiates between positive and negative cases. DP values lower than one indicate that the algorithm differentiates poorly between the two, and values higher than three indicate that it performs well.

BA: Balanced accuracy, calculated as the average of the sensitivity and specificity. The algorithm is as follows:

If the classifier performs equally well in both classes, this term reduces to the conventional accuracy measure. However, if the classification performs well in the majority class (in our study, “no crisis”), the balance accuracy will drop sharply. Therefore, the BA considers the majority class and minority class (in our study, “crisis”) equally.

WBA: The weighted balance accuracy. Under the weighting scheme 75%: 25%, the WBA emphasizes sensitivity over specificity, and is defined by Chatzis et al. [

23] as follows:

Youden’ s

: An index that evaluates the ability of an algorithm to avoid failure. This index incorporates the connection between sensitivity and specificity, as well as a linear correspondence with balanced accuracy:

F-measure: The F-measure employs the same contingency matrix as that of relative usefulness. Powers [

43] shows that an optimal prediction implies an F-measure of one. The formula is as follows:

DM-test: The Diebold–Mariano test is a quantitative approach to evaluate the forecast accuracy of US natural gas crises predicting models in our paper [

44]. In our paper, the DM-test can help us to discriminate the significant differences of the predicting accuracy between the XGboost, SVM, LogR, RF, and NN models based on the scheme of quantitative analysis and about the loss function. We employed the squared-error loss function to do the DM-test.

To simplify the explanation of its values, the F-measure was defined such that a higher value of indicates a better ability to avoid classifying incorrectly.

After a series of illustrations of the algorithm above, we derived a variety of methods to evaluate the model prediction ability. Here, we used all of the methods to derive a comprehensive and accurate view of the models’ performance. Lastly, we calculated an optimal US natural gas market collapse probability critical point for each fitted model, thus obtaining the optimal sensitivity and specificity measures. We focused on false negatives rather than false positives, because the goal of our work was to construct a supervised warning mechanism, which can predict as many correct signals as possible while reducing the incidence of false negatives.

5.2. Models Prediction Results

We identified 121 predictors and 6146 records for the period 29 September 1994 to 18 December 2019. In addition to using the two window methods, we determined the performance of the models when predicting US natural gas crises using natural gas-related predictors only (16 predictors) and all predictors (121 predictors) separately. In the following, we refer to the combination of the moving window method and the natural gas-related predictors as the “partial variables with the moving window” method. Similarly, we have the “partial variables with expanding window” method. When we combined the moving window method with all variables, we have the “all variables with a moving window” method and, similarly, we have the “all variables with an expanding window” method.

As shown in

Table 6 and

Table 7, when we applied the partial explanatory variables (16 variables) to develop the machine learning models, XGboost provided the best empirical performance for both window methods, followed by the LogR in the moving window method and NN in the expanding window method since the Youden’s

of XGboost was the highest in both two window methods, which means that XGboost has a better ability to avoid misclassification. Furthermore, the BA and WBA of XGboost was also the highest, which means that XGboost performs well in both crises’ prediction and no-crisis forecasting. When we employed all explanatory variables (121 variables), XGboost clearly still performed best in both dynamic methods, followed by the SVM in the moving window and LogR in the expanding window from Youden’s

values. Similarly, the BA and WBA of XGboost was also the highest in the all variables situation.

In addition, because

Table 6 and

Table 7 show that the forecasting performances of XGboost are great and far exceed the other four models, when implementing the DM-test, we only evaluated the forecast accuracy between XGboost and the other four models. From

Table 8 and

Table 9, we can see from the results of DM-test that in both the partial variables and all variables situations and whether by the moving window method or expanding window method, according to the DM-test based on the squared-error loss, all the DM-test values >1.96, the zero hypothesis is rejected at the 5% level of significance; that is to say, the observed differences between XGboost and other four models are significant and XGboost in US natural gas crises prediction accuracy is best between the five models used in our paper.

Summarizing the results across the five model evaluations in the test sample, it is evident that XGboost outperforms the other machine learning models. So far, there are very few papers related to forecasting US natural gas crises through machine learning. Thus, our research has made a certain contribution to the prediction of US natural gas crises.

5.3. Confusion Matrix Results

Referring to Chatzis et al. [

23], we computed the final classification performance confusion matrices for the evaluated machine learning models (see

Table 10 and

Table 11). Here, a false alarm means a false positive rate, and the hit rate denotes the proportion of correct crisis predictions in the total number of crises. For the best performing model, XGboost, we found that the false alarms do not exceed 25%, and the highest hit rate is 49%. Thus, our US natural gas crisis prediction accuracy can reach 49% using all variables with the moving window method.

6. Conclusions

We proposed a dynamic moving window and expanding window method that combines XGboost, an SVM, a LogR, an RF, and an NN as machine learning techniques to predict US natural gas crises. We proposed the following full procedure. First, we selected the most significant US natural gas financial market indicators, which we believe can be employed to predict US natural gas crises. Second, we combined the aforementioned dynamic methods with other methods (e.g., the returns and lags of the raw prices) to process the daily data set, and defined a crisis for the machine learning model. Third, we used the five machine learning models (XGboost, SVM, LogR, RF, and NN) with dynamic methods to forecast the crisis events. Finally, we evaluated the performance of each model using various validation measures. We demonstrated that combining dynamic methods with machine learning models can achieve a better performance in terms of predicting US natural gas crises. In addition, our empirical results indicated that XGboost with the moving window method achieves a good performance in predicting such crises.

Our main conclusions can be summarized as follows. From the various verification methods, DM-test results, and confusion matrix results, XGboost with a moving window approach achieved a good performance in predicting US natural gas crises, particularly in the partial variables case. In addition, LogR in the moving window method and NN in the expanding window method did not perform badly in the partial variables situation whereas SVM in the moving window and LogR in the expanding window did not perform badly in the all variables situation. Our conclusions can help us to forecast the crises of the US natural gas market more accurately. Because financial markets are contagious, policymakers must consider the potential effect of a third market. This is equally important for asset management investors, because diversified benefits may no longer exist during volatile times. Therefore, these findings can help investors and policymakers detect crises, and thus take preemptive actions to minimize potential losses.

The novel contributions of our study can be summarized as follows. First, to the best of our knowledge, this study is the first to combine dynamic methodologies with machine learning to predict US natural gas crises. A lot of the previous studies are about the currency exchange crises, bank credit crises, and so on. So far, there are very few papers related to forecasting US natural gas crises through machine learning. Second, we implemented XGboost, a popular and advanced machine learning model in the financial crisis prediction field. Additionally, we found that XGboost outperforms in forecasting US natural gas crises. Third, we employed various parameter tuning methods (e.g., the grid-search) to improve the prediction accuracy. Fourth, we used novel evaluation methods appropriate for imbalanced data sets to measure the model performance and we also employed the DM-test method and found that the forecasting performance of XGboost is significant. Finally, we employed in-depth explanatory variables that cover the spectrum of major financial markets.

Before combining the dynamic methodologies with machine learning techniques, we performed ordinary machine learning, in that 70% of the data was used as training data and 30% was used as test data. However, the prediction performance of this method was not so satisfactory. Although our research is the first to combine machine learning techniques with dynamic methodologies for US natural gas crisis prediction, the prediction accuracy of our model can be improved. This is left to future research. Lastly, because economic conditions change continuously and crises continue to occur, predicting crisis events will remain an open research issue.