Efficient Energy Management Based on Convolutional Long Short-Term Memory Network for Smart Power Distribution System

Abstract

1. Introduction

- We propose a novel end-to-end deep learning model for energy load forecasting based on an encoder–decoder network architecture. The encoder consists of ConvLSTM layers and the decoder of recurrent LSTM layers, which together produce the day-ahead forecast.

- We formulate energy load forecasting as a spatio-temporal sequence forecasting problem that can be solved under the general sequence-to-sequence learning framework. Experiments indicate that the ConvLSTM-based architecture captures long-range dependencies more effectively than the baseline architectures it is compared against.

- The performance of the proposed architecture is analyzed by training it with two optimizers, Adam and RMSProp, in order to select the best-performing model.

- The proposed framework scales well and can be generalized to other similar application scenarios. In this work, we used univariate datasets ranging from a single household to city-wide electricity consumption.

- Quantitative and qualitative analyses of week-ahead energy consumption forecasts confirm the efficacy of the proposed model in both cases when compared with existing state-of-the-art approaches.
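
The sequence-to-sequence framing in the second contribution can be sketched in plain Python. This is an illustrative sketch, not the authors' code: the helper names `make_windows` and `to_convlstm_input`, the 14-day input window, and its split into 2 subsequences of 7 days are assumptions chosen to pair with a 7-step output; the source only gives the input layout as `(n_steps, 1, n_length, n_features)`.

```python
from typing import List, Tuple


def make_windows(series: List[float], n_in: int, n_out: int) -> Tuple[list, list]:
    """Pair each run of n_in past values with the n_out values that follow it."""
    inputs, targets = [], []
    for start in range(len(series) - n_in - n_out + 1):
        inputs.append(series[start:start + n_in])
        targets.append(series[start + n_in:start + n_in + n_out])
    return inputs, targets


def to_convlstm_input(window: List[float], n_steps: int, n_length: int) -> list:
    """Reshape a flat window into nested lists shaped [n_steps][1][n_length][1]."""
    if len(window) != n_steps * n_length:
        raise ValueError("window length must equal n_steps * n_length")
    return [
        [[[value] for value in window[s * n_length:(s + 1) * n_length]]]
        for s in range(n_steps)
    ]


# Toy univariate daily series (hypothetical values, 30 days).
daily_load = [float(day) for day in range(30)]

# Assumed framing: 14 past days in, 7 future days out.
X, y = make_windows(daily_load, n_in=14, n_out=7)

# One sample laid out as (n_steps=2, rows=1, n_length=7, n_features=1).
x0 = to_convlstm_input(X[0], n_steps=2, n_length=7)
```

Each element of `X` would be reshaped this way before being passed to a ConvLSTM encoder, while the matching element of `y` holds the seven target values for the decoder.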

2. Related Work

2.1. Smart Power Distribution Systems

2.2. Time Series Energy Load Forecasting

2.2.1. Statistical-Based Methods

2.2.2. Artificial Intelligence Methods

| Model | Author | Approach | Dataset Domain | Year | Dataset |
|---|---|---|---|---|---|
| Statistical Modelling | Yuting Ji et al. [23] | Conditional Hidden Semi-Markov Model | Household appliances | 2020 | Pecan Street database |
| | J. Nowicka-Zagrajek et al. [24] | ARMA | CAISO | 2002 | California, USA |
| | S.Sp. Pappas et al. [30] | ARMA | Hellenic Public Power Corporation S.A. | 2018 | Greece |
| | Nepal, B. et al. [31] | ARIMA | East Campus of Chubu University | 2019 | Japan |
| | Fatima Amara et al. [25] | ACCE with Linear Regression Model | Household electricity consumption | 2019 | Montreal, Quebec |
| | K.P. Amber et al. [32] | Multiple Regression Model | Administration building electricity consumption | 2015 | London, UK |
| | M. R. Braun et al. [33] | Multiple Regression Model | Supermarket electricity consumption | 2014 | Yorkshire, UK |
| AI-Based Modelling | S.M. Mahfuz et al. [26] | Fuzzy Logic Controller | Residential apartment | 2020 | Memphis, TN, USA |
| | Galicia et al. [27] | Ensembles | Spanish Peninsula | 2017 | Spanish electricity load data |
| | Grzegorz Dudek et al. [34] | LSTM and ETS | 35 European countries | 2020 | ENTSO-E |
| | Ljubisa Sehovac et al. [35] | S2S with Attention | Commercial building | 2020 | IESO, Ontario, Canada |
| | Yuntian Chen et al. [28] | Ensemble Long Short-Term Memory | Provincial (12 districts) | 2021 | Beijing, China |
| | T. Y. Kim et al. [36] | CNN-LSTM | Household electricity consumption | 2019 | UCI household electricity consumption dataset |
| | W. Kong et al. [37] | Sequence-to-Sequence | Household electricity consumption | 2019 | New South Wales, Australia |
| | D. Syed et al. [38] | LSTM | Household electricity consumption | 2021 | UCI household electricity consumption dataset |
| | Kunjin Chen et al. [39] | ResNet and Ensemble ResNet | North American Utility Dataset, ISO-NE, and GEFCom 2014 | 2018 | North American Utility Dataset, ISO-NE, and GEFCom 2014 |
| | Mohammad F. et al. [40] | Feed Forward Neural Network and Recurrent Neural Network | NYISO | 2019 | New York, USA |

3. Deep Learning Architectures for Energy Load Forecasting Modelling

3.1. LSTM-Based Architecture

3.2. LSTM Encoder–Decoder-Based Architecture

3.3. CNN- and LSTM-Based Architecture

3.4. ConvLSTM Architecture

4. The Proposed Energy Load Forecasting System

4.1. Data Sources

- Smart Home Energy Management System (SHEMS):

- Smart Grid Energy Management System (SGEMS):

4.2. Data Preprocessing

4.3. Forecasting Model

4.4. Decision

5. Proposed Deep Learning Architecture for Energy Load Forecasting

5.1. ConvLSTM-LSTM Architecture for Energy Load Forecasting

5.2. Implementation Details

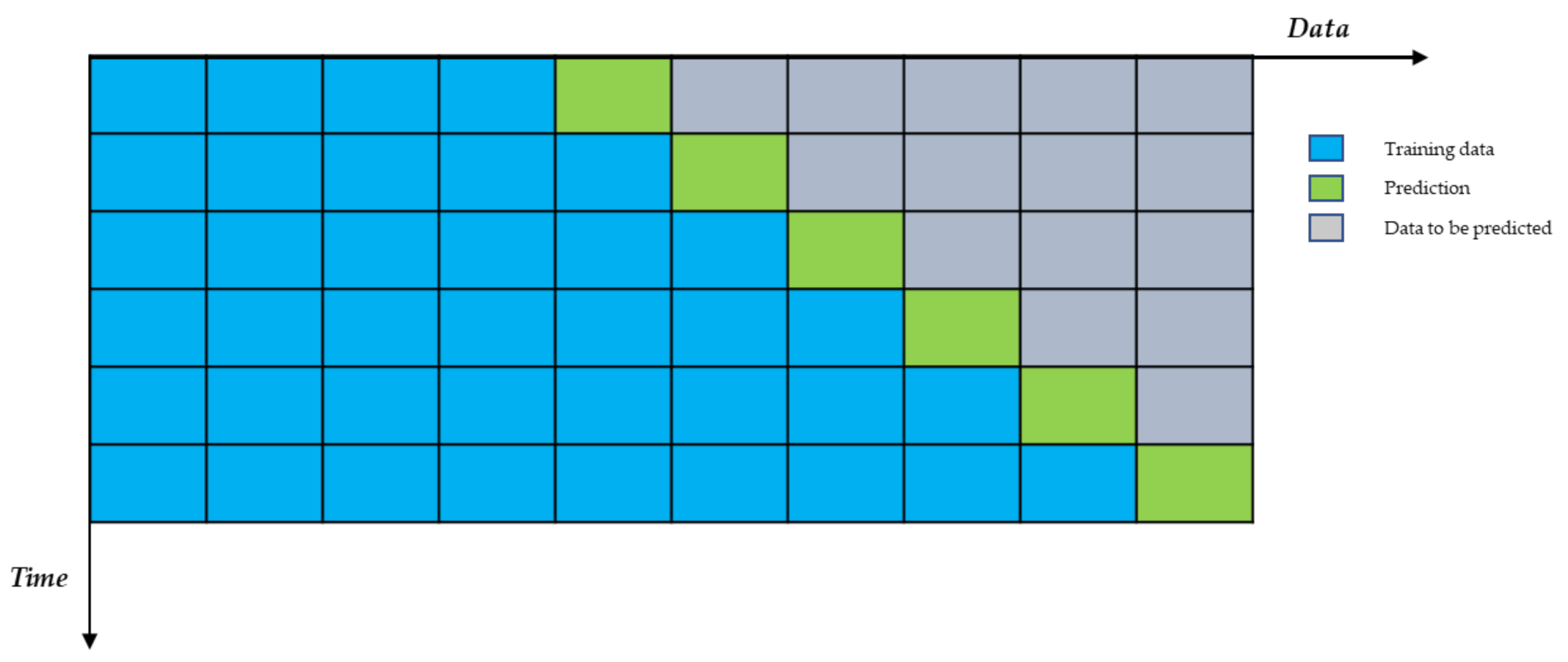

5.3. Backtesting/Validating Proposed Deep Learning Model for Energy Load Forecasting

5.4. Evaluation Metrics

6. Experimental Results and Discussion

6.1. Data Description

6.1.1. UCI Individual Household Dataset

6.1.2. New York Independent System Operator (NYISO) Dataset

6.2. Parameter Selection for Training the Proposed Energy Load Forecasting Model

6.3. Performance with UCI Individual Household Dataset

6.4. Performance with New York Independent System Operator (NYISO) Dataset

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Feng, C.; Wang, Y.; Chen, Q.; Ding, Y.; Strbac, G.; Kang, C. Smart grid encounters edge computing: Opportunities and applications. Adv. Appl. Energy 2021, 1, 100006.

2. Gavriluta, C. Smart Grids Overview – Scope, Goals, Challenges, Tasks and Guiding Principles. In Proceedings of the 2016 IEEE International Forum on Smart Grids for Smart Cities, Paris, France, 16–18 October 2016.

3. Alotaibi, I.; Abido, M.A.; Khalid, M.; Savkin, A.V. A Comprehensive Review of Recent Advances in Smart Grids: A Sustainable Future with Renewable Energy Resources. Energies 2020, 13, 6269.

4. IEEE Smart Grid Big Data Analytics; Machine Learning; Artificial Intelligence in the Smart Grid Working Group. Big Data Analytics in the Smart Grid. IEEE Smart Grid. 2017. Available online: https://smartgrid.ieee.org/images/files/pdf/big_data_analytics_white_paper.pdf (accessed on 27 September 2021).

5. Weir, R. Providing Non-Stop Power for Critical Electrical Loads. The Hartford Steam Boiler Inspection and Insurance Company. Available online: https://www.hsb.com/TheLocomotive/ProvidingNon-StopPowerforCriticalElectricalLoads.aspx (accessed on 29 June 2021).

6. IRENA. Innovation Landscape Brief: Peer-To-Peer Electricity Trading; International Renewable Energy Agency: Abu Dhabi, United Arab Emirates, 2020.

7. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2016, 770–778.

8. Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 26–28 October 2014.

9. Siraj, A.; Chantsalnyam, T.; Tayara, H.; Chong, K.T. RecSNO: Prediction of Protein S-Nitrosylation Sites Using a Recurrent Neural Network. IEEE Access 2021, 9, 6674–6682.

10. Alam, W.; Tayara, H.; Chong, K.T. XG-ac4C: Identification of N4-acetylcytidine (ac4C) in mRNA using eXtreme gradient boosting with electron-ion interaction pseudopotentials. Sci. Rep. 2020, 10, 20942.

11. Abdelbaky, I.; Tayara, H.; Chong, K.T. Prediction of kinase inhibitors binding modes with machine learning and reduced descriptor sets. Sci. Rep. 2021, 11, 706.

12. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; Petersen, S.; et al. Human-level control through deep reinforcement learning. Nature 2015, 7540, 518–529.

13. Hochreiter, S.; Schmidhuber, J. Long Short-term Memory. Neural Comput. 1997, 9, 1735–1780.

14. Bai, M.; Goecke, R. Investigating LSTM for Micro-Expression Recognition. In Proceedings of the Companion Publication of the 2020 International Conference on Multimodal Interaction, Utrecht, The Netherlands, 25–29 October 2020; Association for Computing Machinery (ACM): Utrecht, The Netherlands, 2020; pp. 7–11.

15. Mohd, M.; Qamar, F.; Al-Sheikh, I.; Salah, R. Quranic Optical Text Recognition Using Deep Learning Models. IEEE Access 2021, 9, 38318–38330.

16. Shaoxiang, C.; Yao, T.; Jiang, Y.-G. Deep Learning for Video Captioning: A Review. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence (IJCAI-19), Macao, China, 10–16 August 2019.

17. Shi, X.; Zhourong, C.; Wang, H.; Yeung, D.-Y.; Wong, W.-K.; Woo, W.-C. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In NIPS; NeurIPS: Montreal, QC, Canada, 2015.

18. Aman, S.; Simmhan, Y.; Prasanna, V.K. Energy management systems: State of the art and emerging trends. IEEE Commun. Mag. 2013, 51, 114–119.

19. Hussain, S.; El-Bayeh, C.Z.; Lai, C.; Eicker, U. Multi-Level Energy Management Systems Toward a Smarter Grid: A Review. IEEE Access 2021, 9, 71994–72016.

20. Liu, Y.; Yang, C.; Jiang, L.; Xie, S.; Zhang, Y. Intelligent Edge Computing for IoT-Based Energy Management in Smart Cities. IEEE Netw. 2019, 33, 111–117.

21. Mohan, N.; Soman, K.; Kumar, S.S. A data-driven strategy for short-term electric load forecasting using dynamic mode decomposition model. Appl. Energy 2018, 232, 229–244.

22. Syed, D.; Abu-Rub, H.; Ghrayeb, A.; Refaat, S.S. Household-Level Energy Forecasting in Smart Buildings Using a Novel Hybrid Deep Learning Model. IEEE Access 2021, 9, 33498–33511.

23. Ji, Y.; Buechler, E.; Rajagopal, R. Data-Driven Load Modeling and Forecasting of Residential Appliances. IEEE Trans. Smart Grid 2020, 11, 2652–2661.

24. Nowicka-Zagrajek, J.; Weron, R. Modeling electricity loads in California: ARMA models with hyperbolic noise. Signal Process. 2002, 82, 1903–1915.

25. Amara, F.; Agbossou, K.; Dubé, Y.; Kelouwani, S.; Cardenas, A.; Hosseini, S.S. A residual load modeling approach for household short-term load forecasting application. Energy Build. 2019, 187, 132–143.

26. Alam, S.M.M.; Ali, M.H. Equation Based New Methods for Residential Load Forecasting. Energies 2020, 13, 6378.

27. Galicia, A.; Torres, J.; Martínez-Álvarez, F.; Troncoso, A. A novel spark-based multi-step forecasting algorithm for big data time series. Inf. Sci. 2018, 467, 800–818.

28. Chen, Y.; Zhang, D. Theory-guided deep-learning for electrical load forecasting (TgDLF) via ensemble long short-term memory. Adv. Appl. Energy 2021, 1, 100004.

29. Gamboa, J. Deep Learning for Time-Series Analysis, in Seminar on Collaborative Intelligence. arXiv 2017, arXiv:1701.01887.

30. Pappas, S.; Ekonomou, L.; Karampelas, P.; Karamousantas, D.; Katsikas, S.; Chatzarakis, G.; Skafidas, P. Electricity demand load forecasting of the Hellenic power system using an ARMA model. Electr. Power Syst. Res. 2010, 80, 256–264.

31. Nepal, B.; Yamaha, M.; Yokoe, A.; Yamaji, T. Electricity load forecasting using clustering and ARIMA model for energy management in buildings. Jpn. Arch. Rev. 2019, 3, 62–76.

32. Amber, K.P.; Aslam, M.W.; Mahmood, A.; Kousar, A.; Younis, M.Y.; Akbar, B.; Chaudhary, G.Q.; Hussain, S.K. Energy Consumption Forecasting for University Sector Buildings. Energies 2017, 10, 1579.

33. Braun, M.; Altan, H.; Beck, S. Using regression analysis to predict the future energy consumption of a supermarket in the UK. Appl. Energy 2014, 130, 305–313.

34. Dudek, G.; Pelka, P.; Smyl, S. A Hybrid Residual Dilated LSTM and Exponential Smoothing Model for Midterm Electric Load Forecasting. IEEE Trans. Neural Netw. Learn. Syst. 2021, PP, 1–13.

35. Sehovac, L.; Grolinger, K. Deep Learning for Load Forecasting: Sequence to Sequence Recurrent Neural Networks with Attention. IEEE Access 2020, 8, 36411–36426.

36. Kim, T.-Y.; Cho, S.-B. Predicting residential energy consumption using CNN-LSTM neural networks. Energy 2019, 182, 72–81.

37. Kong, W.; Dong, Z.Y.; Jia, Y.; Hill, D.J.; Xu, Y.; Zhang, Y. Short-Term Residential Load Forecasting Based on LSTM Recurrent Neural Network. IEEE Trans. Smart Grid 2019, 10, 841–851.

38. Syed, D.; Abu-Rub, H.; Ghrayeb, A.; Refaat, S.S.; Houchati, M.; Bouhali, O.; Banales, S. Deep Learning-Based Short-Term Load Forecasting Approach in Smart Grid with Clustering and Consumption Pattern Recognition. IEEE Access 2021, 9, 54992–55008.

39. Chen, K.; Chen, K.; Wang, Q.; He, Z.; Hu, J.; He, J. Short-Term Load Forecasting with Deep Residual Networks. IEEE Trans. Smart Grid 2018, 10, 3943–3952.

40. Mohammad, F.; Kim, Y.-C. Energy load forecasting model based on deep neural networks for smart grids. Int. J. Syst. Assur. Eng. Manag. 2019, 11, 824–834.

41. Marino, D.L.; Amarasinghe, K.; Manic, M. Building energy load forecasting using Deep Neural Networks. In Proceedings of the IECON 2016 – 42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 23–26 October 2016; pp. 7046–7051.

42. Rahman, S.A.; Adjeroh, D.A. Deep Learning using Convolutional LSTM estimates Biological Age from Physical Activity. Sci. Rep. 2019, 9, 11425.

43. Hu, W.-S.; Li, H.-C.; Pan, L.; Li, W.; Tao, R.; Du, Q. Feature Extraction and Classification Based on Spatial-Spectral ConvLSTM Neural Network for Hyperspectral Images. Computing Research Repository (CoRR). arXiv 2019, arXiv:1905.03577.

44. Zhang, A.; Bian, F.; Niu, W.; Wang, D.; Wei, S.; Wang, S.; Li, Y.; Zhang, Y.; Chen, Y.; Shi, Y.; et al. Short Term Power Load Forecasting of Large Buildings Based on Multi-view ConvLSTM Neural Network. In Proceedings of the 2020 IEEE 4th Conference on Energy Internet and Energy System Integration (EI2), Wuhan, China, 30 October–1 November 2020; pp. 4154–4158.

45. Zhang, L.; Lu, L.; Wang, X.; Zhu, R.M.; Bagheri, M.; Summers, R.M.; Yao, J. Spatio-Temporal Convolutional LSTMs for Tumor Growth Prediction by Learning 4D Longitudinal Patient Data. IEEE Trans. Med. Imaging 2019, 39, 1114–1126.

46. Smartgrid.gov. Advanced Metering Infrastructure and Customer Systems: Results from the Smart Grid Investment Grant Program. 2016. Available online: https://www.energy.gov/sites/prod/files/2016/12/f34/AMI%20Summary%20Report_09-26-16.pdf (accessed on 27 September 2021).

47. Han, J.; Kamber, M.; Pei, J. Data Preprocessing. Data Mining, 3rd ed.; Elsevier: Amsterdam, The Netherlands, 2012; pp. 83–124.

48. Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice, 2nd ed.; OTexts: Melbourne, Australia, 2018.

49. Jason, B. How To Backtest Machine Learning Models for Time Series Forecasting. 2019. Available online: https://machinelearningmastery.com/backtest-machine-learning-models-time-series-forecasting/ (accessed on 21 July 2021).

50. Hebrail, G.; Berard, A. Individual Household Electric Power Consumption Data Set. 2012. Available online: https://archive.ics.uci.edu/ml/datasets/Individual+household+electric+power+consumption (accessed on 16 December 2020).

51. NYISO Hourly Loads. Available online: https://www.nyiso.com/load-data (accessed on 10 May 2018).

52. Tieleman, T.; Hinton, G. Lecture 6.5-rmsprop: Divide the gradient by a running average of its recent magnitude. COURSERA Neural Netw. Mach. Learn. 2012, 4, 26–31.

53. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 5–8 May 2015.

| Layer | Hyperparameter Setting | Output Shape | Number of Parameters |
|---|---|---|---|
| ConvLSTM2D | Filters = 128, Kernel_Size = (1, 3), Activation = ‘ReLU’, Input_Shape = (n_steps, 1, n_length, n_features) | (None, 2, 1, 5, 128) | 198,656 |
| ConvLSTM2D | Filters = 64, Kernel_Size = (1, 3) | (None, 2, 1, 5, 64) | 147,712 |
| ConvLSTM2D | Filters = 32, Kernel_Size = (1, 3) | (None, 2, 1, 5, 32) | 36,992 |
| ConvLSTM2D | Filters = 16, Kernel_Size = (1, 3) | (None, 1, 3, 16) | 9,280 |
| Flatten | - | (None, 192) | 0 |
| Repeat Vector | - | (None, 7, 192) | 0 |
| LSTM | Units = 200, Activation = ‘ReLU’, Kernel_Regularizer = Regularizers.L2(0.001) | (None, 7, 200) | 288,800 |
| Dropout | 0.5 | (None, 7, 200) | 0 |
| LSTM | Units = 200, Activation = ‘ReLU’, Kernel_Regularizer = Regularizers.L2(0.001) | (None, 7, 200) | 320,800 |
| Dropout | 0.5 | (None, 7, 200) | 0 |
| Time Distributed (Dense) | Units = 100, Activation = ‘ReLU’ | (None, 7, 100) | 20,100 |
| Time Distributed (Dense) | Units = 1 | (None, 7, 1) | 101 |
| Total # Parameters | | | 1,022,441 |
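
The parameter counts in the layer table can be cross-checked against the standard formulas for gated layers. The sketch below assumes the usual parameterization (four gates, one bias per gate, no peephole connections) and `n_features = 1`; the function names are illustrative and not taken from the source.

```python
def convlstm2d_params(in_channels: int, filters: int, kernel_size: tuple) -> int:
    """Four gates, each with an input kernel, a recurrent kernel and one bias per filter."""
    kh, kw = kernel_size
    return 4 * filters * (kh * kw * (in_channels + filters) + 1)


def lstm_params(input_dim: int, units: int) -> int:
    """Four gates, each with input weights, recurrent weights and one bias per unit."""
    return 4 * units * (input_dim + units + 1)


def dense_params(input_dim: int, units: int) -> int:
    """One weight per input plus one bias, for every output unit."""
    return units * (input_dim + 1)


# Assumption: univariate input, so the first ConvLSTM2D layer sees 1 channel.
conv_counts = []
in_channels = 1
for filters in (128, 64, 32, 16):
    conv_counts.append(convlstm2d_params(in_channels, filters, (1, 3)))
    in_channels = filters

second_lstm = lstm_params(200, 200)
dense_counts = [dense_params(200, 100), dense_params(100, 1)]

# The first LSTM count is taken from the table; solve for the input width it implies.
reported_first_lstm = 288_800
implied_input_dim = reported_first_lstm // (4 * 200) - 200 - 1

total = sum(conv_counts) + reported_first_lstm + second_lstm + sum(dense_counts)
```

Under these assumptions the four ConvLSTM2D rows, the second LSTM row, both dense rows and the total of 1,022,441 are reproduced exactly. The first LSTM row (288,800) corresponds to a 160-dimensional input rather than the 192 listed in the Flatten row, so the Output Shape and Number of Parameters columns do not appear to agree for that layer; the table alone does not show which value is correct.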

| Attribute | Description | Value Range |
|---|---|---|
| Datetime | Value designating a particular day (MM/DD/YYYY) | [12/16/2006–11/26/2010] |
| Global active power (GAP) | Household global minute-averaged active power (in kilowatt) | [250.298–4773.386] |
| Global reactive power (GRP) | Household global minute-averaged reactive power (in kilowatt) | [34.922–417.834] |
| Voltage | Minute-averaged voltage (in volt) | [93,552.53–356,306.4] |
| Global intensity (GI) | Household global minute-averaged current intensity (in ampere) | [1164–20,200.4] |
| Sub metering 1 (S1) | Corresponds to the kitchen, containing mainly a dishwasher, an oven and a microwave; the hot plates are gas-powered rather than electric (in watt-hour of active energy) | [0–11,178] |
| Sub metering 2 (S2) | Corresponds to the laundry room, containing a washing machine, a tumble-drier, a refrigerator and a light (in watt-hour of active energy) | [0–12,109] |
| Sub metering 3 (S3) | Corresponds to an electric water heater and an air conditioner (in watt-hour of active energy) | [1288–23,743] |
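
The value ranges in the attribute table are much larger than single minute-level readings. For example, the upper voltage bound of 356,306.4 divided by the 1440 minutes in a day is about 247 V, which suggests that the minute-averaged measurements were summed into daily totals. That reading is an assumption; the table itself does not describe the aggregation. A minimal sketch of such a resampling step, using only the standard library and a hypothetical `daily_totals` helper:

```python
from collections import defaultdict
from datetime import datetime, timedelta


def daily_totals(readings):
    """Sum (timestamp, value) pairs per calendar day, skipping missing values (None)."""
    totals = defaultdict(float)
    for timestamp, value in readings:
        if value is None:  # assumption: missing samples are simply dropped
            continue
        totals[timestamp.date()] += value
    return dict(sorted(totals.items()))


# Toy data: two full days of a constant 240 V reading, one sample per minute.
start = datetime(2006, 12, 16)
readings = [(start + timedelta(minutes=m), 240.0) for m in range(2 * 1440)]

totals = daily_totals(readings)
```

With a constant 240 V input each day sums to 345,600, which falls inside the voltage range listed in the table.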

| Hyperparameter | Value |
|---|---|
| Batch size | 64, 128, 256 |
| Epochs | 100, 1000 |
| Activation function | ReLU, tanh |
| Dropout after LSTM layers | 50% |
| L2 regularization in LSTM layer | 0.001 |
| Loss function | Mean Squared Error (MSE) |
| Optimizer | Adam, RMSProp |
| Learning rate | 0.001 |
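
The hyperparameter table lists several candidate values for batch size, epochs, activation and optimizer. The sketch below enumerates every combination of those candidates while holding the single-valued settings fixed. Whether the authors searched the full grid is not stated, so the exhaustive enumeration, the dictionary keys and the `enumerate_configs` helper are all assumptions made for illustration.

```python
from itertools import product

# Candidate values taken from the hyperparameter table.
search_space = {
    "batch_size": [64, 128, 256],
    "epochs": [100, 1000],
    "activation": ["relu", "tanh"],
    "optimizer": ["adam", "rmsprop"],
}

# Settings for which the table gives a single value.
fixed = {"dropout": 0.5, "l2": 0.001, "learning_rate": 0.001}


def enumerate_configs(space: dict, fixed_settings: dict) -> list:
    """Return one configuration dict per combination of the candidate values."""
    keys = list(space)
    return [
        {**fixed_settings, **dict(zip(keys, values))}
        for values in product(*(space[key] for key in keys))
    ]


configs = enumerate_configs(search_space, fixed)
```

A full grid over these candidates contains 3 × 2 × 2 × 2 = 24 configurations, each of which would be trained and scored on held-out data before choosing the final model.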

| Model | RMSE | MSE | MAPE |
|---|---|---|---|
| CNNLSTM | 0.668 | 0.439 | 406.03 |
| LSTM-LSTM | 0.648 | 0.392 | 423.75 |
| ConvLSTM-BiLSTM | 0.650 | 0.416 | 429.39 |
| ConvLSTM-LSTM | 0.605 | 0.386 | 390.07 |

| Model | RMSE | MSE | MAPE |
|---|---|---|---|
| CNNLSTM | 0.634 | 0.485 | 432.3 |
| LSTM-LSTM | 0.646 | 0.457 | 441.2 |
| ConvLSTM-BiLSTM | 0.617 | 0.411 | 395.13 |
| ConvLSTM-LSTM | 0.610 | 0.368 | 350.97 |
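
The RMSE, MSE and MAPE columns in the result tables can be computed as in the sketch below. It uses the textbook definitions of the three metrics; the exact averaging the authors applied (for example per forecast day versus over all days at once) is not shown here, so this should be read as an assumption rather than their evaluation code.

```python
import math
from typing import Sequence


def mse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean of the squared forecast errors."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Square root of the MSE, in the same unit as the data."""
    return math.sqrt(mse(actual, predicted))


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent; undefined for zero actual values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Toy values for illustration only.
actual = [2.0, 4.0, 5.0]
predicted = [1.0, 4.0, 7.0]

scores = {
    "MSE": mse(actual, predicted),
    "RMSE": rmse(actual, predicted),
    "MAPE": mape(actual, predicted),
}
```

Note that an RMSE averaged over individual forecast steps generally differs from the square root of a single overall MSE, so the two columns need not be related by an exact square root.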

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mohammad, F.; Ahmed, M.A.; Kim, Y.-C. Efficient Energy Management Based on Convolutional Long Short-Term Memory Network for Smart Power Distribution System. Energies 2021, 14, 6161. https://doi.org/10.3390/en14196161
