A Comparative Analysis of the ARIMA and LSTM Predictive Models and Their Effectiveness for Predicting Wind Speed

Abstract

1. Introduction

2. Literature Review

3. Methodology and Data Source

3.1. Data Source

3.2. Time Series

3.3. ARIMA

3.3.1. Autoregressive Process (AR)
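For reference, an autoregressive process of order p, AR(p), models the current value as a linear combination of its own p most recent values plus a random shock. A standard textbook form, assuming a constant c, coefficients φ_i (i = 1, …, p), and a zero-mean white-noise error ε_t with variance σ², is:

```latex
y_t = c + \sum_{i=1}^{p} \phi_i \, y_{t-i} + \varepsilon_t,
\qquad \varepsilon_t \sim \mathrm{WN}(0, \sigma^2)
```

For example, an AR(1) model with φ_1 = 0.8 forecasts the next value as c plus 0.8 times the current one; such a process is stationary only when |φ_1| < 1.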

3.3.2. Moving Average Process (MA)
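A moving average process of order q, MA(q), instead expresses the current value through the current and q previous random shocks. One common form, assuming a mean μ, coefficients θ_j (j = 1, …, q) written with plus signs (Box and Jenkins use minus signs; both conventions appear in texts and software), and white noise ε_t, is:

```latex
y_t = \mu + \varepsilon_t + \sum_{j=1}^{q} \theta_j \, \varepsilon_{t-j},
\qquad \varepsilon_t \sim \mathrm{WN}(0, \sigma^2)
```

Because only q past shocks enter the equation, the autocorrelation of an MA(q) process is zero beyond lag q, which is one of the usual cues for choosing q.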

3.3.3. Autoregressive Moving Average (ARMA) Models
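Combining an AR(p) part and an MA(q) part gives the ARMA(p, q) model. One standard form, assuming a constant c, AR coefficients φ_i, MA coefficients θ_j written with plus signs, white noise ε_t, and the backshift operator B defined by B y_t = y_{t-1}, is shown below in both the expanded and the lag-operator notation:

```latex
y_t = c + \sum_{i=1}^{p} \phi_i \, y_{t-i} + \varepsilon_t + \sum_{j=1}^{q} \theta_j \, \varepsilon_{t-j}
\;\Longleftrightarrow\;
\Bigl(1 - \sum_{i=1}^{p} \phi_i B^i\Bigr) y_t = c + \Bigl(1 + \sum_{j=1}^{q} \theta_j B^j\Bigr) \varepsilon_t
```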

3.3.4. Autoregressive Integrated Moving Average (ARIMA) Models
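ARIMA(p, d, q) applies an ARMA(p, q) model to the series after differencing it d times, which can remove trends and other changes in level that make the original series non-stationary. Using the backshift operator B (B y_t = y_{t-1}), AR coefficients φ_i, and MA coefficients θ_j written with plus signs, a standard form is:

```latex
\Bigl(1 - \sum_{i=1}^{p} \phi_i B^i\Bigr) (1 - B)^d \, y_t
  = c + \Bigl(1 + \sum_{j=1}^{q} \theta_j B^j\Bigr) \varepsilon_t
```

For d = 1 the ARMA part is fitted to the first differences w_t = y_t - y_{t-1}, and d = 0 recovers the ARMA(p, q) model.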

4. Artificial Neural Networks (ANNs)

5. Deep Learning

5.1. Recurrent Neural Network (RNN)

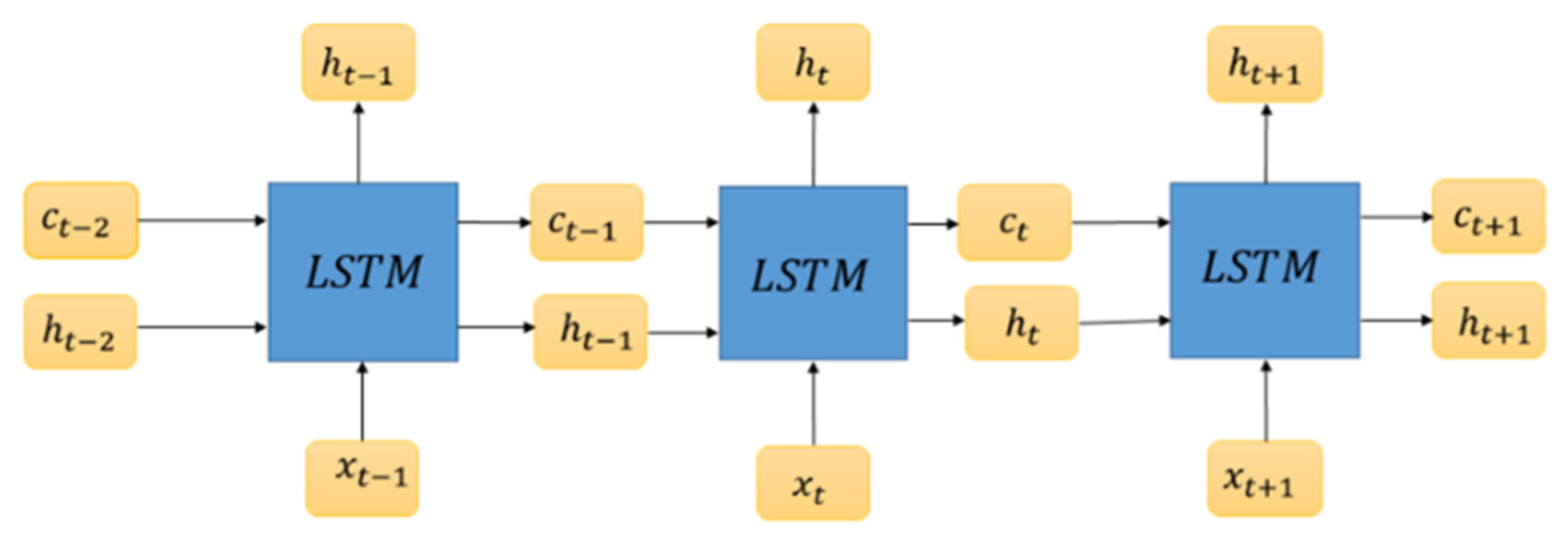
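The defining feature of an RNN is a hidden state that is fed back into the network at every time step. A minimal NumPy sketch of a simple (Elman-style) RNN forward pass follows; the weight names, sizes, random initialization, and the linear read-out layer are illustrative assumptions, not the configuration used in this study:

```python
import numpy as np


def rnn_forward(x_seq, W_x, W_h, b, W_y, b_y):
    """Run a simple (Elman-style) RNN over a sequence.

    Shapes: x_seq (T, n_in), W_x (n_hidden, n_in), W_h (n_hidden, n_hidden),
    b (n_hidden,), W_y (n_out, n_hidden), b_y (n_out,).
    Returns the per-step outputs (T, n_out) and the final hidden state.
    """
    h = np.zeros(W_h.shape[0])  # initial hidden state h_0 = 0
    outputs = []
    for x_t in x_seq:
        # New state mixes the current input with the previous state.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        outputs.append(W_y @ h + b_y)  # linear read-out at every step
    return np.array(outputs), h


# Illustrative sizes and random weights (hypothetical, untrained).
rng = np.random.default_rng(0)
n_in, n_hidden, n_out, T = 1, 4, 1, 5
x_seq = rng.normal(size=(T, n_in))
W_x = rng.normal(scale=0.5, size=(n_hidden, n_in))
W_h = rng.normal(scale=0.5, size=(n_hidden, n_hidden))
b = np.zeros(n_hidden)
W_y = rng.normal(scale=0.5, size=(n_out, n_hidden))
b_y = np.zeros(n_out)

y, h_T = rnn_forward(x_seq, W_x, W_h, b, W_y, b_y)
print(y.shape, h_T.shape)  # (5, 1) (4,)
```

When such a network is trained by backpropagation through time, the gradient passes repeatedly through W_h and the tanh nonlinearity, which is where the vanishing-gradient problem described by Bengio et al. (1994) and Hochreiter (1998) arises.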

5.2. Long Short-Term Memory (LSTM)
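An LSTM cell replaces the single tanh update of a plain RNN with gates that control what the cell state forgets, stores, and outputs. The sketch below implements one step of the widely used variant with a forget gate in NumPy; stacking the four gate weight blocks into one matrix W in the order forget, input, candidate, output is an assumption of this sketch (libraries such as PyTorch and Keras use their own orderings), and the sizes and random weights are purely illustrative:

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One time step of an LSTM cell with a forget gate.

    W has shape (4 * n_hidden, n_hidden + n_in) and stacks the forget, input,
    candidate and output blocks in that (assumed) order; b has shape (4 * n_hidden,).
    """
    n = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[0:n])           # forget gate: fraction of c_prev to keep
    i = sigmoid(z[n:2 * n])       # input gate: fraction of the candidate to write
    g = np.tanh(z[2 * n:3 * n])   # candidate cell contents
    o = sigmoid(z[3 * n:4 * n])   # output gate: fraction of the cell to expose
    c = f * c_prev + i * g        # additive cell-state update
    h = o * np.tanh(c)            # new hidden state / output
    return h, c


# Illustrative sizes and random weights (hypothetical, untrained).
rng = np.random.default_rng(1)
n_in, n_hidden = 1, 3
W = rng.normal(scale=0.5, size=(4 * n_hidden, n_hidden + n_in))
b = np.zeros(4 * n_hidden)

h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x in [0.2, 0.5, -0.1]:  # hypothetical normalized wind-speed inputs
    h, c = lstm_step(np.array([x]), h, c, W, b)
print(h.shape, c.shape)  # (3,) (3,)
```

The additive update c = f * c_prev + i * g is the key design choice: when the forget gate stays close to 1, information and gradients can pass across many time steps without being repeatedly squashed, which is how the LSTM mitigates the vanishing-gradient problem.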

6. Classification of Wind Power Forecasting According to Time-Scales

7. Forecast Validation

7.1. Root Mean Square Error (RMSE)
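The RMSE is the square root of the mean squared difference between N observed values y_i and forecasts ŷ_i, RMSE = sqrt((1/N) Σ (y_i - ŷ_i)²), and is expressed in the units of the data. A minimal pure-Python sketch on made-up sample values:

```python
import math


def rmse(actual, predicted):
    """Root mean square error of a forecast, in the units of the data."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


# Made-up observed and forecast wind speeds (errors of 1, 0 and -2).
observed = [5.0, 6.0, 7.0]
forecast = [4.0, 6.0, 9.0]
print(rmse(observed, forecast))  # sqrt(5/3), about 1.291
```

Squaring before averaging weights large errors more heavily, so a few large misses raise the RMSE noticeably.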

7.2. Mean Absolute Error (MAE)
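The MAE averages the absolute forecast errors, MAE = (1/N) Σ |y_i - ŷ_i|, and is also expressed in the units of the data. A minimal pure-Python sketch on made-up sample values:

```python
def mae(actual, predicted):
    """Mean absolute error of a forecast, in the units of the data."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Made-up observed and forecast wind speeds (absolute errors of 1, 0 and 2).
observed = [5.0, 6.0, 7.0]
forecast = [4.0, 6.0, 9.0]
print(mae(observed, forecast))  # 1.0
```

On any data set the MAE is never larger than the RMSE; for the errors 1, 0 and 2 the MAE is 1.0 while the RMSE is about 1.291.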

8. Results

8.1. ARIMA

8.2. LSTM

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kerem, A.L.; Kirbas, I.; Saygın, A. Performance Analysis of Time Series Forecasting Models for Short Term Wind Speed Prediction. In Proceedings of the International Conference on Engineering and Natural Sciences (ICENS), Sarajevo, Bosnia and Herzegovina, 24–28 May 2016; pp. 2733–2739.

- Kirbas, I.; Kerem, A. Short-term wind speed prediction based on artificial neural network models. Meas. Control. 2016, 49, 183–190.

- Hanifi, S.; Liu, X.; Lin, Z.; Lotfian, S. A critical review of wind power forecasting methods—Past, present and future. Energies 2020, 13, 3764.

- Narayana, M.; Putrus, G.; Jovanovic, M.; Leung, P.S. Predictive control of wind turbines by considering wind speed forecasting techniques. In Proceedings of the 2009 44th International Universities Power Engineering Conference (UPEC), Glasgow, UK, 1 September 2009; pp. 1–4.

- National Research Council. Electricity from Renewable Resources: Status, Prospects, and Impediments; National Academies Press: Washington, DC, USA, 2010; pp. 65–73.

- Lydia, M.; Kumar, S.S. A comprehensive overview on wind power forecasting. In Proceedings of the 2010 Conference Proceedings IPEC, Singapore, 27 October 2010; pp. 268–273.

- Lee, D.; Baldick, R. Short-term wind power ensemble prediction based on Gaussian processes and neural networks. IEEE Trans. Smart Grid 2013, 5, 501–510.

- Chandra, R.; Goyal, S.; Gupta, R. Evaluation of deep learning models for multi-step ahead time series prediction. IEEE Access 2021, 9, 83105–83123.

- Liu, H.; Mi, X.; Li, Y. Wind speed forecasting method based on deep learning strategy using empirical wavelet transform, long short-term memory neural network and Elman neural network. Energy Convers. Manag. 2018, 156, 498–514.

- Adebiyi, A.A.; Adewumi, A.O.; Ayo, C.K. Comparison of ARIMA and artificial neural networks models for stock price prediction. J. Appl. Math. 2014, 2014, 614342.

- Madhiarasan, M. Accurate prediction of different forecast horizons wind speed using a recursive radial basis function neural network. Prot. Control. Mod. Power Syst. 2020, 5, 22.

- Elsaraiti, M.; Merabet, A.; Al-Durra, A. Time Series Analysis and Forecasting of Wind Speed Data. In Proceedings of the 2019 IEEE Industry Applications Society Annual Meeting, Baltimore, MD, USA, 29 September–3 October 2019; pp. 1–5.

- Grigonytė, E.; Butkevičiūtė, E. Short-term wind speed forecasting using ARIMA model. Energetika 2016, 62, 45–55.

- Meyler, A.; Kenny, G.; Quinn, T. Forecasting Irish Inflation Using ARIMA Models; Munich Personal RePEc Archive: Munich, Germany, 1998.

- Kam, K.M. Stationary and Non-Stationary Time Series Prediction Using State Space Model and Pattern-Based Approach; The University of Texas at Arlington: Arlington, TX, USA, 2014.

- Tian, Z.; Wang, G.; Ren, Y. Short-term wind speed forecasting based on autoregressive moving average with echo state network compensation. Wind. Eng. 2020, 44, 152–167.

- Prema, V.; Sarkar, S.; Rao, K.U.; Umesh, A. LSTM based deep learning model for accurate wind speed prediction. ICTACT J. Data Sci. Mach. Learn. 2019, 1, 6–11.

- Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2222–2232.

- Goyal, A.; Krishnamurthy, S.; Kulkarni, S.; Kumar, R.; Vartak, M.; Lanham, M.A. A solution to forecast demand using long short-term memory recurrent neural networks for time series forecasting. In Proceedings of the Midwest Decision Sciences Institute Conference, Indianapolis, IN, USA, 12–14 April 2018.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Hochreiter, S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 1998, 6, 107–116.

- Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

- Salman, A.G.; Heryadi, Y.; Abdurahman, E.; Suparta, W. Weather Forecasting Using Merged Long Short-Term Memory Model (LSTM) and Autoregressive Integrated Moving Average (ARIMA) Model. J. Comput. Sci. 2018, 14, 930–938.

- Masum, S.; Liu, Y.; Chiverton, J. Multi-step time series forecasting of electric load using machine learning models. In Proceedings of the International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 3–7 June 2018; pp. 148–159.

- Wu, W.; Chen, K.; Qiao, Y.; Lu, Z. Probabilistic short-term wind power forecasting based on deep neural networks. In Proceedings of the 2016 International Conference on Probabilistic Methods Applied to Power Systems (PMAPS), Beijing, China, 16 October 2016; pp. 1–8.

- Karakoyun, E.S.; Cibikdiken, A.O. Comparison of ARIMA time series model and LSTM deep learning algorithm for Bitcoin price forecasting. In Proceedings of the 13th Multidisciplinary Academic Conference, Prague, Czech Republic, 24 May 2018; Volume 2018, pp. 171–180.

- Sandhu, K.S.; Nair, A.R. A comparative study of ARIMA and RNN for short term wind speed forecasting. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6 July 2019; pp. 1–7.

- Xie, A.; Yang, H.; Chen, J.; Sheng, L.; Zhang, Q. A Short-Term Wind Speed Forecasting Model Based on a Multi-Variable Long Short-Term Memory Network. Atmosphere 2021, 12, 651.

- Werngren, S. Comparison of Different Machine Learning Models for Wind Turbine Power Predictions; Uppsala University: Uppsala, Sweden, 2018.

- Karapanagiotidis, P. Dynamic State-Space Models; University of Toronto: Toronto, ON, Canada, 2014.

- Newbold, P. ARIMA model building and the time series analysis approach to forecasting. J. Forecast. 1983, 2, 23–35.

- Şeyda Çorba, B.; Pelin, K. Wind Speed and Direction Forecasting Using Artificial Neural Networks and Autoregressive Integrated Moving Average Methods. Am. J. Eng. Res. 2018, 7, 240–250.

- Gill, N.S. Artificial Neural Networks Applications and Algorithms. 2019. Available online: https://www.xenonstack.com/blog/artificial-neural-network-applications (accessed on 7 April 2021).

- Sharma, S.; Sharma, S. Activation functions in neural networks. Towards Data Sci. 2017, 6, 310–316.

- Garver, M.S. Using data mining for customer satisfaction research. Mark. Res. 2002, 14, 8.

- Soman, S.S.; Zareipour, H.; Malik, O.; Mandal, P. A review of wind power and wind speed forecasting methods with different time horizons. In Proceedings of the North American Power Symposium, Arlington, TX, USA, 26 September 2010; pp. 1–8.

- Foley, A.M.; Leahy, P.G.; Marvuglia, A.; McKeogh, E.J. Current methods and advances in forecasting of wind power generation. Renew. Energy 2012, 37, 1–8.

| Timescale | Range Based on Soman et al. (2010) | Range Based on Foley et al. (2012) |
|---|---|---|
| Short term | 30 min to 6 h ahead | 1–72 h ahead |
| Medium term | 6 h to 1 day ahead | 3–7 days ahead |
| Long term | 1 day ahead to 1 week or more ahead | Multiple days ahead |
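The table can be read as a lookup from forecast horizon to category. A minimal Python sketch using the Soman et al. (2010) column follows; treating each range as a half-open interval (so exactly 6 h counts as medium term and exactly 24 h as long term) is an assumption, because the table does not say where the boundaries belong, and horizons under 30 min fall outside its categories:

```python
from typing import Optional


def soman_timescale(horizon_hours: float) -> Optional[str]:
    """Map a forecast horizon (in hours) to the Soman et al. (2010) categories.

    Half-open intervals are an assumption: [0.5, 6) short, [6, 24) medium,
    24 h and beyond long. Horizons under 30 min are outside the table -> None.
    """
    if horizon_hours < 0.5:
        return None
    if horizon_hours < 6:
        return "short term"
    if horizon_hours < 24:
        return "medium term"
    return "long term"


for hours in (0.25, 1, 6, 12, 48):
    print(hours, soman_timescale(hours))
```

The two columns disagree: a 48 h horizon is long term under Soman et al. but short term under Foley et al., whose short-term range extends to 72 h.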

| MA (q) \ AR (p) | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 6.6490 | 4.4601 | 4.4275 | 4.4101 |
| 1 | 4.4149 | 4.4101 | 4.2975 | 4.2912 |
| 2 | 4.4037 | 4.4037 | 4.2904 | 4.2996 |
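Assuming that lower values in the grid indicate a better model (as for an information criterion or an error measure; the metric in the cells is not named in the table), selecting the ARIMA orders reduces to a minimum search over (p, q). A Python sketch with the values transcribed from the table:

```python
# Cell values transcribed from the (p, q) grid: rows are MA order q,
# columns are AR order p = 0..3. Lower is assumed to be better.
grid = {
    0: [6.6490, 4.4601, 4.4275, 4.4101],
    1: [4.4149, 4.4101, 4.2975, 4.2912],
    2: [4.4037, 4.4037, 4.2904, 4.2996],
}

candidates = [(p, q) for q, row in grid.items() for p in range(len(row))]
best_p, best_q = min(candidates, key=lambda pq: grid[pq[1]][pq[0]])
print(f"lowest value {grid[best_q][best_p]} at p={best_p}, q={best_q}")
# lowest value 4.2904 at p=2, q=2
```

Under that assumption the search selects p = 2, q = 2, although the neighbouring order (p = 3, q = 1) at 4.2912 is within 0.001 of it, so the margin between candidate orders is small.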

| Model | RMSE | MAE |
|---|---|---|
| ARIMA | 3.423 | 2.772 |
| LSTM | 3.124 | 2.457 |
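The relative gain of the LSTM over ARIMA follows directly from the results table. A short Python calculation of the percentage reduction in each metric:

```python
# RMSE and MAE reported in the results table.
results = {
    "ARIMA": {"RMSE": 3.423, "MAE": 2.772},
    "LSTM": {"RMSE": 3.124, "MAE": 2.457},
}

reductions = {}
for metric in ("RMSE", "MAE"):
    baseline = results["ARIMA"][metric]
    reductions[metric] = 100 * (baseline - results["LSTM"][metric]) / baseline
    print(f"{metric}: LSTM is {reductions[metric]:.1f}% lower than ARIMA")
# RMSE: LSTM is 8.7% lower than ARIMA
# MAE: LSTM is 11.4% lower than ARIMA
```

With these figures the LSTM's RMSE is about 8.7% lower and its MAE about 11.4% lower than those of ARIMA.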

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Elsaraiti, M.; Merabet, A. A Comparative Analysis of the ARIMA and LSTM Predictive Models and Their Effectiveness for Predicting Wind Speed. Energies 2021, 14, 6782. https://doi.org/10.3390/en14206782
