Image Preprocessing for Outdoor Luminescence Inspection of Large Photovoltaic Parks

Abstract

1. Introduction

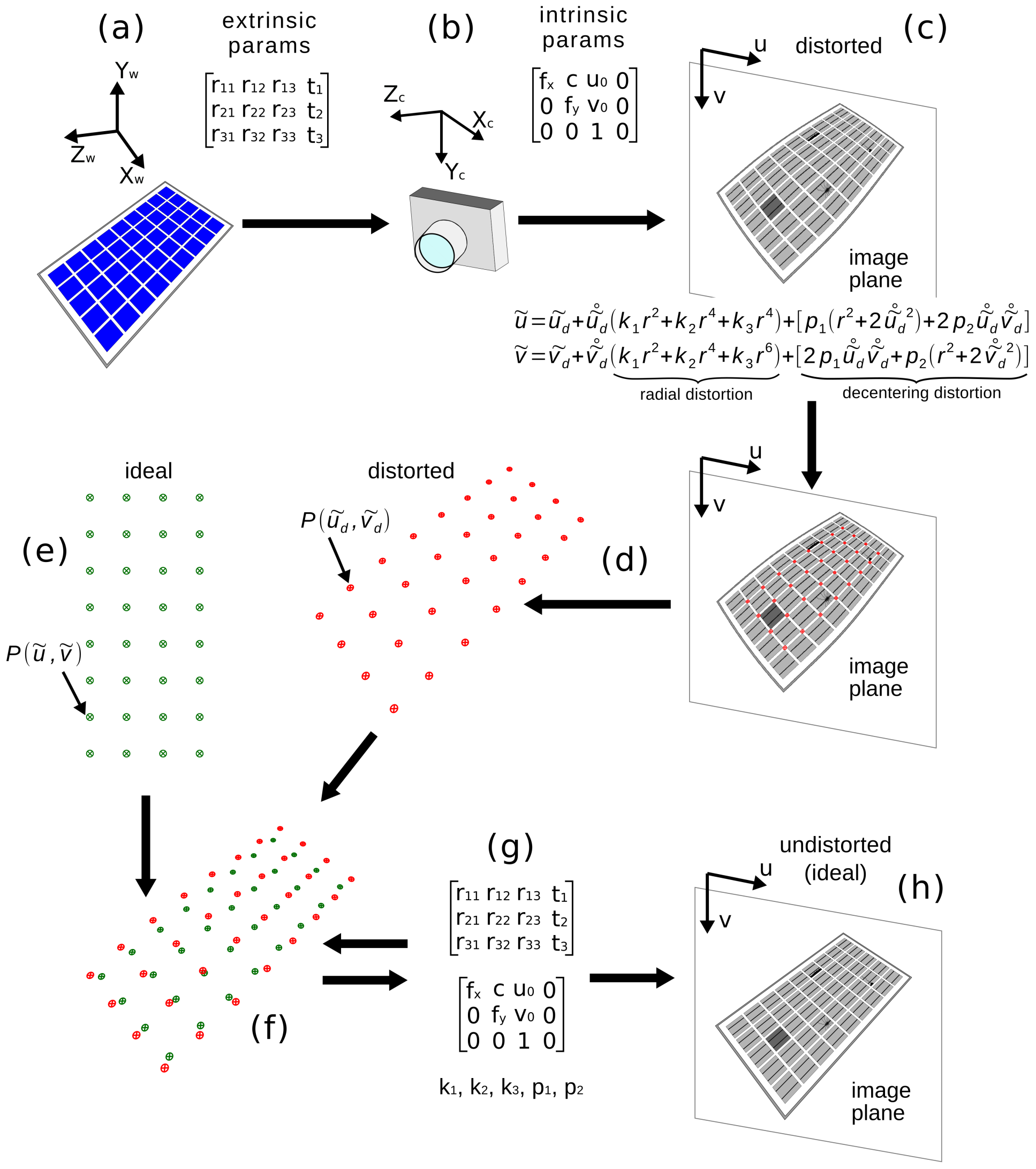

2. Calibration Principle

3. Pattern Detection Procedure

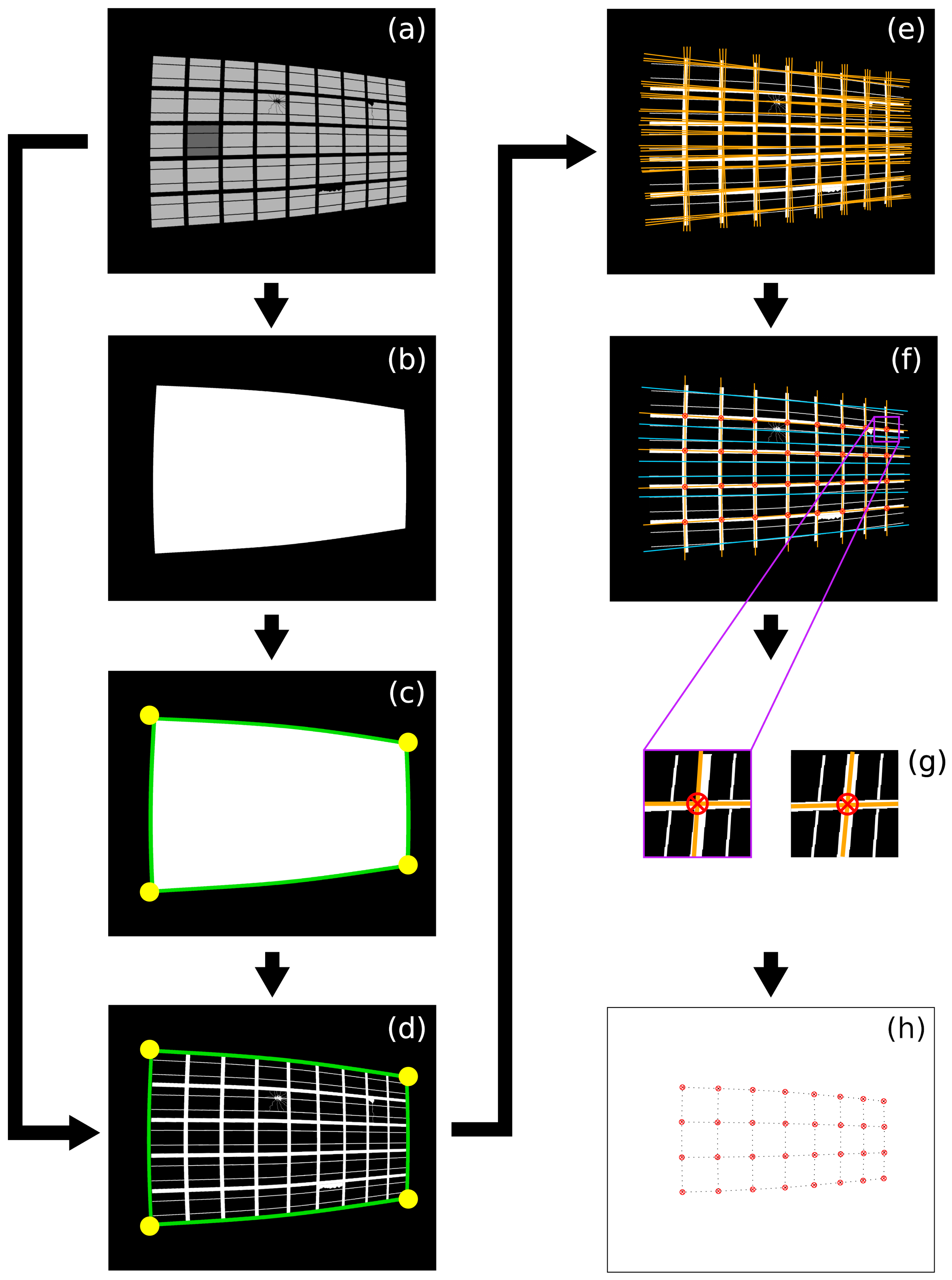

3.1. PV Module Corner Detection

3.2. Binarization for Cell Gap Detection

3.3. Identification of Line Structures

- Filter out straight lines whose angle and position do not match the perspective of the PV module.

- Reduce the multiple straight lines found for one curved line structure to the single straight line that represents it most closely.

- Distinguish between cell gap lines and busbar lines.

- Weight of each line: the sum of the distance-weighted pixel intensities.

- Intersection of lines: wherever two detected lines of the same cluster (long-to-long or short-to-short module edge) intersect, the line with the higher weight is assumed to be the valid line and is used in the subsequent steps.
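The angle filter, the line weight and the intersection rule above can be illustrated with a short Python sketch. This is a minimal example under stated assumptions, not the authors' implementation. Lines are modeled as pixel-coordinate segments. Line pixels are assumed to be bright, for example in an inverted binary mask. The "distance weighting" is assumed to be a linear falloff with the perpendicular pixel-to-segment distance inside a hypothetical band. The position filter, the merging of near-duplicate lines and the cell gap/busbar distinction are omitted. All function names and parameters (`filter_by_angle`, `line_weight`, `resolve_intersections`, `expected_deg`, `tol_deg`, `band`) are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class Line:
    p0: Point            # segment start (x, y) in pixels
    p1: Point            # segment end (x, y) in pixels
    weight: float = 0.0  # filled in by line_weight()

    def angle_deg(self) -> float:
        """Orientation of the segment in [0, 180) degrees."""
        return math.degrees(math.atan2(self.p1[1] - self.p0[1], self.p1[0] - self.p0[0])) % 180.0


def filter_by_angle(lines: Sequence[Line], expected_deg: float, tol_deg: float) -> List[Line]:
    """Keep lines whose orientation lies within tol_deg of the expected orientation (hypothetical criterion)."""
    kept = []
    for ln in lines:
        diff = abs(ln.angle_deg() - expected_deg) % 180.0
        if min(diff, 180.0 - diff) <= tol_deg:  # orientations wrap around at 180 degrees
            kept.append(ln)
    return kept


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the segment a-b."""
    vx, vy = b[0] - a[0], b[1] - a[1]
    seg_len2 = vx * vx + vy * vy
    if seg_len2 == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    t = max(0.0, min(1.0, ((p[0] - a[0]) * vx + (p[1] - a[1]) * vy) / seg_len2))
    return math.hypot(p[0] - (a[0] + t * vx), p[1] - (a[1] + t * vy))


def line_weight(line: Line, image: Sequence[Sequence[float]], band: float) -> float:
    """Sum of pixel intensities within `band` pixels of the segment, each scaled by
    (1 - d / band). The linear falloff is an ASSUMED form of the distance weighting.
    Brute force over all pixels for clarity."""
    total = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            d = point_segment_distance((x, y), line.p0, line.p1)
            if d < band:
                total += value * (1.0 - d / band)
    return total


def _orient(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle a-b-c; the sign gives the turn direction."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def segments_intersect(l1: Line, l2: Line) -> bool:
    """True if the two segments cross properly (touching and collinear cases are ignored)."""
    d1, d2 = _orient(l2.p0, l2.p1, l1.p0), _orient(l2.p0, l2.p1, l1.p1)
    d3, d4 = _orient(l1.p0, l1.p1, l2.p0), _orient(l1.p0, l1.p1, l2.p1)
    return d1 * d2 < 0 and d3 * d4 < 0


def resolve_intersections(cluster: Sequence[Line]) -> List[Line]:
    """Visit lines by descending weight; drop any line that crosses an already kept (heavier) line."""
    kept: List[Line] = []
    for ln in sorted(cluster, key=lambda item: item.weight, reverse=True):
        if not any(segments_intersect(ln, k) for k in kept):
            kept.append(ln)
    return kept


# Toy example (hypothetical data): a 5 x 5 mask whose column x = 2 holds line pixels.
image = [[0, 0, 1, 0, 0] for _ in range(5)]
candidates = [
    Line((2, 0), (2, 4)),      # lies on the bright column
    Line((1.7, 0), (2.3, 4)),  # slightly tilted, crosses the first line
    Line((4, 0), (4, 4)),      # parallel, on a dark column
    Line((0, 0), (4, 4)),      # 45 degrees, wrong orientation for this cluster
]
cluster = filter_by_angle(candidates, expected_deg=90.0, tol_deg=10.0)
for ln in cluster:
    ln.weight = line_weight(ln, image, band=1.0)
valid = resolve_intersections(cluster)
print([(ln.p0, ln.p1, round(ln.weight, 2)) for ln in valid])
```

Visiting the lines in descending order of weight is one way to apply the pairwise "higher weight wins" rule to a whole cluster: a line is discarded as soon as it crosses a heavier line that has already been accepted. In the toy example the 45° segment fails the angle filter. The tilted segment loses to the vertical segment it crosses.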

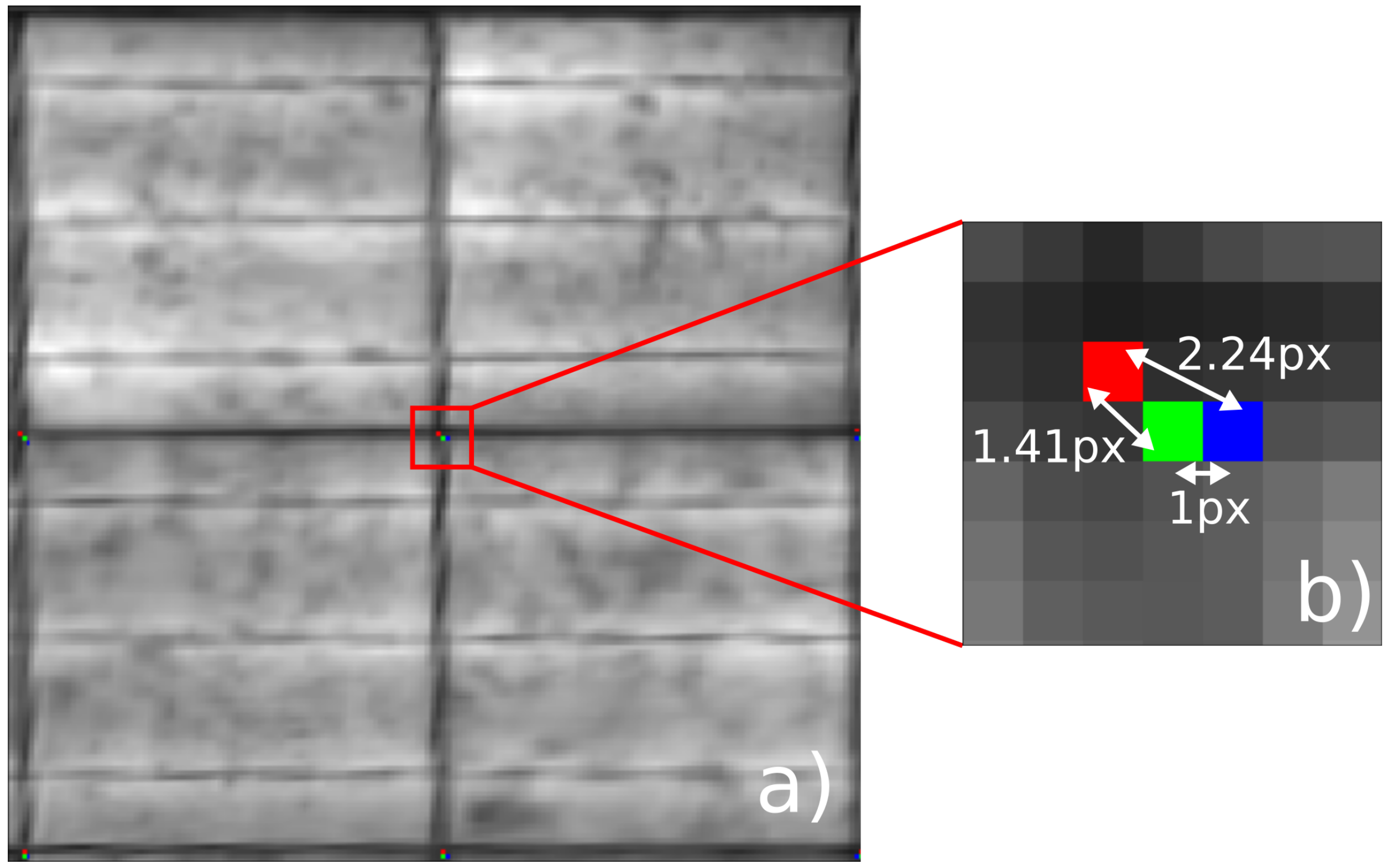

3.4. Precise Cell Gap Joint (CGJ) Search in ROI

4. Experiment

4.1. Setup

4.2. Results

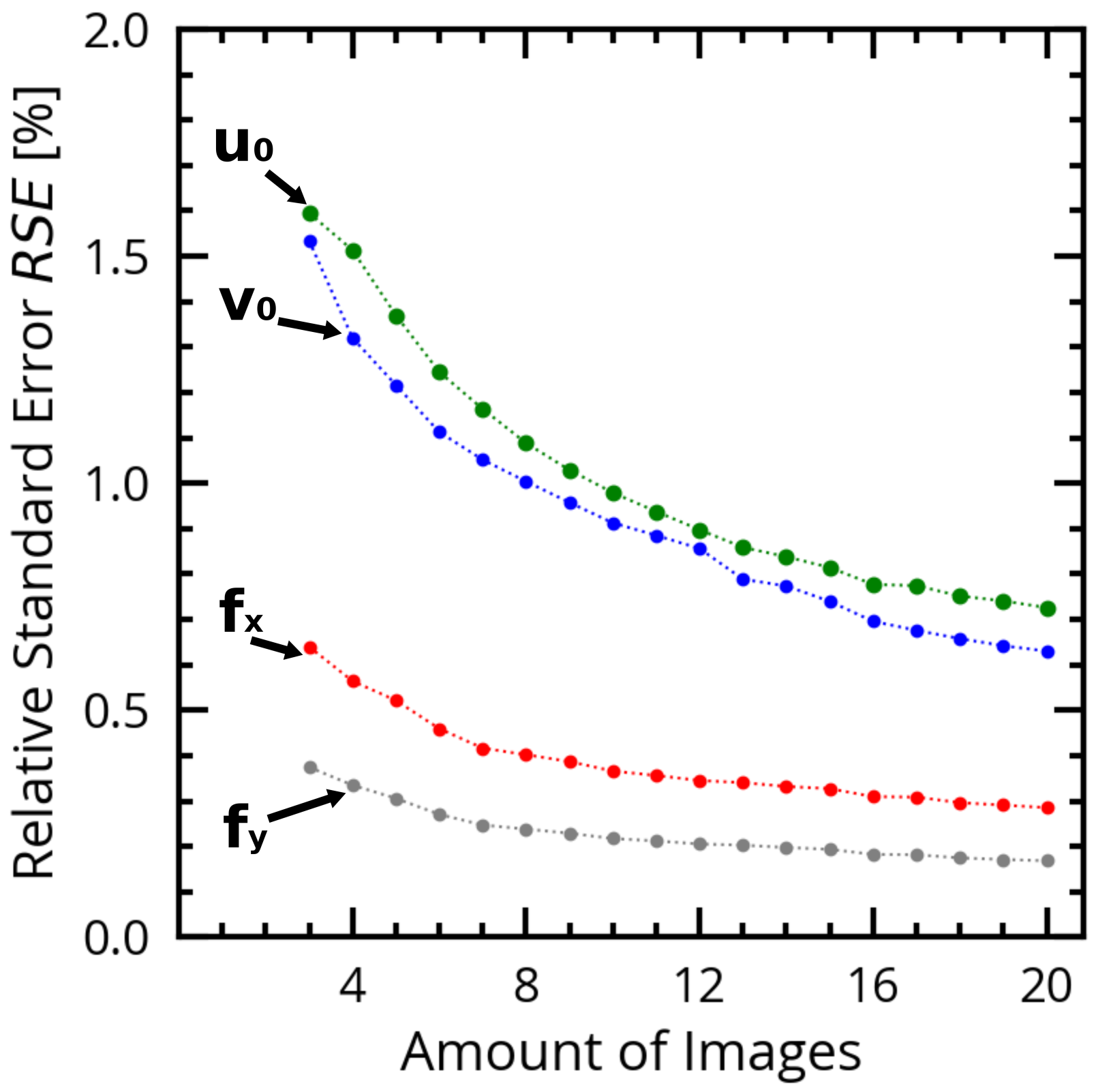

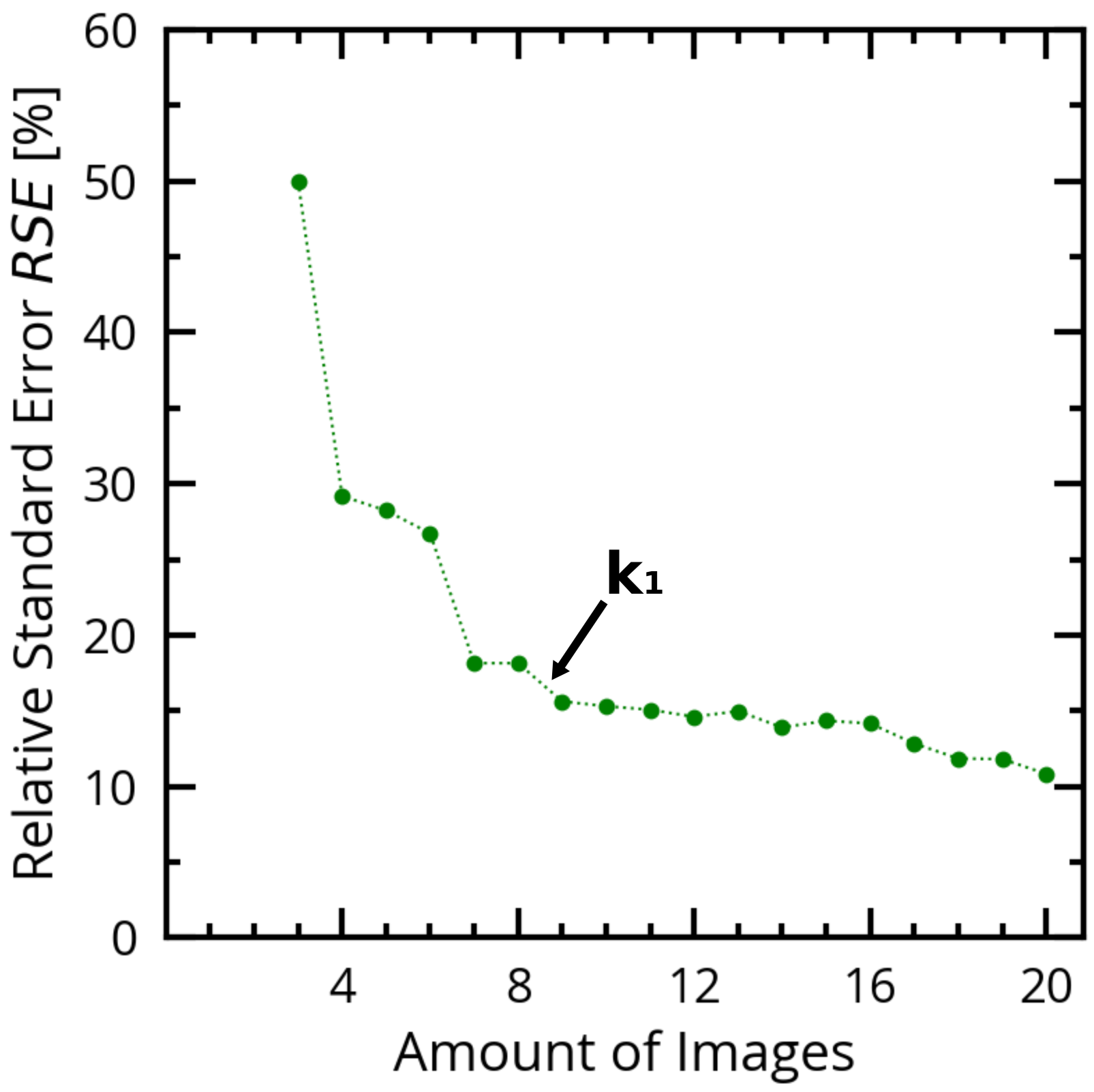

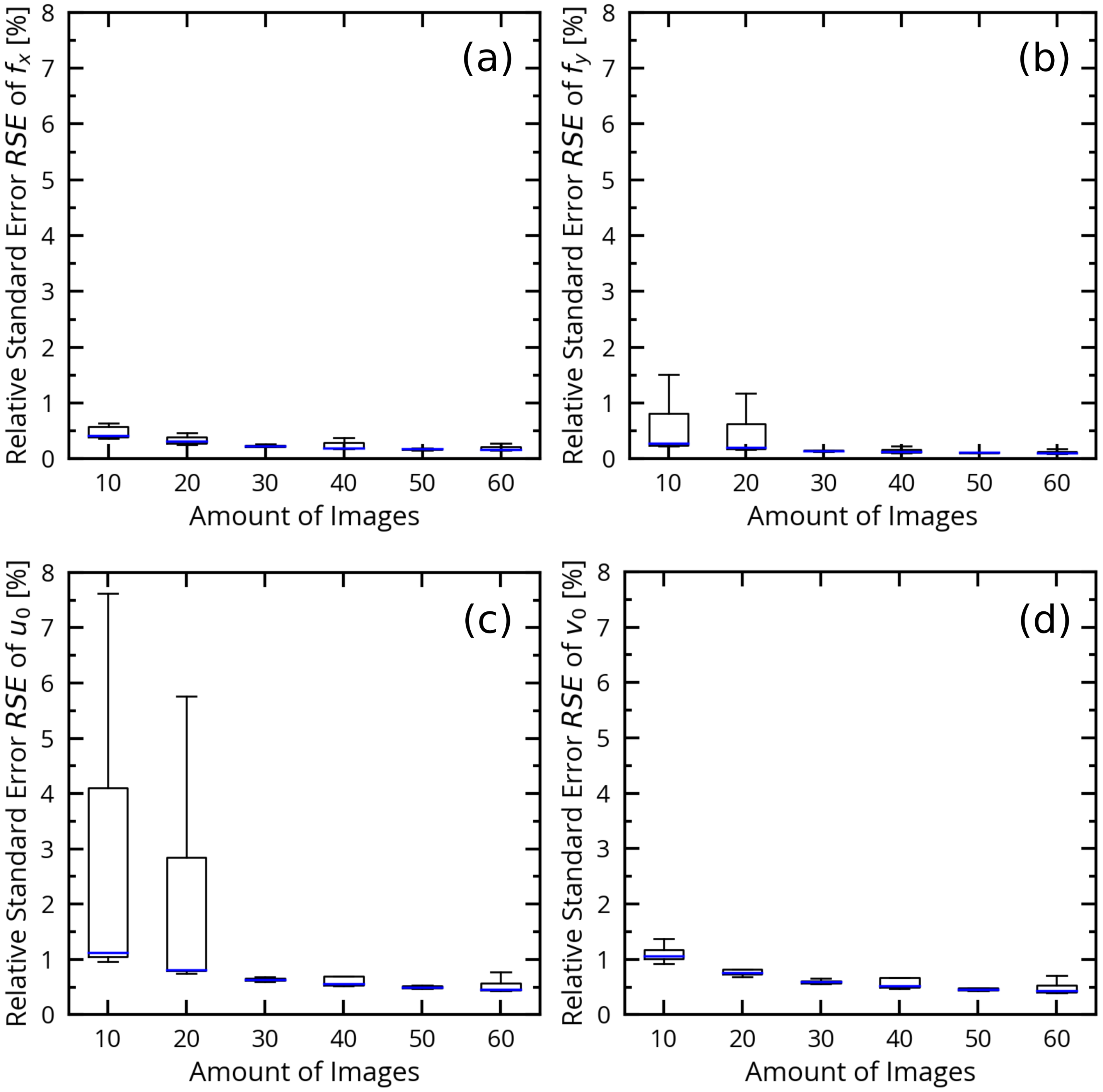

4.2.1. Experiment 1—Parameter Estimation Quality Depending on the Amount of Image Planes

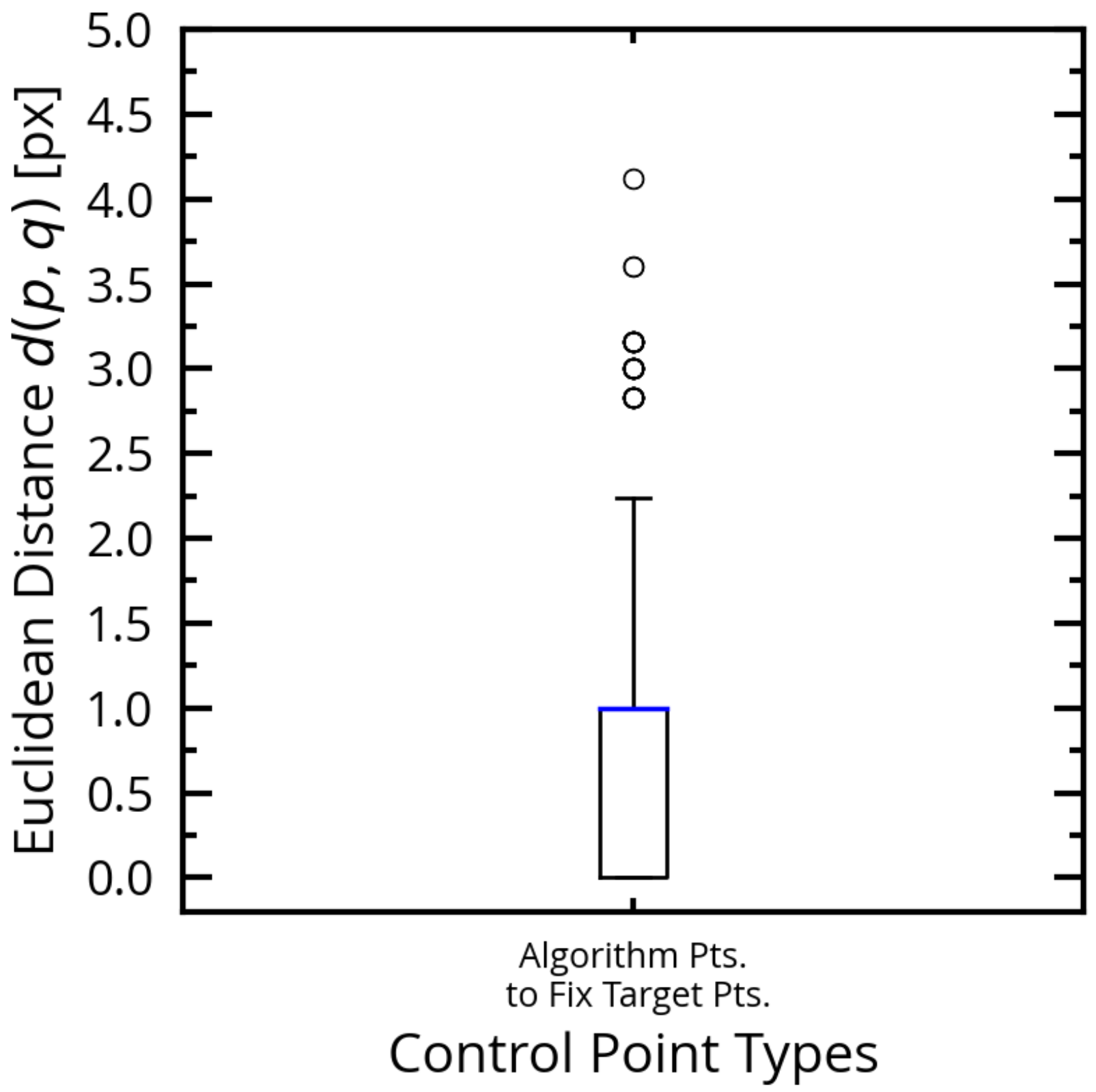

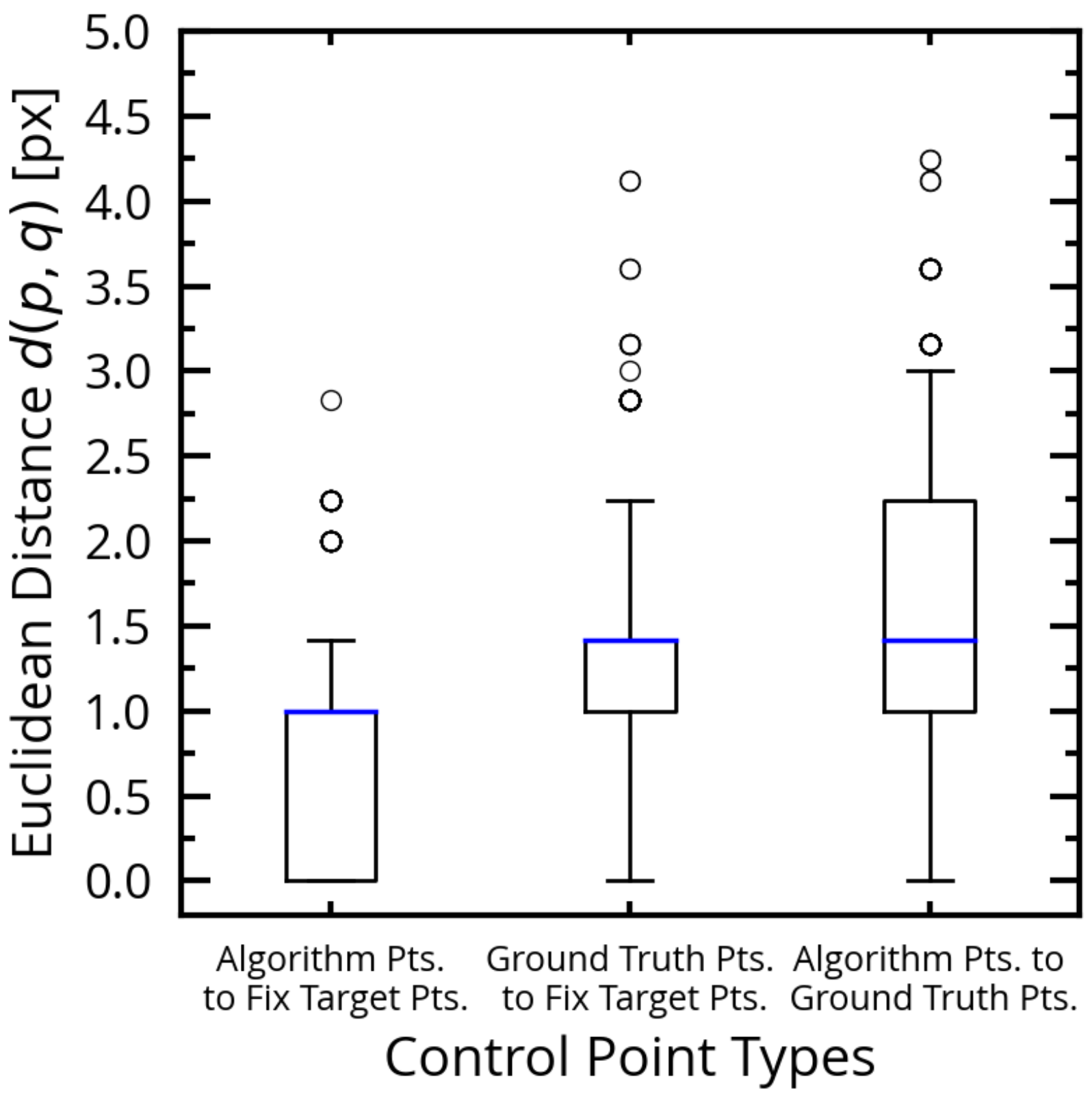

4.2.2. Experiment 2—Calibration Quality for Large On-Field Datasets

Quality Evaluation Metric

- Target points: ideal equidistant grid points in the undistorted, perspective-transformed image (the targets of the perspective transformation)

- Source points: CGJ points as detected in the distorted image, then undistorted and perspective-transformed

- Ground truth: CGJ points labeled by an expert in the distortion-corrected, perspective-transformed image (the real location of each CGJ after correction)
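The three point sets above can be compared point by point. The following minimal Python sketch assumes a one-to-one correspondence between the sets and uses the Euclidean distance of each corresponding pair as the error. It summarizes the errors by their mean, the standard error (SE) and the relative standard error (RSE) listed in the abbreviations. The pairings shown (source vs. ground truth, target vs. ground truth), the function names `point_errors` and `summarize`, and the coordinates are hypothetical illustrations. The metric used in the experiment may be defined differently.

```python
import math
from statistics import mean, stdev
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def point_errors(a: Sequence[Point], b: Sequence[Point]) -> List[float]:
    """Euclidean distance between corresponding points of two equally long point sets."""
    if len(a) != len(b):
        raise ValueError("point sets must correspond one-to-one")
    return [math.dist(p, q) for p, q in zip(a, b)]


def summarize(errors: Sequence[float]) -> Dict[str, float]:
    """Mean error, standard error SE = s / sqrt(n), and relative standard error RSE = SE / mean."""
    m = mean(errors)
    se = stdev(errors) / math.sqrt(len(errors)) if len(errors) > 1 else float("nan")
    rse = se / m if m else float("nan")
    return {"mean": m, "se": se, "rse": rse}


# Hypothetical 2 x 2 CGJ grid in the corrected image (pixel coordinates).
target = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0), (100.0, 100.0)]        # ideal equidistant grid
source = [(0.0, 4.0), (100.0, 0.0), (0.0, 101.0), (106.0, 100.0)]        # detected CGJs after correction
ground_truth = [(3.0, 4.0), (100.0, 2.0), (0.0, 100.0), (106.0, 108.0)]  # expert labels

# Assumed pairing 1: distance of the corrected detections from the true CGJ positions.
detection = summarize(point_errors(source, ground_truth))
# Assumed pairing 2: distance of the true CGJ positions from the ideal grid after correction.
calibration = summarize(point_errors(target, ground_truth))
print("detection:", detection)
print("calibration:", calibration)
```

Keeping the two pairings separate distinguishes two error sources. The first pairing measures how precisely the CGJs are located. The second measures how well the distortion and perspective correction maps the real CGJs onto the ideal grid.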

Self-Calibration Quality

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| DUT | Device Under Test |
| EL | Electroluminescence |
| CGJ | Cell Gap Joints |
| KF | Key Figures |
| ML | Machine Learning |
| PV | Photovoltaic |
| PID | Potential-Induced Degradation |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| ROI | Region of Interest |
| SE | Standard Error |
| RSE | Relative Standard Error |

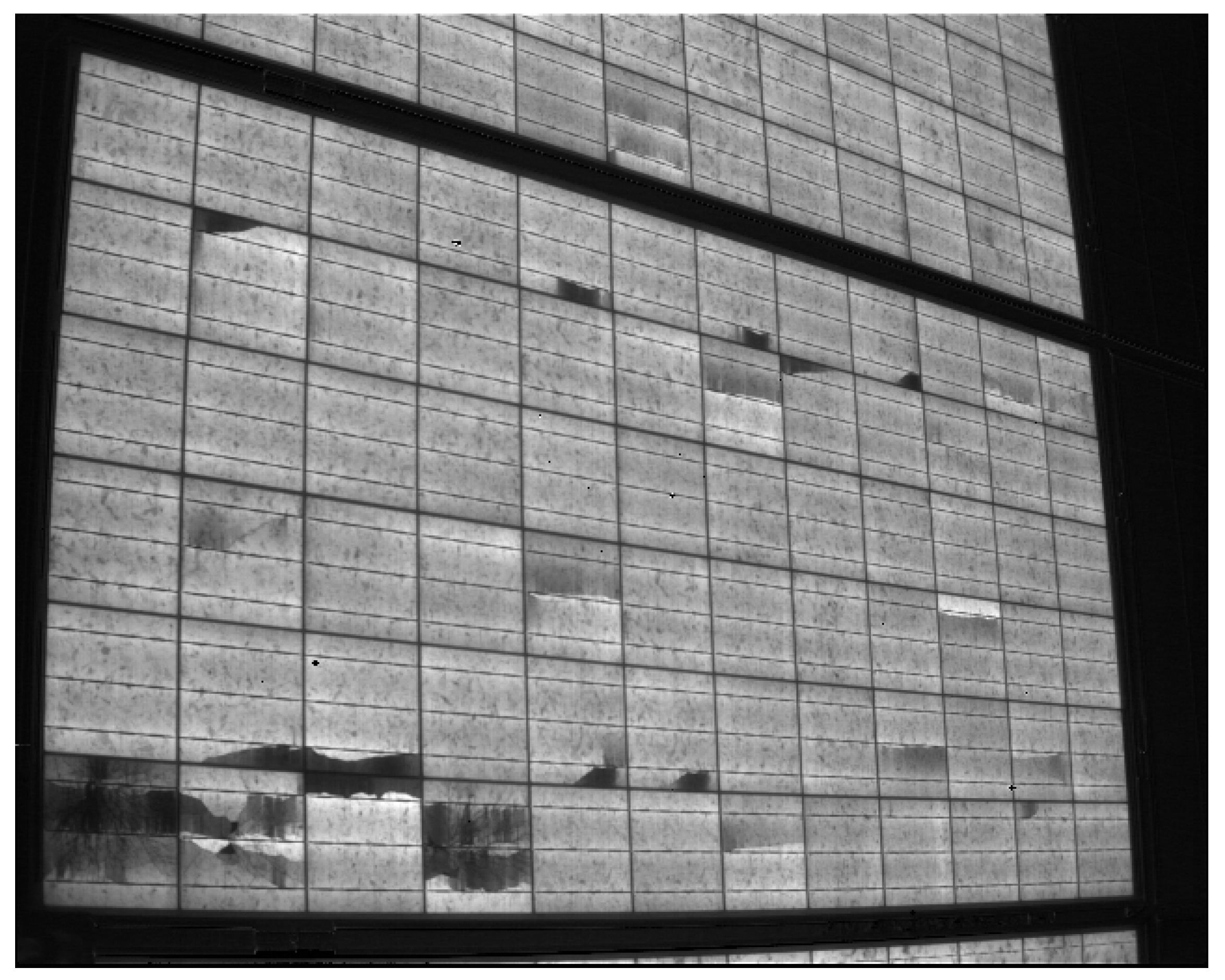

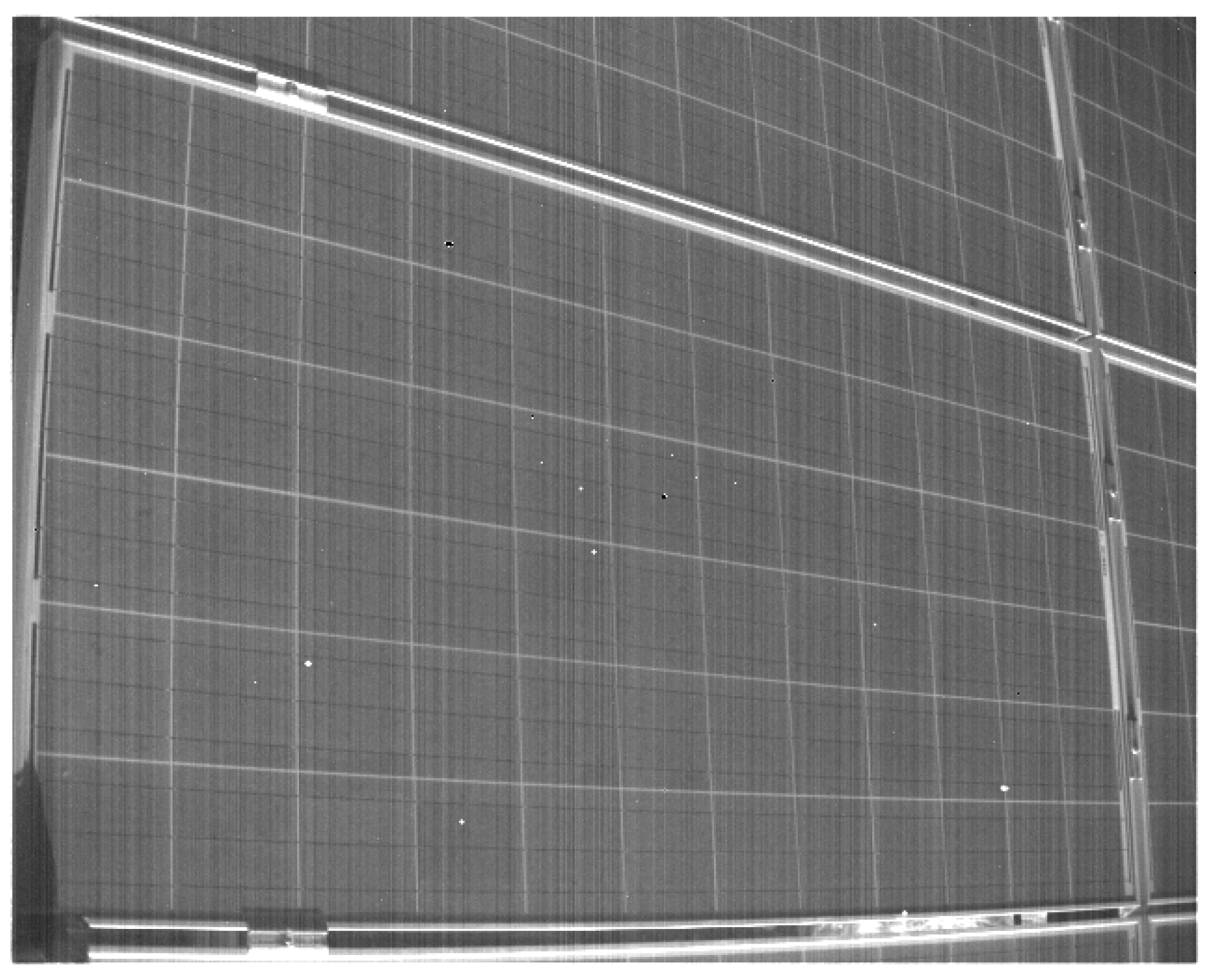

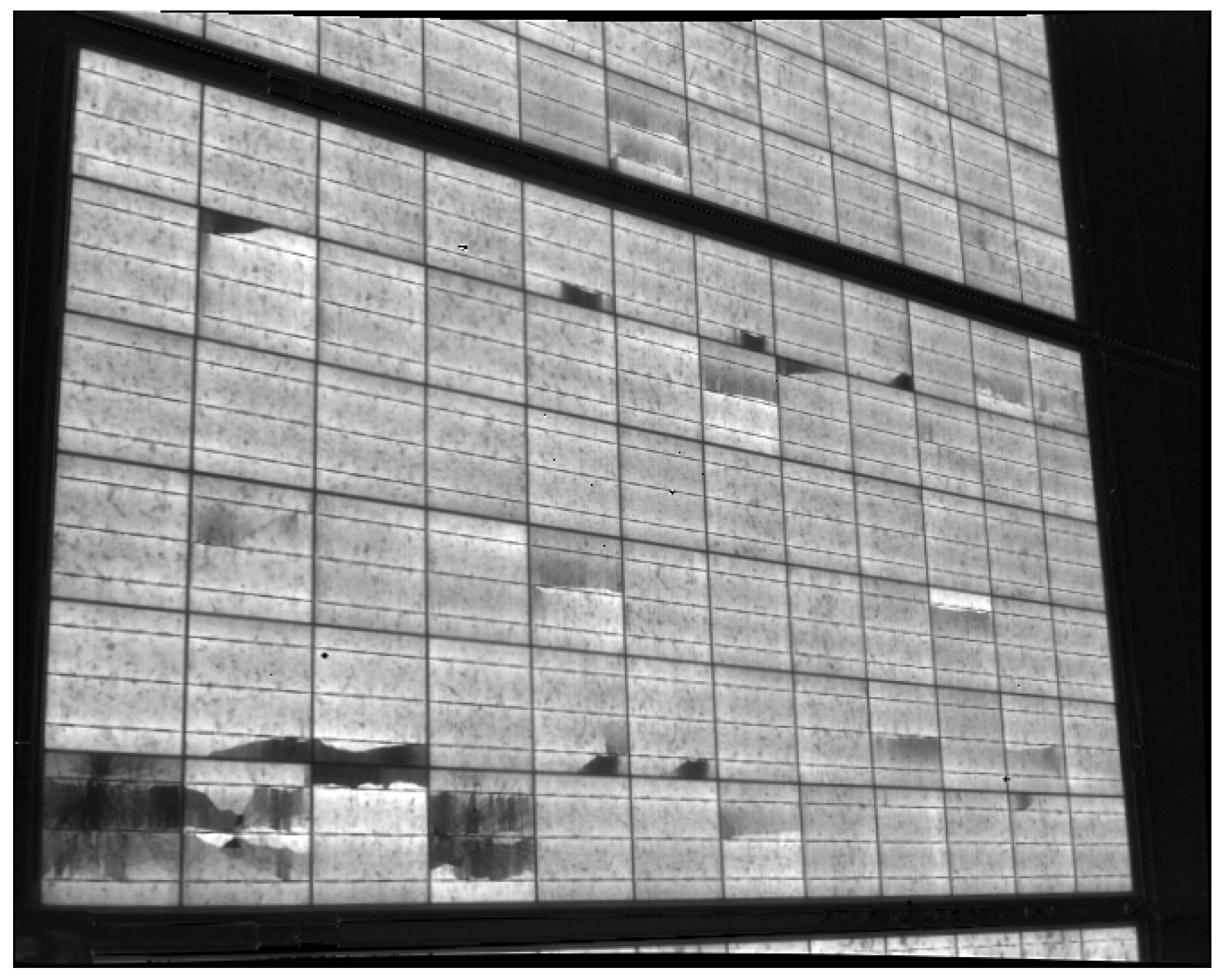

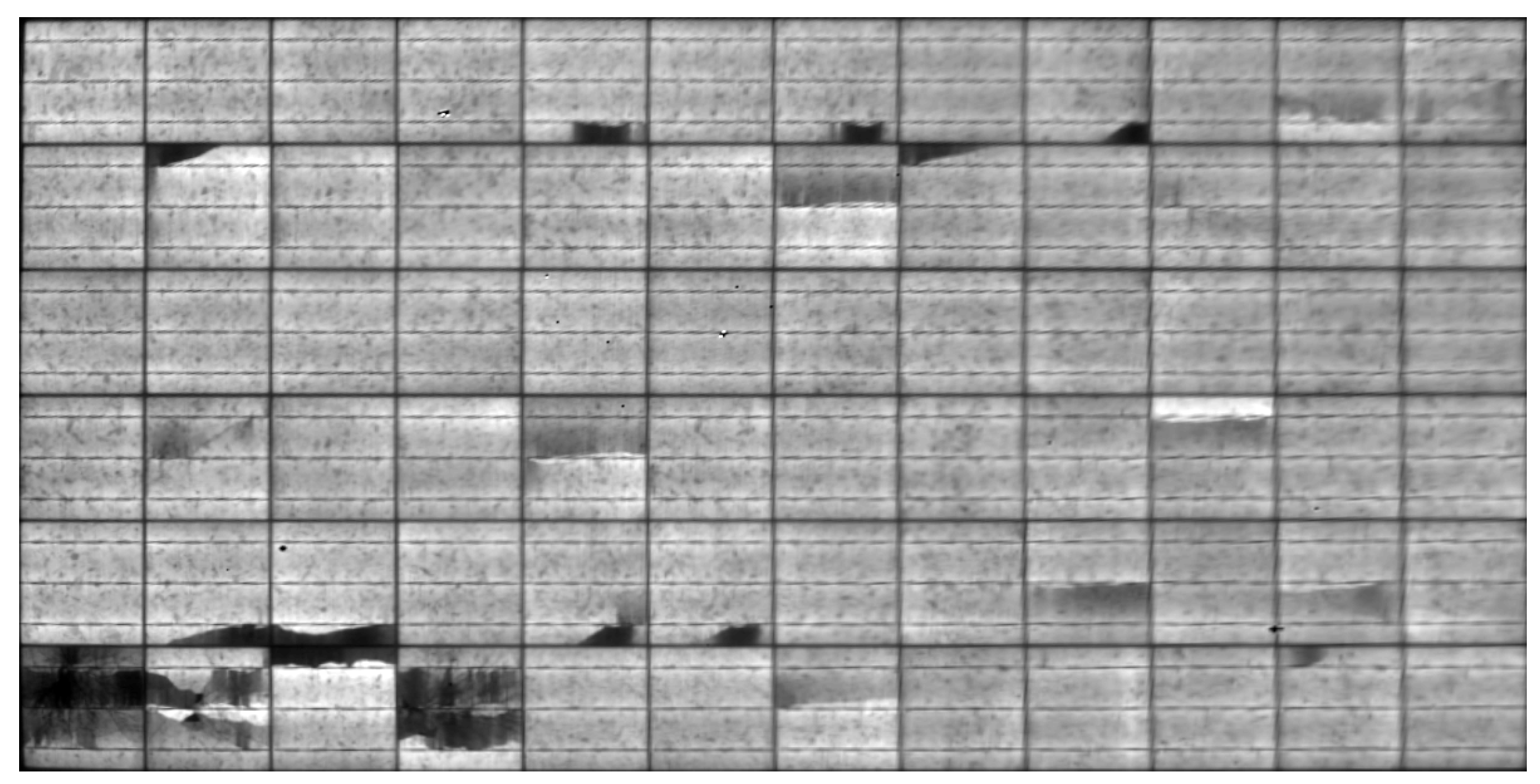

Appendix A. Images after the Processing Steps



© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kölblin, P.; Bartler, A.; Füller, M. Image Preprocessing for Outdoor Luminescence Inspection of Large Photovoltaic Parks. Energies 2021, 14, 2508. https://doi.org/10.3390/en14092508
