A Synthetic Approach for Datacenter Power Consumption Regulation towards Specific Targets in Smart Grid Environment

Abstract

1. Introduction

2. Related Work

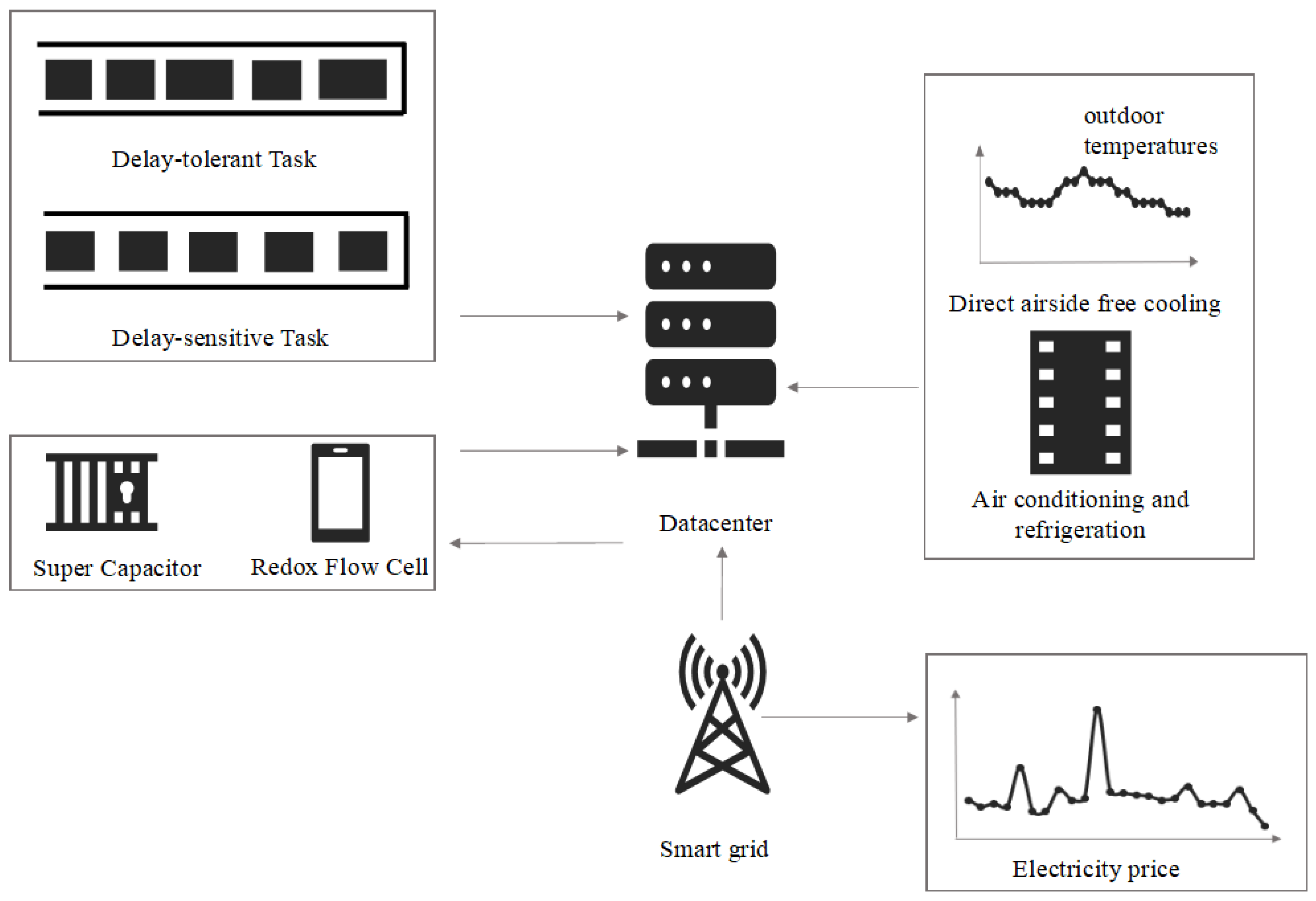

3. System Model

3.1. System Architecture

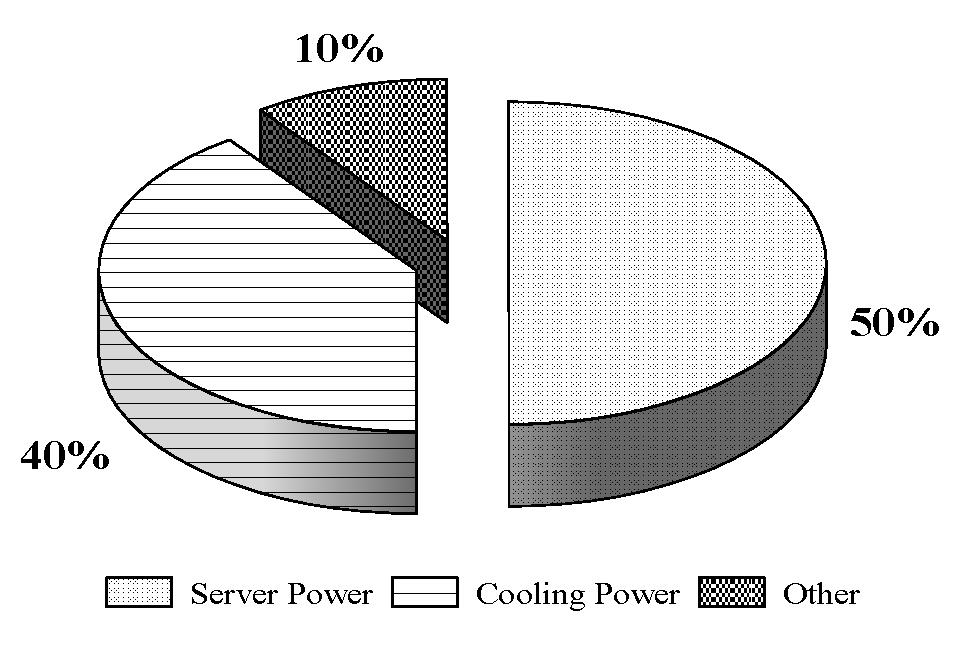

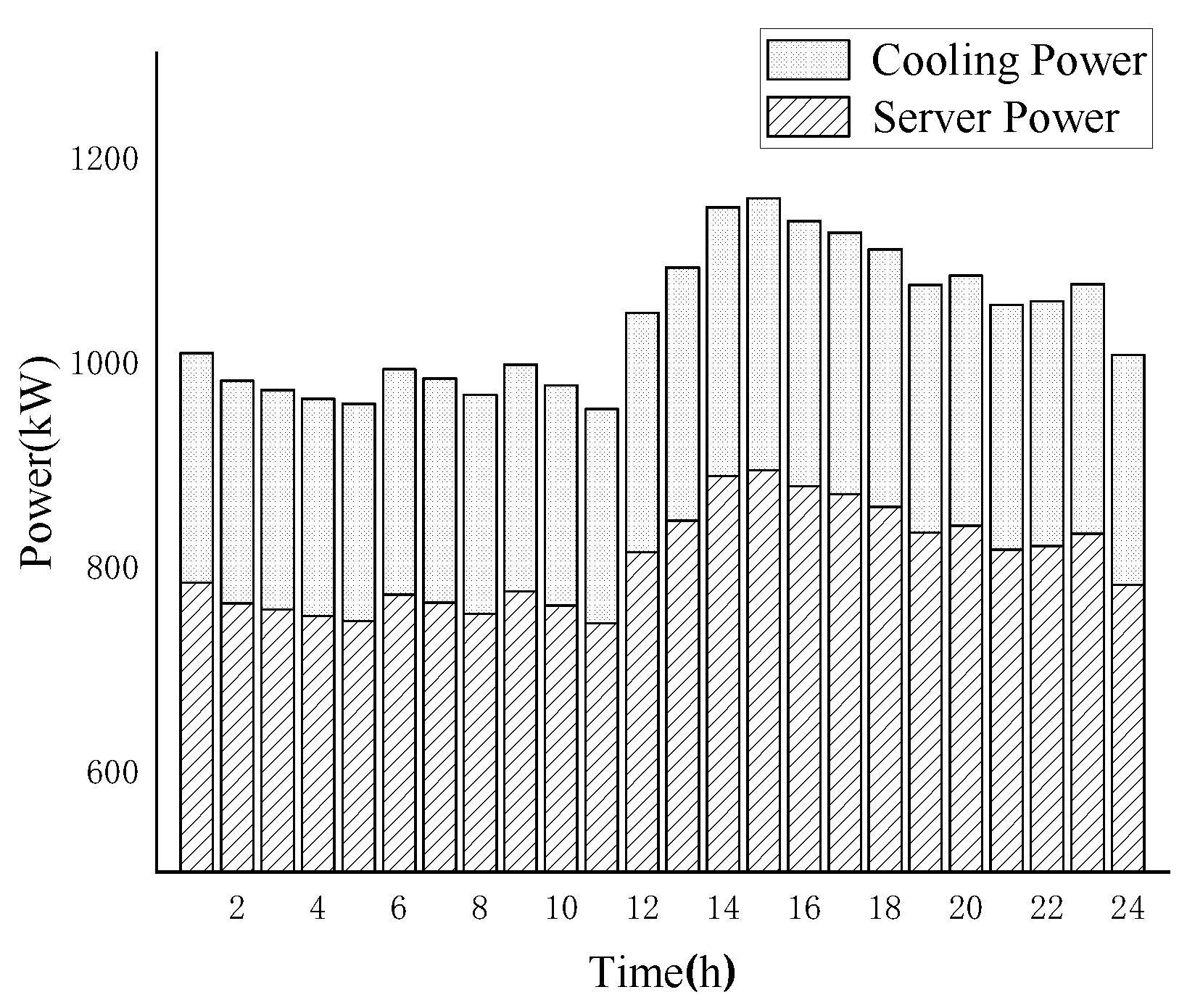

3.2. Modeling Datacenter Power Consumption

3.3. Modeling Energy Storage Devices

3.4. Task Scheduling and Frequency Scaling Models

3.4.1. Task Scheduling Model

3.4.2. Frequency Scaling Model

3.5. Modeling the Cooling System

3.5.1. Air Conditioning Cooling Model

3.5.2. Direct Airside Free Cooling Model

3.6. Cost Model

3.6.1. Operating Cost Model

3.6.2. Penalty Model

4. Problem Definition and Solution

4.1. Problem Definition

4.2. Solution to the Optimization Problem

4.2.1. Model Simplification

4.2.2. Design of Solution Approaches

1. Dynamic Optimal Scheduling Method (DOSM)

   - (a) Choose an appropriate penalty factor r(0), expected error ξ, and decline factor c;
   - (b) Select the initial point X(0) in the feasible region and set k = 0;
   - (c) Establish the penalty function and, starting from the point X(k−1), use an unconstrained optimization method to find the extreme point of the penalty function;
   - (d) Use the termination criterion to judge convergence; if the conditions are met, stop the iteration and take the extreme point found as the best point of the objective function; otherwise, update the penalty factor with the decline factor c, update the starting point, set k = k + 1, and go to (c).

2. Alternative Strategies.
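Steps (a) to (d) of the DOSM procedure follow the general shape of an interior penalty (barrier) method. The sketch below illustrates that iteration in Python under several assumptions that are not taken from the paper: an inverse barrier of the form φ(X, r) = f(X) − r·Σ 1/g_i(X) for constraints g_i(X) ≤ 0, a simple coordinate pattern search as the unconstrained minimizer, and a termination test on the distance between successive extreme points. The names `barrier`, `pattern_search`, `interior_penalty`, and the toy objective and constraints are hypothetical and stand in for the paper's cost model.

```python
import math
from typing import Callable, List, Sequence

Func = Callable[[Sequence[float]], float]


def barrier(f: Func, constraints: Sequence[Func], r: float) -> Func:
    """Assumed penalty function: phi(x, r) = f(x) - r * sum(1 / g_i(x)), g_i(x) <= 0."""
    def phi(x: Sequence[float]) -> float:
        total = f(x)
        for g in constraints:
            gx = g(x)
            if gx >= 0.0:  # on or outside the boundary: reject the point
                return math.inf
            total -= r / gx
        return total
    return phi


def pattern_search(phi: Func, x0: Sequence[float],
                   step: float = 0.5, tol: float = 1e-8) -> List[float]:
    """Derivative-free coordinate search, a stand-in unconstrained minimizer."""
    x = list(x0)
    fx = phi(x)
    while step > tol:
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                trial = list(x)
                trial[i] += delta
                ft = phi(trial)
                if ft < fx:
                    x, fx = trial, ft
                    improved = True
        if not improved:
            step *= 0.5  # refine the search once no neighbor improves
    return x


def interior_penalty(f: Func, constraints: Sequence[Func], x0: Sequence[float],
                     r0: float = 1.0, c: float = 0.1, xi: float = 1e-4,
                     max_iter: int = 50) -> List[float]:
    """Steps (a)-(d): r0 is the penalty factor, xi the expected error, c the decline factor."""
    x_prev = list(x0)          # (b) initial point inside the feasible region
    r = r0                     # (a) initial penalty factor
    for _ in range(max_iter):
        # (c) minimize the penalty function starting from the previous point
        x_new = pattern_search(barrier(f, constraints, r), x_prev)
        # (d) assumed termination criterion: successive extreme points are close
        if math.dist(x_new, x_prev) <= xi:
            return x_new
        x_prev, r = x_new, c * r   # shrink the penalty factor and iterate
    return x_prev


# Toy problem (hypothetical): minimize x0 + x1 subject to x0 >= 1 and x1 >= 0.
def objective(x: Sequence[float]) -> float:
    return x[0] + x[1]


def g1(x: Sequence[float]) -> float:
    return 1.0 - x[0]          # x0 >= 1 written as g1(x) <= 0


def g2(x: Sequence[float]) -> float:
    return -x[1]               # x1 >= 0 written as g2(x) <= 0


solution = interior_penalty(objective, [g1, g2], [3.0, 2.0])
print(solution)
```

For this toy problem the constrained optimum lies on the boundary at (1, 0), so the iterates approach it from inside the feasible region as the penalty factor shrinks. In practice a gradient-based minimizer would usually replace the pattern search; it is used here only to keep the sketch dependency-free.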

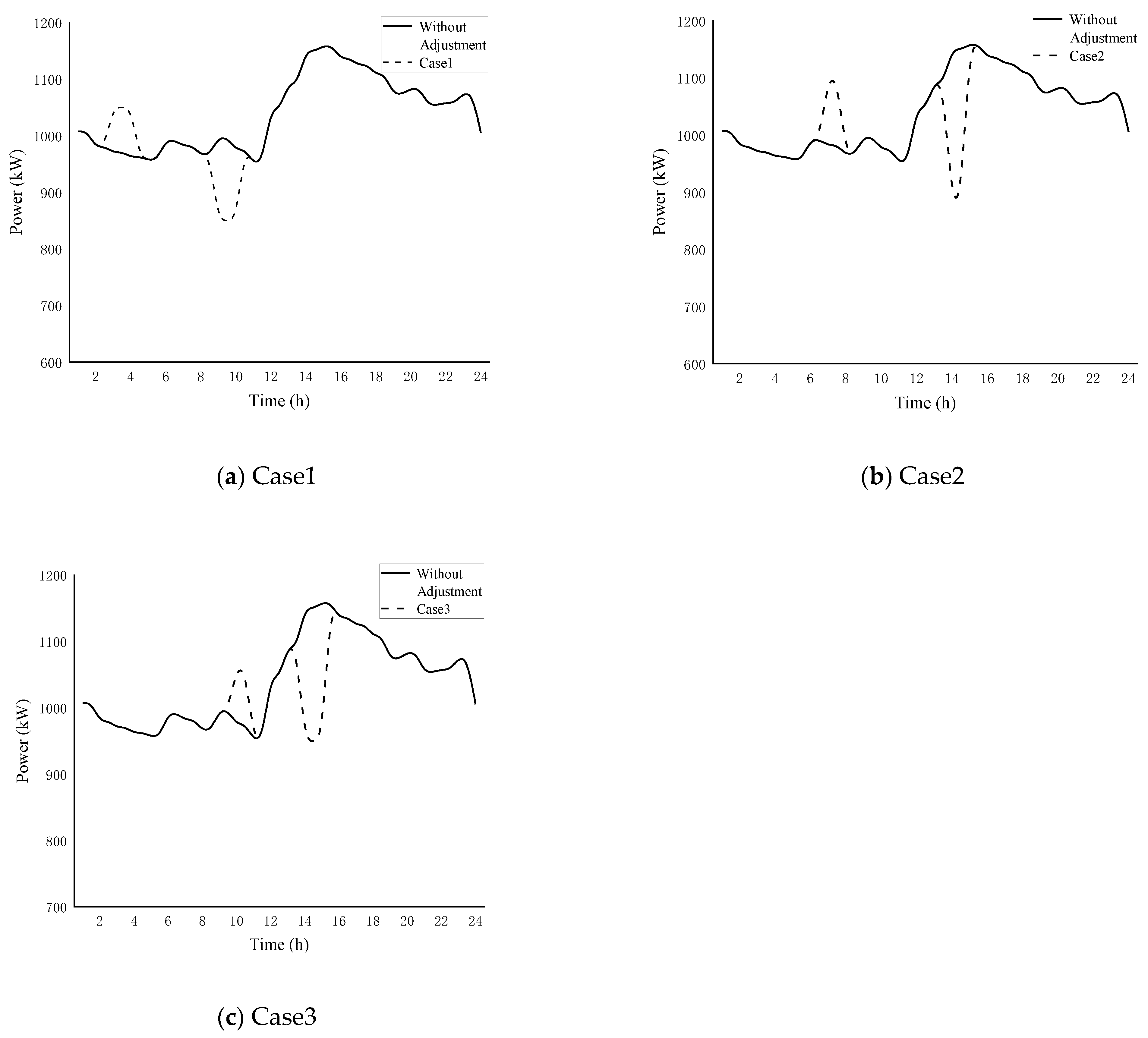

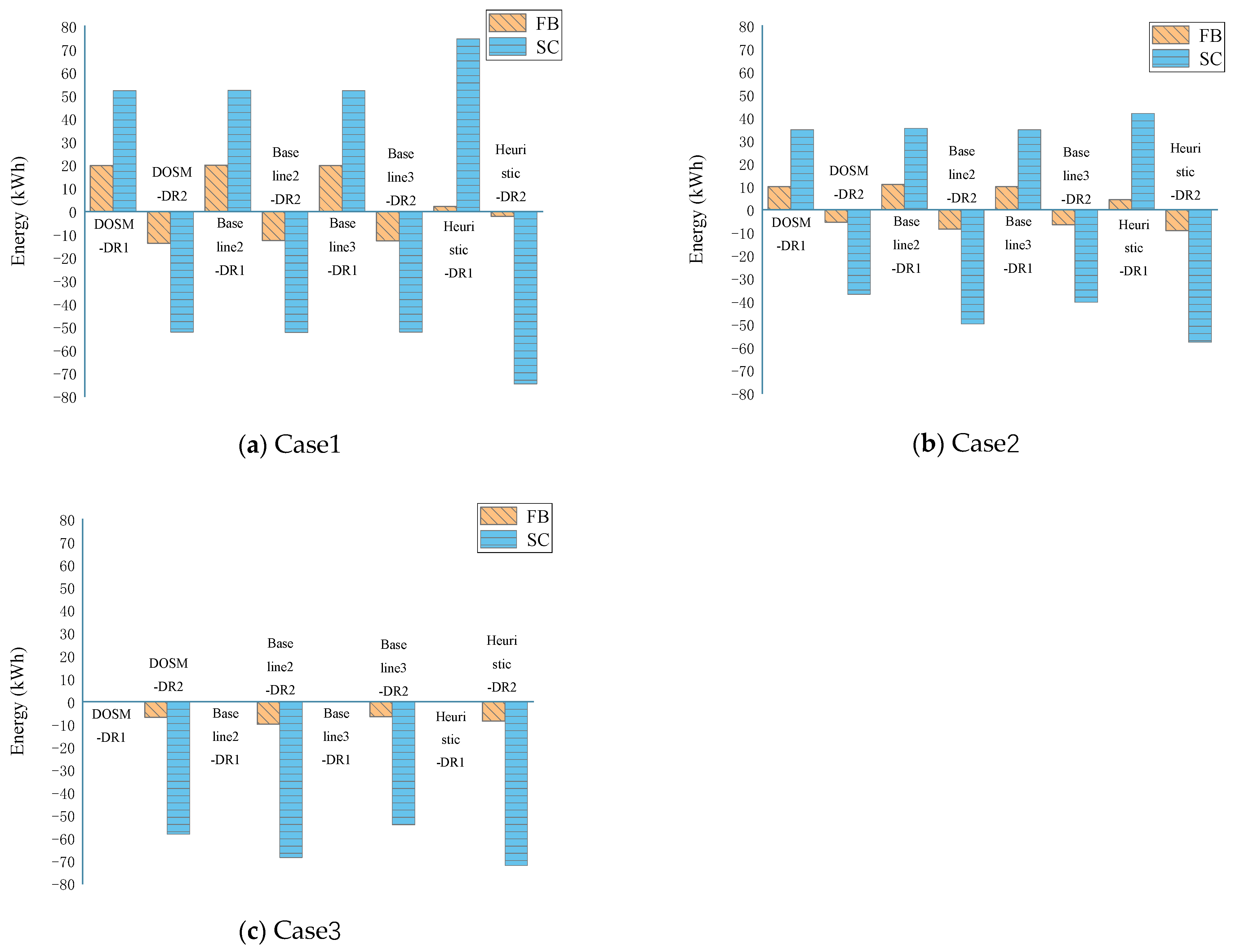

5. Evaluation and Analysis

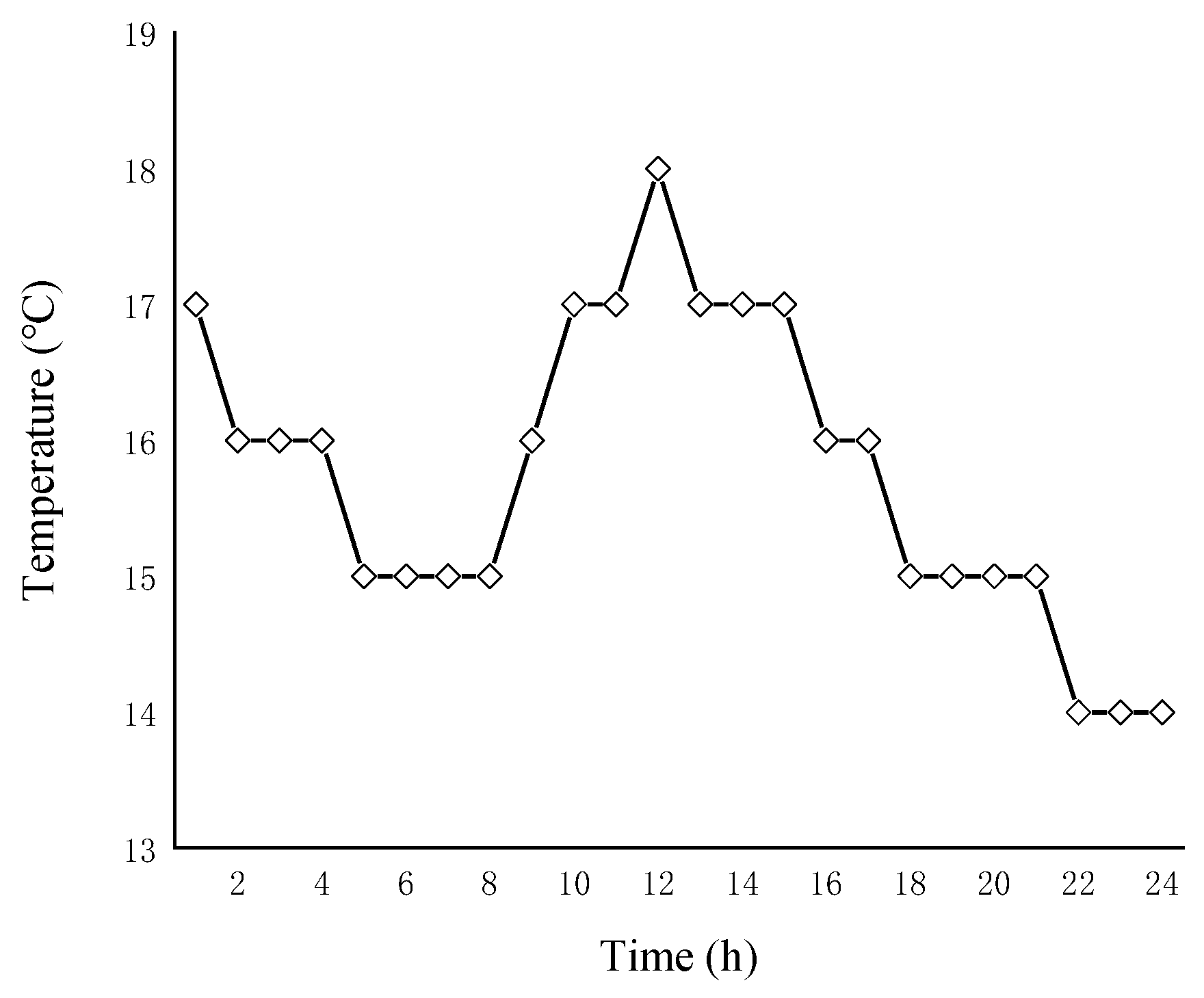

5.1. Environment and Parameter Setting

5.2. Experimental Setup

5.3. Analysis of Experimental Results

6. Summary and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Notation | Description | Unit |
|---|---|---|
| Constants | | |
| | The difference between peak power and idle power of the server | |
| | The idle power of the server | |
| | The discharging efficiency of the ESD | |
| | The charging efficiency of the ESD | |
| | Self-discharge rate of the ESD | |
| | The ramp rate | |
| | The ratio of discharge rate to recharge rate | |
| | The maximum power | |
| | The penalty coefficient | |
| | The highest operating frequency of the server | |
| | The mass flow rate | |
| | The specific heat capacity of air | |
| | The air density | |
| | The pressure drop | |
| | The fan efficiency | |
| | The minimum value of safe temperature | |
| | The maximum value of safe temperature | |
| | The number of servers | |
| | The maximum processing capacity of the server | (tasks)/s |
| | The length of a time interval | |
| Variables | | |
| | The power of the datacenter | |
| | The power of the server | |
| | The energy consumption of the server | |
| | CPU utilization | |
| | The power consumption of the cooling system | |
| | The energy consumption of the cooling system | |
| | The device capacity | |
| | Needed energy stored by ESD | |
| | The energy stored at time | |
| | Depth of discharge | |
| | The energy loss | |
| | The task arrival time of delay-sensitive task | |
| | The arrival time of delay-tolerant task | |
| | The tolerable delay time interval | |
| | The busy time of the server | |
| | The initial average number of tasks in the i-th time slot | |
| | The inlet temperature of the server | |
| | The heat distribution matrix | |
| | The mass flow | |
| | The outdoor temperature | |
| | The power of the fan | |
| | The electricity price | $/kWh |
| | The air conditioning supply temperature | |
| | The safe temperature of the server entrance | |
| | The actual charging power at time t | |
| | The discharging power at time t | |
| | The effective discharging power | |
| | The rechargeable power | |
| | The number of tasks moving in in the i-th time slot | |
| | The number of tasks moving out in the i-th time slot | |
| | The operating frequency of the host | |
| | The adjustable power consumption of the air conditioning cooling | |
| | The adjustable power consumption of the direct airside free cooling | |
| | The adjustable power consumption of the super capacitor | |
| | The adjustable power consumption of the flow battery | |
| | The adjustable power consumption of the task scheduling | |
| | The adjustable power consumption of the DVFS method | |
| | The deviation between the adjusted power and the target power | |
| | The operating cost of ESD | $ |
| | The operating cost of the cooling system | $ |
| | The penalty of task delay scheduling | $ |
| | The penalty of inaccurate adjustment | $ |

| Description | Abbreviation |
|---|---|
| Energy Storage Devices | ESD |
| Demand Response | DR |
| Dynamic Voltage and Frequency Scaling | DVFS |
| Quality of Service | QoS |
| Service Level Agreement | SLA |
| Virtual Machine | VM |
| Coefficient of Performance | CoP |
| Flow Battery | FB |
| Super Capacitor | SC |
| Uninterruptible Power Supply | UPS |
| Depth of Discharge | DoD |
| Dynamic Optimal Scheduling Method | DOSM |

| Cost ($) | DOSM | Baseline1 | Baseline2 | Baseline3 | Heuristic |
|---|---|---|---|---|---|
| Case1 | 1096.07 | 6455.68 | 1567.96 | 2438.27 | 1372.38 |
| Case2 | 458.66 | 3165.08 | 2360.59 | 1693.05 | 864.97 |
| Case3 | 652.46 | 5225.01 | 4700.42 | 1721.83 | 1405.42 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, M.; Wang, X. A Synthetic Approach for Datacenter Power Consumption Regulation towards Specific Targets in Smart Grid Environment. Energies 2021, 14, 2602. https://doi.org/10.3390/en14092602
