Big Data-Based Early Fault Warning of Batteries Combining Short-Text Mining and Grey Correlation

Abstract

1. Introduction

- (1) A novel data-driven scheme for early fault warning is proposed that fuses short-text mining with grey correlation analysis to find the key faults and simplify the prediction model. Short-text mining is applied to the manually filled vehicle maintenance data to categorize the key battery faults, while grey correlation analysis establishes the relationship between the vehicle state data and the main faults. The scheme thus makes it possible to choose the data highly correlated with faults and lowers the implementation difficulty of the later machine learning algorithm.

- (2) The scheme is an early fault warning method for multiple failure types rather than an approach for one specific failure. It can therefore analyze and pre-warn critical faults in the vehicle, such as poor consistency of cells, parameter errors, and communication failure. Moreover, it can be applied to EVs in general, not only to the electric buses studied in this research. Specifically, the critical faults are extracted from a large volume of sample data produced by EVs, analyzed by grey correlation, and classified using the support vector machine (SVM) algorithm.

- (3) The presented scheme reduces the computational complexity of the machine learning algorithm used for model construction without additional hardware cost, which makes it more practicable and efficient in actual implementation. In addition, comparing different model functions and parameters yields higher effectiveness and robustness.

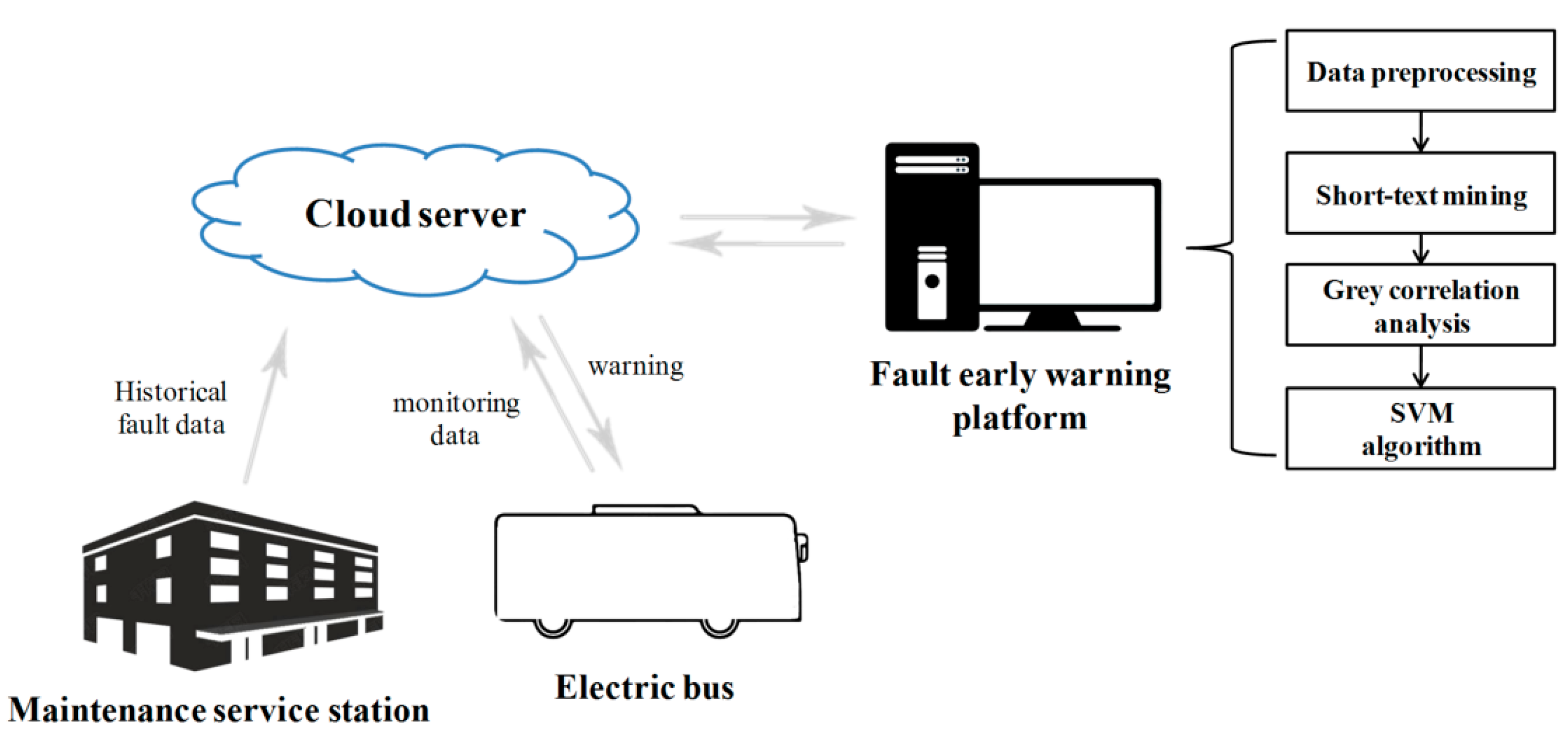

2. Description of the Early Fault Warning Scheme

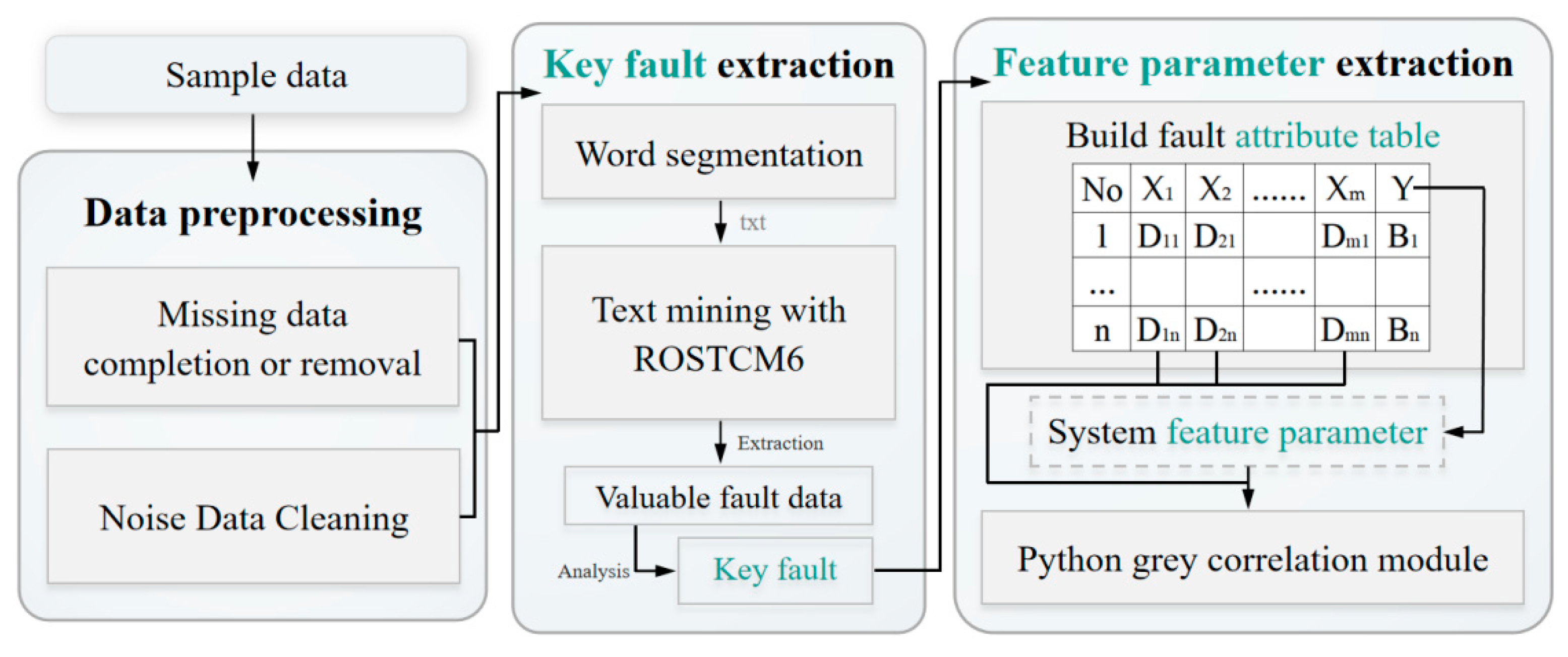

2.1. Data Preprocessing

2.2. Short-Text Mining

- (1) Feature Selection Based on Statistical Word Occurrence Frequency: The occurrence frequency of keywords in the text is the key topic feature for analysis. Words that occur more often are retained, and the rest can be deleted to improve the accuracy of frequency screening. The term frequency-inverse document frequency (TF-IDF) algorithm is a relatively mature method for text mining, which can be defined as [35]:

  $$w_{i,j} = \frac{n_{i,j}}{\sum_{k} n_{k,j}} \times \log \frac{N}{n_i}$$

  where $n_{i,j}$ is the occurrence frequency of the keyword $t_i$ in the sample text $d_j$; $\sum_{k} n_{k,j}$ is the total number of keywords in $d_j$; $N$ is the total number of short texts in the training model; and $n_i$ is the number of texts containing the keyword $t_i$ in the training model.

- (2) Information Gain: Based on information entropy, this method measures how much a feature contributes to classification and how much information it supplies. Concretely, it gauges the expected reduction in entropy [36]. The information gain of a keyword is the difference between the entropy of the categories before and after the keyword is known:

  $$IG(t) = -\sum_{i=1}^{m} P(C_i)\log P(C_i) + P(t)\sum_{i=1}^{m} P(C_i \mid t)\log P(C_i \mid t) + P(\bar{t})\sum_{i=1}^{m} P(C_i \mid \bar{t})\log P(C_i \mid \bar{t})$$

  where $P(C_i)$ is the occurrence probability of keyword category $C_i$ in the sample text; $m$ is the total number of keyword categories in the sample text; $P(t)$ is the occurrence probability of keyword $t$ in the sample text; $P(C_i \mid t)$ is the occurrence probability of keyword category $C_i$ given that keyword $t$ occurs; $P(\bar{t})$ is the probability that keyword $t$ does not occur in the sample text; and $P(C_i \mid \bar{t})$ is the occurrence probability of keyword category $C_i$ without keyword $t$.
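The two feature-selection measures above can be illustrated with a minimal Python sketch on a toy corpus. The maintenance records, the fault categories, the whitespace-style tokenization, the choice of logarithm base, and the function names `tf_idf` and `information_gain` are all assumptions made for this example; it is not the authors' implementation.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {keyword: weight} dict per tokenized short text."""
    n_docs = len(docs)
    # Number of texts that contain each keyword (document frequency).
    doc_freq = Counter(word for doc in docs for word in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)  # total number of keywords in this text
        weights.append({
            word: (count / total) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return weights

def entropy(labels):
    """Shannon entropy (base 2) of a list of category labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(docs, labels, keyword):
    """Expected entropy reduction from knowing whether `keyword` occurs."""
    with_kw = [lab for doc, lab in zip(docs, labels) if keyword in doc]
    without_kw = [lab for doc, lab in zip(docs, labels) if keyword not in doc]
    n = len(labels)
    conditional = ((len(with_kw) / n) * entropy(with_kw)
                   + (len(without_kw) / n) * entropy(without_kw))
    return entropy(labels) - conditional

# Hypothetical maintenance records (already tokenized) and fault categories.
records = [
    ["cell", "voltage", "high"],
    ["cell", "consistency", "poor"],
    ["charger", "communication", "failure"],
    ["bms", "communication", "failure"],
]
categories = ["battery", "battery", "communication", "communication"]

weights = tf_idf(records)
ig_cell = information_gain(records, categories, "cell")
ig_high = information_gain(records, categories, "high")
print(weights[0])
print(ig_cell, ig_high)
```

In this toy corpus, "cell" separates the two categories perfectly and therefore has a higher information gain than "high", which appears in only one record.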

2.3. Grey Correlation Analysis

- (1) Determining the Sequence of Analysis: Two series should be determined in this step: the reference series, which reflects the feature of system behavior, and the comparison series, which can affect system behavior. The reference series can be formulated as:

  $$X_0 = \{x_0(1), x_0(2), \ldots, x_0(n)\}$$

  where $x_0(k)$ is the kth variable in the initial sequence $X_0$. The comparison series can be established as:

  $$X_i = \{x_i(1), x_i(2), \ldots, x_i(n)\}$$

  where $x_i(k)$ is the kth variable in $X_i$.

- (2) Nondimensionalization of Variables: Given a data sequence $X_i = \{x_i(k)\}$, the mean-value method can be employed. First, the average $\bar{x}_i$ of each sequence is calculated. Then, each value in the original sequence is divided by that average, $x_i'(k) = x_i(k) / \bar{x}_i$, to generate a new, dimensionless data sequence. The new sequence reflects the dynamic changes in the data relative to the sequence average.

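- (3) Calculating the Difference Series, Extreme Values, and Grey Correlation Coefficient: Based on the dimensionless transformation, the following quantities can be obtained:

  $$\Delta_i(k) = \left| x_0'(k) - x_i'(k) \right|$$

  $$\Delta_{\max} = \max_i \max_k \Delta_i(k), \qquad \Delta_{\min} = \min_i \min_k \Delta_i(k)$$

  $$\xi_i(k) = \frac{\Delta_{\min} + \rho\,\Delta_{\max}}{\Delta_i(k) + \rho\,\Delta_{\max}}$$

  where $x_0'(k)$ and $x_i'(k)$ are the dimensionless reference and comparison series; $\Delta_i(k)$ is the difference sequence; $\Delta_{\max}$ is the maximum value of $\Delta_i(k)$; $\Delta_{\min}$ is the minimum of $\Delta_i(k)$; $\xi_i(k)$ is the grey correlation coefficient; and $\rho$ is the resolution coefficient.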

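- (4) Calculating the Correlation Value and Sorting: The correlation degree can be defined as the average of the grey correlation coefficients between the comparison series and the reference series:

  $$r_i = \frac{1}{n}\sum_{k=1}^{n} \xi_i(k)$$

  where $\xi_i(k)$ is the grey correlation coefficient of the ith comparison series at point $k$ and $n$ is the sequence length. The comparison series are then sorted by $r_i$; a larger value indicates a stronger correlation with the reference series.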
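The four steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: the sample series, the function names `mean_normalize` and `grey_correlation`, and the resolution coefficient of 0.5 (a commonly used default, not a value taken from the text) are all hypothetical.

```python
def mean_normalize(series):
    """Divide each value by the series average (mean-value method)."""
    avg = sum(series) / len(series)
    return [value / avg for value in series]

def grey_correlation(reference, comparisons, rho=0.5):
    """Return the grey correlation degree of each comparison series."""
    ref = mean_normalize(reference)
    comps = [mean_normalize(series) for series in comparisons]
    # Difference series between the reference and every comparison series.
    diffs = [[abs(r - c) for r, c in zip(ref, comp)] for comp in comps]
    flat = [d for row in diffs for d in row]
    d_min, d_max = min(flat), max(flat)
    if d_max == 0:
        # All series coincide with the reference after normalization.
        return [1.0 for _ in comps]
    degrees = []
    for row in diffs:
        # Grey correlation coefficient at each point, then its average.
        coeffs = [(d_min + rho * d_max) / (d + rho * d_max) for d in row]
        degrees.append(sum(coeffs) / len(coeffs))
    return degrees

# Hypothetical data: one series tracks the reference, the other does not.
reference = [1.0, 2.0, 3.0, 4.0]
comparisons = [
    [2.0, 4.1, 5.9, 8.0],   # roughly proportional to the reference
    [4.0, 1.0, 3.5, 2.0],   # unrelated pattern
]
degrees = grey_correlation(reference, comparisons)
ranking = sorted(range(len(degrees)), key=lambda i: degrees[i], reverse=True)
print(degrees, ranking)
```

The series that follows the reference more closely receives the larger correlation degree and is ranked first.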

2.4. SVM Algorithm

2.5. Model Selection and Parameter Tuning for Machine Learning

3. Key Fault and Feature Parameter Extraction of Electric Buses

3.1. Data Preprocessing

- (1) Missing Data Completion or Removal: Because the time axes of the intercepted data are inconsistent, individual values may be missing from the real-time state data of the vehicle. The data acquisition interval is set to 10 s. Data that change rapidly cannot easily be completed. For data whose state does not change quickly, such as battery voltage and the state of charge (SOC), a missing value can be replaced by mean interpolation or by the last adjacent value.

- (2) Noise Data Cleaning: Noise data are erroneous data caused by system logic or information interference during acquisition. Because noise data severely affect the results of later data processing, they must first be located, together with any adjacent problem data, and then removed. The data are screened one by one according to the features of electric buses and their correlation with the faults, and special tools are used for prevention and elimination.
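The two preprocessing steps above can be sketched as follows for a slowly changing signal such as SOC. The sample values, the plausible range of 0 to 100, and the function names `drop_noise` and `fill_missing` are assumptions for illustration; a simple range check stands in for the noise screening described in the text.

```python
def drop_noise(values, low, high):
    """Mark samples outside the plausible range [low, high] as missing."""
    return [v if v is not None and low <= v <= high else None for v in values]

def fill_missing(values):
    """Replace None with the mean of the nearest valid neighbours.

    If a valid neighbour exists on one side only, that adjacent value is
    reused (for example, the last adjacent value at the end of the record).
    """
    filled = list(values)
    for i, value in enumerate(values):
        if value is not None:
            continue
        prev_val = next((v for v in reversed(values[:i]) if v is not None), None)
        next_val = next((v for v in values[i + 1:] if v is not None), None)
        if prev_val is not None and next_val is not None:
            filled[i] = (prev_val + next_val) / 2
        else:
            filled[i] = prev_val if prev_val is not None else next_val
    return filled

# Hypothetical SOC samples (%) at 10 s intervals; None marks a lost sample
# and 250.0 is an implausible reading treated as noise.
soc = [80.0, None, 79.0, 250.0, 78.0, None]
cleaned = fill_missing(drop_noise(soc, low=0.0, high=100.0))
print(cleaned)
```

Rapidly changing signals would not be filled this way; as the text notes, such gaps are hard to complete and the affected samples may instead be removed.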

3.2. Key Fault Extraction of Electric Buses Based on Short-Text Mining

3.3. Feature Parameter Extraction Based on Grey Correlation Analysis

- (1) Data normalization

- (2) Data standardization

- (3) Comparison with standard elements

- (4) Taking out the maximum and minimum values in the matrix

- (5) Calculation result

- (6) Calculating the mean value to obtain the grey correlation value
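Once the grey correlation values are available, the vehicle states can be ranked and the most correlated ones kept as model inputs. The short sketch below uses the grey correlation degrees listed in the vehicle-status table of this article; the cut-off of 0.5 is a hypothetical threshold chosen only for illustration and is not stated in the text.

```python
# Grey correlation degrees as listed in the vehicle-status table.
grey_degree = {
    "total voltage": 0.712098,
    "total current": 0.904193,
    "SOC": 0.530654,
    "vehicle speed": 0.466952,
    "the travel of accelerator pedal": 0.494350,
    "the state of brake pedal": 0.483021,
}
THRESHOLD = 0.5  # hypothetical cut-off, not taken from the article

# Sort from the most to the least correlated vehicle state.
ranked = sorted(grey_degree.items(), key=lambda item: item[1], reverse=True)
selected = [name for name, degree in ranked if degree > THRESHOLD]

for name, degree in ranked:
    print(f"{name:<35} {degree:.6f}")
print("selected:", selected)
```

With this assumed threshold, total current, total voltage, and SOC would be retained as feature parameters.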

4. Results and Discussion of Early Fault Warning Based on SVM

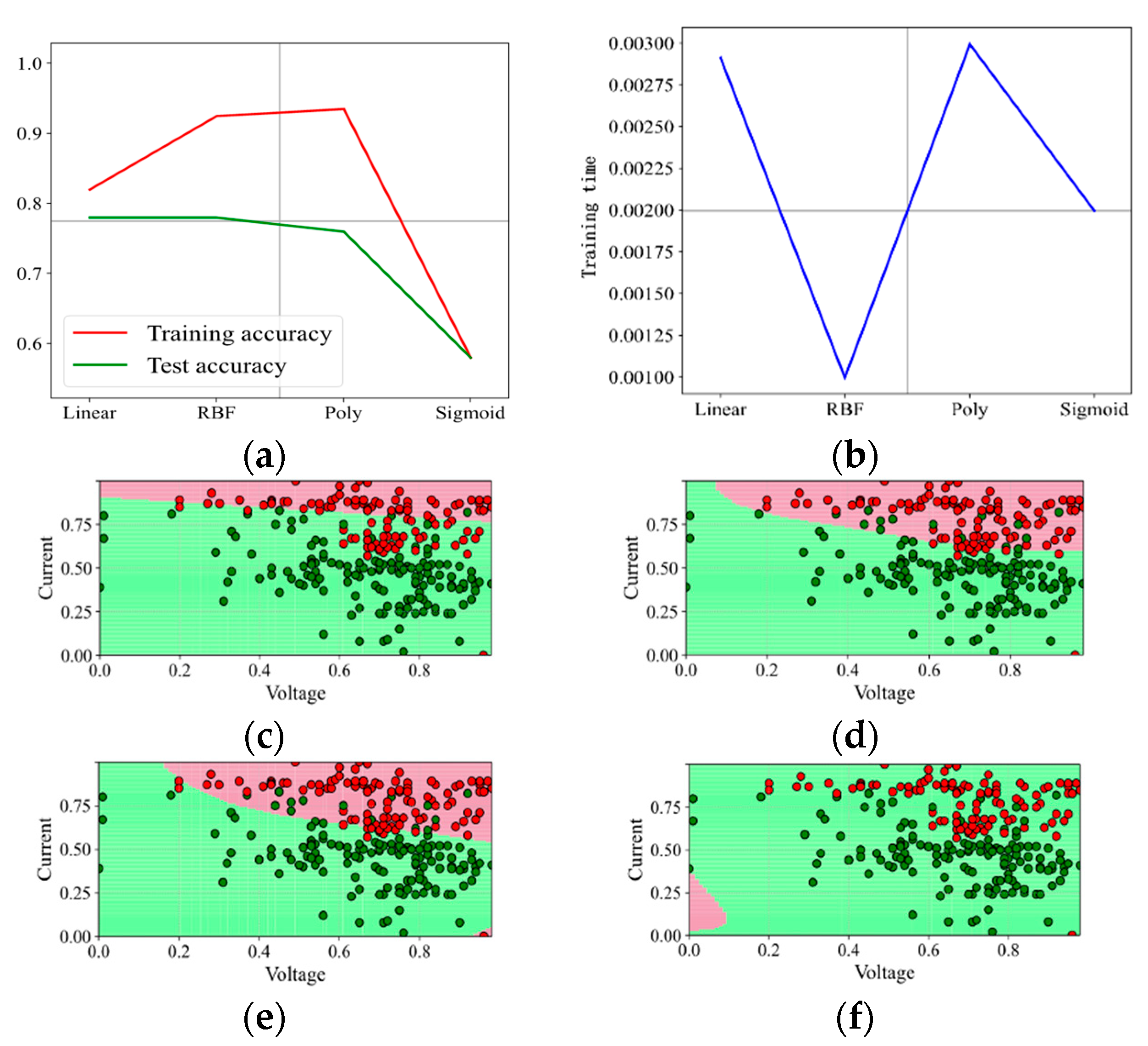

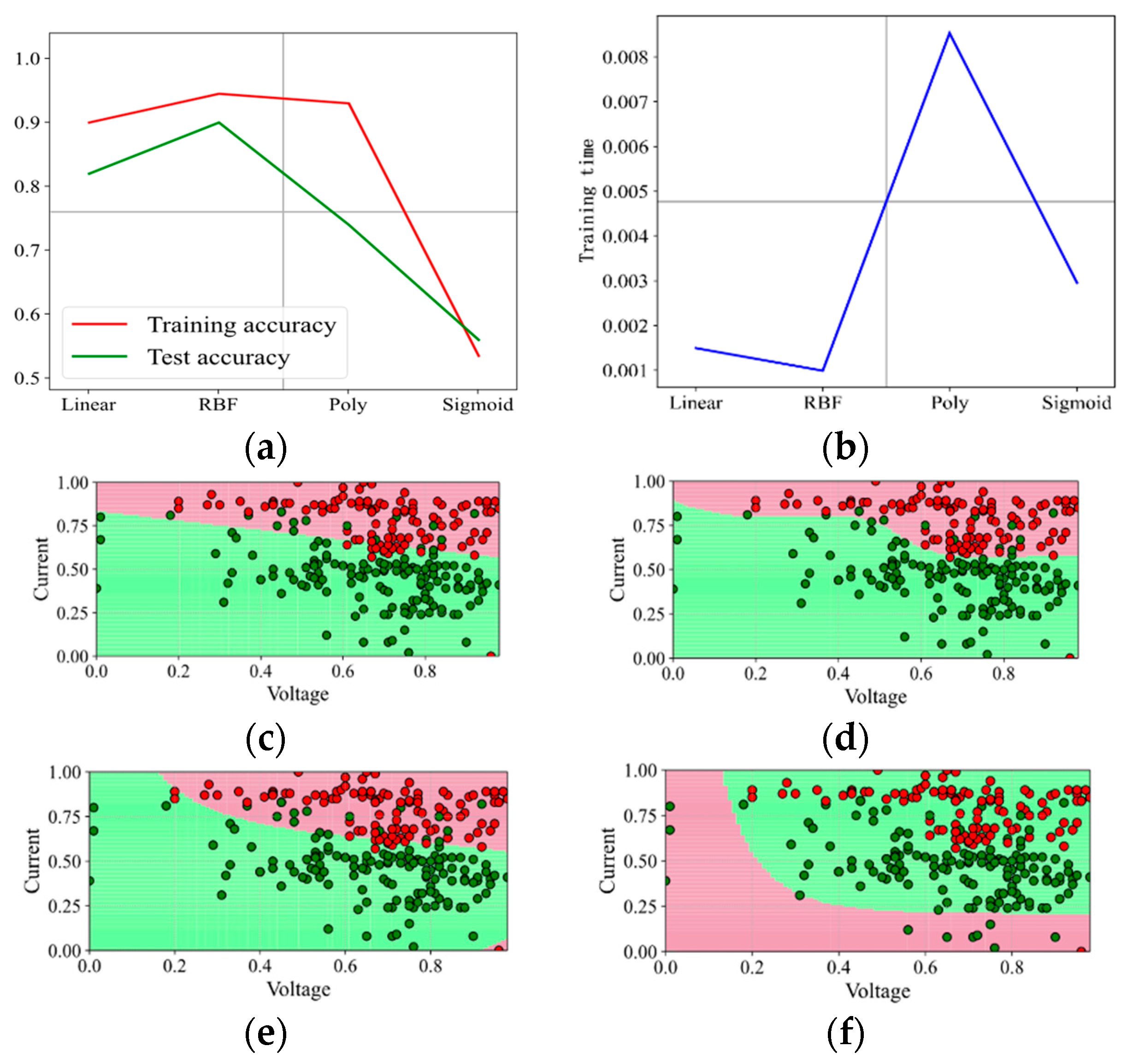

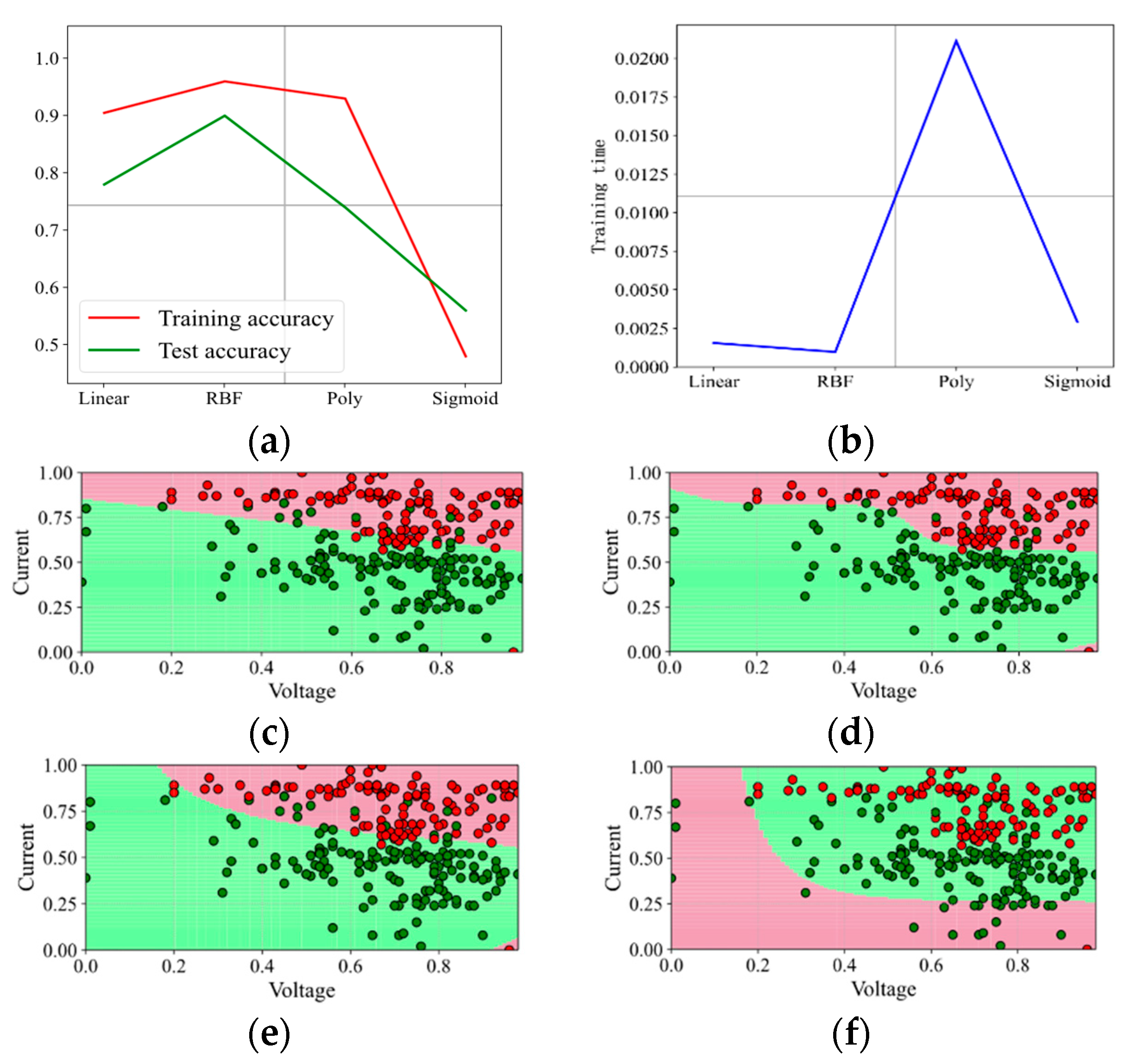

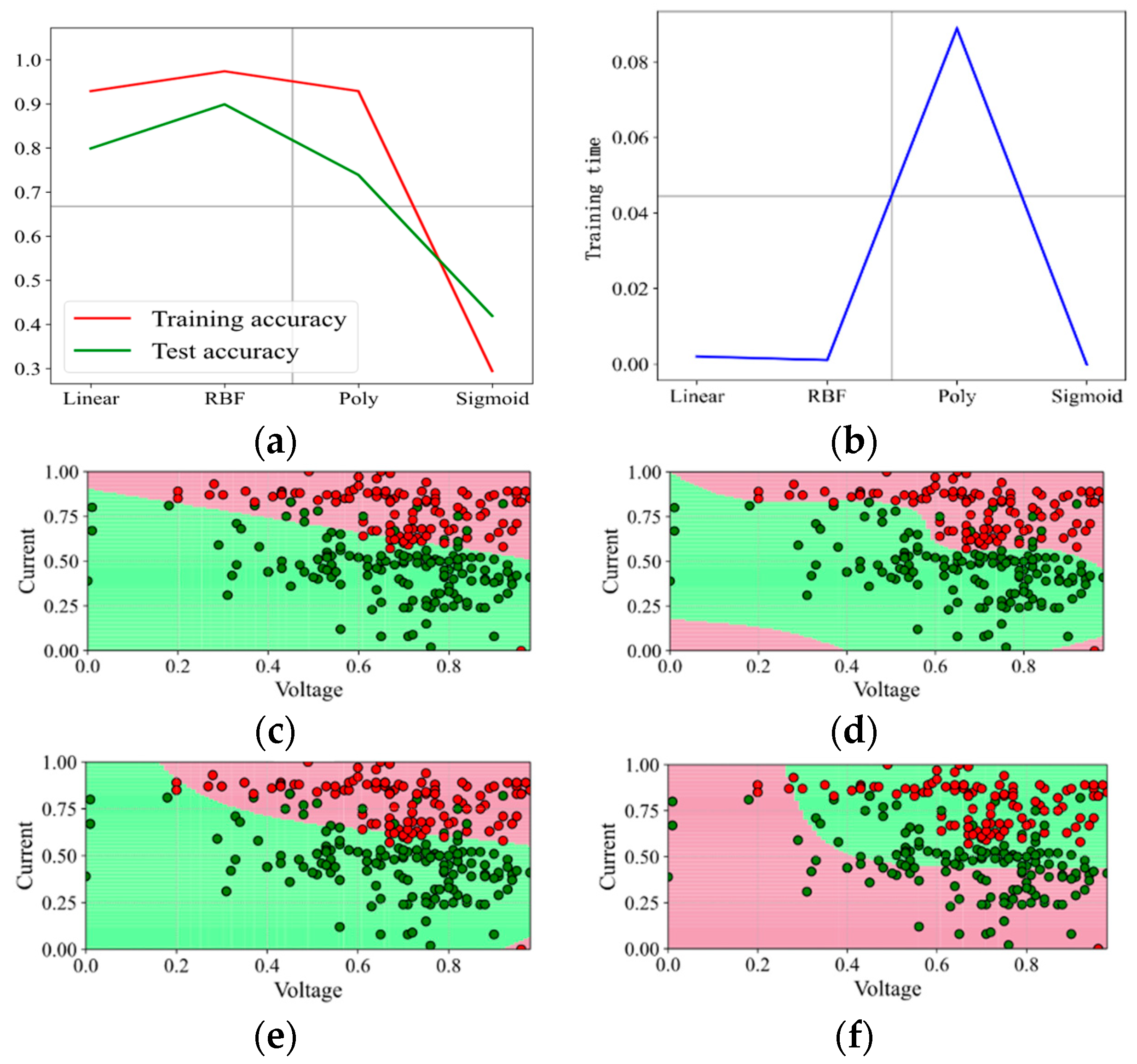

4.1. Kernel Function Selection

- (1) Read the data and divide it into the training set and test set.

- (2) Set the penalty factor C; evaluate the training accuracy and the computing efficiency of the model.

- (3) Visualize the model results and use them to guide the choice of the next value of C.
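The procedure above can be sketched with scikit-learn as follows. This is only an illustration under stated assumptions: the data are synthetic (generated with `make_classification` as a stand-in for the vehicle data), the candidate kernels are the four built into `sklearn.svm.SVC`, and the values of C and the helper name `evaluate_kernels` are hypothetical. Results are printed rather than plotted.

```python
import time

from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic stand-in for the vehicle data: 250 samples, 3 features.
X, y = make_classification(n_samples=250, n_features=3, n_informative=3,
                           n_redundant=0, random_state=0)
# Step (1): divide the data into a training set and a test set.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

def evaluate_kernels(C, kernels=("linear", "poly", "rbf", "sigmoid")):
    """Step (2): train one SVM per kernel and record accuracy and fit time."""
    results = {}
    for kernel in kernels:
        model = SVC(kernel=kernel, C=C)
        start = time.perf_counter()
        model.fit(X_train, y_train)
        fit_seconds = time.perf_counter() - start
        results[kernel] = {
            "train_accuracy": model.score(X_train, y_train),
            "test_accuracy": model.score(X_test, y_test),
            "fit_seconds": fit_seconds,
        }
    return results

# Step (3): inspect the results for each C before choosing the next value.
for C in (0.1, 1.0, 10.0):
    for kernel, metrics in evaluate_kernels(C).items():
        print(f"C={C:<5} {kernel:<8} "
              f"train={metrics['train_accuracy']:.3f} "
              f"test={metrics['test_accuracy']:.3f} "
              f"time={metrics['fit_seconds'] * 1e3:.1f} ms")
```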

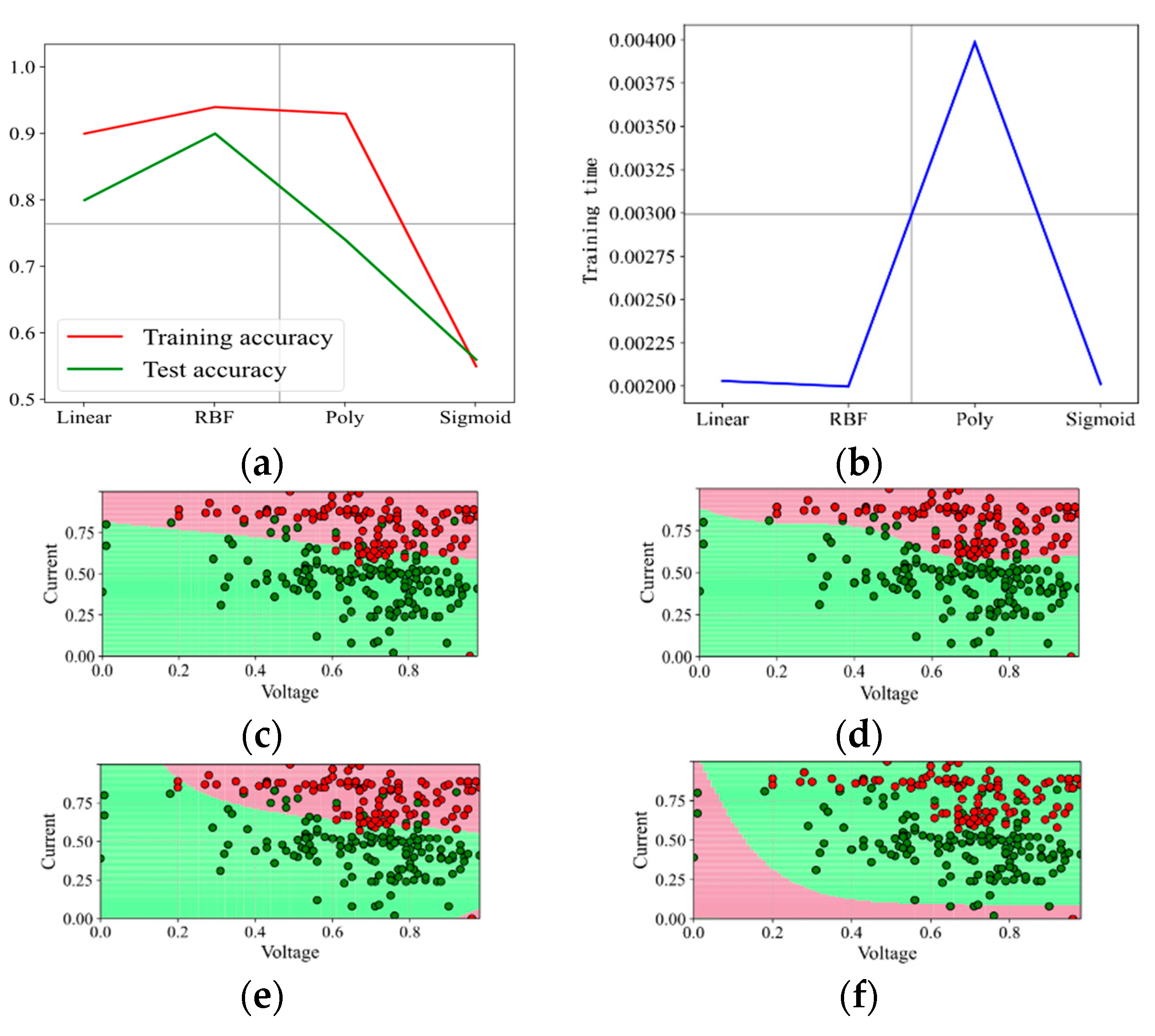

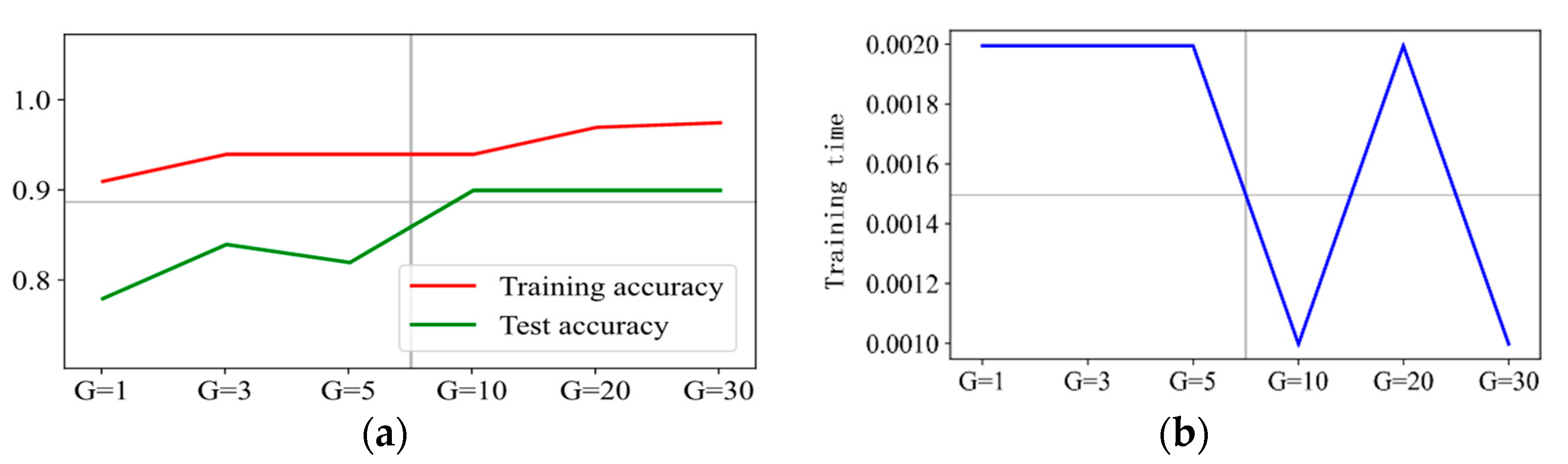

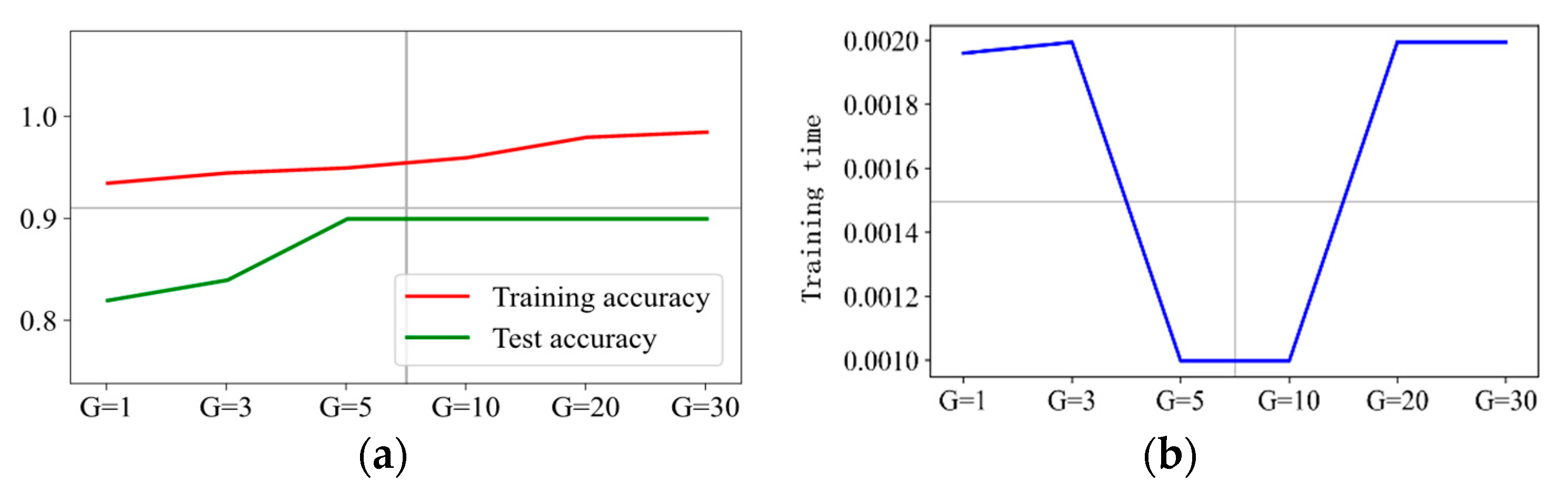

4.2. Tuning Hyperparameters of the Kernel Function RBF

- (1) Reading the data and dividing it into the training set and test set.

- (2) Setting the penalty factor C and passing it to six models, each with a different kernel hyperparameter.

- (3) Model training.

- (4) Evaluating the accuracy and computational efficiency of the model.

- (5) Visualizing the model results and setting the next value of C.

- (6) Repeating the above operations until the model performance is stable and finding a suitable combination of the penalty factor C and the kernel hyperparameter.
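A minimal sketch of this tuning loop is given below, assuming that the kernel hyperparameter is the RBF width parameter, which scikit-learn exposes as `gamma`. The synthetic data, the six `gamma` values, the C values, and the helper name `scan` are hypothetical choices for illustration, not settings from the article.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic stand-in for the vehicle data: 250 samples, 3 features.
X, y = make_classification(n_samples=250, n_features=3, n_informative=3,
                           n_redundant=0, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1)

GAMMAS = (0.001, 0.01, 0.1, 1.0, 10.0, 100.0)  # six hypothetical values
C_VALUES = (0.1, 1.0, 10.0, 100.0)             # hypothetical penalty factors

def scan(c_values, gammas):
    """Train one RBF SVM per (C, gamma) pair and return its test accuracy."""
    scores = {}
    for C in c_values:
        for gamma in gammas:
            model = SVC(kernel="rbf", C=C, gamma=gamma)
            model.fit(X_train, y_train)
            scores[(C, gamma)] = model.score(X_test, y_test)
    return scores

scores = scan(C_VALUES, GAMMAS)
best_C, best_gamma = max(scores, key=scores.get)
print(f"best C={best_C}, gamma={best_gamma}, "
      f"accuracy={scores[(best_C, best_gamma)]:.3f}")
```

Selecting hyperparameters directly on the test set, as this sketch does for brevity, gives an optimistic estimate; a separate validation split or cross-validation (for example with `sklearn.model_selection.GridSearchCV`) is generally preferable.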

4.3. Experimental Verification

- (1) Extract the state parameters of some faulty electric buses one hour before the fault to form a real vehicle fault data set.

- (2) Input the fault data set into the model for calculation, record the results of early fault warning, and enter the numbers of correct and wrong predictions into the confusion matrix.

- (3) Extract the state parameters of some non-faulty electric buses to form a real vehicle fault-free data set.

- (4) Input the fault-free data set into the model for calculation, record the results of early fault warning, and enter the numbers of correct and wrong predictions into the confusion matrix.

- (5) From the data in the confusion matrix, calculate the harmonic mean and determine the prediction accuracy.
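The final step can be sketched as follows, assuming that the harmonic average refers to the F1 score, that is, the harmonic mean of precision and recall. The counts passed to the function are hypothetical and are not the results reported in this article; the function name `confusion_metrics` is likewise an assumption.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Compute precision, recall, F1, and accuracy from confusion counts.

    tp: faults predicted as faults      fp: non-faults predicted as faults
    fn: faults predicted as non-faults  tn: non-faults predicted as non-faults
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }

# Hypothetical counts for 50 faulty and 100 fault-free samples.
metrics = confusion_metrics(tp=40, fp=10, fn=10, tn=90)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```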

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhao, Y.; Wang, Z.; Shen, Z.; Sun, F. Data-driven framework for large-scale prediction of charging energy in electric vehicles. Appl. Energy 2021, 282, 116175.

2. Sun, Z.; Han, Y.; Wang, Z.; Chen, Y.; Liu, P.; Qin, Z.; Song, C. Detection of voltage fault in the battery system of electric vehicles using statistical analysis. Appl. Energy 2022, 307, 118172.

3. Si, J.; Ma, J.; Niu, J.; Wang, E. An intelligent fault diagnosis expert system based on fuzzy neural network. J. Vib. Shock 2017, 36, 164–171.

4. Xu, Z. Leakage prediction of swing cylinder in concrete pump truck based on the improved direct grey model. J. Wuhan Univ. Sci. Technol. (Nat. Sci. Ed.) 2015, 6, 459–462.

5. Li, J. Research on Safety Evaluation Method and Application of Vehicle Running State. Master’s Thesis, Beijing Jiaotong University, Beijing, China, 2007.

6. Hao, X. Wang Yunsong, deputy director of the Quality Development Bureau of the State Market Supervision Administration: The recall of cars in 2021 mainly focused on electronic and electrical appliances, engines and other assemblies. Prod. Reliab. Rep. 2022, 1, 65–66.

7. Jiang, X.; Fu, X.; Yang, Z. Application of RBF neural network method in remote monitoring system for vehicle state. Automob. Technol. 2011, 3, 23–26.

8. Wu, J.; Song, L.; Chen, J. Common faults and treatment of bus CAN bus. People’s Public Transp. 2012, 2, 64.

9. Zeng, S.; Pecht, M.; Wu, J. Status and perspectives of prognostics and health management technologies. Acta Aeronaut. Astronaut. Sin. 2005, 26, 626–632.

10. Zhai, H.; Xue, L.; Pei, D. Investigation and statistical analysis of the distribution characteristics of bus fault. Automob. Technol. 2017, 2, 208–210.

11. Chen, F. Research classification algorithm based on support vector machine. Comput. Netw. 2009, 35, 64–67.

12. Xuan, R. Vehicle Health Management and Monitoring System Based on Big Data. Master’s Thesis, Southeast University, Nanjing, China, 2017.

13. Yılmaz, A.; Bayrak, G. A new signal processing-based islanding detection method using pyramidal algorithm with undecimated wavelet transform for distributed generators of hydrogen energy. Int. J. Hydrogen Energy 2022, 47, 19821–19836.

14. Patil, A.; Mishra, B.; Harsha, S. A mechanics and signal processing based approach for estimating the size of spall in rolling element bearing. Eur. J. Mech. A/Solids 2021, 85, 104125.

15. Hanna, S.; Dick, C.; Cabric, D. Signal Processing-Based Deep Learning for Blind Symbol Decoding and Modulation Classification. IEEE J. Sel. Areas Commun. 2021, 40, 82–96.

16. Ma, M.; Wang, Y.; Duan, Q.; Wu, T.; Sun, J.; Wang, Q. Fault detection of the connection of lithium-ion power batteries in series for electric vehicles based on statistical analysis. Energy 2018, 164, 745–756.

17. Rubini, R.; Meneghetti, U. Application of the envelope and wavelet transform analyses for the diagnosis of incipient faults in ball bearings. Mech. Syst. Signal Processing 2001, 15, 287–302.

18. Cheng, J.; Yu, D.; Yang, Y. Application of an impulse response wavelet to fault diagnosis of rolling bearings. Mech. Syst. Signal Processing 2007, 21, 920–929.

19. Fadaei, A.; Khasteh, S. Enhanced K-means re-clustering over dynamic networks. Expert Syst. Appl. 2019, 132, 126–140.

20. Fränti, P.; Sieranoja, S. How much can k-means be improved by using better initialization and repeats? Pattern Recognit. 2019, 93, 95–112.

21. Yang, R.; Xiong, R.; Ma, S.; Lin, X. Characterization of external short circuit faults in electric vehicle Li-ion battery packs and prediction using artificial neural networks. Appl. Energy 2020, 260, 114253.

22. Lee, S.; Han, S.; Han, K.; Kim, Y.; Agarwal, S.; Hariharan, K.; Oh, B.; Yoon, J. Diagnosing various failures of lithium-ion batteries using artificial neural network enhanced by likelihood mapping. J. Energy Storage 2021, 40, 102768.

23. Wang, P.; Zhang, J.; Wan, J.; Wu, S. A fault diagnosis method for small pressurized water reactors based on long short-term memory networks. Energy 2022, 239, 122298.

24. Kapucu, C.; Cubukcu, M. A supervised ensemble learning method for fault diagnosis in photovoltaic strings. Energy 2021, 227, 120463.

25. Zhang, Z. Research on Fault Early Warning Based on Big Data Mining. Master’s Thesis, Beijing University of Posts and Telecommunications, Beijing, China, 2018.

26. Nan, J.; Deng, B.; Cao, W.; Tan, Z. Prediction for the Remaining Useful Life of Lithium–Ion Battery Based on RVM-GM with Dynamic Size of Moving Window. World Electr. Veh. J. 2022, 13, 25.

27. Ji, C.; Sun, W. A review on data-driven process monitoring methods: Characterization and mining of industrial data. Processes 2022, 10, 335.

28. Dina, N.; Yunardi, R.; Firdaus, A. Utilizing Text Mining and Feature-Sentiment-Pairs to Support Data-Driven Design Automation Massive Open Online Course. Int. J. Emerg. Technol. Learn. (iJET) 2021, 16, 134–151.

29. Xu, H.; Liu, Y.; Shu, C.; Bai, M.; Motalifu, M.; He, Z.; Wu, S.; Zhou, P.; Li, B. Cause analysis of hot work accidents based on text mining and deep learning. J. Loss Prev. Process Ind. 2022, 76, 104747.

30. Schmid, M.; Kneidinger, H.; Endisch, C. Data-driven fault diagnosis in battery systems through cross-cell monitoring. IEEE Sens. J. 2020, 21, 1829–1837.

31. Zhang, K.; Hu, X.; Liu, Y.; Lin, X.; Liu, W. Multi-fault Detection and Isolation for Lithium-Ion Battery Systems. IEEE Trans. Power Electron. 2021, 37, 971–989.

32. Zhao, Y.; Liu, P.; Wang, Z.; Zhang, L.; Hong, J. Fault and defect diagnosis of battery for electric vehicles based on big data analysis methods. Appl. Energy 2017, 207, 354–362.

33. Kang, M.; Tian, J. Machine Learning: Data Pre-processing. In Prognostics and Health Management of Electronics: Fundamentals, Machine Learning, and the Internet of Things; Springer: Berlin/Heidelberg, Germany, 2018; pp. 111–130.

34. Li, H. Functions and application of vehicle status analysis software based on vehicle on-board data. Urban Mass Transit 2015, 18, 150–152.

35. Qaiser, S.; Ali, R. Text mining: Use of TF-IDF to examine the relevance of words to documents. Int. J. Comput. Appl. 2018, 181, 25–29.

36. Roobaert, D.; Karakoulas, G.; Chawla, N. Information Gain, Correlation and Support Vector Machines; Springer: Berlin/Heidelberg, Germany, 2006; pp. 463–470.

37. Zhou, X. Research and Application of Grey Correlation Degree. Master’s Thesis, Jilin University, Changchun, China, 2007.

38. Zhou, R.; Li, Z.; Chen, S. Parallel optimization sampling clustering K-means algorithm for big data processing. J. Comput. Appl. 2016, 36, 311–315.

39. Scholkopf, B. Making large scale SVM learning practical. In Advances in Kernel Methods: Support Vector Learning; The MIT Press: London, UK, 1999; pp. 41–56.

40. Otchere, D.; Ganat, T.; Gholami, R.; Ridha, S. Application of supervised machine learning paradigms in the prediction of petroleum reservoir properties: Comparative analysis of ANN and SVM models. J. Pet. Sci. Eng. 2021, 200, 108182.

41. Shang, X. Research on the State Subdivision and Fault Prediction of Public Traffic Vehicles Based on Big Data. Master’s Thesis, Beijing Jiaotong University, Beijing, China, 2018.

42. Ossig, D.; Kurzenberger, K.; Speidel, S.; Henning, K.; Sawodny, O. Sensor fault detection using an extended Kalman filter and machine learning for a vehicle dynamics controller. In Proceedings of the IECON 2020 The 46th Annual Conference of the IEEE Industrial Electronics Society, Singapore, 18–21 October 2020; pp. 361–366.

43. Lewis, H.; Brown, M. A generalized confusion matrix for assessing area estimates from remotely sensed data. Int. J. Remote Sens. 2001, 22, 3223–3235.

| Fault Names | Word Frequency |
|---|---|
| poor consistency of battery cells | 12,506 |
| open circuit (high voltage) | 6979 |
| communication failure between BMS and charger | 5781 |
| SOC value is too high | 5194 |
| motor controller undervoltage 24 V | 4690 |
| over temperature fault of charging base | 4522 |
| overcurrent (high pressure) | 3925 |
| heating or water-cooling relay failure | 3574 |
| battery cell voltage is too high | 2502 |

| No. | Parameter Properties | | | | | | Y |
|---|---|---|---|---|---|---|---|
| | X1 | X2 | X3 | X4 | X5 | X6 | |
| 1 | 0.68 | 0.53 | 0.69 | 0.16 | 0.00 | 1.00 | 1 |
| 2 | 0.73 | 0.54 | 0.76 | 0.00 | 0.00 | 0.70 | 0 |
| 3 | 0.98 | 0.39 | 0.95 | 0.00 | 0.00 | 0.00 | 0 |
| 4 | 0.66 | 0.55 | 0.69 | 0.00 | 0.00 | 1.00 | 1 |
| 5 | 0.75 | 0.54 | 0.81 | 0.49 | 0.00 | 0.00 | 0 |
| 6 | 0.56 | 0.12 | 0.44 | 0.00 | 0.00 | 0.00 | 0 |
| … | … | … | … | … | … | … | … |
| 249 | 0.49 | 1.00 | 0.63 | 0.62 | 0.99 | 0.00 | 1 |
| 250 | 0.70 | 0.49 | 0.74 | 0.21 | 0.00 | 0.60 | 0 |

| Vehicle Status | Grey Correlation Degree |
|---|---|
| total voltage | 0.712098 |
| total current | 0.904193 |
| SOC | 0.530654 |
| vehicle speed | 0.466952 |
| the travel of accelerator pedal | 0.494350 |
| the state of brake pedal | 0.483021 |

| Confusion Matrix | | Actual Class | | Total |
|---|---|---|---|---|
| | | Fault | No Fault | |
| Prediction class | Fault | 46 | 12 | 54 |
| | No Fault | 4 | 88 | 96 |
| Total | | 50 | 100 | 150 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nan, J.; Deng, B.; Cao, W.; Hu, J.; Chang, Y.; Cai, Y.; Zhong, Z. Big Data-Based Early Fault Warning of Batteries Combining Short-Text Mining and Grey Correlation. Energies 2022, 15, 5333. https://doi.org/10.3390/en15155333
