FedDP: A Privacy-Protecting Theft Detection Scheme in Smart Grids Using Federated Learning

Abstract

1. Introduction

Contributions

- First, a novel framework, federated data privacy (FedDP), is presented for energy theft detection in SGs.

- Second, to improve accuracy and avoid the bias of any single ML algorithm, an ensemble learning classifier, named the federated voting classifier (FVC), is proposed for the federated learning environment.

- FVC identifies energy theft in SGs with an accuracy of 91.67%, even on highly imbalanced data, which is higher than that of the other federated models compared in this work (e.g., 91.54% for FedTCN).

- Moreover, FVC requires significantly less execution time than other state-of-the-art algorithms when implemented on the same hardware.
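The base learners listed in Section 3.3 (RF, KNN, and BG) are combined by voting, but this excerpt does not state the exact voting rule. As a minimal sketch, assuming hard (majority) voting over binary labels (1 = theft, 0 = normal), the combination step on one TDS could look like the following; `hard_vote` and the sample predictions are hypothetical illustrations, not the authors' code.

```python
from collections import Counter
from typing import List, Sequence


def hard_vote(predictions: Sequence[Sequence[int]]) -> List[int]:
    """Combine per-model label predictions by majority (hard) voting.

    predictions[m][i] is the label that base model m assigns to sample i.
    On a tie, Counter.most_common returns the label encountered first,
    i.e. the one predicted by the earliest model in the list.
    """
    if not predictions:
        raise ValueError("need predictions from at least one model")
    n_samples = len(predictions[0])
    if any(len(p) != n_samples for p in predictions):
        raise ValueError("all models must predict the same number of samples")

    combined = []
    for i in range(n_samples):
        # Count how many base models voted for each label on sample i.
        votes = Counter(model_preds[i] for model_preds in predictions)
        label, _count = votes.most_common(1)[0]
        combined.append(label)
    return combined


# Hypothetical outputs of three base models on five consumption profiles.
rf_preds = [1, 0, 0, 1, 0]
knn_preds = [1, 1, 0, 0, 0]
bg_preds = [0, 0, 0, 1, 1]
print(hard_vote([rf_preds, knn_preds, bg_preds]))  # [1, 0, 0, 1, 0]
```

With an odd number of voters on binary labels, as here, a tie cannot occur. A soft-voting variant would instead average the models' predicted class probabilities before picking the most likely label.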

2. Literature Review

2.1. Energy Theft in SGs

2.2. Applications of Federated Learning

2.3. Federated Learning in Smart Grids

3. Methodology

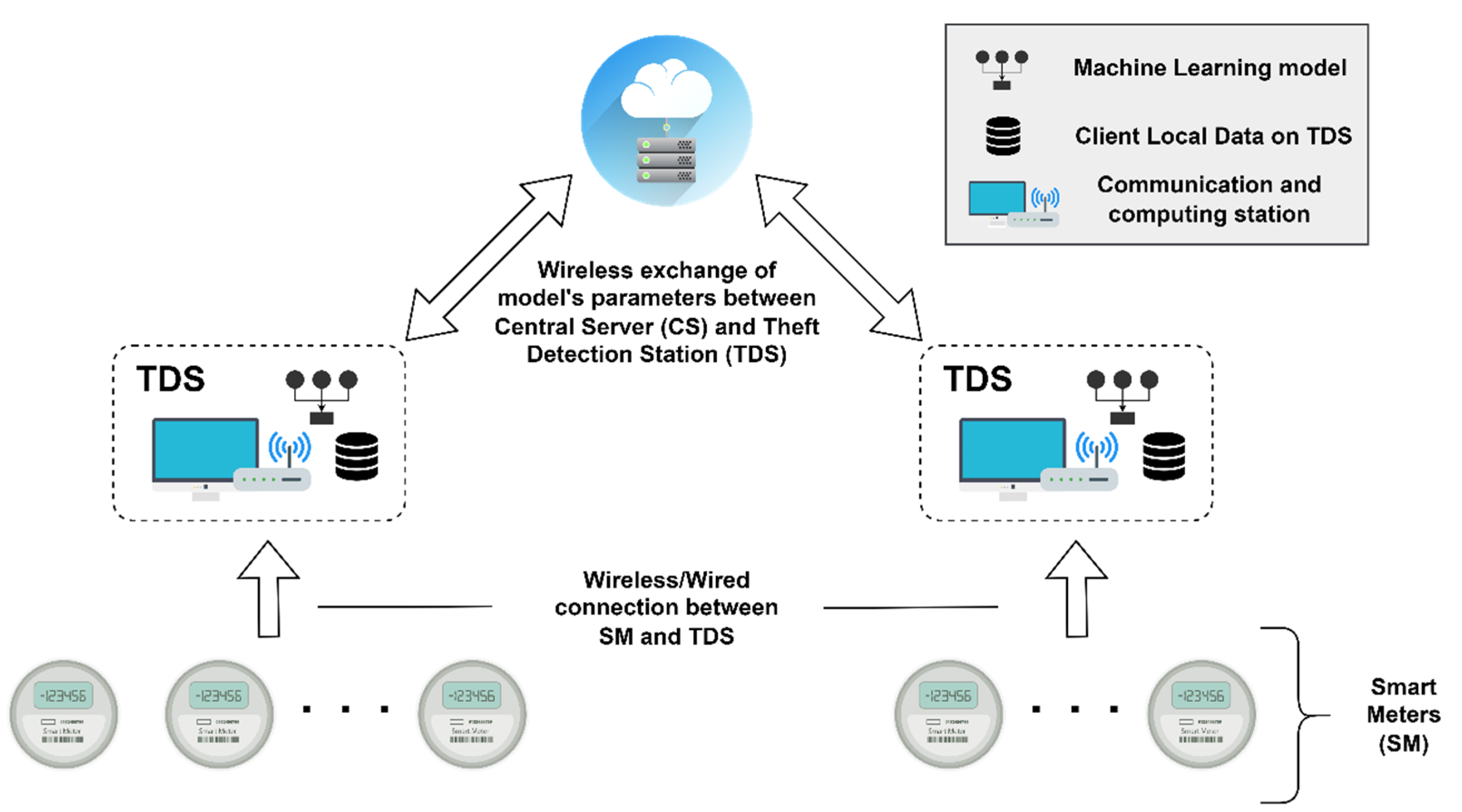

3.1. System Model

- (1) Theft detection station (TDS): A TDS obtains real-time energy consumption data from the group of SMs in its vicinity. This research assumes that (i) the wired or wireless connection between an SM and its TDS is secure; (ii) TDSs are low-powered devices but have sufficient storage and processing power to store the data and train the ML model; (iii) each TDS can automatically infer the labels that associate an SM's previous data with electricity theft; and (iv) each TDS can securely communicate with the CS to exchange ML model parameters. In FedDP, each TDS acts as a federated client. During the training stage, the TDSs download the model parameters (e.g., the weights of neural networks, or the number of neighbors, Leaf_size, etc., in KNN) from the server and evaluate them on their local data.

- (2) Central server (CS): The CS initializes the FL process and is responsible for broadcasting the default parameters and learning models to all TDSs. During the FL process, the CS receives the model parameters from the TDSs, aggregates them, and broadcasts the improved parameters back to all TDSs.
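The Glossary refers to an averaging algorithm running on the server, but the aggregation rule itself is not reproduced in this excerpt. A minimal sketch, assuming the standard FedAvg rule (each TDS's parameter vector weighted by its number of local samples) and numeric parameters such as neural-network weights, is shown below. The names `federated_average`, `run_round`, and `mean_update` are hypothetical; `local_update` stands in for whatever local training or evaluation a TDS performs. How FedDP handles discrete KNN settings such as the number of neighbors is not covered by this sketch.

```python
from typing import Callable, List, Sequence


def federated_average(client_params: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation (assumed): sum_i (n_i / N) * w_i, N = sum_i n_i."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one sample count per client and at least one client")
    total = sum(client_sizes)
    dim = len(client_params[0])
    aggregated = [0.0] * dim
    for params, n in zip(client_params, client_sizes):
        if len(params) != dim:
            raise ValueError("all clients must send vectors of the same length")
        weight = n / total  # TDSs with more local data count for more
        for j, value in enumerate(params):
            aggregated[j] += weight * value
    return aggregated


def run_round(global_params: Sequence[float],
              clients: Sequence[Sequence[float]],
              local_update: Callable[[List[float], Sequence[float]], List[float]]) -> List[float]:
    """One FL round: the CS broadcasts, each TDS updates locally, the CS aggregates."""
    updates, sizes = [], []
    for local_data in clients:
        # Each TDS receives its own copy of the broadcast parameters.
        updates.append(local_update(list(global_params), local_data))
        sizes.append(len(local_data))
    return federated_average(updates, sizes)


def mean_update(params: List[float], data: Sequence[float]) -> List[float]:
    """Hypothetical toy update: replace the single parameter with the local mean."""
    return [sum(data) / len(data)]


print(federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.5]
print(run_round([0.0], [[1.0, 1.0, 1.0], [4.0]], mean_update))  # [1.75]
```

Weighting by sample count keeps a TDS serving only a few meters from pulling the global model as strongly as one serving many; an unweighted mean is the special case in which all TDSs hold the same number of samples.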

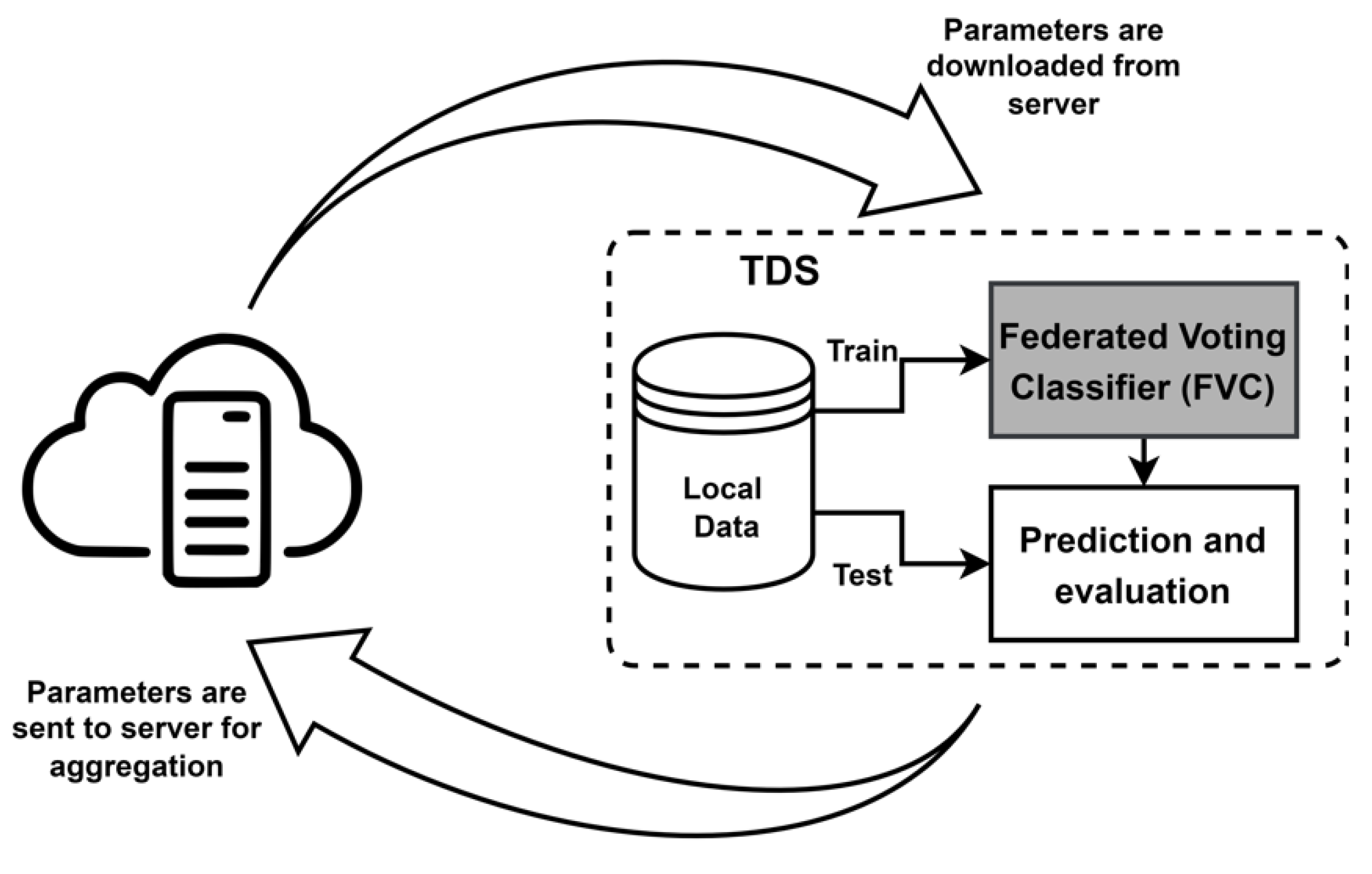

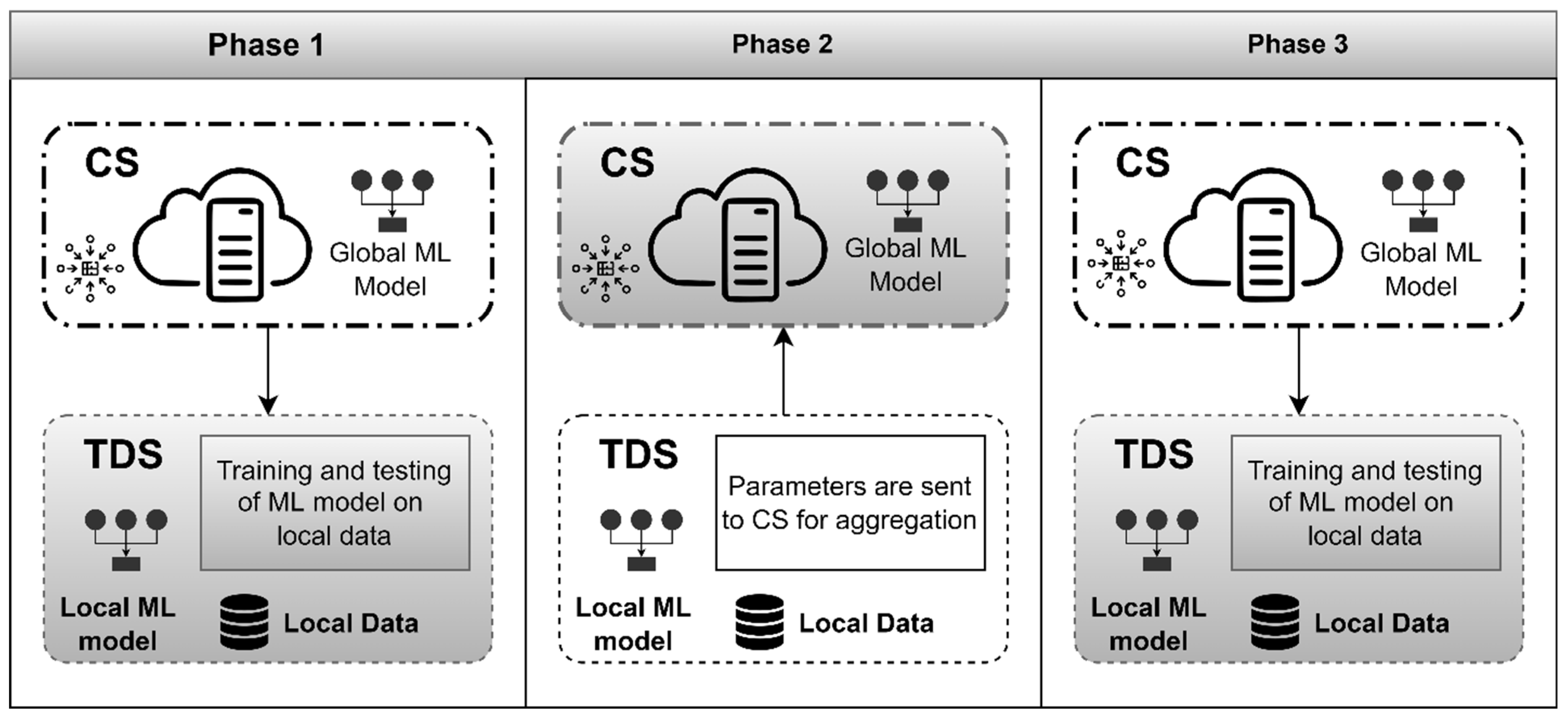

3.2. Privacy-Preserving FedDP

3.3. Predictive Methods

3.3.1. Random Forest (RF)

3.3.2. k-Nearest Neighbors

3.3.3. Bagging Classifier (BG)

3.4. Proposed FVC Algorithm

4. Methodology

4.1. Computing Platform

4.2. Dataset Description

4.3. Evaluation Metrics

5. Results and Discussion

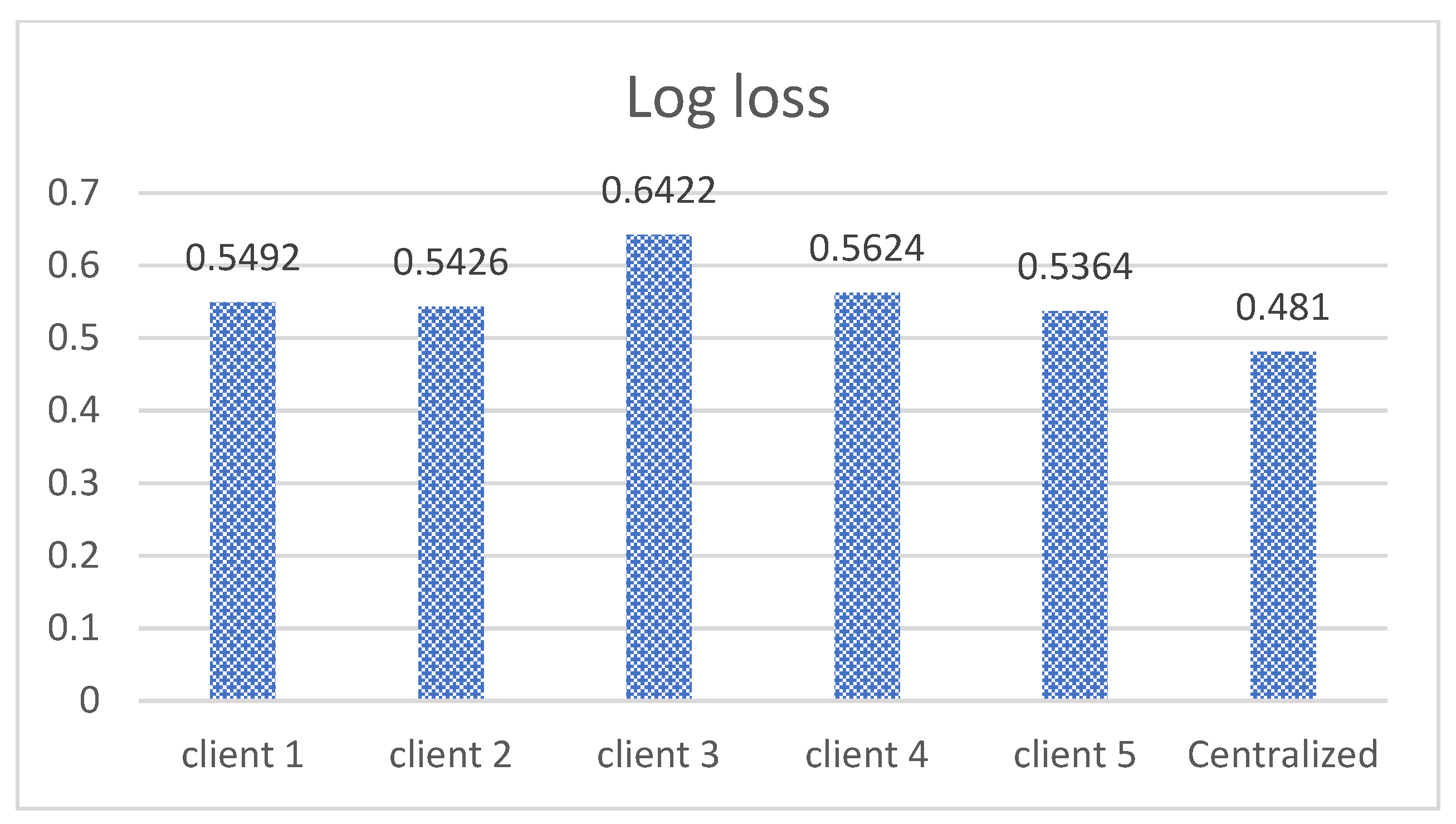

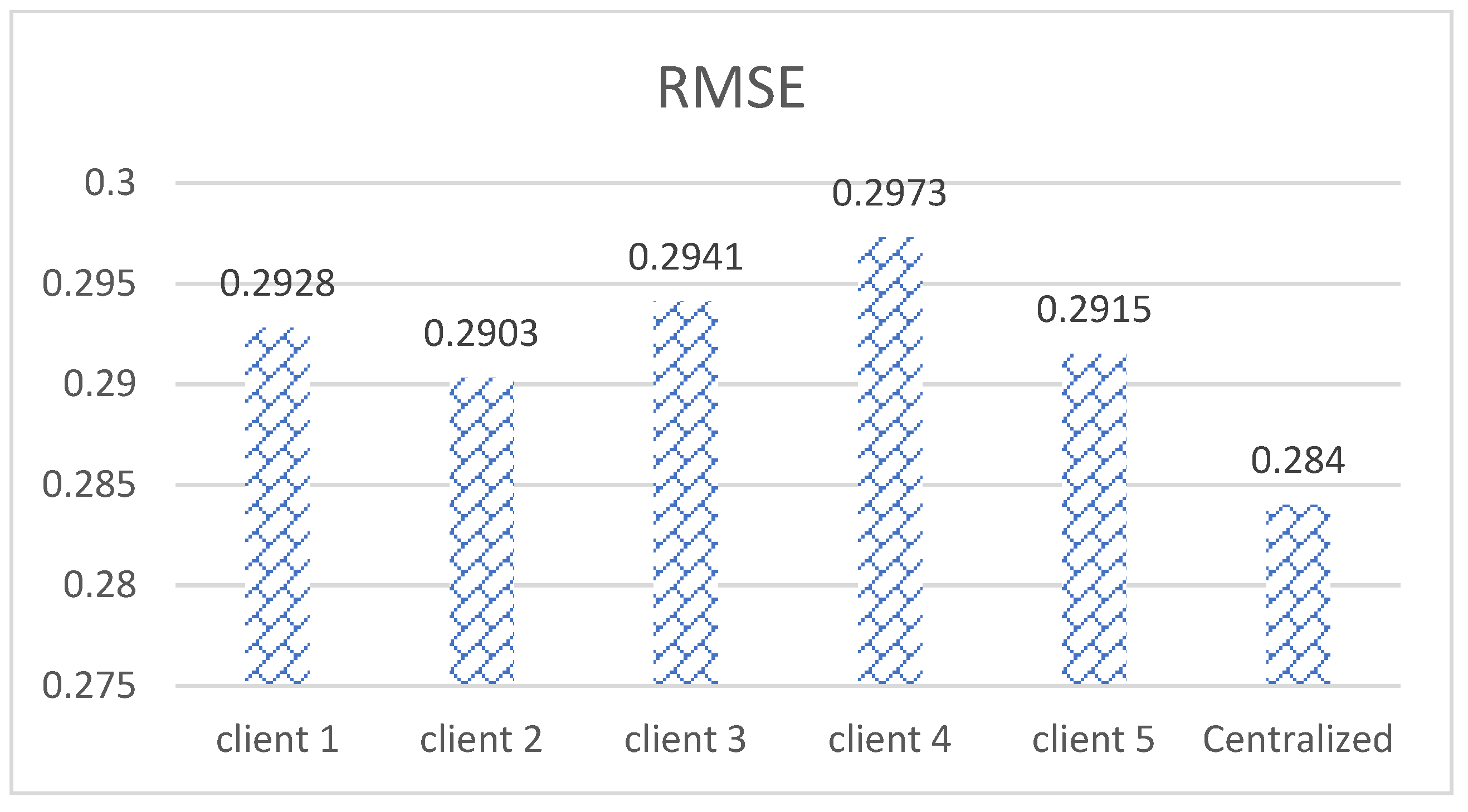

5.1. Comparison with Centralized Voting Classifier

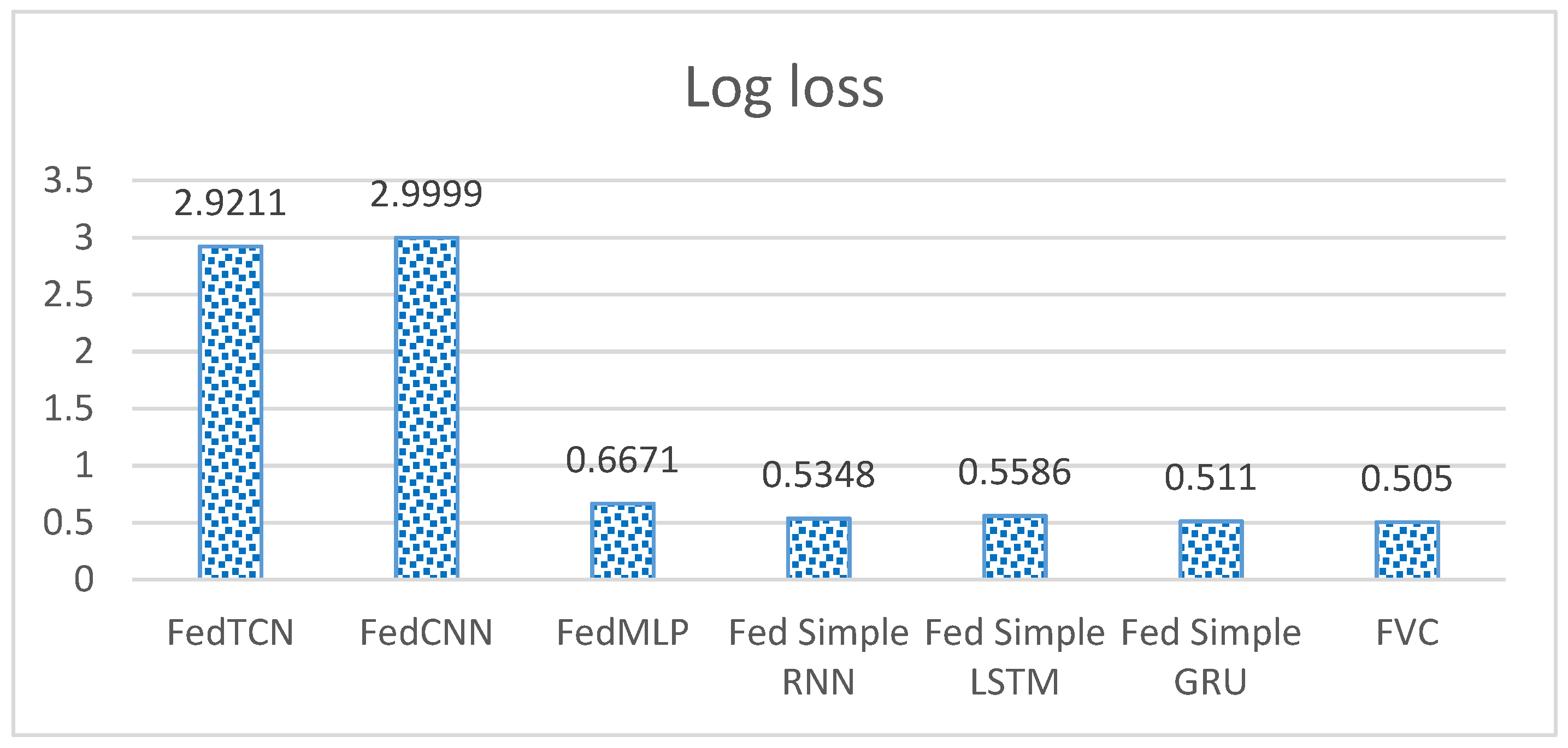

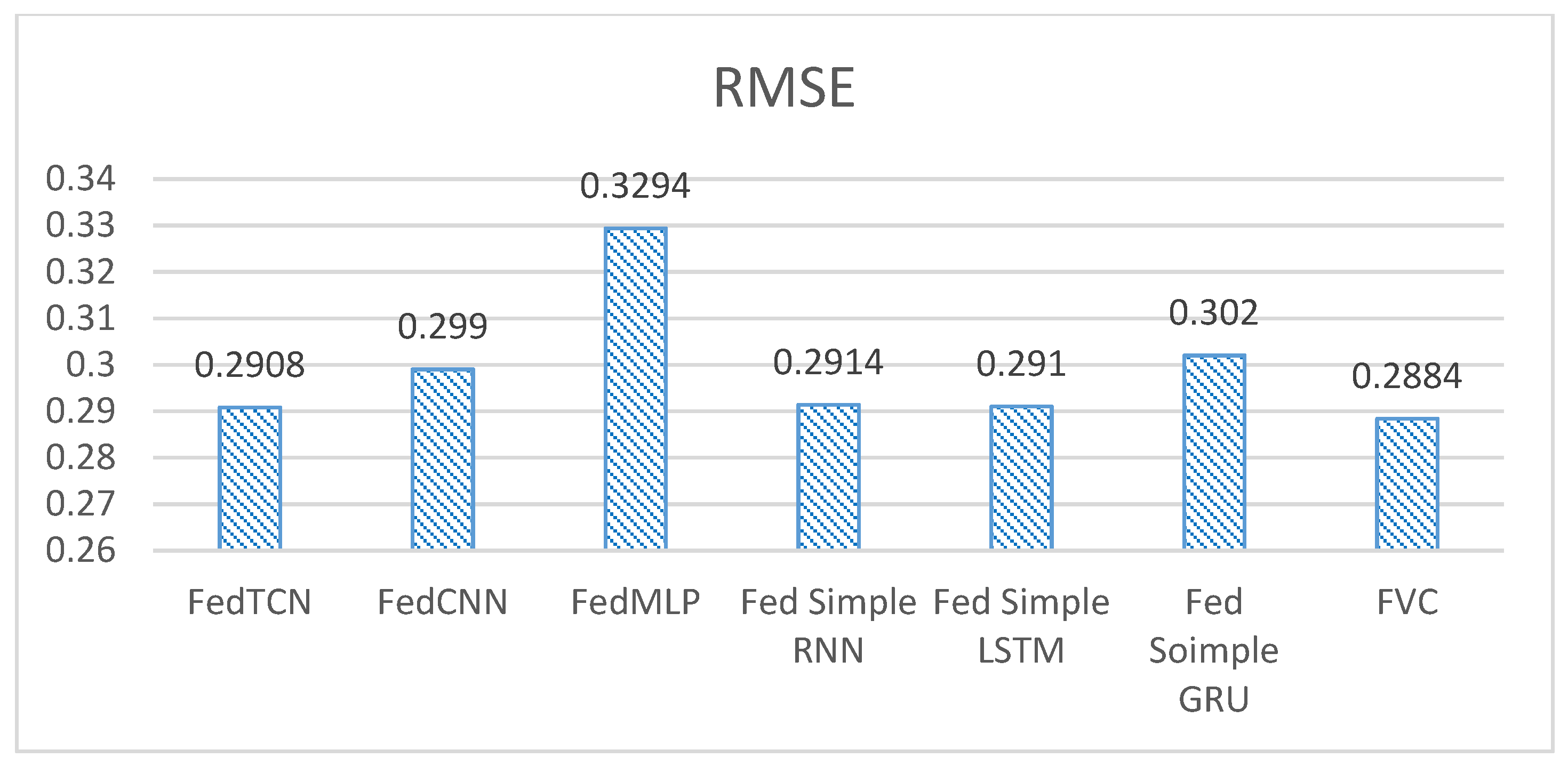

5.2. Comparison with Other State-of-the-Art Models

Computation Overhead

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Glossary

| Description | Symbol |
|---|---|
| federated client | |
| for a data sample | |
| Total number of samples in testing data | |
| label | |
| Classification model | |
| Classification model with optimal parameters | |
| and data point | |
| Total execution time of the federated process | |
| Overall loss of each TDS | |
| Time taken by the averaging algorithm running on the server | |
| Time taken by the server for communication | |
| federated client | |
| Whole dataset | |
| Testing dataset on each client | |
| Training dataset on each client | |
| Set of TDSs | |
| sample | |

References

- Tariq, M.; Ali, M.; Naeem, F.; Poor, H.V. Vulnerability assessment of 6G-enabled smart grid cyber-physical systems. IEEE Internet Things J. 2021, 8, 5468–5475.

- Zhang, X.; Biagioni, D.; Cai, M.; Graf, P.; Rahman, S. An Edge-Cloud Integrated Solution for Buildings Demand Response Using Reinforcement Learning. IEEE Trans. Smart Grid 2021, 12, 420–431.

- Jiang, A.; Wei, H.; Deng, J.; Qin, H. Cloud-Edge Cooperative Model and Closed-Loop Control Strategy for the Price Response of Large-Scale Air Conditioners Considering Data Packet Dropouts. IEEE Trans. Smart Grid 2020, 11, 4201–4211.

- Cui, L.; Qu, Y.; Gao, L.; Xie, G.; Yu, S. Detecting false data attacks using machine learning techniques in smart grid: A survey. J. Netw. Comput. Appl. 2020, 170, 102808.

- Hussain, H.M.; Narayanan, A.; Nardelli, P.H.J.; Yang, Y. What is energy internet? Concepts, technologies, and future directions. IEEE Access 2020, 8, 183127–183145.

- Feng, C.; Wang, Y.; Zheng, K.; Chen, Q. Smart Meter Data-Driven Customizing Price Design for Retailers. IEEE Trans. Smart Grid 2020, 11, 2043–2054.

- Mohajeri, M.; Ghassemi, A.; Gulliver, T.A. Fast Big Data Analytics for Smart Meter Data. IEEE Open J. Commun. Soc. 2020, 1, 1864–1871.

- Zhao, S.; Li, F.; Li, H.; Lu, R.; Ren, S.; Bao, H.; Lin, J.H.; Han, S. Smart and Practical Privacy-Preserving Data Aggregation for Fog-Based Smart Grids. IEEE Trans. Inf. Forensics Secur. 2021, 16, 521–536.

- Alahakoon, D.; Yu, X. Smart Electricity Meter Data Intelligence for Future Energy Systems: A Survey. IEEE Trans. Ind. Inform. 2016, 12, 425–436.

- Pandey, S.R.; Tran, N.H.; Bennis, M.; Tun, Y.K.; Manzoor, A.; Hong, C.S. A Crowdsourcing Framework for On-Device Federated Learning. IEEE Trans. Wirel. Commun. 2020, 19, 3241–3256.

- Zhang, J.; Tao, D. Empowering Things with Intelligence: A Survey of the Progress, Challenges, and Opportunities in Artificial Intelligence of Things. IEEE Internet Things J. 2021, 8, 7789–7817.

- Ahmad, T.; Chen, H.; Wang, J.; Guo, Y. Review of various modeling techniques for the detection of electricity theft in smart grid environment. Renew. Sustain. Energy Rev. 2018, 82, 2916–2933.

- Xia, X.; Xiao, Y.; Liang, W. SAI: A Suspicion Assessment-Based Inspection Algorithm to Detect Malicious Users in Smart Grid. IEEE Trans. Inf. Forensics Secur. 2020, 15, 361–374.

- McDaniel, P.; McLaughlin, S. Security and privacy challenges in the smart grid. IEEE Secur. Priv. 2009, 7, 75–77.

- Taik, A.; Cherkaoui, S. Electrical Load Forecasting Using Edge Computing and Federated Learning. In Proceedings of the 2020 IEEE International Conference on Communications (ICC), Online, 7–11 June 2020.

- Kang, J.; Xiong, Z.; Niyato, D.; Xie, S.; Zhang, J. Incentive Mechanism for Reliable Federated Learning: A Joint Optimization Approach to Combining Reputation and Contract Theory. IEEE Internet Things J. 2019, 6, 10700–10714.

- Su, Z.; Wang, Y.; Luan, T.H.; Zhang, N.; Li, F.; Chen, T.; Cao, H. Secure and Efficient Federated Learning for Smart Grid With Edge-Cloud Collaboration. IEEE Trans. Ind. Inform. 2021, 18, 1333–1344.

- Chen, Y.; Qin, X.; Wang, J.; Yu, C.; Gao, W. FedHealth: A Federated Transfer Learning Framework for Wearable Healthcare. IEEE Intell. Syst. 2020, 35, 83–93.

- Wen, M.; Xie, R.; Lu, K.; Wang, L.; Zhang, K. FedDetect: A Novel Privacy-Preserving Federated Learning Framework for Energy Theft Detection in Smart Grid. IEEE Internet Things J. 2022, 9, 6069–6080.

- Zheng, Z.; Yang, Y.; Niu, X.; Dai, H.N.; Zhou, Y. Wide and Deep Convolutional Neural Networks for Electricity-Theft Detection to Secure Smart Grids. IEEE Trans. Ind. Inform. 2018, 14, 1606–1615.

- Punmiya, R.; Choe, S. Energy theft detection using gradient boosting theft detector with feature engineering-based preprocessing. IEEE Trans. Smart Grid 2019, 10, 2326–2329.

- Li, W.; Logenthiran, T.; Phan, V.T.; Woo, W.L. A novel smart energy theft system (SETS) for IoT-based smart home. IEEE Internet Things J. 2019, 6, 5531–5539.

- Ismail, M.; Shaaban, M.F.; Naidu, M.; Serpedin, E. Deep Learning Detection of Electricity Theft Cyber-Attacks in Renewable Distributed Generation. IEEE Trans. Smart Grid 2020, 11, 3428–3437.

- Hoenkamp, R.; Huitema, G.; Moor, A.D. The neglected consumer: The case of the smart meter rollout in the Netherlands. Renew. Energy Law Policy Rev. 2011, 2, 269–282.

- Yao, D.; Wen, M.; Liang, X.; Fu, Z.; Zhang, K.; Yang, B. Energy Theft Detection with Energy Privacy Preservation in the Smart Grid. IEEE Internet Things J. 2019, 6, 7659–7669.

- Ibrahem, M.I.; Nabil, M.; Fouda, M.M.; Mahmoud, M.M.E.A.; Alasmary, W.; Alsolami, F. Efficient Privacy-Preserving Electricity Theft Detection with Dynamic Billing and Load Monitoring for AMI Networks. IEEE Internet Things J. 2021, 8, 1243–1258.

- Nabil, M.; Ismail, M.; Mahmoud, M.M.E.A.; Alasmary, W.; Serpedin, E. PPETD: Privacy-Preserving Electricity Theft Detection Scheme with Load Monitoring and Billing for AMI Networks. IEEE Access 2019, 7, 96334–96348.

- Hao, M.; Li, H.; Luo, X.; Xu, G.; Yang, H.; Liu, S. Efficient and Privacy-Enhanced Federated Learning for Industrial Artificial Intelligence. IEEE Trans. Ind. Inform. 2020, 16, 6532–6542.

- Li, S.; Cheng, Y.; Liu, Y.; Wang, W.; Chen, T. Abnormal Client Behavior Detection in Federated Learning. arXiv 2019, arXiv:1910.09933.

- Sater, R.A.; Hamza, A.B. A Federated Learning Approach to Anomaly Detection in Smart Buildings. ACM Trans. Internet Things 2020, 2, 1–23.

- Schneble, W.; Thamilarasu, G. Attack detection using federated learning in medical cyber-physical systems. In Proceedings of the 28th International Conference on Computer Communications and Networks (ICCCN), Valencia, Spain, 29 July–1 August 2019; pp. 1–8.

- Liu, Y.; Garg, S.; Nie, J.; Zhang, Y.; Xiong, Z.; Kang, J.; Hossain, M.S. Deep Anomaly Detection for Time-Series Data in Industrial IoT: A Communication-Efficient On-Device Federated Learning Approach. IEEE Internet Things J. 2021, 8, 6348–6358.

- Li, B.; Wu, Y.; Song, J.; Lu, R.; Li, T.; Zhao, L. DeepFed: Federated Deep Learning for Intrusion Detection in Industrial Cyber-Physical Systems. IEEE Trans. Ind. Inform. 2021, 17, 5615–5624.

- Liu, H.; Zhang, X.; Shen, X.; Sun, H. A Federated Learning Framework for Smart Grids: Securing Power Traces in Collaborative Learning. arXiv 2021, arXiv:2103.11870.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cover, T.M.; Hart, P.E. Nearest Neighbor Pattern Classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Beutel, D.J.; Topal, T.; Mathur, A.; Qiu, X.; Parcollet, T.; de Gusmão, P.P.; Lane, N.D. Flower: A Friendly Federated Learning Research Framework. arXiv 2020, arXiv:2007.14390.

- Mothukuri, V.; Khare, P.; Parizi, R.M.; Pouriyeh, S.; Dehghantanha, A.; Srivastava, G. Federated-Learning-Based Anomaly Detection for IoT Security Attacks. IEEE Internet Things J. 2022, 9, 2545–2554.

| After 5 Rounds of FL | Client 1 | Client 2 | Client 3 | Client 4 | Client 5 | Centralized |
|---|---|---|---|---|---|---|
| Test 1 | 91.44 | 91.58 | 91.42 | 91.06 | 91.33 | 92.08 |
| Test 2 | 91.25 | 91.61 | 91.41 | 91.05 | 91.35 | 91.91 |
| Test 3 | 91.31 | 91.44 | 91.37 | 91.19 | 91.64 | 91.97 |
| Test 4 | 91.56 | 91.51 | 91.39 | 91.33 | 91.54 | 91.91 |
| Test 5 | 91.53 | 91.67 | 91.12 | 91.12 | 91.59 | 91.81 |
| Avg | 91.42 | 91.56 | 91.34 | 91.15 | 91.49 | 91.93 |

| After 5 Rounds of FL | Client 1 | Client 2 | Client 3 | Client 4 | Client 5 | Centralized |
|---|---|---|---|---|---|---|
| Test 1 | 88.78 | 88.68 | 88.77 | 87.81 | 88.00 | 90.75 |
| Test 2 | 88.00 | 88.75 | 88.63 | 88.07 | 87.86 | 90.45 |
| Test 3 | 88.26 | 88.19 | 88.46 | 88.40 | 89.02 | 90.57 |
| Test 4 | 89.32 | 88.22 | 88.49 | 88.99 | 88.47 | 90.41 |
| Test 5 | 89.16 | 89.03 | 87.45 | 88.10 | 88.80 | 90.00 |
| Avg | 88.70 | 88.57 | 88.36 | 88.27 | 88.43 | 90.43 |

| After 5 Rounds of FL | Client 1 | Client 2 | Client 3 | Client 4 | Client 5 | Centralized |
|---|---|---|---|---|---|---|
| Test 1 | 84.43 | 88.63 | 87.92 | 87.69 | 88.47 | 89.93 |
| Test 2 | 88.04 | 88.86 | 88.03 | 88.25 | 88.31 | 89.16 |
| Test 3 | 88.15 | 88.51 | 88.41 | 88.02 | 88.43 | 89.29 |
| Test 4 | 88.49 | 88.21 | 88.05 | 88.24 | 88.17 | 89.19 |
| Test 5 | 88.47 | 88.72 | 87.98 | 87.54 | 88.59 | 89.12 |
| Avg | 87.51 | 88.58 | 88.07 | 87.94 | 88.39 | 89.33 |

| After 5 Rounds of FL | Client 1 | Client 2 | Client 3 | Client 4 | Client 5 | Centralized |
|---|---|---|---|---|---|---|
| Test 1 | 91.44 | 91.58 | 91.42 | 91.06 | 91.33 | 91.84 |
| Test 2 | 91.25 | 91.61 | 91.41 | 91.05 | 91.35 | 91.87 |
| Test 3 | 91.31 | 91.44 | 91.37 | 91.19 | 91.64 | 91.97 |
| Test 4 | 91.56 | 91.51 | 91.39 | 91.33 | 91.54 | 91.90 |
| Test 5 | 91.53 | 91.67 | 91.12 | 91.12 | 91.59 | 91.83 |
| Avg | 91.41 | 91.56 | 91.34 | 91.15 | 91.49 | 91.88 |

| Models | Accuracy (%) | Precision (%) | F-Measure (%) | Recall (%) |
|---|---|---|---|---|
| FedTCN [19] | 91.54 | 83.79 | 87.50 | 91.28 |
| FedCNN [18] | 91.31 | 83.86 | 87.17 | 91.05 |
| FedMLP [31] | 89.14 | 86.37 | 87.48 | 89.14 |
| Fed Simple RNN | 91.50 | 83.73 | 87.45 | 88.10 |
| Fed Simple LSTM | 91.53 | 88.38 | 87.86 | 90.10 |
| Fed Simple GRU | 90.87 | 86.63 | 87.92 | 88.56 |
| FVC | 91.67 | 89.03 | 88.72 | 91.67 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ashraf, M.M.; Waqas, M.; Abbas, G.; Baker, T.; Abbas, Z.H.; Alasmary, H. FedDP: A Privacy-Protecting Theft Detection Scheme in Smart Grids Using Federated Learning. Energies 2022, 15, 6241. https://doi.org/10.3390/en15176241