1. Introduction

Modern control systems rely largely on linear mechanisms, as most industries use PID (proportional–integral–derivative) controllers [1]. However, these controllers are constrained by their intrinsic linearity. As a rule of thumb, higher gains in a linear PID lead to faster responses but also increase overshoot and the tendency to oscillate. In addition, changes in the controlled plant may destabilize the system [2].
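The gain trade-off described above can be made concrete with a deliberately simple case: a proportional gain $K$ in unity feedback with the hypothetical plant $1/(s+1)^2$. The closed loop $K/(s^2 + 2s + 1 + K)$ has $\omega_n = \sqrt{1+K}$ and $\zeta = 1/\sqrt{1+K}$, so raising $K$ shortens the peak time but lowers the damping. This is a P-only sketch chosen for its closed-form answer, not a PID design and not the plant studied in this work.

```python
import math


def p_control_step_metrics(k: float) -> tuple[float, float]:
    """Fractional overshoot and peak time (s) of the unit-step response of
    K / (s^2 + 2 s + 1 + K): proportional gain K, hypothetical plant 1/(s + 1)^2,
    unity feedback. Valid for K > 0 (underdamped closed loop)."""
    wn = math.sqrt(1.0 + k)                  # closed-loop natural frequency
    zeta = 1.0 / wn                          # from 2 * zeta * wn = 2
    wd = wn * math.sqrt(1.0 - zeta ** 2)     # damped natural frequency
    overshoot = math.exp(-math.pi * zeta / math.sqrt(1.0 - zeta ** 2))
    peak_time = math.pi / wd
    return overshoot, peak_time


for k in (1.0, 10.0, 100.0):
    mp, tp = p_control_step_metrics(k)
    print(f"K = {k:5.1f}: overshoot = {100 * mp:4.1f} %, peak time = {tp:.2f} s")
```

For $K = 1$, $10$, and $100$ the overshoot (measured relative to the final value $K/(1+K)$) grows from about 4.3% to about 37% and 73%, while the peak time drops from $\pi \approx 3.14$ s to about 0.99 s and 0.31 s.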

Nonlinear controllers can overcome these limitations but are more complex to design. Adaptive control is a particular class of nonlinear controllers that shares several aspects with linear control and is often employed in industry [3]. Several works apply adaptive control techniques to improve reference tracking performance while guaranteeing robust behavior under parameter uncertainties [4,5,6,7]. Many of these strategies employ $\mathcal{H}_2/\mathcal{H}_\infty$ norm analysis [8,9] and parameter estimation based on Lyapunov theory [10] to ensure convergence and robustness. Such techniques use adaptive controllers to address robustness, but they are not capable of finding the best control parameters. Furthermore, they are not simple and usually require extensive computation.

Adaptive PID is a kind of adaptive control that combines the structure of the PID controller with an adaptive rule for the controller gains [11,12,13]. It is often simpler to implement and is a good solution for industry, where PID controllers are already deployed in many facilities. In this case, the design schemes rely on deterministic methods or linear optimization to achieve reasonable solutions [14,15].

GAPID (Gaussian Adaptive PID) is an adaptive control system that can produce fast and robust control without the usual losses in response quality, such as instability under plant changes or high overshoot. Its structure is relatively simple [16]. However, for most systems, its parameters cannot be determined by deterministic methods. In this sense, bio-inspired optimization algorithms are among the best mechanisms for this task [17,18,19].
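The exact Gaussian adaptive rule of the GAPID is defined in Section 3. As a minimal sketch only, assuming that each gain moves between a minimum value at zero error and a maximum value at large errors along a Gaussian of the error (a hypothetical parameterization that may differ from the one used in this work), a GAPID-style controller could look as follows. The names `gaussian_gain`, `GaussianAdaptivePID`, `k_min`, `k_max`, and `q` are illustrative.

```python
import math


def gaussian_gain(error: float, k_min: float, k_max: float, q: float) -> float:
    """Hypothetical Gaussian schedule: the gain equals k_min at zero error and
    tends to k_max as |error| grows; q sets how fast (the Gaussian's width)."""
    return k_max - (k_max - k_min) * math.exp(-(q * error) ** 2)


class GaussianAdaptivePID:
    """Discrete PID whose P, I and D gains are each scheduled by gaussian_gain.

    kp, ki, kd are (k_min, k_max, q) tuples; dt is the sampling period.
    This parameterization is illustrative, not the one defined in Section 3.
    """

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = 0.0

    def update(self, setpoint: float, measurement: float) -> float:
        e = setpoint - measurement
        self.integral += e * self.dt                  # rectangular integration
        derivative = (e - self.prev_error) / self.dt  # backward difference
        self.prev_error = e
        return (gaussian_gain(e, *self.kp) * e
                + gaussian_gain(e, *self.ki) * self.integral
                + gaussian_gain(e, *self.kd) * derivative)
```

Under this assumed form, large errors get high gains for a fast transient, while small errors near the set-point get lower gains that limit overshoot; an optimizer would search for the three Gaussian parameters of each gain.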

Bio-inspired metaheuristics draw on the fundamental relationships among groups of living beings, such as bee colonies or flocks of birds, or on the evolutionary mechanisms of natural selection first described by Charles Darwin [20,21,22,23]. They have recently gained attention for finding reasonable solutions to multimodal functions without prior knowledge of the problem [24,25]. This behavior is crucial because most real-world problems are challenging due to multimodality, nonlinearity, non-differentiability, and other features [26,27].

However, these algorithms differ in several characteristics (interaction between agents, choice of fitness function, evolution schemes across iterations, etc.), which can lead to different results and performances for the same problem [25,28].

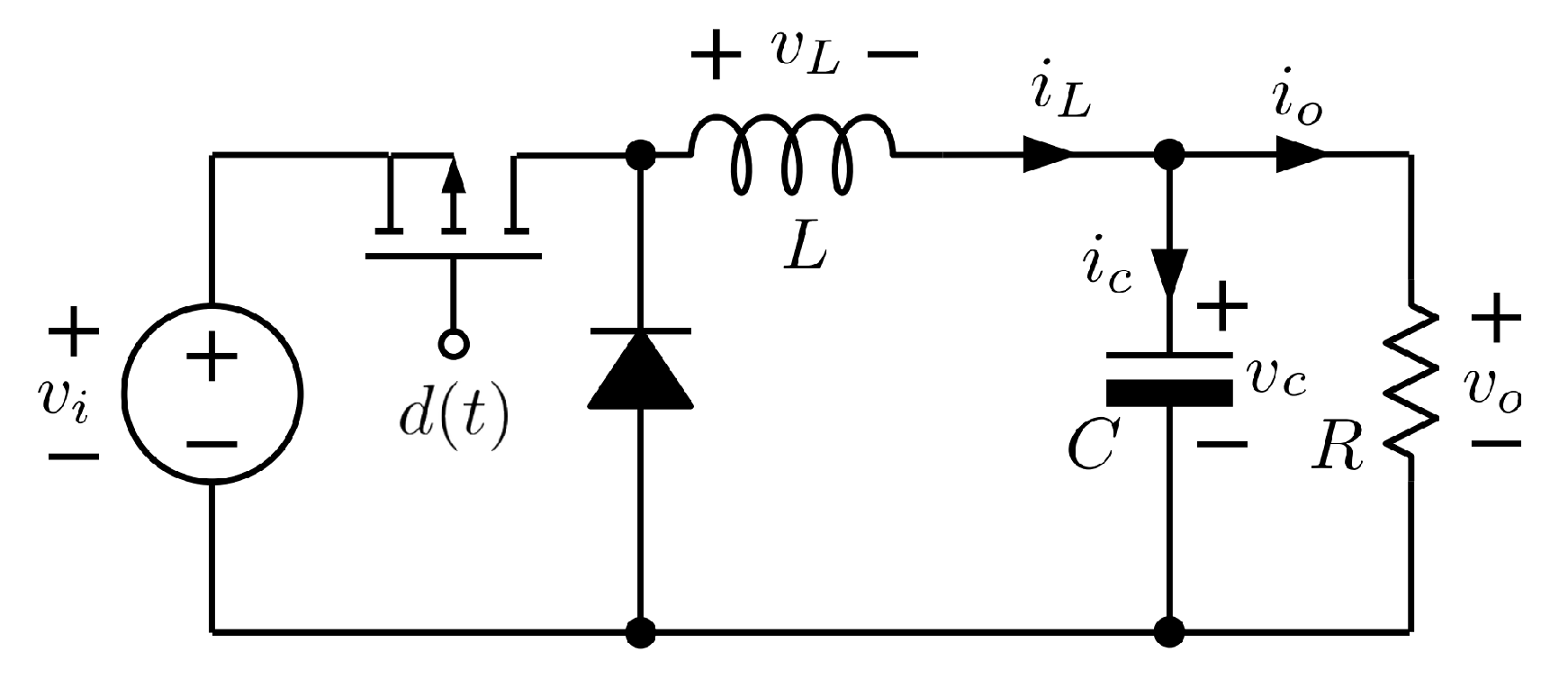

Power converters in the Buck topology are typical step-down converters found in millions of power supplies, mainly for electronic equipment. They operate in closed loop to stabilize the output voltage, which demands a robust controller to guarantee this stabilization. Their mathematical model is a second-order plant that can exhibit an underdamped output depending on the operating conditions. A suitable controller is essential to compensate efficiently for this underdamping and other disturbances and to provide a good-quality power supply. These characteristics justify studying optimization methods of adaptive PID for voltage control of this plant.
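The second-order, possibly underdamped, behavior mentioned above can be illustrated with the standard ideal averaged model of a Buck converter in continuous conduction mode, whose duty-cycle-to-output transfer function is $V_{in}/(LCs^2 + (L/R)s + 1)$, giving $\omega_n = 1/\sqrt{LC}$ and $\zeta = \sqrt{L/C}/(2R)$. The sketch below evaluates both; the component values are hypothetical, not those of the converter studied here, and parasitic resistances are neglected.

```python
import math


def buck_second_order(L: float, C: float, R: float) -> tuple[float, float]:
    """Natural frequency (rad/s) and damping ratio of the ideal averaged Buck
    converter in continuous conduction mode, whose duty-cycle-to-output transfer
    function is Vin / (L*C*s^2 + (L/R)*s + 1). Parasitic resistances are ignored."""
    wn = 1.0 / math.sqrt(L * C)
    zeta = math.sqrt(L / C) / (2.0 * R)
    return wn, zeta


# Hypothetical components (not the converter studied here): 1 mH, 100 uF.
for R in (5.0, 10.0, 50.0):
    wn, zeta = buck_second_order(1e-3, 100e-6, R)
    print(f"R = {R:4.0f} ohm: wn = {wn:6.0f} rad/s, zeta = {zeta:.3f}")
```

With these values, $\zeta$ falls from about 0.32 at 5 Ω to about 0.03 at 50 Ω: a lighter load makes the open-loop output more underdamped, which is the load-dependent behavior a controller must compensate for.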

In this regard, this investigation analyzes and compares the performance of 30 bio-inspired optimization variations, divided equally among versions of Genetic Algorithms (GA), Differential Evolution (DE), and Particle Swarm Optimization (PSO) [29], all applied to a GAPID system controlling a Buck converter.

This exhaustive comparison is unprecedented, fills a gap in the literature, and represents this work’s significant contribution. Although these are among the most widely used optimization algorithms, there is still room for an extensive investigation of their application to the GAPID [30,31].

In summary, the contributions of this investigation are as follows:

The inclusion of an additional metaheuristic method (DE) in this analysis;

The use of a varied set of metaheuristics, seeking better performance when dynamic changes occur in the plant;

The application of metaheuristics and their variations to a GAPID controller implemented in a Buck converter, showing that this controller can provide optimal performance.

The rest of the paper is organized as follows:

Section 2 presents the current state of the art of adaptive control techniques;

Section 3 shows the theoretical framework of the GAPID controller;

Section 4 discusses the bio-inspired optimization models used to tune the GAPID;

Section 5 describes the simulation development, including the optimization strategies analysis, performance assessment, and statistical tests;

Section 6 presents the results achieved by the optimization methods as well as a critical analysis of such results;

Section 7 presents the main conclusions and future works.

2. State of the Art of Adaptive Control Techniques

In [32], the authors proposed an online adaptive fuzzy gain scheduling PID (FGPID) controller for the load frequency control (LFC) of a three-area interconnected modern power system. Its performance was investigated by comparison with a fixed-structure controller. The adaptive terms of the proposed controller were derived from an appropriate Lyapunov function and do not depend on the controlled system parameters. The results indicated that the proposed FGPID controller performs better than the other fixed-structure controllers.

In [33], the authors proposed an adaptive fuzzy PID cross-coupled contour error control method in which the fuzzy PID controller is tuned by an improved PSO algorithm. Compared with the traditional fuzzy PID cross-coupled control method, the approach based on the improved PSO algorithm reduced the maximum contour error by 53.64%, achieving a better control effect.

A comparison of three hybrid approaches (PID-PSO, Fuzzy-PSO, and GA-PSO) for the direct torque control (DTC) and speed control of a dual star induction motor (DSIM) drive was given in [34]. The results showed that Fuzzy-PSO performed best, reducing high torque ripples, improving the rise time, and avoiding disturbances that affect the drive performance.

In [35], the authors presented a self-tuning PID controller based on an improved backpropagation (BP) neural network trained with an improved Fletcher–Reeves conjugate gradient method. The results showed that the method reduced the overshoot of the BP-neural-network-based PID control, increased the speed of the BP neural network, and reduced the settling time of the PID control.

In [36], a closed-loop motion control system based on a backpropagation neural network PID controller implemented on a Xilinx field-programmable gate array (FPGA) was proposed. The authors stated that the proposed system can self-tune the PID control parameters. The results indicated reliable performance, high real-time performance, and strong anti-interference capability. Compared with traditional MCU-based control methods, the FPGA-based adaptive control method converged more than three orders of magnitude faster, demonstrating its superiority over traditional methods.

In [37], the authors presented another self-adjusting PID controller based on a backpropagation artificial neural network, with GA for offline training, to control the speed of a DC motor. The reported difference lies in using the error for network training and the maximum desired values of overshoot, settling time, and steady-state error as input data for the network. As a disadvantage, the authors report that the network’s performance is tied to the operating range of the system, so its accuracy is not constant and performance may decrease at the limits of system operation.

A time-domain performance criterion based on multi-objective Pareto front solutions was proposed in [38]. The proposed objective function was tested on an automatic voltage regulator (AVR) system using the PSO algorithm. The authors report that the simulation results showed improved performance compared with traditional objective functions.

The use of PSO and GA methods for tuning PID controller parameters was presented in [39]. The authors reported that the proposed PSO method avoids the premature convergence of the GA method, improving system performance with more robust stability and efficiency.

In [40], a modified gray wolf optimizer (GWO) algorithm with a novel fitness function was presented to tune the parameters of a fractional-order PID (FOPID) controller for the terminal voltage control of an AVR system. The authors reported robust results compared with other state-of-the-art techniques.

Ouyang and Pano, in [41,42], presented a position domain PID controller (PDC-PID) for a robotic manipulator, tuned using three different metaheuristic optimization algorithms. In these works, DE, GA, and PSO were used to optimize the controller gains with three distinct fitness functions. The authors reported that PSO and DE generally performed better than GA, which was always the first to converge.

Studies on the use of bio-inspired optimization algorithms in the design of the GAPID controller are still ongoing. This control strategy is challenging to design because there is no algebraic solution for the adaptive parameters of the controller.

In this sense, in [43], the authors presented six variations of genetic algorithms for tuning the GAPID and showed a good enhancement of GAPID over the traditional PID. The authors in [44] evaluated six analogous variations of PSO, which also demonstrated good enhancements of GAPID over PID. Both optimization algorithms were also employed and compared in [17] on a plant based on a step-down DC–DC converter, with promising results and a slight advantage for PSO. PSO also presented faster convergence and lower computational cost; on the other hand, its agent positions in the last iterations were highly concentrated, indicating a low search capacity and a possible convergence to a suboptimal solution instead of the global one.

In [45], PSO, the Artificial Bee Colony (ABC) algorithm, and the Whale Optimization Algorithm (WOA) were compared for tuning the Gaussian Adaptive PID controller, considering a Buck converter with a resistive and a nonlinear load as a case study. The authors reported that PSO achieved the best results.

Finally, in [46], the authors analyzed two metaheuristic optimization techniques, GA and PSO, with six variations each, and compared them regarding the convergence, quality, and dispersion of the solutions. The novelty was that the analysis included plant load variation, which demanded a modification of the optimization strategy and resulted in a more robust controller behavior. The authors reported that the GAPID presented fast responses with very low overshoot and good robustness to load changes, with minimal variations, which was impossible to achieve with the traditional PID.

In the works cited above, GA and PSO were used to tune the GAPID, with PSO performing better than GA, whereas DE was not addressed. Therefore, the present study introduces DE as a third optimization technique, investigates its possible variations, and compares its results with those obtained from GA and PSO.

5. Methodology

In this work, an adaptive controller based on a Gaussian adaptive rule (GAPID) is addressed, which must be properly designed to satisfy the system’s needs with optimal performance. It is a very flexible controller because the Gaussian parameters can be defined separately for each adaptive PID gain. However, this flexibility also poses a problem: defining these parameters is not trivial, and no algebraic method exists to determine them. Bio-inspired metaheuristic algorithms are used to accomplish this task. They face a complex challenge because GAPID optimization is a multimodal problem. The main idea is to examine how different algorithms deal with such problems, analyzing their behavior during the optimization iterations and their results regarding performance, convergence, dispersion, and correlations of the solutions.

For GA, and similarly for DE, the variations change the selection, crossover, and mutation phases, although other operators could also be varied. For PSO, the variations concern the initial inertia and the topology (ring or global). These algorithm variations are presented in Section 4.
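The selection, crossover, and mutation operators that the GA variations change can be sketched as follows. This is a minimal NumPy illustration, assuming real-valued chromosomes and a fitness to be maximized; the tournament size, the SBX distribution index `eta`, and the mutation rate and step size are hypothetical defaults, not the settings used in this work, and the death tournament and stochastic sampling operators are not shown.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed for reproducibility


def roulette(pop: np.ndarray, fit: np.ndarray) -> np.ndarray:
    """Fitness-proportional selection; fitness must be positive (e.g. in (0, 1])."""
    return pop[rng.choice(len(pop), p=fit / fit.sum())]


def tournament(pop: np.ndarray, fit: np.ndarray, k: int = 2) -> np.ndarray:
    """Return the fittest of k distinct individuals drawn at random (maximization)."""
    idx = rng.choice(len(pop), size=k, replace=False)
    return pop[idx[np.argmax(fit[idx])]]


def single_point_crossover(p1, p2):
    """Swap the gene tails after a random cut point (needs at least 2 genes)."""
    cut = rng.integers(1, len(p1))
    return (np.concatenate([p1[:cut], p2[cut:]]),
            np.concatenate([p2[:cut], p1[cut:]]))


def arithmetic_crossover(p1, p2):
    """Children are complementary convex combinations of the parents."""
    a = rng.random()
    return a * p1 + (1 - a) * p2, (1 - a) * p1 + a * p2


def sbx_crossover(p1, p2, eta: float = 15.0):
    """Simulated binary crossover (Deb and Agrawal), applied gene by gene."""
    u = rng.random(len(p1))
    beta = np.where(u <= 0.5,
                    (2.0 * u) ** (1.0 / (eta + 1.0)),
                    (1.0 / (2.0 * (1.0 - u))) ** (1.0 / (eta + 1.0)))
    c1 = 0.5 * ((1 + beta) * p1 + (1 - beta) * p2)
    c2 = 0.5 * ((1 - beta) * p1 + (1 + beta) * p2)
    return c1, c2


def mutate(x, rate: float = 0.05, sigma: float = 0.1):
    """Fixed-rate Gaussian mutation: each gene is perturbed with probability rate."""
    mask = rng.random(len(x)) < rate
    return x + mask * rng.normal(0.0, sigma, len(x))
```

All three crossovers preserve the parents’ gene-wise sum; they differ in how far the children can land from the parents, which is what changes the exploration behavior among the GA variations.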

All the variations were implemented in MATLAB and SIMULINK, and the performance of the different optimization strategies was analyzed only in the simulation environment.

Graphs of the performance metrics (Section 5.2) and statistical methods (Section 5.3) are employed to provide scientific support for the results (Section 6) and conclusions.

5.1. Optimization Strategies Analysis

From this perspective, three basic algorithms were considered: the GA, PSO, and DE metaheuristics [25,31,56,57]. For each optimization strategy, 10 different combinations were developed, totaling 30 distinct variations, which make up a relatively wide universe of analysis and comparison. They are listed below.

Genetic Algorithm (GA) variations:

GA 1: Roulette with a 70% crossover rate, single-point crossover, fixed mutation rate;

GA 2: Roulette, single-point crossover, fixed mutation rate;

GA 3: Roulette with survival selection, single-point crossover, fixed mutation rate;

GA 4: Tournament, single-point crossover, fixed mutation rate;

GA 5: Tournament with survival selection, single-point crossover, fixed mutation rate;

GA 6: Death tournament, single-point crossover, fixed mutation rate;

GA 7: Stochastic sampling, single-point crossover, fixed mutation rate;

GA 8: Tournament, arithmetic crossover, fixed mutation rate;

GA 9: Tournament, single-point crossover, dynamic mutation rate;

GA 10: Tournament, SBX crossover, fixed mutation rate.

Particle Swarm Optimization (PSO) variations:

PSO 1: No initial inertia, global topology;

PSO 2: Initial inertia, global topology;

PSO 3: Decreasing inertia weight, global topology;

PSO 4: No initial inertia with mutation, global topology;

PSO 5: No initial inertia with a random velocity increase, global topology;

PSO 6: No initial inertia, ring topology;

PSO 7: Initial inertia, ring topology;

PSO 8: Decreasing inertia weight, ring topology;

PSO 9: No initial inertia with mutation, ring topology;

PSO 10: No initial inertia with a random velocity increase, ring topology.

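Differential Evolution (DE) variations:

DE 1: Rand/1/Bin;

DE 2: Best/1/Bin;

DE 3: Rand/2/Bin;

DE 4: Target-to-Best/2/Bin;

DE 5: Best/2/Bin;

DE 6: Rand/1/Exp;

DE 7: Best/1/Exp;

DE 8: Rand/2/Exp;

DE 9: Target-to-Best/2/Exp;

DE 10: Best/2/Exp.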
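The DE mutation strategies and the two crossover types (Bin and Exp) in the list above can be sketched as follows. This is a minimal NumPy illustration for a fitness to be maximized; the scale factor `F = 0.8` is a hypothetical value, the crossover rate of 0.3 is the one mentioned in Section 6, and the Target-to-Best/2 strategies are omitted because their exact definition varies across the literature.

```python
import numpy as np

rng = np.random.default_rng(0)


def mutant(pop, fit, i, strategy="best/1", F=0.8):
    """Donor vector for target i (fitness maximized). Implements rand/1,
    best/1, rand/2 and best/2; target-to-best variants are omitted."""
    others = [j for j in range(len(pop)) if j != i]
    r = rng.choice(others, size=5, replace=False)   # distinct indices, all != i
    best = pop[np.argmax(fit)]
    if strategy == "rand/1":
        return pop[r[0]] + F * (pop[r[1]] - pop[r[2]])
    if strategy == "best/1":
        return best + F * (pop[r[0]] - pop[r[1]])
    if strategy == "rand/2":
        return pop[r[0]] + F * (pop[r[1]] - pop[r[2]] + pop[r[3]] - pop[r[4]])
    if strategy == "best/2":
        return best + F * (pop[r[0]] - pop[r[1]] + pop[r[2]] - pop[r[3]])
    raise ValueError(f"unknown strategy: {strategy}")


def binomial_crossover(x, v, CR=0.3):
    """'Bin': each gene comes from v with probability CR; one random gene always does."""
    mask = rng.random(len(x)) < CR
    mask[rng.integers(len(x))] = True
    return np.where(mask, v, x)


def exponential_crossover(x, v, CR=0.3):
    """'Exp': copy a run of consecutive genes (wrapping around) from v, starting at
    a random gene and continuing while U(0, 1) < CR, up to len(x) genes."""
    trial = x.copy()
    j, copied = rng.integers(len(x)), 0
    while True:
        trial[j] = v[j]
        j, copied = (j + 1) % len(x), copied + 1
        if copied == len(x) or rng.random() >= CR:
            return trial


def de_generation(pop, fitness_fn, strategy="best/1", crossover=binomial_crossover):
    """One DE generation with greedy one-to-one replacement (maximization)."""
    fit = np.array([fitness_fn(x) for x in pop])
    new_pop = pop.copy()
    for i in range(len(pop)):
        trial = crossover(pop[i], mutant(pop, fit, i, strategy))
        if fitness_fn(trial) >= fit[i]:
            new_pop[i] = trial
    return new_pop
```

In this notation, Best/1/Bin corresponds to `strategy="best/1"` with `binomial_crossover`, and Best/1/Exp to the same strategy with `exponential_crossover`.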

It is important to remark that all algorithms must share the same fundamental parameters, such as population size, number of iterations, and number of independent runs, leaving only the parameters of each particular variation mutable, to enable a fair comparison between them. Moreover, since the algorithms follow different concepts of evolution (GA and DE are evolutionary, whereas PSO is based on swarm movement), the Shapiro–Wilk, Kruskal–Wallis, and Dunn–Sidak post-hoc tests were employed to determine whether the solutions of distinct algorithms differ significantly.

Thus, for each optimization strategy (PSO, GA, and DE), 50 agents were considered, with a maximum of 100 iterations as the stopping criterion, and 30 independent simulations with uniform random initialization.
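A minimal PSO sketch with the settings stated above (50 agents, 100 iterations, uniform random initialization) and a switch between global and ring topologies follows. Assumptions: the acceleration coefficients `c1 = c2 = 2` and the inertia weight decreasing linearly from 0.9 to 0.4 are hypothetical defaults; the constant-inertia and no-inertia variants can be approximated by choosing equal `w_start` and `w_end` (or zero), but this mapping to the paper’s variants is an assumption, and the mutation and random-velocity-increase variants are not shown.

```python
import numpy as np


def pso(fitness_fn, dim, bounds, n_particles=50, iters=100, topology="global",
        w_start=0.9, w_end=0.4, c1=2.0, c2=2.0, seed=0):
    """Maximize fitness_fn with PSO. The inertia weight goes linearly from w_start
    to w_end (equal values give a constant weight; 0 removes inertia).
    topology="global": every particle follows the swarm's best personal best;
    topology="ring": each particle follows the best of itself and its 2 neighbours."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x = rng.uniform(lo, hi, (n_particles, dim))      # uniform random initialization
    v = np.zeros_like(x)
    pbest, pfit = x.copy(), np.array([fitness_fn(p) for p in x])
    idx = np.arange(n_particles)
    ring = np.stack([(idx - 1) % n_particles, idx, (idx + 1) % n_particles], axis=1)
    for t in range(iters):
        w = w_start + (w_end - w_start) * t / max(iters - 1, 1)
        if topology == "global":
            guide = pbest[np.argmax(pfit)]            # broadcast to all particles
        elif topology == "ring":
            guide = pbest[ring[idx, np.argmax(pfit[ring], axis=1)]]
        else:
            raise ValueError(f"unknown topology: {topology}")
        r1, r2 = rng.random((2, n_particles, dim))
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (guide - x)
        x = np.clip(x + v, lo, hi)                    # keep particles inside bounds
        fit = np.array([fitness_fn(p) for p in x])
        improved = fit > pfit
        pbest[improved], pfit[improved] = x[improved], fit[improved]
    best = np.argmax(pfit)
    return pbest[best], pfit[best]
```

The ring topology spreads information more slowly than the global one, which tends to preserve diversity at the cost of slower convergence.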

5.2. Performance Assessment

To evaluate the effectiveness of a set of tuned parameters and to compare the results of the different optimizations, a performance measure is required. The chosen measure was the IAE (Integral of Absolute Error), in which the absolute value of the error between the set-point and the output is integrated over time. The IAE is given by Equation (15):

$$\mathrm{IAE} = \int_{0}^{T} \lvert e(t) \rvert \, dt = \int_{0}^{T} \lvert r(t) - y(t) \rvert \, dt \qquad (15)$$

where $r(t)$ is the set-point, $y(t)$ is the output, and $T$ is the simulation time.

The IAE value is then used to compute a normalized fitness value between 0 and 1 that measures the quality of each set of parameters [45]; the closer this value is to unity, the better. This normalization is given by Equation (16).
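As a minimal sketch of Equation (15) on sampled simulation data, the IAE can be approximated with the trapezoidal rule. The mapping `1 / (1 + IAE)` used below is only a hypothetical normalization to (0, 1]; the normalization actually used in this work is Equation (16), and the first-order response is an illustrative signal, not a simulation result.

```python
import numpy as np


def iae(t, setpoint, output) -> float:
    """Integral of Absolute Error (Eq. (15)) of sampled signals, trapezoidal rule."""
    e = np.abs(np.asarray(setpoint, dtype=float) - np.asarray(output, dtype=float))
    return float(np.sum(0.5 * (e[1:] + e[:-1]) * np.diff(t)))


def normalized_fitness(iae_value: float) -> float:
    """Hypothetical mapping of IAE onto (0, 1]; equals 1 only for zero error.
    It stands in for Eq. (16) and is not necessarily the same formula."""
    return 1.0 / (1.0 + iae_value)


# Illustrative first-order response to a 30 V step (time constant 1 ms).
t = np.linspace(0.0, 0.02, 20001)
y = 30.0 * (1.0 - np.exp(-t / 1e-3))
value = iae(t, 30.0, y)
print(value, normalized_fitness(value))
```

For this signal the IAE approaches $30 \times 0.001 = 0.03$ V·s; any tuning that shrinks the error area pushes the fitness toward 1.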

5.3. Statistical Tests

A fair analysis of the metaheuristics requires statistical tests to compare their outputs. Before the analysis, the results must be grouped according to their parameterization (i.e., by variation). The Shapiro–Wilk test is a normality test that determines whether a sample of numbers came from a normal distribution [61]. Its null hypothesis is that the samples are normal with unspecified mean and variance. The Shapiro–Wilk statistic is given by Equation (17):

$$W = \frac{\left(\sum_{i=1}^{n} a_i\, x_{(i)}\right)^2}{\sum_{i=1}^{n} \left(x_i - \bar{x}\right)^2}, \qquad (a_1, \ldots, a_n) = \frac{m^{\mathsf{T}} V^{-1}}{\lVert V^{-1} m \rVert} \qquad (17)$$

where:

$x_{(i)}$ is the $i$-th order statistic (the $i$-th smallest value) of the sample of size $n$; the vector $m = (m_1, \ldots, m_n)^{\mathsf{T}}$ is made of the expected values of the order statistics of independent and identically distributed random variables sampled from a standard normal distribution; $V$ is the covariance matrix of those normal order statistics; and $\bar{x}$ is the sample mean.

Based on this normality test, it is common to observe that part of the results does not follow a normal distribution. In that case, the subsequent analysis applies the Kruskal–Wallis test; if all samples are normally distributed, ANOVA could be used instead.
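The decision rule above can be sketched with SciPy, whose `scipy.stats.shapiro`, `scipy.stats.kruskal`, and `scipy.stats.f_oneway` functions implement the three tests. The significance level of 0.05 is an assumed default, `choose_group_test` is a hypothetical helper name, and the samples are synthetic, for illustration only.

```python
import numpy as np
from scipy import stats


def choose_group_test(groups, alpha=0.05):
    """Apply Shapiro-Wilk to every group; if any group rejects normality, compare
    the groups with Kruskal-Wallis, otherwise with one-way ANOVA.
    Returns the test name and its p-value."""
    all_normal = all(stats.shapiro(g).pvalue >= alpha for g in groups)
    if all_normal:
        return "anova", float(stats.f_oneway(*groups).pvalue)
    return "kruskal", float(stats.kruskal(*groups).pvalue)


# Synthetic fitness samples (30 runs per variation), for illustration only.
rng = np.random.default_rng(7)
skewed = np.concatenate([0.99 + rng.normal(0, 1e-4, 27), [0.1, 0.2, 0.3]])
groups = [skewed, rng.normal(0.95, 0.01, 30), rng.normal(0.96, 0.01, 30)]
name, p = choose_group_test(groups)
print(name, p)
```

A sample dominated by near-identical high fitness values with a few poor runs, as in `skewed`, is exactly the kind of distribution that fails the normality check and sends the analysis to Kruskal–Wallis.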

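The Kruskal–Wallis test is a non-parametric, rank-based method for testing whether samples originate from the same distribution [62]. Here, it shows whether the different variations within each strategy changed the outcome of the optimization. The Kruskal–Wallis statistic is given by Equation (18):

$$H = (N - 1)\,\frac{\sum_{i=1}^{g} n_i \left(\bar{r}_{i\cdot} - \bar{r}\right)^2}{\sum_{i=1}^{g} \sum_{j=1}^{n_i} \left(r_{ij} - \bar{r}\right)^2} \qquad (18)$$

where

$n_i$ is the number of observations in group $i$;

$r_{ij}$ is the rank (among all observations) of observation $j$ from group $i$;

$N$ is the total number of observations across all groups, and $g$ is the number of groups;

$\bar{r} = \tfrac{1}{2}(N + 1)$ is the average of all the $r_{ij}$;

and $\bar{r}_{i\cdot} = \tfrac{1}{n_i}\sum_{j=1}^{n_i} r_{ij}$ is the average rank of all observations in group $i$.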
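Equation (18) can be checked numerically: the sketch below computes $H$ directly from the ranks and compares it with `scipy.stats.kruskal`, which uses the equivalent tie-corrected closed form. The p-value follows from the chi-square approximation with $g - 1$ degrees of freedom. The samples are synthetic, for illustration only, and `kruskal_wallis_h` is a hypothetical helper name.

```python
import numpy as np
from scipy import stats


def kruskal_wallis_h(groups) -> float:
    """H statistic computed exactly as in Eq. (18); ties receive mid-ranks."""
    data = np.concatenate(groups)
    ranks = stats.rankdata(data)          # r_ij: ranks among all N observations
    N = len(data)
    r_bar = (N + 1) / 2.0                 # average of all ranks
    between, start = 0.0, 0
    for g in groups:
        r_i = ranks[start:start + len(g)] # ranks of the observations in group i
        between += len(g) * (r_i.mean() - r_bar) ** 2
        start += len(g)
    return (N - 1) * between / np.sum((ranks - r_bar) ** 2)


rng = np.random.default_rng(42)
groups = [rng.normal(0.95, 0.01, 30), rng.normal(0.96, 0.01, 30),
          rng.normal(0.94, 0.02, 30)]
H = kruskal_wallis_h(groups)
p = stats.chi2.sf(H, df=len(groups) - 1)   # chi-square approximation
print(H, p, stats.kruskal(*groups))
```

Because the denominator of Eq. (18) is computed from the actual mid-ranks, the formula handles ties without a separate correction factor.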

The third statistical test is the Dunn–Sidak correction, applied as a post-hoc analysis to compare each pair of fitness result samples. This correction controls the family-wise error rate over multiple comparisons by adjusting the significance level through Equation (19):

$$\alpha_{\mathrm{SID}} = 1 - (1 - \alpha)^{1/k} \qquad (19)$$

where $k$ is the number of different null hypotheses tested and $\alpha$ is the significance level. Each null hypothesis with a p-value under $\alpha_{\mathrm{SID}}$ is rejected.

The null hypothesis of this test is as follows: for each paired comparison, the probability that a randomly selected value from one group is larger than a randomly selected value from the other group equals one-half, which can be understood as a median comparison test. Rejecting the null hypothesis indicates a significant difference between the two results.
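A minimal sketch of the post-hoc step: Dunn’s pairwise z-tests on the Kruskal–Wallis mean ranks (with the usual tie correction), rejecting each null hypothesis whose two-sided p-value is below the Sidak level of Equation (19). The function name `dunn_sidak` and the default $\alpha = 0.05$ are assumptions, the samples are synthetic, and statistical packages may differ in details such as tie handling.

```python
import itertools

import numpy as np
from scipy import stats


def dunn_sidak(groups, alpha=0.05):
    """Dunn's pairwise tests on Kruskal-Wallis mean ranks, each compared with the
    Sidak-adjusted level of Eq. (19). Returns (i, j, p_value, reject) per pair."""
    data = np.concatenate(groups)
    ranks = stats.rankdata(data)
    N = len(data)
    sizes = [len(g) for g in groups]
    edges = np.cumsum([0] + sizes)
    mean_ranks = [ranks[edges[i]:edges[i + 1]].mean() for i in range(len(groups))]
    _, counts = np.unique(data, return_counts=True)
    tie_term = np.sum(counts ** 3 - counts) / (12.0 * (N - 1))  # 0 when no ties
    pairs = list(itertools.combinations(range(len(groups)), 2))
    k = len(pairs)                                # number of null hypotheses
    alpha_sid = 1.0 - (1.0 - alpha) ** (1.0 / k)  # Eq. (19)
    results = []
    for i, j in pairs:
        se = np.sqrt((N * (N + 1) / 12.0 - tie_term) * (1.0 / sizes[i] + 1.0 / sizes[j]))
        z = (mean_ranks[i] - mean_ranks[j]) / se
        p = 2.0 * stats.norm.sf(abs(z))           # two-sided p-value
        results.append((i, j, float(p), bool(p < alpha_sid)))
    return results


rng = np.random.default_rng(1)
groups = [rng.normal(0.90, 0.01, 30), rng.normal(0.90, 0.01, 30),
          rng.normal(0.50, 0.01, 30)]
for i, j, p, reject in dunn_sidak(groups):
    print(f"group {i} vs {j}: p = {p:.3g}, significant difference: {reject}")
```

Comparing each p-value with $\alpha_{\mathrm{SID}}$ is equivalent to comparing the Sidak-adjusted p-value $1 - (1 - p)^k$ with $\alpha$.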

To assess the validity of the results, the Shapiro–Wilk p-values are computed for these cases, considering 30 simulations of each variation.

6. Experiments and Results

This section reports the results from the 30 addressed algorithm variations applied to the GAPID control of a buck converter. The experiments were performed using MATLAB and Simulink.

The Simulink model used in the simulations is depicted in Figure 5, and the structure of the GAPID is presented in Figure 6. The parameters of the Gaussian functions are continuously updated during the optimization process in the block “Gaussian”. The results are captured in the scopes as data vectors, to be processed and to have their IAE value calculated.

Table 2 shows the best fitness values found for each optimization strategy, together with their mean and standard deviation over the 30 executions. The numbers in bold represent the highest fitness, the highest mean fitness, and the lowest standard deviation obtained by each strategy (GA, DE, and PSO).

Based on these results, Figure 7 presents boxplots of the fitness obtained by each algorithm variation over the 30 runs. GA variations are shown in green, PSO variations in blue, and DE variations in red.

During the execution of the algorithms, it was observed that DE tends to need a greater number of iterations to converge, owing to its crossover rate of 0.3; nevertheless, with the same computational effort, DE can run a greater number of iterations than the other strategies.

Table 2 and the boxplots in Figure 7 reveal that some GA variations show a higher dispersion, with some of them being poor optimizers. GA 3 and GA 5, which use survival selection, single-point crossover, and a fixed mutation rate, were the worst candidates. The PSO variations presented a more uniform behavior, especially the first four. In addition, the highest values in the boxplots were achieved by the DE variations, which also presented the highest mean fitness.

At first glance, GA 10, DE 2, and PSO 2 achieved the best performance (highest fitness) of their respective algorithms over the 30 executions, with DE 2 standing out. Regarding average fitness, GA 6, DE 2, and PSO 4 stood out within their respective algorithms.

Note that the GAPID parameters are linked to the PID gains, so both controllers share the same design requirements. Under these conditions, the IAE values shown in Table 3 (the lower, the better) indicate that the GAPID represents a real improvement over the PID.

Figure 8 shows the best, worst, and average fitness for each generation of the evolutionary process in the best run of DE 2. The blue curve shows that the algorithm converges quickly, reaching, in this case, a result close to the maximum achievable fitness.

Because this is a complex multimodal problem, one difficulty in finding the optimum is that different parameter sets are likely to yield similar fitness values. The best parameters found by each strategy are recorded in Table 4, showing how different simulations reached different local optima.

Considering these findings, Figure 9 presents the response to a 30 V step input for the GAPID with the best parameter sets found by GA 10, DE 2, and PSO 2, and for the original PID control. To make the waveforms easier to read, the PWM block was bypassed to hide the switching effects. The figure shows how the adaptive nature of the GAPID leads to faster transient responses without destabilization. Figure 10 and Figure 11 present the outputs of the PID and GAPID with the PWM included, switching at 30 kHz.

From the waveforms, only the GAPID optimized by DE 2 presented overshoot (1.04%), which indicates that DE resulted in slightly higher GAPID gains, with a shorter rise time than PSO and GA. For the Buck converter, whose main objective is to generate a regulated output voltage, this does not pose a problem, but it can be undesirable in other target applications. The responses of the GAPID optimized by PSO and GA are fast and smooth, without overshoot, with a slight advantage over PSO, which can be seen as the best candidate solution for the GAPID if overshoot is not allowed. The GAPIDs optimized by PSO and GA perform quite similarly, whereas GAPID-DE is considerably distinct. This evidences the multimodal character of the GAPID problem, in which all these solutions reach similarly high fitness levels.

6.1. Statistical Tests Application

To compare fairly the results of the different variations of the GA, DE, and PSO strategies, we applied the Shapiro–Wilk, Kruskal–Wallis, and Dunn–Sidak tests, all with a significance level of .

To determine the validity of the results, Table 5 presents the p-values of the Shapiro–Wilk test over the 30 simulations of each variation, in order to evaluate the normality of the outputs (the values in bold with an asterisk are those that rejected the null hypothesis).

Table 5 reveals that, after applying the Shapiro–Wilk test, 10 of the 30 variations produced results that do not follow a normal distribution: GA 2, GA 3, GA 4, GA 6, GA 8, PSO 2, DE 1, DE 2, DE 4, and DE 6. Therefore, non-parametric tests were used for the obtained results.

Then, the Kruskal–Wallis test was applied to the results of each of the three main optimization strategies. The p-values found were for GA, for DE and for PSO. Since all three p-values were below the significance level, at least one variation within each strategy differs significantly from the others; that is, the choice of variation affects the GAPID control performance.

Finally, the Dunn–Sidak correction post-hoc analysis was applied.

Table 6 presents the results comparing the variations that achieved the highest fitness (GA 10, DE 2, and PSO 2) with the other nine variations of the same algorithm on each row. When there is a significant statistical difference between the two variations, the corresponding cell is highlighted with light green for GA, light red for DE, and light blue for PSO.

As observed, applying the test to the best genetic algorithm variation, GA 10 (tournament, SBX crossover, 100% crossover rate, fixed mutation rate), did not reject the null hypothesis for GA 1 (roulette with a 70% crossover rate, single-point crossover, fixed mutation rate), GA 2 (roulette, single-point crossover, fixed mutation rate), GA 4 (tournament, single-point crossover, fixed mutation rate), GA 6 (death tournament, single-point crossover, fixed mutation rate), GA 7 (stochastic sampling, single-point crossover, fixed mutation rate), and GA 9 (tournament, single-point crossover, dynamic mutation rate), meaning that no statistically significant difference from GA 10 was detected for these variations.

For Particle Swarm Optimization, the variations PSO 1 (no initial inertia, global topology), PSO 3 (decreasing inertia, global topology), PSO 4 (no initial inertia with mutation, global topology), PSO 5 (no initial inertia with a random velocity increase, global topology), and PSO 6 (no initial inertia, ring topology) showed no statistically significant difference from the best candidate, PSO 2 (initial inertia, global topology).

For DE, when the variation with the best overall response, DE 2 (Best/1/Bin), was compared with the others, the null hypothesis was not rejected for DE 3 (Rand/2/Bin), DE 5 (Best/2/Bin), and DE 7 (Best/1/Exp).

Beyond the pairwise comparisons presented in Table 6 (each GA variation with GA 10, each DE variation with DE 2, and each PSO variation with PSO 2), we compared DE 2 with the variations of the other strategies. In these comparisons, PSO 1, PSO 2, PSO 3, and PSO 4 showed no statistically significant difference from DE 2, while no GA variation was able to match its fitness.

In summary, the best-performing variations overall were PSO 1, PSO 2, PSO 3, PSO 4, DE 3, DE 4, DE 5, and DE 7, while DE 2 presented the highest fitness. According to the Dunn–Sidak correction, no statistically significant difference was detected between these variations.

6.2. General Analysis: Algorithms Application

Analyzing the boxplots, the Shapiro–Wilk, Kruskal–Wallis, and Dunn–Sidak test results, and the step responses, some general observations can be made:

For GAs:

Roulette-based strategies (GA 1 and 2) have an overall worse performance when compared to the tournament strategy (GA 4);

The stochastic sampling selection (GA 7) had lower fitness values when compared to the roulette (GA 2) and tournament (GA 4) strategies;

The survival selection (GA 3 and 5) was not beneficial for this optimization problem;

The death tournament (GA 6) resulted in a lower standard deviation than the regular tournament (GA 4);

The arithmetic crossover (GA 8) was not able to find high-quality parameters;

The dynamic mutation (GA 9) had an increased spread but with no real performance gain;

The SBX crossover (GA 10) obtained the best results among the tested GA variations. However, according to the Dunn–Sidak results, this strategy shows no statistically significant difference from GA 1, GA 4, GA 6, and GA 7.

For PSO:

Global topology (PSO 1 through 5) performed better than their ring topology counterparts (PSO 6 through 10);

Both decreasing inertia strategies (PSO 3 and PSO 8) offered the second and third lowest PSO errors, respectively;

The mutation variations (PSO 4 and 9) lowered the spread of the results, and their optimizations were, on average, better than those of the other variations;

The random velocity increase (PSO 5 and 10) did not appear to have improved the performance of optimization;

The initial inertia variation with global topology (PSO 2) offered the highest fitness values for PSO strategies, but analyzing the Dunn–Sidak correction, this variation can be considered tied in efficiency to PSO 5 and PSO 7.

For DEs:

In general, Differential Evolution strategies achieved lower error values than both GAs and PSO strategies;

The binomial crossover (DE 1 to 5) generally achieved better agents than the exponential crossover (DE 6 to 10);

Rand/1 type strategies (DE 1 and 6) showed lower performance compared to other DEs;

The Best/1 variations (DE 2 and 7) were the best mutation strategy, yielding the highest-quality DE results;

The Rand/2, Target-to-Best, and Best/2 variations produced, in general, lower-fitness individuals than the best strategies.

In summary, the results showed that some variations of the algorithms can reach similar performance, even though the resulting GAPID responses are not the same (see Figure 9). However, considering the best achievable performance (Table 2), the average performance (Figure 7), and the smaller dispersion (Figure 7), there is a strong indication that DE 2 is the most reasonable choice, even though it is statistically similar to other proposals.

7. Conclusions and Recommendation

In this work, we analyzed several variants of metaheuristic techniques to find the parameters of the GAPID control of a Buck converter. As far as we know, there is no broad comparative study related to metaheuristics applied to optimize the GAPID controller parameters.

We discuss the most relevant works on Buck converters, linear and adaptive control, and metaheuristic optimization, especially GA, DE, and PSO. A GAPID-controlled Buck converter was implemented using the MATLAB/Simulink platform, and experiments were carried out with 10 different variants of each of the GA, DE, and PSO algorithms.

The results obtained from each variant were compared using the Shapiro–Wilk normality test, followed by the Kruskal–Wallis and Dunn–Sidak statistical tests for non-parametric data. We found that some DE and PSO variants achieve better fitness values than the GA variants, especially when PSO uses the global topology and DE uses binomial crossover. However, the highest fitness was achieved by DE 2 (Best/1/Bin).

In future work, further analysis can be done by applying the DE and PSO variants to tune different PID controllers and also studying the behavior of these metaheuristics using an automatic hyperparameter tuning approach on-the-fly. We highlight that the use of multi-objective approaches in such a problem is still an open question.