Development and Validation of a Nuclear Power Plant Fault Diagnosis System Based on Deep Learning

Abstract

1. Introduction

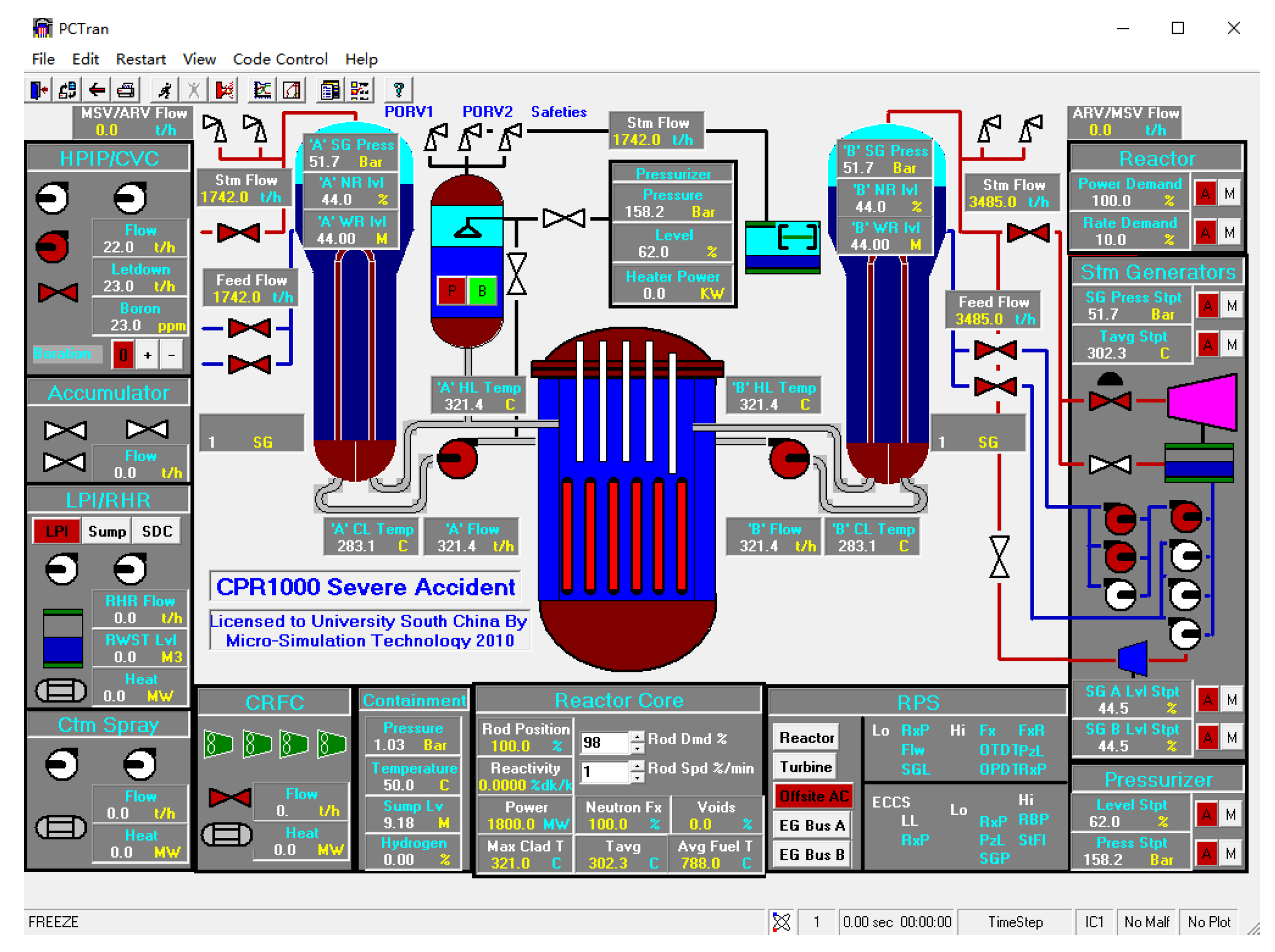

2. Introduction to PCTRAN

3. Deep Learning

3.1. LSTM Neural Network

3.2. Deep Neural Network

4. Results

4.1. Data Access

4.2. Data Pre-Processing

4.3. Model Training

4.4. Analysis of Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yong-Kuo, L.; Min-Jun, P.; Chun-Li, X.; Ya-Xin, D. Research and design of distributed fault diagnosis system in nuclear power plant. Prog. Nucl. Energy 2013, 68, 97–110.

- Lee, S.L.; Seong, P.H. A Dynamic Neural Network Based Accident Diagnosis Advisory System for Nuclear Power. Prog. Nucl. Energy 2005, 46, 268–281.

- Yu, H. Application of Artificial Neural Network for NHR Fault Diagnosis. Nucl. Power Eng. 1999, 20, 434–439.

- Jichong, L.; Zhenping, C.; Jinsen, X.; Yu, T. Validation of Doppler temperature coefficients and assembly power distribution for the lattice code KYLIN V2.0. Front. Energy Res. 2021, 10, 898887.

- Lei, J.; Yang, C.; Ren, C.; Li, W.; Liu, C.; Sun, A.; Li, Y.; Chen, Z.; Yu, T. Development and validation of a deep learning-based model for predicting burnup nuclide density. Int. J. Energy Res. 2022, 1–9.

- Jaime, G.D.G. Integration of computerized operation support system on a nuclear power plant environment. Inst. Eng. Nucl. Prog. Rep. 2018, 3, 94.

- Sheng, Z.; Yin, Q. Equipment Condition Monitoring and Fault Diagnosis Technology and Application; Press of Chemical Industry: Beijing, China, 2003.

- Kim, K.; Aljundi, T.L.; Bartlett, E.B. Nuclear Power Plant Fault-Diagnosis Using Artificial Neural Networks; Dept. of Mechanical Engineering, Iowa State Univ. of Science and Technology: Ames, IA, USA, 1992.

- Wu, J.; Li, Q.; Chen, Q.; Peng, G.; Wang, J.; Fu, Q.; Yang, B. Evaluation, Analysis and Diagnosis for HVDC Transmission System Faults via Knowledge Graph under New Energy Systems Construction: A Critical Review. Energies 2022, 15, 8031.

- Tran, Q.T.; Nguyen, S.D. Bearing Fault Diagnosis Based on Measured Data Online Processing, Domain Fusion, and ANFIS. Computation 2022, 10, 157.

- Esakimuthu Pandarakone, S.; Mizuno, Y.; Nakamura, H. A comparative study between machine learning algorithm and artificial intelligence neural network in detecting minor bearing fault of induction motors. Energies 2019, 12, 2105.

- Nsaif, Y.M.; Hossain Lipu, M.S.; Hussain, A.; Ayob, A.; Yusof, Y.; Zainuri, M.A. A New Voltage Based Fault Detection Technique for Distribution Network Connected to Photovoltaic Sources Using Variational Mode Decomposition Integrated Ensemble Bagged Trees Approach. Energies 2022, 15, 7762.

- Wang, Z.; Zhang, Z.; Zhang, X.; Du, M.; Zhang, H.; Liu, B. Power System Fault Diagnosis Method Based on Deep Reinforcement Learning. Energies 2022, 15, 7639.

- Katreddi, S.; Kasani, S.; Thiruvengadam, A. A Review of Applications of Artificial Intelligence in Heavy Duty Trucks. Energies 2022, 15, 7457.

- Liu, Y.-K.; Xie, F.; Xie, C.-L.; Peng, M.-J.; Wu, G.-H.; Xia, H. Prediction of time series of NPP operating parameters using dynamic model based on BP neural network. Ann. Nucl. Energy 2015, 85, 566–575.

- Saha, A.; Fyza, N.; Hossain, A.; Sarkar, M.R. Simulation of tube rupture in steam generator and transient analysis of VVER-1200 using PCTRAN. Energy Procedia 2019, 160, 162–169.

- Horikoshi, N.; Maeda, M.; Iwasa, H.; Momoi, M.; Oikawa, Y.; Ueda, Y.; Kashiwazaki, Y.; Onji, M.; Harigane, M.; Yabe, H.; et al. The usefulness of brief telephonic intervention after a nuclear crisis: Long-term community-based support for Fukushima evacuees. Disaster Med. Public Health Prep. 2022, 16, 123–131.

- Lei, J.; Ren, C.; Li, W.; Fu, L.; Li, Z.; Ni, Z.; Li, Y.; Liu, C.; Zhang, H.; Chen, Z.; et al. Prediction of crucial nuclear power plant parameters using long short-term memory neural networks. Int. J. Energy Res. 2022.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- Boring, R.L.; Thomas, K.D.; Ulrich, T.A.; Lew, R.T. Computerized operator support systems to aid decision making in nuclear power plants. Procedia Manuf. 2015, 3, 5261–5268.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 802–810.

- Lei, J.; Zhou, J.; Zhao, Y.; Chen, Z.; Zhao, P.; Xie, C.; Ni, Z.; Yu, T.; Xie, J. Prediction of burn-up nucleus density based on machine learning. Int. J. Energy Res. 2021, 45, 14052–14061.

- Lei, J.; Zhou, J.; Zhao, Y.; Chen, Z.; Zhao, P.; Xie, C.; Ni, Z.; Yu, T.; Xie, J. Research on the preliminary prediction of nuclear core design based on machine learning. Nucl. Technol. 2022, 208, 1223–1232.

- Quinlan, J.R. C4.5: Programs for Machine Learning; Elsevier: Amsterdam, The Netherlands, 2014.

- Gal, Y.; Ghahramani, Z. A theoretically grounded application of dropout in recurrent neural networks. Adv. Neural Inf. Process. Syst. 2016, 29, 1019–1027.

| Parameter | Parameter Description | Candidate Values |
|---|---|---|
| time_step | Time step | 1–10 |
| num | Number of hidden layers | 5 |
| num_units | Number of neurons per hidden layer | 32, 32, 16, 8, 4 |
| activation | Activation function | Sigmoid, ReLU, tanh |
| optimizer | Optimizer | Adam, RMSProp, Adagrad, Adadelta |
| epoch | Number of training epochs | 100–500 |
| batch | Batch size | 16, 32, 64, 128 |
| dropout | Dropout rate | 0.1–0.5 |
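
The table above defines the hyperparameter search space. A minimal sketch of enumerating it as a grid in Python follows. The discrete options are copied from the table; the step sizes for the epoch (100–500) and dropout (0.1–0.5) ranges are not given in the text, so the values used for them here are hypothetical, and the source does not say that the authors searched the space exhaustively rather than with another strategy.

```python
from itertools import product

# Discrete options copied from the search-space table.
ACTIVATIONS = ["sigmoid", "relu", "tanh"]
OPTIMIZERS = ["adam", "rmsprop", "adagrad", "adadelta"]
BATCH_SIZES = [16, 32, 64, 128]
TIME_STEPS = list(range(1, 11))  # time_step 1-10, assumed integer-valued

# ASSUMPTION: the table only gives ranges for epochs and dropout;
# these step sizes are hypothetical choices for illustration.
EPOCHS = [100, 200, 300, 400, 500]
DROPOUTS = [0.1, 0.2, 0.3, 0.4, 0.5]

HIDDEN_UNITS = (32, 32, 16, 8, 4)  # fixed: five hidden layers


def search_space():
    """Yield one configuration dict per point of the (hypothetical) grid."""
    for act, opt, batch, ts, ep, dr in product(
        ACTIVATIONS, OPTIMIZERS, BATCH_SIZES, TIME_STEPS, EPOCHS, DROPOUTS
    ):
        yield {
            "time_step": ts,
            "num_units": HIDDEN_UNITS,
            "activation": act,
            "optimizer": opt,
            "epoch": ep,
            "batch": batch,
            "dropout": dr,
        }


configs = list(search_space())
print(len(configs))  # 3 * 4 * 4 * 10 * 5 * 5 = 12000
```

Even with these coarse assumed steps the grid holds 12,000 configurations, each needing a full training run, because the count grows multiplicatively with every option added to the table.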

| Operation Status | Tag |
|---|---|
| Loss of Coolant Accident | 0 |
| Steam Generator Tube Rupture | 1 |
| Steam Line Break Inside Containment | 2 |
| Normal Operation | 3 |
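
These tags turn fault diagnosis into a four-class classification problem. The sketch below shows one common way to encode each tag as a one-hot training target and to map a model's class scores back to an operating status by taking the largest score. Whether the authors used one-hot targets or plain integer labels is not stated here, and the helper names `one_hot` and `decode` are hypothetical.

```python
# Tags copied from the operating-status table.
STATUS_TO_TAG = {
    "Loss of Coolant Accident": 0,
    "Steam Generator Tube Rupture": 1,
    "Steam Line Break Inside Containment": 2,
    "Normal Operation": 3,
}
TAG_TO_STATUS = {tag: status for status, tag in STATUS_TO_TAG.items()}
NUM_CLASSES = len(STATUS_TO_TAG)


def one_hot(tag):
    """Return a one-hot target vector for an integer tag (hypothetical helper)."""
    if not 0 <= tag < NUM_CLASSES:
        raise ValueError(f"unknown tag: {tag}")
    vec = [0.0] * NUM_CLASSES
    vec[tag] = 1.0
    return vec


def decode(scores):
    """Map a vector of class scores back to an operating status via argmax."""
    best = max(range(NUM_CLASSES), key=lambda i: scores[i])
    return TAG_TO_STATUS[best]


print(one_hot(STATUS_TO_TAG["Steam Generator Tube Rupture"]))  # [0.0, 1.0, 0.0, 0.0]
print(decode([0.05, 0.10, 0.80, 0.05]))  # Steam Line Break Inside Containment
```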

| Parameter | Parameter Description | Value |
|---|---|---|
| time_step | Time step | 1–10 |
| num | Number of hidden layers | 5 |
| num_units | Number of neurons per hidden layer | 32, 32, 16, 8, 4 |
| activation | Activation function | ReLU |
| optimizer | Optimizer | Adam |
| epoch | Number of training epochs | 100 |
| batch | Batch size | 16 |
| dropout | Dropout rate | 0.5 |
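
In both tables `time_step` ranges from 1 to 10. For an LSTM this is typically the number of consecutive sampling instants fed to the network as one input sequence. The sketch below shows one way to cut a recorded transient into such windows. It assumes overlapping windows with a stride of 1 and labels each window with the status tag of its last instant; the function name `make_windows` and the toy data are hypothetical, and this is not necessarily the authors' pre-processing.

```python
def make_windows(series, labels, time_step):
    """Split a multivariate time series into overlapping LSTM input windows.

    series: list of feature vectors, one per sampling instant
            (hypothetical plant parameters such as pressure or temperature).
    labels: integer status tag per instant (0-3 in the tag table).
    ASSUMPTION: each window takes the tag of its last instant.
    """
    if len(series) != len(labels):
        raise ValueError("series and labels must have the same length")
    if time_step < 1:
        raise ValueError("time_step must be >= 1")
    X, y = [], []
    for end in range(time_step, len(series) + 1):
        X.append(series[end - time_step:end])  # rows end-time_step .. end-1
        y.append(labels[end - 1])
    return X, y


# Toy transient: 6 instants, 2 features; normal operation (3), then LOCA (0).
series = [[float(t), float(10 * t)] for t in range(6)]
labels = [3, 3, 3, 0, 0, 0]
X, y = make_windows(series, labels, time_step=3)
print(len(X), len(X[0]), len(X[0][0]))  # 4 3 2
print(y)  # [3, 0, 0, 0]
```

The result has the layout (samples, time_step, features) that LSTM layers in common frameworks such as Keras expect; converting the nested lists to an array is omitted to keep the sketch dependency-free.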

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, B.; Lei, J.; Xie, J.; Zhou, J. Development and Validation of a Nuclear Power Plant Fault Diagnosis System Based on Deep Learning. Energies 2022, 15, 8629. https://doi.org/10.3390/en15228629


Chicago/Turabian Style: Liu, Bing, Jichong Lei, Jinsen Xie, and Jianliang Zhou. 2022. "Development and Validation of a Nuclear Power Plant Fault Diagnosis System Based on Deep Learning" Energies 15, no. 22: 8629. https://doi.org/10.3390/en15228629
