Abstract

We review the latest modeling techniques and propose a new hybrid SAELSTM framework based on Deep Learning (DL) to construct prediction intervals for daily Global Solar Radiation (GSR), using Manta Ray Foraging Optimization (MRFO) feature selection to select the model inputs. The selected features are employed as inputs to a Long Short-Term Memory (LSTM) network and a seq2seq SAELSTM autoencoder DL system for the final GSR prediction. Six solar energy farms in Queensland, Australia are considered to evaluate the method, with predictors from Global Climate Models and ground-based observations. Comparisons are carried out with another DL model (a Deep Neural Network) and conventional Machine Learning algorithms (Gradient Boosting Regression, Random Forest Regression, Extremely Randomized Trees, and Adaptive Boosting Regression). The hyperparameters are determined by grid search, and simulations demonstrate that the DL hybrid SAELSTM model is more accurate than the other models as well as the persistence methods. The SAELSTM model produces high-quality solar energy prediction intervals with high coverage probability and low interval errors. The review and new modeling results obtained with an autoencoder deep learning method show that our approach is suitable for predicting solar radiation and is therefore useful in solar energy monitoring systems to capture the stochastic variations in solar power generation due to cloud cover, aerosols, ozone changes, and other atmospheric attenuation factors.

1. Background Review

Demand for cleaner, greener energy has been increasing rapidly in the last few years as a result of the negative impacts of fossil fuel-based energy on the environment and its contribution to climate change. This has produced growing interest in clean energy resources such as solar and wind power [1]. According to a report released by the International Renewable Energy Agency (IRENA), despite the COVID-19 pandemic, more than 260 GW of renewable energy capacity was added in 2020, exceeding the previous record by nearly 50% [2]. Solar energy is currently one of the most promising energy sources [3], particularly photovoltaic (PV) technology, whose worldwide capacity reached about the same level as wind capacity in 2020, mostly because of expansion in Asia (78 GW), with significant capacity increases in China (49 GW) and Vietnam (11 GW). In addition, Japan added more than 5 GW, the Republic of Korea nearly 4 GW, and the United States 15 GW [2]. The power output of PV panels is strongly correlated with Global Solar Radiation (GSR), which is influenced by many factors (e.g., latitude, season, and sky conditions) [4]. GSR is highly intermittent and chaotic, and even slight fluctuations in solar radiation can affect the security of the power supply [5]. Accordingly, the development of accurate GSR prediction models, especially models that can capture the effects of cloud cover on solar energy generation forecasts, is essential for optimal energy dispatch and management. This becomes particularly important as rooftop solar power generation and its penetration into the grid increase.

Four main types of models are usually used for GSR prediction: physical, empirical, statistical, and Machine Learning (ML)-based models. Physical models look for relationships between GSR and other meteorological parameters [3], usually by means of Numerical Weather Prediction (NWP) systems. Despite their strong physical basis, NWP models pose challenges such as sourcing and selecting their inputs [6,7,8], as well as a high computational cost. Empirical models, which are among the most commonly used, develop a linear or nonlinear regression equation [9]. Although empirical models are simple to operate, their accuracy is usually limited. Statistical models, such as the Autoregressive Integrated Moving-Average (ARIMA) model [10] and the Coupled Autoregressive and Dynamical System (CARDS) model [11], rely on statistical correlation [12]. Although these statistical models are usually more precise than empirical models, they fail to capture complex nonlinear relationships between GSR and other parameters accurately. Furthermore, the statistical modeling process takes historical data into account but cannot include other relevant weather conditions that influence GSR [13]. ML-based approaches can overcome this shortcoming by integrating various types of input data into prediction models, and they can extract complex nonlinear features from multiple inputs [14]. 
During the last three decades, a wide range of ML models have been used for GSR prediction, such as Artificial Neural Networks (ANNs) [15,16], Recurrent Neural Networks (RNN) [14], evolutionary neural approaches [17,18], Extreme Learning Machines (ELM) [19,20,21,22], Ensemble Learning (EL) [23], Multivariate Adaptive Regression Spline (MARS) [24], Gaussian Processes [25], and Support Vector Machines (SVMs) [26,27,28], among others. These ML models offer higher accuracy than empirical and statistical models [29], as well as competitive performance at a lower computational burden than NWP models, making them among the most popular approaches for short-term [30], medium-term [31], and long-term [32] GSR prediction.

Despite having gained extensive attention in many prediction applications, ML-based approaches such as neural networks, ELMs, and SVRs also suffer from a few major drawbacks: (a) selecting the correct input features for a model requires considerable expertise, making these models less reliable and less capable of extracting nonlinear features from GSR data [33]; (b) because of their limited generalization capability, they are less effective at learning complex patterns and are prone to over-fitting, vanishing gradients, and excessive network training [34]; and (c) they perform well on relatively small datasets, but on very large datasets they may become unstable and their parameters may converge slowly [35]. Because of the tedious feature selection, the risk of over-fitting, and the relatively high complexity of the datasets, exploring promising approaches that rely on Deep Learning (DL) [36] to predict GSR is becoming the norm.

DL-based models are proving useful in many areas for several reasons, including their ability to extract features quickly, their generalization power, and their capacity to handle big data [37]. The largest difference between conventional ML models and DL models is the number of transformations that the input data undergo before reaching the output. In DL models, input data are transformed many times before the output is produced, whereas conventional ML models transform them only once or twice [38]. Consequently, DL models can learn highly complex patterns from data without manual intervention and work extremely well in applications such as image processing, pattern extraction, classification, and prediction. For instance, Long Short-Term Memory (LSTM) networks are widely used for time-series prediction, and many studies have therefore employed them for GSR prediction [39,40,41,42,43,44]. Srivastava and Lessmann [45] developed an LSTM model using meteorological inputs such as air pressure and cloud cover; the LSTM outperformed Feed Forward Neural Network (FFNN) and Gradient Boosting Regression (GBR) models for daily GSR prediction.

Aslam et al. [46] analyzed various state-of-the-art DL models (LSTM and Gated Recurrent Unit (GRU)) and conventional ML models (RNN, SVR, and FFNN) to predict GSR; their simulation results show that the DL models performed better. Brahma and Wadhvani [47] proposed two LSTM variants, namely Bidirectional LSTM (BiLSTM) and Attention LSTM, and compared them with a GRU for daily GSR prediction; their results suggest that BiLSTM achieved higher accuracy than the other DL models. Furthermore, to improve the accuracy of GSR prediction, multiple ML or DL models have been combined to exploit the advantages of each individual model. An attention-based CNN model was investigated in [48] for anomaly detection in quasi-periodic time series based on automatic data segmentation, while [49] developed a data-driven evolutionary algorithm with a perturbation-based ensemble surrogate method. Bendali et al. [50] proposed an innovative hybrid method that uses a genetic algorithm (GA) to optimize deep neural networks (GRU, LSTM, and RNN) for solar radiation forecasting. Zang et al. [13] and Ghimire et al. [51] proposed deep hybrid models that combine a Convolutional Neural Network (CNN) and LSTM for GSR prediction. Likewise, Husein and Chung [52] proposed a hybrid model, called LSTM-RNN, for daily GSR prediction. In studies in Queensland, Australia, Deo et al. [53] and Ghimire et al. [54] investigated the use of wavelet transform methods to improve solar radiation predictions, showing that input data decomposition improves the performance of wavelet-based models.

Based on this review, the main shortcomings of existing studies can be summarized as follows: (a) many studies used historical records or antecedent values of GSR to predict future values, thereby ignoring meteorological factors as inputs; (b) no feature selection algorithm was used in the modeling process of these hybrid models; (c) the model testing results did not quantify the uncertainty in GSR prediction; and (d) few studies have compared their models against the persistence model, which is difficult to surpass [55], sometimes even by the most advanced models [56].
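As a concrete reference point for shortcoming (d), a day-ahead persistence forecast simply takes the GSR observed on the previous day as the prediction for the next day. The Python sketch below is illustrative only: the function names are hypothetical and the GSR values are made up, not taken from the study's data.

```python
import numpy as np

def persistence_forecast(gsr):
    """Day-ahead persistence: the forecast for day t is the observed GSR on day t-1.

    The returned forecasts are aligned with the targets gsr[1:].
    """
    return np.asarray(gsr, dtype=float)[:-1]

def rmse(obs, pred):
    """Root mean square error between observations and predictions."""
    obs, pred = np.asarray(obs, dtype=float), np.asarray(pred, dtype=float)
    return float(np.sqrt(np.mean((obs - pred) ** 2)))

# Illustrative daily GSR values in MJ m^-2 day^-1 (hypothetical numbers).
gsr = np.array([20.1, 21.4, 18.9, 22.0, 23.5])
pred = persistence_forecast(gsr)
print(rmse(gsr[1:], pred))
```

Any candidate model has to beat this trivially cheap baseline on the same test days to demonstrate real skill.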

Therefore, a key objective of this study is to address the research gaps listed above and develop a new DL hybrid Stacked LSTM Sequence to Sequence Autoencoder method, denoted as the SAELSTM model, adopted for daily GSR prediction at six solar farms in Queensland, Australia. The major contributions in developing the DL hybrid stacked LSTM sequence to sequence autoencoder (i.e., SAELSTM) model can be summarized as follows: (a) predictors from global climate model (GCM) meteorological data and ground-based data from Scientific Information for Landowners (SILO) were used for GSR predictions; (b) a Manta Ray Foraging Optimization (MRFO)-based feature selection process was implemented to select the best set of features for the problem; (c) LSTM-based seq2seq architectures were explored for GSR prediction and compared with Deep Neural Network (DNN), Gradient Boosting Regression (GBM), Random Forest Regression (RFR), Extremely Randomized Trees (ETR), and Adaptive Boosting Regression (ADBR), and (d) a prediction interval (PI) was calculated via quantile regression to quantify the level of uncertainty in the daily GSR prediction.
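The prediction-interval objective in (d) can be illustrated with the quantile (pinball) loss that quantile regression minimizes, together with two common interval-quality measures: the prediction interval coverage probability (PICP) and the mean prediction interval width (MPIW). The NumPy sketch below uses hypothetical helper names (`pinball_loss`, `picp`, `mpiw`) and made-up numbers; it is not the study's exact evaluation code. In practice, the lower and upper bounds would come from models trained at two quantile levels; scikit-learn's `GradientBoostingRegressor(loss="quantile", alpha=...)` is one such option.

```python
import numpy as np

def pinball_loss(y, q_pred, tau):
    """Mean quantile (pinball) loss; it is minimised when q_pred is the tau-quantile of y."""
    diff = np.asarray(y, dtype=float) - np.asarray(q_pred, dtype=float)
    # Under-prediction is weighted by tau, over-prediction by (1 - tau).
    return float(np.mean(np.maximum(tau * diff, (tau - 1.0) * diff)))

def picp(y, lower, upper):
    """Prediction interval coverage probability: share of observations inside [lower, upper]."""
    y = np.asarray(y, dtype=float)
    return float(np.mean((y >= lower) & (y <= upper)))

def mpiw(lower, upper):
    """Mean prediction interval width."""
    return float(np.mean(np.asarray(upper, dtype=float) - np.asarray(lower, dtype=float)))

# Hypothetical daily GSR observations and 5%/95% quantile predictions (MJ m^-2 day^-1).
y = np.array([10.0, 15.0, 20.0, 25.0])
lower = np.array([8.0, 14.0, 21.0, 20.0])
upper = np.array([12.0, 18.0, 24.0, 30.0])

print(picp(y, lower, upper))   # 0.75: the third observation falls below its lower bound
print(mpiw(lower, upper))      # 5.25
print(pinball_loss(y, lower, tau=0.05), pinball_loss(y, upper, tau=0.95))
```

A good interval has a PICP close to the nominal level (here 90%) while keeping the MPIW as small as possible. The two goals pull in opposite directions, which is why both are reported.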

The structure of the paper is as follows: Section 2 summarizes the most important characteristics of the main algorithms used in the proposed hybrid DL approach to GSR prediction, including the MRFO algorithm, the LSTM network, the SAELSTM approach, and the benchmark methods used for comparison. Section 3 describes the study area and the data available for this study. Section 4 describes the development of the proposed predictive model for GSR prediction, and Section 5 discusses the results obtained and compares them with alternative methods. Finally, Section 6 closes the paper with some conclusions and remarks on the research carried out and the results obtained.

2. Review of Theoretical Framework for ML and DL Techniques

2.1. Manta Ray Foraging Optimization (MRFO)

To develop the proposed DL hybrid stacked LSTM sequence-to-sequence autoencoder (i.e., SAELSTM) model, we adopted the Manta Ray Foraging Optimization (MRFO) method for feature selection. MRFO is a novel bio-inspired algorithm that simulates the intelligent foraging behaviors of manta rays [57]. Manta rays use three distinct foraging strategies to search for food, and these strategies form the fundamental search schemes that MRFO uses to optimize the solution of our solar radiation prediction problem (here, the selection of input features).

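- Chain foraging: When 50 or more manta rays begin foraging, they line up one behind another, forming an ordered line. Male manta rays are smaller than females and dive on top of the females' backs in time with the beats of the females' pectoral fins. As a result, plankton (prey or marine drifters) missed by the manta rays ahead are scooped up by those behind them. By working together in this manner, they can get the most plankton into their gills and increase their food rewards. Chain foraging is modeled mathematically as follows [58]:

  $$x_i^d(t+1) = \begin{cases} x_i^d(t) + r\,\big(x_{\text{best}}^d(t) - x_i^d(t)\big) + \alpha\,\big(x_{\text{best}}^d(t) - x_i^d(t)\big), & i = 1 \\ x_i^d(t) + r\,\big(x_{i-1}^d(t) - x_i^d(t)\big) + \alpha\,\big(x_{\text{best}}^d(t) - x_i^d(t)\big), & i = 2, \ldots, N \end{cases} \tag{1}$$

  $$\alpha = 2r\sqrt{\lvert \log r \rvert} \tag{2}$$

  where $x_i^d(t)$ = position of the $i$-th manta ray in dimension $d$ at iteration $t$; $N$ = population size; $r$ = random number uniformly distributed in $[0, 1]$; $x_{\text{best}}^d(t)$ = best position (highest plankton concentration) found so far in the population; and $\alpha$ = weight coefficient, recomputed at each iteration. Equation (1) shows that the position update in chain foraging is determined by the previous manta ray in the chain and the location of the strongest plankton concentration.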

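- Cyclone foraging: When the abundance of plankton is very high, hundreds of manta rays group together in a cyclone foraging strategy. Their tails and heads link up in a spiral, forming a vortex whose eye draws filtered water up to the surface; this attracts plankton toward their open mouths. Mathematically, cyclone foraging is divided into two parts. The first part focuses on enhancing exploration, with each individual updated relative to a randomly generated position [59]:

  $$x_i^d(t+1) = \begin{cases} x_{\text{rand}}^d + r\,\big(x_{\text{rand}}^d - x_i^d(t)\big) + \beta\,\big(x_{\text{rand}}^d - x_i^d(t)\big), & i = 1 \\ x_{\text{rand}}^d + r\,\big(x_{i-1}^d(t) - x_i^d(t)\big) + \beta\,\big(x_{\text{rand}}^d - x_i^d(t)\big), & i = 2, \ldots, N \end{cases} \tag{3}$$

  where $x_{\text{rand}}^d$ is an individual created randomly between the lower and upper bounds $Lb^d$ and $Ub^d$ of the search space:

  $$x_{\text{rand}}^d = Lb^d + r\,\big(Ub^d - Lb^d\big) \tag{4}$$

  The adaptive weight coefficient $\beta$ is varied as:

  $$\beta = 2\,e^{\,r_1 \frac{T - t + 1}{T}} \sin(2\pi r_1) \tag{5}$$

  where $t$ = current iteration, $T$ = maximum number of iterations, and $r_1$ = random number uniformly distributed over $[0, 1]$. The second part concentrates on improving exploitation, so the update is made around the best position:

  $$x_i^d(t+1) = \begin{cases} x_{\text{best}}^d + r\,\big(x_{\text{best}}^d - x_i^d(t)\big) + \beta\,\big(x_{\text{best}}^d - x_i^d(t)\big), & i = 1 \\ x_{\text{best}}^d + r\,\big(x_{i-1}^d(t) - x_i^d(t)\big) + \beta\,\big(x_{\text{best}}^d - x_i^d(t)\big), & i = 2, \ldots, N \end{cases} \tag{6}$$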

- Somersault foraging: In this final strategy, manta rays that have found a food source perform backward somersaults, circling the plankton to draw it toward them. Somersaulting is a spontaneous, periodic, local, and cyclical action that manta rays use to maximize their food intake. In this third strategy, each individual is updated around the best position found so far [60]:

  $$x_i^d(t+1) = x_i^d(t) + S\,\big(r_2\, x_{\text{best}}^d - r_3\, x_i^d(t)\big), \quad i = 1, \ldots, N \tag{7}$$

  In Equation (7), $S$ = somersault coefficient ($S = 2$) that controls the somersault range of the manta rays, and $r_2$ and $r_3$ are random numbers within $[0, 1]$.

Based on a randomly generated number, the MRFO algorithm switches between chain foraging and cyclone foraging [60,61]. Somersault foraging then updates the individuals' current positions using the best solution found so far. These three foraging processes are applied in turn until the predefined termination criterion is satisfied, with the aim of reaching the global optimum of the optimization problem.
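The three foraging strategies can be condensed into a short optimizer. The NumPy sketch below is a minimal continuous MRFO minimizer written for illustration. It assumes the standard formulation of chain, cyclone, and somersault foraging with greedy replacement (a new position is kept only if it improves the fitness). The function name `mrfo` and its arguments are hypothetical, and this is not the implementation used in this study. For feature selection, each continuous position would additionally be mapped to a binary feature mask (for example, by thresholding), which is left to the fitness function.

```python
import numpy as np

def mrfo(fitness, dim, lb, ub, n_pop=20, max_iter=50, S=2.0, seed=0):
    """Minimise `fitness` over the box [lb, ub]^dim with Manta Ray Foraging Optimization."""
    rng = np.random.default_rng(seed)
    lb = np.broadcast_to(np.asarray(lb, dtype=float), (dim,))
    ub = np.broadcast_to(np.asarray(ub, dtype=float), (dim,))
    X = lb + rng.random((n_pop, dim)) * (ub - lb)          # random initial population
    fit = np.array([fitness(x) for x in X])
    best_idx = int(np.argmin(fit))
    best, best_fit = X[best_idx].copy(), fit[best_idx]

    def accept(X_new):
        """Greedy replacement: keep a new position only if it improves the fitness."""
        nonlocal best, best_fit
        fit_new = np.array([fitness(x) for x in X_new])
        better = fit_new < fit
        X[better], fit[better] = X_new[better], fit_new[better]
        i = int(np.argmin(fit))
        if fit[i] < best_fit:
            best, best_fit = X[i].copy(), fit[i]

    for t in range(1, max_iter + 1):
        X_new = np.empty_like(X)
        for i in range(n_pop):
            r = rng.random(dim)
            if rng.random() < 0.5:
                # Cyclone foraging: spiral around a reference point.
                r1 = rng.random(dim)
                beta = 2.0 * np.exp(r1 * (max_iter - t + 1) / max_iter) * np.sin(2.0 * np.pi * r1)
                if t / max_iter < rng.random():
                    ref = lb + rng.random(dim) * (ub - lb)  # exploration: random position
                else:
                    ref = best                              # exploitation: best position
                lead = ref if i == 0 else X[i - 1]
                X_new[i] = ref + r * (lead - X[i]) + beta * (ref - X[i])
            else:
                # Chain foraging: follow the individual in front and the best position.
                alpha = 2.0 * r * np.sqrt(np.abs(np.log(np.maximum(r, 1e-12))))
                lead = best if i == 0 else X[i - 1]
                X_new[i] = X[i] + r * (lead - X[i]) + alpha * (best - X[i])
        accept(np.clip(X_new, lb, ub))

        # Somersault foraging: flip around the best position found so far.
        r2, r3 = rng.random((n_pop, dim)), rng.random((n_pop, dim))
        accept(np.clip(X + S * (r2 * best - r3 * X), lb, ub))
    return best, best_fit

# Usage: the 5-dimensional sphere function has its minimum 0 at the origin.
best, best_fit = mrfo(lambda x: float(np.sum(x ** 2)), dim=5, lb=-10.0, ub=10.0, max_iter=100)
print(best_fit)
```

Greedy replacement makes each individual's fitness non-increasing across iterations. The random switch between cyclone and chain foraging, and the shrinking probability of random-point exploration as `t` approaches `max_iter`, shift the search from exploration toward exploitation.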

2.2. Long Short-Term Memory Network (LSTM)

Recurrent Neural Networks (RNNs) have lately been researched for prediction problems owing to the rapid development of DL, the growth in computing power [45,51,52,62], and the failure of traditional ML methods to effectively reveal the intrinsic associations in time-series data [63]. An RNN has a short-term memory, based on the recurrent processing in its hidden layers, that captures contextual information. However, because of the vanishing and exploding gradient problems, RNNs are unable to provide long-term memory [64]. Hence, the Long Short-Term Memory network (LSTM) was proposed and has been used extensively in time-series prediction. LSTM is a variant of the RNN structure that controls the memory information of time-series data by adding memory cells to the hidden layer. Information is passed between cells in the hidden layer through a series of gates (input, output, and forget gates) [65]. Through its gate mechanism, LSTM can maintain the cell state and thus handle both short-term and long-term dependencies while mitigating the vanishing gradient problem.

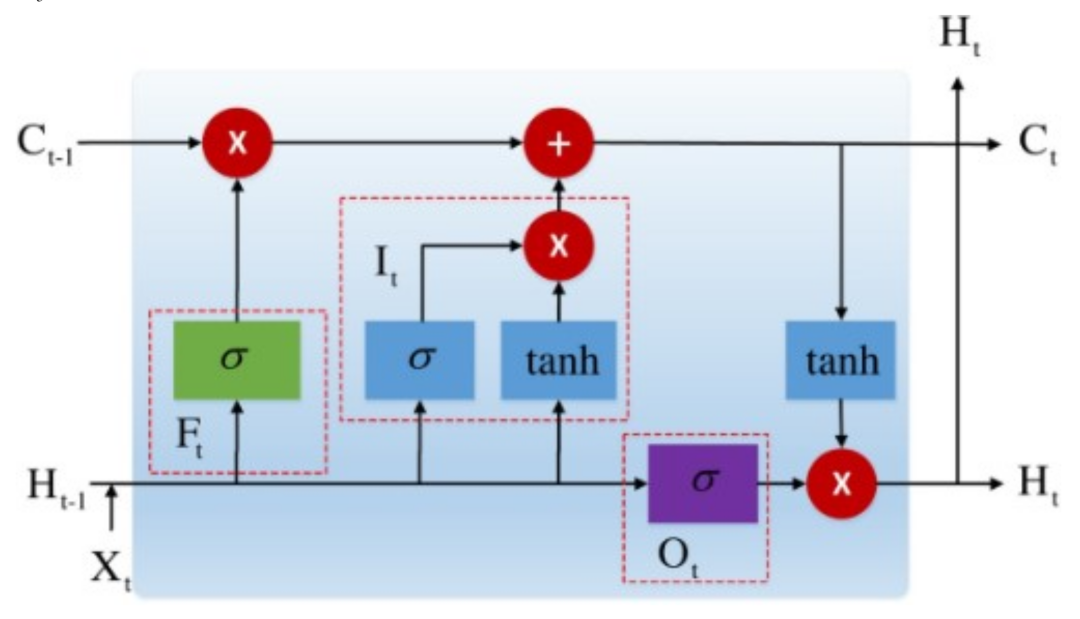

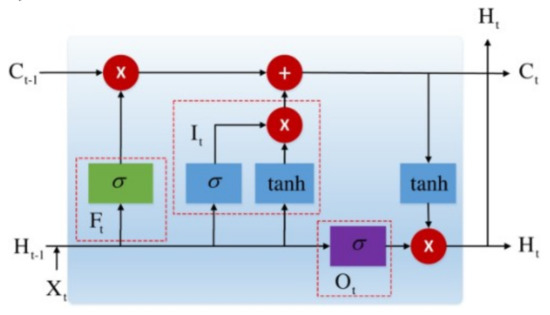

Figure 1 depicts the basic LSTM cell with three gates in a memory cell. The input gate keeps track of the most recent information in the memory cell; the output gate controls the propagation of the most up-to-date information to the rest of the network; and the third gate, the forget gate, determines whether information should be discarded based on the state of the preceding cell. Equations (8)–(15) below explain how the LSTM cell state is updated and how the LSTM outputs are computed.

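$$f_t = \sigma\big(W_f x_t + R_f h_{t-1} + b_f\big) \tag{8}$$

$$i_t = \sigma\big(W_i x_t + R_i h_{t-1} + b_i\big) \tag{9}$$

$$\tilde{c}_t = \tanh\big(W_c x_t + R_c h_{t-1} + b_c\big) \tag{10}$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \tag{11}$$

$$o_t = \sigma\big(W_o x_t + R_o h_{t-1} + b_o\big) \tag{12}$$

$$h_t = o_t \odot \tanh(c_t) \tag{13}$$

$$y_t = W_y h_t + b_y \tag{14}$$

$$\sigma(z) = \frac{1}{1 + e^{-z}} \tag{15}$$

where $x_t$ = input vector; $y_t$ = output vector; $i_t$ = input gate outcome; $f_t$ = forget gate outcome; $o_t$ = output gate outcome; $c_t$ = final state in the memory block; $\tilde{c}_t$ = temporary (candidate) state; $h_t$ = hidden state; $\sigma$ = sigmoid function; $\odot$ = element-wise product; $W_i$, $W_f$, $W_c$, and $W_o$ are input weight matrices; $R_i$, $R_f$, $R_c$, and $R_o$ are recurrent weight matrices; $W_y$ is the output weight matrix; and $b_i$, $b_f$, $b_c$, $b_o$, and $b_y$ are the related bias vectors.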

Figure 1.

Schematic of LSTM with $f_t$ = forget gate, $i_t$ = input gate, $o_t$ = output gate, $c_t$ = cell memory state, and $h_t$ = hidden state vector.
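The LSTM cell update can be written as a single time step in NumPy. This is a minimal sketch for illustration. It assumes the gate formulation without peephole connections and a linear read-out for $y_t$. The function `lstm_step` and the dictionary-based weight layout are hypothetical choices, not the study's implementation, which would normally rely on a DL library's LSTM layer.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, R, b, W_y, b_y):
    """One LSTM time step; W, R and b are dicts keyed by gate name 'f', 'i', 'c', 'o'."""
    f_t = sigmoid(W["f"] @ x_t + R["f"] @ h_prev + b["f"])      # forget gate
    i_t = sigmoid(W["i"] @ x_t + R["i"] @ h_prev + b["i"])      # input gate
    c_tilde = np.tanh(W["c"] @ x_t + R["c"] @ h_prev + b["c"])  # temporary (candidate) state
    c_t = f_t * c_prev + i_t * c_tilde                          # updated cell memory state
    o_t = sigmoid(W["o"] @ x_t + R["o"] @ h_prev + b["o"])      # output gate
    h_t = o_t * np.tanh(c_t)                                    # hidden state
    y_t = W_y @ h_t + b_y                                       # linear read-out (assumption)
    return y_t, h_t, c_t

# Usage with random weights: 3 input features, 4 hidden units, 1 output.
rng = np.random.default_rng(0)
n_in, n_hid = 3, 4
W = {g: rng.normal(scale=0.3, size=(n_hid, n_in)) for g in "fico"}
R = {g: rng.normal(scale=0.3, size=(n_hid, n_hid)) for g in "fico"}
b = {g: np.zeros(n_hid) for g in "fico"}
W_y, b_y = rng.normal(scale=0.3, size=(1, n_hid)), np.zeros(1)

h, c = np.zeros(n_hid), np.zeros(n_hid)
for x_t in rng.random((5, n_in)):          # a 5-step input sequence
    y, h, c = lstm_step(x_t, h, c, W, R, b, W_y, b_y)
print(y.shape, h.shape)   # (1,) (4,)
```

Because the cell state is updated additively ($f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$) rather than through repeated squashing, gradients can flow across many time steps. This is the property that lets LSTMs retain longer-term information than plain RNNs.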

2.3. Stacked LSTM Sequence-to-Sequence Autoencoder (SAELSTM)

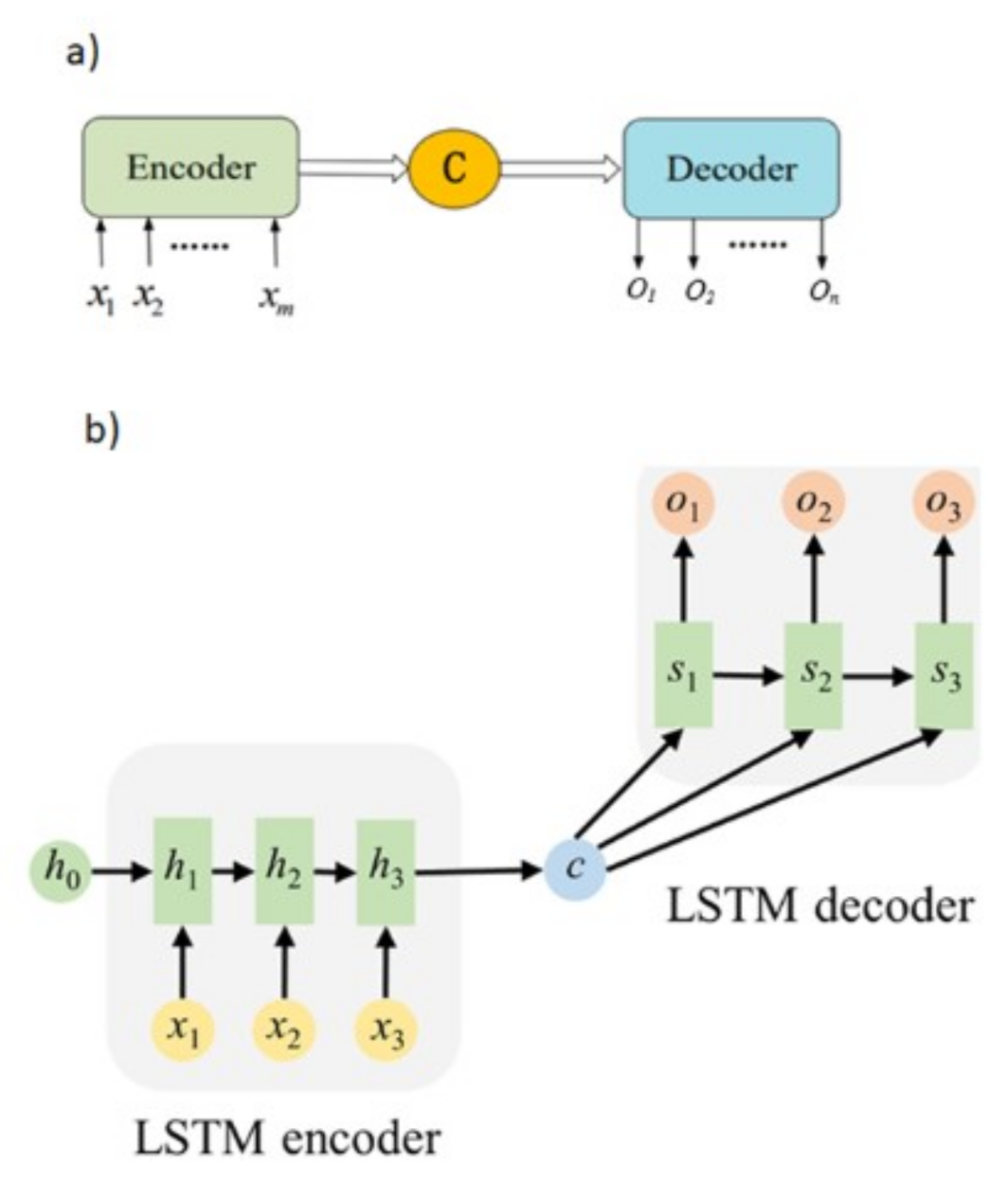

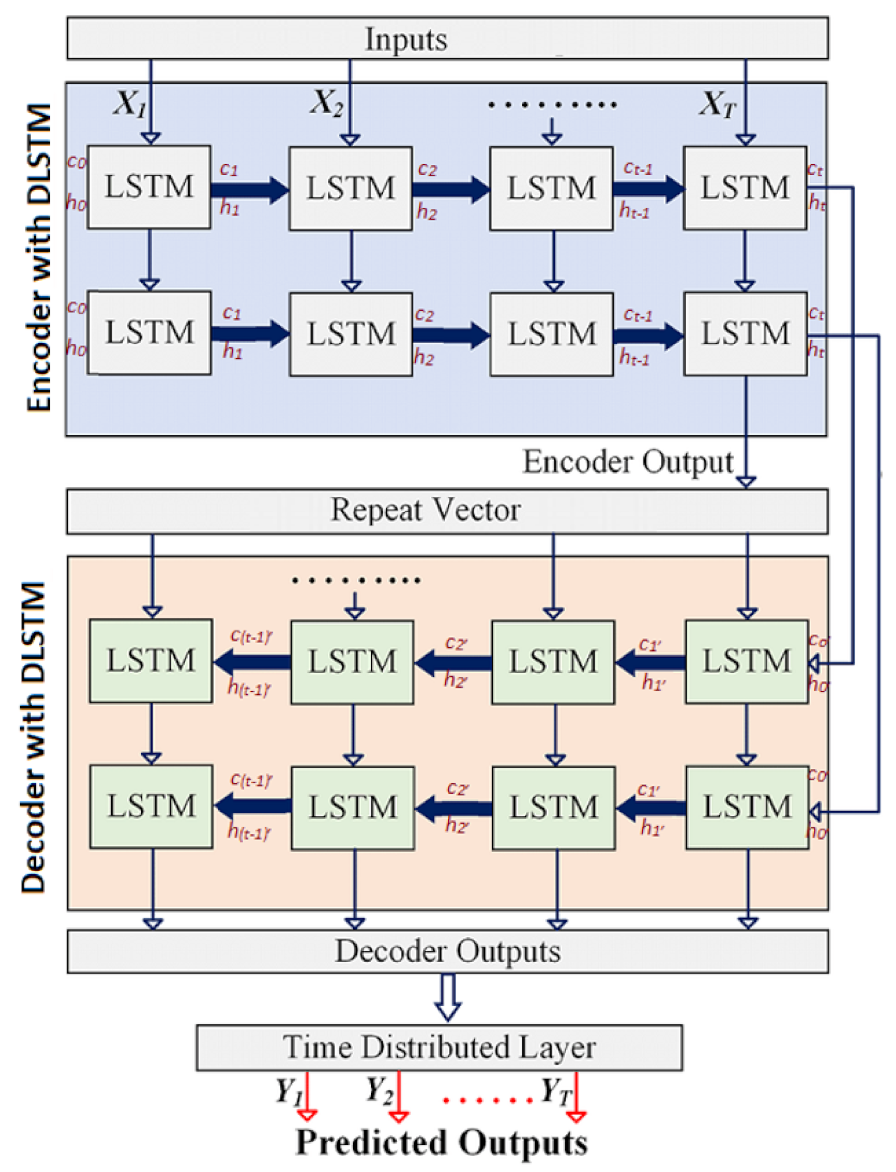

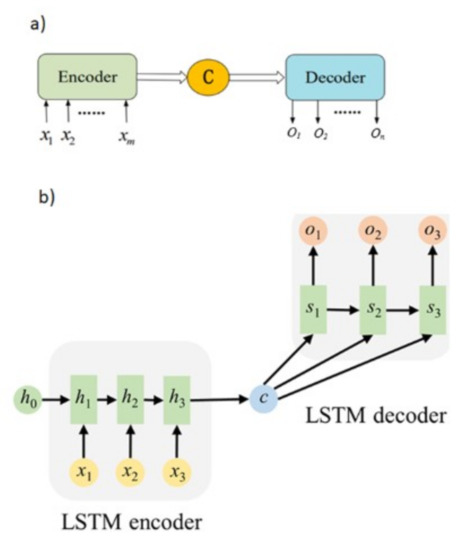

Our proposed DL hybrid stacked LSTM sequence-to-sequence autoencoder (i.e., SAELSTM) model builds on the approach of Cho et al. [66], who introduced an RNN encoder–decoder network that serves as a prototype for the sequence-to-sequence (seq2seq) model. The seq2seq paradigm has recently become popular in machine translation [67,68,69] and consists of two parts, an encoder and a decoder, as illustrated in Figure 2a. The encoder receives the input data and compresses them into a single vector, known as the context vector, which the decoder uses to create the output sequence. The encoder uses an RNN or LSTM to transform the input into a hidden state vector, and its output vector is the hidden state of the last RNN cell. The encoder passes this context vector to the decoder, where it is used as the decoder network's initial hidden state, and the output value of the previous time step is fed into the next LSTM unit as an input for progressive prediction.

Figure 2.

The schematic structure of the proposed sequence-to-sequence model. (a) A basic encoder-decoder system; (b) An extended encoder-decoder system with LSTM structure.

Mathematically, the encoder is formed by the input layer and the hidden layer, and it compresses the input data x from a high-dimensional representation into a low-dimensional representation Z. The decoder is formed by the hidden layer and the output layer, and it reconstructs the input data from the corresponding codes. These transitions in seq2seq learning can be expressed as a standard neural network layer followed by the sigmoid activation function (Equation (15)).

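$$Z = \sigma(W x + b) \tag{16}$$

$$\hat{x} = \sigma(W' Z + b') \tag{17}$$

where $W$ and $W'$ are the encoder and decoder weight matrices, $b$ and $b'$ are the corresponding bias vectors, and $\hat{x}$ is the reconstruction of $x$.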
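The compress-and-reconstruct mapping described above can be made concrete with a single-layer encoder and decoder. In this NumPy sketch the weights are random and untrained; in practice they would be learned by minimizing the reconstruction error. The layer sizes and the names `encode` and `decode` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def encode(x, W, b):
    """Compress x into the low-dimensional code Z = sigmoid(W x + b)."""
    return sigmoid(W @ x + b)

def decode(z, W_dec, b_dec):
    """Reconstruct x_hat = sigmoid(W' Z + b') from the code (W_dec plays the role of W')."""
    return sigmoid(W_dec @ z + b_dec)

rng = np.random.default_rng(42)
n_in, n_code = 8, 3                          # 8 scaled inputs squeezed into a 3-value code
W, b = rng.normal(scale=0.5, size=(n_code, n_in)), np.zeros(n_code)
W_dec, b_dec = rng.normal(scale=0.5, size=(n_in, n_code)), np.zeros(n_in)

x = rng.random(n_in)                         # one input vector scaled to [0, 1]
z = encode(x, W, b)
x_hat = decode(z, W_dec, b_dec)
mse = float(np.mean((x - x_hat) ** 2))       # reconstruction error a training loop would minimise
print(z.shape, x_hat.shape)   # (3,) (8,)
```

The sigmoid output range of (0, 1) matches inputs that have been min-max scaled to [0, 1], which is one reason a sigmoid is a natural choice for the reconstruction layer.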

The encoder and decoder networks of the LSTM seq2seq model used in this study for GSR prediction are shown in Figure 2b. To apply seq2seq learning to GSR prediction, LSTM layers were stacked in both the encoder and the decoder, and the resulting model is called the stacked LSTM sequence-to-sequence autoencoder (SAELSTM). Stacking LSTM layers can improve the model's ability to learn more complex representations of the time-series data in the hidden layers by capturing information at different levels [70]. In Figure 2b, x and o are the input and output data, c is the encoder context vector, and $h_t$ and $s_t$ are the hidden states of the encoder and decoder, respectively, given by:

$$h_t = \mathrm{LSTM}_{\text{enc}}\big(x_t, h_{t-1}\big) \tag{18}$$

$$s_t = \mathrm{LSTM}_{\text{dec}}\big(c, s_{t-1}\big) \tag{19}$$

The encoder LSTM layers compute the context vector c, which is replicated and sent to each decoder unit.

2.4. Benchmark Models

To validate the proposed deep learning hybrid stacked LSTM sequence-to-sequence autoencoder (i.e., SAELSTM) model, we adopted popular Machine Learning models as benchmarks: (i) Deep Neural Networks (DNN), as extensions of artificial neural networks [43,71,72,73,74,75,76,77]; (ii) the Gradient Boosting Regressor (GBM), an ensemble-based Machine Learning model [78,79,80,81]; (iii) Random Forest Regression (RFR), an ensemble-based Machine Learning model that uses an ensemble of Decision Trees to predict outcomes [82,83,84,85,86,87,88,89,90]; (iv) the Extremely Randomized Trees Regression model (ETR), which uses bagging [91]; and (v) Adaptive Boosting Regression (ADBR), which adaptively focuses on hard-to-predict samples to solve complex problems [10,92,93,94,95,96].
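The scikit-learn sketch below shows one way such a benchmark suite could be tuned by grid search. It is illustrative only. The parameter grids are hypothetical and deliberately small. `MLPRegressor` stands in for the DNN, since the study's DNN architecture is not specified here. The time-ordered `TimeSeriesSplit` cross-validation is our assumption, and the data are synthetic.

```python
import numpy as np
from sklearn.ensemble import (AdaBoostRegressor, ExtraTreesRegressor,
                              GradientBoostingRegressor, RandomForestRegressor)
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit
from sklearn.neural_network import MLPRegressor

# Hypothetical, deliberately small grids; not the study's actual search spaces.
BENCHMARKS = {
    "GBM": (GradientBoostingRegressor(random_state=0),
            {"n_estimators": [50, 100], "max_depth": [2, 3]}),
    "RFR": (RandomForestRegressor(random_state=0), {"n_estimators": [50, 100]}),
    "ETR": (ExtraTreesRegressor(random_state=0), {"n_estimators": [50, 100]}),
    "ADBR": (AdaBoostRegressor(random_state=0),
             {"n_estimators": [50, 100], "learning_rate": [0.1, 1.0]}),
    "DNN": (MLPRegressor(max_iter=2000, random_state=0),
            {"hidden_layer_sizes": [(32, 16), (64, 32)]}),
}

def fit_benchmarks(X_train, y_train):
    """Grid-search each benchmark with time-ordered CV and return the refitted best models."""
    fitted = {}
    for name, (model, grid) in BENCHMARKS.items():
        search = GridSearchCV(model, grid, cv=TimeSeriesSplit(n_splits=3),
                              scoring="neg_root_mean_squared_error")
        search.fit(X_train, y_train)
        fitted[name] = search.best_estimator_
    return fitted

# Synthetic data standing in for scaled predictors and a GSR-like target.
rng = np.random.default_rng(0)
X = rng.random((200, 5))
y = 10 + 15 * X[:, 0] + rng.normal(scale=0.5, size=200)
models = fit_benchmarks(X[:150], y[:150])
for name, m in models.items():
    rmse = np.sqrt(np.mean((m.predict(X[150:]) - y[150:]) ** 2))
    print(f"{name}: test RMSE = {rmse:.2f}")
```

`TimeSeriesSplit` keeps every validation fold later in time than its training fold. This mirrors the chronological evaluation used for GSR and avoids leaking future information into hyperparameter selection.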

3. Study Area and Data Available

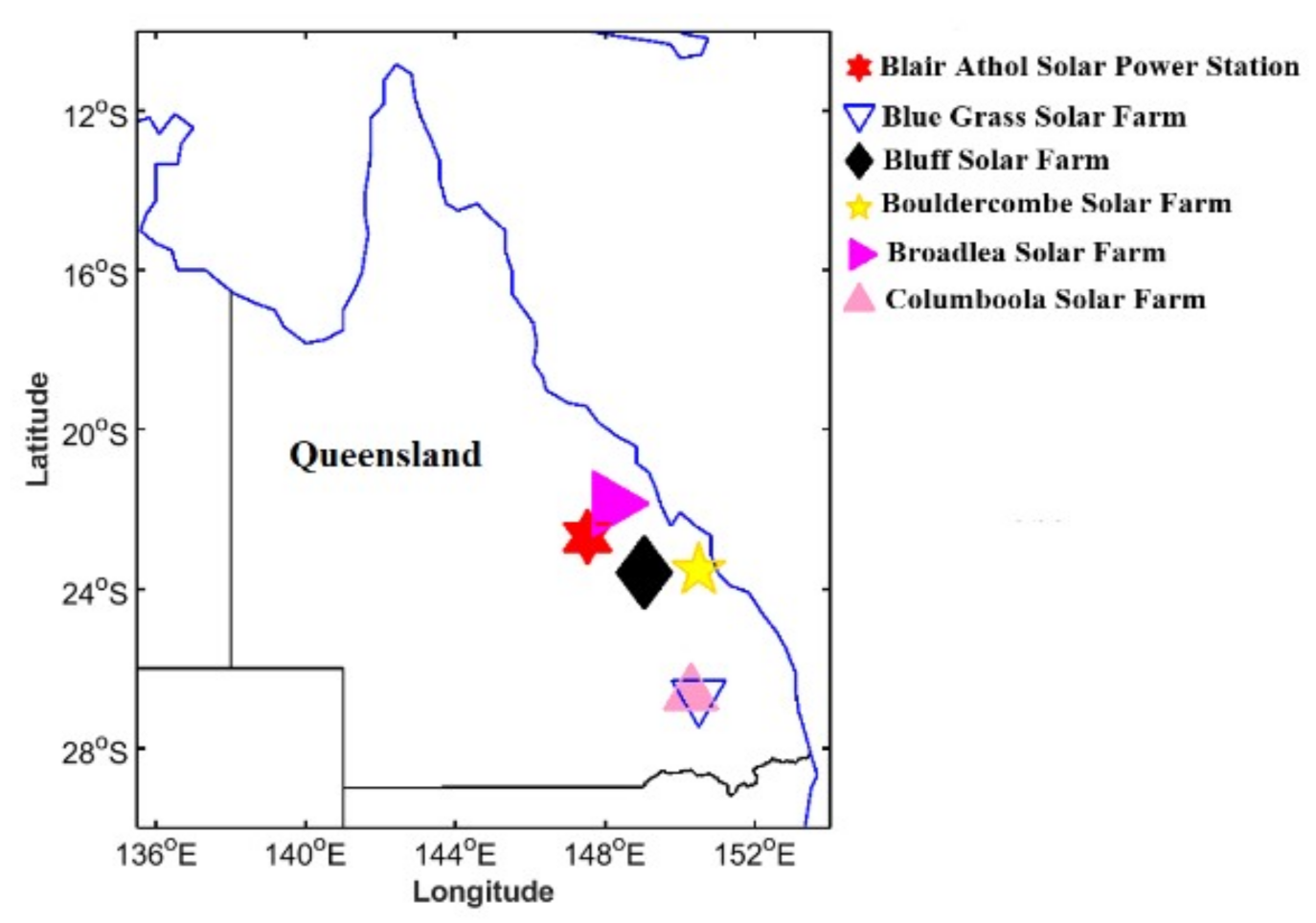

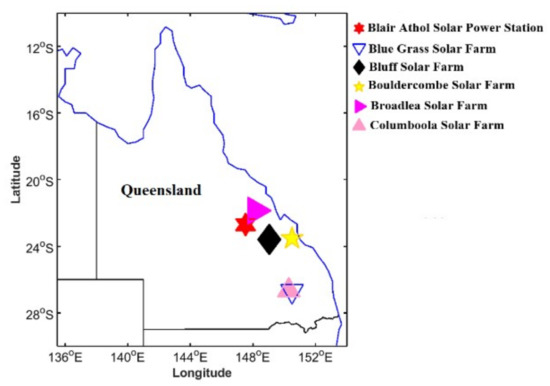

The proposed DL hybrid stacked LSTM sequence-to-sequence autoencoder (i.e., SAELSTM) model was built for Queensland, a region known as Australia's Sunshine State, with 300 days of sunshine per year, a tropical climate, 8 to 9 h of sunshine per day, and average maximum and minimum temperatures of 25.3 and 15.7 °C, respectively [97]. As of March 2021, there were 44 large-scale renewable energy projects in Queensland (operating, under construction, or financially committed). These projects represent roughly $9.9 billion of investment, 7000 construction jobs, 5156 megawatts (MW) of renewable energy capacity, and 12.6 million tons of carbon emissions saved per year. The state now has 6200 MW of renewable energy capacity, including rooftop solar PV, and renewables account for about 20% of total power consumption [98]. Six solar farms in Queensland, ranging in size from 60 to 280 MW, were chosen for this study. The Bouldercombe solar farm (proposed to be developed by Eco Energy World), located 20 km southwest of Rockhampton, Queensland, has a capacity of 280 MW and will use 90,000 PV panels on a single-axis tracking system to capture solar energy. The Bluff solar farm (proposed) involves the construction of a 332 hectare (ha) solar farm with a capacity of 250 MW, which will generate power using PV panels with rotating axes and transfer it to the local electrical grid through transmission lines.

The Blue Grass solar farm project site is 14 km from Chinchilla in Queensland, and the farm is planned to be fully operational by the fourth quarter of 2021. This 200 MW solar farm will provide 420 gigawatt hours (GWh) of green electricity per year, enough to power about 80,000 Queensland households. The Columboola Solar Farm (under construction by Sterling & Wilson), a 162 MW project on 410 ha of grazing land in Queensland's Western Downs, will use 407,171 next-generation bifacial solar panels that produce energy from both sides of the panel. Planned for completion in 2022, the Columboola Solar Farm will generate approximately 440 GWh of renewable energy per year, enough renewable energy to power 75,000 homes for 35 years. The Broadlea and Blair Athol solar farms (both proposed), with capacities of 100 MW and 60 MW, respectively, are located in North Queensland [99]. The study site details (the statistics of GSR) are shown in Table 1, and their locations are shown in Figure 3.

Table 1.

Statistical summary of daily global solar radiation (GSR; MJ m⁻² day⁻¹) at the solar energy farms in Queensland, Australia, where the proposed deep learning hybrid SAELSTM model is implemented.

Figure 3.

The present study area showing six solar energy farms in Queensland Australia where the deep learning hybrid stacked LSTM sequence-to-sequence autoencoder (i.e., SAELSTM) model was developed to predict daily GSR.

In supervised learning, the predictive model is presented with example inputs (predictors) and their desired outputs (predictands), and the goal is to learn a general rule that maps inputs to outputs. Since GSR prediction is a supervised learning problem, both predictors and a predictand are required. This study therefore used Global Climate Model (GCM) meteorological data (cloud parameters, humidity parameters, precipitation, wind speed, etc.) and ground-based observation data (evaporation, vapor pressure, relative humidity at maximum and minimum temperature, rainfall, and maximum and minimum temperature) from Scientific Information for Landowners (SILO) as the predictors. Because GSR measurements (the predictand, or target) at each site's precise latitude and longitude are not publicly accessible, the ground-truth observations were obtained from the SILO database.

The Long Paddock SILO database [100] is managed by the Department of Science, Information Technology, Innovation and Arts (DSITIA) of the Queensland Government. GCM outputs were collected from the online archive of the Centre for Environmental Data Analysis, which hosts the CMIP5 project's GCM output collection [101]. We adopted data from CSIRO-BOM ACCESS1-0 (grid size 1.25° × 1.875°) [102], MOHC Hadley-GEM2-CC (grid size 1.25° × 1.875°) [103], and MRI MRI-CGCM3 (grid size 1.12148° × 1.125°) [104], with historical outputs spanning 1950-01-01T12:00:00 to 2006-01-01T00:00:00, indexed by longitude, latitude, time, and atmospheric pressure (8 levels) or near-surface readings.

Table 2 provides a brief overview of each of the meteorological variables in the dataset. The final dataset contained 20,455 daily records of 75 predictor variables (20,455 × 75).

Table 2.

Predictor variables for daily GSR. (a) Atmospheric variables from global climate models and (b) Ground-based observational climate data from Scientific Information for Landowners (SILO) used to train the proposed Deep Learning hybrid SAELSTM model.

4. Predictive Model Development

Predictive models built on time-series data require data cleaning and filtering. Normalization of input variables, often accomplished by scaling, is crucial in Machine Learning [105]: it prevents variables with large numerical values from dominating variables with small values. Additionally, because kernel computations rely largely on inner products of input vectors, large input values can cause numerical difficulties [106]. Normalizing the input vectors is therefore essential to avoid numerical problems during modeling. In this study, Equation (20) is applied so that each input variable is scaled linearly to the range [0, 1] [107].

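$$x_{\text{norm}} = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{20}$$

where $x$ = input vector; $x_{\min}$ and $x_{\max}$ are the minimum and maximum of the measured data; and $x_{\text{norm}}$ is the scaled version of $x$.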
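Equation (20) amounts to column-wise min-max scaling. In the sketch below, the minima and maxima are computed on the training period only and then reused for later data. This is our assumption (consistent with avoiding look-ahead bias) rather than a documented detail of the study, and the helper names are hypothetical.

```python
import numpy as np

def fit_minmax(X_train):
    """Column-wise minimum and maximum, estimated on training data only."""
    X_train = np.asarray(X_train, dtype=float)
    return X_train.min(axis=0), X_train.max(axis=0)

def minmax_scale(X, x_min, x_max):
    """Scale each column linearly: (x - x_min) / (x_max - x_min)."""
    span = np.where(x_max > x_min, x_max - x_min, 1.0)  # guard against constant columns
    return (np.asarray(X, dtype=float) - x_min) / span

X_train = np.array([[1.0, 10.0], [3.0, 30.0], [5.0, 50.0]])
x_min, x_max = fit_minmax(X_train)
print(minmax_scale(X_train, x_min, x_max))
# Values outside the training range (e.g. a hotter test day) fall outside [0, 1].
print(minmax_scale([[6.0, 20.0]], x_min, x_max))
```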

Feature selection (FS) is one of the fundamental concepts in Machine Learning and data mining, and it can substantially enhance the performance of predictive models [17]. FS removes irrelevant or partially relevant features, which in turn improves model performance [105]. Over time, researchers have applied several meta-heuristic optimization techniques to FS to overcome the limitations of traditional optimization techniques. In this study, therefore, a new FS method based on a meta-heuristic algorithm called Manta Ray Foraging Optimization (MRFO) was used. MRFO mimics the feeding behavior of manta rays, which are among the largest marine animals, as explained in Section 2.1.

A critical aspect of FS is the evaluation of the selected features. As the proposed MRFO is a wrapper-based FS approach, the evaluation process involves a learning algorithm (regressor); for this purpose, we used a well-known regression method, K-Nearest Neighbors (KNN). In general, FS has two objectives, higher accuracy and fewer selected features, and a subset that achieves both is considered better. This study took both characteristics into account when designing the fitness function for the proposed MRFO FS. Because the fitness function is minimized, the root mean square error (RMSE), an error measure complementary to regression accuracy, was used as the accuracy term. After all predictor variables were normalized, the MRFO FS algorithm was run with the following configuration:

- Population size (N) = 20.

- The number of maximum iterations (T) = 50.

- Somersault coefficient (S) = 2.
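The wrapper fitness described above can be sketched as a weighted sum of a KNN validation RMSE and the fraction of selected features. The weighting (0.99 versus 0.01), the 0.5 threshold that turns a continuous MRFO position into a feature mask, the five neighbors, and the chronological 80/20 split are all hypothetical choices for illustration; the exact form of the study's fitness function is not given here. A function of this kind could be passed as the objective to any MRFO implementation that minimizes over positions in [0, 1].

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor

def fs_fitness(position, X, y, weight=0.99, threshold=0.5, n_neighbors=5):
    """Wrapper FS fitness to minimise: weighted KNN RMSE plus selected-feature ratio."""
    mask = np.asarray(position) > threshold          # hypothetical binarisation of a position
    if not mask.any():
        return float("inf")                          # an empty subset is infeasible
    # Chronological split (no shuffling) so validation days follow training days.
    X_tr, X_va, y_tr, y_va = train_test_split(X[:, mask], y, test_size=0.2, shuffle=False)
    knn = KNeighborsRegressor(n_neighbors=n_neighbors).fit(X_tr, y_tr)
    rmse = float(np.sqrt(np.mean((knn.predict(X_va) - y_va) ** 2)))
    return weight * rmse + (1.0 - weight) * mask.sum() / mask.size

# Synthetic check: the target depends only on features 0 and 3 of 10 (not study data).
rng = np.random.default_rng(1)
X = rng.random((300, 10))
y = X[:, 0] + X[:, 3]
informative = np.zeros(10)
informative[[0, 3]] = 1.0
print(fs_fitness(informative, X, y), fs_fitness(np.ones(10), X, y))
```

The small weight on the feature ratio acts as a tie-breaker: between two subsets with similar RMSE, the one with fewer predictors scores better. Irrelevant features also tend to raise the KNN error directly, because they distort the distance metric.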

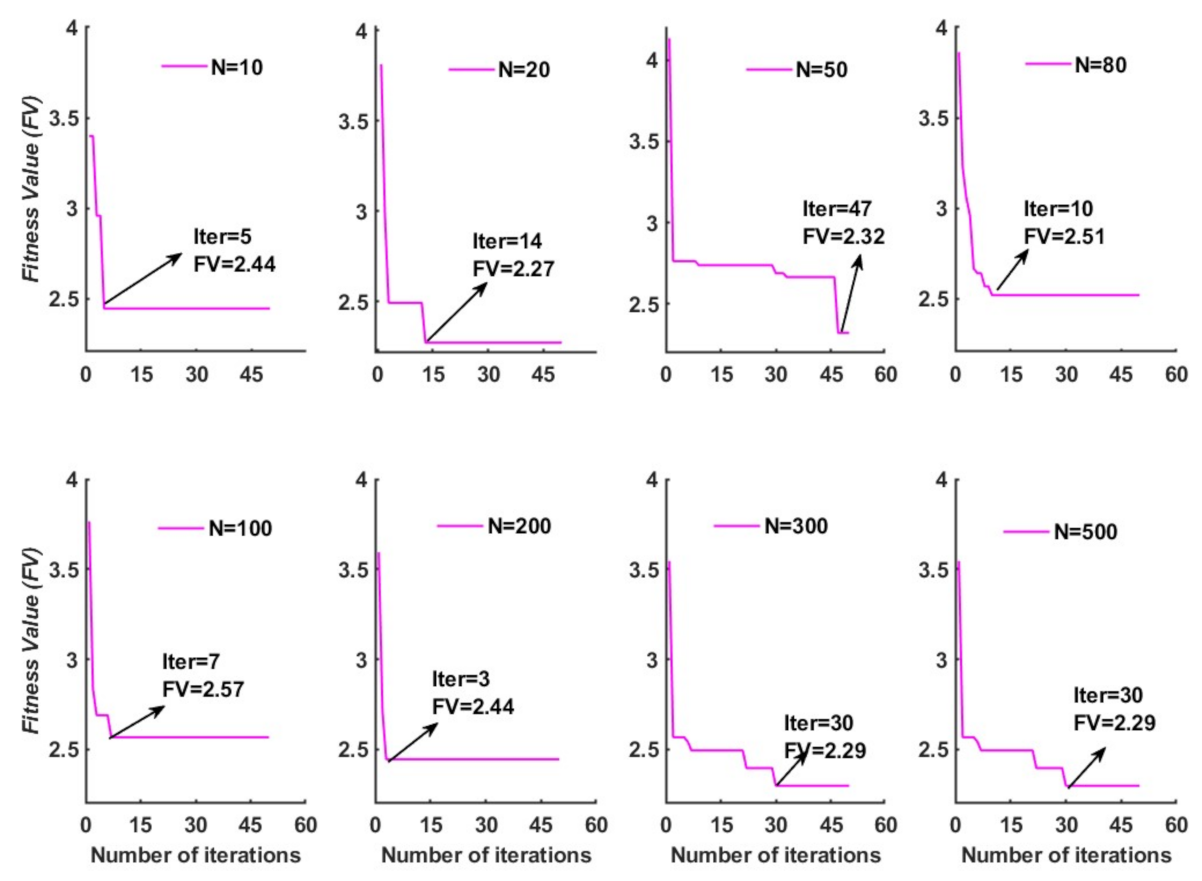

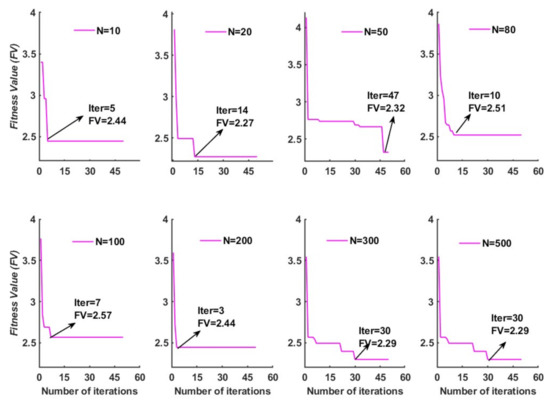

We also examined the effect of population size on MRFO performance in terms of the root mean square error (fitness value, FV), evaluating the proposed approach with population sizes of 10, 20, 50, 80, 100, 200, 300, and 500. The convergence graph (Figure 4) shows the impact of different population sizes on FV for the Broadlea solar farm. It shows that increasing the population size does not always improve FV, while larger populations are also computationally inefficient.

Figure 4.

Convergence curves for MRFO feature selection on the predictors for the case of Broadlea solar energy farm.

Therefore, for the other five solar farms, the population size was set to 20 to balance FV against computation time. With this MRFO FS process, 16 meteorological predictors were selected from the pool of 75 (data: 20,455 × 16) for the Blair Athol solar power station, the Bluff solar farm, and the Bouldercombe solar farm, whereas 17 predictors (data: 20,455 × 17) were selected for the Blue Grass and Columboola solar farms. For the Broadlea solar farm, only 13 predictors (data: 20,455 × 13) were selected. The predictors selected by MRFO for GSR prediction at all six solar farms are shown in Table 3.

Table 3.

The predictor (input) variables selected using Manta Ray Foraging Optimization (MRFO) feature selection for the proposed deep learning hybrid SAELSTM model. For abbreviations, readers should refer to Table 2 (example: relative humidity 1000 hPa pressure height).

Table 3 reveals that the optimal set of meteorological predictors is somewhat site-specific, as MRFO feature selection chooses different predictors for the different study sites. For example, 16 predictors (in a different order) are selected for the Blair Athol solar power station, 17 for the Blue Grass solar farm, and 16 for the Bluff solar farm. Interestingly, the MRFO feature selection process yielded 13 meteorological predictors for the Broadlea solar farm and 17 predictor variables for the Columboola solar farm, again differing in order or predictor type. Although the exact cause of this diversity in the screened predictors is not clear, the strength of the relationship between the features and the measured GSR may differ between study sites.

Lastly, before feeding the data into the ML models, training and testing datasets were created for predicting daily GSR. The training dataset is used to train a model, and the testing dataset is used to evaluate the model's predictive capability. There is no standard way of dividing the data, although previous research commonly used a 70/30 training/testing split. In this study, about 55 years of data (20,089 data points) were used for training, with 20% of the training set (4018 data points) held out for validation, and 1 year of data (365 data points) was used for testing. To prevent look-ahead bias, only the training set was used for optimization, and the testing set was used only to evaluate the model's performance in predicting daily GSR.
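The chronological partition described above can be sketched as follows. Holding out the final 365 days for testing follows the text. Taking the validation set as the last 20% of the training period (as Keras's `validation_split` does) is our assumption, and `chronological_split` is a hypothetical helper.

```python
import numpy as np

def chronological_split(X, y, n_test=365, val_fraction=0.2):
    """Split time-ordered data into fit, validation, and test blocks without shuffling."""
    n_train = len(X) - n_test                     # training period (incl. validation)
    n_val = int(round(val_fraction * n_train))
    n_fit = n_train - n_val
    return ((X[:n_fit], y[:n_fit]),
            (X[n_fit:n_train], y[n_fit:n_train]),
            (X[n_train:], y[n_train:]))

# With 20,089 training days plus 365 test days, the counts match those reported in the text.
n_days = 20_089 + 365
X, y = np.zeros((n_days, 16)), np.zeros(n_days)
(fit_X, _), (val_X, _), (test_X, _) = chronological_split(X, y)
print(len(fit_X), len(val_X), len(test_X))   # 16071 4018 365
```

Keeping the blocks in time order means that neither the validation nor the test days are ever seen, even indirectly, while the model is being fitted.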

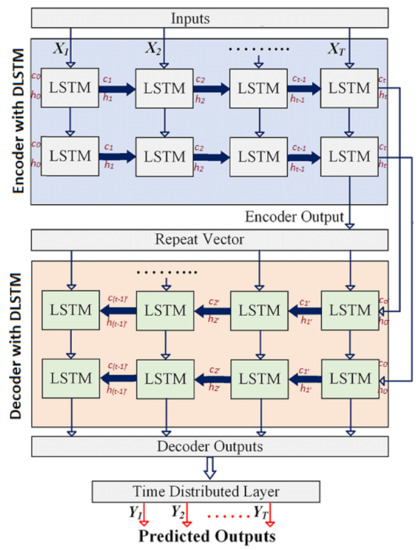

4.1. Stacked LSTM Sequence to Sequence Autoencoder Model Development

As mentioned in Section 2.3, this study uses an LSTM-based seq2seq model to predict GSR at six solar farms in Queensland, Australia. In addition, two layers are added to the SAELSTM model: a repeat vector layer and a time-distributed dense layer. The repeat vector layer repeats the context vector received from the encoder and feeds it to the decoder as input n times, where n is the number of future steps to be predicted [108].

To maintain a one-to-one relationship between input and output time steps, we use a wrapper layer called a time-distributed dense layer. Without this layer, the flattened output of the decoder mixes the time steps of the sequential data; with it, the output for each time step is obtained individually. In particular, the LSTM encoder extracts features from the predictor variables and passes the hidden state of its last time step to the LSTM decoder, and each output time step holds the predicted future value. The LSTM decoder output is then transformed directly by the time-distributed fully connected layer to predict the output at each subsequent step. The step-wise methodology is shown in Figure 5.
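To make the roles of the two added layers concrete, the NumPy sketch below mimics their tensor shapes: a repeat-vector step copies the encoder's context vector n times, and a time-distributed dense step applies one shared weight matrix to every time step independently. The array sizes, random weights, and the `tanh` stand-in for the decoder LSTM are illustrative assumptions only.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, latent, n_steps, n_out = 4, 8, 3, 1

context = rng.normal(size=(batch, latent))  # encoder's last hidden state h_t

# RepeatVector(n_steps): (batch, latent) -> (batch, n_steps, latent)
repeated = np.repeat(context[:, np.newaxis, :], n_steps, axis=1)

# Stand-in for the decoder LSTM output sequence, shape (batch, n_steps, latent)
decoder_out = np.tanh(repeated)

# TimeDistributed(Dense(n_out)): the same W and b are applied at every time step
W = rng.normal(size=(latent, n_out))
b = np.zeros(n_out)
outputs = decoder_out @ W + b  # shape (batch, n_steps, n_out)
```

Because the weights are shared across steps, each output step depends only on the decoder state at that step, which is the one-to-one property described above.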

Figure 5.

The stacked LSTM sequence-to-sequence autoencoder (i.e., SAELSTM) architecture used to predict GSR at six solar energy farms in Queensland, Australia. Note: the notation is described in detail in Section 4.3.

- The encoder layer of the SAELSTM receives as input a sequence X of the predictor variables selected by MRFO FS, represented as a time series X = (x1, x2, …, xt) with one term per time step.

- The encoder processes the input sequence X of length t recursively, updating the cell memory state vector and the hidden state vector at each time step. After the final time step t, the encoder summarizes the input sequence in Ct and ht.

- The encoder output is passed through a repeat vector layer, whose output is fed into the decoder layer.

- Afterwards, the decoder layer of SAELSTM uses the final vectors Ct and ht passed from the encoder as its initial cell memory state vector C0′ and initial hidden state vector h0′ for a decoding sequence of length t′.

- The decoder learns the features contained in the original input to generate outputs N time steps ahead.

- A time-distributed dense layer applies a fully connected layer at each time step to produce the separate outputs (GSR), against which the prediction accuracy of the SAELSTM model is evaluated.
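A minimal tf.keras sketch of the encoder, repeat-vector, decoder, and time-distributed stack described in the steps above follows. The unit counts (20 and 10, which happen to match the Blair Athol entries for the two encoder cells in Table A1), the single decoder layer, the one-step output horizon, the seven-day input window, and the function name `build_saelstm` are assumptions for illustration; the study's tuned values are listed in Table A1. The decoder receives the repeated context vector as input and the encoder's final hidden and cell states as its initial states.

```python
import numpy as np
from tensorflow.keras import Model, layers

def build_saelstm(n_steps_in, n_features, n_steps_out=1, units1=20, units2=10):
    """Hypothetical SAELSTM sketch: stacked LSTM encoder -> RepeatVector ->
    LSTM decoder initialised with the encoder states -> TimeDistributed Dense."""
    inputs = layers.Input(shape=(n_steps_in, n_features))
    # Encoder LSTM cell 1 returns the full sequence for the stacked layer
    x = layers.LSTM(units1, activation="relu", return_sequences=True)(inputs)
    # Encoder LSTM cell 2 returns its final hidden state h_t and cell state C_t
    _, h_t, c_t = layers.LSTM(units2, activation="relu", return_state=True)(x)
    # Repeat the context vector once per future step to be predicted
    repeated = layers.RepeatVector(n_steps_out)(h_t)
    # Decoder starts from the encoder states (h0' = h_t, C0' = C_t)
    decoded = layers.LSTM(units2, activation="relu", return_sequences=True)(
        repeated, initial_state=[h_t, c_t])
    # The same Dense layer is applied separately at each output time step
    outputs = layers.TimeDistributed(layers.Dense(1))(decoded)
    return Model(inputs, outputs, name="saelstm_sketch")

model = build_saelstm(n_steps_in=7, n_features=16)
pred = model.predict(np.zeros((2, 7, 16), dtype="float32"), verbose=0)
```

Passing `initial_state` requires the decoder to have the same number of units as the last encoder layer, which is why both use `units2` here.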

It is vital to select hyperparameters carefully when designing an ML model in order to achieve optimal performance. Hyperparameters govern the model structure, the step size of gradient-based optimization, and the data presentation, all of which have significant effects on the learning process. A grid search based on five-fold cross-validation was used to optimize all the hyperparameters of the SAELSTM model. During SAELSTM model training, the ‘ReLU’ activation function is applied to the LSTM layers to mitigate vanishing gradients, which makes learning faster and more effective [109]. Adam is chosen as the optimization algorithm, with an initial learning rate (lr) of 0.001 together with its decay-rate (β1, β2) and epsilon (ε) parameters. The Adam algorithm is computationally efficient, has a modest memory requirement, is invariant to gradient rescaling, and is well suited to large datasets [110]. Additionally, the regularization method of early stopping (es) [111] is used: training is halted once the validation loss has not improved for a set number of epochs.

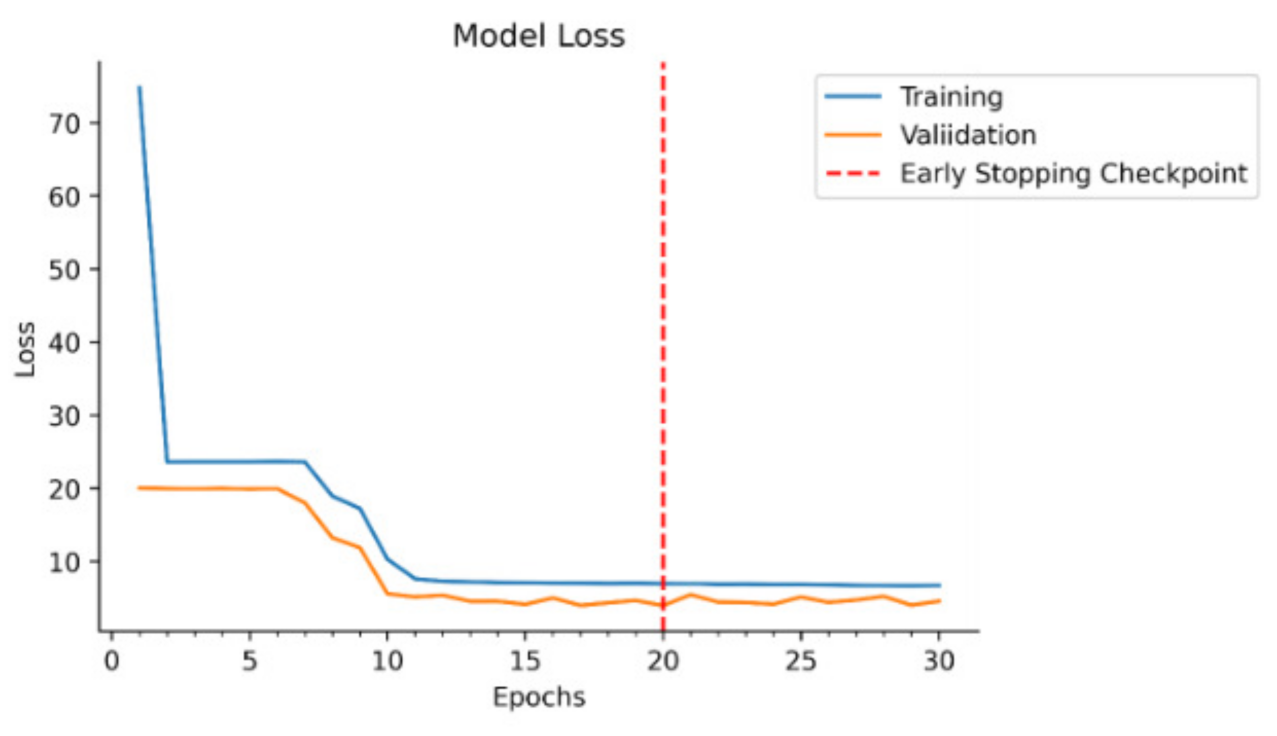

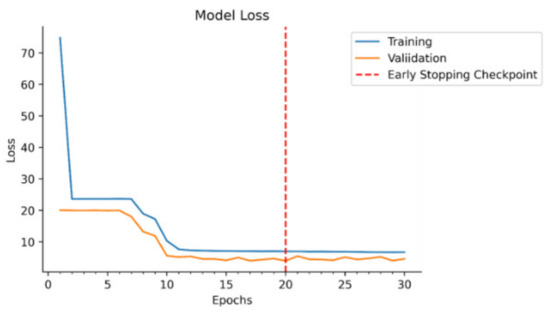

During model development, this study also uses the ‘ReduceLROnPlateau’ scheduler, which reduces the learning rate when the validation loss stops improving. ‘ReduceLROnPlateau’ starts from the optimizer's default learning rate (0.001) and is configured with a patience (the number of epochs with no improvement before the learning rate is reduced) of 8 and a reduction factor of 0.2. Table A1 (in Appendix A) lists the search space and optimized results. Figure 6 shows that the training and validation losses of the SAELSTM model with optimum parameters (Broadlea solar farm) gradually decrease as the number of epochs increases, indicating satisfactory SAELSTM training.
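The training configuration described above (Adam with a learning rate of 0.001, early stopping on the validation loss, and ReduceLROnPlateau with factor 0.2 and patience 8) maps onto standard tf.keras callbacks, as sketched below. The early-stopping patience of 20, `restore_best_weights`, the epoch count, batch size, toy data, and the small stand-in model are our assumptions, not values from the study; Adam's remaining parameters are left at the Keras defaults.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import callbacks, layers, optimizers

# Hypothetical stand-in model; in practice this would be the SAELSTM network
model = tf.keras.Sequential([
    layers.Input(shape=(7, 16)),
    layers.LSTM(10, activation="relu"),
    layers.Dense(1),
])
model.compile(optimizer=optimizers.Adam(learning_rate=0.001), loss="mse")

cbs = [
    # Stop when val_loss has not improved for 20 epochs (assumed patience)
    callbacks.EarlyStopping(monitor="val_loss", patience=20, restore_best_weights=True),
    # Multiply the learning rate by 0.2 after 8 epochs without improvement
    callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.2, patience=8),
]

rng = np.random.default_rng(0)
X = rng.normal(size=(256, 7, 16)).astype("float32")
y = X[:, -1, :1].astype("float32")  # toy target for illustration
history = model.fit(X, y, validation_split=0.2, epochs=5, batch_size=32,
                    callbacks=cbs, verbose=0)
```

Setting the early-stopping patience larger than the scheduler's patience gives the reduced learning rate a chance to help before training is halted.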

Figure 6.

Training and validation loss (mean square error) in the model development phase. Early stopping callbacks halt training if the loss does not improve for a predefined number of epochs.

4.2. Benchmark Model Development

We compared the proposed deep learning hybrid stacked LSTM sequence-to-sequence autoencoder (i.e., SAELSTM) model with five forecast models, namely Deep Neural Network (DNN), Gradient Boosting Regression (GBM), Random Forest Regression (RFR), Extremely Randomized Trees (ETR), and Adaptive Boosting Regression (ADBR), to validate its predictive efficacy. The proposed SAELSTM and all benchmark models were built in Python using Keras 2.2.4 [112,113] on TensorFlow 1.13.1 [114,115]. The hyperparameters of the benchmark models were also derived by grid search (see Table A1 in Appendix A). All models were trained on a system with an Intel® Core™ i7 CPU and 32 GB of RAM.
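A grid search with five-fold cross-validation over one of the benchmark models could look like the scikit-learn sketch below. The use of scikit-learn, the `RandomForestRegressor` grid, the synthetic data, and the RMSE-based scoring are assumptions for illustration; the actual search spaces are listed in Table A1.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, KFold

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 16))  # stand-in for the MRFO-selected predictors
y = 3 * X[:, 0] + X[:, 1] + rng.normal(scale=0.3, size=300)

param_grid = {"n_estimators": [20, 50], "max_depth": [5, 10]}  # hypothetical grid
search = GridSearchCV(
    RandomForestRegressor(random_state=0),
    param_grid,
    cv=KFold(n_splits=5, shuffle=False),  # five contiguous folds, no shuffling
    scoring="neg_root_mean_squared_error",
)
search.fit(X, y)
best_params = search.best_params_
```

Leaving `shuffle=False` keeps each fold as a contiguous block of records, which is closer in spirit to the time-ordered data than randomly shuffled folds.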

4.3. Performance Evaluation Metrics Considered

Several approaches have been used in the past to evaluate model efficiency. However, since each metric has its own strengths and weaknesses, the current study uses a set of common statistical metrics, namely the correlation coefficient (r), root mean square error (RMSE), mean absolute error (MAE), relative root mean square error (RRMSE), relative mean absolute error (RMAE), Willmott's Index (WI), Nash–Sutcliffe Efficiency (NSE), Legates and McCabe's index (LM), and the explained variance score, given in Equations (21)–(31) [51,53,54,105,106,116,117,118,119,120,121].

where $GSR_{obs}$ and $GSR_{pred}$ are the observed and predicted values of GSR and $\overline{GSR}_{obs}$ and $\overline{GSR}_{pred}$ are their observed and predicted means; p stands for the model prediction, x for the observation, and r for the reference prediction, while a separate term denotes the perfect prediction (persistence).

For a better model performance,

- r ranges from −1 to 1, and MAE and RMSE range from 0 (perfect fit) to ∞ (worst fit);

- RRMSE and RMAE range from 0% to 100%; for model evaluation, the precision is classified as excellent, good, fair, or poor according to the thresholds given in [122];

- WI improves on RMSE and MAE by addressing their insensitivity issues with respect to differences between observed and predicted values; it ranges from 0 (worst fit) to 1 (perfect fit) [123];

- NSE compares the variance of the observed and predicted GSR and ranges from −∞ (worst fit) to 1 (perfect fit) [124];

- LM is a more robust metric developed to address the limitations of both WI and NSE [119], and its value ranges between 0 and 1 (ideal value);

- the explained variance score uses the biased variance to explain the fraction of variance and ranges from 0 to 1 (ideal value).
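For reference, the sketch below implements the point-prediction metrics listed above in NumPy using their commonly cited forms: Willmott's index of agreement, LM as Legates and McCabe's E1, and the explained variance score with biased (ddof = 0) variances, as in scikit-learn's `explained_variance_score`. RRMSE and RMAE are taken here as RMSE and MAE divided by the observed mean, which is an assumption; Equations (21)–(31) of the study remain the authoritative definitions, and `point_metrics` is a hypothetical helper.

```python
import numpy as np

def point_metrics(obs, pred):
    obs, pred = np.asarray(obs, float), np.asarray(pred, float)
    err = pred - obs
    o_bar = obs.mean()
    rmse = np.sqrt(np.mean(err ** 2))
    mae = np.mean(np.abs(err))
    return {
        "r": np.corrcoef(obs, pred)[0, 1],
        "RMSE": rmse,
        "MAE": mae,
        "RRMSE": 100 * rmse / o_bar,  # % of observed mean (assumed form)
        "RMAE": 100 * mae / o_bar,    # % of observed mean (assumed form)
        # Willmott's index of agreement
        "WI": 1 - np.sum(err ** 2)
        / np.sum((np.abs(pred - o_bar) + np.abs(obs - o_bar)) ** 2),
        # Nash-Sutcliffe efficiency
        "NSE": 1 - np.sum(err ** 2) / np.sum((obs - o_bar) ** 2),
        # Legates-McCabe index (E1)
        "LM": 1 - np.sum(np.abs(err)) / np.sum(np.abs(obs - o_bar)),
        # Explained variance score with biased variances
        "EVS": 1 - np.var(obs - pred) / np.var(obs),
    }
```

For example, observations (1, 2, 3, 4) against predictions (1, 2, 3, 5) give RMSE = 0.5, NSE = 0.8, and LM = 0.75.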

Furthermore, the overall model performance was ranked using the Global Performance Indicator (GPI) [125]. GPI was calculated using the six metrics.

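$$\mathrm{GPI}_i = \sum_{j=1}^{6} \alpha_j \left( \tilde{y}_j - y_{ij} \right)$$

where $\tilde{y}_j$ is the median of the scaled values of statistical indicator j, $\alpha_j = 1$ for RMSE, MAE, MAPE, RRMSE, and RMAE and $\alpha_j = -1$ for r, and $y_{ij}$ is the scaled value of statistical indicator j for model i; a larger GPI indicates better performance.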
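The GPI ranking can be sketched as follows, assuming min–max scaling of each metric across the models and a weight of −1 for metrics where larger is better (such as r) and +1 for the error metrics. The function name and the example numbers are hypothetical.

```python
import numpy as np

def gpi(metric_table, higher_is_better):
    """metric_table: (n_models, n_metrics) array; higher_is_better: one bool
    per metric. Returns one GPI per model (larger = better)."""
    m = np.asarray(metric_table, float)
    lo, hi = m.min(axis=0), m.max(axis=0)
    scaled = (m - lo) / np.where(hi > lo, hi - lo, 1.0)  # min-max per metric
    median = np.median(scaled, axis=0)
    alpha = np.where(higher_is_better, -1.0, 1.0)
    return (alpha * (median - scaled)).sum(axis=1)

# Three hypothetical models scored on [r, RMSE]
scores = gpi([[0.95, 2.0], [0.90, 2.5], [0.85, 3.0]], [True, False])
```

Here the first model has the highest r and the lowest RMSE, so it receives the largest GPI (1.0), the middle model scores 0, and the last scores −1.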

We evaluated the model performance with Kling–Gupta Efficiency (KGE) [126] and Absolute Percentage Bias (APB; %) [127]. Mathematically, these metrics are stated as follows:

where r is the correlation coefficient and CV is the coefficient of variation.
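A NumPy sketch of KGE and APB follows. It assumes the modified KGE of Kling et al. (2012), which combines r, the bias ratio, and the ratio of coefficients of variation, and it takes APB as the sum of absolute errors relative to the sum of observations. Both forms are assumptions to be checked against the study's own equations.

```python
import numpy as np

def kge(obs, pred):
    """Modified Kling-Gupta efficiency (Kling et al., 2012 form, assumed)."""
    obs, pred = np.asarray(obs, float), np.asarray(pred, float)
    r = np.corrcoef(obs, pred)[0, 1]
    beta = pred.mean() / obs.mean()                                # bias ratio
    gamma = (pred.std() / pred.mean()) / (obs.std() / obs.mean())  # CV ratio
    return 1 - np.sqrt((r - 1) ** 2 + (beta - 1) ** 2 + (gamma - 1) ** 2)

def apb(obs, pred):
    """Absolute percentage bias (%), assumed as sum|obs - pred| / sum(obs)."""
    obs, pred = np.asarray(obs, float), np.asarray(pred, float)
    return 100 * np.sum(np.abs(obs - pred)) / np.sum(obs)
```

A prediction that doubles every observation keeps r = 1 and the CV ratio at 1 but has a bias ratio of 2, so its KGE drops to 0; this illustrates how KGE separates the bias component that NSE lumps together.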

This study also uses the promoting percentages of the absolute percentage bias, mean absolute error, and root mean square error [128] to compare the models used for GSR prediction.

where the APB, MAE, and RMSE of the objective model (i.e., SAELSTM) are compared with the corresponding metrics of each benchmark model.
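The promoting percentages can be computed as the relative reduction of an error metric achieved by the objective model. The sketch below assumes the form P = (M_benchmark − M_objective) / M_benchmark × 100, so that positive values indicate an improvement by SAELSTM; the sign convention and function name are our assumptions.

```python
def promoting_percentage(objective, benchmark):
    """Relative improvement (%) of the objective model over a benchmark for an
    error metric where lower is better (e.g. APB, RMAE, RRMSE)."""
    return 100.0 * (benchmark - objective) / benchmark

# Blue Grass RRMSE reported in Section 5: SAELSTM 11.617% vs DNN 13.126%
p = promoting_percentage(11.617, 13.126)
```

With these two values the improvement is roughly 11.5%.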

Additionally, the ability to predict the direction of movement was measured by the Directional Symmetry (DS) criterion.
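Directional symmetry is commonly defined as the percentage of consecutive time steps in which the predicted and observed series move in the same direction. The NumPy sketch below uses that common definition, counting a step as correct when the product of the observed and predicted day-to-day changes is non-negative; this is an assumption about the study's exact equation.

```python
import numpy as np

def directional_symmetry(obs, pred):
    """Percentage of steps where observed and predicted changes agree in sign."""
    obs, pred = np.asarray(obs, float), np.asarray(pred, float)
    hits = (np.diff(obs) * np.diff(pred)) >= 0  # same direction of change
    return 100.0 * hits.mean()
```

For observations (1, 2, 3, 2) and predictions (1, 3, 2, 1), two of the three changes agree in direction, giving DS ≈ 66.7%.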

The Diebold–Mariano (DM) test and the Harvey, Leybourne, and Newbold (HLN) test were used to assess the statistical significance of the differences among all models under study; these tests further evaluate the prediction performance and directional prediction performance from a statistical standpoint. When two models are compared, the alternative model outperforms the comparative model when the DM and HLN statistics are > 0. The key steps of the DM and HLN tests are described in previous literature [129,130,131].
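For h-step-ahead forecasts with a squared-error loss, the DM and HLN statistics can be sketched as below. The loss function, the default horizon h = 1, and the sign convention (loss differential = reference-model loss minus alternative-model loss, so a positive statistic favours the alternative model, consistent with the interpretation above) are assumptions; the p-value uses the asymptotic normal approximation for DM only.

```python
import math
import numpy as np

def dm_hln(e_ref, e_alt, h=1):
    """DM and HLN statistics for squared-error loss.
    Positive values mean the alternative model has smaller errors."""
    d = np.asarray(e_ref, float) ** 2 - np.asarray(e_alt, float) ** 2
    T = d.size
    d_bar = d.mean()
    dc = d - d_bar
    # Long-run variance from autocovariances up to lag h - 1
    gamma = [np.sum(dc[k:] * dc[:T - k]) / T for k in range(h)]
    lrv = gamma[0] + 2 * sum(gamma[1:])
    dm = d_bar / math.sqrt(lrv / T)
    # HLN small-sample correction of the DM statistic
    hln = dm * math.sqrt((T + 1 - 2 * h + h * (h - 1) / T) / T)
    p_dm = math.erfc(abs(dm) / math.sqrt(2))  # two-sided, normal approximation
    return dm, hln, p_dm
```

For h = 1 the HLN factor reduces to √((T − 1)/T), so with a full year of daily test data the two statistics differ only slightly; the correction matters more for short samples or longer horizons.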

4.4. Prediction Interval

To demonstrate the value of the proposed deep learning hybrid stacked LSTM sequence-to-sequence autoencoder (i.e., SAELSTM) model in solar energy monitoring systems, this study generated prediction intervals (PIs) using quantile regression to quantify the uncertainty associated with the GSR prediction [132]. Quantile regression yields predictions at different quantile levels and therefore gives a fuller picture of the prediction; it not only makes it easy to obtain multiple quantile predictions but also provides the PI [133].

To generate the PI, a quantile loss function was used instead of RMSE when training the proposed SAELSTM model and the benchmark models. Compared with a deterministic prediction, a prediction interval provides more information, and since the uncertainty in the prediction affects the decision-making process, it is necessary to evaluate the PI [134]. PIs give the upper and lower bounds of the quantity being predicted together with the corresponding confidence level [135].
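The quantile (pinball) loss that replaces the squared-error loss can be sketched in NumPy as follows. Training one model at τ = 0.05 and one at τ = 0.95 would give the lower and upper bounds of a 90% PI. The function name and the vectorised form are ours; in a Keras model, the same expression would be written with TensorFlow operations and passed as a custom loss.

```python
import numpy as np

def pinball_loss(y_true, y_pred, tau):
    """Mean quantile loss: under-predictions are weighted by tau and
    over-predictions by (1 - tau)."""
    diff = np.asarray(y_true, float) - np.asarray(y_pred, float)
    return np.mean(np.maximum(tau * diff, (tau - 1) * diff))
```

The asymmetric weighting is what makes the minimiser a quantile. For the values 0, 1, …, 100, the constant prediction with the lowest loss at τ = 0.9 is 90, the empirical 90th percentile.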

In this study, the quality of the prediction intervals was also quantified by (i) the prediction interval coverage probability (PICP) and (ii) the mean prediction interval width (MPIW). PIs with a higher PICP and a lower MPIW are preferred [136]; these indices are defined by Equations (40) and (41) [137,138].

$$\mathrm{PICP} = \frac{1}{T}\sum_{i=1}^{T} c_i \times 100\%, \qquad \mathrm{MPIW} = \frac{1}{T}\sum_{i=1}^{T} \left(U_i - L_i\right)$$

where $c_i$ is a binary indicator equal to 1 if the target value lies within $[L_i, U_i]$ and 0 otherwise, $U_i$ is the upper limit, $L_i$ is the lower limit, and T is the number of testing samples.
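Both interval-quality indices are straightforward to compute from the lower and upper bounds. A NumPy sketch follows, with PICP expressed as a percentage and MPIW in the units of GSR; the function name is hypothetical.

```python
import numpy as np

def picp_mpiw(y, lower, upper):
    y, lower, upper = (np.asarray(a, float) for a in (y, lower, upper))
    # Binary indicator: 1 when the target lies inside its interval
    covered = (y >= lower) & (y <= upper)
    return 100.0 * covered.mean(), np.mean(upper - lower)
```

In use, a model first has to reach the nominal confidence (PICP ≥ 90% for a 90% PI); among models that do, the one with the smaller MPIW gives the sharper interval.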

5. Results and Discussion

The proposed deep hybrid SAELSTM model was evaluated extensively against the DL model (DNN) and the conventional ML models (GBM, RFR, ETR, and ADBR) for GSR prediction at six solar farms in Queensland, Australia. The Manta Ray Foraging Optimization (MRFO) feature selection algorithm was incorporated to select the optimal predictor variables, and a grid search based on five-fold cross-validation was used to find the optimal hyperparameters for the deep hybrid SAELSTM and the comparative models. The models were assessed on the testing dataset using the performance metrics (Sections 4.3 and 4.4) and visual plots. The model with the lowest RMSE, MAE, RRMSE, RMAE, MAPE, and APB values and the highest KGE, NSE, r, LM, and WI was chosen, and finally, the models were ranked on the basis of GPI.

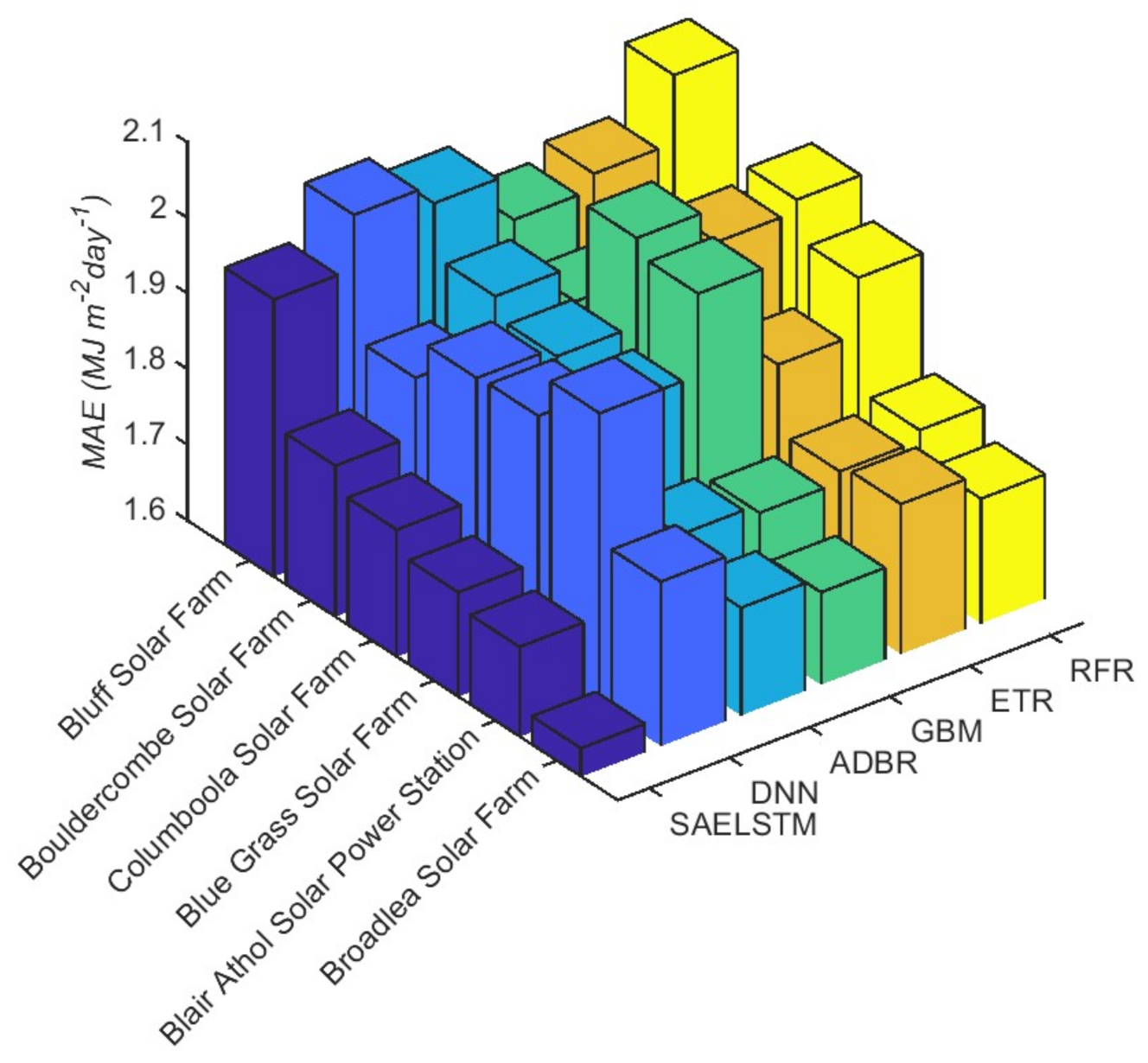

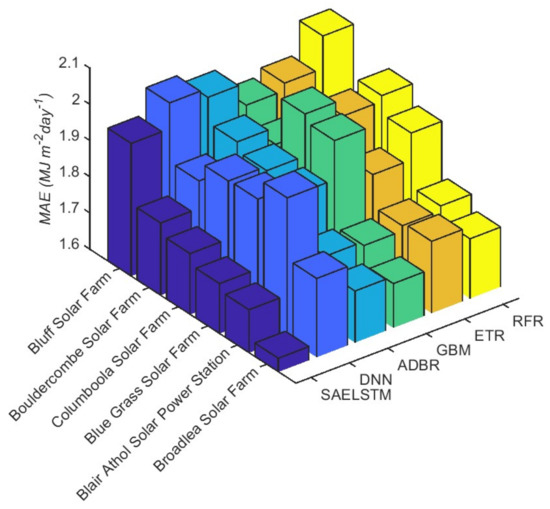

In terms of the statistical metrics r, RMSE, and MAE, Table 4 and Figure 7 compare the robustness of the deep hybrid SAELSTM against the comparison DL model and the traditional ML models. In predicting GSR at all six solar farms, the proposed deep hybrid SAELSTM model outperformed the alternative models used in this study, recording the highest r value and the lowest RMSE and MAE values (in MJ m⁻² day⁻¹) of all the models. These results indicate that the deep hybrid SAELSTM model is superior to the DNN and the other comparison models.

Table 4.

The performance of the proposed deep learning hybrid SAELSTM vs. counterpart comparison models in terms of the correlation coefficient (r) and root mean square error (RMSE, MJ m⁻² day⁻¹) in the model's testing phase.

In Table 5, the WI and NSE criteria are used to compare the deep hybrid SAELSTM model with the DNN, ADBR, GBM, ETR, and RFR models. For the Blue Grass solar farm, the SAELSTM model produced the best values of WI (≈0.930) and NSE (≈0.863), followed by the ETR (WI ≈ 0.908, NSE ≈ 0.833), ADBR (WI ≈ 0.906, NSE ≈ 0.82), DNN (WI ≈ 0.904, NSE ≈ 0.828), GBM (WI ≈ 0.902, NSE ≈ 0.823), and RFR (WI ≈ 0.902, NSE ≈ 0.824) models. Similarly, for the other five farms, the SAELSTM model outperformed the other methods.

Table 5.

As per Table 4 but measured in terms of the Willmott's Index (WI) and Nash–Sutcliffe coefficients (NSE).

The performance of the SAELSTM model was further evaluated using two other metrics, LM and the explained variance score (Table 6). For the Blue Grass solar farm, the SAELSTM model, with a high LM (≈0.665) and explained variance score (≈0.867), outperformed the other DL model and the conventional ML models. Likewise, for the other five solar farms (Blair Athol solar power station, Bluff solar farm, Bouldercombe solar farm, Broadlea solar farm, and Columboola solar farm), the SAELSTM model performed substantially better than the comparison models, indicating its superior accuracy in predicting GSR compared with the other models developed in this work.

Table 6.

As per Table 5 but measured in terms of the Legates and McCabe's index (LM) and explained variance score.

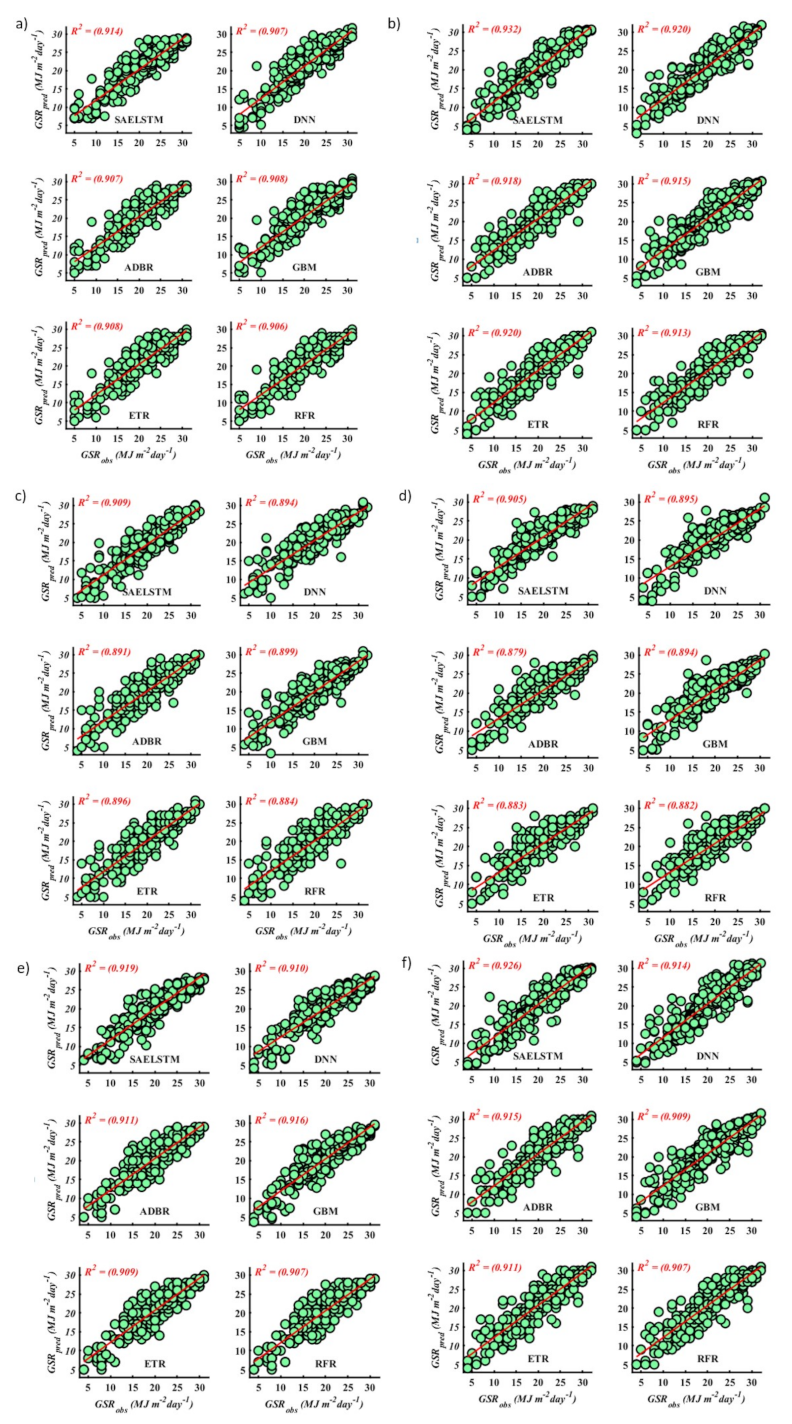

To overcome the limitations of the objective metrics, diagnostic plots were used to show the ability and suitability of the deep hybrid SAELSTM model for GSR prediction. Figure 8 shows scatter plots of the observed and predicted GSR from the deep hybrid SAELSTM model, the DL model, and the conventional ML models during the testing phase at all six solar farms. For better illustration, both the linear fit line and the coefficient of determination (R²), which measures the adequacy of the model [139], are included. As the scatter plots show, the SAELSTM model performs best, since its points lie close to the line, whereas those of the other models are scattered farther from it. The scatter plots agree with the r, RMSE, MAE, LM, NSE, WI, and explained variance score results.

Figure 8.

Scatter plots of the observed and predicted daily GSR for solar farms in Queensland. (Note: the red line is the least-squares fit line (y = mx + c) to the respective scatter plot, where y is the predicted GSR and x is the observed GSR. Names for each model are provided in Table 3 and in Table A1 of Appendix A.) (a) Blair Athol Solar Power Station, (b) Blue Grass Solar Farm, (c) Bluff Solar Farm, (d) Bouldercombe Solar Farm, (e) Broadlea Solar Farm, (f) Columboola Solar Farm.

To compare model performance in predicting GSR at sites that differ geographically, physically, and climatically, the relative metrics RRMSE and RMAE were used. Table 7 shows that the deep hybrid SAELSTM model had lower RRMSE and RMAE than the DNN, ADBR, GBM, ETR, and RFR approaches at all six solar farms. For example, for the Blue Grass solar farm, the proposed model yielded RRMSE ≈ 11.617%, compared with 13.126% for DNN, 12.910% for ADBR, 13.259% for GBM, 12.868% for ETR, and 13.208% for RFR. At all six sites, the deep hybrid SAELSTM model produced the lowest RRMSE and RMAE values, indicating that SAELSTM is the best option among the models tested.

Table 7.

As per Table 5 but measured in terms of the relative root mean square error (RRMSE, %) and relative mean absolute error (RMAE, %) in the testing phase.

The predictive ability of the deep hybrid SAELSTM model is further evaluated through promoting percentages, which express the incremental performance of the objective model over the competing approaches; for example, the promoting percentage of RMAE for SAELSTM against DNN measures the difference in relative RMAE between the SAELSTM and DNN models. Table 8 lists these values for the testing phase, giving a clear comparison. It is evident that the deep hybrid SAELSTM performs better than the DL model (DNN) and the conventional ML models (ADBR, GBM, ETR, and RFR).

Table 8.

The promoting percentages of the objective (i.e., deep learning hybrid SAELSTM) model against the benchmark models, with percentage values indicating the improvement of the objective model over each benchmark. Note: promoting percentages are given for the relative mean absolute error, the relative root mean square error, and the absolute percentage bias.

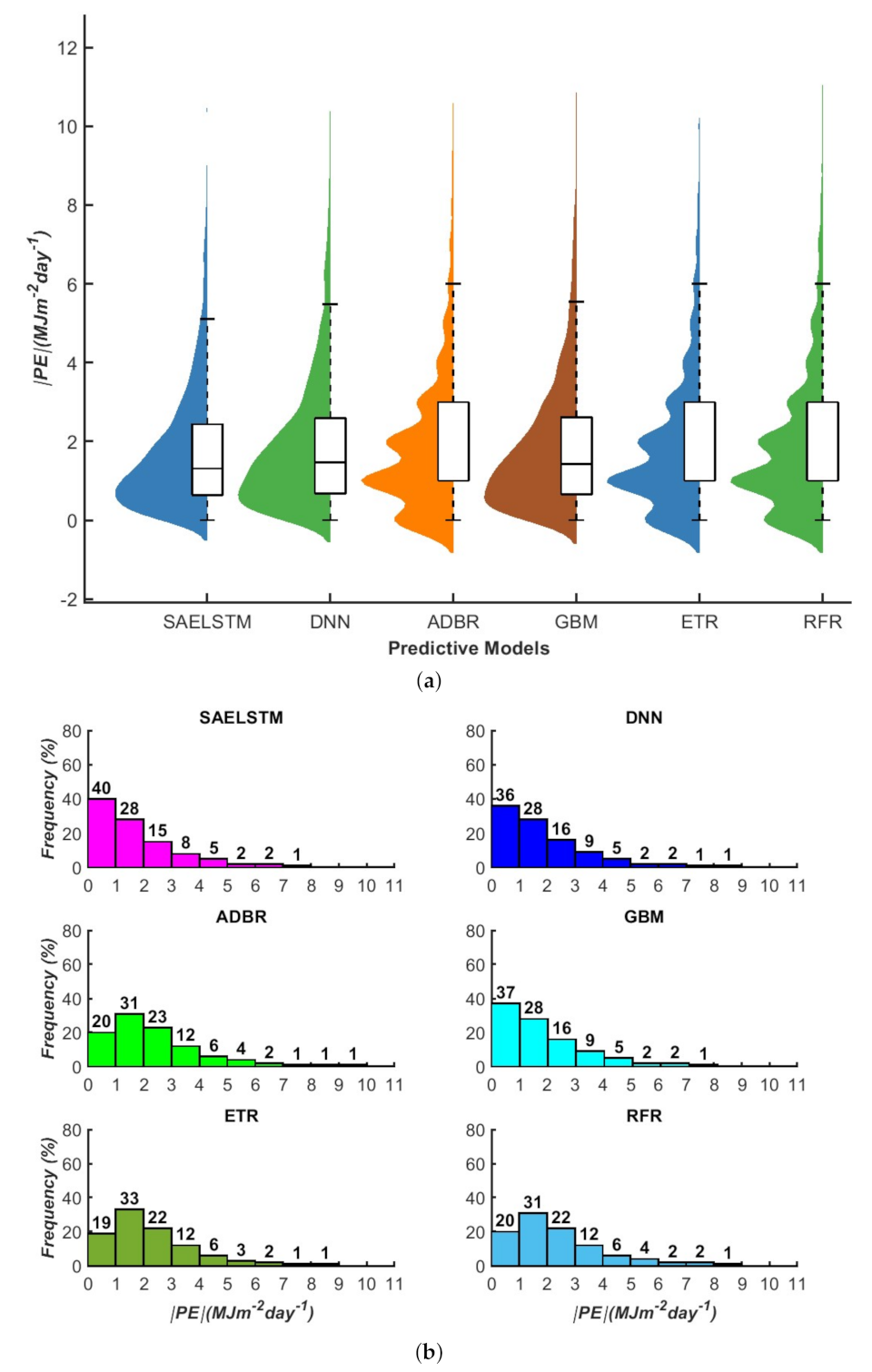

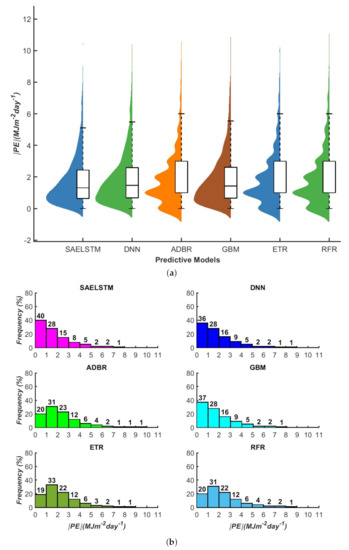

Graphical analysis of model performance is as important as numerical evaluation. To support the earlier results, Figure 9a shows violin plots of the absolute prediction error (|PE|) on the testing data for the deep hybrid SAELSTM model and the other models developed in this pilot study. In the figure, the distribution above the upper adjacent values represents the outliers of the extreme |PE|, shown together with the upper quartile, median, and lower quartile. At all sites, the |PE| distribution of the deep hybrid SAELSTM model has a much smaller interquartile range than those of the DNN, GBM, ADBR, ETR, and RFR models. Additionally, to better understand the model's precision for real-world renewable energy applications, the frequency of |PE| is shown in different error brackets (in MJ m⁻² day⁻¹) in Figure 9b. Consistent with our earlier results, the proposed deep hybrid SAELSTM model makes the most accurate predictions of daily GSR: about 40% of its errors fall within the smallest error bracket, compared with ≈36%, 37%, 20%, 19%, and 20% for the DNN, GBM, ADBR, ETR, and RFR models, respectively. Accordingly, the errors of the DNN, GBM, ADBR, ETR, and RFR models are distributed into larger brackets.

Figure 9.

(a) Violin plots of the prediction error (PE) generated in the testing phase for daily GSR prediction. (b) The cumulative frequency of the daily forecast error (|PE|, MJ m⁻² day⁻¹) for all tested sites. (For model names, see Table 3 and Table A1 in Appendix A.)

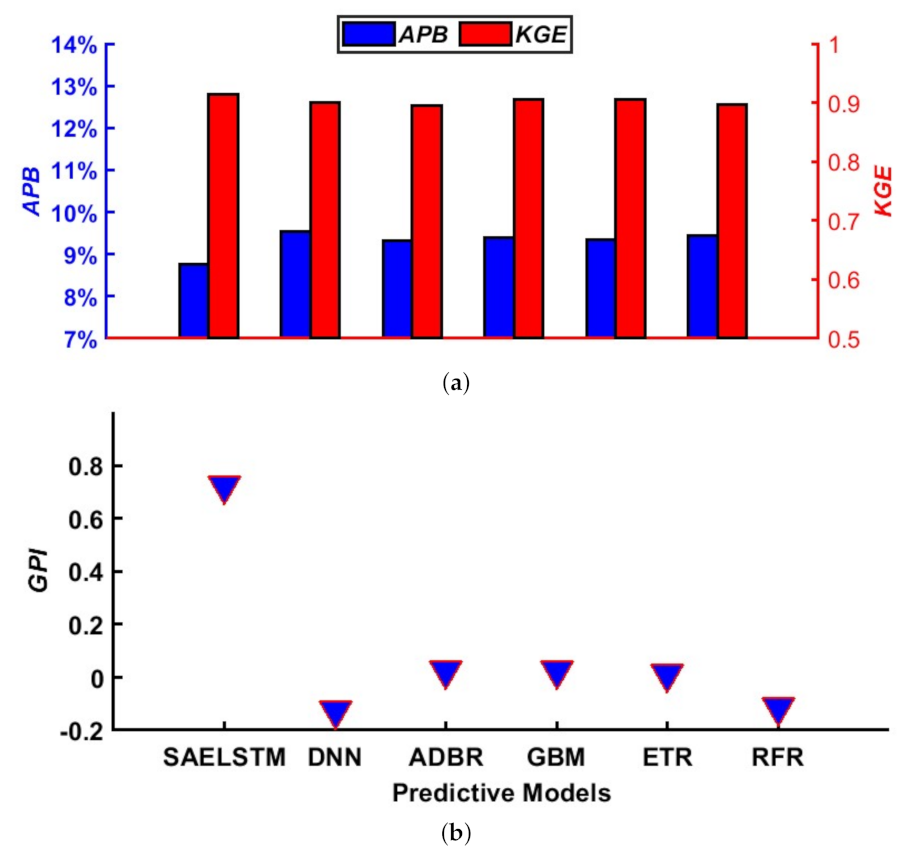

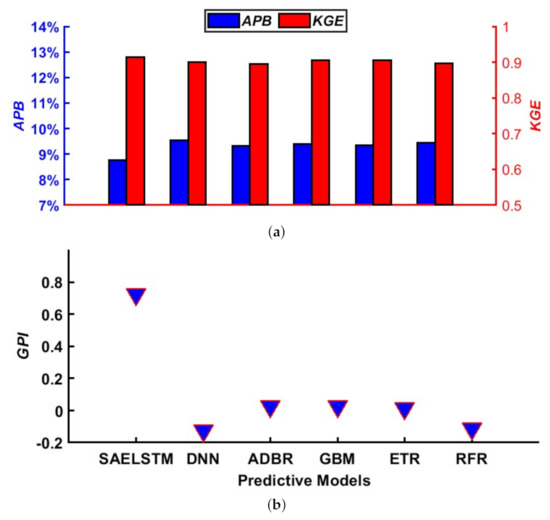

While this study adopted the Nash–Sutcliffe coefficient to evaluate the proposed deep hybrid SAELSTM model, the three components of the NSE of model errors (i.e., correlation, bias, and ratio of variances or coefficients of variation) were also examined through the Kling–Gupta efficiency (KGE) in Figure 10 to check performance in a more balanced way. The efficacy of the SAELSTM model was thus further verified using KGE and the absolute percentage bias (APB). With a relatively high KGE (≈0.914) and a comparatively low APB (≈8.763%), the deep hybrid SAELSTM predictive model clearly outperformed the counterpart models, as illustrated in Figure 10a. Furthermore, the models are ranked by prediction efficiency using the GPI. The GPI ranges from −0.114 to 0.726 (Figure 10b), and the highest value (≈0.726) is obtained by the deep hybrid SAELSTM predictive model, which further supports the capability of the proposed model to forecast daily GSR data.

Figure 10.

(a, top) Bar plots comparing the proposed SAELSTM model with the other models in terms of APB (%) and KGE in the testing phase. (b, bottom) Global performance indicator (GPI) of the SAELSTM model compared with the other counterpart models. (Note: Names for each model are provided in Table 3 and Table A1 in Appendix A.)

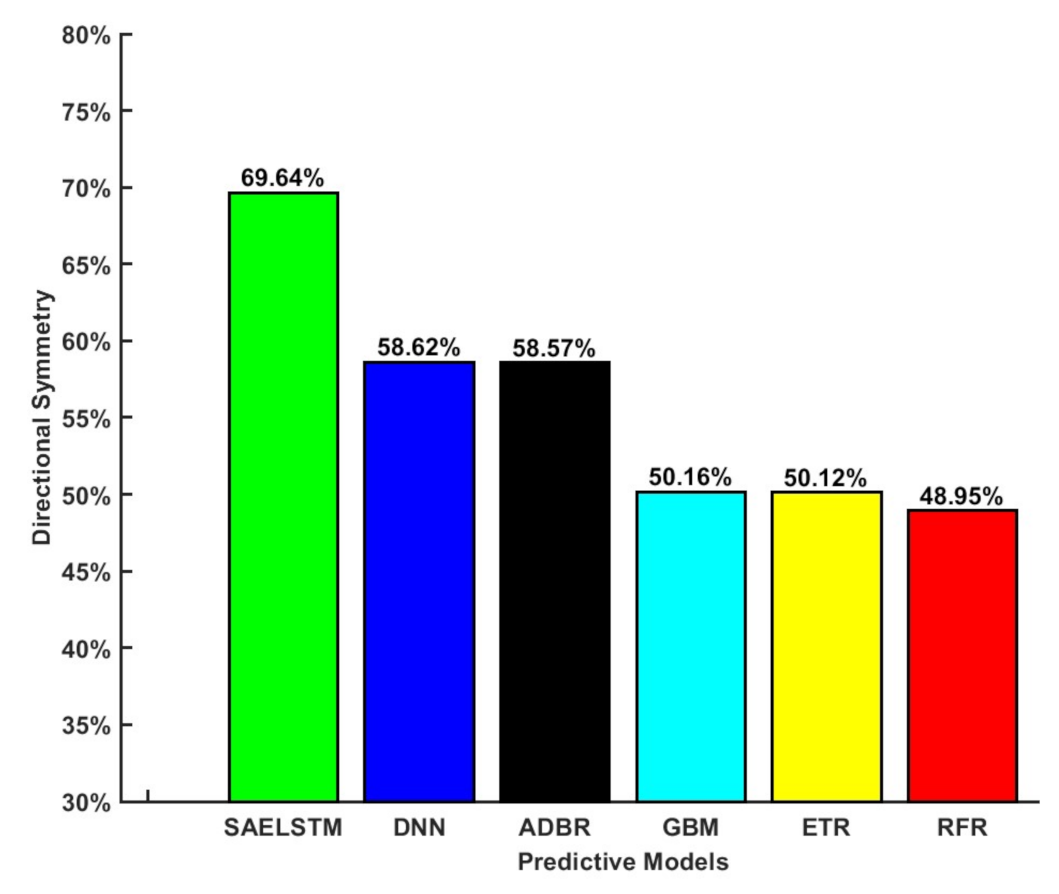

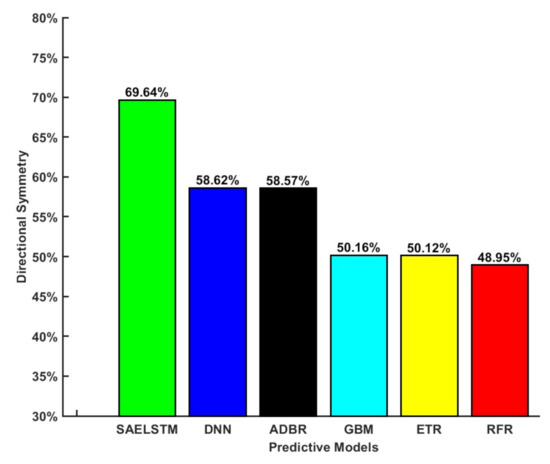

To reaffirm the superior performance of the deep hybrid SAELSTM predictive model, the Diebold–Mariano (DM) and the Harvey, Leybourne, and Newbold (HLN) tests were also used to examine the statistical significance of the differences among all the predictive models in this study. The purpose of these tests is to determine whether the deep hybrid SAELSTM predictive model is significantly more accurate than the other comparative models (Table 9a,b). In these tables, the models in the columns are compared with the models in the rows: a positive result indicates that the model in the column most likely outperforms the model in the row, whereas a negative result indicates that the model in the row is superior. Consistent with this result, Figure 11 shows that the DS (i.e., directional prediction accuracy) of the deep hybrid SAELSTM predictive model is greater than that of the other five models (an average of 69.64%, compared with 58.62%, 58.57%, 50.16%, 51.12%, and 48.95% for the DNN, ADBR, GBM, ETR, and RFR models, respectively). Taking together the results of the DM, HLN, and DS tests, we argue that the deep hybrid SAELSTM model predicts daily GSR more accurately than the other models. Additionally, the RMSE values of the deep hybrid SAELSTM model and the comparative models are compared with the RMSE of a clear-sky index persistence model [140], and this comparison is expressed as the skill score (SS) and the RMSE ratio (RMSEr) [141]. Notably, all the comparative models have markedly lower SS and RMSEr values than the deep hybrid SAELSTM predictive model, as shown in Table 9c,d.
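A clear-sky index persistence reference and the corresponding skill score can be sketched as follows. The sketch assumes that a daily clear-sky GSR series is available and that SS = 1 − RMSE_model / RMSE_persistence, a common form; the helper names are hypothetical, and the RMSE ratio is omitted because its orientation is not stated here.

```python
import numpy as np

def clear_sky_persistence(gsr, gsr_clear):
    """Predict day t+1 by carrying forward the clear-sky index
    k_t = GSR_t / GSR_clear,t; the result is aligned with gsr[1:]."""
    gsr, gsr_clear = np.asarray(gsr, float), np.asarray(gsr_clear, float)
    k = gsr[:-1] / gsr_clear[:-1]
    return k * gsr_clear[1:]

def skill_score(obs, pred, ref):
    """SS = 1 - RMSE(model) / RMSE(reference); 1 is perfect, 0 equals persistence."""
    rmse = lambda a, b: np.sqrt(np.mean((np.asarray(a) - np.asarray(b)) ** 2))
    return 1.0 - rmse(obs, pred) / rmse(obs, ref)
```

Normalising by the clear-sky value removes most of the seasonal and diurnal geometry, so the persistence reference only carries forward the day's cloudiness rather than its raw radiation total.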

Table 9.

(a) Diebold–Mariano (DM) test statistics. To interpret this, we compare the column of the table with rows. For a positive result, the model in the column would be superior and with a negative result, the model in the row is superior. (b) Harvey, Leybourne, and Newbold statistics. (c) The Skill Score Metric (SS) for the proposed Deep Learning hybrid SAELSTM, as well as other Deep Learning and comparative models. (d) The performance of the proposed Deep Learning hybrid SAELSTM model with comparative benchmark models measured by the ratio of the root mean square error (RMSE).

Figure 11.

The performance of the proposed SAELSTM model compared to other counterpart models under study in terms of directional symmetry (DS) criteria. (Note: Names for each model are provided in Table 3 and Table A1 in the Appendix A.)

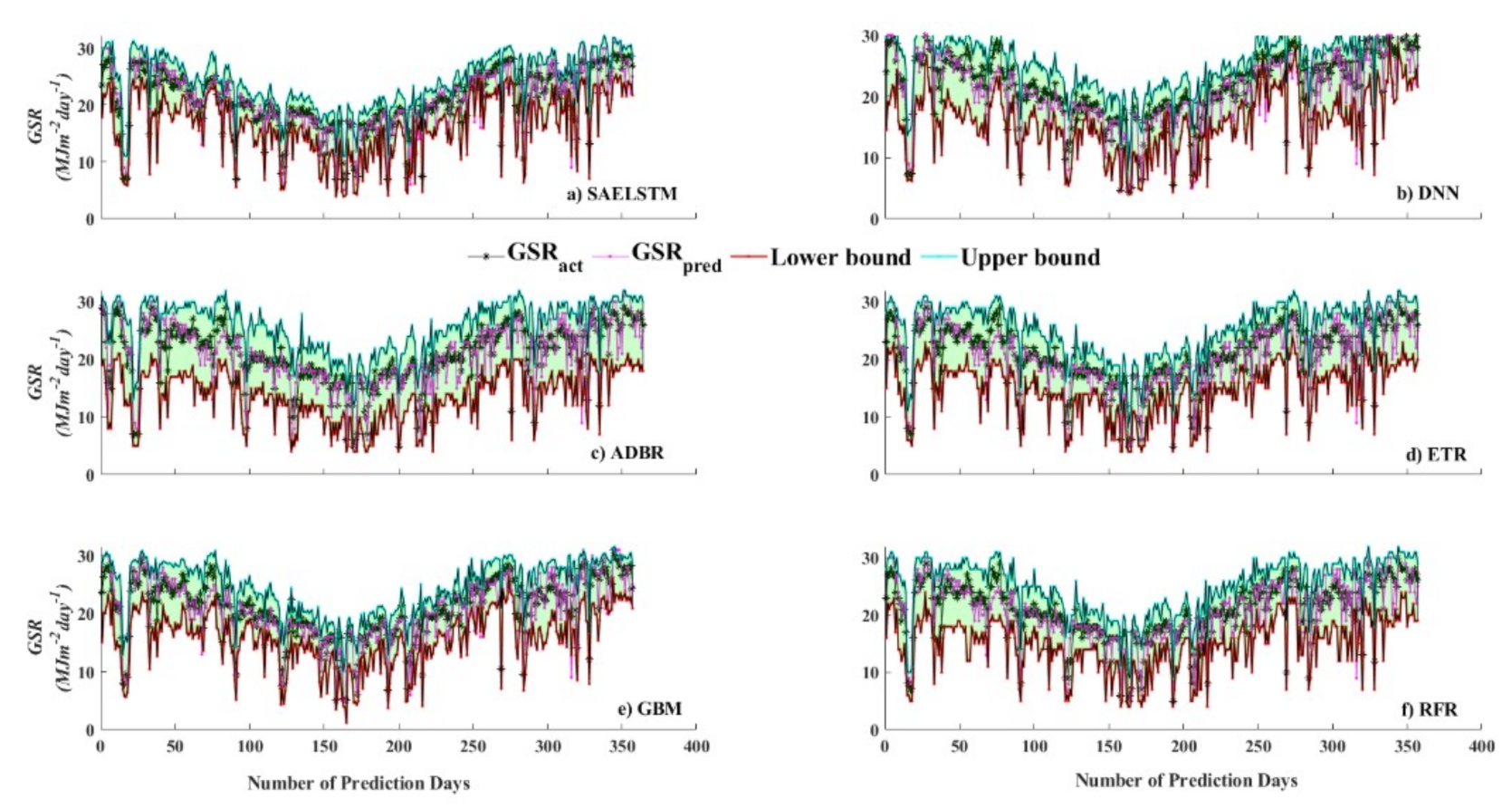

Furthermore, this study also performed interval prediction (IP) and verified the mean width (MPIW) and coverage probability (PICP) of the intervals, both of which indicate whether an interval is suitable. Such IPs can help solar plant managers better evaluate the effectiveness and safety of the power system and manage risks and costs more accurately. The IP evaluation metrics of the deep hybrid SAELSTM and the comparative models for all six solar sites are shown in Table 10. At the Columboola solar farm, the ADBR model produced a higher PICP (97%) than the deep hybrid SAELSTM model (PICP ≈ 95%, MPIW ≈ 8.50), but also a wider MPIW (11.169). However, once PICP exceeds the prediction interval nominal confidence (PINC = 90%), a smaller MPIW indicates a more precise prediction. Table 10 therefore shows that the deep hybrid SAELSTM model yielded a lower MPIW than the other Deep Learning and conventional ML models. The PICP values of all the models in this study exceed PINC, but the MPIW varies considerably. For instance, at Bouldercombe, the metrics (PICP <MPIW>) were 95% <9.483>, 93% <10.685>, 96% <13.511>, 94% <11.656>, 93% <9.852>, and 93% <11.250> for SAELSTM, DNN, ADBR, GBM, ETR, and RFR, respectively.

Table 10.

The prediction interval quality metrics of the six models (best result in bold). The best model is the one with a high prediction interval coverage probability (PICP) and a low mean prediction interval width (MPIW).

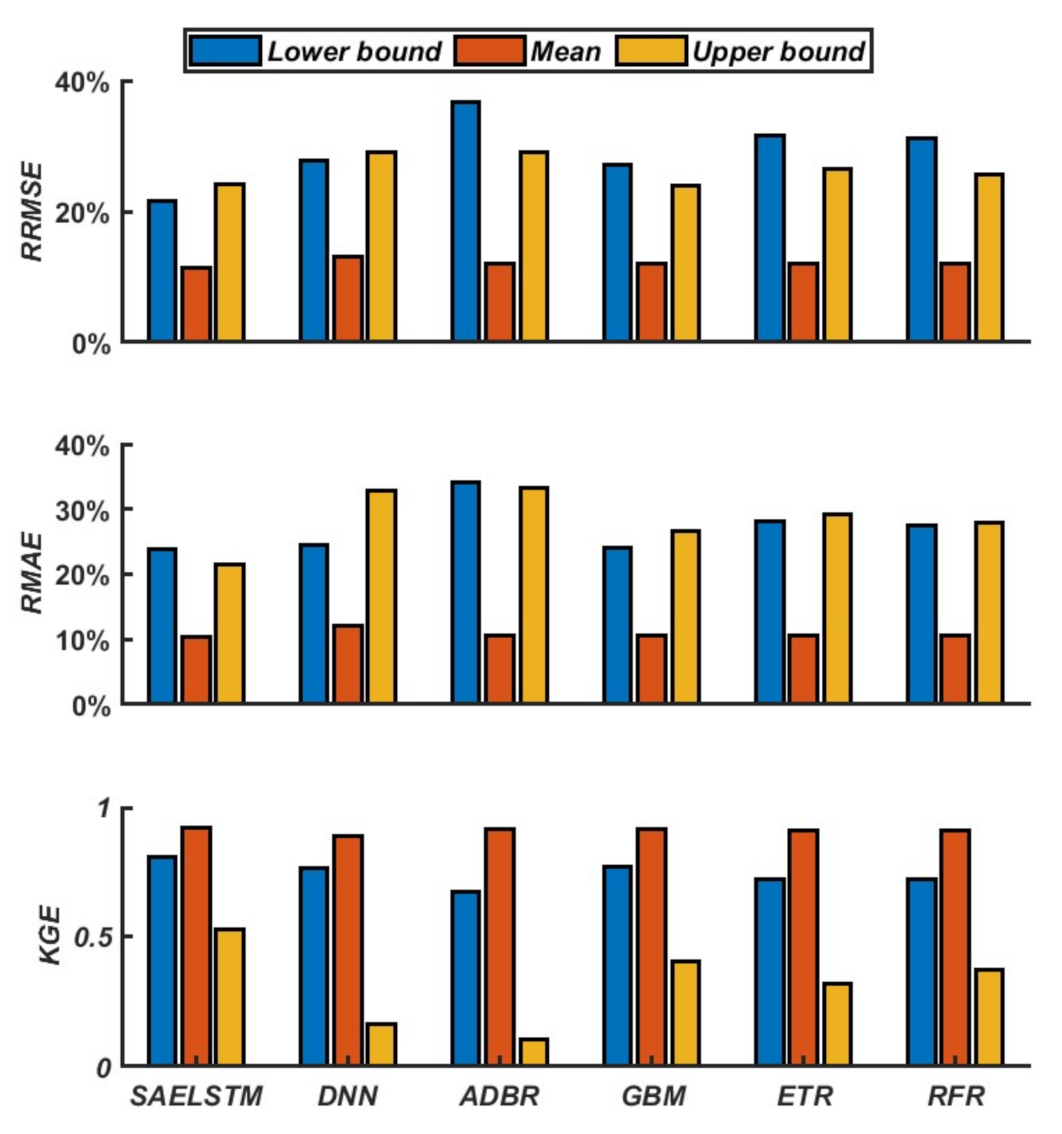

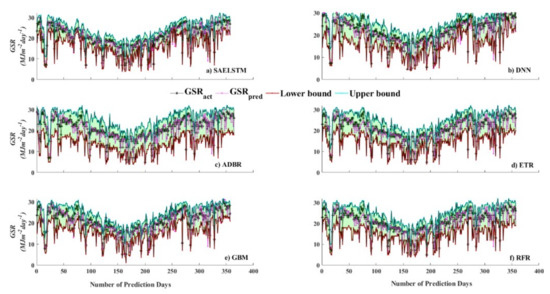

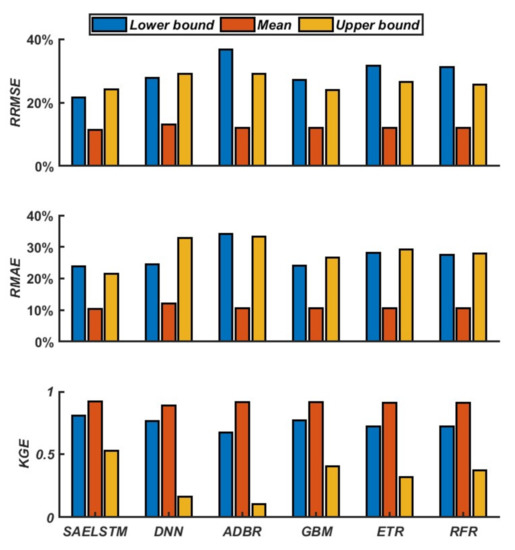

Figure 12 depicts the upper bound and lower bound for the 90% prediction interval between SAELSTM and other comparative models in daily GSR prediction. Furthermore, to affirm the suitability of the deep hybrid SAELSTM model in IP, we calculate the RRMSE, RMAE, and KGE of the upper bound, lower bound, and mean of the GSR interval (Figure 13).

Figure 12.

A comparative result showing the upper bound and lower bound for the 90% prediction interval between the proposed SAELSTM and other comparative models.

Figure 13.

Performance metrics comparison of the proposed SAELSTM and other comparative counterpart models for upper, lower, and mean prediction in terms of RRMSE, RMAE, and KGE.

Figure 13 shows that the RRMSE and RMAE of the deep hybrid SAELSTM model are substantially lower, and its KGE higher, for the lower bound, the mean, and the upper bound, indicating that the deep hybrid SAELSTM can better reflect the uncertainty of GSR.

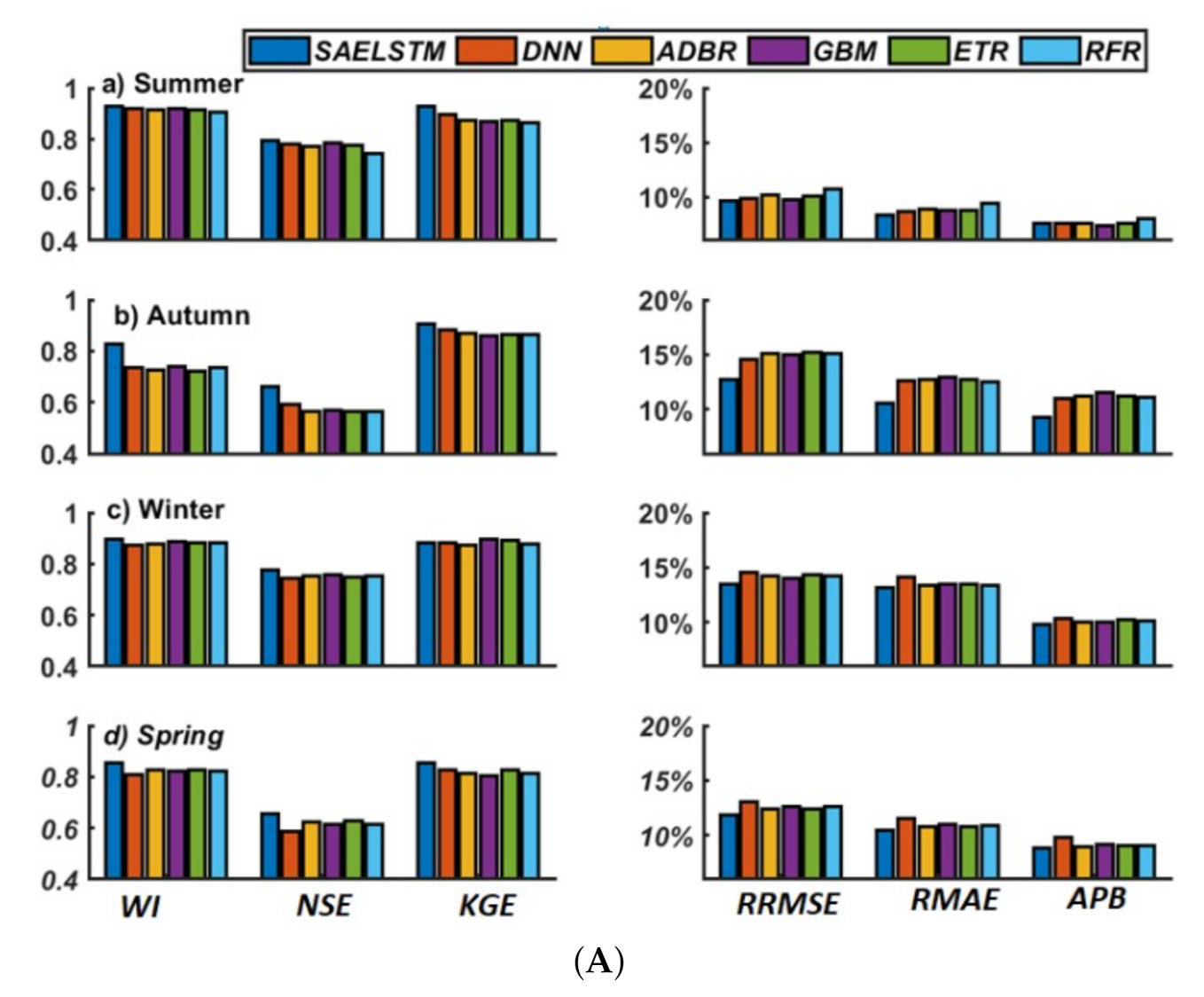

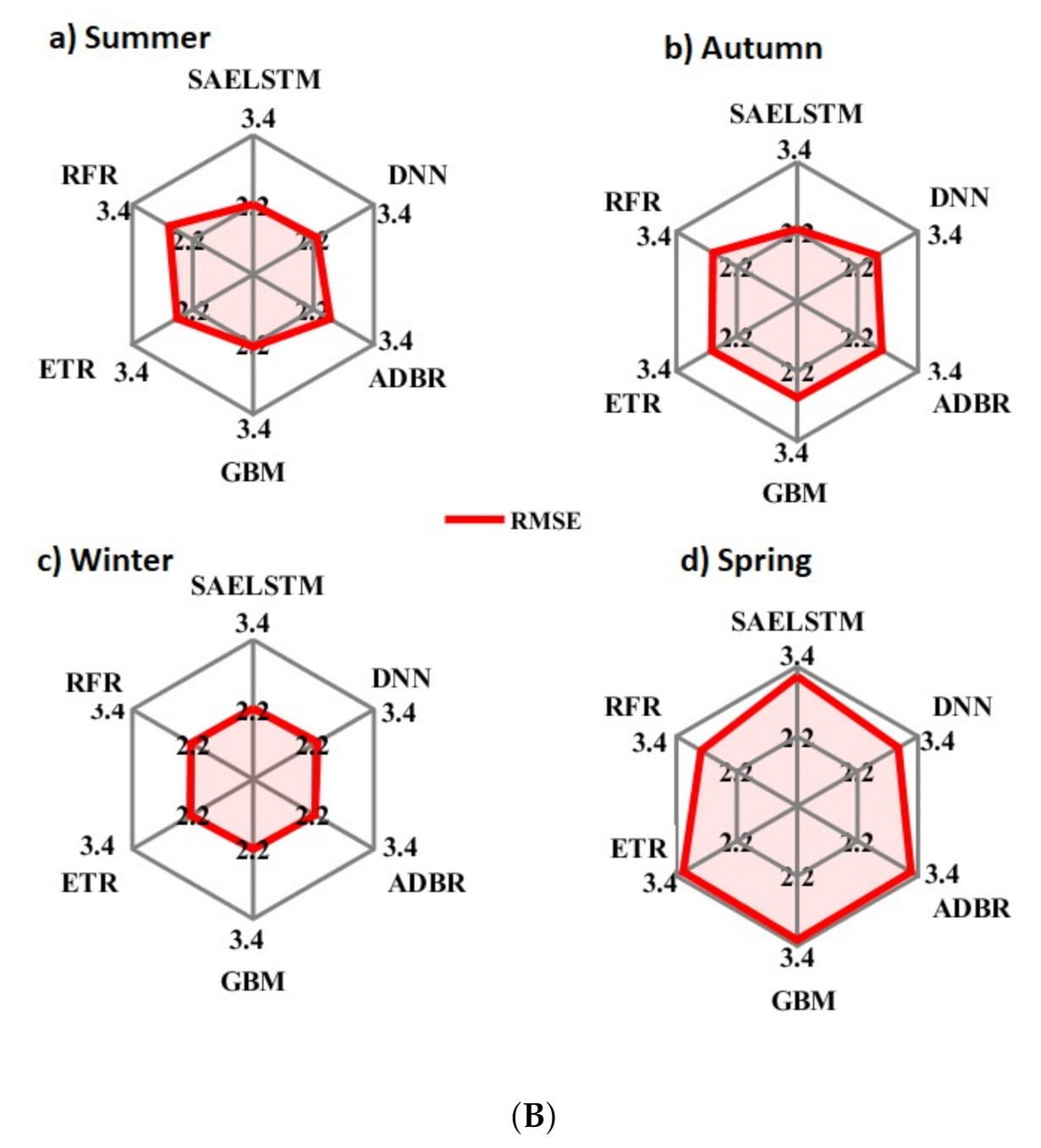

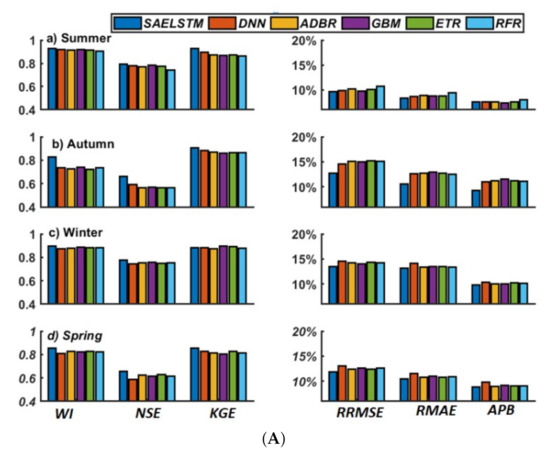

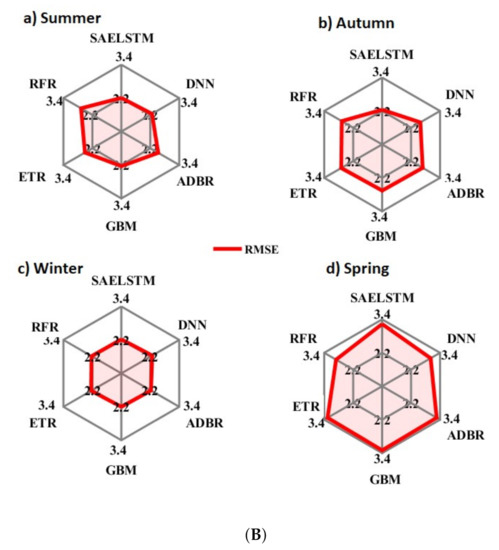

As an additional evaluation of the deep hybrid SAELSTM predictive model, the data of all study sites are divided into four distinct seasons, and the simulations are repeated for all models.

Figure 14a presents the performance of the models in terms of WI, NSE, KGE, RRMSE, RMAE, and APB for all four seasons. Consistent with the previous findings for daily GSR prediction, the proposed deep hybrid SAELSTM model appears to register the best seasonal performance, with lower RRMSE, RMAE, and APB and higher WI, NSE, and KGE than the DNN, ADBR, GBM, ETR, and RFR models.

Figure 14.

(A) Seasonal performance evaluation of the proposed SAELSTM model compared with other artificial intelligence-based models in terms of WI, NSE, KGE, RRMSE (%), RMAE (%), and APB (%): (a) Summer, (b) Autumn, (c) Winter, and (d) Spring. (B) Seasonal performance evaluation of the proposed SAELSTM model compared with other artificial intelligence-based models in terms of RMSE. (Note: Names for each model are provided in Table 3 and Table A1 in Appendix A.)

The deep hybrid SAELSTM predictive model produces its lowest RMSE in spring (≈2.120 MJ m⁻² day⁻¹), followed by autumn (≈2.244 MJ m⁻² day⁻¹), summer (≈2.408 MJ m⁻² day⁻¹), and winter (≈2.733 MJ m⁻² day⁻¹), as shown in Figure 14b. On this basis, we contend that the deep hybrid SAELSTM predictive model is suitable for both daily and seasonal GSR predictions.

6. Conclusions, Limitations, and Future Research Directions

The goal of this study has been to develop an end-to-end method of predicting daily GSR based on a hybrid Deep Learning (DL) Stacked LSTM-based seq2seq (SAELSTM) model. For this purpose, six solar energy farms located in Queensland, Australia were selected as the study sites, and a number of predictors from Global Climate Models (GCM) meteorological data and ground-based observation data from Scientific Information for Landowners (SILO) were used. To build the proposed DL hybrid SAELSTM model, we have integrated the Manta Ray Foraging Optimization (MRFO) feature selection process to select the optimal features. Then, these optimal features are used as the input to the LSTM-based seq2seq architecture to predict the GSR. Comparisons with a different DL model (DNN) and conventional ML-based models (GBM, ADBR, ETR, and RFR) have been carried out.

The simulation results reveal that the deep hybrid SAELSTM model is substantially more accurate than the comparative models, confirming that deep hybrid learning models can predict GSR accurately. In addition, prediction intervals were constructed using quantile regression to quantify the uncertainty in the GSR predictions. The quality of the prediction intervals generated by the proposed deep hybrid SAELSTM model and the comparative models was evaluated using the PICP and MPIW indices. Compared with the other DL and conventional ML models, the deep hybrid SAELSTM model was more effective at producing quality PIs with a high PICP and a low MPIW. Overall, the proposed SAELSTM model outperformed the other models considered in this study.

While this study has demonstrated the efficacy of the proposed stacked LSTM sequence-to-sequence autoencoder model for global solar radiation prediction, future research in sequence-to-sequence modeling for solar energy should aim to improve the proposed predictive model by exploring cloud image-based predictions of the direct normal irradiance or the diffuse horizontal irradiance, which are useful components of global solar radiation in photovoltaic power systems. In the present study, we have used only a stacked LSTM-based seq2seq model; to improve the overall system, other kinds of deep learning models, such as deep nets, active learning, and transformer-based models, can be tested in future studies so that real-time cloud cover (or rather total sky) images can be used to predict solar energy generation at solar farms and rooftop solar systems, helping solar-rich nations reach their cleaner energy targets. The stacked LSTM-based seq2seq model can also be integrated with wavelet-based or ensemble mode decomposition approaches (e.g., Refs. [27,53,54]), which have been shown to perform exceptionally well relative to non-wavelet models. We have not yet investigated the specific effects of aerosols, atmospheric dust, ozone, and water vapor, all of which subtly affect the direct normal irradiance and the global horizontal irradiance. Since these effects are paramount in solar energy monitoring systems and especially relevant for behind-the-meter solar generation estimation, future research could also extend the stacked LSTM-based seq2seq model to include these exogenous effects on solar energy prediction. Advanced predictive frameworks, such as deep reinforcement learning for situations where standard deep learning fails, could also be developed in future research.
In the renewable and sustainable energy sector, a deep hybrid GSR predictive model can also contribute to strategic decisions (such as the smart grid integration of solar energy into real-time energy management systems) and enable governments and investors to make more informed decisions when planning future solar energy installations. The present modeling strategies, improved through methods such as reinforcement learning, deep nets, active learning, and transformer-based models that directly incorporate sky images into a PV power monitoring system, could also be applied to the physical modeling of wind and wave energy utilization and to climate change scenarios, with artificial intelligence models providing quality predictions.

Author Contributions

Conceptualization, S.G. and R.C.D.; methodology, S.G.; software, S.G.; validation, S.G., R.C.D., S.S.-S. and D.C.-P.; investigation, S.G. and H.W.; resources, H.W.; data curation, S.G. and R.C.D.; writing—original draft preparation, S.G. and R.C.D.; writing—review and editing, S.S.-S., M.S.A.-M. and D.C.-P.; visualization, S.G., R.C.D., M.S.A.-M. and H.W.; supervision, R.C.D., S.S.-S. and H.W.; project administration, S.G. and R.C.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been partially supported by the project PID2020-115454GB-C21 of the Spanish Ministry of Science and Innovation (MICINN).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data were acquired from: (i) the Department of Science, Information Technology, Innovation and the Arts (DSITIA), Queensland Government; and (ii) the Centre for Environmental Data Analysis (CEDA), from the server hosting the CMIP5 project's GCM output collection for CSIRO-BOM ACCESS1-0, MOHC HadGEM2-CC, and MRI MRI-CGCM3.

Acknowledgments

The authors thank the data providers, and the reviewers for their thoughtful comments and suggestions during the review process.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Model Development Parameters

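Table A1 summarizes the model development parameters: the hyperparameter search space for each model and the values selected at each of the six solar farms.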

Table A1.

(a) The proposed Deep Learning hybrid SAELSTM (i.e., stacked LSTM sequence-to-sequence autoencoder) and Deep Neural Network (DNN) models. (b) The comparison machine learning models: Random Forest Regression (RFR), AdaBoost Regression (ADBR), Gradient Boosting Machine (GBM), and Extremely Randomized Trees Regression (ETR). Note: ReLU = Rectified Linear Unit.

| Predictive Model | Hyperparameter | Search Space | Blair Athol Solar Power Station | Blue Grass Solar Farm | Bluff Solar Farm | Bouldercombe Solar Farm | Broadlea Solar Farm | Columboola Solar Farm |
|---|---|---|---|---|---|---|---|---|
| SAELSTM | Encoder LSTM cell 1 | [10,20,30,40,50,60] | 20 | 10 | 30 | 20 | 40 | 20 |
| | Encoder LSTM cell 2 | [5,10,15,25] | 10 | 5 | 25 | 15 | 25 | 15 |
| | Encoder LSTM cell 3 | [6,8,10,15] | 6 | 6 | 10 | 15 | 15 | 10 |
| | Decoder LSTM cell 1 | [80,90,100,200] | 100 | 80 | 200 | 100 | 100 | 90 |
| | Decoder LSTM cell 2 | [40,50,60,70,100] | 70 | 60 | 50 | 100 | 60 | 50 |
| | Decoder LSTM cell 3 | [5,10,15,20,25,30] | 20 | 15 | 25 | 15 | 30 | 15 |
| | Activation function | ReLU | ReLU | ReLU | ReLU | ReLU | ReLU | ReLU |
| | Epochs | [300,400,500,600,700] | 400 | 500 | 300 | 500 | 400 | 500 |
| | Drop rate | [0,0.1,0.2] | 0.1 | 0.2 | 0 | 0 | 0.2 | 0.1 |
| | Batch size | [5,10,15,20,25,30] | 10 | 15 | 15 | 20 | 10 | 10 |
| DNN | Hidden neurons, layer 1 | [100,200,300,400,50] | 300 | 100 | 100 | 200 | 100 | 200 |
| | Hidden neurons, layer 2 | [20,30,40,50,60,70] | 60 | 70 | 40 | 50 | 30 | 20 |
| | Hidden neurons, layer 3 | [10,20,30,40,50] | 40 | 30 | 50 | 20 | 10 | 40 |
| | Hidden neurons, layer 4 | [5,6,7,8,12,15,18] | 15 | 18 | 7 | 12 | 15 | 18 |
| | Epochs | [100,200,400,500] | 500 | 200 | 100 | 200 | 100 | 400 |
| | Batch size | [5,10,15,20,25,30] | 5 | 10 | 20 | 15 | 15 | 20 |

| Predictive Model | Hyperparameter | Search Space | Blair Athol Solar Power Station | Blue Grass Solar Farm | Bluff Solar Farm | Bouldercombe Solar Farm | Broadlea Solar Farm | Columboola Solar Farm |
|---|---|---|---|---|---|---|---|---|
| RFR | Maximum depth of the tree | [5,8,10,20,25] | 20 | 25 | 8 | 25 | 20 | 10 |
| | Number of trees in the forest | [50,100,150,200] | 150 | 100 | 50 | 100 | 50 | 200 |
| | Minimum number of samples required to split an internal node | [2,4,6,8,10] | 6 | 8 | 10 | 10 | 8 | 10 |
| | Number of features considered when looking for the best split | ['auto', 'sqrt', 'log2'] | auto | auto | auto | auto | auto | auto |
| ADBR | Maximum number of estimators at which boosting is terminated | [50,100,150,200] | 150 | 200 | 100 | 150 | 200 | 150 |
| | Learning rate | [0.01,0.001,0.005] | 0.01 | 0.001 | 0.01 | 0.01 | 0.005 | 0.001 |
| | Loss function used to update the weights after each boosting iteration | ['linear', 'square', 'exponential'] | square | square | square | square | square | square |
| GBM | Number of neighbors | [5,10,20,30,50,100] | 50 | 30 | 20 | 30 | 50 | 20 |
| | Algorithm used to compute the nearest neighbors | ['auto', 'ball_tree', 'kd_tree', 'brute'] | auto | auto | auto | auto | auto | auto |
| | Leaf size passed to BallTree or KDTree | [10,20,30,50,60,70] | 10 | 30 | 20 | 10 | 30 | 10 |
| ETR | Number of trees in the forest | [10,20,30] | 30 | 10 | 10 | 20 | 30 | 20 |
| | Maximum depth of the tree | [5,8,10,20,25] | 8 | 10 | 5 | 8 | 10 | 5 |
| | Number of features considered when looking for the best split | ['auto', 'sqrt', 'log2'] | auto | auto | auto | auto | auto | auto |
| | Minimum number of samples required to split an internal node | [5,10,15,20] | 15 | 10 | 20 | 15 | 20 | 15 |
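
For each model, Table A1 lists a candidate grid and the value chosen at each site. The Python sketch below counts how many configurations an exhaustive grid search over those grids would visit and shows a generic exhaustive search loop. It assumes the full Cartesian product of every listed grid is searched, which the appendix does not state explicitly. The dictionary keys are illustrative names (scikit-learn style for the tree and boosting models, though nothing here calls scikit-learn), only five of the grids are transcribed, and the scoring function is a hypothetical placeholder for a validation error such as RMSE.

```python
from itertools import product
from math import prod
from typing import Any, Callable, Dict, Iterator, List, Optional, Tuple

Grid = Dict[str, List[Any]]

# Search spaces transcribed from Table A1. Keys are illustrative names,
# not taken from the authors' code.
GRIDS: Dict[str, Grid] = {
    "SAELSTM": {
        "encoder_units_1": [10, 20, 30, 40, 50, 60],
        "encoder_units_2": [5, 10, 15, 25],
        "encoder_units_3": [6, 8, 10, 15],
        "decoder_units_1": [80, 90, 100, 200],
        "decoder_units_2": [40, 50, 60, 70, 100],
        "decoder_units_3": [5, 10, 15, 20, 25, 30],
        "epochs": [300, 400, 500, 600, 700],
        "drop_rate": [0, 0.1, 0.2],
        "batch_size": [5, 10, 15, 20, 25, 30],
    },
    "DNN": {
        "hidden_1": [100, 200, 300, 400, 50],
        "hidden_2": [20, 30, 40, 50, 60, 70],
        "hidden_3": [10, 20, 30, 40, 50],
        "hidden_4": [5, 6, 7, 8, 12, 15, 18],
        "epochs": [100, 200, 400, 500],
        "batch_size": [5, 10, 15, 20, 25, 30],
    },
    "RFR": {
        "max_depth": [5, 8, 10, 20, 25],
        "n_estimators": [50, 100, 150, 200],
        "min_samples_split": [2, 4, 6, 8, 10],
        "max_features": ["auto", "sqrt", "log2"],
    },
    "ADBR": {
        "n_estimators": [50, 100, 150, 200],
        "learning_rate": [0.01, 0.001, 0.005],
        "loss": ["linear", "square", "exponential"],
    },
    "ETR": {
        "n_estimators": [10, 20, 30],
        "max_depth": [5, 8, 10, 20, 25],
        "max_features": ["auto", "sqrt", "log2"],
        "min_samples_split": [5, 10, 15, 20],
    },
}


def grid_size(grid: Grid) -> int:
    """Number of configurations in the full Cartesian product of the grid."""
    return prod(len(values) for values in grid.values())


def iter_configs(grid: Grid) -> Iterator[Dict[str, Any]]:
    """Yield every configuration of the grid as a {name: value} dict."""
    names = list(grid)
    for values in product(*(grid[n] for n in names)):
        yield dict(zip(names, values))


def grid_search(
    grid: Grid, score: Callable[[Dict[str, Any]], float]
) -> Tuple[Optional[Dict[str, Any]], float]:
    """Exhaustive search; `score` is a validation error, so lower is better."""
    best_cfg: Optional[Dict[str, Any]] = None
    best_score = float("inf")
    for cfg in iter_configs(grid):
        s = score(cfg)
        if s < best_score:  # strict '<' keeps the first of tied configurations
            best_cfg, best_score = cfg, s
    return best_cfg, best_score


if __name__ == "__main__":
    for model, grid in GRIDS.items():
        print(f"{model}: {grid_size(grid):,} configurations per site")
    # Hypothetical stand-in for a validation RMSE; a real run would train and
    # evaluate the model for each configuration instead.
    toy_score = lambda cfg: abs(cfg["max_depth"] - 8) + abs(cfg["n_estimators"] - 20)
    print(grid_search(GRIDS["ETR"], toy_score))
```

Under the full-product assumption, the SAELSTM grid alone contains 1,036,800 combinations per site, against 25,200 for the DNN and 300 or fewer for RFR, ADBR, and ETR. A grid of that size is usually impractical for recurrent networks, so a reduced or staged search is a common alternative. Separately, anyone reproducing the RFR and ETR settings with recent scikit-learn releases should note that `max_features='auto'` was deprecated in version 1.1 and removed in 1.3 for these regressors; for regression it meant using all features, equivalent to `max_features=1.0`.
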

References

- Gielen, D.; Boshell, F.; Saygin, D.; Bazilian, M.D.; Wagner, N.; Gorini, R. The role of renewable energy in the global energy transformation. Energy Strategy Rev. 2019, 24, 38–50.

- Gielen, D.; Gorini, R.; Wagner, N.; Leme, R.; Gutierrez, L.; Prakash, G.; Asmelash, E.; Janeiro, L.; Gallina, G.; Vale, G.; et al. Global Energy Transformation: A Roadmap to 2050; Hydrogen Knowledge Centre: Derby, UK, 2019; Available online: https://www.h2knowledgecentre.com/content/researchpaper1605 (accessed on 1 December 2021).

- Farivar, G.; Asaei, B. A new approach for solar module temperature estimation using the simple diode model. IEEE Trans. Energy Convers. 2011, 26, 1118–1126.

- Pazikadin, A.R.; Rifai, D.; Ali, K.; Malik, M.Z.; Abdalla, A.N.; Faraj, M.A. Solar irradiance measurement instrumentation and power solar generation forecasting based on Artificial Neural Networks (ANN): A review of five years research trend. Sci. Total Environ. 2020, 715, 136848.

- Amiri, B.; Gómez-Orellana, A.M.; Gutiérrez, P.A.; Dizène, R.; Hervás-Martínez, C.; Dahmani, K. A novel approach for global solar irradiation forecasting on tilted plane using Hybrid Evolutionary Neural Networks. J. Clean. Prod. 2021, 287, 125577.

- Yang, K.; Koike, T.; Ye, B. Improving estimation of hourly, daily, and monthly solar radiation by importing global data sets. Agric. For. Meteorol. 2006, 137, 43–55.

- Salcedo-Sanz, S.; Ghamisi, P.; Piles, M.; Werner, M.; Cuadra, L.; Moreno-Martínez, A.; Izquierdo-Verdiguier, E.; Muñoz-Marí, J.; Mosavi, A.; Camps-Valls, G. Machine learning information fusion in Earth observation: A comprehensive review of methods, applications and data sources. Inf. Fusion 2020, 63, 256–272.

- García-Hinde, O.; Terrén-Serrano, G.; Hombrados-Herrera, M.; Gómez-Verdejo, V.; Jiménez-Fernández, S.; Casanova-Mateo, C.; Sanz-Justo, J.; Martínez-Ramón, M.; Salcedo-Sanz, S. Evaluation of dimensionality reduction methods applied to numerical weather models for solar radiation forecasting. Eng. Appl. Artif. Intell. 2018, 69, 157–167.

- Jiang, Y. Computation of monthly mean daily global solar radiation in China using artificial neural networks and comparison with other empirical models. Energy 2009, 34, 1276–1283.

- Al-Musaylh, M.S.; Deo, R.C.; Adamowski, J.F.; Li, Y. Short-term electricity demand forecasting with MARS, SVR and ARIMA models using aggregated demand data in Queensland, Australia. Adv. Eng. Inform. 2018, 35, 1–16.

- Huang, J.; Korolkiewicz, M.; Agrawal, M.; Boland, J. Forecasting solar radiation on an hourly time scale using a Coupled AutoRegressive and Dynamical System (CARDS) model. Sol. Energy 2013, 87, 136–149.

- Shadab, A.; Said, S.; Ahmad, S. Box–Jenkins multiplicative ARIMA modeling for prediction of solar radiation: A case study. Int. J. Energy Water Resour. 2019, 3, 305–318.

- Zang, H.; Liu, L.; Sun, L.; Cheng, L.; Wei, Z.; Sun, G. Short-term global horizontal irradiance forecasting based on a hybrid CNN-LSTM model with spatiotemporal correlations. Renew. Energy 2020, 160, 26–41.

- Mishra, S.; Palanisamy, P. Multi-time-horizon solar forecasting using recurrent neural network. In Proceedings of the 2018 IEEE Energy Conversion Congress and Exposition (ECCE), Portland, OR, USA, 23–27 September 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 18–24.

- Elminir, H.K.; Areed, F.F.; Elsayed, T.S. Estimation of solar radiation components incident on Helwan site using neural networks. Sol. Energy 2005, 79, 270–279.

- Al-Musaylh, M.S.; Deo, R.C.; Adamowski, J.F.; Li, Y. Short-term electricity demand forecasting using machine learning methods enriched with ground-based climate and ECMWF Reanalysis atmospheric predictors in southeast Queensland, Australia. Renew. Sustain. Energy Rev. 2019, 113, 109293.

- Salcedo-Sanz, S.; Deo, R.C.; Cornejo-Bueno, L.; Camacho-Gómez, C.; Ghimire, S. An efficient neuro-evolutionary hybrid modelling mechanism for the estimation of daily global solar radiation in the Sunshine State of Australia. Appl. Energy 2018, 209, 79–94.

- Guijo-Rubio, D.; Durán-Rosal, A.; Gutiérrez, P.; Gómez-Orellana, A.; Casanova-Mateo, C.; Sanz-Justo, J.; Salcedo-Sanz, S.; Hervás-Martínez, C. Evolutionary artificial neural networks for accurate solar radiation prediction. Energy 2020, 210, 118374.

- Bouzgou, H.; Gueymard, C.A. Minimum redundancy–maximum relevance with extreme learning machines for global solar radiation forecasting: Toward an optimized dimensionality reduction for solar time series. Sol. Energy 2017, 158, 595–609.

- Al-Musaylh, M.S.; Deo, R.C.; Li, Y. Electrical energy demand forecasting model development and evaluation with maximum overlap discrete wavelet transform-online sequential extreme learning machines algorithms. Energies 2020, 13, 2307.

- Salcedo-Sanz, S.; Casanova-Mateo, C.; Pastor-Sánchez, A.; Sánchez-Girón, M. Daily global solar radiation prediction based on a hybrid Coral Reefs Optimization–Extreme Learning Machine approach. Sol. Energy 2014, 105, 91–98.

- Aybar-Ruiz, A.; Jiménez-Fernández, S.; Cornejo-Bueno, L.; Casanova-Mateo, C.; Sanz-Justo, J.; Salvador-González, P.; Salcedo-Sanz, S. A novel grouping genetic algorithm–extreme learning machine approach for global solar radiation prediction from numerical weather models inputs. Sol. Energy 2016, 132, 129–142.

- AlKandari, M.; Ahmad, I. Solar power generation forecasting using ensemble approach based on deep learning and statistical methods. Appl. Comput. Inform. 2020.

- AL-Musaylh, M.S.; Al-Daffaie, K.; Prasad, R. Gas consumption demand forecasting with empirical wavelet transform based machine learning model: A case study. Int. J. Energy Res. 2021, 45, 15124–15138.

- Salcedo-Sanz, S.; Casanova-Mateo, C.; Muñoz-Marí, J.; Camps-Valls, G. Prediction of daily global solar irradiation using temporal Gaussian processes. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1936–1940.

- Chen, J.L.; Li, G.S. Evaluation of support vector machine for estimation of solar radiation from measured meteorological variables. Theor. Appl. Climatol. 2014, 115, 627–638.

- Al-Musaylh, M.S.; Deo, R.C.; Li, Y.; Adamowski, J.F. Two-phase particle swarm optimized-support vector regression hybrid model integrated with improved empirical mode decomposition with adaptive noise for multiple-horizon electricity demand forecasting. Appl. Energy 2018, 217, 422–439.

- Al-Musaylh, M.S.; Deo, R.C.; Li, Y. Particle swarm optimized–support vector regression hybrid model for daily horizon electricity demand forecasting using climate dataset. In Proceedings of the 3rd International Conference on Power and Renewable Energy, Berlin, Germany, 21–24 September 2018; Volume 64.

- Hocaoğlu, F.O. Novel analytical hourly solar radiation models for photovoltaic based system sizing algorithms. Energy Convers. Manag. 2010, 51, 2921–2929.

- Rodríguez-Benítez, F.J.; Arbizu-Barrena, C.; Huertas-Tato, J.; Aler-Mur, R.; Galván-León, I.; Pozo-Vázquez, D. A short-term solar radiation forecasting system for the Iberian Peninsula. Part 1: Models description and performance assessment. Sol. Energy 2020, 195, 396–412.

- Linares-Rodríguez, A.; Ruiz-Arias, J.A.; Pozo-Vázquez, D.; Tovar-Pescador, J. Generation of synthetic daily global solar radiation data based on ERA-Interim reanalysis and artificial neural networks. Energy 2011, 36, 5356–5365.

- Sivamadhavi, V.; Selvaraj, R.S. Prediction of monthly mean daily global solar radiation using Artificial Neural Network. J. Earth Syst. Sci. 2012, 121, 1501–1510.

- Khodayar, M.; Kaynak, O.; Khodayar, M.E. Rough deep neural architecture for short-term wind speed forecasting. IEEE Trans. Ind. Informatics 2017, 13, 2770–2779.

- Bengio, Y. Learning Deep Architectures for AI; Now Publishers Inc.: Delft, The Netherlands, 2009; Available online: https://books.google.es/books?hl=es&lr=&id=cq5ewg7FniMC&oi=fnd&pg=PA1&dq=Learning+Deep+Architectures+for+AI%7D%3B+%5Chl%7BNow+Publishers+Inc.:city,+country&ots=Kpi7OXklKw&sig=JHafuLqX_O0_PsqA7BaPLFOY_zg&redir_esc=y#v=onepage&q&f=false (accessed on 1 December 2021).

- Sun, S.; Chen, W.; Wang, L.; Liu, X.; Liu, T.Y. On the depth of deep neural networks: A theoretical view. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Wang, F.; Zhang, Z.; Liu, C.; Yu, Y.; Pang, S.; Duić, N.; Shafie-Khah, M.; Catalão, J.P. Generative adversarial networks and convolutional neural networks based weather classification model for day ahead short-term photovoltaic power forecasting. Energy Convers. Manag. 2019, 181, 443–462.

- Kawaguchi, K.; Kaelbling, L.P.; Bengio, Y. Generalization in deep learning. arXiv 2017, arXiv:1710.05468.

- Khodayar, M.; Wang, J. Spatio-temporal graph deep neural network for short-term wind speed forecasting. IEEE Trans. Sustain. Energy 2018, 10, 670–681.

- Ziyabari, S.; Du, L.; Biswas, S. Short-term Solar Irradiance Forecasting Based on Multi-Branch Residual Network. In Proceedings of the 2020 IEEE Energy Conversion Congress and Exposition (ECCE), Detroit, MI, USA, 11–15 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2000–2005.

- Abdel-Nasser, M.; Mahmoud, K.; Lehtonen, M. Reliable solar irradiance forecasting approach based on choquet integral and deep LSTMs. IEEE Trans. Ind. Informatics 2020, 17, 1873–1881.