Evaluation Methodology for Physical Radar Perception Sensor Models Based on On-Road Measurements for the Testing and Validation of Automated Driving

Abstract

1. Introduction

Motivation

2. State-of-the-Art

2.1. Classification of Virtual Sensor Models

2.2. Assessment Methods of Virtual Sensors

3. Methodology

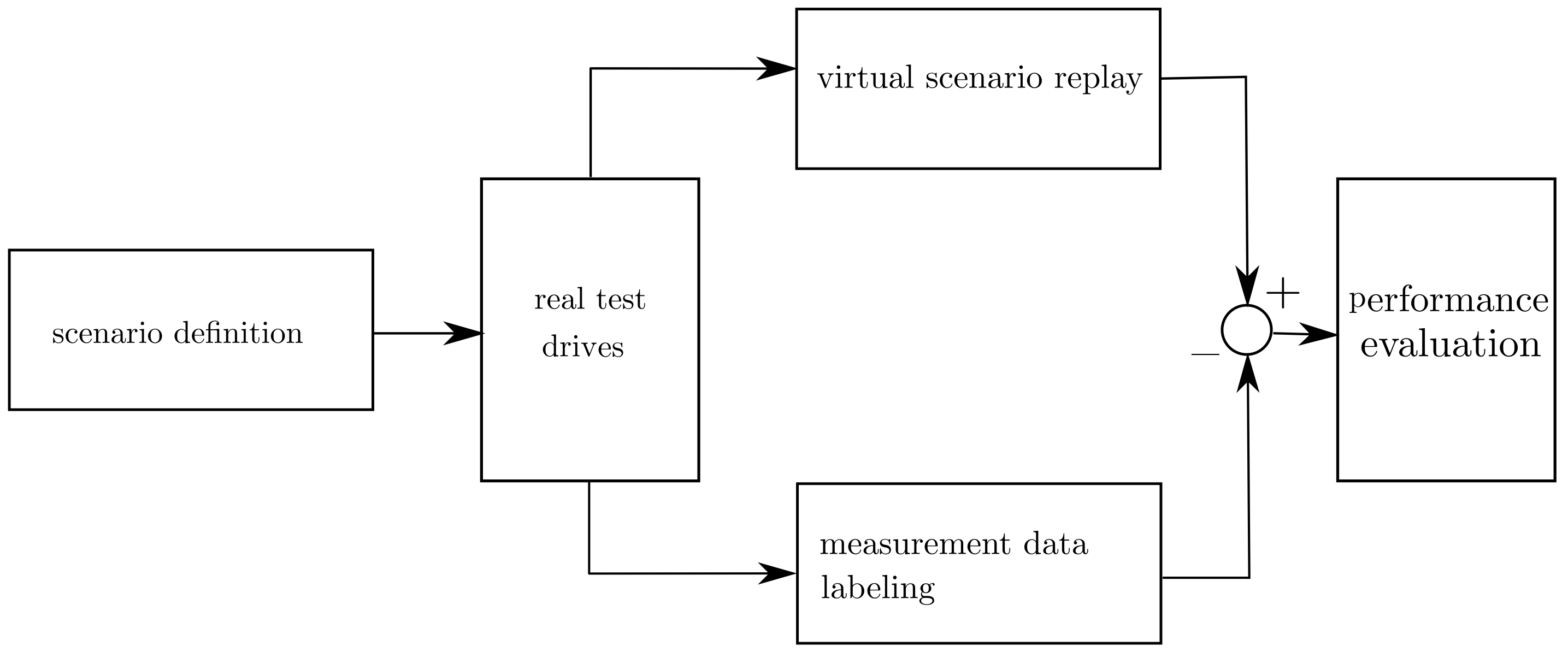

3.1. Dynamic Ground Truth Sensor Model Validation Approach

3.2. On-Road Measurements

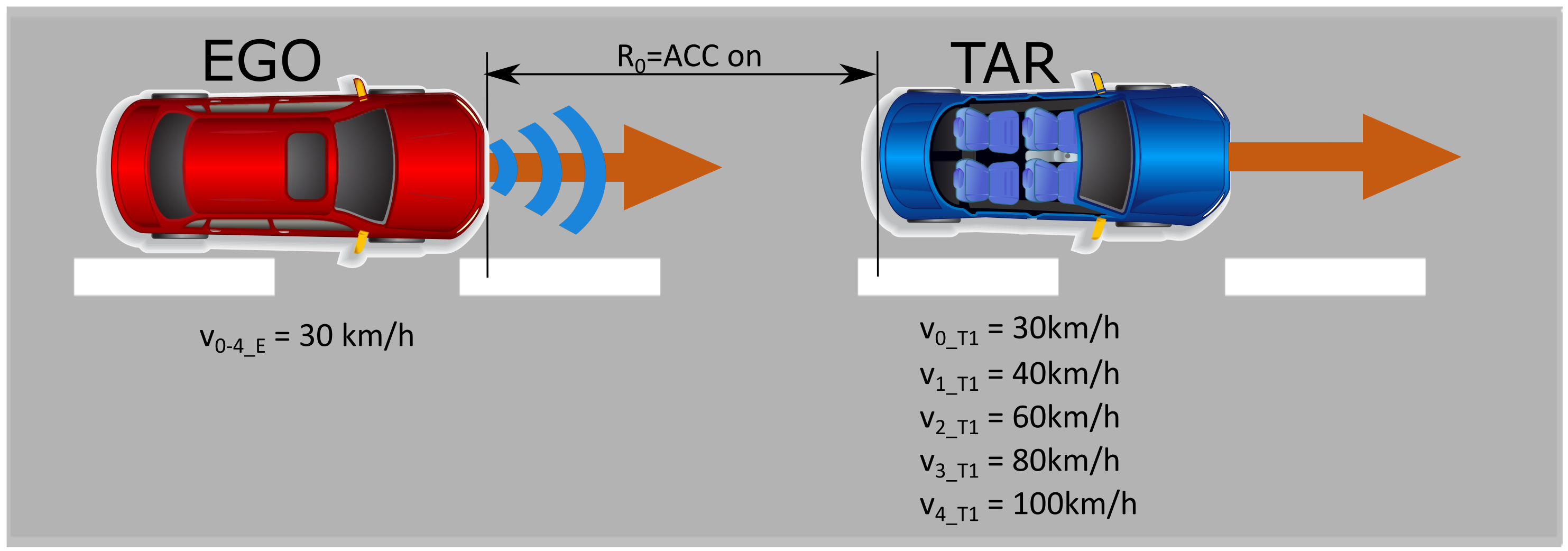

3.2.1. Driving Scenario

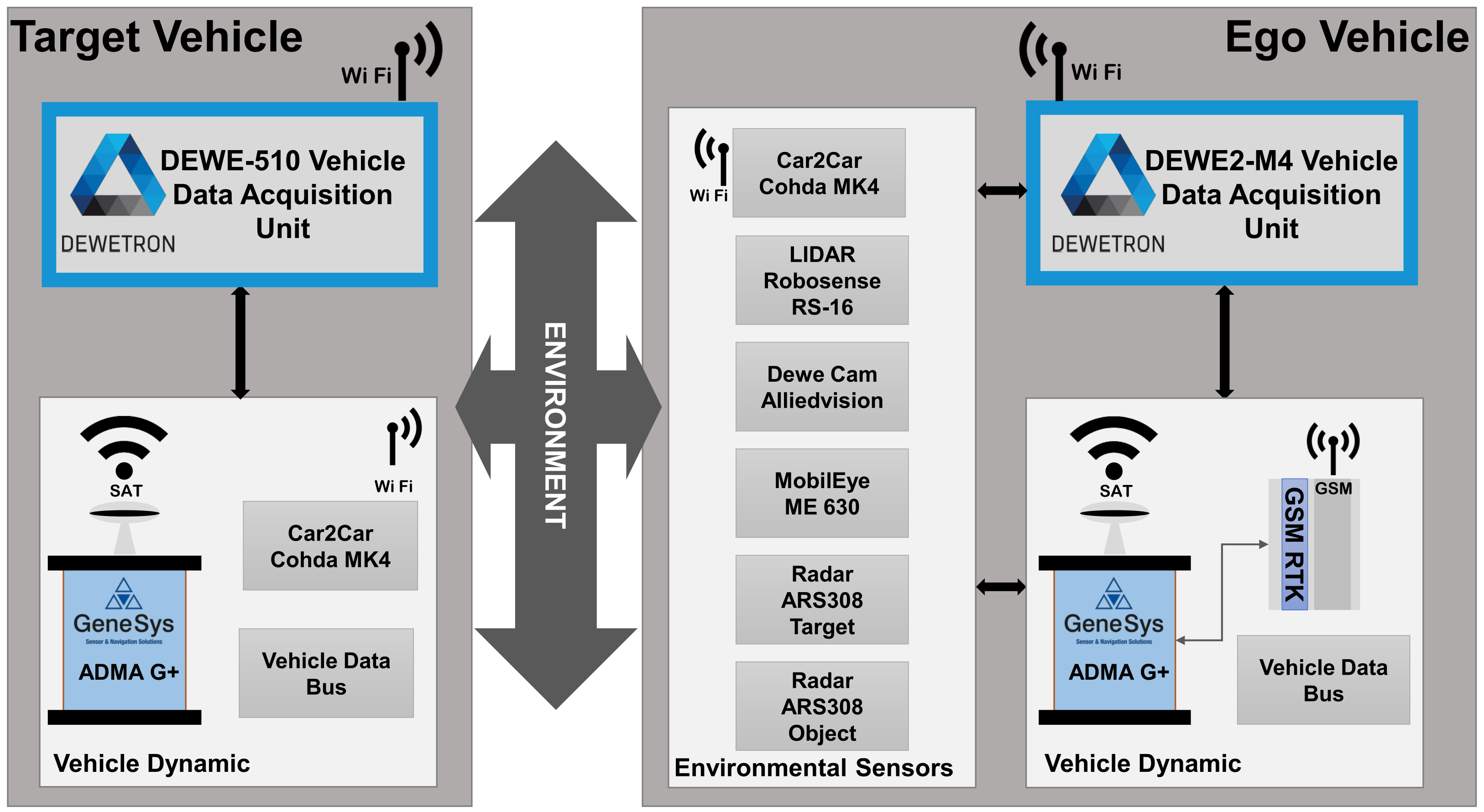

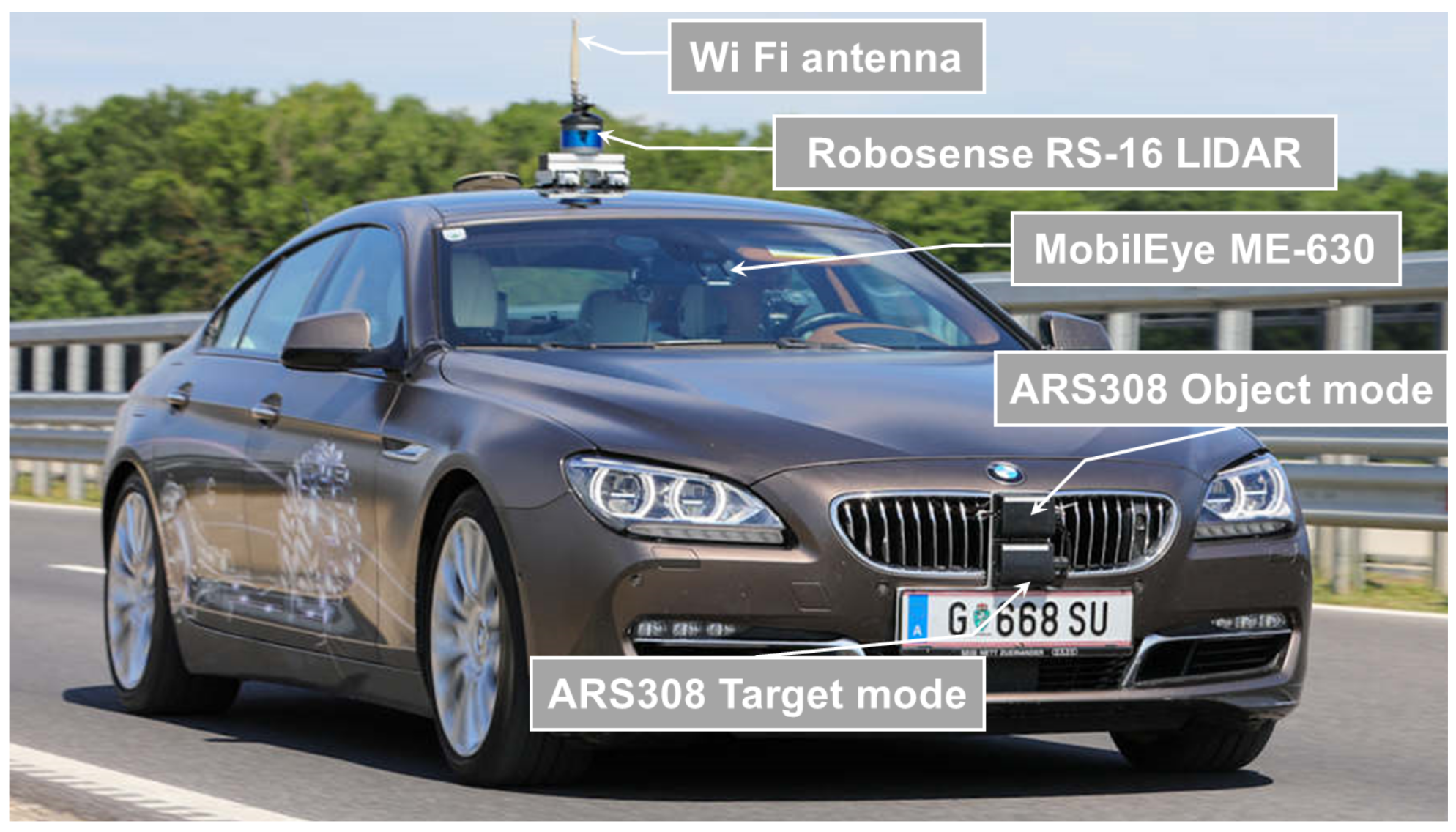

3.2.2. Vehicle Set-Up and Measurement System

- Continental ARS 308 RADAR sensor, configured to detect “targets” (also referred to as low-level data), providing a new data set for each scan period.

- Continental ARS 308 RADAR sensor, configured to detect “objects” (also referred to as highly processed data), providing the output of the tracking algorithm accumulated over several measurement periods.

- Robosense RS-16 LIDAR sensor, providing a point cloud covering its 360° field of view.

- MobilEye ME-630 front camera module, providing information on traffic signs, traffic participants, lane markings, etc.

- Video camera, providing visual recordings of the driving scenarios for use during post-processing.

3.3. Re-Simulation of Experiments

- lane borders and markings,

- lane centre lines,

- curbs and barriers,

- traffic signs and light poles, and

- road markings.

3.3.1. IPG RSI Radar Sensor Model

- Multipath/repeated path propagation.

- Relative Doppler shift.

- Road clutter.

- False positive/negative detections of targets.
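The relative Doppler shift listed above follows the standard two-way radar relation f_D = 2 · v_r · f_c / c, where v_r is the closing speed along the line of sight and f_c the carrier frequency. The sketch below is illustrative only and is not the IPG RSI implementation: the 77 GHz carrier is an assumption (a common automotive radar band, not a parameter taken from the model), and the helper names `radial_velocity_mps` and `doppler_shift_hz` are hypothetical.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0  # speed of light in vacuum, m/s
ASSUMED_CARRIER_HZ = 77e9  # assumption: typical automotive radar carrier, not an RSI parameter


def radial_velocity_mps(rel_vx: float, rel_vy: float, azimuth_rad: float) -> float:
    """Closing speed of a target along the sensor's line of sight.

    rel_vx, rel_vy: target velocity relative to the sensor (sensor frame, x forward).
    azimuth_rad: azimuth of the target in the sensor frame.
    Returns a positive value when the range is decreasing (approaching target).
    """
    # Range rate is the projection of the relative velocity onto the unit
    # line-of-sight vector (cos az, sin az); the closing speed is its negative.
    range_rate = rel_vx * math.cos(azimuth_rad) + rel_vy * math.sin(azimuth_rad)
    return -range_rate


def doppler_shift_hz(closing_speed_mps: float, carrier_hz: float = ASSUMED_CARRIER_HZ) -> float:
    """Two-way Doppler shift f_D = 2 * v_r * f_c / c of a monostatic radar."""
    return 2.0 * closing_speed_mps * carrier_hz / SPEED_OF_LIGHT_MPS


if __name__ == "__main__":
    # Hypothetical case: vehicle straight ahead, closing at 10 m/s.
    v_r = radial_velocity_mps(-10.0, 0.0, 0.0)
    print(f"closing speed {v_r:.1f} m/s -> Doppler shift {doppler_shift_hz(v_r):.0f} Hz")
```

For a vehicle straight ahead closing at 10 m/s, the assumed 77 GHz carrier gives a Doppler shift of roughly 5.1 kHz; a purely lateral relative motion at 0° azimuth gives no shift at all.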

3.3.2. Parameter Setting of the Sensor Model

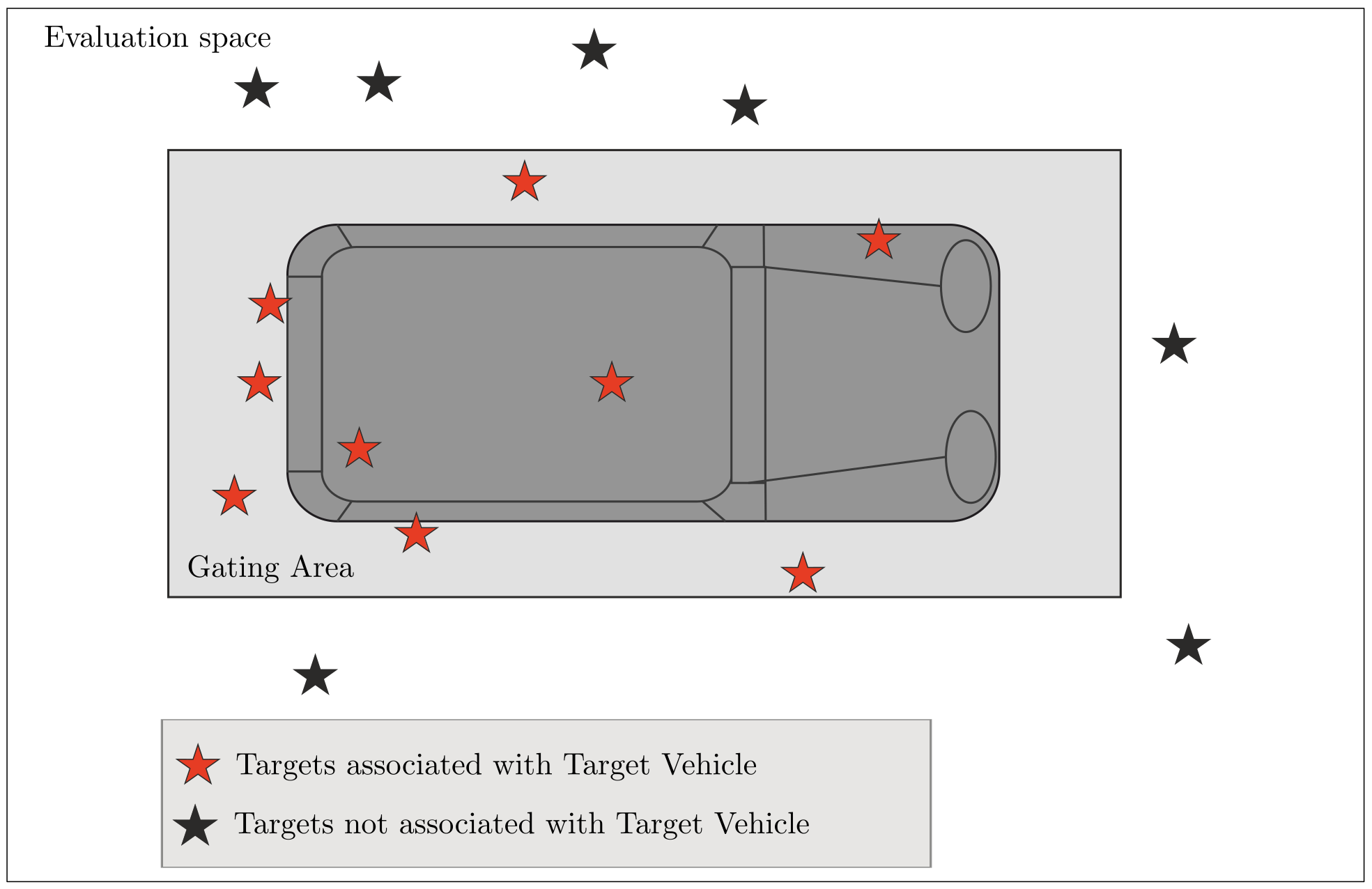

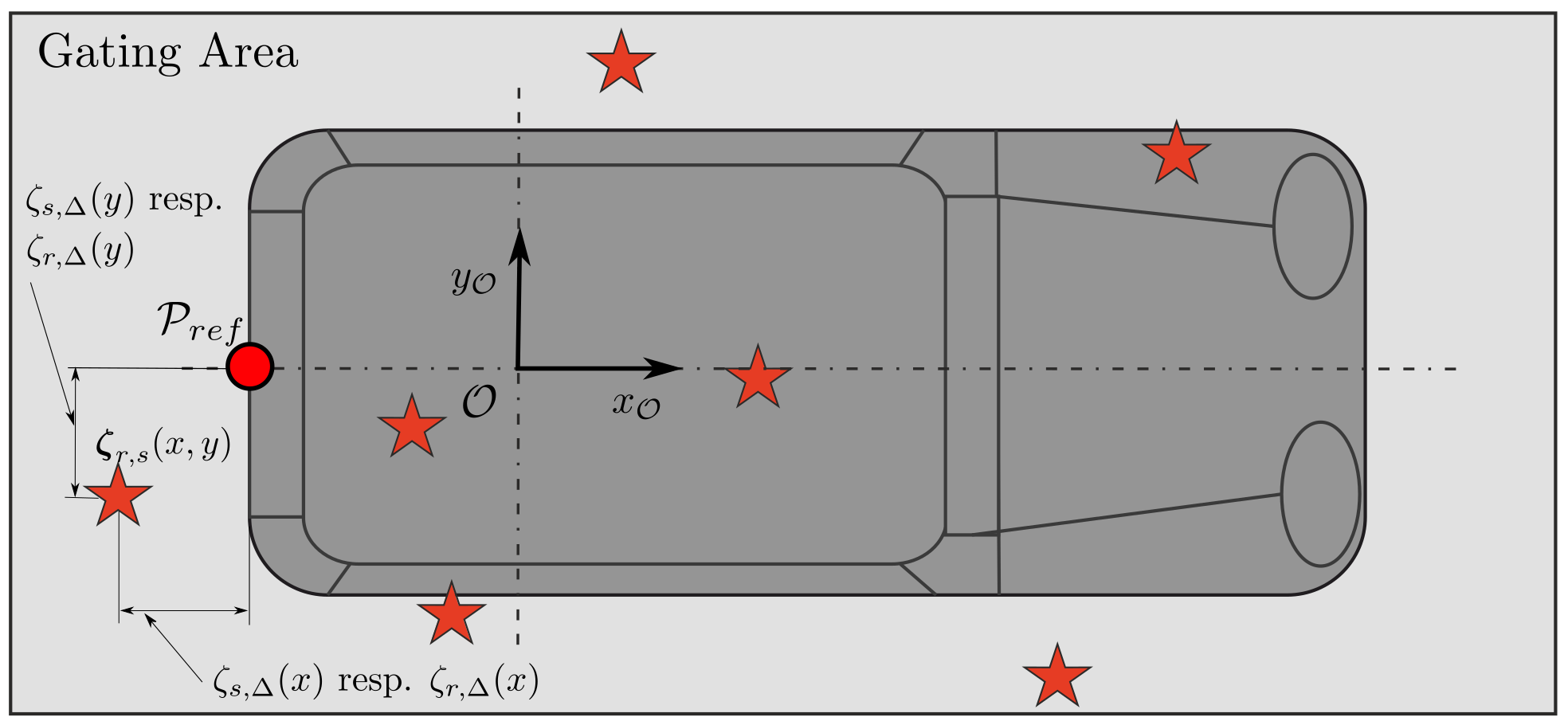

3.4. Labelling of Radar Measurement Data

3.5. Evaluation Procedure

3.6. Validation Metrics for Comparing Probability Distributions
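The JS-Distance values in the results tables compare the distribution of a measured radar quantity with that of its simulated counterpart. A minimal sketch of such a comparison follows, assuming both samples are binned on shared histogram edges spanning the pooled data and that base-2 logarithms are used, so the distance lies between 0 and 1 before scaling to percent. The bin count and the function name `js_distance_percent` are hypothetical choices, not the settings used in the paper.

```python
import numpy as np


def js_distance_percent(measured, simulated, bins=50):
    """Jensen–Shannon distance between two non-empty 1-D samples, in percent.

    Both samples are histogrammed on the same bin edges (spanning the pooled
    data), normalised to probability mass functions and compared with base-2
    logarithms, so the distance lies in [0, 100] %.
    """
    measured = np.asarray(measured, dtype=float)
    simulated = np.asarray(simulated, dtype=float)
    # Shared edges are essential: different binning would bias the comparison.
    edges = np.histogram_bin_edges(np.concatenate([measured, simulated]), bins=bins)
    p, _ = np.histogram(measured, bins=edges)
    q, _ = np.histogram(simulated, bins=edges)
    p = p / p.sum()
    q = q / q.sum()
    m = 0.5 * (p + q)  # mixture distribution; m > 0 wherever p > 0 or q > 0

    def kl_to_mixture(a):
        mask = a > 0  # terms with a == 0 contribute 0 (0 * log 0 := 0)
        return np.sum(a[mask] * np.log2(a[mask] / m[mask]))

    divergence = 0.5 * kl_to_mixture(p) + 0.5 * kl_to_mixture(q)
    # Clip tiny negative rounding errors before taking the square root.
    return 100.0 * float(np.sqrt(max(divergence, 0.0)))
```

Identical samples give 0 % and samples with no overlapping bins give 100 %. As a cross-check, SciPy's `scipy.spatial.distance.jensenshannon(p, q, base=2)` returns the same distance (as a fraction) for two probability vectors. Because the result depends on the binning, the bin edges should be fixed before different sensor models are compared.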

4. Results

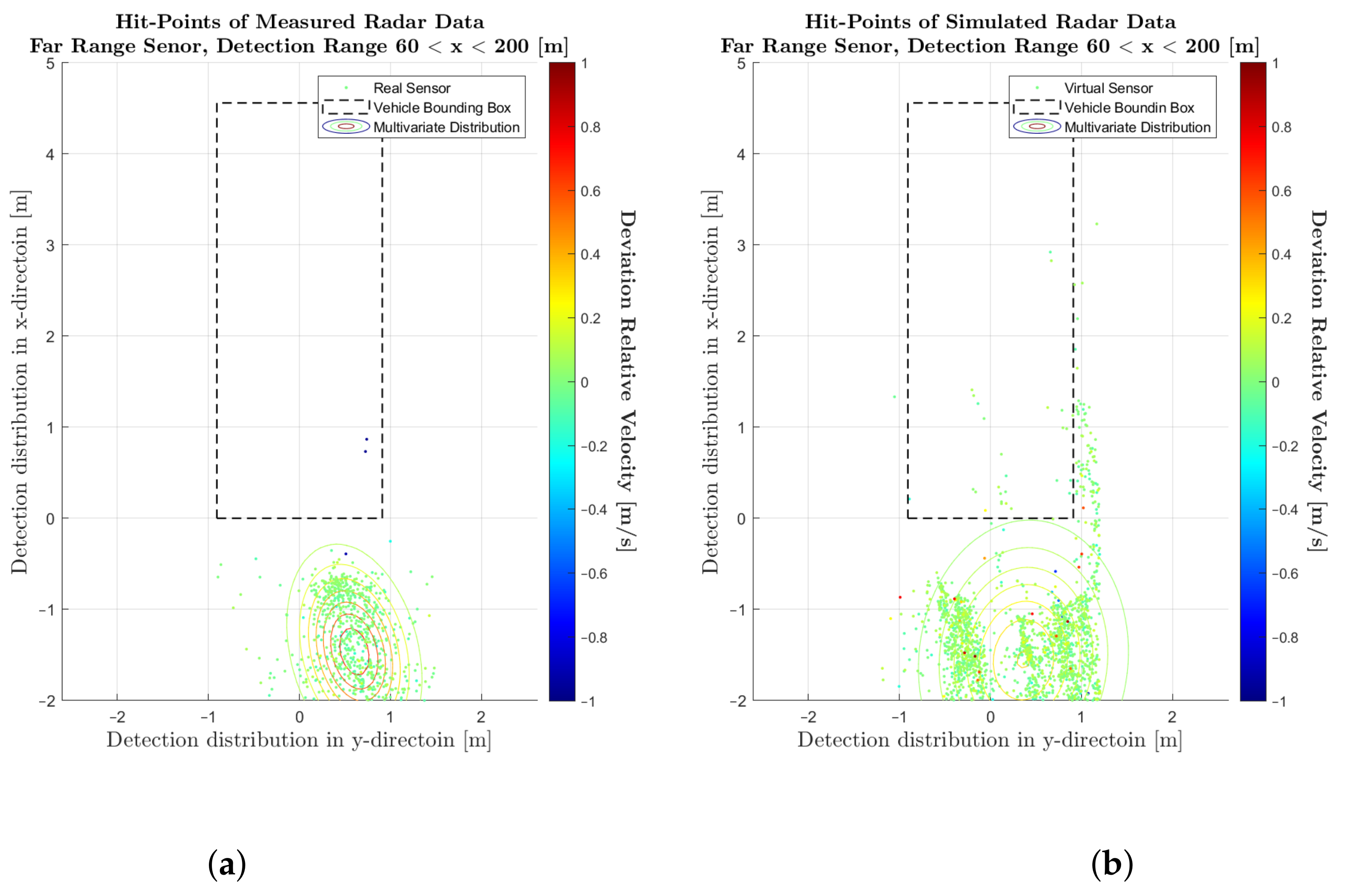

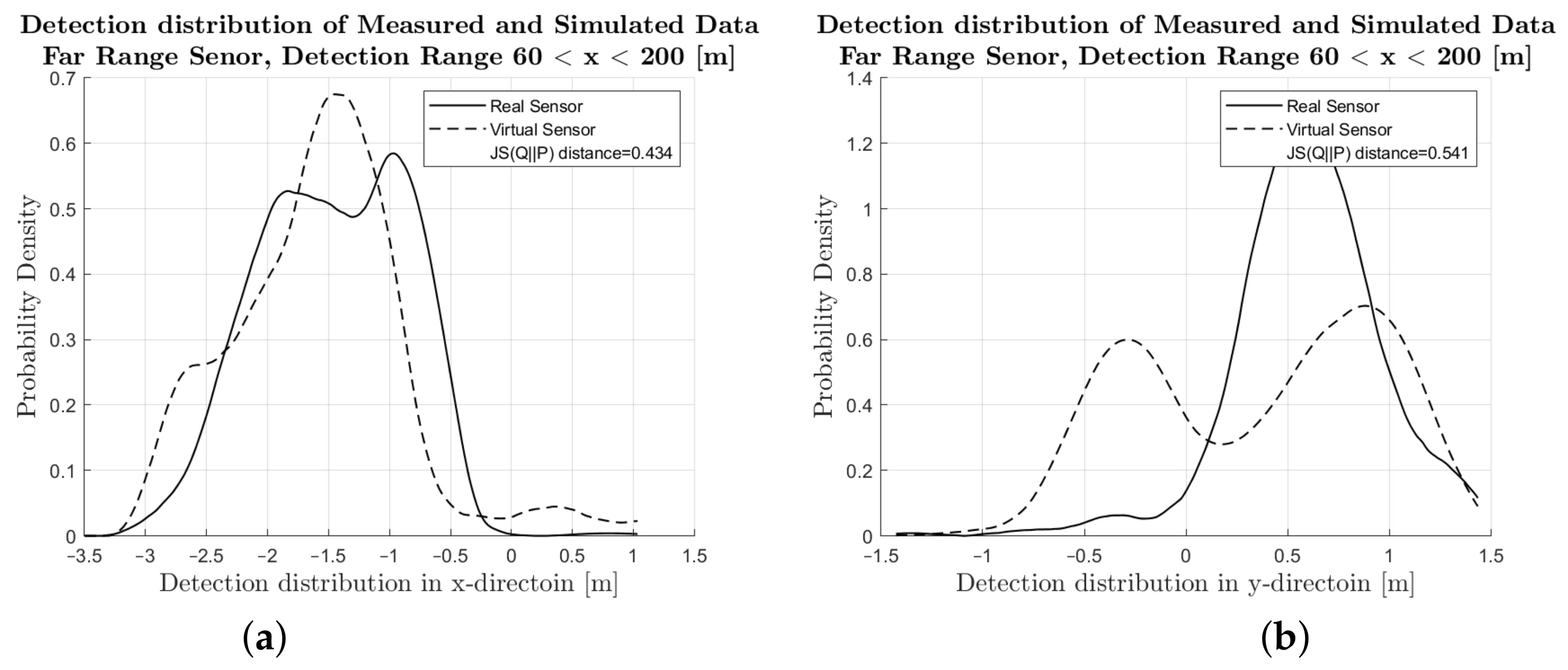

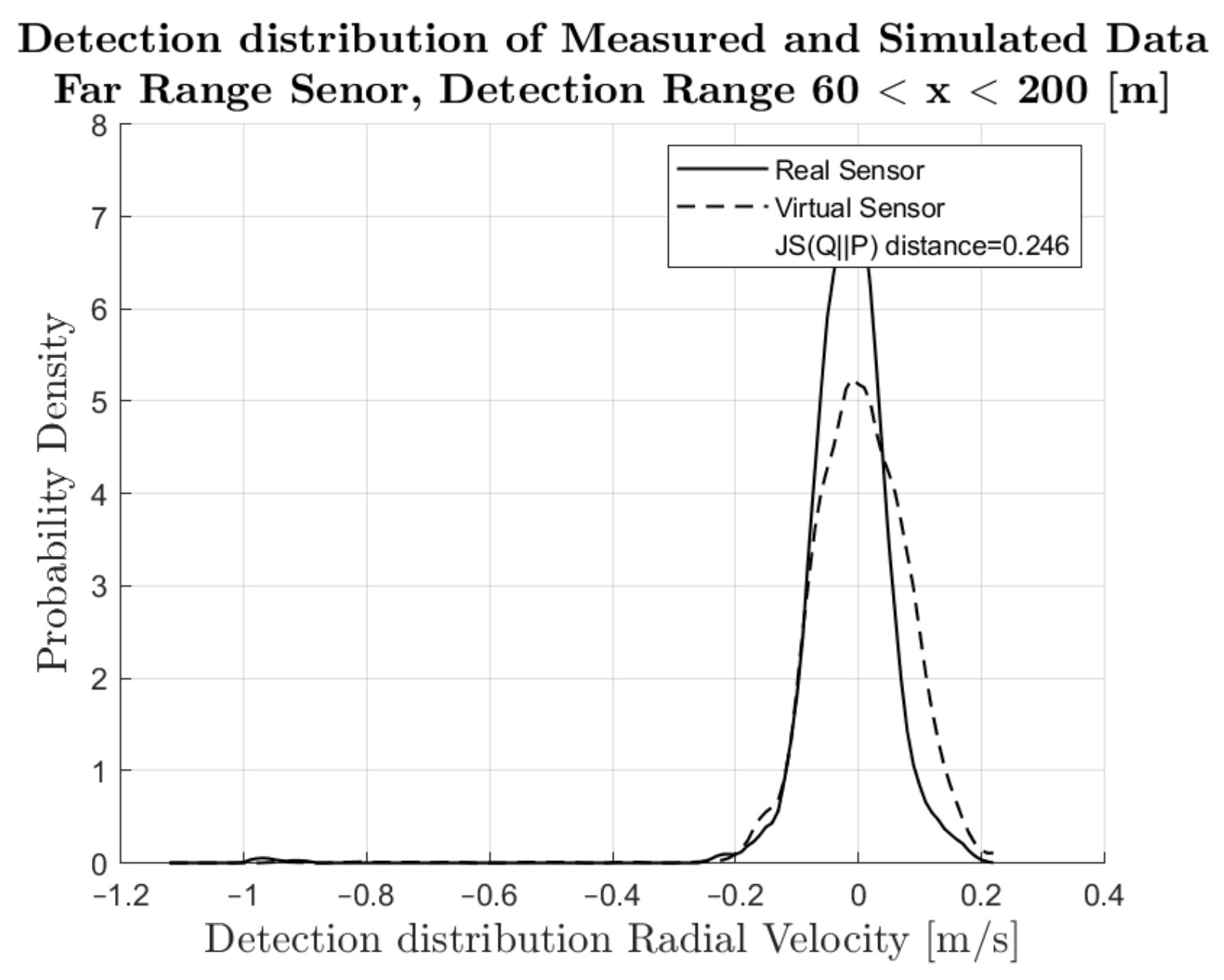

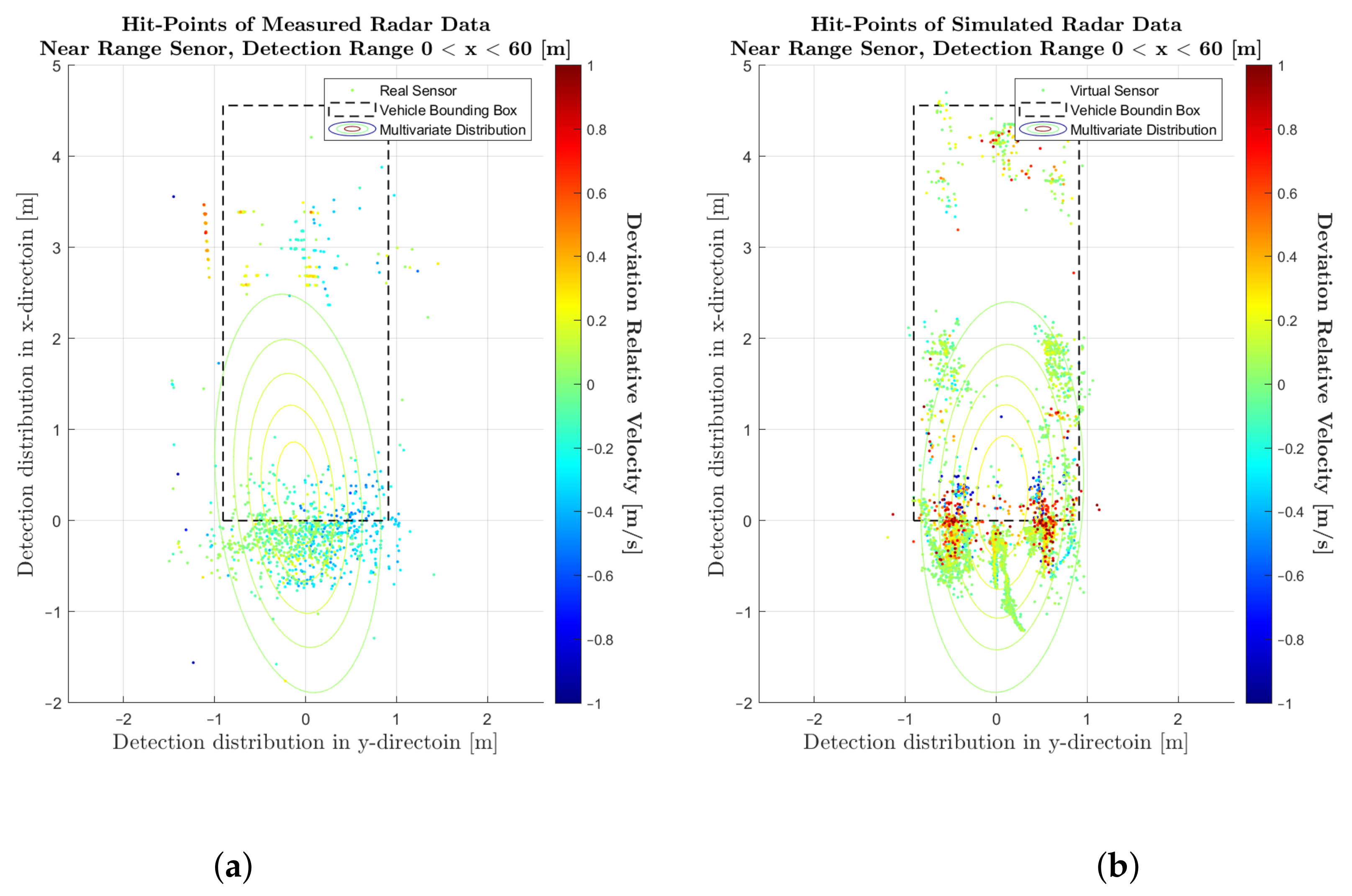

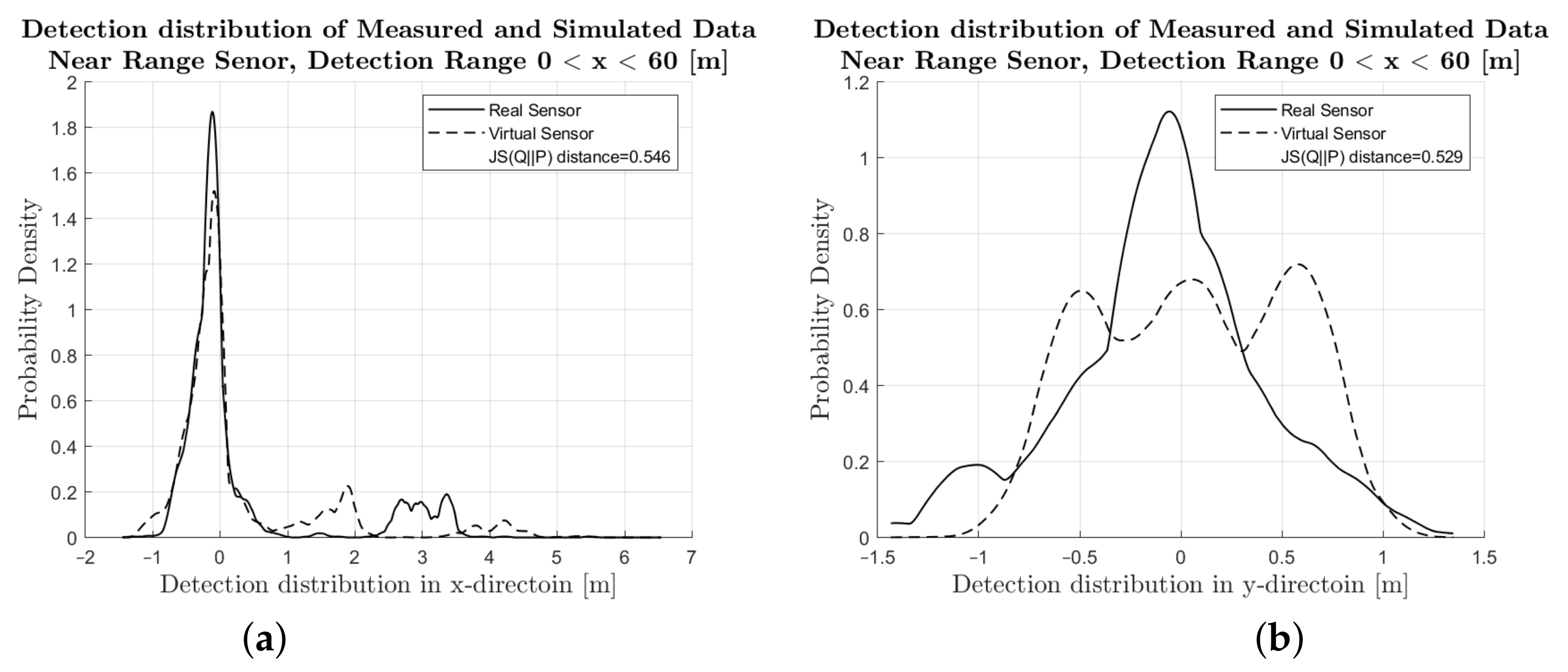

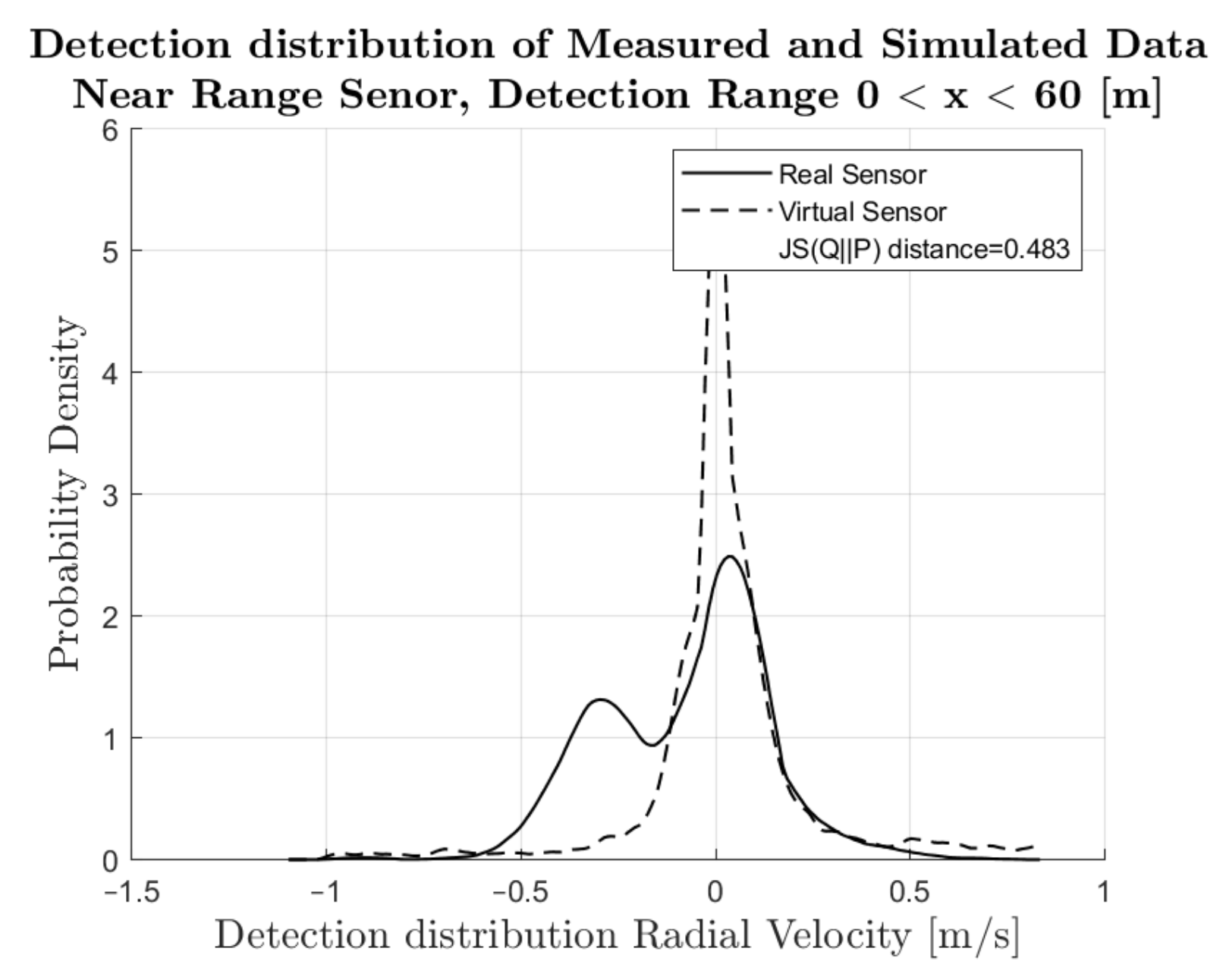

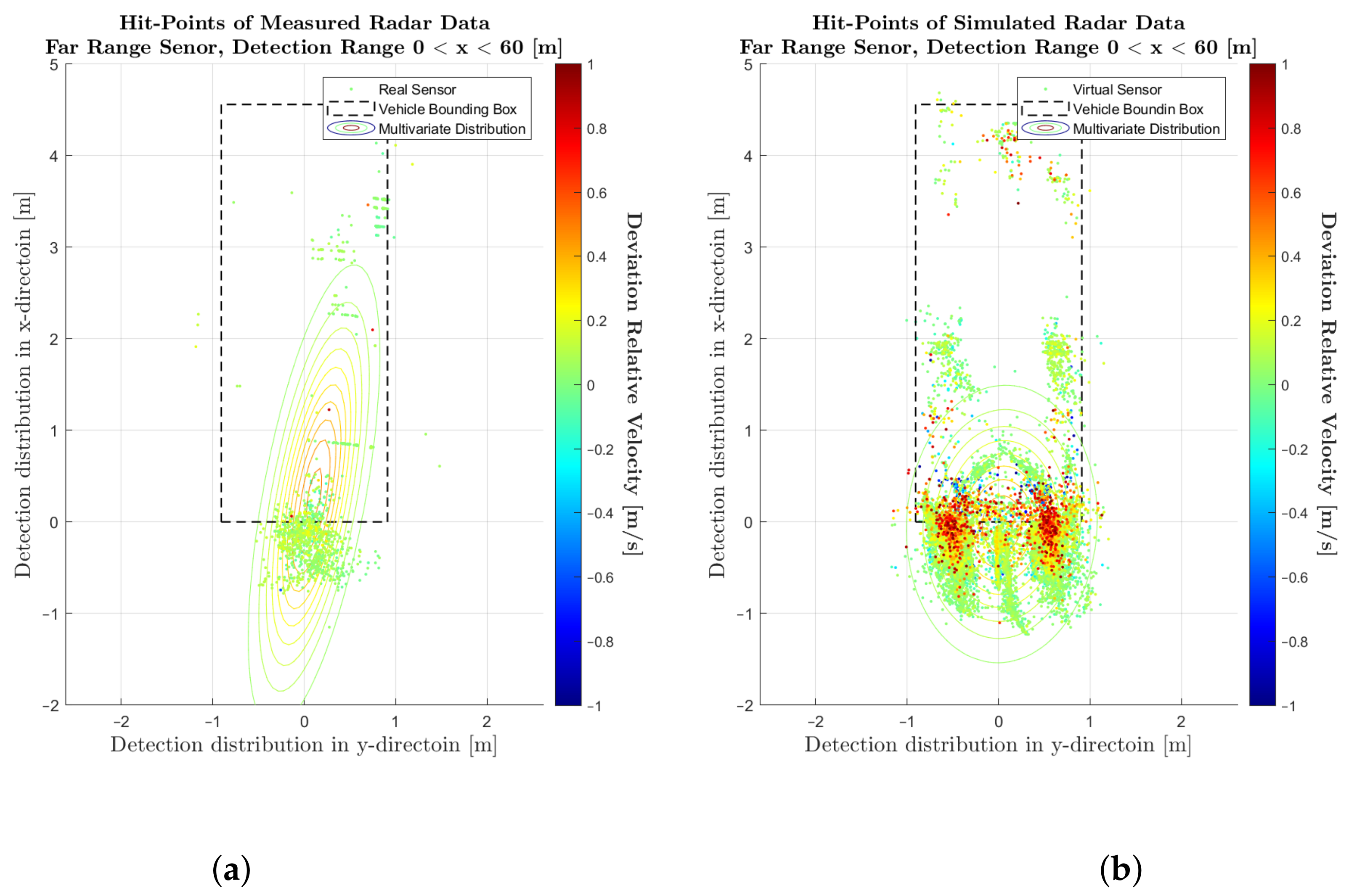

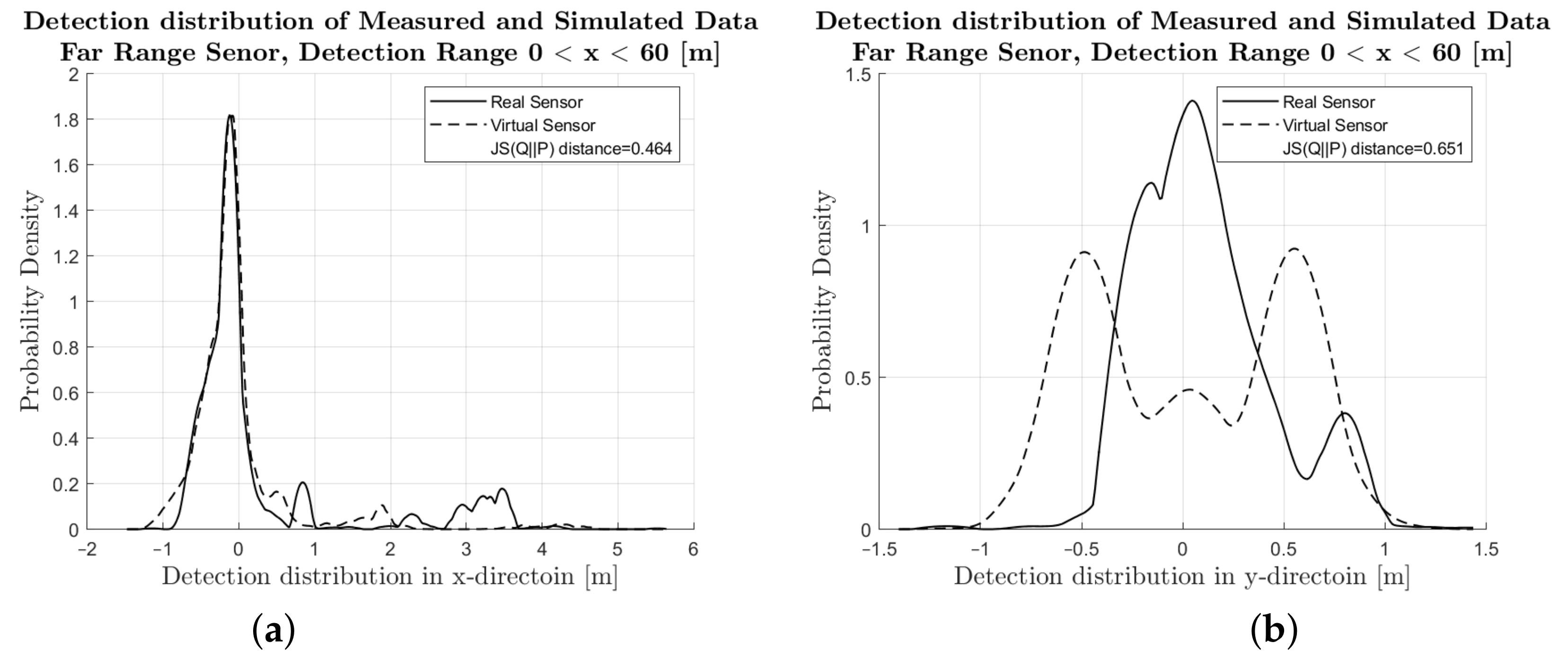

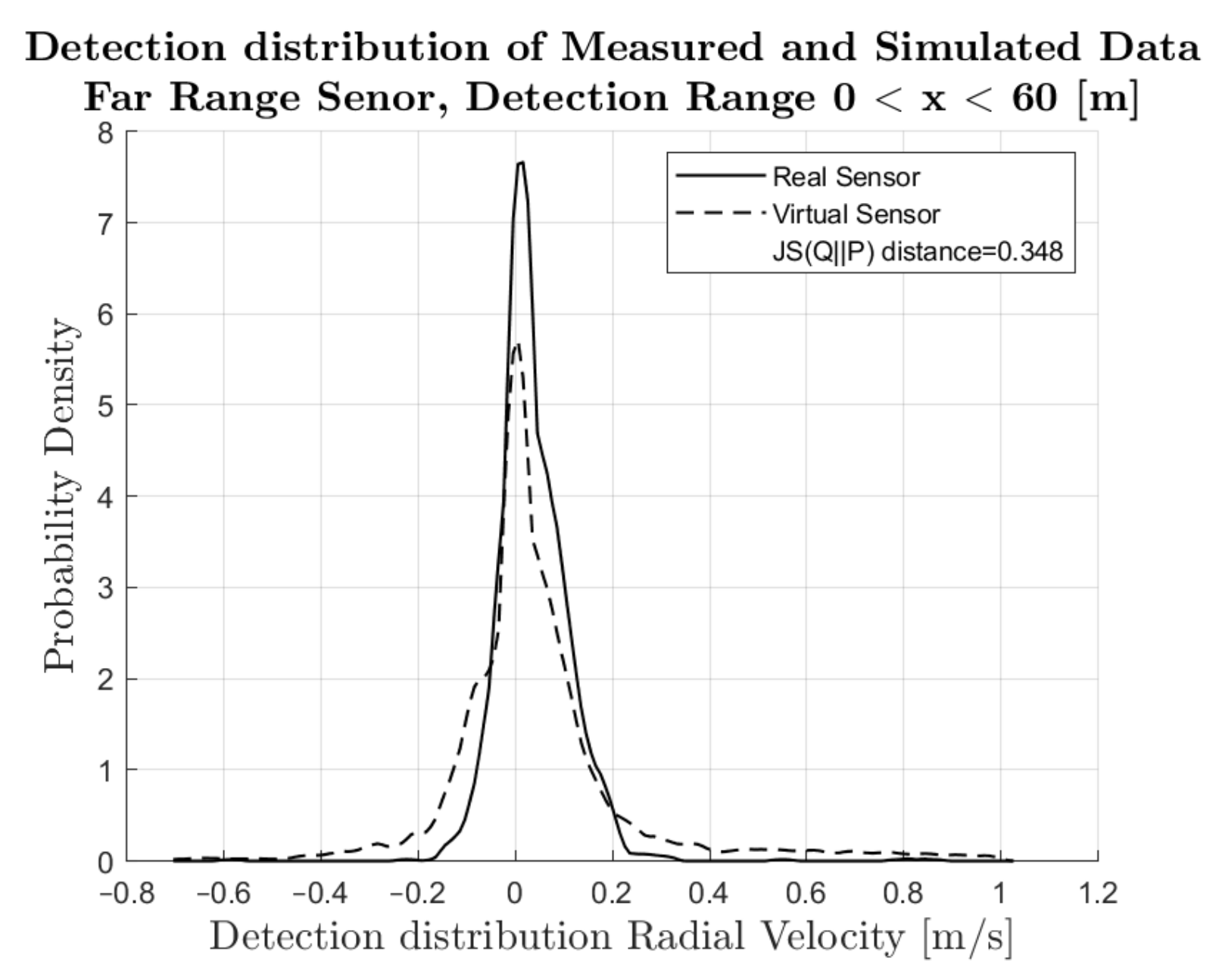

4.1. Comparison of Simulated and Measured Radar Signals

4.2. Performance Metrics

5. Discussion

Limitations

- Limitations for dynamic objects: Since the UHD map in the simulation did not include any static objects, such as bridges, traffic signs, roadside barriers or vegetation, we focused only on dynamic objects. The method can be extended to static objects if the ground truth is annotated in the virtual sensor data.

- Limitations for the investigated radar phenomena: Here, we focused on one specific radar-related phenomenon, the rapid fluctuation of the measured RCS over the azimuth angle. Other phenomena described in [26], such as multipath propagation and separability, were not covered, since the real-world driving tests had limitations that were only detected afterwards. The method can be extended to other phenomena, provided that suitable driving scenarios and performance criteria are defined.

- Limitations of specific benchmark results: Since no parameter tuning was performed on the IPG RSI model, the results obtained are not a direct indicator of the sensor model's capabilities. However, the method can be used to improve the modelling quality by fine-tuning the model parameters. Only after the best fit has been found is the quality assessment complete, and only then can the model be compared directly with another model.

- Limitations for vehicle contours: According to the literature, the Jensen–Shannon divergence can be extended to a multivariate space with independent components, which allows multivariate random variables to be compared and would make it possible to consider the contour of the vehicle. However, this paper focused on developing the methodology, and the data on which the results are based included only one type of target vehicle. Hence, differences between the rear walls of different vehicles cannot be explicitly taken into account.
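The comparison of multivariate random variables mentioned in the last point can be sketched by binning several quantities jointly, for example azimuth angle and RCS, and treating the flattened joint histogram as one discrete distribution. This is a generic illustration, not the extension cited in the literature: it does not rely on an independence assumption, and the choice of variables, the bin counts and the function name `joint_js_distance_percent` are hypothetical.

```python
import numpy as np


def joint_js_distance_percent(measured, simulated, bins=10):
    """Jensen–Shannon distance (percent) between two multivariate samples.

    measured, simulated: arrays of shape (n_samples, n_dims), e.g. columns
    (azimuth_deg, rcs_dbsm). Both are binned on one shared joint grid and the
    flattened joint histograms are compared as discrete distributions.
    """
    measured = np.asarray(measured, dtype=float)
    simulated = np.asarray(simulated, dtype=float)
    if measured.ndim != 2 or simulated.ndim != 2 or measured.shape[1] != simulated.shape[1]:
        raise ValueError("expected two 2-D arrays with the same number of columns")
    pooled = np.vstack([measured, simulated])
    # One (min, max) range per dimension, taken from the pooled data.
    ranges = [(pooled[:, d].min(), pooled[:, d].max()) for d in range(pooled.shape[1])]
    p, edges = np.histogramdd(measured, bins=bins, range=ranges)
    q, _ = np.histogramdd(simulated, bins=edges)  # reuse exactly the same grid
    p = p.ravel() / p.sum()
    q = q.ravel() / q.sum()
    m = 0.5 * (p + q)  # mixture distribution over all joint bins

    def kl_to_mixture(a):
        mask = a > 0  # empty bins contribute nothing
        return np.sum(a[mask] * np.log2(a[mask] / m[mask]))

    divergence = 0.5 * kl_to_mixture(p) + 0.5 * kl_to_mixture(q)
    return 100.0 * float(np.sqrt(max(divergence, 0.0)))
```

The number of joint bins grows multiplicatively with every added dimension, so the histograms become sparse quickly; with short measurement runs, coarser bins per dimension are usually needed for a meaningful comparison.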

6. Summary

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Soteropoulos, A.; Pfaffenbichler, P.; Berger, M.; Emberger, G.; Stickler, A.; Dangschat, J.S. Scenarios of Automated Mobility in Austria: Implications for Future Transport Policy. Future Transp. 2021, 1, 747–764.

- DESTATIS. Verkehr. Verkehrsunfälle. In Technical Report Fachserie 8 Reihe 7; Statistisches Bundesamt: Wiesbaden, Germany, 2020; p. 47.

- Dingus, T.A.; Guo, F.; Lee, S.; Antin, J.F.; Perez, M.; Buchanan-King, M.; Hankey, J. Driver crash risk factors and prevalence evaluation using naturalistic driving data. Proc. Natl. Acad. Sci. USA 2016, 113, 2636–2641.

- Tirachini, A.; Antoniou, C. The economics of automated public transport: Effects on operator cost, travel time, fare and subsidy. Econ. Transp. 2020, 21, 100151.

- Winner, H.; Hakuli, S.; Lotz, F.; Singer, C. (Eds.) Handbuch Fahrerassistenzsysteme: Grundlagen, Komponenten und Systeme für Aktive Sicherheit und Komfort; ATZ-MTZ-Fachbuch Book Series; Springer: Berlin/Heidelberg, Germany, 2015.

- Kalra, N.; Paddock, S.M. Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transp. Res. Part A Policy Pract. 2016, 94, 182–193.

- Szalay, Z. Next Generation X-in-the-Loop Validation Methodology for Automated Vehicle Systems. IEEE Access 2021, 9, 35616–35632.

- Yonick, G. New Assessment/Test Method for Automated Driving (NATM): Master Document (Working Documents). 2021. Available online: https://unece.org/sites/default/files/2021-04/ECE-TRANS-WP29-2021-61e.pdf (accessed on 18 January 2022).

- ISO 26262-2:2018; Road Vehicles—Functional Safety—Part 2: Management of Functional Safety. International Organization for Standardization: Geneva, Switzerland, 2018.

- Schlager, B.; Muckenhuber, S.; Schmidt, S.; Holzer, H.; Rott, R.; Maier, F.M.; Saad, K.; Kirchengast, M.; Stettinger, G.; Watzenig, D.; et al. State-of-the-Art Sensor Models for Virtual Testing of Advanced Driver Assistance Systems/Autonomous Driving Functions. SAE Int. J. Connect. Autom. Veh. 2020, 3, 233–261.

- Cao, P.; Wachenfeld, W.; Winner, H. Perception–sensor modeling for virtual validation of automated driving. IT Inf. Technol. 2015, 57, 243–251.

- Chen, S.; Chen, Y.; Zhang, S.; Zheng, N. A Novel Integrated Simulation and Testing Platform for Self-Driving Cars With Hardware in the Loop. IEEE Trans. Intell. Veh. 2019, 4, 425–436.

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140.

- Safety First for Automated Driving. 2019. Available online: https://group.mercedes-benz.com/dokumente/innovation/sonstiges/safety-first-for-automated-driving.pdf (accessed on 3 March 2022).

- Maier, M.; Makkapati, V.P.; Horn, M. Adapting Phong into a Simulation for Stimulation of Automotive Radar Sensors. In Proceedings of the 2018 IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Munich, Germany, 15–17 April 2018; pp. 1–4.

- Slavik, Z.; Mishra, K.V. Phenomenological Modeling of Millimeter-Wave Automotive Radar. In Proceedings of the 2019 URSI Asia-Pacific Radio Science Conference (AP-RASC), New Delhi, India, 9–15 March 2019; pp. 1–4.

- Cao, P. Modeling Active Perception Sensors for Real-Time Virtual Validation of Automated Driving Systems. Ph.D. Thesis, Technische Universität Darmstadt, Darmstadt, Germany, 2018.

- Schaermann, A. Systematische Bedatung und Bewertung Umfelderfassender Sensormodelle. Ph.D. Thesis, Technische Universität München, München, Germany, 2019.

- Gubelli, D.; Krasnov, O.A.; Yarovyi, O. Ray-tracing simulator for radar signals propagation in radar networks. In Proceedings of the 2013 European Radar Conference, Nuremberg, Germany, 9–11 October 2013; pp. 73–76.

- Anderson, H. A second generation 3-D ray-tracing model using rough surface scattering. In Proceedings of the Vehicular Technology Conference, Atlanta, GA, USA, 28 April–1 May 1996; pp. 46–50.

- Sargent, R.G. Verification and validation of simulation models. In Proceedings of the 2010 Winter Simulation Conference, Baltimore, MD, USA, 5–8 December 2010; pp. 166–183.

- Oberkampf, W.L.; Trucano, T.G. Verification and validation benchmarks. Nucl. Eng. Des. 2008, 238, 716–743.

- Roth, E.; Dirndorfer, T.J.; von Neumann-Cosel, K.; Gänslmeier, T.; Kern, A.; Fischer, M.O. Analysis and Validation of Perception Sensor Models in an Integrated Vehicle and Environment Simulation. In Proceedings of the 22nd International Technical Conference on the Enhanced Safety of Vehicles, Washington, DC, USA, 13–16 June 2011.

- Holder, M.; Rosenberger, P.; Winner, H.; D’hondt, T.; Makkapati, V.P.; Maier, M.; Schreiber, H.; Magosi, Z.; Slavik, Z.; Bringmann, O.; et al. Measurements revealing Challenges in Radar Sensor Modeling for Virtual Validation of Autonomous Driving. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2616–2622.

- Tihanyi, V.; Tettamanti, T.; Csonthó, M.; Eichberger, A.; Ficzere, D.; Gangel, K.; Hörmann, L.B.; Klaffenböck, M.A.; Knauder, C.; Luley, P.; et al. Motorway Measurement Campaign to Support R&D Activities in the Field of Automated Driving Technologies. Sensors 2021, 21, 2169.

- European Initiative to Enable Validation for Highly Automated Safe and Secure Systems. 2016–2019. Available online: https://www.enable-s3.eu (accessed on 20 December 2021).

- Szalay, Z.; Hamar, Z.; Simon, P. A Multi-layer Autonomous Vehicle and Simulation Validation Ecosystem Axis: ZalaZONE. In Intelligent Autonomous Systems 15; Strand, M., Dillmann, R., Menegatti, E., Ghidoni, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 954–963.

- CAPS-ACC Technical Reference Guide. 2013. Available online: https://ccc.dewetron.com/dl/52af1137-c874-4439-8ba0-7770d9c49862 (accessed on 12 January 2022).

- An Introduction to GNSS, GPS GLONASS BeiDou Galileo and Other Global Navigation Satellite Systems. 2015. Available online: https://novatel.com/an-introduction-to-gnss/chapter-5-resolving-errors/real-time-kinematic-rtk (accessed on 18 January 2022).

- IPG CarMaker. Reference Manual (V 8.1.1); IPG Automotive GmbH: Karlsruhe, Germany, 2019.

- Wellershaus, C. Performance Assessment of a Physical Sensor Model for Automated Driving. Master’s Thesis, Graz University of Technology, Graz, Austria, 2021.

- Wang, X.; Challa, S.; Evans, R. Gating techniques for maneuvering target tracking in clutter. IEEE Trans. Aerosp. Electron. Syst. 2002, 38, 1087–1097.

- Oberkampf, W.; Roy, C. Verification and Validation in Scientific Computing; Cambridge University Press: Cambridge, UK, 2010.

- Keimel, C. Design of Video Quality Metrics with Multi-Way Data Analysis—A Data Driven Approach; Springer: Berlin/Heidelberg, Germany, 2015.

- Skolnik, M.I. Introduction to Radar Systems, 3rd ed.; McGraw-Hill: New York, NY, USA, 2001.

- Maupin, K.; Swiler, L.; Porter, N. Validation Metrics for Deterministic and Probabilistic Data. J. Verif. Valid. Uncertain. Quantif. 2019, 3, 031002.

- Lin, J. Divergence measures based on the Shannon entropy. IEEE Trans. Inf. Theory 1991, 37, 145–151.

- Endres, D.; Schindelin, J. A new metric for probability distributions. IEEE Trans. Inf. Theory 2003, 49, 1858–1860.

| Data Level / Modeling Approach | Deterministic | Statistical | Field Propagation |
|---|---|---|---|
| Object list | o | o | |
| Low-level detection | (o) | o | |

| Evaluated Variable | JS Distance [%] |
|---|---|
| | 54.8 |
| | 53.1 |
| | 51 |

| Evaluated Variable | JS Distance [%] |
|---|---|
| | 46.5 |
| | 65.1 |
| | 34.2 |

| Evaluated Variable | JS Distance [%] |
|---|---|
| | 44.1 |
| | 52.8 |
| | 25.6 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Magosi, Z.F.; Wellershaus, C.; Tihanyi, V.R.; Luley, P.; Eichberger, A. Evaluation Methodology for Physical Radar Perception Sensor Models Based on On-Road Measurements for the Testing and Validation of Automated Driving. Energies 2022, 15, 2545. https://doi.org/10.3390/en15072545

Chicago/Turabian style: Magosi, Zoltan Ferenc, Christoph Wellershaus, Viktor Roland Tihanyi, Patrick Luley, and Arno Eichberger. 2022. "Evaluation Methodology for Physical Radar Perception Sensor Models Based on On-Road Measurements for the Testing and Validation of Automated Driving" Energies 15, no. 7: 2545. https://doi.org/10.3390/en15072545
