Abstract

As the popularity of electric vehicles (EVs) and smart grids continues to rise, so does the demand for batteries. In battery-powered energy storage systems, the battery management system (BMS) is crucial. It provides key functions such as battery state estimation (including state of charge, state of health, battery safety, and thermal management) and cell balancing, and its primary role is to ensure safe battery operation. However, the limited memory and computational capacity of onboard chips make this goal difficult to achieve, as both theory and practical experience show. Given the immense amount of data a battery produces over its operational life, the scientific community is increasingly turning to cloud computing for data storage and analysis. This cloud-based digital solution offers a more flexible and efficient alternative to traditional approaches, which often require significant hardware investment. Machine learning, in turn, is becoming an essential tool for extracting patterns and insights from vast amounts of observational data. The future therefore points towards a cloud-based, artificial intelligence (AI)-enhanced BMS that combines the strengths of physical process models with the versatility of machine learning, notably improving the ability to predict and model long-range dependencies across multiple timescales.

1. Introduction

The transportation sector’s shift towards electrification is crucial for reducing carbon emissions and improving air quality [1], and better battery performance will enhance the benefits of electrifying transportation. Lithium-ion batteries have advanced significantly over the past decade [2], but proper evaluation and management practices are still lacking [3]. The widespread adoption of battery-powered electric vehicles (EVs) has been hindered by numerous challenges, including range anxiety [4] and battery aging [5]. Onboard battery management systems (BMS) provide tools to address these issues by estimating the state of charge (SOC) and state of health (SOH) of the battery and by handling thermal management and cell balancing throughout the system’s operational lifetime [6,7,8]. Over the last decade, the development of sophisticated learning algorithms has brought significant progress towards this objective [9]. However, despite advances in multiphysics and multiscale battery modeling, integrating academic progress seamlessly into existing onboard BMS remains a challenge [10]. The computing capability of the onboard BMS is constrained by factors such as cost, power consumption, and size [11].

Onboard BMS for EV applications must be compact and energy-efficient, which limits the processing power that can be incorporated. Furthermore, the high cost of advanced processors and components can be a significant hurdle, particularly in cost-sensitive automotive applications. Consequently, the BMS is designed to execute essential tasks, such as cell monitoring and balancing, that do not demand extensive computing power. However, the accuracy with which it predicts battery characteristics under real-life operating conditions, such as aging and dynamic environments, is often limited. This is largely because its models are calibrated under controlled laboratory conditions, which may not reflect the complex and varied conditions experienced in the field.

Recent developments in statistical modeling and machine learning offer exciting opportunities to predict cell behavior by distilling key characteristics from immense volumes of multi-fidelity observational data [12,13]. Nonetheless, these advanced learning techniques often require meticulous design and complex execution, so a comprehensive computing solution must be in place before they can be deployed; a cloud-based digital solution may be a viable option [14,15]. In recent years, general-purpose central processing units (CPUs) powering cloud server farms have replaced specialized mainframe processors [16], giving researchers and start-up companies access to public computing resources from commercial providers such as Amazon, Google, and Microsoft [17]. The EV and energy storage industries have also embraced this trend, with companies such as Bosch [18], Panasonic [19], and Huawei [20] launching cloud-based software offered as software as a service (SaaS). For instance, Bosch’s ‘battery in the cloud’ SaaS offering leverages vast amounts of vehicle-fleet data to create digital twins and promises to increase battery life cycles by 20%. Panasonic’s Universal Battery Management Cloud (UBMC) service aims to determine cell states and optimize battery operation. Huawei’s SaaS, on the other hand, offers public cloud computing and storage tailored to EV companies; a purely data-driven model embedded in its cloud monitoring system aims to predict cell faults by uncovering complex patterns in extensive EV battery datasets. On a broader scale, China has established the National Monitoring and Management Platform for New Energy Vehicles (NMMP-NEV) [21], an expansive data platform that provides remote fault diagnosis for over 6 million EVs.

In this review, Section 2 first provides an overview of the functions and techniques used in onboard BMS. Section 3 then examines the key technologies employed in cloud BMS, and Section 4 analyzes artificial intelligence (AI) and machine learning (ML) applications for battery state prediction in detail. Given the rapidly evolving nature of this field, we also offer insights into its current limitations and future directions.

2. Onboard BMS

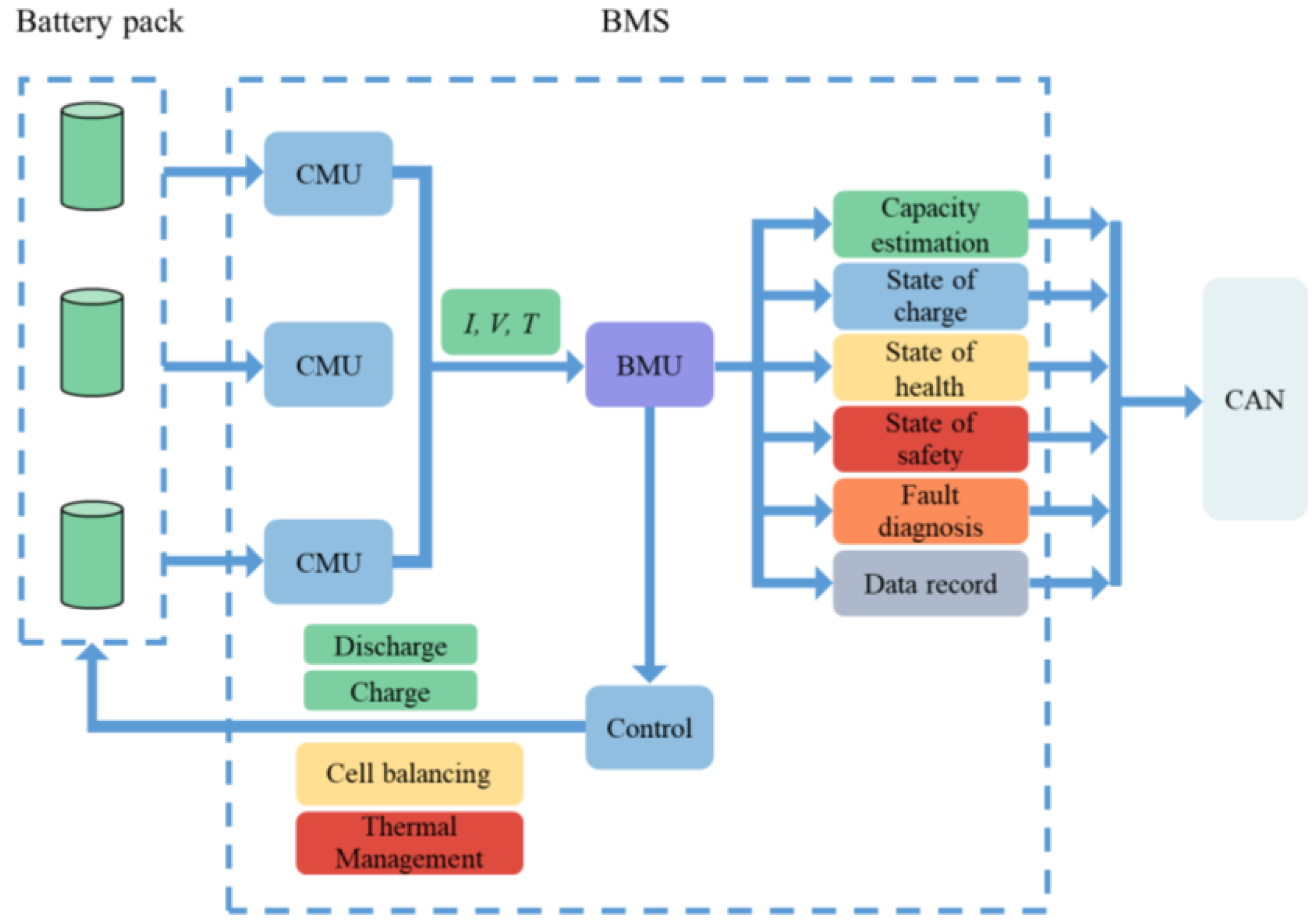

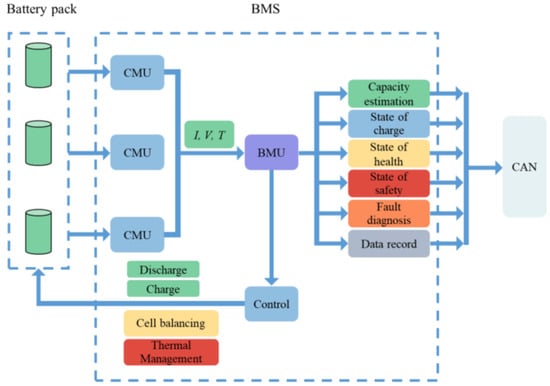

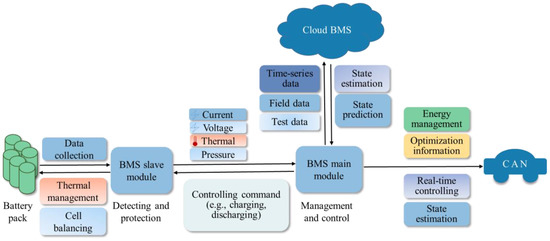

For large-scale EV or grid-scale energy storage applications, the BMS monitors the performance of a battery system, which typically consists of multiple battery cells arranged in a matrix configuration [22,23]. The BMS ensures that the battery system works reliably within a targeted range of voltage and current for a specified duration, even under varying load conditions. By monitoring system operation, the BMS keeps operating conditions under control and stabilizes operation. It processes and analyzes data from various sensors and control algorithms in real time and aims to improve performance and ensure safe operation by adjusting battery parameters [24]. BMS technology is essential for many applications, including EVs, renewable energy systems, and portable electronics, and continues to evolve to meet the demands of increasingly sophisticated battery systems. However, a BMS typically has limited computing power and data storage capacity. At present, the onboard BMS is not a specialized technology for optimizing battery performance but rather a general-purpose computing system that manages the battery system according to a given program. Its main functions are estimation of SOC and SOH, thermal management, and cell balancing (Figure 1). An onboard BMS is a dedicated hardware and software system installed directly within the battery pack of an EV. It monitors and controls parameters such as voltage, current, temperature, and SOC for individual cells or the entire pack. Its primary objectives are to ensure safe and efficient operation, optimize battery performance, extend battery life, and prevent thermal runaway and other hazardous conditions. The onboard BMS also communicates with other vehicle systems and provides real-time information to the driver or user.
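To make the idea of keeping cells within a targeted voltage, current, and temperature window concrete, the following is a minimal sketch of the kind of limit check an onboard BMS might run every sampling cycle. The limit values, fault names, and the contactor/derate policy are hypothetical placeholders chosen for illustration (the temperature bounds reuse the −20 °C to 55 °C EV operating range quoted in Section 2.3); real limits come from the cell datasheet and the vehicle's safety concept.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class SafeOperatingArea:
    """Hypothetical per-cell limits; real values come from the cell datasheet."""
    v_min: float = 2.5     # V
    v_max: float = 4.2     # V
    i_max: float = 100.0   # A, magnitude limit for charge and discharge
    t_min: float = -20.0   # degC
    t_max: float = 55.0    # degC


def check_cell(voltage: float, current: float, temperature: float,
               soa: SafeOperatingArea = SafeOperatingArea()) -> List[str]:
    """Return the names of all violated limits (empty list if the cell is inside its SOA)."""
    faults = []
    if voltage < soa.v_min:
        faults.append("undervoltage")
    if voltage > soa.v_max:
        faults.append("overvoltage")
    if abs(current) > soa.i_max:
        faults.append("overcurrent")
    if temperature < soa.t_min:
        faults.append("undertemperature")
    if temperature > soa.t_max:
        faults.append("overtemperature")
    return faults


def pack_action(cells: Sequence[Tuple[float, float, float]]) -> str:
    """Map per-cell (voltage, current, temperature) readings to a coarse pack action.

    Illustrative policy only: overvoltage or overtemperature opens the contactor,
    any other limit violation derates (reduces) the allowed power.
    """
    faults = [f for v, i, t in cells for f in check_cell(v, i, t)]
    if not faults:
        return "normal"
    if "overvoltage" in faults or "overtemperature" in faults:
        return "open_contactor"
    return "derate"
```

For example, `pack_action([(3.7, 20.0, 25.0), (4.25, 20.0, 25.0)])` returns `"open_contactor"` because the second cell exceeds the assumed 4.2 V ceiling. Such fixed-threshold logic is cheap enough for an onboard chip, which is precisely why it leaves little headroom for the more demanding estimation tasks discussed below.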

Figure 1.

Onboard BMS for field applications (abbreviations: CMU, Communication Management Unit; BMU, Battery Management Unit).

2.1. SOC

A crucial function of an onboard BMS is to determine the SOC precisely. SOC is the ratio of the charge currently stored in the battery to the charge it holds when fully charged, with charge measured in coulombs (or ampere-hours). In battery management, SOC is generally defined as:

$$\mathrm{SOC} = \frac{Q_t}{Q_{\max}} \times 100\%$$

where $Q_t$ is the battery’s capacity in its present state and $Q_{\max}$ is its capacity when fully charged. Ampere-hour (Ah) counting [25] and open-circuit voltage (OCV) methods [26] are commonly used in onboard BMS because of their low computational complexity. However, the accuracy of Ah counting suffers from erroneous SOC initialization, drift caused by current-sensor noise, and variations in battery capacity. Furthermore, the OCV can only be measured accurately when the battery is at rest, so it cannot provide real-time SOC estimates during operation. In BMS for EVs, equivalent circuit models (ECMs) are chiefly used because they demand less computation and fewer inputs than electrochemical models. Using networks of resistors and capacitors, ECMs simulate cell behavior associated with diffusion and charge-transfer processes [27], which makes them a pragmatic choice for real-time operation and management of onboard battery systems in EVs. Early and typical examples of ECMs include the Rint, Randles, and Thevenin models. Despite their computational efficiency, most ECMs have limited accuracy in predicting battery characteristics, particularly under complex loading conditions and cell aging, because their parameters are identified under laboratory conditions and often do not incorporate multifrequency impedance measurements [28,29,30].
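As a rough illustration of how Ah counting and an ECM fit together, the sketch below simulates a first-order Thevenin model (an ohmic resistor R0 in series with one RC pair) and tracks SOC by Coulomb counting. All parameter values and the linear OCV-SOC curve are hypothetical placeholders, not values from the cited studies; a real BMS would use a measured OCV table and parameters identified for the specific cell.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class TheveninParams:
    """Hypothetical parameters of a first-order Thevenin (R0 + one RC pair) model."""
    capacity_ah: float = 2.0   # Q_max, full-charge capacity in Ah
    r0: float = 0.05           # ohmic resistance, ohm
    r1: float = 0.02           # polarization resistance, ohm
    c1: float = 2000.0         # polarization capacitance, F


def ocv(soc: float) -> float:
    """Placeholder linear OCV-SOC curve; real curves are measured for each cell chemistry."""
    return 3.0 + 1.2 * soc


def simulate(currents: Sequence[float], dt: float, soc0: float,
             p: TheveninParams = TheveninParams()) -> Tuple[List[float], List[float]]:
    """Run Ah counting plus the Thevenin ECM over a current profile.

    currents: cell current in A for each time step (positive = discharge).
    Returns the SOC and the terminal voltage after every step.
    """
    soc, v1 = soc0, 0.0
    decay = math.exp(-dt / (p.r1 * p.c1))   # RC time constant tau = R1 * C1
    socs, volts = [], []
    for i in currents:
        # Ah counting: SOC(k+1) = SOC(k) - I * dt / (3600 * Q_max)
        soc -= i * dt / (3600.0 * p.capacity_ah)
        # Exact RC-branch update for a current held constant over the step
        v1 = v1 * decay + p.r1 * (1.0 - decay) * i
        # Terminal voltage = OCV - ohmic drop - polarization drop
        volts.append(ocv(soc) - p.r0 * i - v1)
        socs.append(soc)
    return socs, volts
```

For example, `simulate([1.0] * 1800, 1.0, 1.0)` discharges the assumed 2 Ah cell at 1 A for 30 minutes and ends at SOC 0.75 with a terminal voltage of about 3.83 V. Running the same profile with a constant 0.01 A sensor offset ends at SOC 0.7475: because Ah counting integrates current, even a small bias accumulates over time, which is the drift problem noted above.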

The SOC is a crucial parameter in field applications because it indicates the remaining energy in the battery system. Precise, real-time SOC monitoring is necessary for several reasons:

- (a) Range estimation: SOC is a primary factor in determining the remaining driving range of an EV. By continuously monitoring the SOC, drivers can plan their trips better and avoid range anxiety.

- (b) Optimal battery performance: Maintaining the battery within an optimal SOC range helps preserve its health and prolong its life. Operating the battery at extreme SOC levels (either too high or too low) can accelerate degradation and reduce its overall lifespan.

- (c) Charging management: Knowledge of the current SOC is crucial for optimizing charging strategies. It allows better estimation of the required charging time and enables smart charging algorithms that balance the charging load on the grid and minimize charging costs.

- (d) Energy management: SOC information is vital for managing energy consumption efficiently in EVs. The onboard energy management system uses SOC data to optimize power distribution among vehicle systems, ensuring efficient use of energy and enhancing overall performance.

- (e) Diagnostics and prognostics: Monitoring SOC over time, together with other battery parameters, can provide valuable insight into the battery’s health and aid early detection of potential issues. This can help prevent unexpected battery failures and enable predictive maintenance, minimizing downtime and maintenance costs.

2.2. SOH

The SOH describes the capacity of a fully charged battery relative to its nominal capacity when it was new. A battery’s SOH starts at 100% upon manufacture and declines to 80% at its end of life (EOL); in the battery manufacturing industry, EOL is typically defined as the point at which the actual full-charge capacity has fallen to 80% of its initial nominal value. The number of charge/discharge cycles remaining until the battery reaches EOL is the battery’s remaining useful life (RUL). Accordingly, SOH can be expressed as:

$$\mathrm{SOH} = \frac{Q_{\max}}{Q_{\mathrm{nom}}} \times 100\%$$

where $Q_{\max}$ is the battery’s present full-charge capacity and $Q_{\mathrm{nom}}$ is its nominal capacity when brand new.
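The SOH definition and the 80% EOL criterion translate directly into a simple RUL estimate. The sketch below computes SOH from capacities and extrapolates a least-squares line through SOH-versus-cycle data to the 80% threshold. The linear-fade assumption is ours for illustration only; real capacity fade is often nonlinear (for example, it can accelerate late in life), which is one reason the data-driven methods discussed below are used instead.

```python
import math
from typing import Sequence, Tuple


def soh(q_max_ah: float, q_nom_ah: float) -> float:
    """SOH in percent: present full-charge capacity over nominal (as-new) capacity."""
    return 100.0 * q_max_ah / q_nom_ah


def linear_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = a + b * x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def estimate_rul(cycles: Sequence[float], capacities_ah: Sequence[float],
                 q_nom_ah: float, eol_soh: float = 80.0) -> float:
    """Cycles left until SOH reaches eol_soh, assuming capacity fades linearly with cycles."""
    a, b = linear_fit(cycles, [soh(q, q_nom_ah) for q in capacities_ah])
    if b >= 0.0:
        return math.inf                     # no measurable fade in the observed window
    eol_cycle = (eol_soh - a) / b           # cycle at which the fitted line hits the EOL SOH
    return max(0.0, eol_cycle - cycles[-1])
```

With hypothetical capacity checks of 2.00, 1.96, 1.92, and 1.88 Ah at cycles 0, 100, 200, and 300 for a 2.0 Ah cell, SOH falls by 2 percentage points per 100 cycles, so the fitted line reaches 80% at cycle 1000 and `estimate_rul` returns about 700 cycles.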

Battery degradation is a complex issue involving numerous electrochemical reactions in the anode, cathode, and electrolyte [31,32]. Operating conditions have a critical impact on the degradation process and ultimately on battery lifetime. Precisely predicting the remaining battery lifespan under a variety of operating conditions is essential for reliable performance and timely maintenance, as well as for battery second-life applications [33]. Onboard SOH estimation determines the health of a battery system during its operating lifetime. Battery capacity frequently serves as the health indicator because it reflects the battery’s energy storage capability and directly determines its remaining operating time and overall lifespan. Computational tools have provided insights into fundamental battery physics, but despite advances in first-principles and atomistic calculations, they cannot accurately predict battery performance under realistic conditions. As with online SOC estimation, the most commonly used onboard SOH estimation methods are ECMs of limited accuracy. Data-driven approaches offer better nonlinear fitting capability [34,35,36,37], but their computational complexity makes most existing advanced methods difficult to deploy widely and practically. This is likely due to the substantial computational resources needed to estimate SOH accurately, especially for a battery exposed to various operating and environmental conditions over its lifetime. In addition, continuously monitoring and analyzing battery performance can place a significant burden on the vehicle’s onboard system and affect overall vehicle performance.

In field applications such as EVs, battery SOH indicates the battery’s overall condition and its remaining useful life. Precise and timely SOH evaluation is crucial for several reasons:

- (a) Battery life prediction: Monitoring SOH allows for better estimation of the battery’s RUL, enabling vehicle owners and fleet managers to plan for battery replacements or upgrades, thus minimizing unexpected downtime and associated costs.

- (b) Performance optimization: As a battery degrades, its capacity and power capabilities decrease, affecting the vehicle’s range, acceleration, and overall performance. By keeping track of the battery’s SOH, the energy management system can optimize the power distribution among various vehicle systems, ensuring consistent performance and preserving battery life.

- (c) Safety assurance: A deteriorating battery may pose safety risks, such as an increased probability of thermal runaway events, which can lead to fires or explosions. Monitoring SOH can help identify potential safety hazards early, allowing for preventive measures to be taken in case of anomalous capacity degradation.

- (d) Charging management: Knowledge of the battery’s SOH is vital for adapting charging strategies that account for its current condition. As battery health declines, charging algorithms can be adjusted to minimize further degradation and maintain safe operation.

- (e) Warranty management: SOH information can be used by manufacturers to manage warranty claims more effectively and ensure that battery performance remains within the specified warranty limits.

- (f) Second-life applications: Accurate SOH assessment can facilitate the identification of batteries suitable for applications in their second life, like stationary energy storage systems, once their performance in EVs has degraded below acceptable levels.

- (g) Residual value estimation: The SOH is a pivotal factor in establishing the residual value of an EV in the used vehicle market, as it directly impacts the battery’s remaining useful life and the vehicle’s overall performance.

2.3. Thermal Management

The thermal management system plays a crucial role in ensuring optimal performance and longevity of the battery system [38]. It aims to maintain an average temperature, specified by the battery manufacturer, that balances performance and life. It should also fulfill the requirements set by the vehicle manufacturer, such as compactness, affordability, reliability, ease of installation, low energy consumption, accessibility for maintenance, compatibility with varying climate conditions, and ventilation where needed [39]. Various methods can be employed: air for temperature regulation and ventilation, liquid for thermal control, insulation for maintaining temperature, and phase change materials for thermal storage, or a combination of these. The approach can be passive, relying on the ambient environment, or active, using an internal source for heating or cooling. The battery’s electronic control unit manages the control strategy.

The battery’s temperature directly affects its discharge power, energy, and charge acceptance during regenerative braking, which in turn affect the vehicle’s energy efficiency and driving experience. Temperature also strongly determines the battery’s lifespan [40]. Batteries should therefore operate within the temperature range that is optimal for their electrochemical processes, as specified by the manufacturer. This range may, however, be narrower than the vehicle’s specified operating range. For instance, the optimal operating temperature window for a lithium-ion battery is typically between 20 °C and 40 °C, whereas the operating temperature range for an EV battery system may extend from as low as −20 °C to as high as 55 °C. Thermal management is therefore crucial for EV applications, for the following reasons:

- (a) Performance optimization: Maintaining optimal temperature ranges for battery cells is essential for achieving peak performance levels, ensuring efficient energy utilization, and extending the driving range of electric vehicles.

- (b) Safety: Effective thermal management helps prevent thermal runaway, which can lead to battery fires or explosions. By closely monitoring and regulating the temperature, potential hazards can be mitigated.

- (c) Battery life extension: Prolonged exposure to extreme temperatures can degrade battery materials, leading to a reduction in overall battery life. Proper thermal management helps maintain the battery within its optimal operating temperature range, thus prolonging its lifespan.

- (d) Charging efficiency: Effective thermal management enables faster and more efficient charging of batteries by minimizing temperature-related inefficiencies and maintaining safe charging conditions.

- (e) Consistent performance: By maintaining consistent temperature conditions within the battery pack, thermal management systems ensure that the battery’s performance remains stable and predictable, regardless of external environmental factors.
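The interplay between Joule heating, passive heat exchange, and an active cooling strategy can be illustrated with a single-node (lumped) pack thermal model. In the sketch below, the electronic control unit switches a liquid cooler on and off with hysteresis so that the pack stays at the upper end of the 20 °C to 40 °C window. Every number (pack resistance, heat capacity, conductances, coolant temperature, and the 35 °C/40 °C set points) is a hypothetical placeholder, and a single temperature node deliberately ignores the cell-to-cell gradients discussed next.

```python
from typing import List, Tuple


def simulate_pack_temperature(current_a: float, steps: int, dt: float = 1.0,
                              t_init: float = 30.0, t_ambient: float = 35.0,
                              t_coolant: float = 20.0, r_pack: float = 0.05,
                              heat_capacity: float = 3.0e5, g_passive: float = 5.0,
                              g_active: float = 200.0, cool_on: float = 40.0,
                              cool_off: float = 35.0) -> Tuple[List[float], int]:
    """Single-node (lumped) pack thermal model with on/off active liquid cooling.

    heat_capacity is m * c_p of the pack in J/K; g_passive and g_active are thermal
    conductances in W/K to the ambient air and to the coolant loop, respectively.
    Returns the temperature after each step and the number of steps the cooler ran.
    """
    temp, cooler_on, on_steps = t_init, False, 0
    temps = []
    for _ in range(steps):
        # Hysteresis control: switch on above cool_on, off again below cool_off
        if temp > cool_on:
            cooler_on = True
        elif temp < cool_off:
            cooler_on = False
        q_joule = current_a ** 2 * r_pack                # Joule heating, W
        q_passive = g_passive * (temp - t_ambient)       # exchange with ambient air, W
        q_active = g_active * (temp - t_coolant) if cooler_on else 0.0
        # Forward-Euler step of heat_capacity * dT/dt = q_joule - q_passive - q_active
        temp += dt * (q_joule - q_passive - q_active) / heat_capacity
        if cooler_on:
            on_steps += 1
        temps.append(temp)
    return temps, on_steps
```

With a sustained 200 A load (2 kW of heat under these assumptions), the pack climbs from 30 °C, the cooler engages just above 40 °C, and the temperature then cycles between roughly 35 °C and 40 °C over a one-hour run; setting `g_active=0.0` lets it drift past 50 °C. The hysteresis band avoids rapid on/off switching of the pump and chiller.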

At present, EVs do not have temperature information for every cell in the battery pack because of the practical constraints of installing the large number of sensors required. More sensors and wiring increase the pack’s weight and complexity, reducing vehicle efficiency and performance, and their cost can be prohibitive for mass-produced EVs that must remain affordable for consumers. EVs therefore typically rely on strategically placed temperature sensors that provide an overall pack temperature reading rather than individual cell readings. One possible way to improve safe operation is to develop advanced data-driven learning algorithms that leverage time-resolved voltage and current data; however, this comes at a higher computational cost.

2.4. Cell Balancing

The battery balancing system (BBS), a crucial element of EVs, consists of two main components: the balancing circuitry and the control strategy governing the balancing process [41]. To transfer charge between cells and maintain balance, the balancing circuit can be either passive or active [42]. Passive balancing uses resistors to convert the excess energy of a high-charge cell into heat, which is dissipated until the cell’s charge equals that of the low-charge cells. Passive balancing is simple, cost-effective, and easy to implement, but it generates substantial heat and has low balancing efficiency. Active balancing, by contrast, uses energy carriers (such as capacitors, inductors, or transformers) to transfer energy from cells with high SOC to cells with low SOC within the battery pack. Although active balancing is preferred for applications that operate at high temperatures and require rapid balancing, it increases circuit complexity.

Although the architecture of the BBS is important, its balancing control strategy plays an equally critical role in determining the circuit’s overall conversion efficiency and balancing speed [43,44]. Balancing strategies can be classified by the control variable they use, such as SOC, cell voltage, or capacity. Designing a balancing control strategy optimized for a specific battery system and application is vital to ensure efficient and effective balanced operation.
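A voltage-based passive balancing strategy can be sketched in a few lines: on each control cycle, every cell whose voltage exceeds the lowest cell by more than a threshold has its bleed resistor switched on. The simulation below applies this rule to an idle series string; the linear OCV-SOC curve, the 33 Ω bleed resistor, the 2 Ah capacity, and the 5 mV threshold are hypothetical values chosen only to make the behavior visible.

```python
from typing import List, Sequence, Tuple


def ocv(soc: float) -> float:
    """Placeholder linear OCV-SOC curve (V); real curves are measured per chemistry."""
    return 3.0 + 1.2 * soc


def passive_balancing_step(cell_voltages: Sequence[float], threshold_v: float = 0.01) -> List[bool]:
    """Voltage-based control: bleed every cell more than threshold_v above the lowest cell."""
    v_min = min(cell_voltages)
    return [v - v_min > threshold_v for v in cell_voltages]


def balance_at_rest(socs: Sequence[float], capacity_ah: float = 2.0, r_bleed: float = 33.0,
                    threshold_v: float = 0.005, dt: float = 1.0,
                    max_steps: int = 200_000) -> Tuple[List[float], int]:
    """Simulate resistive (passive) balancing of an idle series string.

    Each bled cell discharges through its resistor at I = V / r_bleed; that energy is
    lost as heat. Returns the final SOCs and the number of control steps used.
    """
    socs = list(socs)
    for step in range(max_steps):
        volts = [ocv(s) for s in socs]
        bleed = passive_balancing_step(volts, threshold_v)
        if not any(bleed):
            return socs, step                      # all cells within the voltage threshold
        for k, on in enumerate(bleed):
            if on:
                i_bleed = volts[k] / r_bleed       # bleed current, A
                socs[k] -= i_bleed * dt / (3600.0 * capacity_ah)
    return socs, max_steps
```

Starting from SOCs of 0.80, 0.82, and 0.85, the two higher cells are bled down to within 5 mV of the lowest cell, which is never touched. Under these assumptions this takes on the order of 45 minutes, and all removed charge becomes heat, illustrating the low efficiency and heat generation of passive balancing noted above. An SOC-based strategy would simply replace the voltage comparison with a comparison of estimated SOCs.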

The benefits of cell balancing span several areas, including:

- (a) Capacity utilization: Cell balancing ensures that all cells within a battery pack are utilized to their full capacity, maximizing the overall energy storage and extraction capability. This, in turn, optimizes the vehicle’s driving range and performance.

- (b) Lifespan extension: Imbalances in cell voltages can cause some cells to age faster than others, ultimately reducing the overall battery pack’s lifespan. Cell balancing helps equalize the charge and discharge cycles across all cells, promoting even wear and prolonging the battery pack’s life.

- (c) Safety enhancement: Unbalanced cells can cause overcharging or over-discharging, which may lead to thermal runaway and other safety risks. Cell balancing prevents these issues by ensuring that all cells are charged and discharged within their safe operating limits.

- (d) Performance consistency: Cell imbalances can result in inconsistent performance and reduced efficiency. By maintaining balanced cells, the battery pack can deliver predictable and stable performance, improving the overall driving experience.

- (e) Reduced maintenance: Cell balancing can reduce the frequency of maintenance checks and services. By ensuring uniform cell usage, the system reduces the likelihood of individual cell failures and maintains the overall health of the battery pack. This, in turn, lowers maintenance costs and offers greater convenience to the user.

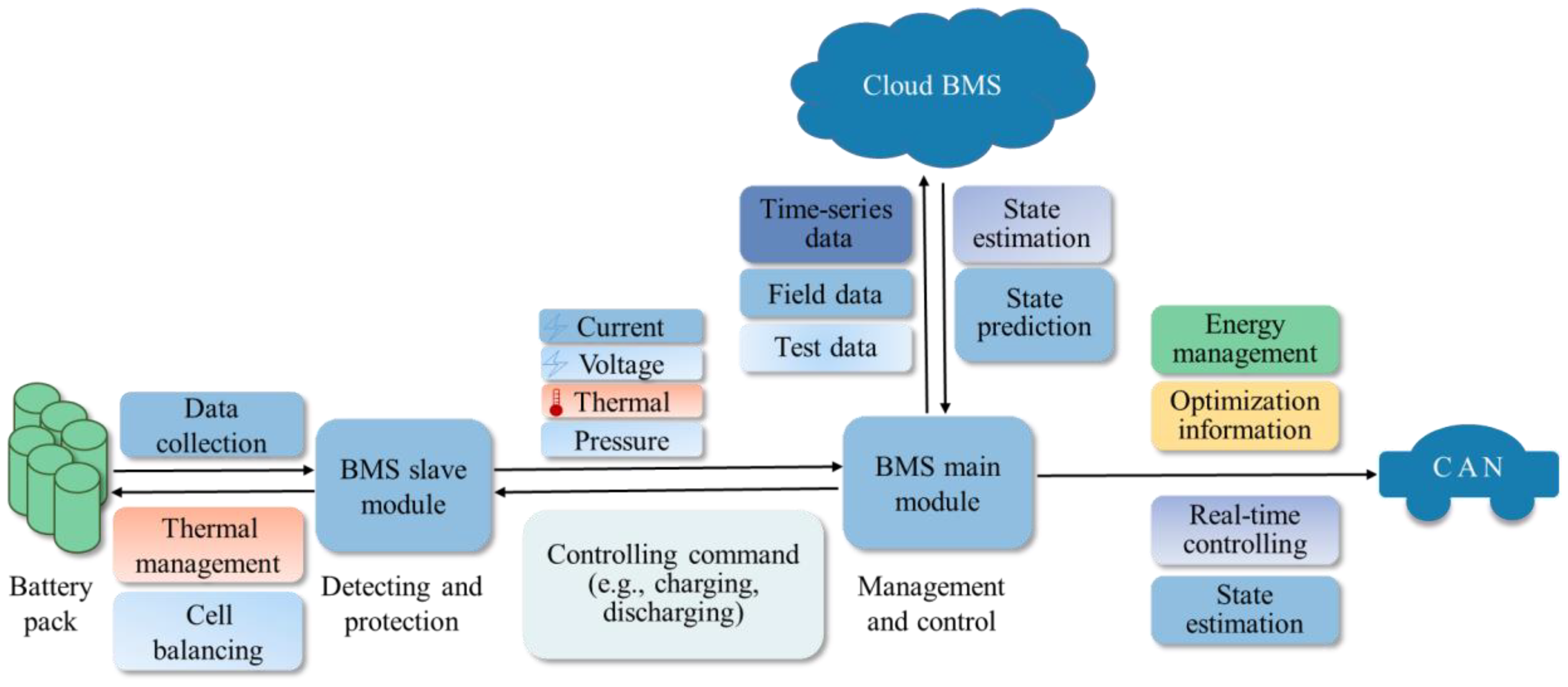

3. Key Components and Technologies of Cloud-BMS

Machine learning has emerged as a powerful tool, but it requires large quantities of high-quality, relevant observational data, and the associated computational load exceeds the capabilities of onboard BMS. A cloud-based BMS (Figure 2) offers a new digital solution because it can process and analyze data more efficiently and flexibly. Sensor measurements can be uploaded to the cloud, where machine learning models continually learn from them and exploit the wealth of information the data contain. A cloud BMS enables remote monitoring, diagnostics, and even predictive maintenance, improving overall battery management and reducing the need for manual inspection or on-site intervention. It can also facilitate fleet management by aggregating data from multiple vehicles or energy storage systems, allowing operators to optimize energy consumption and plan maintenance schedules more efficiently. Additionally, a cloud BMS can deliver over-the-air (OTA) updates to the onboard BMS firmware and algorithms, further enhancing battery performance and extending battery lifespan.

Figure 2.

Cloud-based framework for battery management in EV applications.

3.1. IoT-Devices

With the widespread adoption of Internet of Things (IoT) technology [45], end-use devices can now collect and analyze vast amounts of data across various spatial and temporal scales. Equipped with electronics and network connectivity, these devices play a key role in monitoring and management. As the number of sensors is expected to reach trillions in the near future, integrating data streams of diverse fidelity into real-world applications and battery models is becoming increasingly feasible.

The physical, chemical, and electrochemical performance of batteries can vary significantly with dynamic loading conditions such as current rate, operating voltage, and temperature, so continuous monitoring throughout the operational lifetime is of paramount importance [46]. The onboard BMS transfers sensor measurements from the battery cells to the IoT component over the Controller Area Network (CAN) protocol. To use resources economically while transmitting the large volume of sequential data generated by private and fleet vehicles, the Message Queuing Telemetry Transport (MQTT) protocol [47] provides bidirectional communication between the device and the cloud, and this infrastructure can support millions of IoT devices. Data stored in onboard memory can also be uploaded reliably to the cloud system using TCP/IP protocols. The IoT systems of modern cities provide infrastructure for remote data transmission through IoT actuators and onboard sensors. For a more detailed explanation of next-generation IoT, see [48].
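The CAN-to-cloud path described above can be sketched as two steps: decode a raw CAN frame into engineering units, then package it as a compact MQTT topic and payload. The frame layout (four big-endian 16-bit cell voltages in millivolts per 8-byte frame), the CAN ID scheme, the topic hierarchy, and the vehicle identifier are all hypothetical; real layouts are defined by each manufacturer's CAN database.

```python
import json
import struct
import time
from typing import Dict, Optional, Tuple

BASE_CAN_ID = 0x300  # hypothetical: module n reports its cell voltages on CAN ID 0x300 + n


def decode_cell_frame(can_id: int, data: bytes) -> Dict[str, object]:
    """Decode a hypothetical 8-byte frame: four cell voltages as big-endian uint16 in mV."""
    if len(data) != 8:
        raise ValueError("expected an 8-byte classic CAN payload")
    millivolts = struct.unpack(">4H", data)
    return {"module": can_id - BASE_CAN_ID,
            "cell_voltages_v": [mv / 1000.0 for mv in millivolts]}


def to_mqtt_message(vin: str, decoded: Dict[str, object],
                    timestamp: Optional[float] = None) -> Tuple[str, bytes]:
    """Build an MQTT topic and a compact JSON payload for upload to the cloud BMS."""
    topic = f"fleet/{vin}/bms/module/{decoded['module']}/cells"  # hypothetical topic scheme
    body = {"ts": time.time() if timestamp is None else timestamp,
            "v": decoded["cell_voltages_v"]}
    # Compact separators keep each message small, which matters on cellular links
    return topic, json.dumps(body, separators=(",", ":")).encode("utf-8")
```

The resulting topic and payload would then be handed to an MQTT client, for example the `publish(topic, payload, qos=1)` method of an Eclipse Paho client; QoS 1 asks the broker to acknowledge delivery at least once. Per-vehicle topic hierarchies let the cloud side subscribe with wildcards, such as all cell data for one vehicle or one module across the fleet.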

3.2. Cloud Server-Farm

A cloud server farm is a large-scale data center infrastructure that offers remote data storage and analysis over the internet, including real-time monitoring, early warning, and intelligent diagnosis; these capabilities become increasingly attractive to scientists as data sets continue to grow [49]. Cloud storage and computing have been widely recognized as a highly effective and flexible solution for remote monitoring, especially for large-scale EV applications [50]. Developers can tailor their cloud resources to their specific needs, achieving high efficiency and convenience.

The cloud BMS can learn from and analyze the continuous stream of time-series charging and discharging data from battery systems and generate electronic health records that provide insight into each battery’s performance and health status. Java and Go are among the most commonly used programming languages for cloud development, offering robust and efficient tools for building sophisticated, reliable cloud applications. PHP, in turn, offers web developers a flexible and effective way to design interactive interfaces for engaging with the large amount of data the system generates [51].
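One simple way to turn a continuous telemetry stream into a per-battery health record is to fold each incoming sample into a constant-size running summary, so storage does not grow with the length of the stream. The sketch below keeps four illustrative indicators per pack; the choice of fields and the in-memory store standing in for a cloud database are our assumptions, and a production record would add far richer features.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class HealthRecord:
    """Hypothetical running summary kept in the cloud for one battery pack."""
    samples: int = 0
    throughput_ah: float = 0.0            # cumulative |I| * dt, a simple usage indicator
    max_temp_c: float = float("-inf")     # hottest sensor reading seen so far
    max_cell_spread_v: float = 0.0        # largest (max - min) cell-voltage gap seen so far

    def update(self, current_a: float, dt_s: float, temp_c: float,
               cell_voltages: Sequence[float]) -> None:
        """Fold one telemetry sample into the record in O(1) time and memory."""
        self.samples += 1
        self.throughput_ah += abs(current_a) * dt_s / 3600.0
        self.max_temp_c = max(self.max_temp_c, temp_c)
        spread = max(cell_voltages) - min(cell_voltages)
        self.max_cell_spread_v = max(self.max_cell_spread_v, spread)


class HealthRecordStore:
    """In-memory stand-in for the cloud database, keyed by vehicle identifier."""

    def __init__(self) -> None:
        self.records: Dict[str, HealthRecord] = {}

    def ingest(self, vehicle_id: str, current_a: float, dt_s: float,
               temp_c: float, cell_voltages: Sequence[float]) -> HealthRecord:
        record = self.records.get(vehicle_id)
        if record is None:
            record = self.records[vehicle_id] = HealthRecord()
        record.update(current_a, dt_s, temp_c, cell_voltages)
        return record
```

A growing cell-voltage spread or rising peak temperature in such a record is the kind of slow trend that a fleet-level model in the cloud can flag long before an onboard threshold is crossed.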

Implementing the battery-cloud system efficiently requires some basic computing skills but, more importantly, a deep understanding of the learning task at hand, particularly for complex, nonlinear multiphysics battery systems with gappy and noisy boundary conditions. Moreover, modeling battery systems for field applications, such as prognostics and predictive health management (PHM), is often prohibitively expensive and requires complex formulations, new algorithms, and elaborate computer codes.

3.3. Machine Learning

Despite the progress achieved in forecasting the dynamics of battery systems using first principles, atomistic analysis, or physics-based methods, a notable obstacle remains: there are no comprehensive prognostic models that robustly connect cell properties, underlying mechanisms, and cell states. Predicting and modeling the multidimensional behavior of battery systems, which depends on various spatiotemporal factors, calls for a fundamentally new approach. Deep learning has made remarkable progress on long-standing problems in the artificial intelligence community [52]. The widespread availability of open-source software and the automation capabilities of modern software tools have made it straightforward to integrate machine learning into computational frameworks. Prominent software libraries such as TensorFlow [53,54], PyTorch [55,56], and JAX [57] contribute significantly to the analysis of cell performance by handling diverse data modalities, including time-series data, spectral data, laboratory tests, and field data.

In the predictive modeling of battery systems, there has recently been a push to integrate machine learning tools synergistically with cloud computing. Researchers and engineers can then access real-time data streams and analyze and predict battery performance in real time, which is pivotal for the design and optimization of battery systems. Together, machine learning algorithms, cloud computing, and big-data analysis form a powerful ecosystem for representing multiscale and multiphysics battery systems. By calibrating models with actual sensor data, a battery digital twin strives to emulate the dynamics of the physical battery in a digital environment. Physics-informed learning, with its innate ability to integrate physical models and data, is poised to become a driving force in this transformative era of digital twins.

A recent example of this learning approach is the physics-informed neural network (PINN). PINNs integrate measurement data and partial differential equations (PDEs) by embedding the PDEs into the training of the neural network. The approach is highly adaptable and can handle a wide range of PDE types, including integer-order, fractional, and stochastic PDEs. To illustrate, a PINN can be employed to solve the forward problem for the viscous Burgers’ equation:

$$\frac{\partial u}{\partial t} + u\frac{\partial u}{\partial x} = \nu \frac{\partial^2 u}{\partial x^2}$$

where $\nu$ is the viscosity. The physics-uninformed network acts as a surrogate for the PDE solution $u(x, t)$, whereas the physics-informed network evaluates the PDE residual. The loss function combines a supervised loss on measurements of $u$ taken at the initial and boundary conditions with an unsupervised loss that penalizes the PDE residual:

$$\mathcal{L}(\theta) = w_{\mathrm{data}}\,\mathcal{L}_{\mathrm{data}} + w_{\mathrm{PDE}}\,\mathcal{L}_{\mathrm{PDE}}$$

where $\theta$ denotes the network parameters and

$$\mathcal{L}_{\mathrm{data}} = \frac{1}{N_{\mathrm{data}}}\sum_{i=1}^{N_{\mathrm{data}}}\left|u(x_i, t_i; \theta) - u_i\right|^2, \qquad \mathcal{L}_{\mathrm{PDE}} = \frac{1}{N_{\mathrm{PDE}}}\sum_{j=1}^{N_{\mathrm{PDE}}}\left|\frac{\partial u}{\partial t} + u\frac{\partial u}{\partial x} - \nu\frac{\partial^2 u}{\partial x^2}\right|^2_{(x_j, t_j)}$$

The two sets of points, $\{(x_i, t_i, u_i)\}_{i=1}^{N_{\mathrm{data}}}$ and $\{(x_j, t_j)\}_{j=1}^{N_{\mathrm{PDE}}}$, are samples taken at the initial and boundary locations and over the entire domain, respectively. The weights $w_{\mathrm{data}}$ and $w_{\mathrm{PDE}}$ balance the two loss terms. The network is trained with gradient-based optimizers such as Adam to minimize the loss until it falls below a predefined threshold ε. For a detailed introduction to PINNs, see the comprehensive review [58].
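To make the composite loss concrete, the sketch below evaluates the weighted data term plus PDE-residual term for the viscous Burgers' equation, given any candidate solution u(x, t). It is deliberately not a full PINN: there is no neural network and no Adam training loop, and the derivatives are approximated with central finite differences, whereas a PINN obtains them by automatic differentiation (for example in TensorFlow, PyTorch, or JAX). The point sets and candidate functions are hypothetical choices for illustration.

```python
import math
from typing import Callable, Sequence, Tuple

Field = Callable[[float, float], float]  # candidate solution u(x, t)


def burgers_residual(u: Field, x: float, t: float, nu: float, h: float = 1e-4) -> float:
    """Residual f = u_t + u * u_x - nu * u_xx of the viscous Burgers equation at (x, t).

    Central finite differences stand in for the automatic differentiation a PINN uses.
    """
    u0 = u(x, t)
    u_t = (u(x, t + h) - u(x, t - h)) / (2.0 * h)
    u_x = (u(x + h, t) - u(x - h, t)) / (2.0 * h)
    u_xx = (u(x + h, t) - 2.0 * u0 + u(x - h, t)) / h ** 2
    return u_t + u0 * u_x - nu * u_xx


def pinn_loss(u: Field,
              data_points: Sequence[Tuple[float, float, float]],
              collocation_points: Sequence[Tuple[float, float]],
              nu: float, w_data: float = 1.0, w_pde: float = 1.0) -> Tuple[float, float, float]:
    """Composite loss w_data * L_data + w_pde * L_PDE (both mean squared errors).

    data_points: (x_i, t_i, u_i) samples from the initial and boundary conditions.
    collocation_points: (x_j, t_j) samples from the whole space-time domain.
    Returns (total, L_data, L_PDE).
    """
    l_data = sum((u(x, t) - ui) ** 2 for x, t, ui in data_points) / len(data_points)
    l_pde = sum(burgers_residual(u, x, t, nu) ** 2
                for x, t in collocation_points) / len(collocation_points)
    return w_data * l_data + w_pde * l_pde, l_data, l_pde
```

As a sanity check, u(x, t) = x / (1 + t) solves the viscous Burgers' equation exactly (u_t = −x/(1 + t)², u·u_x = x/(1 + t)², u_xx = 0), so with initial data u(x, 0) = x its data loss is zero and its PDE loss is at the level of finite-difference error. A candidate such as sin(πx)·exp(−t) produces a large PDE loss. In a real PINN, u would be a network with parameters θ, and the optimizer would adjust θ to drive this total loss below ε.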

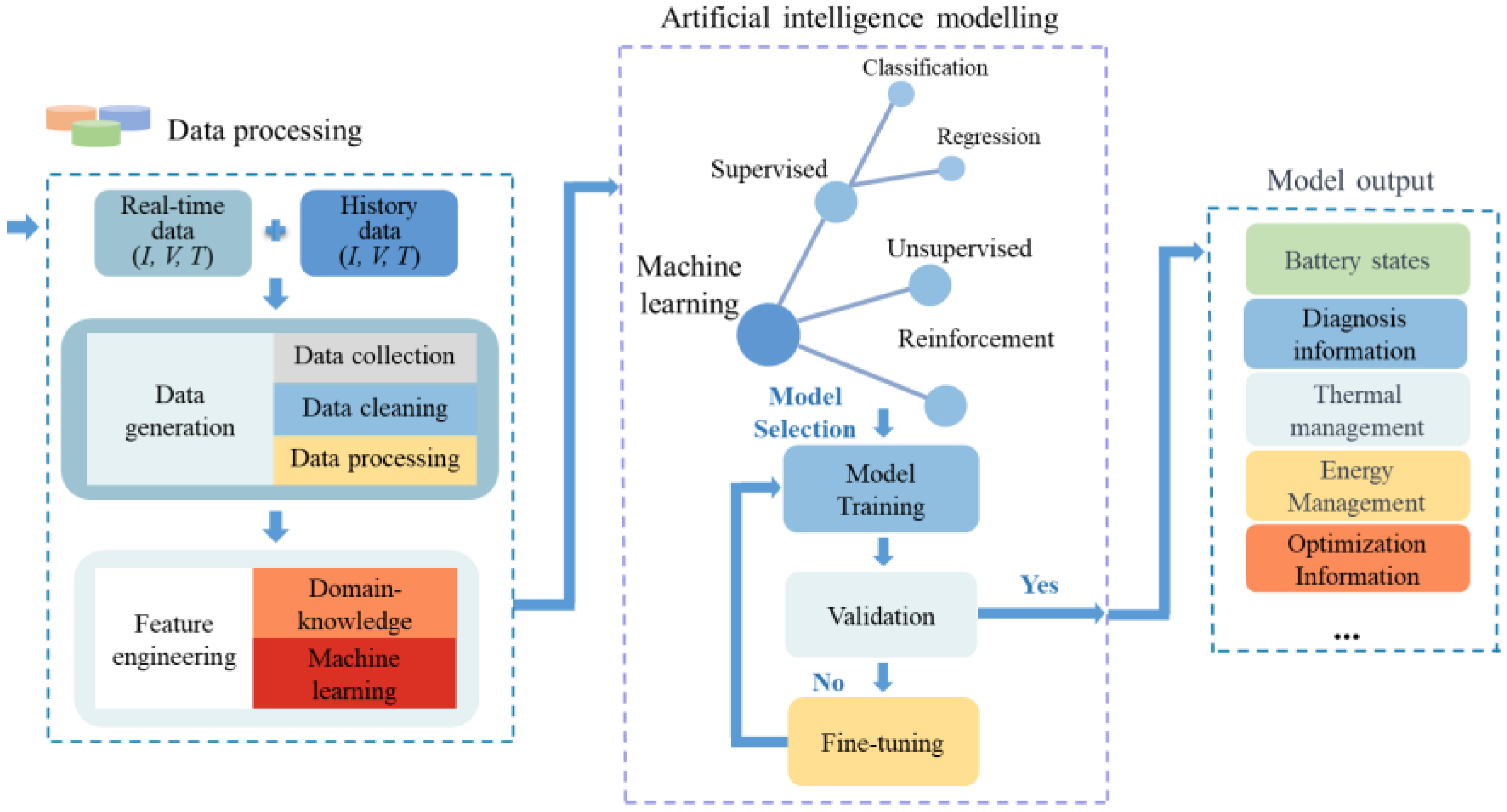

4. AI Modelling for Battery State Prediction

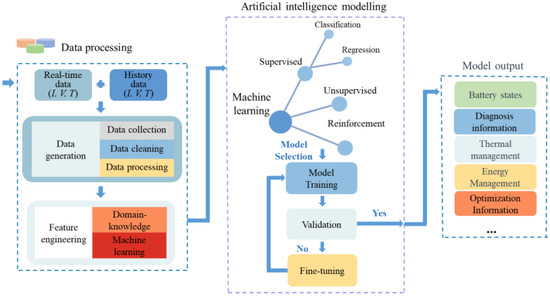

Machine learning plays a vital role in AI modeling because it enables systems to learn from data and improve their performance without explicit programming. Machine learning algorithms analyze large datasets to identify patterns and relationships, which are then used to make predictions and decisions. In battery applications, a machine learning model is a parameterized function whose adjustable parameters are fitted, often to experimental training data, so that it predicts battery behavior accurately [59]; a well-trained model can then generalize to other battery systems. A representative example is transfer learning, which has been used for personalized health-status prediction [60]. Machine learning has emerged as a powerful tool for analyzing the ever-growing volume of time-series data, but it faces limitations with complex spatio-temporal systems. Deep learning, which can automatically extract spatio-temporal features, has therefore attracted significant attention in recent years. It has made progress on problems that long proved difficult for the artificial intelligence community and has been particularly effective at identifying complex structures in large battery datasets [61,62,63,64]. This can improve the accuracy of forecasting models, including their ability to capture long-range spatial connections across multiple timescales, and can deepen our understanding of complex physical processes. In this section, we provide a concise overview of recent advances in battery state estimation achieved with diverse machine learning methods (Figure 3).

Figure 3. AI and machine learning for modelling and predicting battery states.

4.1. SOC

Machine learning (ML) methods have shown remarkable efficacy in interpolating between data points, even for high-dimensional tasks. Because ML models can learn complex patterns and relationships, they can capture the underlying structure of the data, enabling effective interpolation and prediction. For example, a recurrent neural network (RNN) built from gated recurrent units (GRUs) performed well in estimating battery SOC from data covering varied loading patterns [65]. Although training took several hours on a GPU, testing ran swiftly even on a CPU. This underscores the efficiency and accuracy of the approach in estimating SOC, a crucial parameter for management and control in diverse applications. In another study, a stacked bidirectional long short-term memory (LSTM) network was applied to estimate cell SOC [66]. The study focused on three improvements: (1) using bidirectional LSTM to capture temporal dependencies in both the forward and backward directions of time-series data; (2) stacking bidirectional LSTM layers to create a deep model with greater capacity to process nonlinear and dynamic lithium-ion battery (LiB) data; and (3) a detailed comparison and analysis of multiple parameters that affect the estimation performance. The results demonstrate the effectiveness of the approach and its potential to enhance SOC estimation. Another study [67] proposed a single-hidden-layer GRU-RNN with momentum optimization for SOC estimation. The GRU is a streamlined variant of the LSTM that merges the forget and input gates into a single update gate, which reduces the number of parameters and improves computational efficiency relative to the LSTM.
The algorithm employs the momentum gradient method, which combines the current gradient direction with the historical gradient direction to damp oscillations in the weight updates and speed up SOC estimation. Its performance was evaluated under varying settings, including the momentum coefficient β, the noise variance σ, the number of epochs, and the number of hidden-layer neurons. The results provide insight into the accuracy and efficiency of the momentum-optimized GRU-RNN for lithium battery SOC estimation and show its promise for battery management and control. More recently, a robust and efficient combined method, the gated recurrent unit adaptive Kalman filter (GRU-AKF), was proposed [68]. It removes the need to build a complex battery model: a GRU-RNN provides an initial SOC estimate by learning a nonlinear mapping from observed data to SOC across the entire temperature range, and an adaptive Kalman filter (AKF) then refines this estimate to produce the final SOC. The improved adaptive scheme makes GRU-AKF more adaptable to practical battery applications. Because the AKF post-processes the network output, the network hyperparameters need not be tuned as carefully, which lowers the design cost. The AKF estimates the state of a dynamic system from noisy measurements and is therefore well suited to noisy data in dynamic systems, where conventional data-driven approaches may fall short. The study’s findings show that the proposed method estimates battery SOC accurately.
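The second stage of a GRU-AKF-style pipeline can be sketched as a scalar Kalman filter that predicts SOC by Coulomb counting and corrects it with the network's SOC output treated as a noisy measurement. This is a hedged illustration, not the method of [68]: the filter is non-adaptive (fixed noise variances `q` and `r`, whereas an AKF adjusts them online), the network output is simulated by adding Gaussian noise to a synthetic SOC trajectory, and the capacity, current, sign convention, and noise values are all hypothetical.

```python
import math
import random
from typing import List, Sequence


def kalman_soc(
    currents: Sequence[float],  # A; positive = discharge (assumed sign convention)
    z_net: Sequence[float],     # SOC outputs of the network, treated as measurements
    dt: float,                  # sampling interval in s
    capacity_as: float,         # capacity in A*s
    x0: float,                  # initial SOC guess
    p0: float,                  # variance of the initial guess
    q: float,                   # process-noise variance (fixed; an AKF would adapt it)
    r: float,                   # measurement-noise variance (fixed; an AKF would adapt it)
) -> List[float]:
    """Scalar Kalman filter: Coulomb-counting prediction, network-output correction."""
    x, p = x0, p0
    estimates = []
    for i_k, z_k in zip(currents, z_net):
        # Predict: Coulomb counting.
        x -= i_k * dt / capacity_as
        p += q
        # Correct: measurement model z = SOC + noise (H = 1).
        gain = p / (p + r)
        x += gain * (z_k - x)
        p *= 1.0 - gain
        estimates.append(x)
    return estimates


def rmse(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)) / len(a))


# Synthetic example (hypothetical values): 2 Ah cell, 2 A discharge, 10 s sampling.
rng = random.Random(0)
dt, cap_as, n = 10.0, 2.0 * 3600.0, 200
currents = [2.0] * n
true_soc, soc = [], 0.9
for i_k in currents:
    soc -= i_k * dt / cap_as
    true_soc.append(soc)
z_net = [s + rng.gauss(0.0, 0.03) for s in true_soc]  # stand-in for noisy GRU output

est = kalman_soc(currents, z_net, dt, cap_as, x0=0.5, p0=0.1, q=1e-6, r=0.03 ** 2)
print(f"raw RMSE {rmse(z_net, true_soc):.4f}, filtered RMSE {rmse(est, true_soc):.4f}")
```

The deliberately wrong initial guess (0.5 instead of 0.9) is corrected after the first measurement, after which the filter mainly smooths the network noise.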

Besides RNNs, self-supervised Transformer models are another deep learning approach that has attracted considerable attention for predicting cell SOC. For example, a Transformer-based SOC estimator used self-supervised learning to achieve higher accuracy when only limited data could be collected within a short timeframe [69]. The framework also integrates recent deep learning techniques, including the Ranger optimizer, time-series data augmentation, and the Log-Cosh loss function, to improve accuracy. The learned parameters can be transferred efficiently to another cell by fine-tuning, even with limited data collected over a short period. Another study proposed a hybrid SOC estimation method that pre-processes data with a sliding window, uses a Transformer network to capture the relationship between observational data and SOC, and feeds the result into an adaptive observer [70]. The method was validated at different temperatures on US06 drive-cycle data, achieving SOC estimates with both root mean square error (RMSE) and maximum error below 1% in most temperature scenarios. It outperforms LSTM-based approaches and provides reliable predictions even at temperatures not included in the training dataset.
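Two of the ingredients mentioned above, sliding-window pre-processing and the Log-Cosh loss, are simple enough to sketch directly. This is an illustrative sketch only: the window length, stride, feature layout, and function names are hypothetical, and neither the Transformer nor the adaptive observer is shown. Log-Cosh behaves like half the squared error for small residuals and like the absolute error (minus log 2) for large ones, which makes it less sensitive to outliers.

```python
import math
from typing import List, Sequence, Tuple


def sliding_windows(
    features: Sequence[Sequence[float]],  # per-step [voltage, current, temperature] (assumed layout)
    targets: Sequence[float],             # per-step SOC labels
    window: int,
    stride: int = 1,
) -> List[Tuple[List[Sequence[float]], float]]:
    """Pair each window of `window` consecutive steps with the SOC at its last step."""
    pairs = []
    for end in range(window, len(features) + 1, stride):
        pairs.append((list(features[end - window:end]), targets[end - 1]))
    return pairs


def log_cosh(residual: float) -> float:
    """Numerically stable log(cosh(r)) = |r| + log1p(exp(-2|r|)) - log(2)."""
    a = abs(residual)
    return a + math.log1p(math.exp(-2.0 * a)) - math.log(2.0)


def log_cosh_loss(pred: Sequence[float], true: Sequence[float]) -> float:
    return sum(log_cosh(p - t) for p, t in zip(pred, true)) / len(pred)


# Toy sequence of 10 steps cut into windows of 4 steps.
feats = [[3.7 - 0.01 * k, 1.0, 25.0] for k in range(10)]
socs = [1.0 - 0.05 * k for k in range(10)]
pairs = sliding_windows(feats, socs, window=4)
print(len(pairs), pairs[0][1])
```

The rewritten form of `log_cosh` avoids overflow in `math.cosh` for large residuals while remaining mathematically identical.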

4.2. SOH

A recent study introduced the battery health and uncertainty management pipeline (BHUMP), a machine learning-based solution that adapts to various charging protocols and discharge current rates. Notably, BHUMP makes accurate predictions without requiring specific knowledge of battery design, chemistry, or operating temperature [71]. The study highlights the value of combining machine learning with charge-curve segments to capture battery degradation within a limited timeframe. However, the authors stress that even when an algorithm yields low errors, uncertainty quantification tests are essential to establish its reliability before it is deployed in real-world applications.

Differential techniques, namely incremental capacity (IC) and differential voltage (DV) analysis, are frequently used to identify the causes of degradation in online applications. One study, for example, combines support vector regression (SVR) with a multi-timescale parameter identification approach based on extended Kalman filter-recursive least squares (EKF-RLS) and a known relationship between representative resistor-capacitor (RC) parameters and SOH [72]. The proposed method achieves mean absolute error (MAE) and RMSE values below 3% for SOH prediction on both static and dynamic observational data, demonstrating accurate, robust, and practical SOH estimation in complex dynamic environments.
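The recursive least-squares (RLS) part of such an identification scheme can be sketched as follows. Assumptions: a hypothetical zero-order cell model V = U_oc - R0·I (no RC branch), a forgetting factor `lam`, and synthetic noiseless data; the EKF stage, the multi-timescale split, and the SVR mapping from parameters to SOH used in [72] are not reproduced here.

```python
import math
from typing import List, Sequence


def rls_identify(
    phis: Sequence[Sequence[float]],  # regressor vectors
    ys: Sequence[float],              # measurements
    lam: float = 0.99,                # forgetting factor (0 < lam <= 1)
    delta: float = 1e3,               # initial covariance scale
) -> List[float]:
    """Recursive least squares with exponential forgetting; returns the parameter vector."""
    n = len(phis[0])
    theta = [0.0] * n
    P = [[delta if i == j else 0.0 for j in range(n)] for i in range(n)]
    for phi, y in zip(phis, ys):
        p_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
        denom = lam + sum(phi[i] * p_phi[i] for i in range(n))
        gain = [v / denom for v in p_phi]
        err = y - sum(phi[i] * theta[i] for i in range(n))
        theta = [theta[i] + gain[i] * err for i in range(n)]
        # P <- (P - K phi^T P) / lam; phi^T P equals (P phi)^T because P is symmetric.
        P = [[(P[i][j] - gain[i] * p_phi[j]) / lam for j in range(n)] for i in range(n)]
    return theta


# Synthetic data from V = U_oc - R0 * I with U_oc = 3.7 V and R0 = 0.05 ohm (hypothetical).
u_oc_true, r0_true = 3.7, 0.05
currents = [1.0 + 2.0 * math.sin(0.1 * k) for k in range(200)]
voltages = [u_oc_true - r0_true * i for i in currents]
phis = [[1.0, -i] for i in currents]  # theta = [U_oc, R0]
u_oc_hat, r0_hat = rls_identify(phis, voltages)
print(f"U_oc = {u_oc_hat:.4f} V, R0 = {r0_hat:.4f} ohm")
```

The forgetting factor discounts old samples, which is what lets RLS track slowly drifting parameters such as resistance growth with aging; the varying current provides the excitation needed to separate U_oc from R0.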

In recent years, several large datasets collected during the daily operation of EVs have been analyzed. For example, one study used 147 vehicle data points from two sources to validate a method for estimating the capacity and internal resistance of EV batteries [73]. The estimates converge to the true trend, with a maximum capacity estimation error below 4% for the sampled real EVs. The method can thus accurately estimate EV battery capacity and enable life prediction from existing cloud data. Another study proposed an SOH estimation method for EV batteries based on discrete incremental capacity analysis that is robust, compatible, and efficient in both computation and memory [74]. Moreover, the SOH of EVs does not decrease linearly with mileage but stagnates and fluctuates owing to seasonal temperature variations, driving habits, and charging strategies.
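A minimal sketch of a discrete incremental capacity (IC) computation: the charge curve is resampled at evenly spaced voltage edges by linear interpolation, and dQ/dV is taken as the charge gained in each voltage bin divided by the bin width. The binning scheme, bin width, and function names are illustrative assumptions rather than the specific algorithm of [74], and the sketch assumes a constant-current charge in which voltage rises monotonically. The toy curve is linear, so its IC is flat; real curves show peaks whose height and position shift with degradation.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def interp(xs: Sequence[float], ys: Sequence[float], x: float) -> float:
    """Linear interpolation on strictly increasing xs, clamped at the ends."""
    i = bisect_left(xs, x)
    if i == 0:
        return ys[0]
    if i == len(xs):
        return ys[-1]
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def discrete_ic(
    voltage: Sequence[float],  # V, strictly increasing during CC charge
    charge: Sequence[float],   # Ah, cumulative charge at each sample
    v_min: float,
    v_max: float,
    dv: float,
) -> List[Tuple[float, float]]:
    """Return (bin-centre voltage, dQ/dV in Ah/V) for each voltage bin."""
    n_bins = round((v_max - v_min) / dv)
    edges = [v_min + k * dv for k in range(n_bins + 1)]
    q_edges = [interp(voltage, charge, v) for v in edges]
    return [
        ((edges[k] + edges[k + 1]) / 2.0, (q_edges[k + 1] - q_edges[k]) / (edges[k + 1] - edges[k]))
        for k in range(n_bins)
    ]


# Toy curve (hypothetical): Q = 2 Ah/V * (V - 3.0), so dQ/dV should be 2 Ah/V in every bin.
volts = [3.0 + 0.01 * k for k in range(121)]
charge = [2.0 * (v - 3.0) for v in volts]
ic = discrete_ic(volts, charge, v_min=3.1, v_max=4.1, dv=0.05)
print(len(ic), ic[0])
```

Counting charge per voltage bin, instead of differentiating sample by sample, is what keeps such methods tolerant of sensor noise and cheap in memory.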

Underscoring the importance of cloud-based AI modeling for BMS, ensemble machine learning makes it possible to predict SOH accurately using only daily charging data (i.e., voltage, current, and temperature) [75]. A two-step approach reduces noise in the battery data, while domain-specific features derived from IC and DV analysis provide physically consistent representations of intricate degradation patterns. To improve prediction accuracy and generalization, a stacking technique combines four base-level models (linear regression, random forest regression, Gaussian process regression, and gradient boosting regression) with a meta-learner. The resulting multi-model fusion method is robust, stable, and compatible with diverse usage histories, making it valuable for forecasting cell capacity and constructing battery pack trajectories. The study further indicates that, as onboard computing capabilities advance, the method could be migrated from a cloud BMS to an onboard BMS through feature engineering and lookup tables. In summary, this study demonstrates the potential of combining onboard observational samples with data-driven machine learning models to predict the dynamics of complex systems such as lithium-ion batteries, even with missing or noisy data and uncertain boundary conditions.
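The stacking idea can be sketched with two deliberately simple base learners standing in for the four used in [75]: an ordinary least-squares line (linear regression is one of the four) and a k-nearest-neighbour regressor (a substitute, not from the source). Out-of-fold predictions of the base learners form the inputs of a least-squares meta-learner. The single feature, the synthetic capacity-like target, the fold count, and k are hypothetical; a real pipeline would use IC/DV features and tuned models.

```python
import math
from typing import Callable, List, Sequence, Tuple

Model = Callable[[float], float]


def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Model:
    """Ordinary least-squares line y = a * x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    b = my - a * mx
    return lambda x: a * x + b


def fit_knn(xs: Sequence[float], ys: Sequence[float], k: int = 3) -> Model:
    """One-dimensional k-nearest-neighbour mean regressor."""
    pts = list(zip(xs, ys))

    def predict(x: float) -> float:
        nearest = sorted(pts, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)

    return predict


BASE_LEARNERS = [fit_linear, fit_knn]


def out_of_fold(xs: Sequence[float], ys: Sequence[float], n_folds: int = 5) -> List[List[float]]:
    """Row i holds each base learner's prediction for sample i from a model not trained on i."""
    rows = [[0.0] * len(BASE_LEARNERS) for _ in xs]
    for f in range(n_folds):
        train = [i for i in range(len(xs)) if i % n_folds != f]
        test = [i for i in range(len(xs)) if i % n_folds == f]
        for m, fit in enumerate(BASE_LEARNERS):
            model = fit([xs[i] for i in train], [ys[i] for i in train])
            for i in test:
                rows[i][m] = model(xs[i])
    return rows


def fit_meta(rows: Sequence[Sequence[float]], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares weights (no intercept) for two base predictions via 2x2 normal equations."""
    s11 = sum(r[0] * r[0] for r in rows)
    s12 = sum(r[0] * r[1] for r in rows)
    s22 = sum(r[1] * r[1] for r in rows)
    t1 = sum(r[0] * y for r, y in zip(rows, ys))
    t2 = sum(r[1] * y for r, y in zip(rows, ys))
    det = s11 * s22 - s12 * s12
    return (t1 * s22 - t2 * s12) / det, (s11 * t2 - s12 * t1) / det


def mse(pred: Sequence[float], ys: Sequence[float]) -> float:
    return sum((p - y) ** 2 for p, y in zip(pred, ys)) / len(ys)


# Hypothetical capacity-like target with a nonlinear trend in one feature.
xs = [0.05 * i for i in range(60)]
ys = [1.0 - 0.1 * x - 0.05 * math.sin(3.0 * x) for x in xs]

rows = out_of_fold(xs, ys)
w1, w2 = fit_meta(rows, ys)
base_mse = [mse([r[m] for r in rows], ys) for m in range(2)]
meta_mse = mse([w1 * r[0] + w2 * r[1] for r in rows], ys)

# Final stacked model: base learners refit on all data, combined with the meta weights.
final = [fit(xs, ys) for fit in BASE_LEARNERS]


def stacked(x: float) -> float:
    return w1 * final[0](x) + w2 * final[1](x)


print(f"base OOF MSE {base_mse}, stacked OOF MSE {meta_mse:.2e}, f(1.0) = {stacked(1.0):.4f}")
```

Training the meta-learner on out-of-fold predictions, rather than on in-sample ones, prevents it from simply rewarding whichever base model overfits most.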

Reinforcement learning, which draws on both machine learning and neuroscience, offers a normative framework in which agents learn policies that optimize their behavior based on rewards received from interacting with the environment [76]. In battery prognostics and health management applications such as fast-charging optimization, the BMS acts as the agent: it chooses actions (for example, the applied current) based on the reward expected for each possible action while interacting with the environment, i.e., the battery [77]. The pseudo-two-dimensional Doyle-Fuller-Newman (DFN) electrochemical model [78] is employed to predict the evolution of the multiphysics battery system by capturing macroscale physics, including lithium concentration in the solid and electrolyte phases, solid-phase and electrolyte electric potentials, ionic current, molar ion fluxes, and cell temperature. Reinforcement learning based on the deep deterministic policy gradient (DDPG) handles continuous state and action spaces by updating the control policy within an actor-critic architecture, thereby reducing the likelihood of safety hazards during fast charging.
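The agent-environment loop described above can be illustrated with a deliberately tiny example. This sketch swaps in tabular Q-learning for DDPG and a hypothetical discrete toy battery (integer SOC and temperature levels, three current levels) for the DFN model, so it shows only the structure of the problem: each step costs charging time, and exceeding a temperature limit ends the episode with a large penalty. All dynamics, rewards, and constants are assumptions.

```python
import random
from typing import Dict, List, Tuple

State = Tuple[int, int]   # (SOC level 0..10, temperature level), hypothetical discretization
ACTIONS = (1, 2, 3)       # hypothetical charging-current levels
SOC_FULL, T_MAX = 10, 2


def step(state: State, a: int) -> Tuple[State, float, bool, bool]:
    """Toy dynamics: higher current charges faster but heats the cell.

    Returns (next_state, reward, done, violated)."""
    soc, temp = state
    temp_next = max(0, temp + a - 2)     # a=1 cools, a=2 holds, a=3 heats
    soc_next = min(SOC_FULL, soc + a)
    if temp_next > T_MAX:                # safety violation: terminate with a penalty
        return (soc_next, temp_next), -11.0, True, True
    return (soc_next, temp_next), -1.0, soc_next == SOC_FULL, False


def q_learning(episodes: int = 5000, alpha: float = 0.5, gamma: float = 1.0,
               eps: float = 0.2, seed: int = 0) -> Dict[Tuple[State, int], float]:
    """Epsilon-greedy tabular Q-learning; unseen entries default to 0 (optimistic)."""
    rng = random.Random(seed)
    q: Dict[Tuple[State, int], float] = {}
    for _ in range(episodes):
        s, done = (0, 0), False
        while not done:
            if rng.random() < eps:
                a = rng.choice(ACTIONS)                              # explore
            else:
                a = max(ACTIONS, key=lambda b: q.get((s, b), 0.0))  # exploit
            s_next, r, done, _ = step(s, a)
            target = r if done else r + gamma * max(q.get((s_next, b), 0.0) for b in ACTIONS)
            old = q.get((s, a), 0.0)
            q[(s, a)] = old + alpha * (target - old)
            s = s_next
    return q


def greedy_rollout(q: Dict[Tuple[State, int], float]) -> Tuple[List[int], State, bool]:
    """Follow the learned greedy policy from an empty, cool cell."""
    s, done, violated, actions = (0, 0), False, False, []
    while not done:
        a = max(ACTIONS, key=lambda b: q.get((s, b), 0.0))
        s, _, done, violated = step(s, a)
        actions.append(a)
    return actions, s, violated


q_table = q_learning()
actions, final_state, violated = greedy_rollout(q_table)
print(actions, final_state, violated)
```

In this toy, always choosing the highest current overheats the cell on the third step, so the learned policy mixes high and medium currents; DDPG replaces the table with actor and critic networks so that currents and states can be continuous.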

4.3. Battery Safety and Thermal Management

In addition to SOC and SOH estimation, cloud-based BMS can also be tailored to a much more complex problem: battery failure. Lithium-ion batteries are multiphysics and multiscale systems, and their safety and reliability are crucial given their widespread adoption. However, because battery behavior is intricate and the underlying degradation mechanisms are not fully understood, accurately predicting failures remains a formidable challenge. With cell and battery designs constantly evolving, the multitude of potential failure scenarios and associated risks makes it impractical to characterize the origins and consequences of each through laboratory testing alone. Computational modeling can reduce the number of experiments required, but its implementation can be limited by rigorous validation requirements and computational cost.

Establishing a “safety envelope”, the operational range within which individual cells function safely, is essential for the overall safety of electric vehicle battery packs. The challenge, however, lies in acquiring a substantial dataset of battery failure tests. In a recent study, researchers developed a highly accurate computational model of lithium-ion pouch cells that incorporates calibrated constitutive models for each material in the cell [79]. To construct a data-driven safety envelope, they applied supervised machine learning to a large matrix of severe mechanical loading scenarios. The study demonstrates how numerical data generation and machine learning modeling can be combined to forecast the safety of battery systems.
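A data-driven safety envelope of this kind can be illustrated with a classifier trained on labeled simulation outcomes. Everything here is a hypothetical stand-in: the two normalized loading features, the made-up labeling rule that plays the role of finite-element results, and the choice of a k-nearest-neighbour classifier (the source does not specify which supervised method was used). The classifier's decision boundary approximates the envelope between safe and failing load cases.

```python
from typing import Sequence, Tuple

Sample = Tuple[float, float]  # (normalized indentation depth, normalized punch size), hypothetical


def knn_classify(train_x: Sequence[Sample], train_y: Sequence[int], query: Sample, k: int = 5) -> int:
    """Majority vote among the k nearest training samples (1 = failure, 0 = safe)."""
    order = sorted(
        range(len(train_x)),
        key=lambda i: (train_x[i][0] - query[0]) ** 2 + (train_x[i][1] - query[1]) ** 2,
    )
    votes = sum(train_y[i] for i in order[:k])
    return 1 if 2 * votes > k else 0


# Hypothetical "simulation matrix": a 21 x 21 grid of load cases labeled by a made-up rule
# (failure when depth > 0.3 + 0.4 * size), standing in for finite-element results.
grid = [(i / 20, j / 20) for i in range(21) for j in range(21)]
labels = [1 if d > 0.3 + 0.4 * s else 0 for d, s in grid]

queries = [(0.9, 0.2), (0.1, 0.8), (0.6, 0.1)]
predictions = [knn_classify(grid, labels, q) for q in queries]
print(predictions)  # -> [1, 0, 1]
```

Once trained, such a classifier answers "is this loading case inside the envelope?" in milliseconds, which is the practical payoff of replacing repeated finite-element runs with a learned model.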

Emerging technologies are overcoming previously intractable obstacles with accessible and effective solutions, which highlights the significance of cloud-based AI modeling in BMS. Data-driven machine learning frameworks excel at forecasting complex nonlinear systems. One study [80] developed a tightly integrated cloud-based machine learning system for predicting real-life EV battery failure. Using graphite/NMC cells, the authors built a data-driven early-prediction model that enables longitudinal electronic health records to be generated through digital twins. The proposed hybrid semi-supervised machine learning model combines observational, empirical, physical, and statistical insights and achieves a 7.7% test error on field data. Such cloud-based approaches exemplify the value of a multifaceted strategy for continuous lifelong learning: they not only provide a new way to forecast battery failure but also show how combining diverse methods can enhance accuracy and robustness.

Thermal management is critical for battery systems. One study [81] analyzed the performance of a liquid-cooled battery thermal management system (BTMS) in detail, focusing on experimental air-conditioning data and on design considerations for the liquid-cooled BTMS. Integrating these thermal characteristics allows the liquid-cooled BTMS to operate more accurately and efficiently, contributing to the overall improvement of the heat pump air-conditioning system (HPACS) of EVs. This is achieved by coupling a battery electrochemical model with a machine learning model of the HPACS and optimizing the liquid-cooled BTMS on the basis of an automatically calibrated model and the battery electrochemical model, which makes system optimization more efficient. In another case study, a multiphysics approach was used to demonstrate the temperature- and position-dependent thermal conductivity of heat pipes (HPs) [82]. Because HPs exhibit variable thermal conductivity owing to their multiphysics nature, this analysis yields valuable insight into heat pipe efficiency. Increasing the condensation surface area of the heat pipes reduces the size and number of heat pipes required for cooling. However, advanced methods are needed to handle the complex equations, multiphysics phenomena, and boundary conditions of these systems; such techniques can deepen our understanding of thermal management and lead to better designs and performance of battery systems.

Machine learning techniques such as physics-informed machine learning [58] offer a promising direction. This approach blends mathematical models with noisy data using neural networks or other kernel-based regression networks. Incorporating physical invariants into specialized network architectures can improve accuracy, training speed, and generalization, and such architectures can satisfy certain physical invariants automatically, which makes implementation more effective.

5. Current Limitations

5.1. Multiscale and Multiphysics Problems

While physics-informed learning has achieved remarkable success in various applications, problems involving multiple scales and multiple physics remain an active area of work. It is advisable to study each physics in isolation before integrating them, since learning multiple physics simultaneously can be computationally challenging. Fine-scale simulation data should also be used selectively to understand the physics at a coarser scale. Existing research focuses mainly on models that predict SOH and remaining useful life (RUL) over multiple cycles, or SOC within a single charge/discharge cycle. A more comprehensive understanding of battery performance, however, requires a model that can forecast long-term SOH from any point in the charge/discharge cycle. This can be accomplished with a hybrid approach that pairs a model that accurately forecasts SOC up to a specific point in the cycle, such as the fully charged state, with a multi-cycle SOH model. Integrating short-term and long-term dynamics in this way yields a comprehensive model of battery evolution and more accurate, reliable predictions of battery performance.

5.2. Gap between Lab Tests and Field Conditions

High-throughput testing is a valuable means of obtaining large, reliable datasets for machine learning. Electrochemical techniques such as cyclic voltammetry, galvanostatic charge/discharge, and electrochemical impedance spectroscopy enable precise, reliable measurements of battery lifetime, rate capability, capacity, and impedance. Such testing can rapidly generate large amounts of meaningful, well-controlled data that give machine learning algorithms a solid foundation for extracting insights from battery performance characteristics. Models trained on these data can then speed up testing further by flagging poorly performing batteries from their initial cycles.

One approach to reconciling standard laboratory tests with field data is to test batteries in the laboratory under representative load profiles derived from characterized typical driving patterns. This offers a controlled environment, high-precision equipment, and frequent characterization. However, it must be supplemented with field data for several reasons. First, laboratory experiments are limited in scope and cannot cover all relevant conditions over an extended period, particularly because the number of test combinations grows exponentially with the number of aging parameters. Field data, by contrast, are readily available, cover the complete range of operating conditions, and are relatively inexpensive because the cells are already in use. Second, laboratory testing is artificial and may diverge from real-world usage: time and equipment constraints often lead to extreme conditions and short rests between cycles, which can underestimate battery lifespan and result in overdesigned battery packs. Third, external factors encountered in real-world environments, such as seasonal temperature variations or mechanical vibrations that contribute to failure, are not captured in laboratory settings. Fourth, additional data always increase statistical confidence when building models of battery lifespan and performance, accounting for both intrinsic manufacturing variability and extrinsic usage patterns. Standardized methods for interpreting not only accelerated cycle aging data but also accelerated calendar aging and combined cycle and calendar aging are crucial for extending models beyond laboratory accelerated-aging tests to real-world applications. Lastly, a thorough understanding of how cycling and calendar conditions influence battery lifespan is imperative for both laboratory and field applications.

5.3. Data Generation and Model Training

Accurately predicting battery state, both in real time and offline, is critical to extending cycle life and ensuring safety, because it enables informed engineering decisions and adaptation to unfavorable conditions. However, the wide range of battery options and constantly evolving pack designs make it challenging to predict cell behavior under varied conditions. One promising approach is to train machine learning algorithms on finite-element model data to predict cell performance under different loading conditions. While offering numerous benefits, this approach shares a common obstacle of data-driven methods: acquiring abundant, trustworthy, high-quality, and relevant experimental data. This requires overcoming hurdles in experimental data collection and curation and ensuring that the data represent real-world usage scenarios. Obtaining such data will be crucial for applying machine learning to battery prediction.

The battery datasets provided by the Prognostics Center of Excellence at NASA Ames are widely used by researchers [83]. In these datasets, batteries are subjected to various operational profiles at different temperatures, and impedance measurements are recorded after each cycle. The NASA battery dataset is a valuable resource for studying battery performance, aging, and prognostics. Releasing open data and software is a promising way to improve the transferability of models and make them more useful for battery design; this involves systematically generating datasets and releasing them for reuse by other researchers.

Data-driven methods for materials discovery and for estimating the lifespan and efficiency of lithium-ion batteries have proven promising, spurring interest in using them to strengthen the prediction of cell behavior. Recent advances in collecting and processing large volumes of data have paved the way for real-time learning and forecasting of battery operation. For example, Severson and colleagues [84] released an openly accessible dataset containing a comprehensive array of battery data. It comprises 124 LFP/graphite cells cycled under diverse fast-charging conditions, ranging from 3.6 C to 6 C, in a temperature-controlled chamber at 30 °C until they reached 80% of their original capacity. The cells were charged in one or two steps, for example at 6 C from 0% to 40% state of charge (SOC) followed by 3 C up to 80% SOC. All cells were then charged from 80% to 100% SOC with a 1 C constant current-constant voltage (CC-CV) step to 3.6 V and discharged with a 4 C CC-CV step down to 2.0 V, with a cutoff current of C/50. Both cell temperature and internal resistance were recorded at 80% SOC during cycling. The dataset is a valuable resource for studying battery performance, especially under fast-charging conditions. A feature-based machine learning model used voltage and capacity data from the first 100 cycles (roughly 10% of the overall lifespan) of these commercial cells to build a simple regression model that forecasts cycle life with approximately 90% accuracy. A pressing question nevertheless remains: how can data-driven methods anticipate cell behavior in ever-changing field use? And can these strategies efficiently predict how emerging cell and pack configurations respond to real environmental conditions, thereby improving battery systems?
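The best-known feature in the Severson et al. work is the variance of the difference between the discharge capacity-voltage curves of cycle 100 and cycle 10, ΔQ_100-10(V), whose logarithm correlates strongly with the logarithm of cycle life. A minimal sketch follows, assuming both curves are already sampled on a common voltage grid; the curves, the three-cell training set, the cycle lives, and the resulting coefficients are synthetic and hypothetical, not values from [84], and the full model in that work also used additional features.

```python
import math
from statistics import pvariance
from typing import List, Sequence, Tuple


def delta_q_feature(q_cycle100: Sequence[float], q_cycle10: Sequence[float]) -> float:
    """log10 of the variance of Q_100(V) - Q_10(V), both sampled on one voltage grid."""
    diff = [a - b for a, b in zip(q_cycle100, q_cycle10)]
    return math.log10(pvariance(diff))


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx


# Synthetic cells (hypothetical): the cycle-10 curve is shared, and by cycle 100 a
# voltage-dependent capacity loss of amplitude `amp` has developed.
grid = [i / 99 for i in range(100)]      # normalized voltage grid
q10 = [1.1 * (1.0 - v) for v in grid]    # capacity curve at cycle 10, Ah
cells: List[Tuple[float, float]] = []    # (feature, log10 cycle life)
for amp, life in ((0.002, 1800.0), (0.006, 900.0), (0.02, 400.0)):
    q100 = [q - amp * v * v for q, v in zip(q10, grid)]
    cells.append((delta_q_feature(q100, q10), math.log10(life)))

slope, intercept = fit_line([f for f, _ in cells], [y for _, y in cells])


def predict_cycle_life(feature: float) -> float:
    """Invert the log-log regression to obtain a cycle-life estimate."""
    return 10.0 ** (slope * feature + intercept)


print(f"slope = {slope:.3f}; fitted life of cell 2 = {predict_cycle_life(cells[1][0]):.0f} cycles")
```

The negative slope encodes the intuition behind the feature: the more the capacity curve has distorted between early cycles, the shorter the remaining life.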

In some scenarios, gathering training data is costly and difficult. There is then a growing need for high-performance models that can be trained with more easily accessible data from different domains, a technique commonly known as transfer learning [85]. Transfer learning offers a practical answer to insufficient training data and has become relevant in a wide range of fields, making it possible to obtain high-performing models without excessive cost or resources.

While negative transfer remains relatively unexplored, incorporating methods that address it into transfer learning frameworks could be a fruitful direction for future research. One possible path is to develop solutions that handle multiple source domains, which could better filter out irrelevant data. Another promising area is optimal transfer, which aims to selectively transfer information from a source domain so as to maximize the performance of the target learner. Although negative and optimal transfer overlap, optimal transfer focuses on boosting target performance, whereas negative transfer concerns the detrimental influence of the source domain on the target learner.

6. Outlook

6.1. Cloud-End Collaboration

In BMS, collaboration with cloud computing exploits the substantial computing power and storage of cloud servers, overcoming the constraints of traditional BMS and paving the way for advanced algorithms such as deep learning and reinforcement learning. The BMS’s 5G communication module captures real-time battery data, which can then be used to build battery models in the cloud. This establishes a two-way dynamic correlation between the digital twin and the physical battery, enabling detailed and secure battery management over its lifespan through online learning and model updating. Data gathered from the batteries and their digital twins over their full lifespan are used to construct an optimal performance-improvement path, delivered through smart over-the-air (OTA) remote program updates. To meet the escalating demands of battery management, the real-time processing capability of the embedded system is combined with the high-level intelligence of the cloud platform. To further improve system efficiency, the concept of a collaborative cloud-edge-end management model is introduced.

6.2. Digital Twins

A digital twin, a virtual counterpart of a physical object, may serve as a bridge between laboratory experiments and real-world use [86]. By integrating sensor readings from real-world scenarios into computational models, a digital twin can faithfully simulate the behavior of lithium-ion batteries under a variety of operating states, such as random discharge, dynamic charge, and idle periods. Fundamental quantities such as voltage, current, and temperature can be obtained from the onboard BMS and used to optimize the digital twin. The goal is to improve the predictive ability of the digital twin under realistic conditions, leading to a better understanding and evaluation of battery behavior. IoT technology, such as the MQTT protocol, enables the collection of large amounts of sequential data over the ever-increasing operating time of EVs. These data can be transmitted seamlessly to the cloud for analysis, improving our understanding of complex battery behavior under different operating conditions.

Despite their potential benefits, digital twins face several challenges that must be solved before widespread implementation becomes a reality. First, accurate and comprehensive observational data can be difficult to acquire, as they may be scarce, noisy, and heterogeneous in form. Second, physics-based computational models can be complex to set up and calibrate, requiring significant effort in pre-processing and in specifying initial and boundary conditions, which makes them impractical for real-time applications. Third, the physical models of complex systems are often only partially understood, and their conservation laws do not form a closed system of equations without further assumptions. Physics-informed learning addresses these issues by blending physical models with data and by using automatic differentiation, which eliminates the need for mesh generation.

6.3. Data-Model Fusion

First-principles simulations, such as density functional theory and molecular dynamics, are commonly used to probe material degradation. Microstructural evolution, such as lithium dendrite formation and phase segregation in active electrode materials, is studied with physics-based models such as the phase-field method. Although phase-field modeling cannot yet simulate a complete cell, its results agree well with experimental data. To enable in situ computation, a database of previous results from multiscale first-principles and phase-field simulations is first established, and a machine learning model is trained on it. This model serves as a proxy for the simulations; when its prediction uncertainty is high, an additional simulation is run, added to the database, and the model is retrained. This iterative active learning process could drastically reduce the number of simulations needed to understand a system. Similar machine learning approaches can guide experimental design and eliminate costly experiments. Further studies on the mechanical properties of solid electrolytes and on voltage prediction demonstrate how machine learning can accelerate simulations.
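The active learning loop described above can be sketched as follows. Assumptions: a cheap analytic function stands in for an expensive first-principles or phase-field simulation, the surrogate is a toy nearest-neighbour predictor, and its uncertainty is approximated by the distance from a query to the nearest simulated point; a practical system would more likely use a Gaussian process or an ensemble. All names and the threshold are hypothetical.

```python
import math
from typing import Dict, List


def expensive_simulation(x: float) -> float:
    """Stand-in for a costly simulation (hypothetical response curve)."""
    return math.exp(-3.0 * x) * math.sin(6.0 * x)


class NearestNeighbourSurrogate:
    """Toy surrogate: predicts the nearest database value; uncertainty = distance to it."""

    def __init__(self) -> None:
        self.db: Dict[float, float] = {}

    def add(self, x: float, y: float) -> None:
        self.db[x] = y  # for this surrogate, "retraining" is just updating the database

    def predict(self, x: float) -> float:
        nearest = min(self.db, key=lambda k: abs(k - x))
        return self.db[nearest]

    def uncertainty(self, x: float) -> float:
        return min(abs(k - x) for k in self.db)


def active_learning(candidates: List[float], threshold: float) -> NearestNeighbourSurrogate:
    """Simulate only where the surrogate is least certain, until it is confident everywhere."""
    model = NearestNeighbourSurrogate()
    model.add(candidates[0], expensive_simulation(candidates[0]))  # seed simulation
    while True:
        worst = max(candidates, key=model.uncertainty)
        if model.uncertainty(worst) <= threshold:
            return model
        model.add(worst, expensive_simulation(worst))


candidates = [i / 200 for i in range(201)]  # 201 possible simulation inputs in [0, 1]
model = active_learning(candidates, threshold=0.05)
print(f"simulations run: {len(model.db)} of {len(candidates)}")
```

Because each new simulation is placed where the surrogate is least certain, the loop covers the input space with a small fraction of the candidate runs, which is the saving the text describes.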

As the fusion of physics-based modeling and machine learning progresses, researchers will frequently encounter situations in which multiple models of the same phenomenon are built from the same training data, or from equally informative data. Although their predictions on the training data are nearly identical, the resulting networks may be trained differently and represent the phenomenon differently. To address this, it is crucial to construct machine learning-based transformations between theories and between models of varying complexity, as well as predictive models that can be validated. This ensures that a phenomenon retains a unique and clear physical interpretation even when multiple models describe it. Merging data and models can then yield better representations of physical systems by exploiting the strengths of each information source.

6.4. Explainable AI

In many scientific disciplines, observational data now accumulate faster than they can be understood and analyzed. Although machine learning (ML) methods have shown substantial promise and early successes, they still struggle to extract meaningful insights from the wealth of available data. Moreover, purely data-driven models may fit the observed data accurately yet produce physically inconsistent or implausible predictions when they extrapolate or when the data are biased, which limits their ability to generalize. In many cases, AI systems cannot clearly explain their autonomous actions to human users. While some argue that the emphasis on explainability is misguided and unnecessary for certain AI applications, it remains vital for key applications in which users need to understand, trust, and effectively manage their AI counterparts. Explainable AI (XAI) systems [87], which aim to make their behavior understandable to human users by explaining their actions, hold promise for materials science and battery modeling. They can contribute to a more thorough understanding of the underlying physics, more effective hypothesis testing, and greater confidence in learning models. By allowing researchers to interpret and visualize the decision-making processes of complex models, XAI can help identify the key features and parameters that affect material and cell properties. This understanding can, in turn, guide the design of new materials with superior properties and deepen our understanding of their behavior under varying conditions. The transparency and interpretability of XAI methods can also build trust in learning models, helping researchers make well-informed decisions and draw sound conclusions.
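As a hedged illustration of how an XAI method can flag influential features, the sketch below applies permutation feature importance, one common model-agnostic technique (the text does not specify one), to a linear surrogate fitted on synthetic data. The feature names and the toy capacity-fade relation are hypothetical and chosen only so that the expected ranking is known in advance.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500

# Synthetic, illustrative features (hypothetical, not measured data).
features = ["cycle_count", "mean_temperature", "depth_of_discharge"]
X = np.column_stack([
    rng.uniform(0, 1000, n),
    rng.uniform(15, 45, n),
    rng.uniform(0.2, 1.0, n),
])
# Toy target: depends strongly on cycles, weakly on temperature,
# and not at all on depth of discharge in this made-up example.
y = 1.0 - 2e-4 * X[:, 0] - 1e-3 * X[:, 1] + rng.normal(0, 0.005, n)

# Fit a linear surrogate (with intercept) by least squares.
A = np.column_stack([X, np.ones(n)])
w, *_ = np.linalg.lstsq(A, y, rcond=None)

def predict(X_in):
    return np.column_stack([X_in, np.ones(len(X_in))]) @ w

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

def permutation_importance(X_in, y_in, n_repeats=10):
    """Mean increase in error when one feature column is shuffled."""
    base = mse(y_in, predict(X_in))
    scores = []
    for j in range(X_in.shape[1]):
        deltas = []
        for _ in range(n_repeats):
            Xp = X_in.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])  # break the feature-target link
            deltas.append(mse(y_in, predict(Xp)) - base)
        scores.append(float(np.mean(deltas)))
    return scores

for name, score in zip(features, permutation_importance(X, y)):
    print(f"{name:>20s}: {score:.2e}")
```

Because the method only needs model predictions, the same procedure could be applied to a neural network or any other black-box battery model.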
In designing more effective, user-friendly AI systems, certain basic principles and domain-specific knowledge must be taken into consideration. Specifically, an XAI system should be able to explain its capabilities and what it has learned, justify its past actions, describe its current course of action, and reveal the key information that drives its decisions.

7. Conclusions

Artificial intelligence and machine learning methods are increasingly used to reveal patterns and insights in the expanding volume of battery data. However, these approaches often require careful hand-crafting and intricate implementations, especially when the system dynamics depend strongly on spatio-temporal context. This is where cloud-based digital solutions come in. The cloud environment can be configured by users/developers to meet their specific needs and requirements. Cloud-BMS opens new possibilities for collecting observational data and assimilating them through the seamless integration of data and abstract mathematical operators. However, merely moving data to the cloud is not enough. New physics-based learning algorithms and computational frameworks are vital for addressing the challenges of complex battery systems, especially in real-time EV scenarios. Integrating AI and machine learning into the BMS could improve the accuracy of battery diagnosis and prognosis. Furthermore, integrating cloud-based frameworks into the BMS can make battery monitoring and management more efficient and scalable. Advanced sensing and monitoring technologies, such as wireless sensor networks and IoT devices, could enable real-time data collection and analysis, improving the precision of battery management. The fusion of data-driven and physics-based modeling through physics-informed machine learning promises to improve battery management performance further. The ability to model long-range correlations across multiple time scales, simulate thermodynamics and kinetics, and explore the dynamics of nonlinear battery systems could accelerate the transfer of technology from academic research to real-world applications.

Author Contributions

Conceptualization and methodology, J.Z.; software, J.Z. and J.W.; writing—original draft preparation, D.S. and J.Z.; writing—review and editing, J.Z., A.F.B. and C.E.; visualization, J.Z. and Z.W.; supervision, resources, project administration, A.F.B. and Y.L.; funding acquisition, D.S. and Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This study was financially supported by the Independent Innovation Projects of the Hubei Longzhong Laboratory (grant number 2022ZZ-24), the Central Government to Guide Local Science and Technology Development fund Projects of Hubei Province (grant number 2022BGE267), the Basic Research Type of Science and Technology Planning Projects of Xiangyang City (grant number 2022ABH006759), and the Hubei Superior and Distinctive Discipline Group of “New Energy Vehicle and Smart Transportation” (grant number XKTD072023).

Data Availability Statement

The data could not be shared due to confidentiality.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Liang, X.; Zhang, S.; Wu, Y.; Xing, J.; He, X.; Zhang, K.M.; Wang, S.; Hao, J. Air quality and health benefits from fleet electrification in China. Nat. Sustain. 2019, 2, 962–971.

- Li, M.; Lu, J.; Chen, Z.; Amine, K. 30 years of lithium-ion batteries. Adv. Mater. 2018, 30, 1800561.

- Meng, H.; Li, Y.F. A review on prognostics and health management (PHM) methods of lithium-ion batteries. Renew. Sustain. Energy Rev. 2019, 116, 109405.

- Neubauer, J.; Wood, E. The impact of range anxiety and home, workplace, and public charging infrastructure on simulated battery electric vehicle lifetime utility. J. Power Sources 2014, 257, 12–20.

- Xiong, R.; Pan, Y.; Shen, W.; Li, H.; Sun, F. Lithium-ion battery aging mechanisms and diagnosis method for automotive applications: Recent advances and perspectives. Renew. Sustain. Energy Rev. 2020, 131, 110048.

- Gabbar, H.A.; Othman, A.M.; Abdussami, M.R. Review of battery management systems (BMS) development and industrial standards. Technologies 2021, 9, 28.

- Vichard, L.; Ravey, A.; Venet, P.; Harel, F.; Pelissier, S.; Hissel, D. A method to estimate battery SOH indicators based on vehicle operating data only. Energy 2021, 225, 120235.

- Li, D.; Wang, L. Onboard health estimation approach with segment warping and trajectory self-learning for swappable lithium battery. J. Energy Storage 2022, 55, 105749.

- Zhao, J.; Burke, A.F. Electric Vehicle Batteries: Status and Perspectives of Data-Driven Diagnosis and Prognosis. Batteries 2022, 8, 142.

- Sulzer, V.; Mohtat, P.; Aitio, A.; Lee, S.; Yeh, Y.T.; Steinbacher, F.; Khan, M.U.; Lee, J.W.; Siegel, J.B.; Howey, D.A. The challenge and opportunity of battery lifetime prediction from field data. Joule 2021, 5, 1934–1955.

- Peng, J.; Meng, J.; Chen, D.; Liu, H.; Hao, S.; Sui, X.; Du, X. A Review of Lithium-Ion Battery Capacity Estimation Methods for Onboard Battery Management Systems: Recent Progress and Perspectives. Batteries 2022, 8, 229.

- Berecibar, M. Machine-learning techniques used to accurately predict battery life. Nature 2019, 568, 325–326.

- Ng, M.F.; Zhao, J.; Yan, Q.; Conduit, G.J.; Seh, Z.W. Predicting the state of charge and health of batteries using data-driven machine learning. Nat. Mach. Intell. 2020, 2, 161–170.

- Zhao, J.; Nan, J.; Wang, J.; Ling, H.; Lian, Y.; Burke, A.F. Battery Diagnosis: A Lifelong Learning Framework for Electric Vehicles. In Proceedings of the 2022 IEEE Vehicle Power and Propulsion Conference (VPPC), Merced, CA, USA, 1–4 November 2022; IEEE: New York, NY, USA, 2022; pp. 1–6.

- Zhao, J.; Burke, A.F. Battery prognostics and health management for electric vehicles under industry 4.0. J. Energy Chem. 2023.

- Gibney, E. Europe sets its sights on the cloud: Three large labs hope to create a giant public-private computing network. Nature 2015, 523, 136–138.

- Drake, N. How to catch a cloud. Nature 2015, 522, 115–116.

- Bosch Mobility Solutions: Battery in the Cloud. Available online: https://www.bosch-mobility-solutions.com/en/solutions/software-and-services/battery-in-the-cloud/battery-in-the-cloud/ (accessed on 19 August 2022).

- Panasonic Announces UBMC Service: A Cloud-Based Battery Management Service to Ascertain Battery State in Electric Mobility Vehicles. Available online: https://news.panasonic.com/global/press/data/2020/12/en201210-1/en201210-1.pdf (accessed on 19 August 2022).

- HUAWEI: CloudLi. Available online: https://carrier.huawei.com/en/products/digital-power/telecom-energy/Central-Office-Power (accessed on 19 August 2022).

- National Monitoring and Management Platform for NEVs. Available online: http://www.bitev.org.cn/a/48.html (accessed on 19 August 2022).

- Xing, Y.; Ma, E.W.; Tsui, K.L.; Pecht, M. Battery management systems in electric and hybrid vehicles. Energies 2011, 4, 1840–1857.

- Liu, K.; Li, K.; Peng, Q.; Zhang, C. A brief review on key technologies in the battery management system of electric vehicles. Front. Mech. Eng. 2019, 14, 47–64.

- Xiong, R.; Li, L.; Tian, J. Towards a smarter battery management system: A critical review on battery state of health monitoring methods. J. Power Sources 2018, 405, 18–29.

- Yang, N.; Zhang, X.; Li, G. State of charge estimation for pulse discharge of a LiFePO4 battery by a revised Ah counting. Electrochim. Acta 2015, 151, 63–71.

- Lee, S.; Kim, J.; Lee, J.; Cho, B.H. State-of-charge and capacity estimation of lithium-ion battery using a new open-circuit voltage versus state-of-charge. J. Power Sources 2008, 185, 1367–1373.

- Tran, M.K.; Mathew, M.; Janhunen, S.; Panchal, S.; Raahemifar, K.; Fraser, R.; Fowler, M. A comprehensive equivalent circuit model for lithium-ion batteries, incorporating the effects of state of health, state of charge, and temperature on model parameters. J. Energy Storage 2021, 43, 103252.

- Tran, M.K.; DaCosta, A.; Mevawalla, A.; Panchal, S.; Fowler, M. Comparative study of equivalent circuit models performance in four common lithium-ion batteries: LFP, NMC, LMO, NCA. Batteries 2021, 7, 51.

- Wei, Y.; Wang, S.; Han, X.; Lu, L.; Li, W.; Zhang, F.; Ouyang, M. Toward more realistic microgrid optimization: Experiment and high-efficient model of Li-ion battery degradation under dynamic conditions. eTransportation 2022, 14, 100200.

- Wei, Y.; Han, T.; Wang, S.; Qin, Y.; Lu, L.; Han, X.; Ouyang, M. An efficient data-driven optimal sizing framework for photovoltaics-battery-based electric vehicle charging microgrid. J. Energy Storage 2022, 55, 105670.

- Wei, Y.; Yao, Y.; Pang, K.; Xu, C.; Han, X.; Lu, L.; Li, Y.; Qin, Y.; Zheng, Y.; Wang, H.; et al. A Comprehensive Study of Degradation Characteristics and Mechanisms of Commercial Li(NiMnCo)O2 EV Batteries under Vehicle-To-Grid (V2G) Services. Batteries 2022, 8, 188.

- Han, X.; Lu, L.; Zheng, Y.; Feng, X.; Li, Z.; Li, J.; Ouyang, M. A review on the key issues of the lithium-ion battery degradation among the whole life cycle. eTransportation 2019, 1, 100005.

- Hu, X.; Xu, L.; Lin, X.; Pecht, M. Battery lifetime prognostics. Joule 2020, 4, 310–346.

- Deng, Z.; Hu, X.; Li, P.; Lin, X.; Bian, X. Data-driven battery state of health estimation based on random partial charging data. IEEE Trans. Power Electron. 2021, 37, 5021–5031.

- Khaleghi, S.; Hosen, M.S.; Karimi, D.; Behi, H.; Beheshti, S.H.; Van Mierlo, J.; Berecibar, M. Developing an online data-driven approach for prognostics and health management of lithium-ion batteries. Appl. Energy 2022, 308, 118348.

- Gou, B.; Xu, Y.; Feng, X. An ensemble learning-based data-driven method for online state-of-health estimation of lithium-ion batteries. IEEE Trans. Transp. Electrif. 2020, 7, 422–436.

- Li, R.; Hong, J.; Zhang, H.; Chen, X. Data-driven battery state of health estimation based on interval capacity for real-world electric vehicles. Energy 2022, 257, 124771.

- Wu, W.; Wang, S.; Wu, W.; Chen, K.; Hong, S.; Lai, Y. A critical review of battery thermal performance and liquid based battery thermal management. Energy Convers. Manag. 2019, 182, 262–281.

- Kim, J.; Oh, J.; Lee, H. Review on battery thermal management system for electric vehicles. Appl. Therm. Eng. 2019, 149, 192–212.

- Wang, X.; Wei, X.; Dai, H. Estimation of state of health of lithium-ion batteries based on charge transfer resistance considering different temperature and state of charge. J. Energy Storage 2019, 21, 618–631.

- Barsukov, Y. Battery Cell Balancing: What to Balance and How; Texas Instruments: Dallas, TX, USA, 2009.

- Daowd, M.; Omar, N.; Van Den Bossche, P.; Van Mierlo, J. Passive and active battery balancing comparison based on MATLAB simulation. In Proceedings of the 2011 IEEE Vehicle Power and Propulsion Conference, Chicago, IL, USA, 6–9 September 2011; IEEE: New York, NY, USA, 2011; pp. 1–7.

- Turksoy, A.; Teke, A.; Alkaya, A. A comprehensive overview of the dc-dc converter-based battery charge balancing methods in electric vehicles. Renew. Sustain. Energy Rev. 2020, 133, 110274.