Machine Learning Estimation of Battery Efficiency and Related Key Performance Indicators in Smart Energy Systems

Abstract

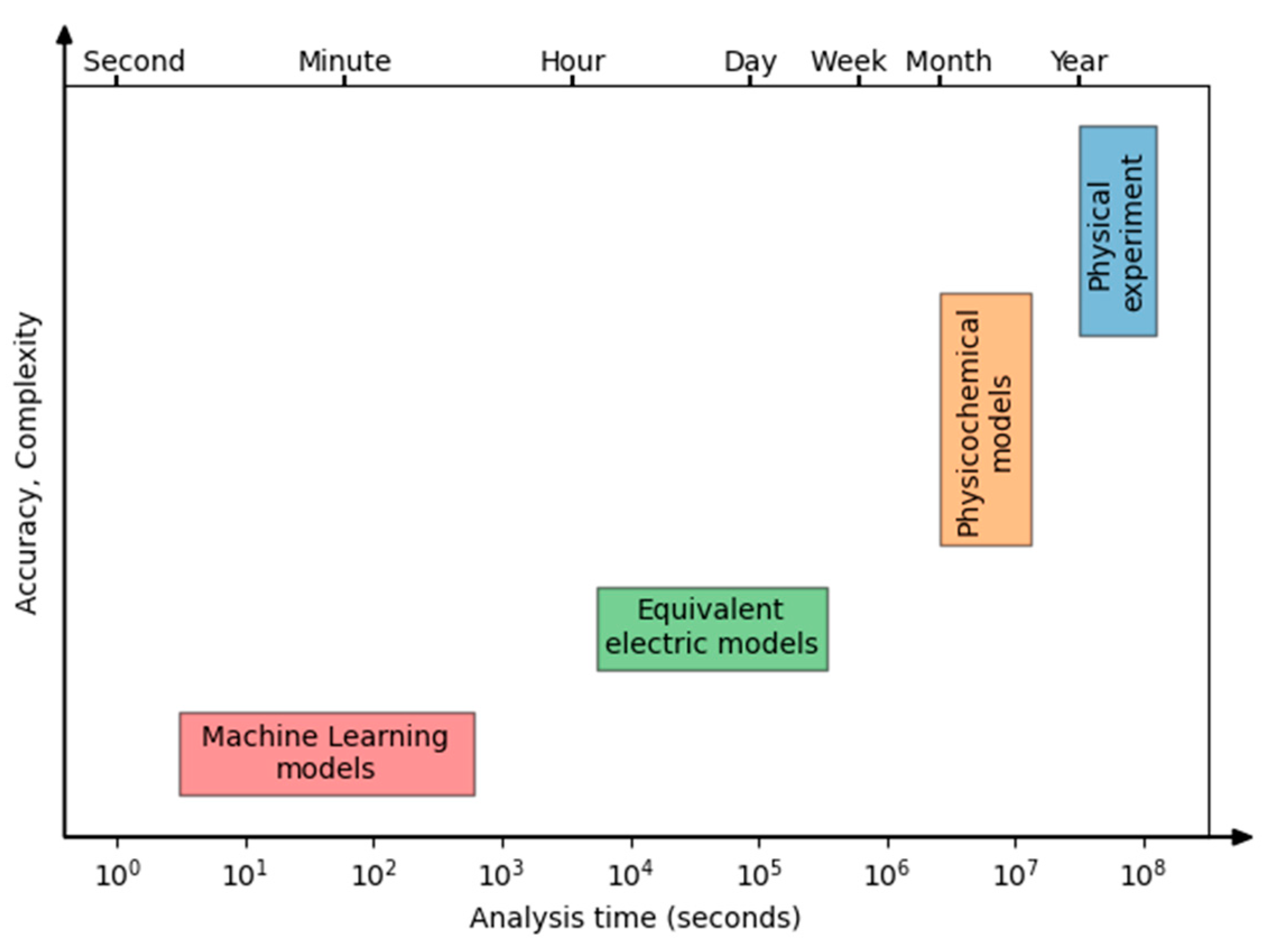

1. Introduction

2. Materials and Methods

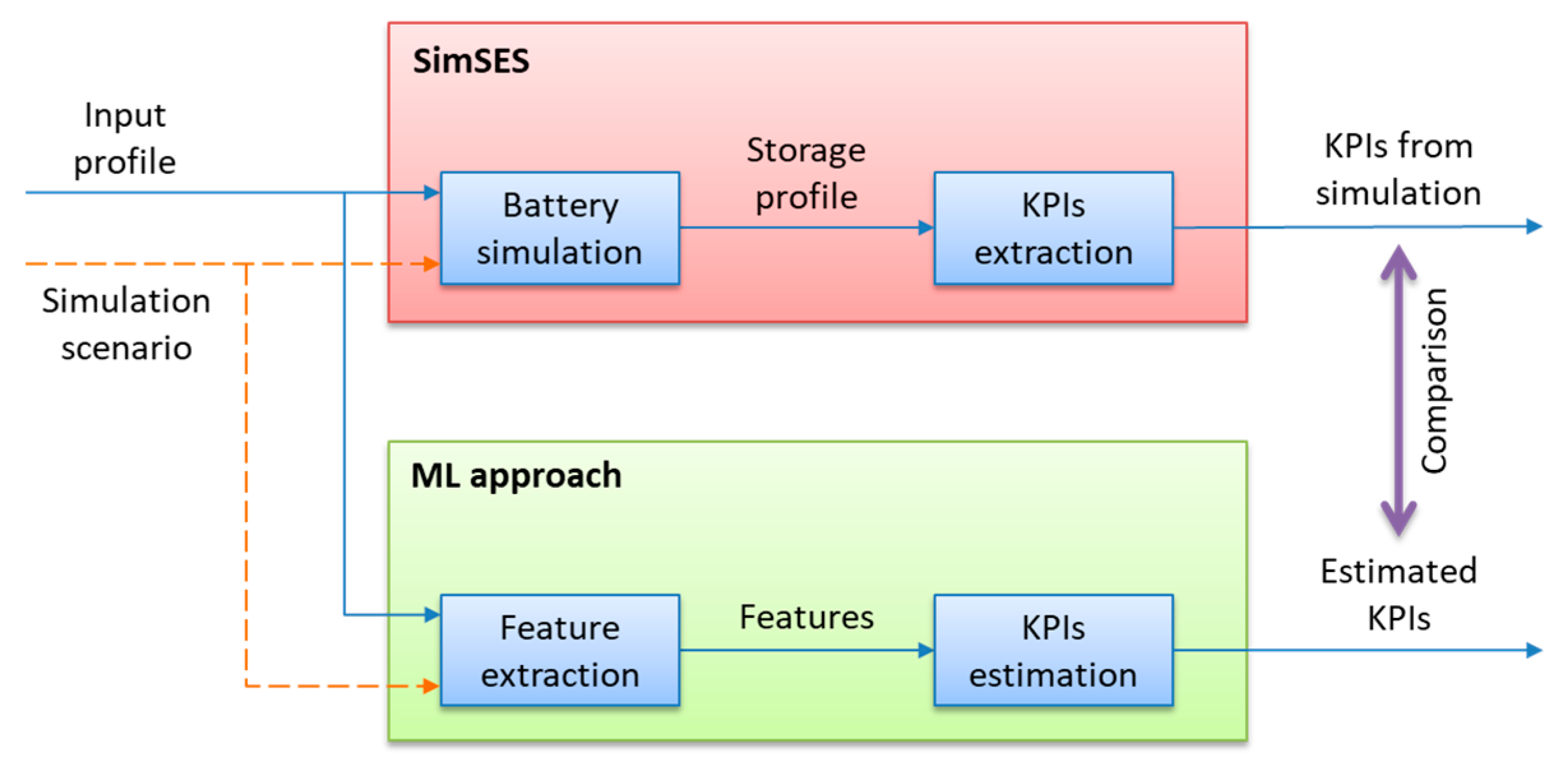

2.1. General Description

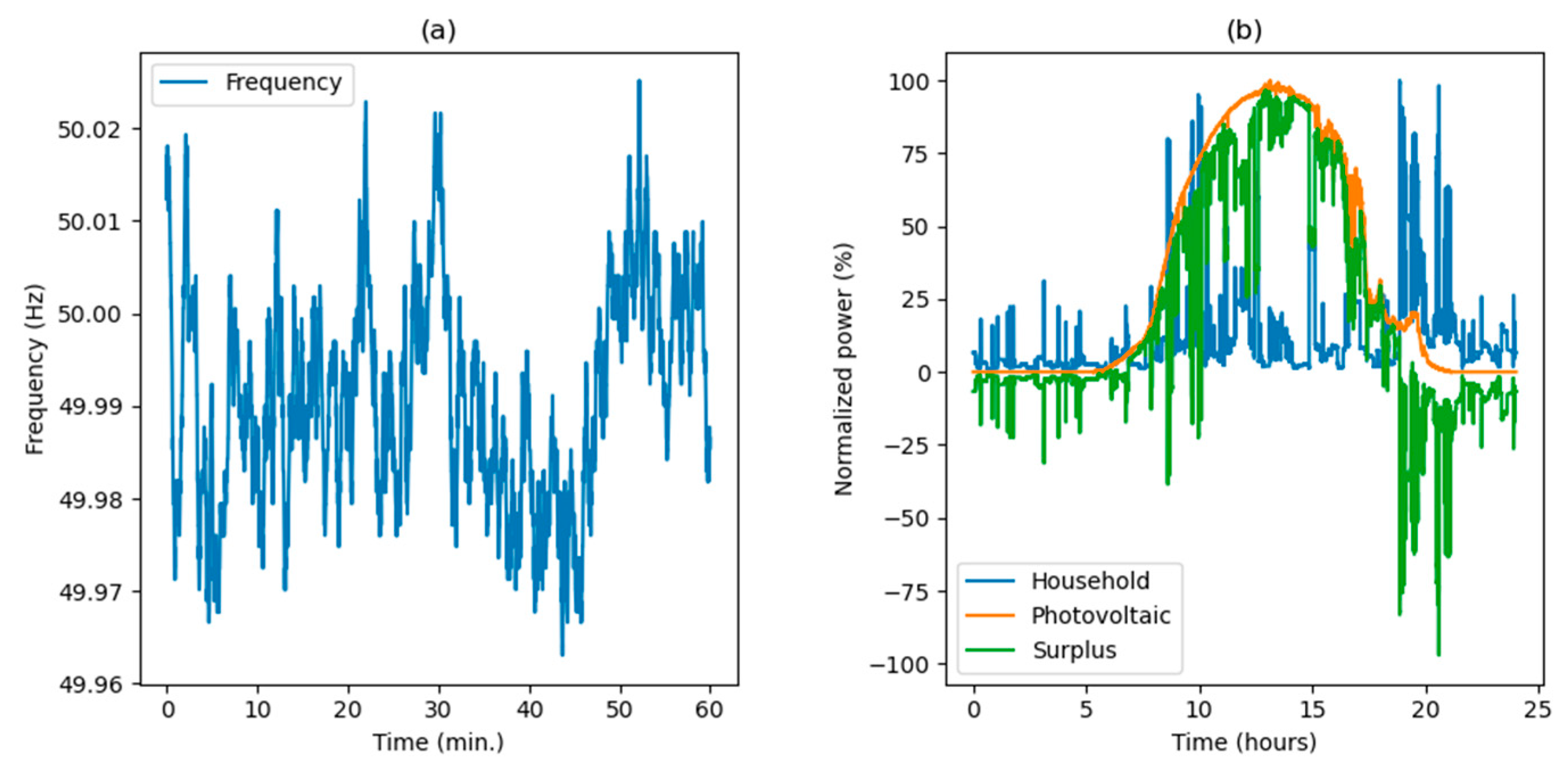

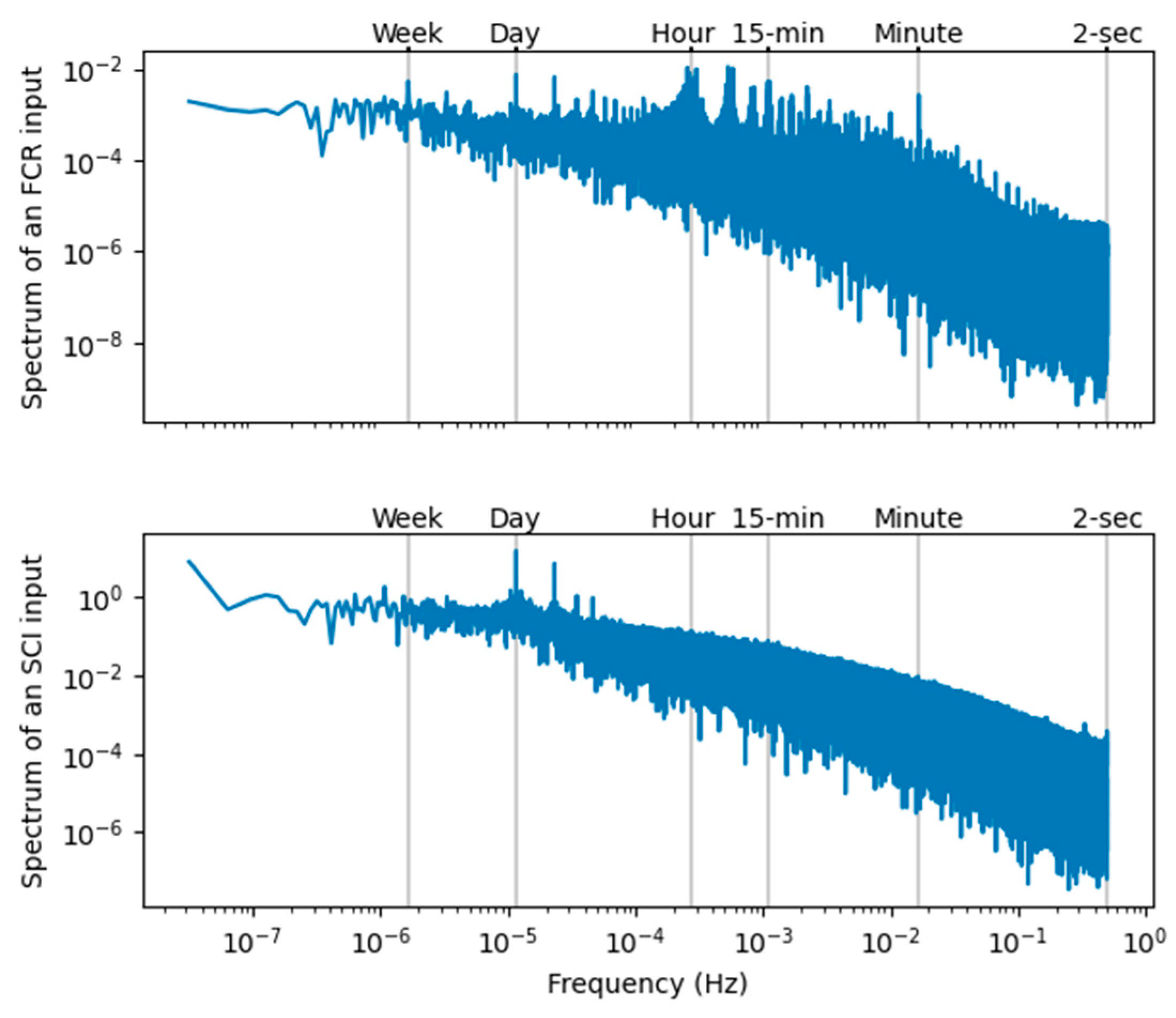

2.2. Datasets

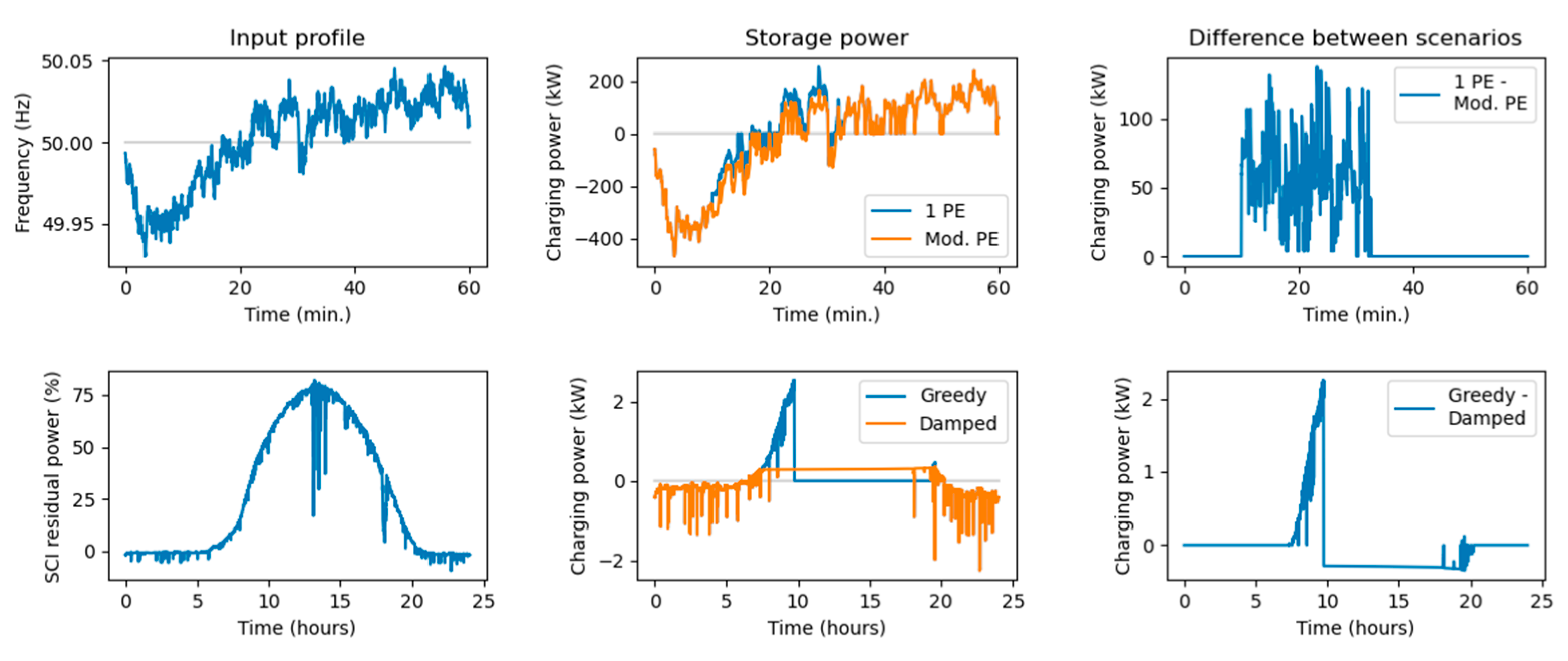

2.3. Simulation Scenarios

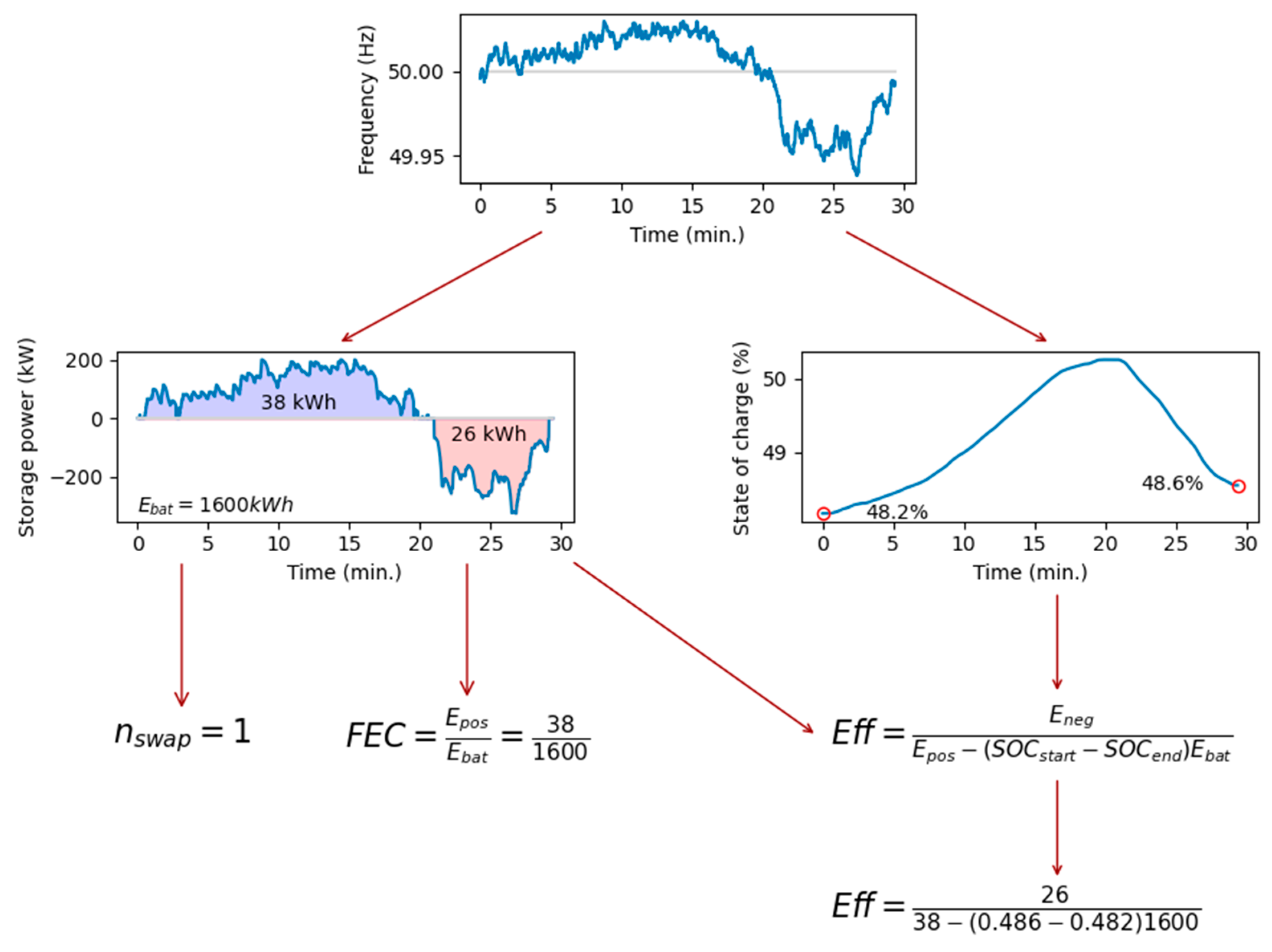

2.4. Key Performance Indicators

2.5. Regression Performance Metrics

2.6. Regression Models

3. Results

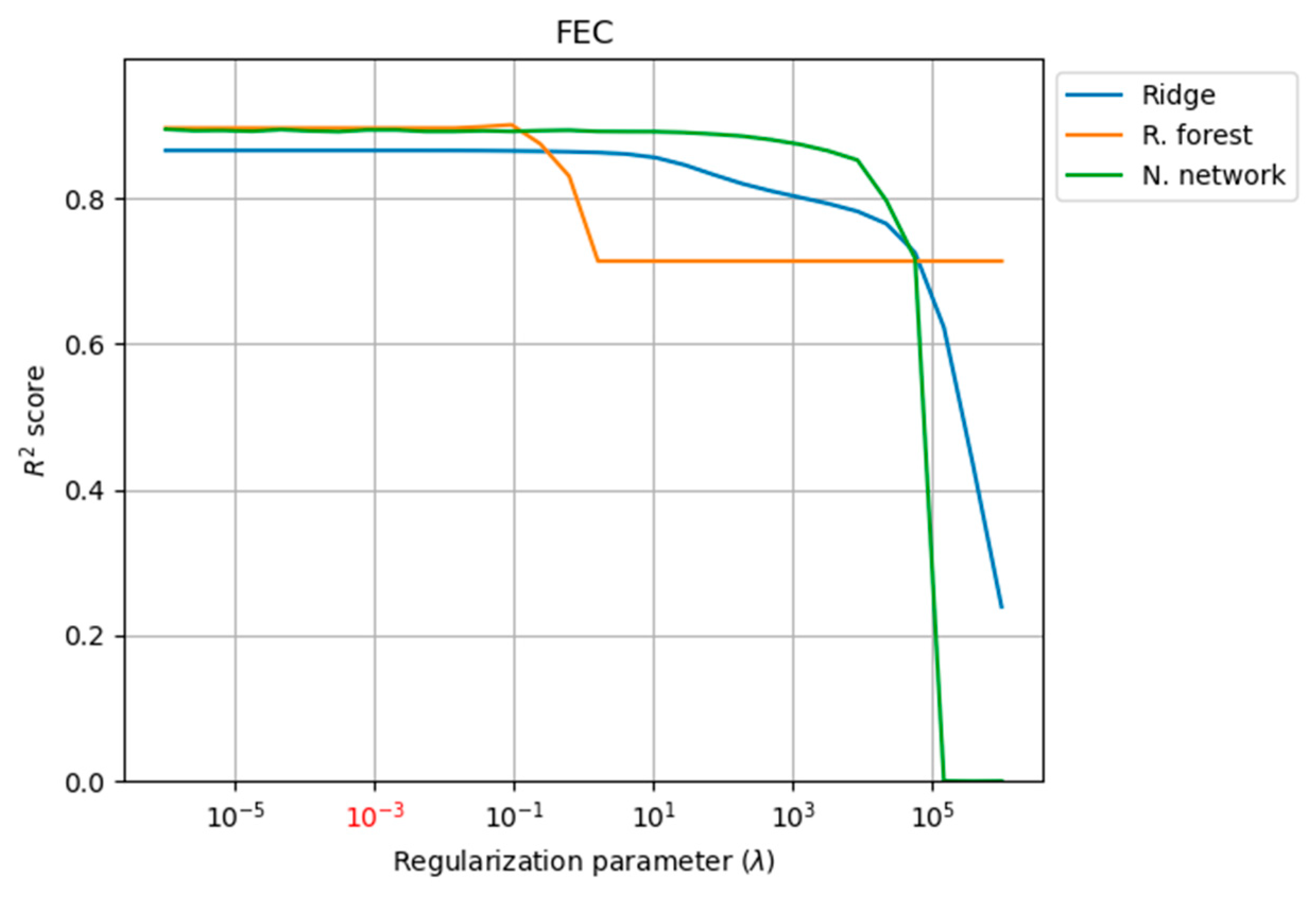

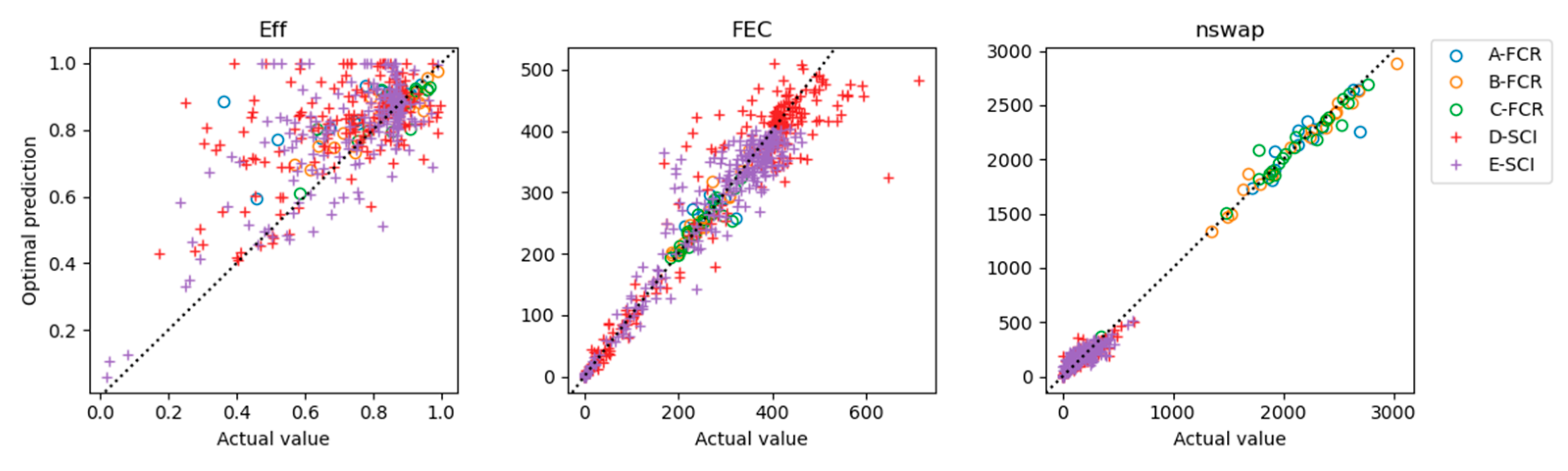

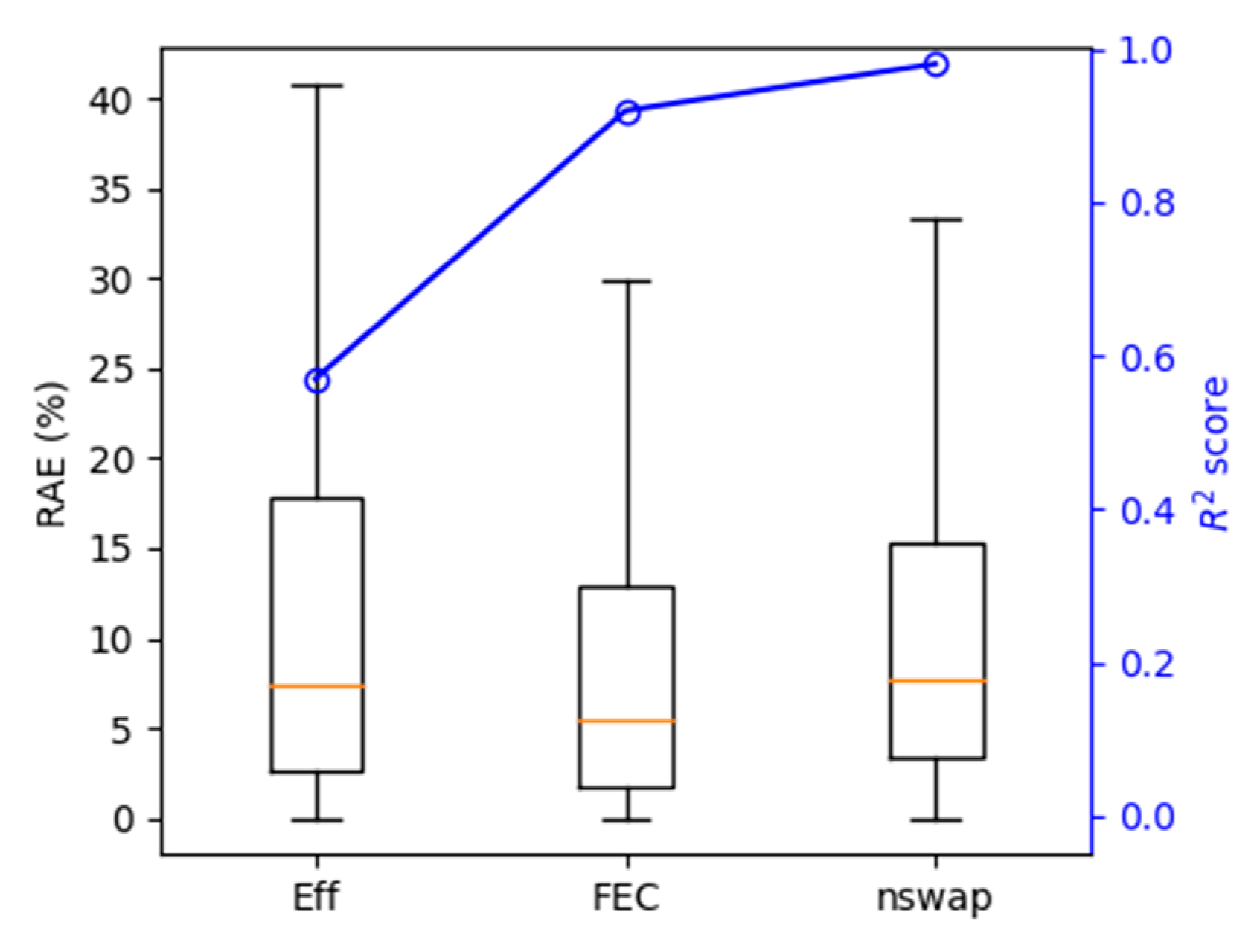

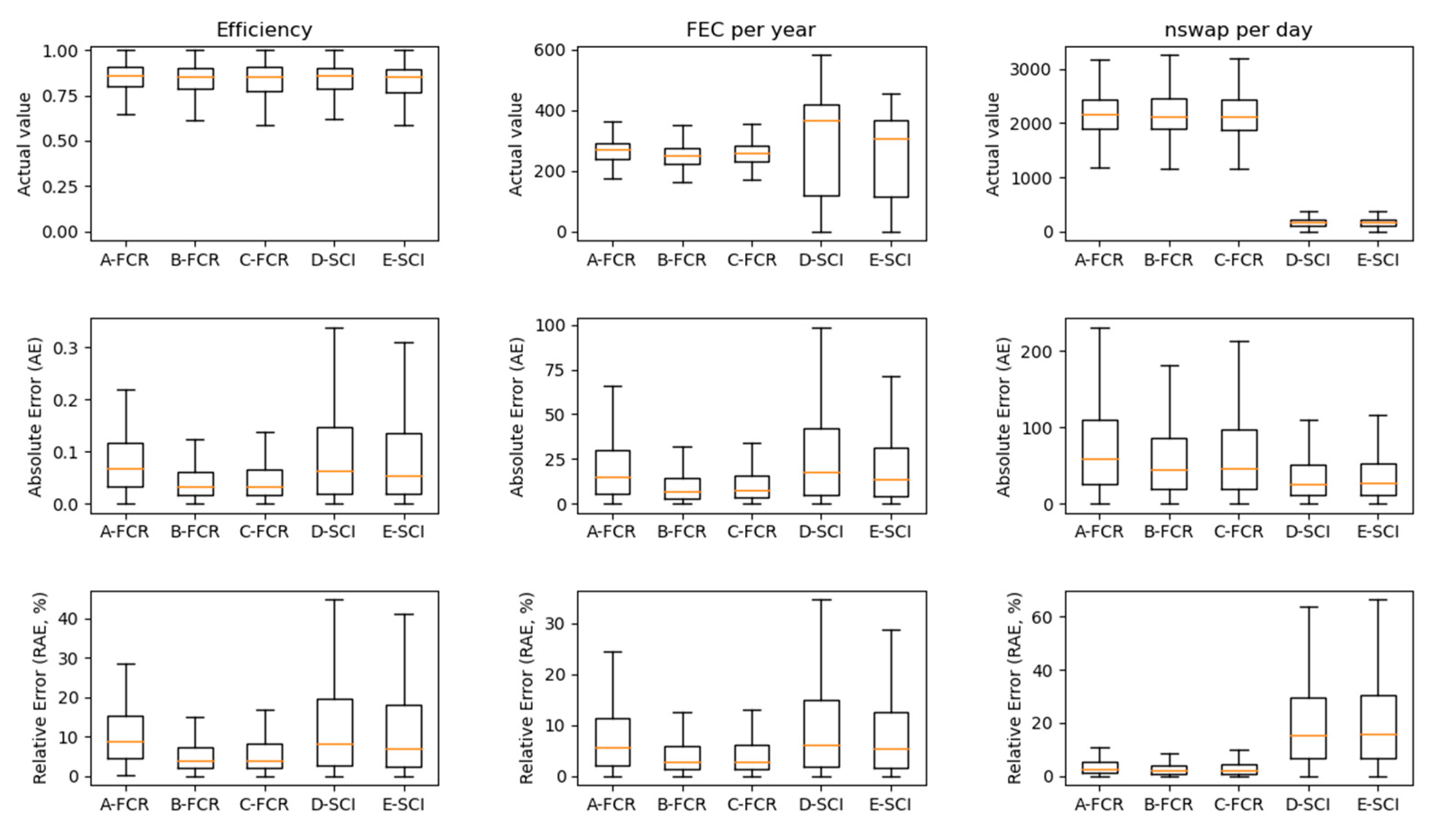

3.1. Optimal Models

3.2. Generalization of Predictions

4. Discussion

4.1. Interpreting Results

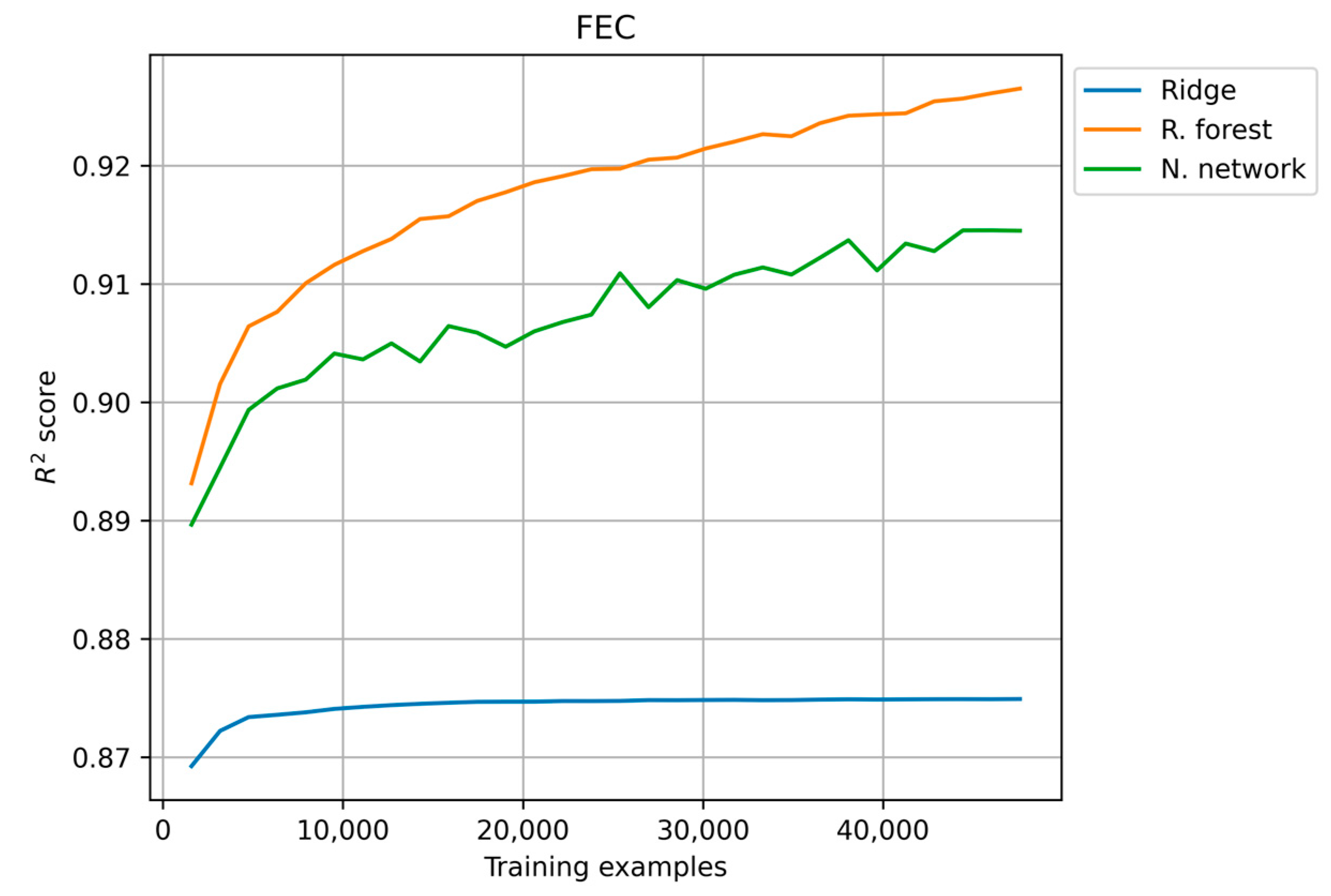

4.2. Learning Curves

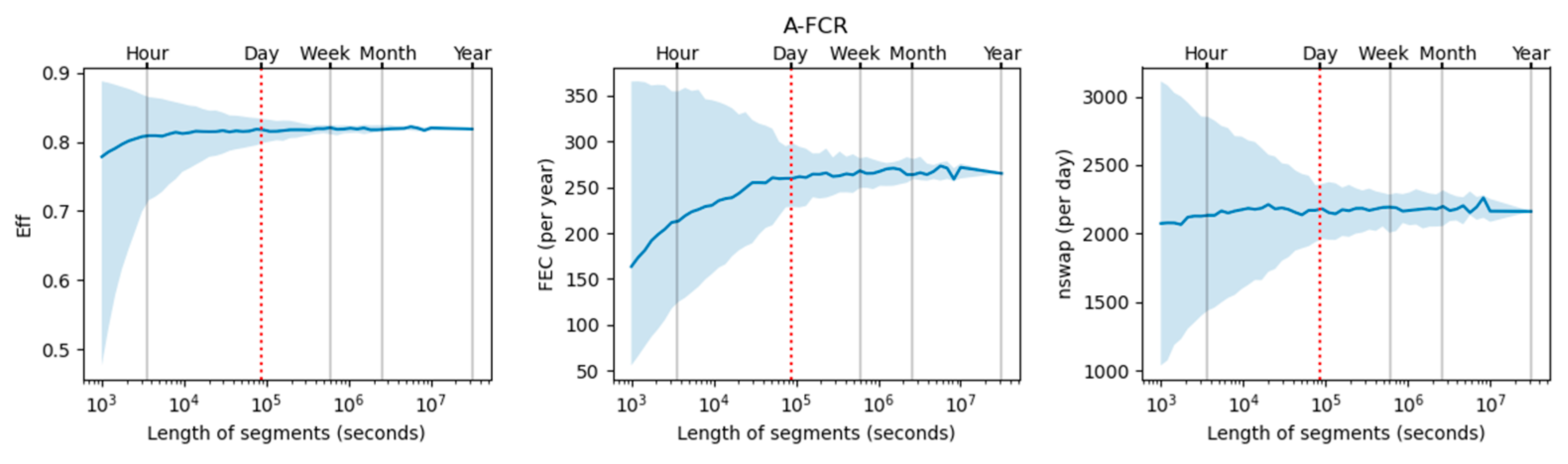

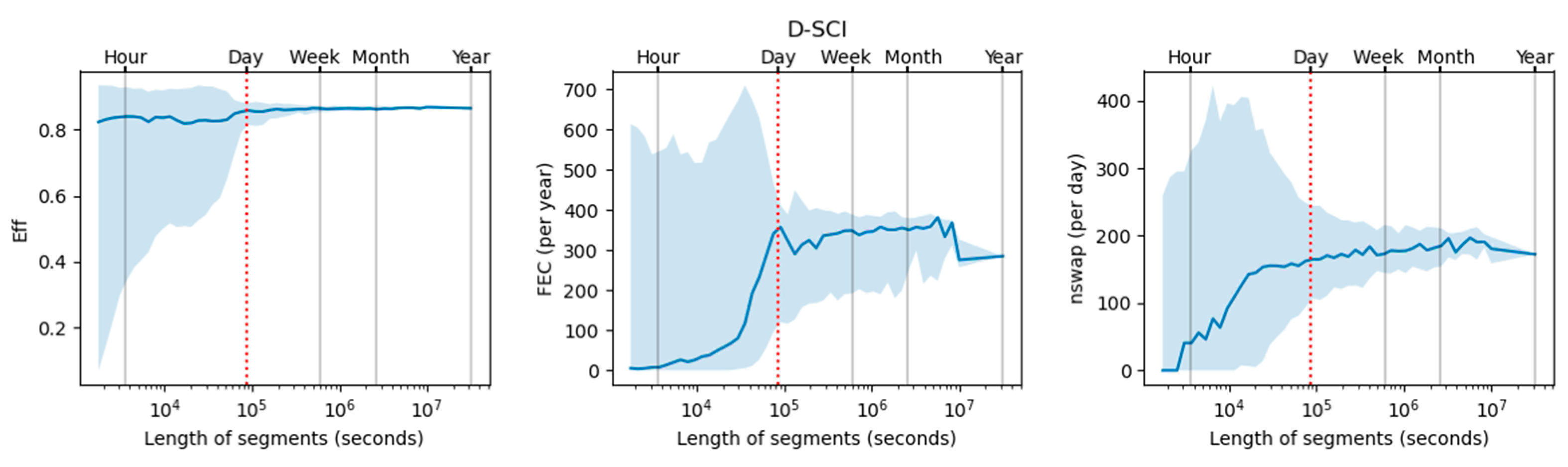

4.3. Length of Input Profiles

4.4. Sampling Times

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhao, Y.; Pohl, O.; Bhatt, A.I.; Collis, G.E.; Mahon, P.J.; Rüther, T.; Hollenkamp, A.F. A Review on Battery Market Trends, Second-Life Reuse, and Recycling. Sustain. Chem. 2021, 2, 167–205.

- Figgener, J.; Stenzel, P.; Kairies, K.-P.; Linßen, J.; Haberschusz, D.; Wessels, O.; Angenendt, G.; Robinius, M.; Stolten, D.; Sauer, D.U. The Development of Stationary Battery Storage Systems in Germany–A Market Review. J. Energy Storage 2020, 29, 101153.

- Figgener, J.; Hecht, C.; Haberschusz, D.; Bors, J.; Spreuer, K.G.; Kairies, K.-P.; Stenzel, P.; Sauer, D.U. The Development of Battery Storage Systems in Germany: A Market Review (Status 2023). arXiv 2023, arXiv:2203.06762v3.

- Han, B.; Bompard, E.; Profumo, F.; Xia, Q. Paths toward Smart Energy: A Framework for Comparison of the EU and China Energy Policy. IEEE Trans. Sustain. Energy 2014, 5, 423–433.

- Luís Schaefer, J.; Siluk, J.C.M.; Siluk, M.; De Carvalho, P.S. Critical Success Factors for the Implementation and Management of Energy Cloud Environments. Int. J. Energy Res. 2022, 46, 13752–13768.

- Alhasnawi, B.N.; Jasim, B.H.; Esteban, M.D.; Guerrero, J.M. A Novel Smart Energy Management as a Service over a Cloud Computing Platform for Nanogrid Appliances. Sustainability 2020, 12, 9686.

- ENTSO-E. Commission Regulation (EU) 2017/1485 of 2 August 2017 Establishing a Guideline on Electricity Transmission System Operation; ENTSO-E: Brussels, Belgium, 2017.

- Hesse, H.C.; Schimpe, M.; Kucevic, D.; Jossen, A. Lithium-Ion Battery Storage for the Grid—A Review of Stationary Battery Storage System Design Tailored for Applications in Modern Power Grids. Energies 2017, 10, 2107.

- Resch, M.; Buehler, J.; Klausen, M.; Sumper, A. Impact of Operation Strategies of Large Scale Battery Systems on Distribution Grid Planning in Germany. Renew. Sustain. Energy Rev. 2017, 74, 1042–1063.

- Henni, S.; Becker, J.; Staudt, P.; vom Scheidt, F.; Weinhardt, C. Industrial Peak Shaving with Battery Storage Using a Probabilistic Forecasting Approach: Economic Evaluation of Risk Attitude. Appl. Energy 2022, 327, 120088.

- Gong, H.; Ionel, D.M. Improving the Power Outage Resilience of Buildings with Solar PV through the Use of Battery Systems and EV Energy Storage. Energies 2021, 14, 5749.

- Lund, P.D. Improving the Economics of Battery Storage. Joule 2020, 4, 2543–2545.

- Eyer, J.; Corey, G. Energy Storage for the Electricity Grid: Benefits and Market Potential Assessment Guide. Sandia Natl. Lab. 2010, 20, 5.

- Schmidt, A.P.; Bitzer, M.; Imre, Á.W.; Guzzella, L. Experiment-Driven Electrochemical Modeling and Systematic Parameterization for a Lithium-Ion Battery Cell. J. Power Sources 2010, 195, 5071–5080.

- Liu, K.; Gao, Y.; Zhu, C.; Li, K.; Fei, M.; Peng, C.; Zhang, X.; Han, Q.-L. Electrochemical Modeling and Parameterization towards Control-Oriented Management of Lithium-Ion Batteries. Control Eng. Pract. 2022, 124, 105176.

- Miranda, D.; Gonçalves, R.; Wuttke, S.; Costa, C.M.; Lanceros-Méndez, S. Overview on Theoretical Simulations of Lithium-Ion Batteries and Their Application to Battery Separators. Adv. Energy Mater. 2023, 13, 2203874.

- Xia, L.; Najafi, E.; Li, Z.; Bergveld, H.J.; Donkers, M.C.F. A Computationally Efficient Implementation of a Full and Reduced-Order Electrochemistry-Based Model for Li-Ion Batteries. Appl. Energy 2017, 208, 1285–1296.

- Moškon, J.; Gaberšček, M. Transmission Line Models for Evaluation of Impedance Response of Insertion Battery Electrodes and Cells. J. Power Sources Adv. 2021, 7, 100047.

- Möller, M.; Kucevic, D.; Collath, N.; Parlikar, A.; Dotzauer, P.; Tepe, B.; Englberger, S.; Jossen, A.; Hesse, H. SimSES: A Holistic Simulation Framework for Modeling and Analyzing Stationary Energy Storage Systems. J. Energy Storage 2022, 49, 103743.

- Gasper, P.; Collath, N.; Hesse, H.C.; Jossen, A.; Smith, K. Machine-Learning Assisted Identification of Accurate Battery Lifetime Models with Uncertainty. J. Electrochem. Soc. 2022, 169, 80518.

- Aykol, M.; Herring, P.; Anapolsky, A. Machine Learning for Continuous Innovation in Battery Technologies. Nat. Rev. Mater. 2020, 5, 725–727.

- Wei, Z.; He, Q.; Zhao, Y. Machine Learning for Battery Research. J. Power Sources 2022, 549, 232125.

- Kucevic, D.; Tepe, B.; Englberger, S.; Parlikar, A.; Mühlbauer, M.; Bohlen, O.; Jossen, A.; Hesse, H. Standard Battery Energy Storage System Profiles: Analysis of Various Applications for Stationary Energy Storage Systems Using a Holistic Simulation Framework. J. Energy Storage 2020, 28, 101077.

- 50Hertz Transmission GmbH. Archiv Netzfrequenz (in German): Daten Der Entso-E; 50Hertz Transmission GmbH: Berlin, Germany, 2019; Available online: https://www.50hertz.com/de/Transparenz/Kennzahlen/Regelenergie/ArchivNetzfrequenz (accessed on 19 July 2023).

- Tjaden, T.; Bergner, J.; Weniger, J.; Quaschning, V.; Solarspeichersysteme, F. Repräsentative Elektrische Lastprofile Für Wohngebäude in Deutschland Auf 1-Sekündiger Datenbasis. Hochsch. Für Tech. Und Wirtsch. HTW Berl. 2015. Available online: https://solar.htw-berlin.de/elektrische-lastprofile-fuer-wohngebaeude/ (accessed on 19 July 2023).

- Truong, C.N.; Naumann, M.; Karl, R.C.; Müller, M.; Jossen, A.; Hesse, H.C. Economics of Residential Photovoltaic Battery Systems in Germany: The Case of Tesla’s Powerwall. Batteries 2016, 2, 14.

- Luque, J.; Personal, E.; Perez, F.; Romero-Ternero, M.; Leon, C. Low-Dimensional Representation of Monthly Electricity Demand Profiles. Eng. Appl. Artif. Intell. 2023, 119, 105728.

- Luque, J.; Carrasco, A.; Personal, E.; Pérez, F.; León, C. Customer Identification for Electricity Retailers Based on Monthly Demand Profiles by Activity Sectors and Locations. IEEE Trans. Power Syst. 2023.

- Luque, J.; Personal, E.; Garcia-Delgado, A.; Leon, C. Monthly Electricity Demand Patterns and Their Relationship with the Economic Sector and Geographic Location. IEEE Access 2021, 9, 86254–86267.

- Yan, X.; Jia, M. A Novel Optimized SVM Classification Algorithm with Multi-Domain Feature and Its Application to Fault Diagnosis of Rolling Bearing. Neurocomputing 2018, 313, 47–64.

- 50Hertz Transmission GmbH. Prequalification Process for Balancing Service Providers (FCR, aFRR, mFRR) in Germany. June 2022. Available online: https://www.regelleistung.net/xspproxy/api/StaticFiles/Regelleistung/Infos_f%C3%BCr_Anbieter/Wie_werde_ich_Regelenergieanbieter_Pr%C3%A4qualifikation/Pr%C3%A4qualifikationsbedingungen_FCR_aFRR_mFRR/PQ-Bedingungen-03.06.2022(englisch).pdf (accessed on 19 July 2023).

- Naumann, M.; Spingler, F.B.; Jossen, A. Analysis and Modeling of Cycle Aging of a Commercial LiFePO4/Graphite Cell. J. Power Sources 2020, 451, 227666.

- Naumann, M.; Schimpe, M.; Keil, P.; Hesse, H.C.; Jossen, A. Analysis and Modeling of Calendar Aging of a Commercial LiFePO4/Graphite Cell. J. Energy Storage 2018, 17, 153–169.

- Di Bucchianico, A. Coefficient of Determination (R2). Encycl. Stat. Qual. Reliab. 2008.

- McDonald, G.C. Ridge Regression. Wiley Interdiscip. Rev. Comput. Stat. 2009, 1, 93–100.

- Segal, M.R. Machine Learning Benchmarks and Random Forest Regression; UCSF: San Francisco, CA, USA, 2004; Available online: https://escholarship.org/uc/item/35x3v9t4 (accessed on 19 July 2023).

- Specht, D.F. A General Regression Neural Network. IEEE Trans. Neural Netw. 1991, 2, 568–576.

- Arlot, S.; Celisse, A. A Survey of Cross-Validation Procedures for Model Selection. Stat. Surv. 2010, 4, 40–79.

| Scenario | A-FCR | B-FCR | C-FCR | D-SCI | E-SCI |
|---|---|---|---|---|---|
| Application | FCR | FCR | FCR | SCI | SCI |
| Battery type | LFP:C | LFP:C | NMC:C | LFP:C | LFP:C |
| Storage capacity | 1.6 MWh | 1.6 MWh | 1.6 MWh | 5 kWh | 5 kWh |
| Maximum power | 1.6 MW | 1.6 MW | 1.6 MW | 5 kW | 5 kW |
| Power electronics | Single unit | 3 modular | 3 modular | Single unit | Single unit |
| Operation strategy | FCR regulations Germany (status 2021) | FCR regulations Germany (status 2021) | FCR regulations Germany (status 2021) | Greedy | Feed-in damp |

| Name | Description |
|---|---|
| | Energy storage capacity of the battery |
| | Positive energy (supplied to the battery); when |
| | Negative energy (drawn from the battery); when |
| | SOC at the start of the signal |
| | SOC at the end of the signal |

| Name | Description | Expression |
|---|---|---|
| η | Efficiency | |
| FEC | Full Equivalent Cycles | |
| ηswap | Number of swaps (charging-discharging) | Defined in [23] |

| KPI | Single target: Ridge | Single target: R. Forest | Single target: N. Network | Multi-target: Ridge | Multi-target: R. Forest | Multi-target: N. Network |
|---|---|---|---|---|---|---|
| η | 0.011 | 0.122 | −0.064 | 0.016 | 0.570 | −0.034 |
| FEC | 0.811 | 0.927 | 0.918 | 0.866 | 0.919 | 0.902 |
| ηswap | 0.954 | 0.985 | 0.965 | 0.953 | 0.980 | 0.962 |
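
The comparison in the table above can be read as a simple selection problem: for each KPI, pick the combination of algorithm and target mode with the highest score. The following minimal sketch does that over the tabulated values. It assumes the entries are scores for which higher is better (such as R2); the names `SCORES` and `best_regressor` are hypothetical and only serve this illustration.

```python
# Scores copied from the comparison table above.
# Assumption: higher is better (e.g. an R2-type score).
SCORES = {
    "η": {
        ("single", "Ridge"): 0.011,
        ("single", "R. Forest"): 0.122,
        ("single", "N. Network"): -0.064,
        ("multi", "Ridge"): 0.016,
        ("multi", "R. Forest"): 0.570,
        ("multi", "N. Network"): -0.034,
    },
    "FEC": {
        ("single", "Ridge"): 0.811,
        ("single", "R. Forest"): 0.927,
        ("single", "N. Network"): 0.918,
        ("multi", "Ridge"): 0.866,
        ("multi", "R. Forest"): 0.919,
        ("multi", "N. Network"): 0.902,
    },
    "ηswap": {
        ("single", "Ridge"): 0.954,
        ("single", "R. Forest"): 0.985,
        ("single", "N. Network"): 0.965,
        ("multi", "Ridge"): 0.953,
        ("multi", "R. Forest"): 0.980,
        ("multi", "N. Network"): 0.962,
    },
}


def best_regressor(scores):
    """Return {kpi: (target_mode, algorithm, score)} for the top score per KPI."""
    best = {}
    for kpi, candidates in scores.items():
        # max over (target_mode, algorithm) keys, ranked by their score
        (mode, algorithm), score = max(candidates.items(), key=lambda kv: kv[1])
        best[kpi] = (mode, algorithm, score)
    return best


if __name__ == "__main__":
    for kpi, (mode, algorithm, score) in best_regressor(SCORES).items():
        print(f"{kpi}: {algorithm} ({mode}-target), score {score:.3f}")
```

Under that assumption, the random forest comes out on top for all three KPIs, in multi-target mode for η and in single-target mode for FEC and ηswap.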

| KPI | MAE | MARE | R² score | Optimal regressor: algorithm | Optimal regressor: targets |
|---|---|---|---|---|---|
| η | 0.056 | 7.38% | 0.570 | R. forest | Multi-target |
| FEC | 14.42 | 5.42% | 0.919 | R. forest | Single target |
| ηswap | 28.04 | 7.72% | 0.980 | R. forest | Single target |
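
The three metrics reported above can be computed from paired true and predicted values. The sketch below uses the textbook definitions of the mean absolute error (MAE) and the coefficient of determination (R2), and it assumes that MARE denotes the mean absolute relative error, i.e. the mean of |true − predicted| / |true|; the article's own expressions are not reproduced here, and the function names and sample values are hypothetical.

```python
from math import fsum


def mae(y_true, y_pred):
    """Mean absolute error."""
    return fsum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mare(y_true, y_pred):
    """Mean absolute relative error (assumed definition).

    Requires every true value to be non-zero.
    """
    return fsum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = fsum(y_true) / len(y_true)
    ss_res = fsum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = fsum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Made-up values for illustration only (not taken from the article).
    y_true = [100.0, 200.0, 300.0, 400.0]
    y_pred = [110.0, 190.0, 330.0, 380.0]
    print(f"MAE  = {mae(y_true, y_pred):.2f}")
    print(f"MARE = {mare(y_true, y_pred):.2%}")
    print(f"R2   = {r2_score(y_true, y_pred):.3f}")
```

With this definition, R2 becomes negative whenever a model predicts worse than simply returning the mean of the true values, which is how a negative score for a regressor should be read.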

| Algorithm | Processing time (absolute) | Processing time (relative) |
|---|---|---|
| Feature extraction | 2391 ms | 99.41% |
| KPI estimation | 14 ms | 0.59% |
| Total | 2406 ms | 100.00% |

| KPI | R. Forest | N. Network |
|---|---|---|
| 47,401 | | |
| 47,401 | | |
| 47,401 | | |
| Multi-target | | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Luque, J.; Tepe, B.; Larios, D.; León, C.; Hesse, H. Machine Learning Estimation of Battery Efficiency and Related Key Performance Indicators in Smart Energy Systems. Energies 2023, 16, 5548. https://doi.org/10.3390/en16145548
