A Multi-Agent Reinforcement Learning Method for Cooperative Secondary Voltage Control of Microgrids

Abstract

:1. Introduction

2. Problem Formulation

2.1. Preliminaries for POMDP

2.2. Microgrid Voltage Secondary Control

3. Methods

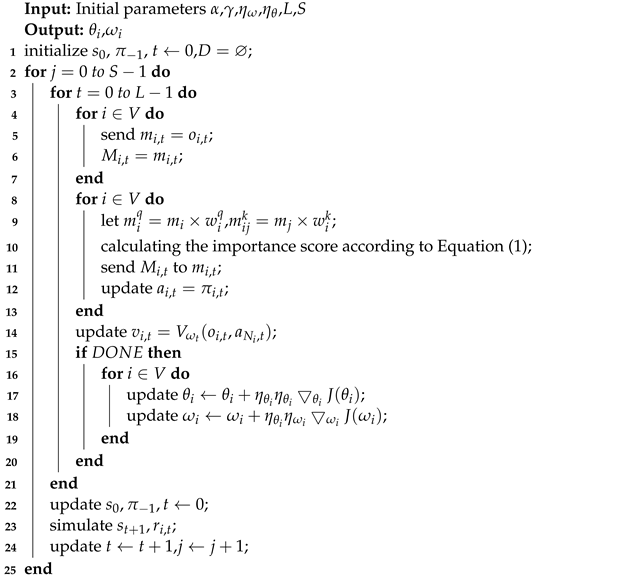

3.1. MA2C Algorithm

3.2. Attention Mechanisms

| Algorithm 1: AM-MA2C algorithm |

|

4. Simulation

5. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, H.; Li, F.; Xu, Y.; Rizy, D.T.; Adhikari, S. Autonomous and adaptive voltage control using multiple distributed energy resources. IEEE Trans. Power Syst. 2012, 28, 718–730. [Google Scholar] [CrossRef]

- Olivares, D.E.; Mehrizi-Sani, A.; Etemadi, A.H.; Cañizares, C.A.; Iravani, R.; Kazerani, M.; Hajimiragha, A.H.; Gomis-Bellmunt, O.; Saeedifard, M.; Palma-Behnke, R.; et al. Trends in microgrid control. IEEE Trans. Smart Grid 2014, 5, 1905–1919. [Google Scholar] [CrossRef]

- Korres, G.N.; Manousakis, N.M.; Xygkis, T.C.; Löfberg, J. Optimal phasor measurement unit placement for numerical observability in the presence of conventional measurements using semi-definite programming. IET Gener. Transm. Distrib. 2015, 9, 2427–2436. [Google Scholar] [CrossRef]

- Su, H.Y.; Kang, F.M.; Liu, C.W. Transmission grid secondary voltage control method using PMU data. IEEE Trans. Smart Grid 2016, 9, 2908–2917. [Google Scholar] [CrossRef]

- Wu, D.; Tang, F.; Dragicevic, T.; Vasquez, J.C.; Guerrero, J.M. A control architecture to coordinate renewable energy sources and energy storage systems in islanded microgrids. IEEE Trans. Smart Grid 2014, 6, 1156–1166. [Google Scholar] [CrossRef] [Green Version]

- Bidram, A.; Davoudi, A. Hierarchical structure of microgrids control system. IEEE Trans. Smart Grid 2012, 3, 1963–1976. [Google Scholar] [CrossRef]

- She, B.; Li, F.; Cui, H.; Wang, J.; Min, L.; Oboreh-Snapps, O.; Bo, R. Decentralized and coordinated V-f control for islanded microgrids considering der inadequacy and demand control. IEEE Trans. Energy Convers. 2023, 1–13, early access. [Google Scholar] [CrossRef]

- Rokrok, E.; Shafie-Khah, M.; Catalão, J.P. Review of primary voltage and frequency control methods for inverter-based islanded microgrids with distributed generation. Renew. Sustain. Energy Rev. 2018, 82, 3225–3235. [Google Scholar] [CrossRef]

- Xie, P.; Guerrero, J.M.; Tan, S.; Bazmohammadi, N.; Vasquez, J.C.; Mehrzadi, M.; Al-Turki, Y. Optimization-based power and energy management system in shipboard microgrid: A review. IEEE Syst. J. 2022, 16, 578–590. [Google Scholar] [CrossRef]

- Singh, P.; Paliwal, P.; Arya, A. A review on challenges and techniques for secondary control of microgrid. IOP Conf. Ser. Mater. Sci. Eng. 2019, 561, 012075. [Google Scholar] [CrossRef] [Green Version]

- Babayomi, O.; Zhang, Z.; Dragicevic, T.; Heydari, R.; Li, Y.; Garcia, C.; Rodriguez, J.; Kennel, R. Advances and opportunities in the model predictive control of microgrids: Part II–Secondary and tertiary layers. Int. J. Electr. Power Energy Syst. 2022, 134, 107339. [Google Scholar] [CrossRef]

- Bidram, A.; Davoudi, A.; Lewis, F.L.; Guerrero, J.M. Distributed cooperative secondary control of microgrids using feedback linearization. IEEE Trans. Power Syst. 2013, 28, 3462–3470. [Google Scholar] [CrossRef] [Green Version]

- Ning, B.; Han, Q.L.; Ding, L. Distributed finite-time secondary frequency and voltage control for islanded microgrids with communication delays and switching topologies. IEEE Trans. Cybern. 2020, 51, 3988–3999. [Google Scholar] [CrossRef] [PubMed]

- Mu, C.; Zhang, Y.; Jia, H.; He, H. Energy-storage-based intelligent frequency control of microgrid with stochastic model uncertainties. IEEE Trans. Smart Grid 2019, 11, 1748–1758. [Google Scholar] [CrossRef]

- Moharm, K. State of the art in big data applications in microgrid: A review. Adv. Eng. Inform. 2019, 42, 100945. [Google Scholar] [CrossRef]

- Mohammadi, F.; Mohammadi-Ivatloo, B.; Gharehpetian, G.B.; Ali, M.H.; Wei, W.; Erdinç, O.; Shirkhani, M. Robust control strategies for microgrids: A review. IEEE Syst. J. 2022, 16, 2401–2412. [Google Scholar] [CrossRef]

- Mu, C.; Wang, K.; Ma, S.; Chong, Z.; Ni, Z. Adaptive composite frequency control of power systems using reinforcement learning. CAAI Trans. Intell. Technol. 2022, 7, 671–684. [Google Scholar] [CrossRef]

- Nikmehr, N.; Ravadanegh, S.N. Optimal power dispatch of multi-microgrids at future smart distribution grids. IEEE Trans. Smart Grid 2015, 6, 1648–1657. [Google Scholar] [CrossRef]

- Wei, F.; Wan, Z.; He, H. Cyber-attack recovery strategy for smart grid based on deep reinforcement learning. IEEE Trans. Smart Grid 2019, 11, 2476–2486. [Google Scholar] [CrossRef]

- Zhang, Q.; Dehghanpour, K.; Wang, Z.; Qiu, F.; Zhao, D. Multi-agent safe policy learning for power management of networked microgrids. IEEE Trans. Smart Grid 2020, 12, 1048–1062. [Google Scholar] [CrossRef]

- Han, X.; Mu, C.; Yan, J.; Niu, Z. An autonomous control technology based on deep reinforcement learning for optimal active power dispatch. Int. J. Electr. Power Energy Syst. 2023, 145, 108686. [Google Scholar] [CrossRef]

- Duan, J.; Shi, D.; Diao, R.; Li, H.; Wang, Z.; Zhang, B.; Bian, D.; Yi, Z. Deep-reinforcement-learning-based autonomous voltage control for power grid operations. IEEE Trans. Power Syst. 2019, 35, 814–817. [Google Scholar] [CrossRef]

- Gao, Y.; Wang, W.; Yu, N. Consensus multi-agent reinforcement learning for volt-var control in power distribution networks. IEEE Trans. Smart Grid 2021, 12, 3594–3604. [Google Scholar] [CrossRef]

- Cao, D.; Zhao, J.; Hu, W.; Ding, F.; Huang, Q.; Chen, Z. Attention enabled multi-agent DRL for decentralized volt-VAR control of active distribution system using PV inverters and SVCs. IEEE Trans. Sustain. Energy 2021, 12, 1582–1592. [Google Scholar] [CrossRef]

- Zhang, X.; Liu, Y.; Duan, J.; Qiu, G.; Liu, T.; Liu, J. DDPG-based multi-agent framework for SVC tuning in urban power grid with renewable energy resources. IEEE Trans. Power Syst. 2021, 36, 5465–5475. [Google Scholar] [CrossRef]

- Singh, V.P.; Kishor, N.; Samuel, P. Distributed multi-agent system-based load frequency control for multi-area power system in smart grid. IEEE Trans. Ind. Electron. 2017, 64, 5151–5160. [Google Scholar] [CrossRef]

- Yu, T.; Wang, H.; Zhou, B.; Chan, K.W.; Tang, J. Multi-agent correlated equilibrium Q(λ) learning for coordinated smart generation control of interconnected power grids. IEEE Trans. Power Syst. 2014, 30, 1669–1679. [Google Scholar] [CrossRef]

- Oroojlooy, A.; Hajinezhad, D. A review of cooperative multi-agent deep reinforcement learning. Appl. Intell. 2022, 53, 13677–13722. [Google Scholar] [CrossRef]

- Wang, J.; Xu, W.; Gu, Y.; Song, W.; Green, T.C. Multi-agent reinforcement learning for active voltage control on power distribution networks. Adv. Neural Inf. Process. Syst. 2021, 34, 3271–3284. [Google Scholar]

- Zhang, J.; Lu, C.; Si, J.; Song, J.; Su, Y. Deep reinforcement leaming for short-term voltage control by dynamic load shedding in china southem power grid. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; IEEE: Manhattan, NY, USA, 2018; pp. 1–8. [Google Scholar]

- Wei, Y.; Yu, F.R.; Song, M.; Han, Z. User scheduling and resource allocation in HetNets with hybrid energy supply: An actor-critic reinforcement learning approach. IEEE Trans. Wirel. Commun. 2017, 17, 680–692. [Google Scholar] [CrossRef]

- Bidram, A.; Lewis, F.L.; Davoudi, A.; Qu, Z. Frequency control of electric power microgrids using distributed cooperative control of multi-agent systems. In Proceedings of the 2013 IEEE International Conference on Cyber Technology in Automation, Control and Intelligent Systems, Nanjing, China, 26–29 May 2013; IEEE: Manhattan, NY, USA, 2013; pp. 223–228. [Google Scholar]

- Qu, Z.; Peng, J.C.H.; Yang, H.; Srinivasan, D. Modeling and analysis of inner controls effects on damping and synchronizing torque components in VSG-controlled converter. IEEE Trans. Energy Convers. 2020, 36, 488–499. [Google Scholar] [CrossRef]

- Guo, F.; Wen, C.; Mao, J.; Song, Y.D. Distributed secondary voltage and frequency restoration control of droop-controlled inverter-based microgrids. IEEE Trans. Ind. Electron. 2014, 62, 4355–4364. [Google Scholar] [CrossRef]

- Bidram, A.; Davoudi, A.; Lewis, F.L. A multiobjective distributed control framework for islanded AC microgrids. IEEE Trans. Ind. Inform. 2014, 10, 1785–1798. [Google Scholar] [CrossRef]

- Bakakeu, J.; Baer, S.; Klos, H.H.; Peschke, J.; Brossog, M.; Franke, J. Multi-agent reinforcement learning for the energy optimization of cyber-physical production systems. In Proceedings of the 2020 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), London, ON, Canada, 30 August–2 September 2020; pp. 143–163. [Google Scholar]

- Tomin, N.; Voropai, N.; Kurbatsky, V.; Rehtanz, C. Management of voltage flexibility from inverter-based distributed generation using multi-agent reinforcement learning. Energies 2021, 14, 8270. [Google Scholar] [CrossRef]

- Chu, T.; Wang, J.; Codecà, L.; Li, Z. Multi-agent deep reinforcement learning for large-scale traffic signal control. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1086–1095. [Google Scholar] [CrossRef] [Green Version]

- Zhou, Y.; Zhang, B.; Xu, C.; Lan, T.; Diao, R.; Shi, D.; Wang, Z.; Lee, W.J. A data-driven method for fast ac optimal power flow solutions via deep reinforcement learning. J. Mod. Power Syst. Clean Energy 2020, 8, 1128–1139. [Google Scholar] [CrossRef]

- Guo, M.H.; Xu, T.X.; Liu, J.J.; Liu, Z.N.; Jiang, P.T.; Mu, T.J.; Zhang, S.H.; Martin, R.R.; Cheng, M.M.; Hu, S.M. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368. [Google Scholar] [CrossRef]

- Cao, D.; Zhao, J.; Hu, W.; Ding, F.; Huang, Q.; Chen, Z.; Blaabjerg, F. Data-driven multi-agent deep reinforcement learning for distribution system decentralized voltage control with high penetration of PVs. IEEE Trans. Smart Grid 2021, 12, 4137–4150. [Google Scholar] [CrossRef]

- Mwakabuta, N.; Sekar, A. Comparative study of the IEEE 34 node test feeder under practical simplifications. In Proceedings of the 2007 39th North American Power Symposium, Manhattan, NY, USA, 30 September–2 October 2007; pp. 484–491. [Google Scholar]

- Shafiee, Q.; Guerrero, J.M.; Vasquez, J.C. Distributed secondary control for islanded microgrids-A novel approach. IEEE Trans. Power Electron. 2013, 29, 1018–1031. [Google Scholar] [CrossRef] [Green Version]

| DG1, DG2, DG3, DG4 | DG5, DG6 | |||

|---|---|---|---|---|

| DG | ||||

| 31.41 | 31.41 | |||

| 4 | 4 | |||

| 40 | 40 | |||

| Load1 | Load2 | Load3 | Load4 | |

| Load | ||||

| Random Seeds | AM-Attention | MA2C | IA2C |

|---|---|---|---|

| Case 1 | 0.24 | 0.18 | 0.19 |

| Case 2 | 0.23 | 0.21 | 0.18 |

| Case 3 | 0.22 | 0.21 | 0.19 |

| Case 4 | 0.24 | 0.22 | 0.19 |

| Case 5 | 0.22 | 0.21 | 0.20 |

| Average reward | 0.23 | 0.21 | 0.19 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, T.; Ma, S.; Tang, Z.; Xiang, T.; Mu, C.; Jin, Y. A Multi-Agent Reinforcement Learning Method for Cooperative Secondary Voltage Control of Microgrids. Energies 2023, 16, 5653. https://doi.org/10.3390/en16155653

Wang T, Ma S, Tang Z, Xiang T, Mu C, Jin Y. A Multi-Agent Reinforcement Learning Method for Cooperative Secondary Voltage Control of Microgrids. Energies. 2023; 16(15):5653. https://doi.org/10.3390/en16155653

Chicago/Turabian StyleWang, Tianhao, Shiqian Ma, Zhuo Tang, Tianchun Xiang, Chaoxu Mu, and Yao Jin. 2023. "A Multi-Agent Reinforcement Learning Method for Cooperative Secondary Voltage Control of Microgrids" Energies 16, no. 15: 5653. https://doi.org/10.3390/en16155653