Landfill Waste Segregation Using Transfer and Ensemble Machine Learning: A Convolutional Neural Network Approach

Abstract

1. Introduction

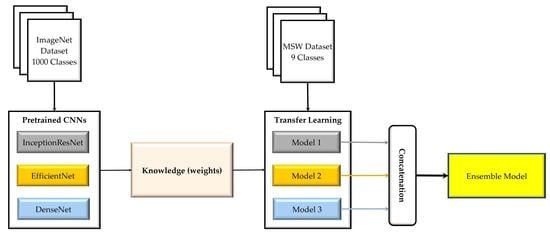

2. Pretrained CNN Models Architecture

2.1. Inception–ResNet

2.2. EfficientNet

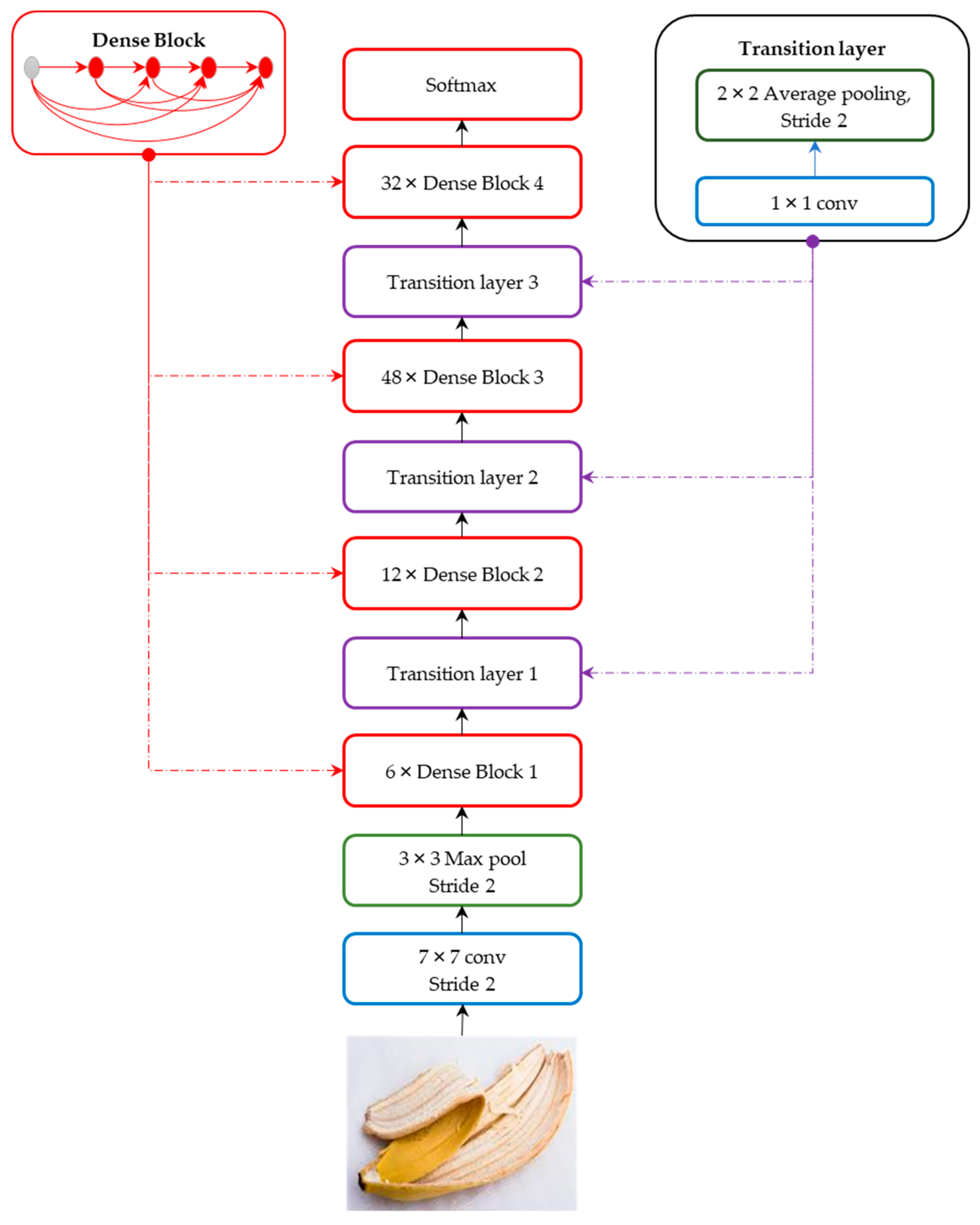

2.3. Densely Connected Convolutional Network (DenseNet)

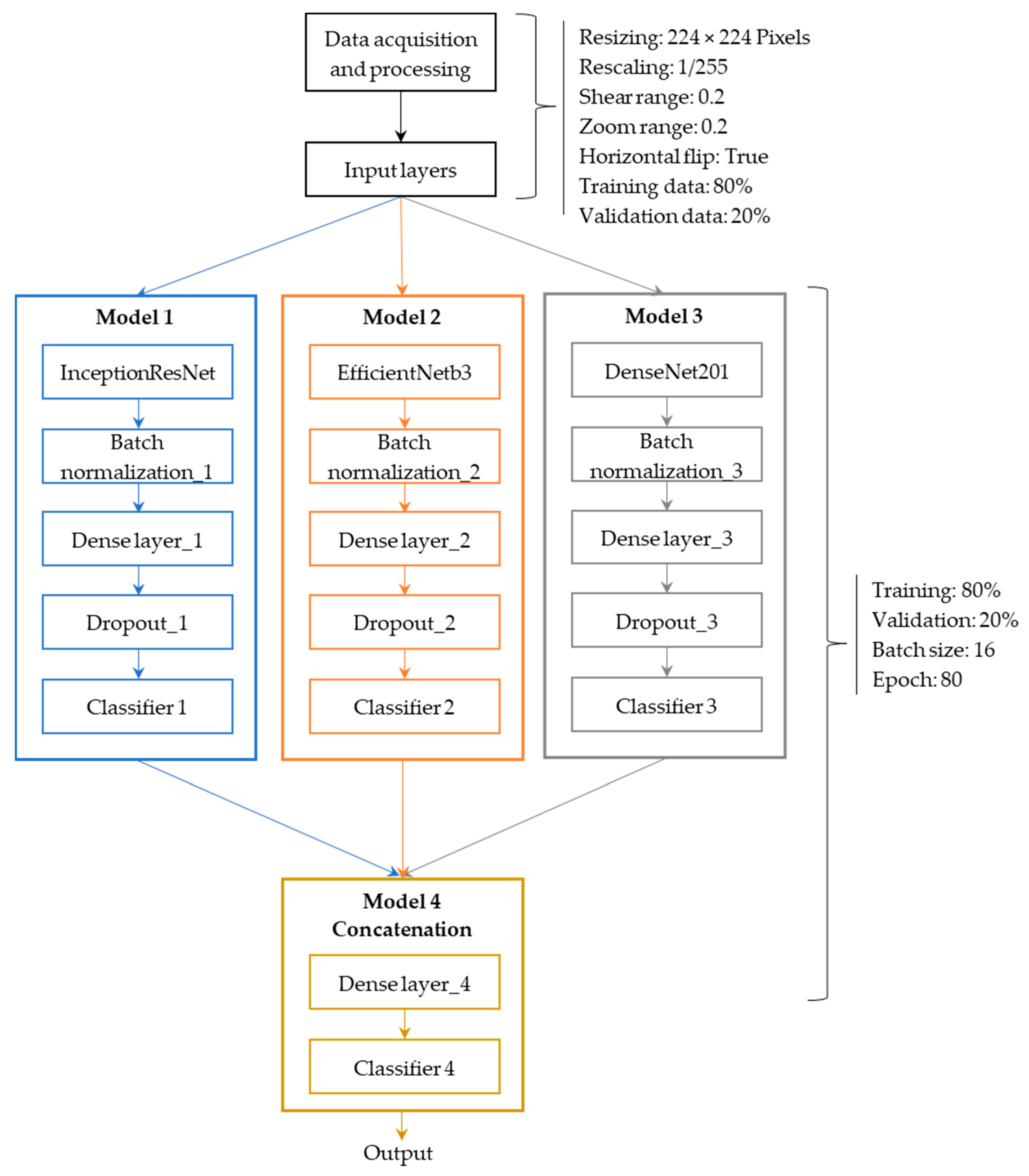

3. Methods

3.1. Deep Learning Libraries

3.2. Data Collection and Preprocessing

3.3. Ensemble Method

3.4. Experiment Setting

3.5. Performance Measures

4. Results and Discussion

4.1. Performance Metrics

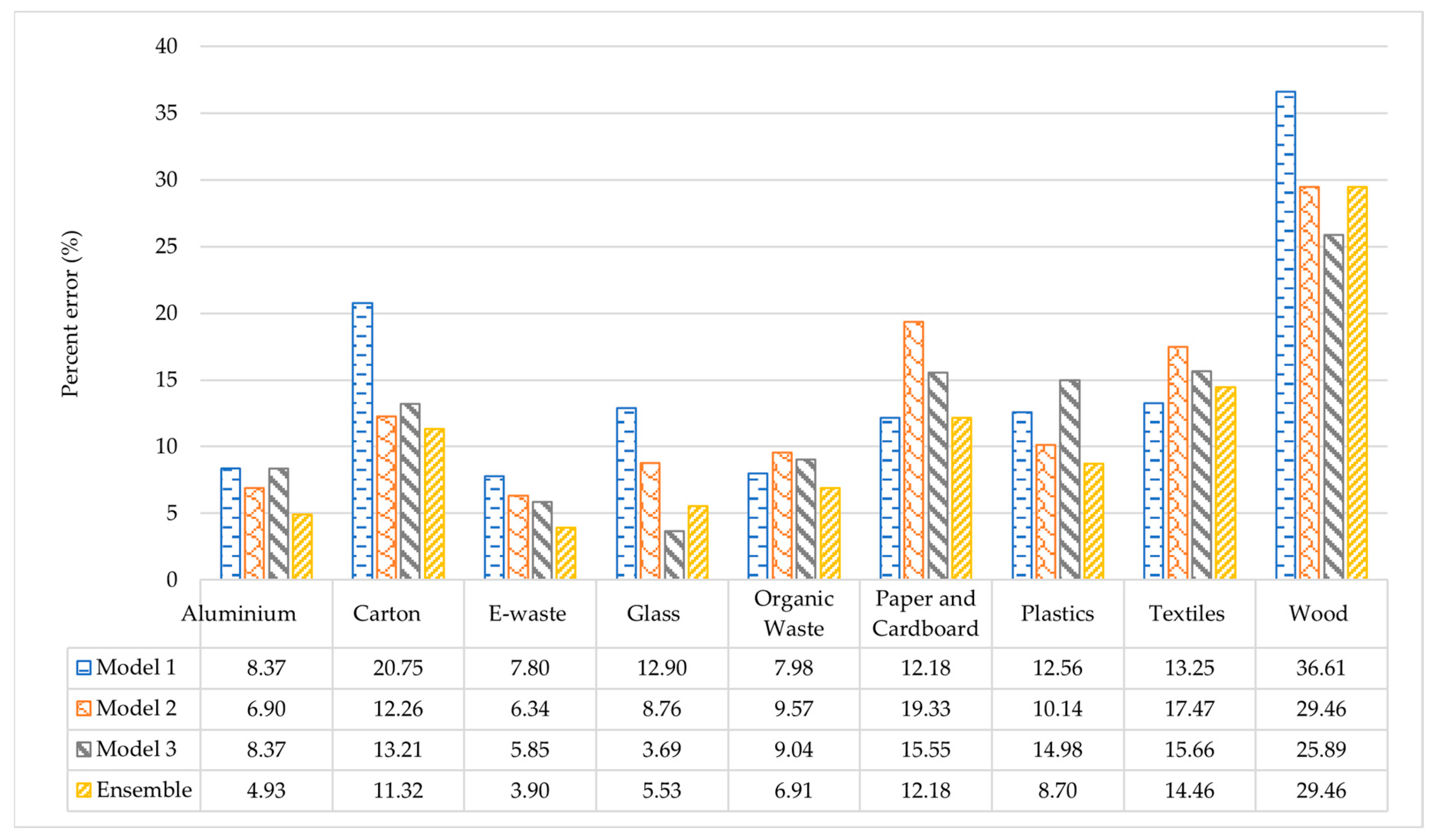

4.2. Error Per Class and Model

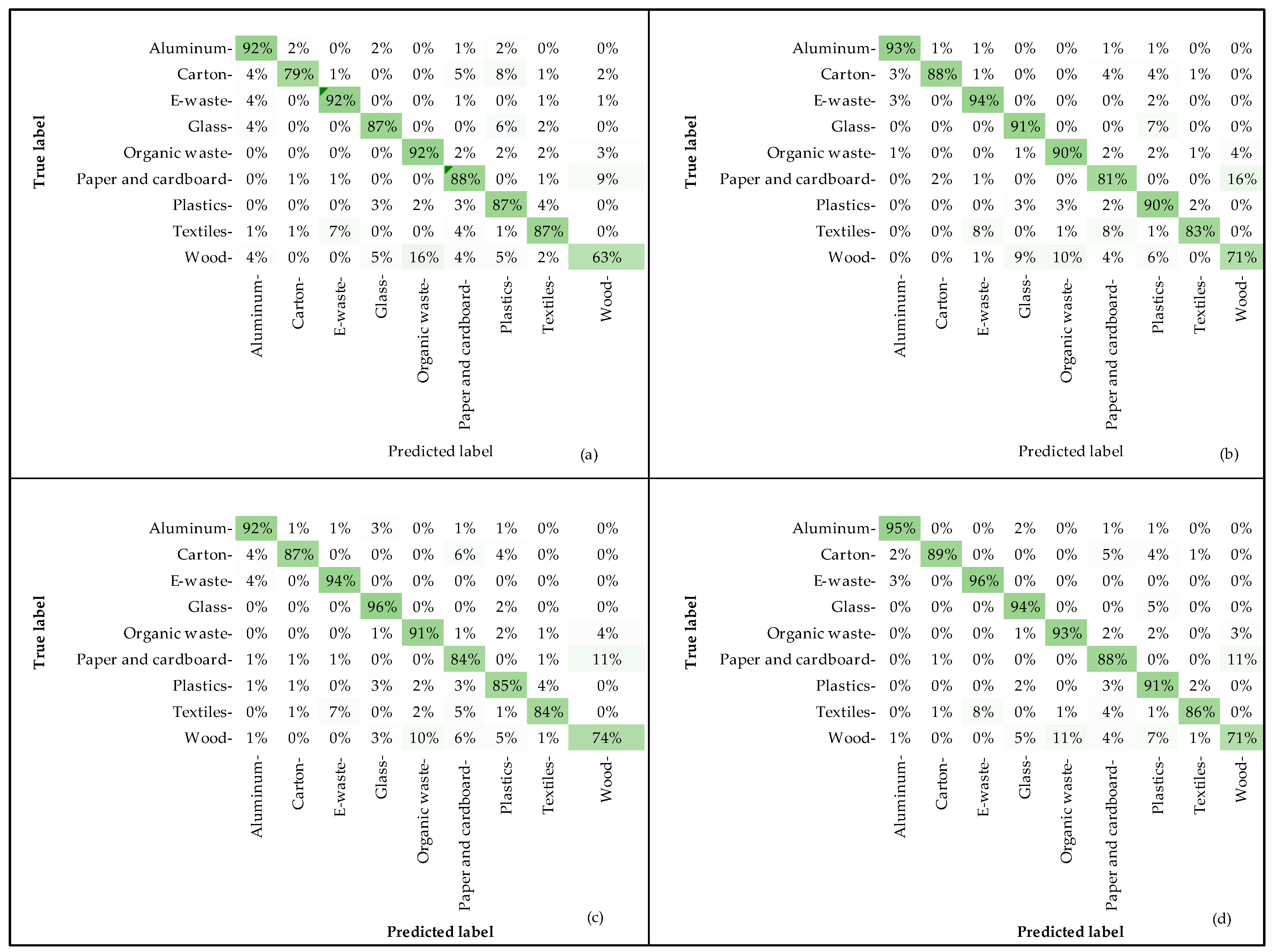

4.3. Confusion Matrix

4.4. Models Training Cost

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- US Environmental Protection Agency. National Overview: Facts and Figures on Materials, Wastes and Recycling. Available online: https://www.epa.gov/facts-and-figures-about-materials-waste-and-recycling/national-overview-facts-and-figures-materials (accessed on 1 March 2021).

- The World Bank. Solid Waste Management. Available online: https://www.worldbank.org/en/topic/urbandevelopment/brief/solid-waste-management (accessed on 24 March 2022).

- Ouedraogo, A.S.; Frazier, R.S.; Kumar, A. Comparative Life Cycle Assessment of Gasification and Landfilling for Disposal of Municipal Solid Wastes. Energies 2021, 14, 7032.

- Gyawali, D.; Regmi, A.; Shakya, A.; Gautam, A.; Shrestha, S. Comparative analysis of multiple deep CNN models for waste classification. arXiv 2020, arXiv:2004.02168.

- Chu, Y.; Huang, C.; Xie, X.; Tan, B.; Kamal, S.; Xiong, X. Multilayer hybrid deep-learning method for waste classification and recycling. Comput. Intell. Neurosci. 2018, 2018, 5060857.

- Huang, K.; Lei, H.; Jiao, Z.; Zhong, Z. Recycling waste classification using vision transformer on portable device. Sustainability 2021, 13, 11572.

- Zhang, Q.; Yang, Q.; Zhang, X.; Bao, Q.; Su, J.; Liu, X. Waste image classification based on transfer learning and convolutional neural network. Waste Manag. 2021, 135, 150–157.

- Deng, J.; Xu, W.-Y.; Zhou, C.-B. Investigation of waste classification and collection actual effect and the study of long acting management in the community of Beijing. Huan Jing Ke Xue 2013, 34, 395–400.

- Li, F.-F.J.D.; Olga, R.; Alex, B.; Kai, L. About ImageNet. Available online: https://www.image-net.org/about.php (accessed on 14 September 2022).

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Elhamraoui, Z. InceptionResNetV2 Simple Introduction. Available online: https://medium.com/@zahraelhamraoui1997/inceptionresnetv2-simple-introduction-9a2000edcdb6 (accessed on 7 July 2022).

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Zhang, P.; Yang, L.; Li, D. EfficientNet-B4-Ranger: A novel method for greenhouse cucumber disease recognition under natural complex environment. Comput. Electron. Agric. 2020, 176, 105652.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Google. Welcome to Colaboratory Google (Google Colab); Google: Mountain View, CA, USA, 2022.

- Keras. About Keras. Available online: https://keras.io/about/ (accessed on 14 September 2022).

- Sekar, S. Waste Classification Data; Kaggle: San Francisco, CA, USA, 2019.

- Azis, F.A.; Suhaimi, H.; Abas, E. Waste classification using convolutional neural network. In Proceedings of the 2020 2nd International Conference on Information Technology and Computer Communications, Online, 12–14 August 2020; pp. 9–13.

- Susanth, G.S.; Livingston, L.J.; Livingston, L.A. Garbage Waste Segregation Using Deep Learning Techniques. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Chennai, India, 2–3 October 2020; p. 012040.

- Rahman, M.W.; Islam, R.; Hasan, A.; Bithi, N.I.; Hasan, M.M.; Rahman, M.M. Intelligent waste management system using deep learning with IoT. J. King Saud Univ.-Comput. Inf. Sci. 2020, 34, 2072–2087.

- Mao, W.-L.; Chen, W.-C.; Wang, C.-T.; Lin, Y.-H. Recycling waste classification using optimized convolutional neural network. Resour. Conserv. Recycl. 2021, 164, 105132.

- Miko, P.; Shiela, M.M.; Hilary, R.; Mary, J.S. i-BIN: An Intelligent Trash Bin for Automatic Waste Segregation and Monitoring System. In Proceedings of the 2019 IEEE 11th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Laoag, Philippines, 29 November–1 December 2019.

- Ruiz, V.; Sánchez, Á.; Vélez, J.F.; Raducanu, B. Automatic image-based waste classification. In Proceedings of the International Work-Conference on the Interplay Between Natural and Artificial Computation, Almería, Spain, 3–7 June 2019; pp. 422–431.

- Geetha, S.; Saha, J.; Dasgupta, I.; Bera, R.; Lawal, I.A.; Kadry, S. Design of Waste Management System Using Ensemble Neural Networks. Designs 2022, 6, 27.

- David. Alternative to Colab Pro: Comparing Google’s Jupyter Notebooks to Gradient Notebooks (Updated). Available online: https://blog.paperspace.com/alternative-to-google-colab-pro/ (accessed on 26 December 2022).

- Strubell, E.; Ganesh, A.; McCallum, A. Energy and policy considerations for deep learning in NLP. arXiv 2019, arXiv:1906.02243.

- Davis, J.; Bizo, D.; Lawrence, A.; Rogers, O.; Smolaks, M. Uptime Institute Global Data Center Survey 2022; Uptime Institute: New York, NY, USA, 2022.

- Techpowerup. Tesla T4 Specs. Available online: https://www.techpowerup.com/gpu-specs/tesla-t4.c3316 (accessed on 26 December 2022).

- U.S. Environmental Protection Agency. Emissions & Generation Resource Integrated Database (eGRID). Available online: https://www.epa.gov/egrid (accessed on 26 December 2022).

| № | Classes | Class Items | Initial Database | Added Database | Total Number of Images |
|---|---|---|---|---|---|
| 1 | Aluminum | Cans, plates, bottles, lids, bottle openers, trash cans, cooking pots, car parts, and silverware | 1019 | - | 1019 |
| 2 | Carton | Juice, milk, and cigarette boxes | 382 | 151 | 533 |
| 3 | E-waste | Batteries, electronics (computers, phones, etc.), circuit boards, microchips, cables, and chargers | - | 1029 | 1029 |
| 4 | Glass | Bottles, jars, containers, cups, decoration, plates, and pitchers | 1089 | - | 1089 |
| 5 | Organic waste | Fruits, vegetables, meats, fast food, meals, plants, seeds, cheese, bread, and eggshells | 1053 | - | 1053 |
| 6 | Paper and cardboard | Newspapers, magazines, books, shipping boxes, letters, envelopes, gift and pizza boxes, shredded paper, flyers, and stickers | 1194 | - | 1194 |
| 7 | Plastics | Bottles, containers, cups, plates, food packaging, bags, silverware, furniture, cases, buckets, planting pots, and trash bins | 1035 | - | 1035 |
| 8 | Textiles | Clothes, curtains, towels, decorations, sheets, bags, and fabric | 346 | 484 | 830 |
| 9 | Wood | Signs, furniture, cases, wood blocks, tiles, utensils, plates, silverware, wine cork, pellets, boards, baskets, mashed wood, and containers | 418 | 146 | 564 |
| Total | | | 6536 | 1810 | 8346 |
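The class counts in the dataset table can be checked and summarized with a few lines of Python. The sketch below is only an illustration: the dictionary is transcribed from the table (a "-" entry is treated as zero), and the helper name `summarize` is hypothetical, not part of the original study.

```python
# (initial, added) image counts per class, transcribed from the dataset table.
# A "-" in the table is assumed to mean zero images from that source.
counts = {
    "Aluminum": (1019, 0),
    "Carton": (382, 151),
    "E-waste": (0, 1029),
    "Glass": (1089, 0),
    "Organic waste": (1053, 0),
    "Paper and cardboard": (1194, 0),
    "Plastics": (1035, 0),
    "Textiles": (346, 484),
    "Wood": (418, 146),
}


def summarize(counts):
    """Return per-class totals, the grand total, and each class share in percent."""
    totals = {name: initial + added for name, (initial, added) in counts.items()}
    grand_total = sum(totals.values())
    shares = {name: 100.0 * n / grand_total for name, n in totals.items()}
    return totals, grand_total, shares


totals, grand_total, shares = summarize(counts)
largest = max(totals, key=totals.get)
smallest = min(totals, key=totals.get)
imbalance_ratio = totals[largest] / totals[smallest]

for name in totals:
    print(f"{name:20s} {totals[name]:5d} images  {shares[name]:5.1f} %")
print(f"Total: {grand_total} images; largest/smallest class ratio: {imbalance_ratio:.2f}")
```

The ratio between the largest and smallest class gives a quick indication of how unbalanced the nine-class dataset is.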

| Class | Ensemble | Model 1 | Model 2 | Model 3 |
|---|---|---|---|---|
| Aluminum | 95 | 88 | 94 | 91 |
| Carton | 95 | 90 | 91 | 88 |
| E-waste | 93 | 92 | 90 | 92 |
| Glass | 93 | 92 | 91 | 92 |
| Organic waste | 92 | 88 | 90 | 89 |
| Paper & cardboard | 88 | 87 | 85 | 86 |
| Plastics | 87 | 82 | 83 | 89 |
| Textiles | 95 | 86 | 95 | 92 |
| Wood | 71 | 69 | 63 | 70 |
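As a rough cross-check of the per-class table, the unweighted (macro) average of each column can be computed as sketched below. This assumes that the four numeric columns are, in order, Ensemble, Model 1, Model 2, and Model 3, and that the values are percentages; the function name `macro_average` is illustrative only.

```python
# Per-class scores (%) transcribed from the table above.
# Assumed column order: Ensemble, Model 1, Model 2, Model 3.
MODELS = ("Ensemble", "Model 1", "Model 2", "Model 3")
scores = {
    "Aluminum": (95, 88, 94, 91),
    "Carton": (95, 90, 91, 88),
    "E-waste": (93, 92, 90, 92),
    "Glass": (93, 92, 91, 92),
    "Organic waste": (92, 88, 90, 89),
    "Paper & cardboard": (88, 87, 85, 86),
    "Plastics": (87, 82, 83, 89),
    "Textiles": (95, 86, 95, 92),
    "Wood": (71, 69, 63, 70),
}


def macro_average(scores):
    """Unweighted mean over classes for each model column."""
    columns = list(zip(*scores.values()))
    return {model: sum(col) / len(col) for model, col in zip(MODELS, columns)}


averages = macro_average(scores)
# Class with the lowest score in the first (assumed Ensemble) column.
weakest_class = min(scores, key=lambda name: scores[name][0])

for model, value in averages.items():
    print(f"{model:9s} {value:.2f}")
print("Lowest-scoring class for the ensemble:", weakest_class)
```

Under these assumptions the ensemble column averages to roughly 90, and wood is the weakest class for every model.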

| # | Method | Data Source | Data Size | Number of Classes | Classes | Accuracy (%) | References |
|---|---|---|---|---|---|---|---|
| 1 | Inception-v3 | - | 2433 | 6 | Cardboard, glass, paper, plastic, metal, and organic waste | 75 | [23] |
| 2 | ResNet | TrashNet | 2527 | 6 | Cardboard, glass, paper, plastic, metal, and trash | 89 | [24] |
| 3 | Inception-v3 | GitHub | 2400 | 6 | Cardboard, glass, paper, plastic, metal, and others | 93 | [19] |
| 4 | YOLO | - | 2527 | 6 | Cardboard, glass, paper, plastic, metal, and organic trash | 94 | [25] |
| 5 | DenseNet169 | TrashNet and Google images | 4163 | 6 | Cardboard, glass, paper, plastic, metal, and trash | 95 | [20] |
| 6 | ResNet-34 | GitHub | 2560 | 6 | Cardboard, glass, paper, plastic, metal, and trash | 95 | [21] |
| 7 | Ensemble | Kaggle and Google images | 5559 | 6 | Cardboard, glass, paper, plastic, aluminum, and organic waste | 93 | This study |
| 8 | Ensemble | Kaggle and Google images | 8346 | 9 | Paper and cardboard, glass, plastic, aluminum, organic waste, carton, wood, textiles, and e-waste | 90 | This study |

| CNN Models | Training Time (Minutes) | Total Power (Wh) | Environmental Cost (g CO₂ Equivalent) |
|---|---|---|---|
| Ensemble | 19.28 | 34.87 | 15.45 |
| Model 1 | 24.00 | 43.40 | 19.23 |
| Model 2 | 19.57 | 35.38 | 15.68 |
| Model 3 | 18.77 | 33.94 | 15.04 |
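The energy and emission figures in the training-cost table are consistent with a simple linear relation between training time, GPU power draw, data-center overhead, and grid emission intensity. The sketch below is an assumption-laden reconstruction, not the authors' stated procedure: the 70 W GPU board power, the power usage effectiveness (PUE) of 1.55, and the emission factor of 0.443 g CO₂-eq per Wh are values inferred from the ratios in the table and from the cited Tesla T4, Uptime Institute, and eGRID sources, and the function name `training_footprint` is hypothetical.

```python
# All three constants are assumptions inferred from the table ratios;
# they are not stated explicitly in the surviving text.
GPU_POWER_W = 70.0         # assumed board power of one Tesla T4 GPU
PUE = 1.55                 # assumed data-center power usage effectiveness
EMISSION_G_PER_WH = 0.443  # assumed grid intensity, g CO2-eq per Wh


def training_footprint(minutes):
    """Estimate energy (Wh) and emissions (g CO2-eq) for one training run."""
    hours = minutes / 60.0
    energy_wh = GPU_POWER_W * PUE * hours
    co2_g = energy_wh * EMISSION_G_PER_WH
    return energy_wh, co2_g


# Training times (minutes) transcribed from the table above.
training_minutes = {
    "Ensemble": 19.28,
    "Model 1": 24.00,
    "Model 2": 19.57,
    "Model 3": 18.77,
}

for model, minutes in training_minutes.items():
    energy_wh, co2_g = training_footprint(minutes)
    print(f"{model:9s} {energy_wh:6.2f} Wh  {co2_g:6.2f} g CO2-eq")
```

With these assumed constants the estimates agree with the tabulated values to within rounding, which suggests the reported costs scale directly with training time.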

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ouedraogo, A.S.; Kumar, A.; Wang, N. Landfill Waste Segregation Using Transfer and Ensemble Machine Learning: A Convolutional Neural Network Approach. Energies 2023, 16, 5980. https://doi.org/10.3390/en16165980
